The funnel of human experience

post by eukaryote · 2018-10-10T02:46:02.240Z · LW · GW · 31 comments

This is a link post for https://eukaryotewritesblog.com/2018/10/09/the-funnel-of-human-experience/

[EDIT: A previous version of this post had a major error. Thanks to jeff8765 for pinpointing the error, and to esrogs in the Eukaryote Writes Blog comments for bringing it to my attention as well. This has been fixed. Also, I wrote FHI when I meant FLI.]

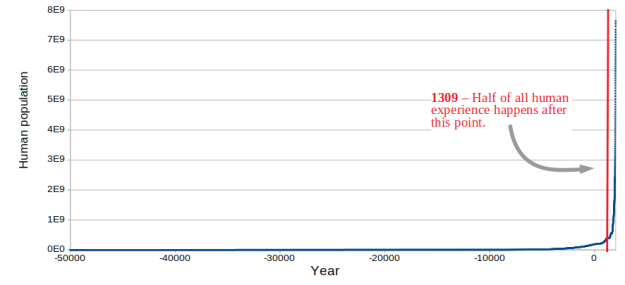

The graph of the human population over time is also a map of human experience. Think of each year as being "amount of human lived experience that happened this year." On the left, we see the approximate dawn of the modern human species in 50,000 BC. On the right, the population exploding in the present day.

It turns out that if you add up all these years, 50% of human experience has happened after 1309 AD. 15% of all experience has been experienced by people who are alive right now.

I call this "the funnel of human experience" - the fact that because of a tiny initial population blossoming out into a huge modern population, more of human experience has happened recently than time would suggest.

50,000 years is a long time, but 8,000,000,000 people is a lot of people.
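For anyone who wants to reproduce the midpoint, here is a minimal Python sketch of the idea: treat the population curve as person-years per year, integrate it, and find the year where the running total crosses a given fraction. The function names and the three-point toy series are made up for illustration; they are not the dataset behind the 1309 figure, and the interpolation inside each interval is only approximate.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple

# (year, population); negative years stand for BC. Hypothetical type alias.
Point = Tuple[float, float]


def person_years(series: Sequence[Point]) -> List[float]:
    """Cumulative person-years at each sample, using the trapezoid rule."""
    totals = [0.0]
    for (y0, p0), (y1, p1) in zip(series, series[1:]):
        totals.append(totals[-1] + (y1 - y0) * (p0 + p1) / 2)
    return totals


def year_at_fraction(series: Sequence[Point], fraction: float) -> float:
    """Year by which `fraction` of all person-years had been lived.

    Interpolates linearly on the cumulative total between samples, which is
    an approximation whenever the population changes within an interval.
    """
    totals = person_years(series)
    target = fraction * totals[-1]
    i = bisect_left(totals, target)
    if i == 0:
        return series[0][0]
    y0, y1 = series[i - 1][0], series[i][0]
    t0, t1 = totals[i - 1], totals[i]
    return y0 + (y1 - y0) * (target - t0) / (t1 - t0)


# Toy series, NOT real population data: flat at 100, then rising to 300.
toy = [(0, 100), (10, 100), (20, 300)]
print(year_at_fraction(toy, 0.5))  # 12.5
```

With a real series, a denser set of sample years would make the interpolation error smaller.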

If you want to expand on this, you can start doing some Fermi estimates. We as a species have spent...

- 1,650,000,000,000 total "human experience years"

  - See my dataset linked at the bottom of this post.

- 7,450,000,000 human years spent having sex

  - Humans spend 0.45% of our lives having sex. 0.45% * [total human experience years] = 7E9 years

- 52,000,000,000 years spent drinking coffee

  - 500 billion cups of coffee drunk this year x 15 minutes to drink each cup x 100 years* = 5E10 years

  - *Coffee consumption has likely been much higher recently than historically, but it does have a long history. I’m estimating about a hundred years of current consumption for total global consumption ever.

- 1,000,000,000 years spent in labor

  - 110,000,000,000 humans ever x ½ women x 12 pregnancies* x 15 hours apiece = 1.1E9 years

  - *Infant mortality, yo. H/t Ellie and Shaw for this estimate.

- 417,000,000 years spent worshipping the Greek gods

  - 1000 years* x 10,000,000 people** x 365 days a year x 1 hour a day*** = 4E8 years

  - *Some googling suggested that people worshipped the Greek/Roman Gods in some capacity from roughly 500 BC to 500 AD.

  - **There were about 10 million people in Ancient Greece. This probably tapered a lot to the beginning and end of that period, but on the other hand worship must have been more widespread than just Greece, and there have been pagans and Hellenists worshipping since then.

  - ***Worshipping generally took about an hour a day on average, figuring in priests and festivals? Sure.

- 30,000,000 years spent watching Netflix

  - 140,000,000 hours/day* x 365 days x 5 years** = 2.92E7 years

  - *Netflix users watched an average of 140 million hours of content a day in 2017.

  - **Netflix streaming has been around for about 10 years, but has gotten bigger recently.

- 50,000 years spent drinking coffee in Waffle House

  - 1.3 billion cups* x 20 minutes = 4.9E4 years

  - *Waffle House made this calculation easy. Bless you, Waffle House.
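The arithmetic behind these estimates is just unit conversion. Here is a minimal sketch in Python that recomputes three of the rows from the inputs listed above. The 8,760-hour year (leap days ignored) is an assumption, chosen because it reproduces the 417,000,000 figure, and the variable names are hypothetical.

```python
# Assumption: a 365-day year with no leap days.
HOURS_PER_YEAR = 365 * 24  # 8,760


def hours_to_years(hours: float) -> float:
    """Convert a total number of hours into years of experience."""
    return hours / HOURS_PER_YEAR


# Total from the post's dataset.
TOTAL_EXPERIENCE_YEARS = 1.65e12

# 0.45% of all human experience years.
sex_years = 0.0045 * TOTAL_EXPERIENCE_YEARS

# 110 billion humans x 1/2 women x 12 pregnancies x 15 hours apiece.
labor_years = hours_to_years(110e9 * 0.5 * 12 * 15)

# 1000 years x 10 million people x 365 days a year x 1 hour a day.
greek_gods_years = hours_to_years(1000 * 10_000_000 * 365 * 1)

for label, years in [
    ("sex", sex_years),
    ("labor", labor_years),
    ("Greek gods", greek_gods_years),
]:
    print(f"{label}: {years:.1e} years")
```

Swapping in a different hours-per-year constant or different inputs is a quick way to see how sensitive each estimate is.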

So humanity in aggregate has spent about ten times as long worshiping the Greek gods as we've spent watching Netflix.

We've spent another ten times as long having sex as we've spent worshipping the Greek gods.

And we've spent ten times as long drinking coffee as we've spent having sex.

I'm not sure what this implies. Here are a few things I gathered from this:

1) I used to be annoyed at my high school world history classes for spending so much time on medieval history and after, when there was, you know, all of history before that too. Obviously there are other reasons for this - Eurocentrism, the fact that more recent events have clearer ramifications today - but to some degree this is in fact accurately reflecting how much history there is.

On the other hand, I spent a bunch of time in school learning about the Greek Gods, a tiny chunk of time learning about labor, and virtually no time learning about coffee. This is another disappointing trend in the way history is approached and taught, focusing on a series of major events rather than the day-to-day life of people.

2) The Funnel gets more stark the closer you move to the present day. Look at science. FLI reports that 90% of PhDs that have ever lived are alive right now. That means most of all scientific thought is happening in parallel rather than sequentially.

3) You can't use the Funnel to reason about everything. For instance, you can't use it to reason about extended evolutionary processes. Evolution is necessarily cumulative. It works on the unit of generations, not individuals. (You can make some inferences about evolution - for instance, the likelihood of any particular mutation occurring increases when there are more individuals to mutate - but evolution still has the same number of generations to work with, no matter how large each generation is.)

4) This made me think about the phrase “living memory”. The world’s oldest living person is Kane Tanaka, who was born in 1903. 28% of the entirety of human experience has happened since her birth. As mentioned above, 15% has been directly experienced by living people. We have writing and communication and memory, so we have a flawed channel by which to inherit information, and experiences in a sense. But humans as a species can only directly remember as far back as 1903.

Here's my dataset. The population data comes from the Population Reference Bureau and their report on how many humans ever lived, and from Our World In Data. Let me know if you get anything from this.

Fun fact: The average living human is 30.4 years old.

Wait But Why's explanation of the real revolution of artificial intelligence is relevant and worth reading. See also Luke Muehlhauser's conclusions on the Industrial Revolution: Part One and Part Two.

31 comments

comment by jeff8765 · 2018-10-10T04:05:42.438Z · LW(p) · GW(p)

I think you added an extra three zeros during your total year calculations. You list 2.23E15 as the total number of years experienced, but multiplying the total time of 5E4 by the current population of 8E9 gives a total of only 4E14 experience years. The true number must be quite a bit lower as the human population was quite a bit lower than 8 billion for most of that time. This also affects the proportion of experience years which have occurred in living memory. My guess is 20% have occurred since the birth of Kane Tanaka and 10% experienced by living people. This also squares pretty well with your figure of 50% of human experience occurring since 1300. It doesn't really make sense for 50% of experience to have occurred since 1300, but only 0.02% since 1903.

Replies from: eukaryote

↑ comment by eukaryote · 2018-10-10T04:46:45.384Z · LW(p) · GW(p)

You are super right and that is exactly what happened - I checked the numbers and had made them three orders of magnitude too large. Thanks for the sanity checks and catch. It turns out this moves the midpoint up to 1432. Lemme fix the other numbers as well.

Update: Actually, it did nothing to the midpoint, which makes sense in retrospect (maybe?) but does change the "fraction of time" thing, as well as some of the Fermi estimates in the middle.

15% of experience has actually been experienced by living people, and 28% since Kane Tanaka's birth. I've updated this here and on my blog.

comment by eukaryote · 2020-01-10T02:47:52.291Z · LW(p) · GW(p)

Quick authorial review: This post has brought me the greatest joy from other sources referring to it, including Marginal Revolution (https://marginalrevolution.com/marginalrevolution/2018/10/funnel-human-experience.html) and the New York Times bestseller "The Uninhabitable Earth". I was kind of hoping to supply a fact about the world that people could use in many different lights, and they have (see those and also like https://unherd.com/2018/10/why-are-woke-liberals-such-enemies-of-the-past/ )

An unintentional takeaway from this attention is solidifying my belief that if you're describing a new specific concept, you should make up a name too. For most purposes, this is for reasons like the ones described by Malcolm Ocean here (https://malcolmocean.com/2016/02/sparkly-pink-purple-ball-thing/). But also, sometimes, a New York Times bestseller will cite you, and you'll only find out as you set up Google alerts.

(And then once you make a unique name, set up Google alerts for it. The book just cites "eukaryote" rather than my name, and this post rather than the one on my blog. Which I guess goes to show you that you can put anything in a book.)

Anyways, I'm actually a little embarrassed because my data on human populations isn't super accurate - it starts at the year 50,000 BCE, when there were humans well before that. But those populations were small, probably not enough to significantly influence the result. I'm not a historian, and really don't want to invest the effort needed for more accurate numbers, although if someone would like to, please go ahead.

But it also shows that people are interested in quantification. I've written a lot of posts that are me trying to find a set of numbers, and making lots and lots of assumptions along the way. But then you have some plausible numbers. It turns out that you can just do this, and don't need a qualification in Counting Animals or whatever, just supply your reasoning and attach the appropriate caveats. There are no experts, but you can become the first one.

As an aside, in the intervening years, I've become more interested in the everyday life of the past - of all of the earlier chunks that made up so much of the funnel. I read an early 1800's housekeeping book, "The Frugal Housewife", which advises mothers to teach their children how to knit starting at age 4, and to keep all members of the family knitting in their downtime. And it's horrifying, but maybe that's what you have to do to keep your family warm in the northeast US winter. No downtime that isn't productive. I've taken up knitting lately and enjoy it, but at the same time, I love that it's a hobby and not a requirement. A lot of human experience must have been at the razor's edge of survival, Darwin's hounds nipping at our heels. I prefer 2020.

If you want a slight taste of everyday life at the midpoint of human experience, you might be interested in the Society for Creative Anachronism. It features swordfighting and court pageantry but also just a lot of everyday crafts - sewing, knitting, brewing, cooking. If you want to learn about medieval soapmaking or forging, they will help you find out.

comment by Said Achmiz (SaidAchmiz) · 2018-10-10T09:02:41.744Z · LW(p) · GW(p)

Look at science. FHI reports that 90% of PhDs that have ever lived are alive right now. That means most of all scientific thought is happening in parallel rather than sequentially.

Surely you can’t mean this literally (but then I have no idea how to take this comment)!

You seem to assume that…

- Anyone who wasn’t (or isn’t) a Ph.D. isn’t a scientist. (Did Democritus have a doctorate? Francis Bacon? Dmitri Mendeleev? Were they not engaged in “scientific thought”?)

- Anyone who was (or is) a Ph.D. is a scientist. (My mother holds a Ph.D. in Education. Is she a scientist? Let me assure you that I have the greatest respect for my mother; she is a highly competent educator and an effective administrator… but to call her a scientist would be absurd.)

Furthermore—and I say this as someone who made it partway toward having my own Ph.D., and had the chance to do a bit of research, and see the world of academia—I submit to you that a very large (I might even say, a disturbingly large) fraction of today’s doctoral degree holders are not, in fact, engaged in any scientific thought.

Replies from: eukaryote

↑ comment by eukaryote · 2018-10-10T16:46:23.503Z · LW(p) · GW(p)

Yeah, let me unpack this a little more. Over half of PhDs are in STEM fields - 58% in 1974, and 75% in 2014, providing weak evidence that this is becoming more true over time.

Dmitri Mendeleev had a doctorate. The other two did not. I see the point you're getting at - that scientific thought is not limited to PhDs, and is older than them as an institution - but surely it also makes sense that civilization is wealthier and has more capacity than ever for people to spend their lives pursuing knowledge, and that the opportunity to do so is available to more people (women, for instance.) That's why 90% is reasonable to me even if PhDs are a poor proxy.

The last point about how PhDs don't necessarily do scientific thought makes sense. Shall I say "formal scientific thought" instead? We're on LessWrong and may as well hold "real scientific thought" to a high standard, but if you want to conclude from this "we have most of all the people who are supposed to be scientists with us now and they're not doing anything", well, there's something real to that too.

Replies from: SaidAchmiz, SaidAchmiz

↑ comment by Said Achmiz (SaidAchmiz) · 2018-10-10T18:16:06.702Z · LW(p) · GW(p)

surely it also makes sense that civilization is wealthier and has more capacity than ever for people to spend their lives pursuing knowledge, and that the opportunity to do so is available to more people (women, for instance.)

I am not sure that this is true.

Certainly, women can pursue knowledge. Or can they? Can men? Can anyone? I have my doubts. You have, I don’t doubt, heard the almost-stereotypical complaints about the tenured professor’s academic activity being devoted—if not entirely, then far too close to it—to such things as grant-writing, intradepartmental politicking, and other nonsense. It seems fairly clear to me that on average, the “scientist” of today does far less of anything that can (without diluting the word into unrecognizability) be called “science”. It may very well be much less. If one attempts to “pursue knowledge” today, there are many fields in which one ends up actually pursuing something more like “a career loosely associated with the pursuit of knowledge”.

The last point about how PhDs don’t necessarily do scientific thought makes sense. Shall I say “formal scientific thought” instead? We’re on LessWrong and may as well hold “real scientific thought” to a high standard, but if you want to conclude from this “we have most of all the people who are supposed to be scientists with us now and they’re not doing anything”, well, there’s something real to that too.

I confess that I don’t quite follow your train of thought, here (to the point that I can’t really tell whether you’re agreeing with me, or disagreeing, or what). Could you clarify?

My second point in the grandparent was, in fact, about Ph.D.s specifically. Once again, consider the case of my mother: she’s a teacher, an administrator, a curriculum designer, etc. My mother is not doing scientific thought. She’s not trying to do scientific thought. She had no plans to do any scientific thought, and no one else expected her to be doing any scientific thought, either (neither unofficially nor officially). My mother got her doctorate because, in her line of work, people with a doctorate earn more money than people without a doctorate. And, indeed, as a result of getting her doctorate, she began to earn more money. Everything is going according to plan. It’s just that said plan did not, and does not, involve “scientific thought” in any way.

Replies from: quanticle, eukaryote

↑ comment by quanticle · 2018-10-11T06:06:13.077Z · LW(p) · GW(p)

You have, I don’t doubt, heard the almost-stereotypical complaints about the tenured professor’s academic activity being devoted—if not entirely, then far too close to it—to such things as grant-writing, intradepartmental politicking, and other nonsense.

Yes, but the gentlemen scholars of the 18th century couldn't devote all of their time to the pursuit of science either. They had estates to run, social obligations to fulfill, duels to fight, and, as you so well put it, "other nonsense." Is the tenured professor today doing more or less "science" per week than a gentleman scholar of the 18th century? I don't know, but I'm not sure that it's self evident that Lord Kelvin and Charles Darwin were doing more science per week than a tenured professor today.

Secondly, even after taking into consideration the possibility that gentlemen scholars did much more science per week than today's tenured professors, I still think it's plausible that much more science, in total, is getting done today than it was in the 18th Century. We have to remember how few early scientists there were, and how difficult it was for them to communicate. Even if a modern tenured professor spends 90% less time doing science than a gentleman scholar, it's still plausible to me that the majority of scientific thought is taking place right now.

Replies from: Zvi, SaidAchmiz, Benquo

↑ comment by Zvi · 2018-10-11T19:46:47.072Z · LW(p) · GW(p)

Let's assume that this is true, and the majority of 'scientific' thought is happening now. Given the observed rate of scientific progress, what explanation should we consider?

1) Today's problems really are that much harder than old problems and/or no really, we're making great progress! I kid.

2) Scientific thought today is so terrible that it doesn't produce much scientific progress.

3) What we're calling scientific thought never was what produced scientific progress.

4) Scientific thought today isn't aimed at producing scientific progress, so it doesn't.

Replies from: quanticle

↑ comment by quanticle · 2018-10-11T22:25:38.141Z · LW(p) · GW(p)

What's wrong with (1) being a valid explanation? The geniuses of the 17th and 18th centuries, like Gauss and Newton, did work that today is expected of moderately bright high-schoolers. Descartes' geometry can be understood by middle-schoolers. Even the science of the 19th century, like the work of Maxwell and Rutherford, is considered to be pretty much undergraduate level today.

Is it really that implausible to you that the low-hanging fruit is gone?

Replies from: SaidAchmiz, redlizard

↑ comment by Said Achmiz (SaidAchmiz) · 2018-10-11T23:41:41.005Z · LW(p) · GW(p)

I think you are drastically overestimating how common it is for even “moderately bright high-schoolers” to understand the material even half so well as Gauss or Newton did, rather than merely learning techniques (which techniques, by the way, were developed over the course of considerable time, so the math students of today are taking advantage of the work of many before them…).

↑ comment by redlizard · 2018-10-19T04:12:40.104Z · LW(p) · GW(p)

I think there is about a three orders of magnitude difference between the difficulties of "inventing calculus where there was none before" and "learning calculus from a textbook explanation carefully laid out in the optimal order, with each component polished over the centuries to the easiest possible explanation, with all the barriers to understanding carefully paved over to construct the smoothest explanatory trajectory possible".

(Yes, "three orders of magnitude" is an actual attempt to estimate something, insofar as that is at all meaningful for an unquantified gut instinct; it's not just something I said for rhetorical effect.)

↑ comment by Said Achmiz (SaidAchmiz) · 2018-10-11T23:38:03.890Z · LW(p) · GW(p)

Yes, but the gentlemen scholars of the 18th century couldn’t devote all of their time to the pursuit of science either. They had estates to run, social obligations to fulfill, duels to fight, and, as you so well put it, “other nonsense.” Is the tenured professor today doing more or less “science” per week than a gentleman scholar of the 18th century? I don’t know, but I’m not sure that it’s self evident that Lord Kelvin and Charles Darwin were doing more science per week than a tenured professor today.

This is partly a fair point and a good question, though it’s also partly unfair.

An 18th-century gentleman scholar might, indeed, have to devote time to running his estate. (Although “duels to fight” might be a stretch. How many duels did Charles Darwin or Lord Kelvin participate in?)

But then, a 21st-century tenured professor also has to devote time to any number of things outside work: hobbies, taking care of his family, housework, shopping, etc.

The problem, however, is that even of that time which our tenured professor allocates to “work”, much is wasted. Was this also true of the gentleman scholar?

Secondly, even after taking into consideration the possibility that gentlemen scholars did much more science per week than today’s tenured professors, I still think it’s plausible that much more science, in total, is getting done today than it was in the 18th Century. We have to remember how few early scientists there were, and how difficult it was for them to communicate. Even if a modern tenured professor spends 90% less time doing science than a gentleman scholar, it’s still plausible to me that the majority of scientific thought is taking place right now.

Indeed, it is true that our overwhelming numerical advantage over the world of the past must result in today’s “total time doing science” far outweighing that of any past era.

The question, however, concerned the “majority of scientific thought”—and that (I contend) is a rather different matter.

To put it bluntly, many people in STEM fields are working on things that don’t, in any real sense, matter—artificial problems, non-problems, intellectual cul-de-sacs, that lead to nothing; they exist, and have people working on them, only due to the current (grant-based) model of science funding. No researcher who is being honest with himself (and has not totally lost such self-awareness) really thinks that there’s scientific value in such things, that they advance the frontiers of human knowledge and understanding of the universe. Computer science is full of this. So are various informatics-related fields. So is HCI.

Are the researchers who work on such things engaged in “scientific thought”?

↑ comment by Benquo · 2018-10-11T17:11:58.601Z · LW(p) · GW(p)

"Almost entirely" is very different from "somewhat." Whether or not it's self-evident that most "science" PhDs don't do science, there's plenty of finite evidence of problems, like Saul Perlmutter's claim that he couldn't do the work that won him his Nobel today. Perhaps there's not an uniform decline, but there's enough evidence that the meaning of the relevant metric is not consistently reliable to make it pretty sketchy to use PhD as a proxy for doing meaningful scientific work.

↑ comment by eukaryote · 2018-10-10T18:56:53.066Z · LW(p) · GW(p)

Certainly, women can pursue knowledge. Or can they? Can men? Can anyone?

I don't know what you mean by this and suspect it's beyond the scope of this piece.

It seems fairly clear to me that on average, the “scientist” of today does far less of anything that can (without diluting the word into unrecognizability) be called “science”. It may very well be much less.

Seems possible. I don't know what the day-to-day process of past scientists was like. I wonder if something like improvements to statistics, the scientific method, etc., means that modern scientists get more learned per "time spent science" than in the past - I don't know. This may also be outweighed by how many more scientists now than there were then.

The last point about how PhDs don’t necessarily do scientific thought makes sense. Shall I say “formal scientific thought” instead? We’re on LessWrong and may as well hold “real scientific thought” to a high standard, but if you want to conclude from this “we have most of all the people who are supposed to be scientists with us now and they’re not doing anything”, well, there’s something real to that too.

What I meant by this is that perhaps the thing I'm more directly grasping at here is "amount of time people have spent trying to do science", with much less certainty around "how much science gets done." If people are spending much more time trying to do science now than they ever have in the past, and less is getting done (I'm not sure if I buy this), that's a problem, or maybe just indicative of something.

Once again, consider the case of my mother: she’s a teacher, an administrator, a curriculum designer, etc. My mother is not doing scientific thought. She’s not trying to do scientific thought.

Sure. I suppose I'm using PhDs as something of a proxy here, for "people who have spent a long time pushing on the edges of a scientific field". Think of STEM PhDs alone if you prefer. (Though note that someone in your mother's field could be doing science - if you say she's not, I believe you, but limiting it to just classic STEM is also only a proxy.)

Replies from: Benquo, SaidAchmiz

↑ comment by Benquo · 2018-10-11T17:04:45.570Z · LW(p) · GW(p)

On the "who can pursue knowledge" question, it seems to me like Said's actually saying two very different things:

- Historically a large number of people likely inclined towards pursuing scientific knowledge didn't have access to formal credentials. But this doesn't necessarily mean they didn't do science!

- The credentialing and career system in science impedes people from pursuing scientific knowledge.

These both seem like serious critiques of the proxy you're using, similar to using "licensed therapist" as a proxy for "attentive sympathetic listener" or "lawyer" as a proxy for "works to resolve conflicts through systematic, formal reasoning."

↑ comment by Said Achmiz (SaidAchmiz) · 2018-10-10T20:02:47.622Z · LW(p) · GW(p)

Certainly, women can pursue knowledge. Or can they? Can men? Can anyone?

I don’t know what you mean by this and suspect it’s beyond the scope of this piece.

What I meant by it is just what I wrote in the rest of that paragraph, not some additional mysterious philosophical question.

This may also be outweighed by how many more scientists now than there were then.

Indeed, it may be, but then again it may not be; and if it is, then by how much? These are the important questions.

(Though note that someone in your mother’s field could be doing science—if you say she’s not, I believe you, but limiting it to just classic STEM is also only a proxy.)

Let me emphasize once again that the fact that my mother isn’t doing science is not some fluke, aberration, regrettable failing of the officially intended operation of the system, etc. Literally no one had any intention or expectation that my mother would be doing any science. That’s not why she got her doctorate, and no one within the system thinks or expects otherwise, or thinks that this is somehow a problem.

Yes, someone else “in her field” (broadly speaking) could be doing science, and some people are. That changes nothing. I never said “no one with a Ph.D. in Education is doing science”.

The point is that the identification between “people with Ph.D.s” and “people doing / trying to do / supposed to be doing science”, which you seem to be assuming, simply does not exist—not even ideally, not even in terms of “intent” of the system. Maybe it did once, but not anymore.

I suppose I’m using PhDs as something of a proxy here, for “people who have spent a long time pushing on the edges of a scientific field”. Think of STEM PhDs alone if you prefer.

Yes, the question of “how many people are there today, who have spent a long time pushing on the edges of a scientific field” is an interesting and important one. But I think that even “STEM Ph.D.s” is a poor proxy for this. (I haven’t the time right now, but I may elaborate later on why that’s the case.)

↑ comment by Said Achmiz (SaidAchmiz) · 2018-10-10T17:59:47.163Z · LW(p) · GW(p)

Dmitri Mendeleev had a doctorate.

The English-language Wikipedia page about Mendeleev does not go into as much detail as the Russian one.

Mendeleev defended his doctoral thesis in 1865. By then, he had been teaching for nine years, and had done groundbreaking work in thermodynamics and crystallography.

Had Mendeleev not gotten his doctorate, or changed fields, or died before 1865, would his work of the previous decade have been non-scientific? (Rhetorical question, of course, since you’ve already acknowledged my point.)

Replies from: eukaryote, Douglas_Knight

↑ comment by Douglas_Knight · 2019-07-12T02:42:01.370Z · LW(p) · GW(p)

Mendeleev received a доктор degree in 1865. Although cognate to doctor, this is usually translated as habilitation. The PhD is usually considered equivalent to the кандидат (candidate) degree, which he received in 1856.

comment by catherio · 2018-10-12T00:06:30.508Z · LW(p) · GW(p)

Our collective total years of experience is ~119 times the age of the universe. (The universe is 13.8 billion years old, versus 1.65 trillion total human experience years so far).

Also: at 7.44 billion people alive right now, we collectively experience the age of the universe every ~2 years (https://twitter.com/karpathy/status/850772106870640640?lang=en)

comment by habryka (habryka4) · 2018-10-15T19:56:25.354Z · LW(p) · GW(p)

Promoted to curated: I think this post is in a really important reference class of trying to understand big trends, using relatively first-principles reasoning, and generally staying close to available data and common human experience. I generally feel like I learn quite a bit every time I read a post in this reference class, and would love to see more of them, and this post is a pretty good central example, so I think it makes sense to curate it.

I am also happy that the author was responsive to people pointing out errors, and quickly fixed them. Which I think should be rewarded.

I also feel like this post is generally low on "trying to convince me of something" and is instead more engaging in a collaborative exploration of important aspects of reality, which I generally think is an important thing to cultivate in LessWrong posts.

comment by Shmi (shminux) · 2018-10-10T05:42:11.226Z · LW(p) · GW(p)

Why do you think it is meaningful to (simply) add human experience?

Replies from: eukaryote, scarcegreengrass

↑ comment by scarcegreengrass · 2018-10-10T18:23:26.119Z · LW(p) · GW(p)

It's relevant to some forms of utilitarian ethics.

Replies from: SaidAchmiz

↑ comment by Said Achmiz (SaidAchmiz) · 2018-10-10T18:42:07.723Z · LW(p) · GW(p)

That is an answer to a different question—shminux did not ask why it’s relevant, but why it’s meaningful.

In other words, the question is not about the desirability of having simple aggregate measures of human experience, but about the possibility of their existence.

comment by Gerdy van der Stap (gerdy-van-der-stap) · 2018-10-20T11:20:28.972Z · LW(p) · GW(p)

The human experience did not start in 50,000 BC. Homo sapiens is older than that; there is evidence of modern humans living in North Africa 300,000 years ago. https://www.inverse.com/article/32650-jebel-irhoud-morocco-homo-sapiens-evolution-first-humans

comment by Benquo · 2018-10-11T17:01:31.476Z · LW(p) · GW(p)

I wonder what percentage of cumulative pig and chicken experience is that of being factory-farmed.

Replies from: eukaryote

↑ comment by eukaryote · 2018-10-11T17:56:51.965Z · LW(p) · GW(p)

I haven't looked into this, but based on trends in meat consumption (richer people eat more meat), the growing human population, and factory farming as an efficiency improvement over traditional animal agriculture, I'm going to guess "most".

comment by Dave Clifton (dave-clifton) · 2018-10-11T20:44:28.642Z · LW(p) · GW(p)

1309 now.

From the graph, it looks like that will be 1335 next year and 1361 the year after.

comment by habryka (habryka4) · 2019-12-02T05:51:44.359Z · LW(p) · GW(p)

I still endorse everything in my curation notice, and also think that the question of what fraction of human experience is happening right now is an important point to be calibrated on in order to have good intuitions about scientific progress and the general rate of change for the modern world.

comment by Ben Pace (Benito) · 2019-12-02T06:00:33.811Z · LW(p) · GW(p)

Seconding Habryka.