The "hard" problem of consciousness is the least interesting problem of consciousness

post by Hazard · 2020-06-08T19:00:57.962Z · LW · GW · 45 comments

Cross-posted from my roam-blog. Nothing new to anyone who's read about these ideas, meant to be a reference for how I think about things.

If you come up with what you think is a decent and useful theory/partial-theory of consciousness, you will be visited by a spooky daemon who will laugh and inform you that you merely dabble in the "easy problem of consciousness" and have gotten nowhere near "The Hard Problem of Consciousness".

Chalmers put the hard problem like this:

What makes the hard problem hard and almost unique is that it goes beyond problems about the performance of functions. To see this, note that even when we have explained the performance of all the cognitive and behavioral functions in the vicinity of experience—perceptual discrimination, categorization, internal access, verbal report—there may still remain a further unanswered question: Why is the performance of these functions accompanied by experience? A simple explanation of the functions leaves this question open

The idea goes that even if you explained how the brain does everything it does, you haven't explained why this doing is accompanied by subjective experience. It's rooted in this idea that subjective experience is somehow completely isolated from, and separate from, behavior.

This supposed isolation is already a little suspicious to me. As Scott Aaronson points out in Why Philosophers Should Care About Complexity Theory, people judge each other to be conscious and capable of subjective experience after very short interactions. From a very short interaction with my desk I've concluded it doesn't have subjective experience. After a few years of interacting with my dog, I'm still on the fence about whether it has subjective experience.

So when I hear a claim that "subjective experience" and "qualia" are divorced from any and all behavior or functionality in the mind, I'm left with a sense that Chalmers is talking about something very different from my subjective experience, and what it seems like to be me. My subjective experience seems deeply integrated with my behavior and functioning.

Colors of Experience

To explore this more, let's briefly look at a classic puzzler:

What if everyone saw different colors? What if when we looked at the sky, and the ocean, we both used the English word "Blue", but you experienced what I experience when I look at what we both agree is called "Green"? How would you even tell if this was the case?

To talk about color, I'm first going to talk about Enums.

Enums for Illumination

Often when you code you assign "values" to "variables", where the "value" is the actual content that the computer works with, and the "variable" is an English word that you use to talk about the value.

health = 12

WHEN health LESS-THAN OR EQUAL-TO 0: PLAYER DIES

In this pseudo-code example, you can see it matters what value the health variable has, because there's code that will do different things based on different values.

Most languages also have a thing called "Enums". Enums are like variables where you don't care what the value is. You just want a set of English words to be able to differentiate between different things. Another pseudo-code example:

Colors = Enum{RED, GREEN, BLUE, YELLOW, ORANGE, VIOLET}

WHEN LIGHTWAVE IN RANGE(380nm,450nm): SEE Colors.VIOLET

WHEN LIGHTWAVE IN RANGE(590nm,625nm): SEE Colors.ORANGE

WHEN SEE Colors.ORANGE: FEEL HAPPY

WHEN SEE Colors.VIOLET: FEEL COMPASSION

Under the hood, an actual number will be substituted for each Enum. So WHEN SEE Colors.ORANGE: FEEL HAPPY will become WHEN SEE 15: FEEL HAPPY. The computer still needs some value associated with Colors.ORANGE so that it can check if other values are equal to it. It's just that you don't care what the value is.

I bring up Enums because they are a very concrete example of a system where an entity only has meaning based on its relationship to other things. Under the hood Colors.ORANGE might be assigned 2837, but that number doesn't capture the meaning of Colors.ORANGE. The meaning is encapsulated by its relationship to other colors, and what visible lightwaves get associated with it, and what the rest of the code decides to do when it sees Colors.ORANGE as opposed to Colors.VIOLET.
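Here is a rough Python sketch of the pseudo-code above, using the standard-library `enum` module. The two wavelength ranges and the two reactions come from the pseudo-code; the helper names `see` and `feel`, and the choice of `auto()` to pick the underlying values, are assumptions made for illustration.

```python
from enum import Enum, auto


class Colors(Enum):
    # auto() chooses the underlying values; nothing below depends on them.
    RED = auto()
    GREEN = auto()
    BLUE = auto()
    YELLOW = auto()
    ORANGE = auto()
    VIOLET = auto()


def see(wavelength_nm):
    """Map a wavelength to a color, using the two ranges from the pseudo-code."""
    if 380 <= wavelength_nm < 450:
        return Colors.VIOLET
    if 590 <= wavelength_nm < 625:
        return Colors.ORANGE
    return None  # ranges for the other colors are not given in the post


def feel(color):
    """Hypothetical reactions, mirroring the FEEL lines above."""
    reactions = {Colors.ORANGE: "HAPPY", Colors.VIOLET: "COMPASSION"}
    return reactions.get(color)


print(feel(see(600)))  # light in the orange range
print(feel(see(400)))  # light in the violet range
```

Nothing in `see` or `feel` ever reads `Colors.ORANGE.value`. The code only checks which member it is holding, which is the sense in which the number underneath carries no meaning of its own.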

Color Swap

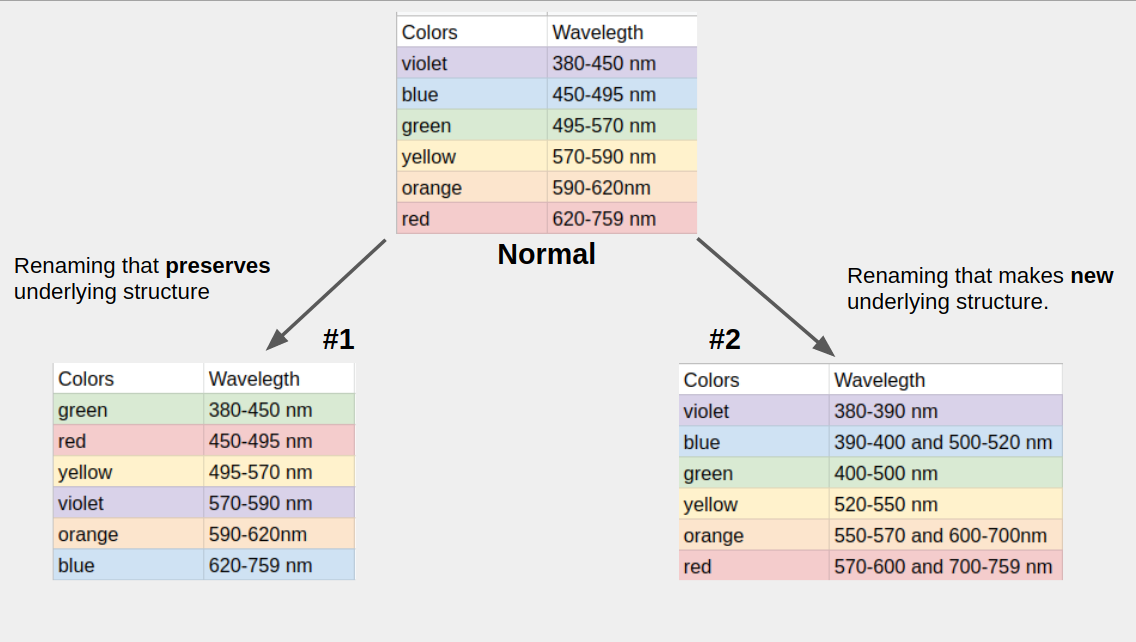

Now let's use Enums as a model to think about the color problem. There are some ways that we could "see different colors" that would easily be discovered, and others that might not. Check this image: read the tables as "when I see light in the wavelength of the right column, I have the experience I associate with the color in the left column". Normal is how we all see colors, and #1 and #2 are proposed ways that someone else could "see colors differently".

If your vision worked like normal and mine worked like #2, it would be EASY to tell. My vision has a fundamentally different structure. If you saw a violet in the grass, I'd be insisting that the violet and the grass were the same color. Because the "shape" of my experience is fundamentally different from yours, we wouldn't even be able to agree on a consistent naming schema.

If your vision worked like normal and mine worked like #1, we could totally end up agreeing on names for different experiences. Since to both of us, light with wavelength in the range 380-450 nm feels like a cohesive thing, we could both end up calling that feeling "red", even if the subjective experiences were "different".
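The difference between the two cases can be sketched in Python. The tables in the image are not reproduced here, so the mappings below are assumptions: `swapped` stands in for #1 as a plain relabeling of experiences, and `merged` stands in for #2 by giving violet light and green light the same experience (matching the violet-in-the-grass example). The experience labels "A", "B", "C" and the function names are hypothetical.

```python
def partition(mapping):
    """Group stimuli by the experience they produce, ignoring experience labels."""
    groups = {}
    for stimulus, experience in mapping.items():
        groups.setdefault(experience, set()).add(stimulus)
    return {frozenset(group) for group in groups.values()}


def can_agree_on_names(mine, yours):
    """Two observers can share a naming scheme if they group stimuli identically."""
    return partition(mine) == partition(yours)


normal = {"violet light": "A", "green light": "B", "orange light": "C"}
swapped = {"violet light": "C", "green light": "A", "orange light": "B"}  # like #1
merged = {"violet light": "A", "green light": "A", "orange light": "C"}   # like #2

print(can_agree_on_names(normal, swapped))
print(can_agree_on_names(normal, merged))
```

Comparing partitions rather than labels is the point: a relabeling leaves the grouping of stimuli untouched, while a merge changes which things count as "the same color", and that shows up in behavior.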

Thinking about this, I'm imagining that colors work the same way as in my Enums example. The "code" in our heads would treat the same sort of light in the same sorta way. We'd have similar regularities in our experience, because all of the "code" only uses Colors.ORANGE as a way to differentiate from other colors. In this metaphor, the concrete value of Colors.ORANGE (say, 44003) would be your qualia/subjective-experience. If everyone has more or less the same code for their color enums, everyone could have different concrete values associated with the same Enum, but it wouldn't affect anything that actually matters about color.
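A small Python sketch of this metaphor, under the assumption that two people run the same "code" over enums whose concrete values differ. The class names, the `make_observer` helper, and the report strings are all made up for illustration; the numbers 44003 and 2837 are just the example values mentioned in this post.

```python
from enum import Enum


class MyColors(Enum):
    VIOLET = 1
    ORANGE = 2


class YourColors(Enum):
    # Same names, different concrete values (the stand-in for "qualia").
    VIOLET = 44003
    ORANGE = 2837


def make_observer(colors):
    """Build behavior that only compares enum members, never their values."""
    def see(wavelength_nm):
        if 380 <= wavelength_nm < 450:
            return colors.VIOLET
        if 590 <= wavelength_nm < 625:
            return colors.ORANGE
        return None

    def report(wavelength_nm):
        color = see(wavelength_nm)
        if color is colors.ORANGE:
            return "orange, and I feel happy"
        if color is colors.VIOLET:
            return "violet, and I feel compassion"
        return "no name for that"

    return report


me = make_observer(MyColors)
you = make_observer(YourColors)

# Compare every outward report, then compare one pair of internal values.
same_reports = all(me(w) == you(w) for w in range(350, 700))
same_values = MyColors.ORANGE.value == YourColors.ORANGE.value
print(same_reports, same_values)
```

The two observers agree on every report across the tested wavelengths even though their underlying values differ, which is the situation the paragraph above describes.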

It does seem to be a thing that different cultures can have quite different naming schemes for color experiences, but I've never seen evidence that the fundamental "shape" of experience is different.

Well, that isn't quite true. As djmoortex pointed out in a comment, the experience of a color is deeply related to all of the associations you have with a color.

There's a store I pass on my way to work that I dislike because the red and blue LED display in its window fools me into thinking there's a pack of police cars there.

So it seems like there's a part of people's experience of color (the fact that similar wavelengths feel similar, and far away wavelengths feel more distinct) that I expect is fairly uniform, and at the same time there's another important aspect of the experience of color (what associations you have to different colors, what they make you think and feel) which can vary wildly.

Outro

The more I think about qualia, the more I feel like the only meaning I can find in any of my subjective experiences is in how they relate to everything else in my head. It's the patterns of what sorts of things out in the world lead to what sorts of subjective experience (different colors correspond to how hot something will get in the sun). It's the particular associations I have with different experiences (blue makes me think of a cool breeze). It's that certain subjective experiences imply others (I can normally tell if something will feel rough based on if it looks rough).

I'll admit, when I think about color this way, dwelling on how the meaning of "red" is deeply intertwined with the patterns of things I see as "red", I still feel like there is this "extra" thing I'm experiencing. The "redness" that is separate from all those connections and patterns. So I can see why one might still want to ponder and question this "mysterious redness".

Now, I do agree that there is in fact a "problem" that is the hard problem of consciousness (this comment explicates the ontological issues involved). I don't think this post has "dissolved the question". It's just that I personally can't find any way to relate this isolated "qualia of redness" to anything else I care about. All of the meaning I find in my own experience is stuff that I can relate to behavior and function, the "easy" problems of consciousness. Given that, I think I want to rename Chalmers' categories as the "Boring" (hard) and "Interesting" (easy) problem of consciousness.

45 comments

Comments sorted by top scores.

comment by Kaj_Sotala · 2020-06-08T20:00:07.151Z · LW(p) · GW(p)

So when I hear a claim that "subjective experience" and "qualia" are divorced from any and all behavior or functionality in the mind

I don't think that Chalmers would claim that they are. He's only saying that there doesn't seem to be any obvious logical reason for why someone would need to have subjective experience, and that it needs to be explained why we seem to have subjective experience anyway.

When you say:

The more I think about qualia, the more I feel like the only meaning I can find in any of my subjective experiences is in how they relate to everything else in my head.

Then one could answer "but why couldn't a computer system just have this enum-like system that had all the properties which match your subjective experience, without having that subjective experience?"

Note that this is not claiming that your subjective experiences wouldn't be related to the behavior and functionality of your mind. They obviously are! But that doesn't explain why they are.

Replies from: Hazard

↑ comment by Hazard · 2020-06-09T15:15:10.265Z · LW(p) · GW(p)

Hmm, you did notice a point where I sorta simplified Chalmers to get the post done.

Then one could answer "but why couldn't a computer system just have this enum-like system that had all the properties which match your subjective experience, without having that subjective experience?"

This is near a question I do think is interesting. I'm starting to think there's a sliding scale of "amount of subjective experience" a thing can have. And I am very curious about "what sorts of things will and won't have X amount of subjective experience".

I guess my beef is that when it's framed as "But why does XYZ system entail qualia?" I infer that even if in the far future I had a SUPER detailed understanding of "tweak this and you get X more units of experience, if you don't have ABC any experience is impossible, LMN architecture is really helpful, but not necessary" that Chalmers would still be unimpressed and go "But why does any of this lead to qualia?"

Well, I don't actually think he'd say that. If I had that sorta detailed outline I think his mind would be blown and he'd be super excited.

But when I imagine the person who is still going "But why", I'm imagining that they must be thinking of qualia as this isolated, other, and separate thing.

Replies from: TAG, Kaj_Sotala

↑ comment by TAG · 2020-06-12T23:03:05.631Z · LW(p) · GW(p)

I guess my beef is that when it’s framed as “But why does XYZ system entail qualia?” I infer that even if in the far future I had a SUPER detailed understanding of “tweak this and you get X more units of experience, if you don’t have ABC any experience is impossible, LMN architecture is really helpful, but not necessary” that Chalmers would still be unimpressed and go “But why does any of this lead to qualia?”

How do you argue that no physical explanation, even an unknown future one, could explain qualia?

Chalmers has an argument of that sort. He is not just knee jerking. He characterises all physical explanations as being about structure and function. He thinks qualia are not susceptible to structural and functional explanation (not "isolated" or "separate").

↑ comment by Kaj_Sotala · 2020-06-16T11:29:11.365Z · LW(p) · GW(p)

I guess my beef is that when it's framed as "But why does XYZ system entail qualia?" I infer that even if in the far future I had a SUPER detailed understanding of "tweak this and you get X more units of experience, if you don't have ABC any experience is impossible, LMN architecture is really helpful, but not necessary" that Chalmers would still be unimpressed and go "But why does any of this lead to qualia?"

Well, I don't actually think he'd say that. If I had that sorta detailed outline I think his mind would be blown and he'd be super excited.

But when I imagine the person who is still going "But why", I'm imagining that they must be thinking of qualia as this isolated, other, and separate thing.

It's a little unclear from this description whether that understanding would actually solve the hard problem or not? Like, if we have a solution for it, then it would obviously be silly for someone to still say "but why"; but if that understanding actually doesn't solve the problem, then it doesn't seem particularly silly to continue asking the question. Whether or not asking it in that situation implies believing that qualia must be divorced from everything else - I couldn't tell without actually seeing an explanation of that understanding.

comment by TAG · 2020-06-12T22:13:31.035Z · LW(p) · GW(p)

So when I hear a claim that “subjective experience” and “qualia” are divorced from any and all behavior or functionality in the mind, I’m left with a sense that Chalmers is talking about something very different from my subjective experience, and what it seems like to be me. My subjective experience seems deeply integrated with my behavior and functioning.

That's playing on different meanings of "isolated" and "integrated". The claim that explanations of behaviour are not explanations of subjective experience does not by itself amount to the claim that subjective experience is epiphenomenal -- causally idle, not causing or influencing behaviour.

There is fertile ground for confusion here, because there are plausible grounds for considering Chalmers to be an epiphenomenalist -- but, importantly, his epiphenomenalism isn't an assumption of the hard problem, as he states it, nor an immediate consequence.

He argues at length that property dualism is the correct answer to the HP, and that would seem to imply epiphenomenalism: if physical properties are sufficient to explain behaviour, then mental properties have nothing to do. But that means epiphenomenalism is an indirect consequence of the HP.

comment by Adele Lopez (adele-lopez-1) · 2020-06-08T20:33:38.879Z · LW(p) · GW(p)

Yes! Subjective experience has a topology. The neighborhoods are just all the collections of qualia that feel some amount of similar, for any sort of amount that feels different. And the "shape" of the #2 color is different because it is not homeomorphic to the #1 color.

There's also (something like) a metric space structure on this topology, since different things feel different amounts of different. People seem to have variation in this while still having the same topological structure as others.

My hypothesis about the "mysterious redness of red" is that it feels striking in part because it has a more mathematically interesting/complex homotopy type, and that you could in principle give people entirely new qualia by arranging their neurons to experience a novel homotopy type.

comment by Steven Byrnes (steve2152) · 2020-06-08T22:28:55.205Z · LW(p) · GW(p)

Given that, I think I want to rename Chalmers' categories as the "Boring" and "Interesting" problem of consciousness.

Just to be sure, hard=boring and easy=interesting, right?

Replies from: Hazard

comment by Jarred Filmer (4thWayWastrel) · 2020-10-04T11:38:24.306Z · LW(p) · GW(p)

The "sexiness" of the hard problem of Consciousness to me is mainly in its relationship with morality and meaning.

For any hypothetical I can think of, whether or not the entities involved have a subjective experience (along with the concept of a valence/preferences) dominates any other consideration. In a way it seems like the "Hard Problem" aspect of Consciousness is the only thing that would give anything meaning, thus pointing to the hard problem as one of the most pressing questions for anyone trying to understand what's going on.

comment by Pattern · 2020-06-11T16:12:03.431Z · LW(p) · GW(p)

The idea goes that even if you explained how the brain does everything it does, you haven't explained why this doing is accompanied by subjective experience. It's rooted in this idea that subjective experience is somehow completely isolated from, and separate to behavior.

This supposed isolation is already a little suspicious to me.

I'm not sure that this "isolation" exists, either.

I think this idea is useful because it asks...how might you explain to a computer what makes ants different from people, or computers different from ants and people, or how anesthetic works? What is joy?

Or how could we tell if robots/aliens have subjective experience?

What if when we looked at the sky, and the ocean, we both used the English word "Blue", but you experienced what I experience when I look at what we both agree is called "Green"?

Related question: Do variations between individuals in terms of eyes have effects, beyond colorblind versus not?

comment by Signer · 2020-06-12T00:01:46.229Z · LW(p) · GW(p)

I don't get why it's still not mainstream that panpsychism with weak illusionism about "self" solves the Hard Problem. It's not even that unintuitive to think "I am made from the same stuff as rocks, therefore rocks are conscious". Any difference between rocks, bats, counties, you, and you when you were a meter to the left is of the easy, non-mysterious, ethical kind.

Replies from: TAG, Kaj_Sotala, Richard_Kennaway

↑ comment by TAG · 2020-06-12T18:30:35.729Z · LW(p) · GW(p)

I don’t get why it’s still not mainstream that panpsychism with weak illusionism about “self” solves the Hard Problem.

In the sense that shooting someone and also cutting off their head kills them. It's not clear why you would need both ... and there are good arguments against each.

What is hard about the hard problem is the requirement to explain consciousness, particularly conscious experience, in terms of a physical ontology. It's the combination of the two that makes it hard. Which is to say that the problem can be sidestepped by either denying consciousness, or adopting a non-physicalist ontology.

Examples of non-physical ontologies include dualism, panpsychism and idealism. These are not faced with the Hard Problem, as such, because they are able to say that subjective qualia just are what they are, without facing any need to offer a reductive explanation of them. But they have problems of their own, mainly that physicalism is so successful in other areas.

Eliminative materialism and illusionism, on the other hand, deny that there is anything to be explained, thereby implying there is no problem. But these approaches also remain unsatisfactory because of the compelling subjective evidence for consciousness.

Replies from: Signer

↑ comment by Signer · 2020-06-12T18:59:53.375Z · LW(p) · GW(p)

Well, the trick is that panpsychism is physicalist in a broad sense, as they say. After all, it's not like physicalists deny the concept of existence, and saying that the thing that is different between us and zombies, which we call "consciousness", is actually the thing that physicalists call "reality" does not make it unphysical and doesn't prevent physicalism from working where it worked before. It's all definitional anyway - if panpsychism solves everything, then it doesn't matter whether it is physicalist or not.

Replies from: TAG

↑ comment by TAG · 2020-06-12T20:42:16.797Z · LW(p) · GW(p)

If you make "physical" broad enough, it ceases to mean anything, and everything is compatible with it. That's not just a problem for panpsychism: physicalists are often in the position of fervently defending something they can only vaguely define. But if you try to make physicalism precise, it turns out that the concept of reductionism is the one doing the work: the idea that the only fundamental properties are physical ones, and all higher level properties must be explicable in terms of lower level ones.

if panpsychism solves everything, then it doesn’t matter whether it is physicalist or not.

Matters to whom? There's no shortage of people who would rather leave consciousness unexplained (or illusory or non-existent) than abandon physicalism.

↑ comment by Kaj_Sotala · 2020-06-16T11:11:37.908Z · LW(p) · GW(p)

Well, how do those solve the hard problem?

Replies from: Signer

↑ comment by Signer · 2020-06-23T10:32:59.722Z · LW(p) · GW(p)

The Hard Problem is basically "what part of the equation for wavefunction of the universe says that we are not zombies". The answer of panpsychism is "the part where we say that it is real". When you imagine waking up made of cold silicon and not feeling anything, you are imagining not existing.

Non-fundamental "self" is there just to solve decomposition problem - there is no isolation of qualia, just qualia of isolation. And it works because it is easier to argue that you can be wrong about some particular aspects of consciousness (like there being fundamentally distinct conscious "selfs", or the difference between your current experience of blue sky and your experience of the same blue sky in the past) than that you can be wrong about there being consciousness at all.

It doesn't answer what all the interesting differences between rocks and human brains are, but these differences are not "Hard" or mysterious - only the difference between zombies and us is "Hard". Interesting parts are just hard to answer because they depend on what you want to know. And if you want to know whether something has that basic spark of consciousness, then the answer is that everything has it.

Replies from: Kaj_Sotala

↑ comment by Kaj_Sotala · 2020-06-23T11:19:43.272Z · LW(p) · GW(p)

The Hard Problem is basically "what part of the equation for wavefunction of the universe says that we are not zombies". The answer of panpsychism is "the part where we say that it is real".

I don't think I understand. I would say that the Hard Problem is more "why and how do we have subjective experience, rather than experiencing nothing". If you say that "everything has it", that doesn't seem to answer the question - okay, everything is conscious, but why and how is everything conscious?

Replies from: Signer, Signer

↑ comment by Signer · 2020-06-23T13:53:11.562Z · LW(p) · GW(p)

Oh, and if by "why and how is everything conscious" you mean "why believe in panpsychism" and not "what causes consciousness in panpsychist view" then, first, it's less about how panpsychism solves The Hard Problem, and more about why accept this particular solution. So, moving goalposts and all that^^. I don't quite understand why someone would be so reluctant to accept any solution that is kinda physicalist and kinda non-epiphenomenal, considering people say that they don't even understand how a solution would look in principle. But there are reasons why panpsychism is the only acceptable solution: if consciousness influences the physical world, then it either requires new physics (including strong emergence), or it is present in everything. You can detect difference between different states of mind with just weak emergence, but only "cogito, ergo sum" doesn't also work in zombie world.

↑ comment by Signer · 2020-06-23T12:52:03.775Z · LW(p) · GW(p)

why and how do we have subjective experience, rather than experiencing nothing

Because we exist. "Because" not in the sense of causal dependency, but in the sense of equivalence. The point is that we have two concepts (existence and consciousness) that represent the same thing in reality. "Why they are the same" is equivalent to "why there is no additional "consciousness" thing" and that is just asking why reality is like it is. And it is not the same as saying "it's just the way world is, that we have subjective experience" right away - panpsychism additionally states that not only we have experience, and provides a place for consciousness in a purely physical worldview.

And for "how" - well, it's the question of the nature of existence, because there is no place for mechanism between existence and consciousness - they are just the same thing. So, for example, different physical configurations mean different (but maybe indistinguishable by agent) experiences. And not sure if it counts as "how", but equivalence between consciousness and existence means every specific aspect of consciousness can be analysed by usual scientific methods - "experience of seeing blue" can be emergent, while consciousness itself is fundamental.

I mean, sure, "why everything exists" is an open question, so it may seem like pointless redefinition. But if we started with two problems and ended with one, then one of them is solved.

Replies from: Richard_Kennaway

↑ comment by Richard_Kennaway · 2020-06-23T14:40:09.120Z · LW(p) · GW(p)

But if we started with two problems and ended with one, then one of them is solved.

You won't escape an excess baggage charge by putting both your suitcases into one big case.

Replies from: Signer

↑ comment by Signer · 2020-06-23T15:18:34.228Z · LW(p) · GW(p)

But the problems with existence don't become more severe because of merging of "existence" and "consciousness" concepts. On the contrary: before we didn't have any concrete idea of what it would mean to exist or not, but now we can at least use our intuitions about consciousness instead. And, on the other hand, all problematic aspects of consciousness (like surprising certainty about having it) are contained in existence.

Amusingly, I've just got back from a flight where I put my backpack into my bag, so I could use it for luggage on the return flight^^.

↑ comment by Richard_Kennaway · 2020-06-12T09:32:30.045Z · LW(p) · GW(p)

Well, I don't get how anyone can take panpsychism and illusory selves seriously, so there! :)

Replies from: Signer

↑ comment by Signer · 2020-06-12T14:07:09.651Z · LW(p) · GW(p)

Weakly illusory - meaning non-fundamental. Being illusionist about any consciousness at all I can see as problematic, but is "self is just an ethical construct" so controversial?

As for panpsychism, I think Strawson's argument pretty much doesn't leave viable alternatives: you can only choose between new physics, epiphenomenalism, panpsychism and strong illusionism ("there is no such thing as consciousness"). Epiphenomenalism requires coincidence ("I am conscious, I think I am conscious, but those facts aren't connected in any way"). And if your bet is for new physics that works only in brains (including strong emergence in sufficiently complex computations or whatever), well, good luck. That leaves illusionism, but even if you don't care what part of the Schrödinger equation says that we are not zombies, how is saying that there is no Hard Problem better than having a solution?

Again, all of it is just to solve the mysterious part - there are still differences and similarities between computational processes, and there even may be something mathematically interesting going on with attention/awareness (personally I think something like global workspace is right, because you need a tape for a Turing machine, and plenty of Python programs do the same thing).

Replies from: Richard_Kennaway, TAG

↑ comment by Richard_Kennaway · 2020-06-12T15:53:24.026Z · LW(p) · GW(p)

Weakly illusory - meaning non-fundamental

That makes of "weakly" a weasel word, that sucks the meaning out of the concept it is attached to. What would it be, for something to be or not be "fundamental"? You could argue (and some do) that nothing is fundamental, but then it says nothing of the self in particular that it is not "fundamental".

"self is just an ethical construct"

How did ethics come into it?

you can only choose between [list]

These are just some ideas that people have thought up. I don't have to choose any of them. I can simply say: neither I nor anyone else has any idea how to solve the hard problem. No-one even knows what a solution would look like. No-one even knows how there could be a solution. Yet here we are, stubbornly conscious in a world where everything we know about how things work has no place for it. That is the hard problem. Every purported solution I have seen amounts to either grabbing onto one side of the contradiction and insisting the other side is therefore false, or saying "la la la can't hear you" to the question.

Replies from: Signer

↑ comment by Signer · 2020-06-12T18:01:48.667Z · LW(p) · GW(p)

Well, it would be strange for "weakly" to strengthen, would it?^^ There still may be a difference between "self" not existing in the sense unicorns don't exist, and "self" not existing precisely in the way people hope it to exist. By "non-fundamental" I mean the way tables are non-fundamental as opposed to the universe - tables are just an approximate description of a part of the universe, where the universe itself is actually real. And you would only need approximate descriptions for their usefulness/utility/value - therefore ethics. I am not arguing for "fundamental/emergent" being a meaningful distinction here. Just that, never mind other things, "self" is more like a table.

I can simply say: neither I nor anyone else has any idea how to solve the hard problem. No-one even knows what a solution would look like. No-one even knows how there could be a solution. Yet here we are, stubbornly conscious in a world where everything we know about how things work has no place for it.

You can, but I argue you would be wrong - panpsychism is the solution and the place for the mysterious part of consciousness in how things work is in that these things are real. There are arguments for why there are no other options - whatever consciousness is, it either does or does not influence how neurons work, for example. And assuming panpsychism, there are answers to all of the usual questions about consciousness, like if you simulate a brain that feels pain, there would be an experience, but whether it is the same experience is an ethical question and therefore ultimately arbitrary. Well, all questions modulo open questions about existence, like what happens when your quantum measure decreases. I wouldn't describe it as any side of contradiction, as reality is kinda assumed in materialism, but on the other hand panpsychism says that consciousness is (fundamental feature of) existence... But anyway, it's not strictly implied by what you said, but is your main objection to panpsychism how it interacts with "self"?

Replies from: Richard_Kennaway

↑ comment by Richard_Kennaway · 2020-06-12T18:47:12.798Z · LW(p) · GW(p)

If selves exist in the same way that tables exist, that's good enough for me. *kicks table* There's nothing illusory about tables. Yes, they're made of parts, so are selves, but that doesn't make them illusions.

And assuming panpsychism, there are answers to all of the usual questions about consciousness

Here are a few questions:

How can I study the consciousness of a rock?

How can I compare the consciousness of a small rock vs. a big one?

What happens to the consciousness of an iceberg when it melts and mingles with the ocean?

Am I conscious when I am unconscious? When I am dead?

What observations could you show me that would surprise me, if I believed (as I do, for want of anything to suggest otherwise) that rocks and water have no consciousness at all?

is your main objection to panpsychism how it interacts with "self"?

My main objection to panpsychism is that it makes no observable predictions. It pretends to solve the problem of consciousness by simply attaching the word to everything.

Replies from: Signer↑ comment by Signer · 2020-06-12T20:41:30.747Z · LW(p) · GW(p)

Yeah, I agree that calling it illusionism was a bad idea.

How can I study the consciousness of a rock? How can I compare the consciousness of a small rock vs. a big one?

As in all these questions, it depends on whether you want to study the consciousness that the Hard Problem is about, or the "difference between conscious and unconscious" one. For the former it's just a study of physics - there is a difference between being a granite rock and a limestone rock. The experience would be different, but, of course, indistinguishable to the rock. If you want to study the latter one, you would need to decide what features you care about - similarity to computational processes in the brain, for example - and study them. You can conclude that a rock doesn't have any amount of that kind of consciousness, but there would still be a difference between a real rock and a rock zombie - in the zombie world, reassembling a rock into a brain wouldn't give it consciousness in the mysterious sense. I understand if this starts to sound like eliminativism at this point, but the whole point of non-ridiculous panpsychism is that it doesn't provide rocks with any human experiences like seeing red - the difference would be as large as you would expect between a rock and a human, but there still has to be an experience of being a rock, for any experience to not be epiphenomenal.

What happens to the consciousness of an iceberg when it melts and mingles with the ocean?

It melts and mingles with the ocean. EDIT: There is no need for two different languages, because there is only one kind of thing. When you say "I see the blue sky" you approximately describe a part of your brain.

Am I conscious when I am unconscious? When I am dead?

In the sense of the difference between zombies and us - yes, you would be having an experience of being dead. In the sense of there being relevant brain processes - no, if you don't want to bring in quantum immortality or dust theory.

What observations could you show me that would surprise me, if I believed (as I do, for want of anything to suggest otherwise) that rocks and water have no consciousness at all?

If you count logic as observation: that belief leads to contradiction. Well, "confusion" or whatever the Hard Problem is - if you didn't believe that, then there wouldn't be a Hard Problem. The surprising part is not that there is a contradiction - everyone expects contradictions when dealing with consciousness - it's that this particular belief is all you need to correct to clear up all the confusion. You're probably better off reading Strawson or Chalmers than listening to me, but it goes like this:

- Rocks and water have no consciousness at all.

- You can create a brain from rocks and water.

- Brains have consciousness.

- Only epiphenomenal things can emerge.

- Consciousness is not epiphenomenal.

It pretends to solve the problem of consciousness by simply attaching the word to everything.

Well, what parts of the problem are not solved by attaching the word to everything?

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2020-06-12T23:02:25.773Z · LW(p) · GW(p)

Well, what parts of the problem are not solved by attaching the word to everything?

All of it.

1. Rocks and water have no consciousness at all.

2. You can create a brain from rocks and water.

3. Brains have consciousness.

4. Only epiphenomenal things can emerge.

5. Consciousness is not epiphenomenal.

I agree with all of that except 4. (A piano "emerges" from putting together its parts. But there is nothing epiphenomenal about it, as anyone who has had a piano fall on them will know.) But it gets no farther to explaining consciousness.

If you count logic as observation: that belief leads to contradiction.

Logic as observation observes through the lens of an ontology. If the ontology is wrong, it doesn't matter how watertight the logic is.

Replies from: TAG, Signer↑ comment by TAG · 2020-06-12T23:08:02.208Z · LW(p) · GW(p)

I agree with all of that except 4. (A piano “emerges” from putting together its parts. But there is nothing epiphenomenal about it, as anyone who has had a piano fall on them will know.) But it gets no farther to explaining consciousness.

The charitable reading of 4 would be that the piano has no causal powers beyond those of its parts: it's a piano-shaped bunch of quarks and electrons that crushes you.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2020-06-12T23:26:21.102Z · LW(p) · GW(p)

A piano-shaped bunch of quarks and electrons is a piano. The causal powers of the piano are exactly the same as a piano-shaped bunch of quarks and electrons. Mentioning the quarks and electrons is doing no work, because we can talk of pianos without knowing anything about quarks and electrons.

It's the quarks and electrons that are epiphenomenal to the piano, not the other way round.

Replies from: TAG↑ comment by TAG · 2020-06-12T23:41:57.324Z · LW(p) · GW(p)

A piano-shaped bunch of quarks and electrons is a piano. The causal powers of the piano are exactly the same as a piano-shaped bunch of quarks and electrons. Mentioning the quarks and electrons is doing no work, because we can talk of pianos without knowing anything about quarks and electrons.

That's what I meant: if two things are identical, they have identical causal powers. The Signer/Strawson argument seems to be that nothing exists or causes anything unless it is strongly emergent.

Replies from: Signer↑ comment by Signer · 2020-06-13T00:08:02.110Z · LW(p) · GW(p)

It's less that I oppose ever using the words "exist" and "causes" for non-fundamental things, and more that doing so is what makes it vulnerable to the conceivability argument in the first place: the only causal power that a brain has and a rock hasn't comes from a different configuration of quarks in space, but the quarks are in the same places in the zombie world.

↑ comment by Signer · 2020-06-12T23:34:36.066Z · LW(p) · GW(p)

The Hard Problem, according to your description, is that there is no place for consciousness in how things work. Why, then, is making everything be that place not considered solving the problem?

And about emergence - what TAG said. I also strongly agree about the importance of the ontology.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2020-06-13T08:19:38.796Z · LW(p) · GW(p)

You can't "make everything be conscious". The thing we have experience of and call consciousness works however it works. It is present wherever it is present. It takes whatever different forms it takes. How it works, where it is present, and what forms it takes cannot be affected by pointing at everything and saying "it's conscious!"

Replies from: Signer↑ comment by Signer · 2020-06-23T15:56:07.571Z · LW(p) · GW(p)

Make in my mind. Of course you can't change reality by shuffling concepts. But the idea is that all the ways consciousness works that are problematic are separate from the other (easy) aspects of consciousness. So consciousness works how it worked before - you see clouds because something made your neurons activate in that pattern. You just recognise that the confusing parts of consciousness (which I think all boil down to the zombie argument) are actually what we call "existence".

↑ comment by TAG · 2020-06-12T18:35:38.940Z · LW(p) · GW(p)

Weakly illusory—meaning non-fundamental.

Well, panpsychism is the claim that consciousness is fundamental, or at least co-fundamental with material properties.

you can only choose between new physics, epiphenomenalism, panpsychism and strong illusionism (“there is no such thing as consciousness”).

Only? I would add dual-aspect neutral monism to that list ;-)

comment by Protagoras · 2020-06-09T01:35:52.479Z · LW(p) · GW(p)

Some components of experience, like colors, feel simple introspectively. The story of their functions is not remotely simple, so the story of their functions feels like it must be talking about a totally different thing from the obviously simple experience of the color. Though some people try to pretend this is more reasonable than it is by playing games and trying to define an experience as consisting entirely of how things seem to us and so as being incapable of being otherwise than it seems, this is just game playing; we are not that infallible on any subject, introspective or otherwise. The obvious solution, that what seems simple just turns out to be complicated and is in fact what the complicated functional story talks about, is surely the correct one. Don't let Chalmers' accent lull you into thinking he has some superior down under wisdom; listen to the equally accented Australian materialists!

Replies from: Hazard

comment by Pongo · 2020-06-08T22:08:45.457Z · LW(p) · GW(p)

From a very short interaction with my desk I've concluded it doesn't have subjective experience. After a few years of interacting with my dog, I'm still on the fence on if it has subjective experience.

I read you as suggesting something like "subjective experience seems to have behavioral consequences because interaction with something leads me to have beliefs about whether it has subjective experience". But I think when I reach such conclusions, I'm mostly going off priors that I got socially. Is it different for you?

Replies from: Hazard↑ comment by Hazard · 2020-06-09T14:49:16.473Z · LW(p) · GW(p)

I've defs got socially formed priors on what things do and don't have experience. And when I try to move past those priors and/or think "well, these priors came from somewhere, what were they originally tapping into?" I see that anyone making a judgement about this is doing so through what they could observe.

comment by djmooretx · 2020-06-08T20:33:46.534Z · LW(p) · GW(p)

Does everyone have the same "favorite color"?

No. As far as I'm concerned, that proves we do not all see the same colors.

My own favorite color has varied over my lifetime. I myself do not see "blue" and "green" in the same way I did forty years ago. Further, I generally find deep blues to be cool and soothing; but while the blue light from the sky does not bother me, the "blue" from "daylight" LED room lamps is piercing. The light from the blue LED pilot lamps on my computer and similar equipment is almost painful to me, despite being relatively deep--so much so that I had to cover them with white cloth tape blacked out with a felt tip marker to tone it down.

There's a store I pass on my way to work that I dislike because the red and blue LED display in its window fools me into thinking there's a pack of police cars there.

Color is not just a spectroscopic reading to a conscious being. Color also carries emotional weight. Colors mean things to people.

Replies from: Hazard↑ comment by Hazard · 2020-06-09T14:45:52.069Z · LW(p) · GW(p)

Yeps. This feels like seeing one's experience of color as all of the things it's connected to. You've got a unique set of associations to diff colors, and that makes your experience different.

What I've seen of the "hard problem of consciousness" is that it says "well yeah, but all those associations, all of what a color means to you, that's all separate from the qualia of the color", and that is the thing that I think is divorced from interesting stuff. All the things you mentioned are the interesting parts of the experience of color.

comment by Mitchell_Porter · 2020-06-10T11:59:13.925Z · LW(p) · GW(p)

The "problem of qualia" comes about for today's materialists because they a priori assume a highly geometrized ontology in which all that really exists are point particles located in space, vector-valued fields permeating space, and so on. When people were dualists of some kind, they recognized that there was a problem in how consciousness related to matter, but they could at least acknowledge the redness of red; the question was how the world of sensation and choice related to the world of atoms and physical causality.

Once you assume these highly de-sensualized physical ontologies are the *totality* of what exists, then most of the sensory properties that are evident in consciousness, are simply gone. You still have number in your ontology, you still have quantifiable properties, and thus we can have this discussion about code and numbers and names, but redness as such is now missing.

But if you allow "qualia", "phenomenal color", i.e. the color that we experience, to still exist in your ontology, then it can be the thing that has all those relations. Quantifiable properties of color like hue, saturation, lightness, can be regarded as fully real - the way a physicist may regard the quantifiable properties of a fundamental field as real - and not just as numbers encoded in some neural computing register.

I mention this because I believe it is the answer, when the poster says 'I still feel like there is this "extra" thing I'm experiencing ... I personally can't find any way to relate this isolated "qualia of redness" to anything else I care about'. Redness is cut off from the rest of your ontology, because your ontology is a priori without color. Historically that's how physics developed - some perceivable properties were regarded as 'secondary properties' like color, taste, smell, that are in the mind of the perceiver rather than in the external world; physical theories whose ontology only contains 'primary properties' like size, shape, and quantity were developed to explain the external world; and now they are supposed to explain the perceiver too, so there's nowhere left for the secondary properties to exist at all. Thus we went from the subjective world, to a dualistic world, to eliminative materialism.

But fundamental physics only tells you so much about the nature of things. It tells you that there are quantifiable properties which exist in certain relations to each other. It doesn't tell you that there is no such thing as actual redness. This is the real challenge in the ontology of consciousness, at least if you care about consistency with natural science: finding a way to interpret the physical ontology of the brain, so that actual color (and all the other phenomenological realities that are at odds with the de-sensualized ontology) is somewhere in there. I think it has to involve quantum mechanics, at least if you want monism rather than dualism; the classical billiard-ball ontology is too unlike the ontology of experience to be identified with it, whereas quantum formalism contains entities as abstract as Hilbert spaces (and everything built around them); there's a flexibility there which is hopefully enough to also correspond to phenomenal ontology directly. It may seem weird to suppose that there's some quantum subsystem of the brain which is the thing that is 'actually red'; but something has to be.

comment by TAG · 2020-06-12T22:30:33.219Z · LW(p) · GW(p)

It’s just that I personally can’t find any way to relate this isolated “qualia of redness” to anything else I care about.

Maybe not, but it's still of academic interest.

The physicalist paradigm has been successful in many areas, but has yet to win out entirely because of some recalcitrant problems. The alternatives to physicalism -- idealism, dualism, panpsychism, and so on -- get their traction, and retain what popularity they have, because of the mind-body problem. There are a few other issues, such as whether mathematical entities have an immaterial existence, and the status of physical law, but the mind-body problem is the big one. And the hard problem is the hardest part of the mind-body problem.

A lot of people care enough about preserving physicalism to come up with a stance on the HP, including extreme ones like illusionism.

comment by staticvars · 2020-06-11T23:56:28.916Z · LW(p) · GW(p)

Just as the parts of the brain more directly involved with vision take as input the electrical impulses that travel down the neural pathways that originate in rods and cones, some other parts of the brain take as input the signals coming from other parts of the brain. Do we just call the more direct ones experiences of the outside world, and the higher-level ones experiences of the brain?

Suppose we measured the signal level coming out of different people's neurons connected to the photoreceptors when exposed to Colors.ORANGE. Surely we all have slightly different numbers of rods and cones, they are all wired up slightly differently, their transmission rate depends on neurotransmitter levels, etc. All of these parts are connected in various ways to the brain, and the excitation interacts in complex ways, so that the Colors.ORANGE of today is never going to be exactly the same as the Colors.ORANGE of yesterday, but many of the same pathways are triggered.
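A minimal toy sketch of that last point, in the enum style of the original post. Only `Colors.ORANGE` comes from the post; the `BASELINE` numbers, the three "pathways", the noise level, and the threshold are all made-up assumptions for illustration, not a model of real neurons.

```python
import random
from enum import Enum


class Colors(Enum):
    ORANGE = "orange"
    BLUE = "blue"


# Hypothetical baseline activation of three made-up pathways per color.
BASELINE = {
    Colors.ORANGE: (0.9, 0.5, 0.1),
    Colors.BLUE: (0.1, 0.3, 0.9),
}


def perceive(color, rng, noise=0.05):
    """Return a slightly noisy activation pattern for a color (toy model)."""
    return tuple(level + rng.uniform(-noise, noise) for level in BASELINE[color])


def triggered(pattern, threshold=0.4):
    """Return the indices of pathways whose activation exceeds the threshold."""
    return {i for i, level in enumerate(pattern) if level > threshold}


rng = random.Random(0)  # fixed seed so the sketch is reproducible
yesterday = perceive(Colors.ORANGE, rng)
today = perceive(Colors.ORANGE, rng)

# The raw signals differ from one exposure to the next,
# but the same pathways end up being triggered.
print(yesterday != today)
print(triggered(yesterday) == triggered(today))
```

The enum label stays fixed while the underlying numbers drift, which is all the sketch is meant to show.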

I find the "hard problem" incredibly annoying to discuss with people, because with most people it is just a shape-shifting question for which there isn't even a possible answer. It seems like it is not hard, but rather just an insistence on drawing a distinction between neurons feeding in more directly from sensory inputs connected to the outside world, and those parts of the brain which are fed more by the activity generated in other parts of the brain. Why the difference between those counts as a problem is not clear to me at all.

I think about breathing. Usually you don't notice that you are breathing. But sometimes, when you are running, you might notice that your breathing has changed. The parts of the brain that observe breathing are just getting a constant signal most of the time, and it disappears. The network adapts to the input to the point where it becomes like nothing, much like the input of the eye adapts to not see the nose, but if we cover one eye, it appears. If we meditate, we focus on our normal breathing.

What does paying attention mean? Is that the hard problem? Is the hard problem just the nature of the hippocampus? If so, you could probably solve it with some kind of brain scan. Until then, who cares? Maybe the only people that care are those that experience the hard problem as a problem. To me, it's just what the brain is doing all of the time. There's no other layer of explanation needed.