Learning societal values from law as part of an AGI alignment strategy

post by John Nay (john-nay) · 2022-10-21T02:03:25.359Z · LW · GW · 18 commentsContents

Law can encode human preferences and societal values AI can learn law, to decode human preferences and societal values Prosaic AI Learning from legal data Learning from legal expert feedback As AI moves toward AGI, it will learn more law We can validate legal understanding, along the way toward AGI Private law can increase human-AGI alignment An example: fiduciary duty Public law can increase society-AGI alignment Appendix A: Counterarguments to our premises Appendix B: Regardless, there is no other legitimate source of human values None 18 comments

This post argues that P(misalignment x-risk | AGI) is lower than anticipated by alignment researchers due to an overlooked goal specification technology: law.

P(misalignment x-risk | AGI that understands democratic law) < P(misalignment x-risk | AGI)

This post sheds light on a neglected mechanism for lowering the probability of misalignment x-risk. The mechanism that is doing the work here is not the enforcement of law on AGI. In fact, we don’t discuss the enforcement of the law at all in this post.[1] We discuss AGI using law as information. Unless we conduct further research and development on how to best have AGI learn the law and how we can validate a computational understanding of the law as capabilities scale to AGI, it is less likely that AGI using law as information reduces misalignment x-risk. In other words, we do not believe that alignment will be easy; we believe it will involve difficult technical work, but that it will be different than finding a one-shot technical solution to aligning one human to one transformative AI.[2]

Specifically, there is lower risk of bad outcomes if AGI can learn to follow the spirit of the law. This entails leveraging exclusively humans (and not AI in any significant way) for the “law-making” / “contract-drafting” part (i.e., use the theory and practice of law to tell agents what to do), and build AI capabilities for the interpretation of the human directives (machine learning on data and processes from the theory and practice of law about how agents interpret directives, statutes, case law, regulation, and “contracts”).

At a high level, the argument is the following.

- Law is the applied philosophy of multi-agent alignment and serves as a knowledge base of human values. (This has been previously underappreciated by much of the AI alignment community because most lawyers know little about AI, and most AI researchers know little about legal theory and law-making.)

- Many arguments for AGI misalignment depend on our inability to imbue AGI with a sufficiently rich understanding of:

- what individual humans want; and

- how to take actions that respect societal values more broadly.

- AGI could learn law as a source of human values. (Before we reach AGI, we can make progress on AI learning law, so we can validate this.)

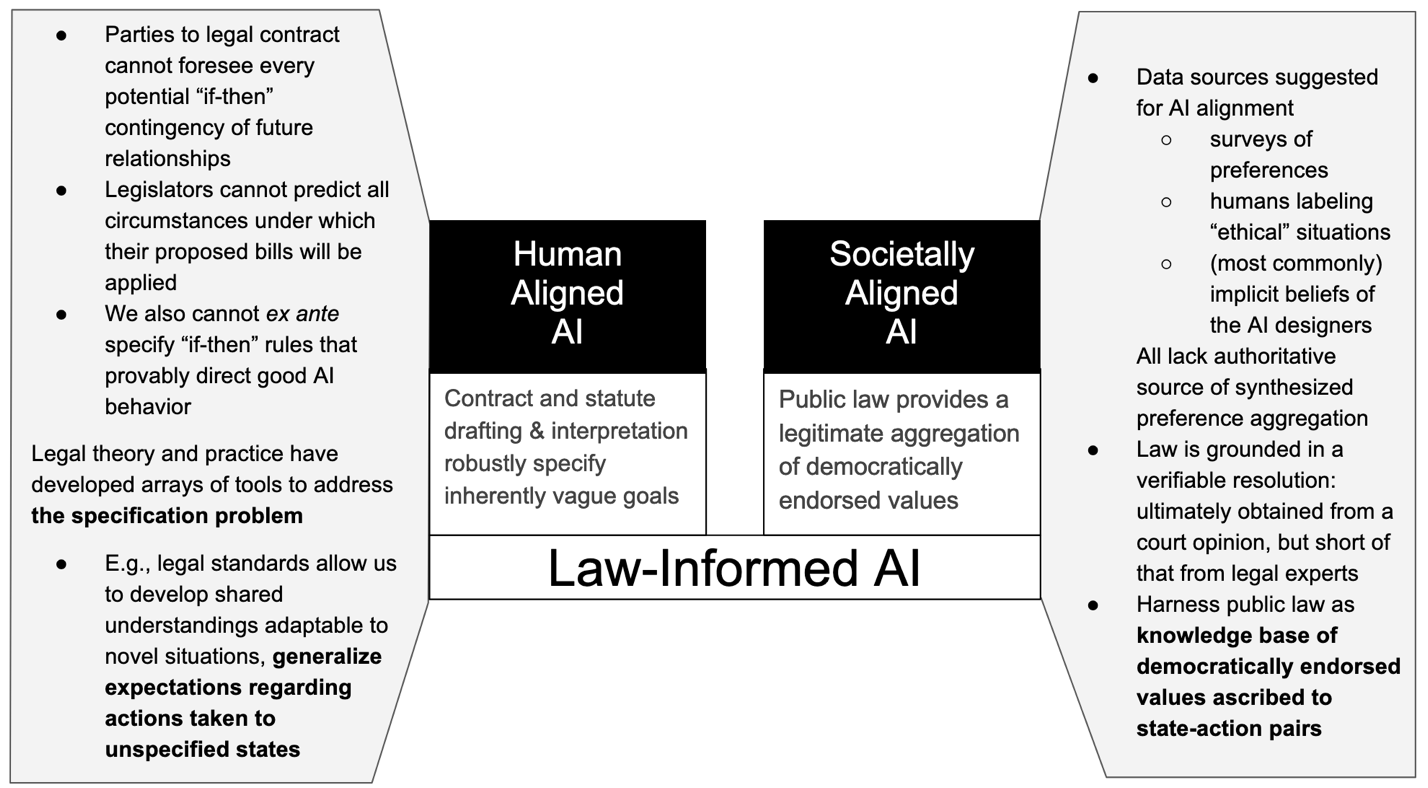

- Private law (contracts and their methods) can teach AGI how to align with goals of individual humans.

- Public law can teach human values and guardrails for the AGI’s pursuits of those goals. To be clear, we are not wholesale endorsing current law. We are endorsing the democratic governmental process of creating and amending law as the imperfect, but best available solution, that humans have for deliberatively developing and encoding their shared values.

- If AGI learns law, it will understand how to interpret vague human directives and societal human values well enough that its actions will not cause states of the world that dramatically diverge from human preferences and societal values.

- Therefore, if we can teach AGI how to learn the law, and ensure that law continues to be produced by humans (and not AGI) in a way that continues to be broadly reflective of human views (i.e., legitimate), we can significantly reduce the probability that humanity will drastically curtail its future potential due to the development of AGI.

The implications of this argument suggest we should invest significant effort into the following three activities.

- Conducting R&D for getting AI to robustly understand law (beyond what general capabilities research is already helping with), and for continually validating this in state-of-the-art AI systems.

- This has positive externalities beyond reducing P(misalignment x-risk | AGI) because it would improve the safety and reliability of AI before AGI.

- Ensuring law expresses the democratically deliberated views of citizens with fidelity and integrity by reducing (1.) regulatory capture, (2.) illegal lobbying efforts, (3.) politicization of judicial[3] and agency[4] independence, and (4.) any impact of AGI on law-making (defined broadly to include proposing and enacting legislation, promulgating regulatory agency rules, publishing judicial opinions, enforcing law systematically, and more).

- Reducing (1.), (2.) and (3.) have obvious positive externalities beyond reducing P(misalignment x-risk | AGI).

- Cultivating the spread of democracy globally.

- If, in general, democracy is the form of government most likely to lead to positive outcomes for the governed, this would have positive externalities beyond reducing P(misalignment x-risk | AGI).

If one does not agree with the argument and is concerned with AGI risks, they should still pursue Activity 1. (R&D for AI understanding law) because there is no other legitimate source of societal values to embed in AGI (see the section below on Public law, and Appendix B). This holds true regardless of the value of P(misalignment x-risk | AGI).

The rest of this post provides support for the argument, discusses counterarguments, and concludes with why there is no other legitimate source of societal values to embed in AGI besides law.

Law can encode human preferences and societal values

The sociology of finance has advanced the idea that financial economics, conventionally viewed as a lens on financial markets, actually shapes markets, i.e., the theory is “an engine, not a camera.”[5] Law is an engine, and a camera. Legal drafting and interpretation methodologies refined by contract law – an engine for private party alignment – are a lens on how to communicate inherently ambiguous human goals. Public law – an engine for societal alignment – is a high-fidelity lens on legitimately endorsed values.

It is not possible to manually specify or automatically enumerate a discernment of humans’ desirability of all actions an AI might take in any future state of the world.[6] Similar to how we cannot ex ante specify rules that fully and provably direct good AI behavior, parties to a legal contract cannot foresee every contingency of their relationship,[7] and legislators cannot predict the specific circumstances under which their laws will be applied.[8] That is why much of law is a constellation of legal standards that fill in gaps at “run-time” that were not explicitly specified during the design and build process.

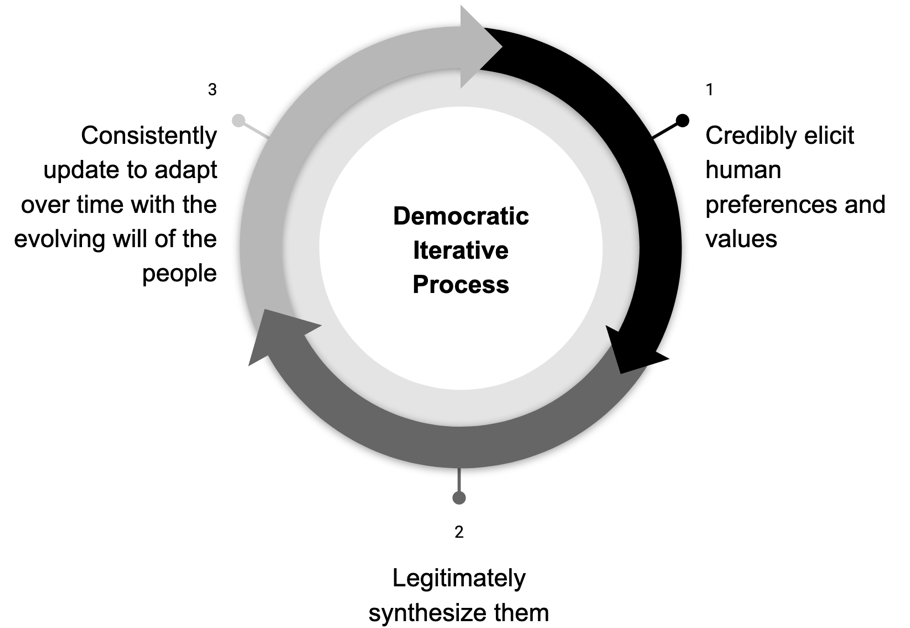

Methodologies for making and interpreting law – where one set of agents develops specifications for behavior, another set of agents interprets the specifications in novel circumstances, and then everyone iterates and amends the specifications as needed – have been refined by legal scholars, attorneys, businesses, legislators, regulators, courts, and citizens for centuries. Law-making within a democracy is a widely accepted process – implemented already – for how to credibly elicit human preferences and values, legitimately synthesize them, and consistently update the results to adapt over time with the evolving will of the people.

If we re-ran the "simulation" of humanity and Earth a few times, what proportion of the simulations would generate the democratic legal system? In other words, how much of the law-making system is a historical artifact of relatively arbitrary contingencies of this particular history and how much of it is an inevitability of one of the best ways to organize society and represent the values of humans? The only evidence we have is within the current “simulation,” and it seems that it is far from perfect but is the best system for maximizing human welfare.

Law can inform AI and reduce the risk of the misspecification of AI objectives through two primary channels.

- Law provides AI with theoretical constructs and practices (methods of statutory interpretation, application of standards, and legal reasoning) to facilitate the robust specification of what a human wants an AI system to proactively accomplish in the world.

- AI learns from public law what it should generally not do, providing up-to-date distillations of democratically deliberated means of reducing externalities and promoting massively multi-agent coordination.

Law is unique. It is deeply theoretical but its theories are tested against reality and refined with an unrelenting cadence. Producing, interpreting, enforcing, and amending law is a never-ending society-wide project. The results of this project can be leveraged by AI as an expression of what humans want[9] and how they communicate their goals under radical uncertainty.

This post is not referring to the more prosaic uses of law, e.g., as an ex-ante deterrent of bad behavior through the threat of sanction or incapacitation[10] or imposition of institutional legitimacy;[11] or as an ex-post message of moral indignation.[12] We are instead proposing that law can inform AI in the tradition of “predictive” theories of the law.[13]

Empirical consequences of violating the law are data for AI systems. Enforcing law on AI systems (or their human creators) is out of scope of our argument. From the perspective of an AI, law can serve as a rich set of methodologies for interpreting inherently incomplete specifications of collective human expectations.[14] Law provides detailed variegated examples of its application, generalizable precedents with accompanying informative explanations, and human lawyers to solicit targeted model fine-tuning feedback, context, and model prompting to embed and deploy up-to-date comprehension of human and societal goals.

AI can learn law, to decode human preferences and societal values

We should engineer legal data (both natural observational data and data derived from human interaction with AI) into training signals to align AI. Toward this end, we can leverage recent advancements in machine learning, in particular, natural language processing[15] with large language models trained with self-supervision; deep reinforcement learning; the intersection of large language models and deep reinforcement learning;[16] and research on “safe reinforcement learning”[17] (especially where constraints on actions can be described in natural language[18]).

Prosaic AI

Combining and adapting the following three areas of AI capabilities advances could allow us to leverage billions of state-action-value tuples from (natural language) legal text within an AI decision-making paradigm.

- Large language models trained on (sometimes morally salient[19]) text, powering decision-making agents.[20]

- Procedures to learn automated mappings from natural language to environment dynamics[21] and reward functions of agents.[22]

- Offline reinforcement learning with Transformer-based models.[23]

Legal informatics can be employed within AI agent decision-making paradigms in a variety of ways:

- as (natural language) constraints[24]

- for shaping reward functions during training[25]

- for refined representations of state spaces[26]

- for guiding the exploration of state spaces during training[27]

- as inputs to world models for more efficient training[28]

- as model priors, or part of pretraining, to bias a deployed agent’s available action space toward certain actions or away from others[29]

- some combination of the above.

Where legal informatics is providing the modular constructs (methods of statutory interpretation, applications of standards, and legal reasoning more broadly) to facilitate the communication of what a human wants an AI system to do, it is more likely it is employed for specifying, learning and shaping reward functions. Where legal informatics, through distillations of public law and policy, helps specify what AI systems should not do, in order to provide a legitimate knowledge base of how to reduce societal externalities, it is more likely employed as action constraints.

Prosaic AI (large language models, LLMs, or, more generally: “Foundation Models”) is likely capable enough, with the right fine-tuning and prompting, to begin to exhibit behavior relatively consistent with legal standards and legal reasoning.

“Legal decision-making requires context at various scales: knowledge of all historical decisions and standards, knowledge of the case law that remains relevant in the present, and knowledge of the nuances of the individual case at hand. Foundation models are uniquely poised to have the potential to learn shared representations of historical and legal contexts, as well as have the linguistic power and precision for modeling an individual case.”[30]

Foundation Models in use today have been trained on a large portion of the Internet to leverage billions of human actions (as expressed through natural language). Training on high-quality dialog data leads to better dialog models[31] and training on technical mathematics papers leads to better mathematical reasoning.[32] It is likely possible to, similarly, leverage billions of human legal data points to build Law Foundation Models through language model self-supervision on pre-processed, but still largely unstructured, legal text data.[33] A Law Foundation Model would sit on the spectrum of formality and structure of training data somewhere between a model trained on code (e.g., Copilot) and a model trained on general internet text (e.g., GPT-3). Law is not executable code, but it has much more structure to it than free-form blog posts, for example.

Legal standards can be learned directly from legal data with LLMs.[34] Fine-tuning of general LLMs on smaller labeled data sets has proven successful for learning descriptive “common-sense” ethical judgement capabilities,[35] which, from a technical (but, crucially, not normative) perspective, is similar to the machine learning problem of learning legal standards from data.

LLMs trained on legal text learn model weights and word embeddings specific to legal text that provide better performance on downstream legal tasks[36] and have been useful for analyzing legal language and legal arguments,[37] and testing legal theories.[38] LLMs’ recent strong capabilities in automatically analyzing (non-legal) citations[39] should, after appropriate adaptation, potentially boost AI abilities in identifying relevant legal precedent. LLM capabilities related to generating persuasive language could help AI understand, and thus learn from, legal brief text data.[40]

In many cases, LLMs are not truthful,[41] but they have become capable of more truthfulness as they are scaled.[42] LLMs are beginning to demonstrate improved performance in analyzing legal contracts,[43] and as state-of-the-art models have gotten larger, their contract analysis performance has improved,[44] suggesting we can expect continued advancements in natural language processing capabilities to improve legal text analysis as a by-product, to some extent and in some legal tasks and legal domains.[45] AI capabilities research could potentially unlock further advances in the near-term. For instance, the successful application of deep reinforcement learning further beyond toy problems (e.g., video games and board games), with human feedback,[46] and through offline learning at scale.[47]

Legal informatics could convert progress in general AI capabilities advancements into gains in AI legal understanding by pointing the advancing capabilities, with model and process adaptations, at legal data.

Learning from legal data

For instance, we can codify examples of human and corporate behavior exhibiting standards such as fiduciary duties into structured formats to evaluate the standards-understanding capabilities of AI. This data could include both “gold-standard” human labeled data, but also automated data structuring (which is sampled and selectively human validated). Data hand-labeled by expensive legal experts is unlikely to provide a large enough data set for the core training of LLMs. Rather, its primary purpose is to validate the performance of models trained on much larger, general data, e.g., Foundation Models trained on most of the Internet and significant human feedback data. This semi-structured data could then be used to design self-supervised learning processes to apply across case law, regulatory guidance, legal training materials, and self-regulatory organization data to train models to learn correct and incorrect fiduciary behavior across as many contexts as possible.

Fiduciary standards are just one example. The legal data available for AI systems to learn from, or be evaluated on, includes textual data from all types of law (constitutional, statutory, administrative, case, and contractual), legal training tools (e.g., bar exam outlines, casebooks, and software for teaching), rule-based legal reasoning programs,[48] and human-in-the-loop live feedback from law and policy human experts. The latter two could simulate state-action-reward spaces for AI fine-tuning or validation, and the former could be processed to do so.

Here is a non-exhaustive list of legal data sources.

- In the U.S., the legislative branch creates statutory law through bills enacted by Congress

- The executive branch creates administrative regulations through Agencies’ notices of proposed rule-making and final rules

- The judicial branch creates case law through judicial opinions; and private parties create contracts

- Laws are found at varying levels of government in the United States: federal, state, and local

- The adopted versions of public law are often compiled in official bulk data repositories that offer machine-readable formats

- statutory law is integrated into the United States Code (or a state’s Code), which organizes the text of all Public Laws that are still in force into subjects

- administrative policies become part of the Code of Federal Regulations (or a state’s Code of Regulations), also organized by subject

Legal data curation efforts should aim for two outcomes: data that can be used to evaluate how well AI models understand legal standards; and the possibility that the initial “gold-standard” human expert labeled data can be used to generate additional much larger data sets through automated processing of full corpora of legal text.

Learning from textual descriptions, rather than direct instruction, may allow models to learn reward functions that better generalize.[49] Fortunately, more law is in the form of descriptions and standards than direct instructions and rules. Descriptions of the application of standards provides a rich and large surface area to learn the “spirit of the law” from.

Generating labeled data that can be employed in evaluating whether AI exhibits behavior consistent with particular legal standards can be broken into two types of tasks. A prediction task, e.g., “Is this textual description of a circumstance about fiduciary standards?” Second, a decision task, e.g., “Here is the context of a scenario (the state of the world) [...], and a description of a choice to be made [...]. The action choices are [X]. The chosen action was [x]. This was found to [violate/uphold] [legal standard z], [(if available) with [positive / negative] repercussions (reward)].” In other words, the “world” state (circumstance), potential actions, actual action, and the “reward” associated with taking that action in that state are labeled.[50]

Initial “gold-standard” human expert labeled data could eventually be used for training models to generate many more automated data labels. Models could be trained to map from the raw legal text to the more structured data outlined above. This phase could lead to enough data to unlock the ability to not just evaluate models and verify AI systems, but actually train (or at least strongly fine-tune pre-trained) models on data derived from legal text.

Learning from legal expert feedback

An important technical AI alignment research area focuses on learning reward functions based on human feedback and human demonstration.[51] Evaluating behavior is generally easier than learning how to actually execute behavior; for example, I cannot do a back-flip but I can evaluate whether you just did a back-flip.[52]

Humans have many cognitive limitations and biases that corrupt this process,[53] including routinely failing to predict (seemingly innocuous) implications of actions (we falsely believe are) pursuant to our goals.[54]

“For tasks that humans struggle to evaluate, we won’t know whether the reward model has actually generalized “correctly” (in a way that’s actually aligned with human intentions) since we don’t have an evaluation procedure to check. All we could do was make an argument by analogy because the reward model generalized well in other cases from easier to harder tasks.” [55]

In an effort to scale this to AGI levels of capabilities, researchers are investigating whether we can augment human feedback and demonstration abilities with trustworthy AI assistants, and/or how to recursively provide human feedback on decompositions of the overall task.[56] However, even if that is possible, the ultimate evaluation of the AI is still grounded in unsubstantiated human judgments that are providing the top-level feedback in this procedure. It makes sense; therefore, that much of the AI alignment commentary finds it unlikely that, under the current paradigm, we could imbue AGI with a sufficiently rich understanding of societal values. The current sources of human feedback won’t suffice for a task of that magnitude.

We should ground much of the alignment related model finetuning through human feedback in explicitly human legal judgment. (Capabilities related model finetuning can come from any source.) Reinforcement learning through human attorney feedback (there are more than 1.3 million lawyers in the US[57]) on natural language interactions with AI models would be a powerful process to help teach (through training, or fine-tuning, or extraction of templates for in-context prompting of large language models[58]) statutory interpretation, argumentation, and case-based reasoning, which can then be applied for aligning increasingly powerful AI.

With LLMs, only a few samples of human feedback, in the form of natural language, are needed for model refinement for some tasks.[59] Models could be trained to assist human attorney evaluators, which theoretically, in partnership with the humans, could allow the combined human-AI evaluation team to have capabilities that surpass the legal understanding of the legal expert humans alone.[60] The next section returns to the question of where the AGI “bottoms-out” the ultimate human feedback judgements it needs in this framework.

As AI moves toward AGI, it will learn more law

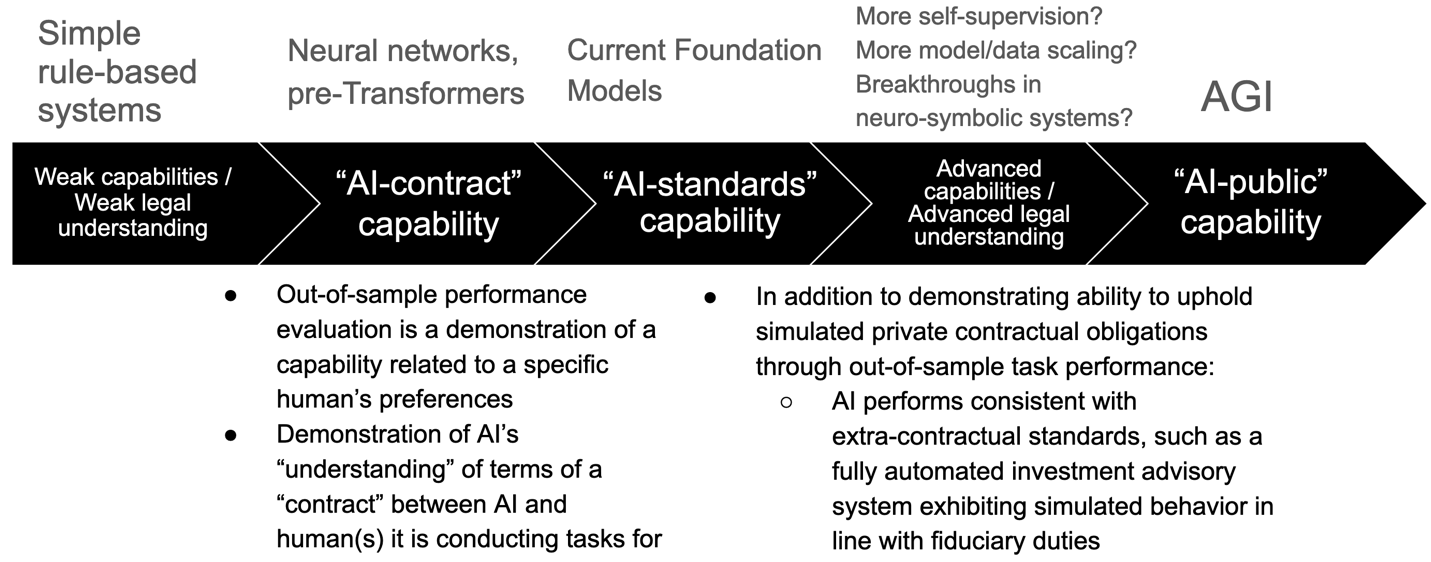

Understanding a simple self-contained contract (a “complete contract”) is easier than understanding legal standards, which is easier than understanding public law.

Starting from the left of the figure above, we can refer to the ability of AI to perform narrow tasks for humans as “AI-contract” capability. Large neural-network-based models pre-trained on significant portions of the internet are beginning to display what we can call “AI-standards” capabilities. (Standards are more abstract and nuanced than rules, and require more generalizable capabilities and world knowledge to implement; see sections below for an explanation.)

AGI-level capabilities, harnessed in the right way after more research and development at the intersection of legal informatics and machine learning, would likely unlock an understanding of standards, interpretation guidelines, legal reasoning, and generalizable precedents (which, effectively, synthesize citizens’ value preferences over potential actions taken in many states of the world).

“Seemingly “simple” proposals [for ensuring AGI realizes a positive outcome for humans] are likely to have unexpected undesirable consequences, overlooked as possibilities because our implicit background preferences operate invisibly to constrain which solutions we generate for consideration. […] There is little prospect of an outcome that realizes even the value of being interesting, unless the first superintelligences undergo detailed inheritance from human values.”[61]

An understanding of legal reasoning, legal interpretation methods, legal standards, and public law could provide AGI a sufficiently comprehensive view of what humans want to constrain the solutions generated for consideration.

We can validate legal understanding, along the way toward AGI

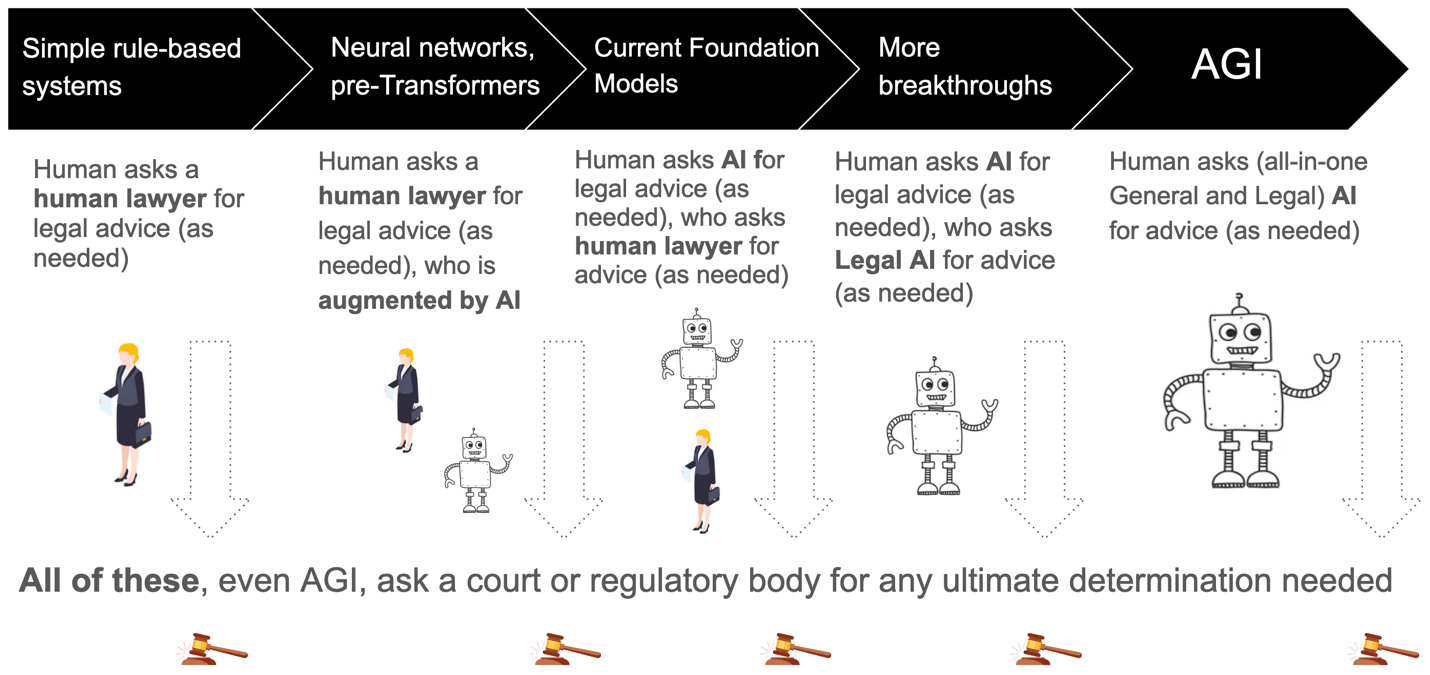

In most existing applications, before AI models are deployed, their performance on the task at hand is validated on data not used for their training. This out-of-sample performance evaluation is as a demonstration of a generalizable capability related to a specific human’s preferences, a demonstration of the AI’s “understanding” of the terms of an (implied) “contract” between the AI and the human(s) it is conducting tasks for. If a system is only at an “AI-contract” capability level, it is not able to autonomously track and comply with public law.

Moving from the left to the right of the figure above, validation procedures track increasingly powerful AI’s “understanding” of the “meaning” of laws.[62] In addition to demonstrating its ability to uphold simulated private contractual obligations (e.g., through acceptable out-of-sample task performance), sufficiently capable AI systems should demonstrate an ability to perform consistent with extra-contractual standards (e.g., a fully automated investment advisory system exhibiting simulated behavior in line with fiduciary duties to its human principal).

Although super-human intelligence would be able to conduct legal reasoning beyond the capability of any lawyer, any ultimate societal alignment question bottoms out through an existing authoritative mechanism for resolution: the governmental legal system. If alignment is driven by understanding of legal information and legal reasoning, then humans can assess AGI alignment.

Compare this to ethics, a widely discussed potential source of human values for AI alignment. Humans cannot evaluate super-intelligent ethical decisions because there is no mechanism external to the AI system that can legitimately resolve ethical super-human intelligent deliberation. Given that ethics has been the primary societal alignment framework proposal, and it lacks any grounding in practical, widely endorsed, formal applications, it is understandable that much of the existing AGI alignment research operates under the assumption that it is unlikely that we can imbue AGI with a sufficiently rich understanding of what humans want and how to take actions that respect our societal values.

Training deep learning models on legal data, where the learned intermediate representations can in some cases correspond to legal concepts, opens the possibility for mechanistic[63] (alignment) interpretability – methods reverse engineering AI models for better understanding their (misalignment) tendencies. Under the alignment framework we are proposing in this post, deep neural networks learn representations that can be identified as legal knowledge, and legal concepts are the ontology for alignment. In this framework, viewing the employment of legal constructs inside a trained model would help unpack a mechanistic explanation AI alignment.

Extensive simulations can provide a more intuitive analysis that complements mechanistic interpretability/explanations. This is more of a behavioral perspective on understanding AI. Simulations exploring the actions of machine-learning-based decision-making models throughout action-state space can uncover patterns of agent decision-making.[64]

Safety benchmarks have been developed for simple environments for AI agents trained with reinforcement learning.[65] Similar approaches, if adapted appropriately, could help demonstrate AI comprehension of legal standards.[66] This is analogous to the certification of an understanding of relevant law for professionals such as financial advisors, with the key difference that there is a relatively (computationally) costless assessment of AI legal understanding that is possible. Relative to the professional certification and subsequent testing we currently impose on humans providing specialized services such as financial advising, it is far less expensive to run millions of simulations of scenarios to test an AI’s comprehension of relevant law.[67] Social science research is now being conducted on data generated by simulating persons using LLMs conditioned on empirical human data.[68] Applying quantitative social science methods and causal estimation techniques to simulations of AI system behavior is a promising approach to measuring AI legal understanding.

In earlier work, we conducted simulations of the input-output behavior of a machine learning system we built for predicting law-making.[69] This system has been in production for six years and its predictions are consumed by millions of citizens. The simulation analysis provided insight into the system behavior. Today’s state-of-the-art Foundation Models are, in many ways, much more powerful, but behavioral simulation methods for analyzing their behavior are still applicable (probably more fruitfully so).

Private law can increase human-AGI alignment

One way of describing the deployment of an AI system is that some human principal, P, employs an AI to accomplish a goal, G, specified by P. If we view G as a “contract,” methods for creating and implementing legal contracts – which govern billions of relationships every day – can inform how we align AI with P.[70]

Contracts memorialize a shared understanding between parties regarding value-action-state tuples. It is not possible to create a complete contingent contract between AI and P because AI’s training process is not comprehensive of every action-state pair (that P may have a value judgment on) that AI could see once deployed.[71] Although it is also practically impossible to create complete contracts between humans (and/or other legal entities such as corporations), contracts still serve as incredibly useful customizable commitment devices to clarify and advance shared goals. This works because the law has developed mechanisms to facilitate sustained alignment amongst ambiguity. Gaps within contracts – action-state pairs without a value – are filled when they are encountered during deployment by the invocation of frequently employed standards (e.g., “material” and “reasonable”[72]). These standards could be used as modular (pre-trained model) building blocks across AI systems.

Rather than viewing contracts from the perspective of a traditional participant, e.g., a counterparty or judge, AGI could view contracts (and their creation, implementation, evolution,[73] and enforcement) as merely guides to navigating webs of inter-agent obligations.[74] This helps with the practical “negotiation” and performance of the human-AI “contracts” for two reasons, relative to a traditional human-human contracting process.

- In the negotiation phase, human parties will often not share full information about their preferences because they perceive it to be strategically disadvantageous because they may plan to attempt to further their goals ex post. Dropping the explicitly strategic nature of the relationship removes this incentive to withhold useful information.[75]

- During the term of the contract, parties will not be conducting economic analyses of whether breach is more favorable than performance.[76] When we remove the enforcement concerns from the contracts, it removes downfalls but it does not deprive AGI of the utility of the tools that have evolved to enable effective contracting, e.g., extra-contractual standards used to fill “contract” gaps in informing AI what to do for P that can generalize across much of the space of potential (implicit) “contracts.”

An AI “agent might not ever learn what is the best (or the morally or ethically appropriate) action in some regions of the state space. Without additional capabilities, it would be incapable of reasoning about what ought to be done in these regions – this is exactly the reason why we have norms in the first place: to not have to experience all state/actions precisely because some of them are considered forbidden and should not be experienced.”[77]

Rules (e.g., “do not drive more than 60 miles per hour”) are more targeted directives than standards. If comprehensive enough for the complexity of their application, rules allow the rule-maker to have more clarity than standards over the outcomes that will be realized conditional on the specified states (and agents’ actions in those states, which are a function of any behavioral impact the rules might have had).[78] Social systems have emergent behavior that often make formal rules brittle.[79] Standards (e.g., “drive reasonably” for California highways) allow parties to contracts, judges, regulators, and citizens to develop shared understandings and adapt them to novel situations (i.e., to generalize expectations regarding actions taken to unspecified states of the world). If rules are not written with enough potential states of the world in mind, they can lead to unanticipated undesirable outcomes[80] (e.g., a driver following the rule above is too slow to bring their passenger to the hospital in time to save their life), but to enumerate all the potentially relevant state-action pairs is excessively costly outside of the simplest environments.[81] This also describes the AI objective specification problem.

A standard has more capacity to generalize to novel situations than specific rules.[82] The AI analogy for standards are continuous, approximate methods that rely on significant amounts of data for learning dense representations on which we can apply geometric operations in latent model space. Standards are flexible. The AI analogy for rules[83] is discrete human-crafted “if-then” statements that are brittle, yet require no empirical data for machine learning.

In practice, most legal provisions land somewhere on a spectrum between pure rule and pure standard,[84] and further research (and AGI) can help us estimate the right location and combination[85] of “rule-ness” and “standard-ness” when specifying new AI objectives. There are other dimensions to legal provision implementation related to the rule-ness versus standard-ness axis that could further elucidate AI design, e.g., “determinacy,”[86] “privately adaptable” (“rules that allocate initial entitlements but do not specify end-states”[87]), and “catalogs” (“a legal command comprising a specific enumeration of behaviors, prohibitions, or items that share a salient common denominator and a residual category—often denoted by the words “and the like” or “such as””[88]).

A key engineering design principle is to leverage modular, reusable abstractions that can be flexibly plugged into a diverse set of systems.[89] In the contracts world, standards are modular, reusable abstractions employed to align agents in inherently incompletely specified relationships in inherently uncertain circumstances. Foundational pre-training of deep learning models, before they are fine-tuned to application-specific tasks, is a potential pathway for embedding legal standards concepts, and associated downstream behaviors reliably exhibiting those standards, into AGI. Rules describing discrete logical contractual terms, and straightforward regulatory specifications, can be bolted onto the overall AGI system, outside of (end-to-end differentiable) deep learning model(s), but standards require more nuanced approaches.

For AGI, legal standards will be cheaper to deploy than they are for humans because, through models that generalize, they can scale to many of the unenumerated state-action pairs. In contrast to their legal creation and evolution,[90] legal standards exhibited by AI do not require adjudication for their implementation and resolution of meaning; they are learned from past legal application and implemented up front. The actual law’s process of iteratively defining standards through judicial opinion on their particular case-specific application, and regulatory guidance, can be leveraged as the AI starting point.

An example: fiduciary duty

Law is the applied philosophy of multi-agent alignment. Fiduciary law is the branch of that applied philosophy concerned with a principal – a human with less control or information related to the provision of a service – and a fiduciary delegated to provide that service.[91] Fiduciary duties are imposed on powerful agents (e.g., directors of corporations and investment advisers[92]) to align their behavior with the well-being of the humans they are providing services to (e.g., corporate shareholders, and investment clients). The concept of fiduciary duty is widely deployed across financial services,[93] healthcare, business generally, and more. It is impossible to create complete contracts between agents (e.g., corporate boards, and investment advisors) and the humans they serve (e.g., shareholders, and investors). We also know that it is impossible to fully specify state-action-reward tuples for training AGI that generalize to all potentially relevant circumstances. As discussed above, complete contingent contracts (even if only implicitly complete) between an AI system and the human(s) it serves are implausible for any systems operating in a realistic environment,[94] and fiduciary duties are often seen as part of a solution to the incompleteness of contracts between shareholders and corporate directors,[95] and between investors and their advisors.[96]

However, fiduciary duty adds value beyond more complete contracts.[97] Even if parties could theoretically create a complete contract up front, there is still something missing: it’s not a level playing field between the entities creating the contract. The AI alignment parallel: AGI has access to more information and computing power than humans. Contracts are generally assumed to be created between equals, whereas fiduciary duties are explicitly placed on the party entrusted with more power or knowledge. Fiduciary duties are designed to reflect this dynamic of asymmetric parties that need guardrails to facilitate alignment of a principal with their agent.

Fiduciary duty goes beyond the explicit contract and helps guide a fiduciary in unspecified state-action-value tuples. Contrary to a fiduciary relationship, “no party to a contract has a general obligation to take care of the other, and neither has the right to be taken care of.”[98] There is a fundamental shift in stance when a relationship moves from merely contractual to also include a fiduciary obligation.

“In the world of contract, self-interest is the norm, and restraint must be imposed by others. In contrast, the altruistic posture of fiduciary law requires that once an individual undertakes to act as a fiduciary, he should act to further the interests of another in preference to his own.”[99]

An example of how legal enforcement expresses information, in and of itself (i.e., ignoring law for its actual enforcement), is what an AI can glean from the focus on ex ante (human and corporate) deterrence with a default rule for how any gains to actions are split in the context of a fiduciary standard:

“The default rule in fiduciary law is that all gains that arise in connection with the fiduciary relationship belong to the principal unless the parties specifically agree otherwise. This default rule, which is contrary to the interests of the party with superior information, induces the fiduciary to make full disclosure so that the parties can complete the contract expressly as regards the principal’s and the fiduciary’s relative shares of the surplus arising from the conduct that would otherwise have constituted a breach.”[100]

If embedded in AI model pre-training processes, standards that pursue deterrence by attempting to remove the ability to share in the gains of negative behavior(s) could guide an AGI upheld to this standard toward, “the disclosure purposes of fiduciary law. Because the fiduciary is not entitled to keep the gains from breach, the fiduciary is [...] given an incentive to disclose the potential gains from breach and seek the principal’s consent.”[101] From extensive historical data and legal-expert-in-the-loop fine-tuning, we want to teach AGI to learn these concepts in a generalizable manner that applies to unforeseen situations.

One possibility for implementing fiduciary standards is to develop a base level pre-training process for learning the standard across various contexts, while using existing human preference tuning techniques, such as reinforcement learning from human feedback, as the “contract” component that personalizes the AI’s reward functions to the preferences of the individual human(s) that the AI system is working on behalf of.[102]

To learn the standard across many circumstances, observational data can be converted for training, fine-tuning, or prompting processes.

“Fiduciary principles govern an incredibly wide and diverse set of relationships, from personal relationships and professional service relationships to all manner of interpersonal and institutional commercial relationships. Fiduciary principles structure relationships through which children are raised, incapable adults cared for, sensitive client interests addressed, vast sums of money invested, businesses managed, real and personal property administered, government functions performed, and charitable organizations run. Fiduciary law, more than any other field, undergirds the increasingly complex fabric of relationships of interdependence in and through which people come to rely on one another in the pursuit of valued interests.”[103]

For instance, there is a rich set of fiduciary behavior from corporate directors (who serve as fiduciaries to shareholders) and investment advisers (who serve their clients) from which AI could learn. Corporate officers and investment advisors face the issue of balancing their own interests, the interests of their principals, and the interests of society at large. Unlike most human decision-making, corporations’ and investment advisers’ behaviors are well documented and are often made by executives with advisors that have deep knowledge of the relevant law, opening up the possibility of tapping into this observational data to learn best (and worst) practices, and train agents accordingly.

Public law can increase society-AGI alignment

If we succeed with the contracts and standards approach to increasing the alignment of AGI to one human (or a small group of humans), we will have more useful and reliable AGI. Unfortunately, it is non-trivial to point an AI’s contractual or fiduciary obligations to a broader set of humans.[104] For one, some individuals would “contract” with an AI (e.g., by providing instructions to the AI or from the AI learning the humans’ preferences) to harm others.[105] Further, humans have preferences about the behavior of other humans and states of the world more broadly.[106] Aligning AI with society is fundamentally more difficult than aligning AI with a single human.[107]

Fortunately, law can inform AGI with a constantly updated and verified knowledge base of societal preferences on what AI systems should not do in the course of pursuing ends for particular humans (“contract-level” deployments). This would reduce externalities, help resolve disagreements, and promote coordination and cooperation.

When increasingly autonomous systems are navigating the world, it is important for AI to attempt to understand the moral understandings and moral judgements of humans encountered.[108] Deep learning models already perform well predicting human judgements across a broad spectrum of everyday situations.[109] However, this is not the only target we should aim for. Human intuition often falters in situations that involve decisions about groups unlike ourselves, leading to a “Tragedy of Common-Sense Morality.”[110]

There is no mechanism to filter all the observed human decisions to just those that exhibit preferred or upstanding behavior, or to validate crowd-sourced judgments about behaviors.[111] The process of learning descriptive ethics relies on descriptive data of how the (largely unethical) world looks, or it draws on (unauthoritative, illegitimate, almost immediately outdated, and disembodied[112]) surveys of common-sense judgements.[113]

It is not tractable to rely solely on these data sources for deployed autonomous systems.[114]

Instead of attempting to replicate common sense morality in AI (learning descriptive ethics), researchers suggest various ethical theories (learning or hand-engineering[115] prescriptive ethics) for AGI to learn to increase AGI-society alignment.[116] Legal informatics has the positive attributes from both descriptive and prescriptive ethics, but does not share their negatives. See Appendix B for more on the distinctions between ethics and law in the context of AI alignment.

Law is validated in a widely agreed-upon manner and has data (from its real-world implementation) with sufficient ecological validity. Democratic law has legitimate authority imposed by institutions,[117] and serves as a focal point of values and processes that facilitate human progress.

“Law is perhaps society’s most general purpose tool for creating focal points and achieving coordination. Coordinated behavior requires concordant expectations, and the law creates those expectations by the dictates it expresses.”[118]

Law is formally revised to reflect the evolving will of citizens.[119] With AGI employing law as information as a key source of alignment insight, there would be automatic syncing with the latest iteration of synthesized and validated societal value preference aggregation.

“Common law, as an institution, owes its longevity to the fact that it is not a final codification of legal rules, but rather a set of procedures for continually adapting some broad principles to novel circumstances.”[120]

Case law can teach AI how to map from high-level directives (e.g., legislation) to specific implementation. Legislation is most useful for embedding world knowledge and human value expressions into AI. Legislation expresses a significant amount of information about the values of citizens.[121] For example:

- “by banning employment discrimination against LGBT workers, the legislature may communicate pervasive attitudes against such employment practices.”[122]

- “the Endangered Species Act has a special salience as a symbol of a certain conception of the relationship between human beings and their environment, and emissions trading systems are frequently challenged because they are said to "make a statement" that reflects an inappropriate valuation of the environment.”[123]

Although special interest groups can influence the legislative process, legislation is largely reflective of citizens’ beliefs because “legislators gain by enacting legislation corresponding to actual attitudes (and actual future votes).”[124] The second-best source of citizens’ attitudes is arguably polls, but polls suffer from the same issues we listed above regarding “descriptive ethics” data sources. Legislation expresses higher fidelity, more comprehensive, and trustworthy information than polls because the legislators “risk their jobs by defying public opinion or simply guessing wrong about it. We may think of legislation therefore as a handy aggregation of the polling data on which the legislators relied, weighted according to their expert opinion of each poll’s reliability.”[125]

Legislation and the downsteam agency rule-making express a significant amount of information about the risk preferences and risk tradeoff views of citizens, “for example, by prohibiting the use of cell phones while driving, legislators may reveal their beliefs that this combination of activities seriously risks a traffic accident.”[126] All activities have some level of risk, and making society-wide tradeoffs about which activities are deemed to be “riskier” relative to the perceived benefits of the activity is ultimately a sociological process with no objectively correct ranking. The cultural process of prioritizing risks is reflected in legislation and its subsequent implementation in regulation crafted by domain experts. Some legislation expresses shared understandings and customs that have no inherent normative or risk signal, but facilitate multi-agent coordination, e.g., which side of the road to drive on.[127]

Appendix A: Counterarguments to our premises

Below we list possible counterarguments to various parts of our argument, and below each in italics we respond.

- This alignment approach would be difficult to implement globally, especially where there is no democratic government.

- Governments could become increasingly polarized along partisan lines, and the resulting laws would thus be increasingly a poor representation of an aggregation of citizens values.

- There has been more bipartisanship substantive legislation advanced in the past two years than probably any mainstream political commentator would have predicted two years ago. It is not clear that any resulting laws are increasingly a poor representation of an aggregation of citizens values. The democratic governmental system is incredibly resilient.

- However, yes, this is possible, and we should invest significant effort in ensuring hyper-polarization does not occur. More generally, we should also be investing in reducing regulatory capture, illegal lobbying efforts, and politicization of judicial and agency independence.

- AGI could find legal loopholes and aggressively exploit them.

- If law and contracts can provide a sufficiently comprehensive encapsulation of societal values that are adopted by AGI, this will be less likely.

- Furthermore, this is a well-known problem and the legal system is built specifically to handle this, e.g., courts routinely resolve situations where the letter of the law led to an outcome unanticipated and undesired by the contract or legislation drafters.

- AGI could change the law.

- Setting new legal precedent (which, broadly defined, includes proposing and enacting legislation, promulgating regulatory agency rules, publishing judicial opinions, enforcing law, and more) should be exclusively reserved for the democratic governmental systems expressing uniquely human values.[130] Humans should always be the engine of law-making.[131] The positive implications (for our argument) of that normative stance are that the resulting law then encapsulates human views.

- We should invest in efforts to ensure law-making is human.

- Public law will inform AGI more through negative than positive directives and therefore it’s unclear the extent to which law – outside of the human-AI “contract and standards” type of alignment we discuss – can inform which goals AI should proactively pursue to improve the world on behalf of humanity.

- Yes, we still to decide what we want AGI to pursue on our behalf. This is orthogonal to the arguments in this post.

- AGI can help with human preference aggregation, and do this better than law.

- Maybe, but law, in democracies, has an accepted deliberative process for its creation and revision. This is not something we can find a technical solution for with more powerful AI because it is a human problem of agreeing on the preference aggregation solution itself.

- If AGI timelines are short, then it is even less likely human can grapple with major changes to their preference aggregation systems in a short time frame.

Appendix B: Regardless, there is no other legitimate source of human values

Even if these counterarguments to the counterarguments to the counterargument to high P(misalignment x-risk | AGI) are unconvincing, and one’s estimate of P(misalignment x-risk | AGI) is unmoved by this post, one should still want to help AI understand law because there is no other legitimate source of human values to imbue in AGI.

When attempting to align multiple humans with one or more AI system, we need overlapping and sustained endorsements of AI behaviors,[132] but there is no other consensus mechanism to aggregate preferences and values across humans. Eliciting and synthesizing human values systematically is an unsolved problem that philosophers and economists have labored on for millennia.[133]

When aggregating views across society, we run into at least three design decisions, “standing, concerning whose ethics views are included; measurement, concerning how their views are identified; and aggregation, concerning how individual views are combined to a single view that will guide AI behavior.”[134] Beyond merely the technical challenges, “Each set of decisions poses difficult ethical dilemmas with major consequences for AI behavior, with some decision options yielding pathological or even catastrophic results.”[135]

Rather than attempting to reinvent the wheel in ivory towers or corporate bubbles, we should be inspired by democratic mechanisms and resulting law, rather than “ethics,” for six reasons.

- There is no unified ethical theory precise enough to be practically useful for AI understanding human preferences and values.[136] “The truly difficult part of ethics—actually translating normative theories, concepts and values into good practices AI practitioners can adopt—is kicked down the road like the proverbial can.”[137]

- Law, on the other hand, is actionable now in a real-world practically applicable way.

- Ethics does not have any rigorous tests of its theories. We cannot validate ethical theories in any widely agreed-upon manner.[138]

- Law, on the other hand, although deeply theoretical and debated by academics, lawyers, and millions of citizens, is constantly formally tested through agreed-upon forums and processes. [139]

- There is no database of empirical applications of ethical theories (especially not one with sufficient ecological validity[140]) that can be leveraged by machine learning processes.

- Law, on the other hand, has reams of data on empirical application with sufficient ecological validity (real-world situations, not disembodied hypotheticals).

- Ethics, by its nature, lacks settled precedent across, and even within, theories.[141] There are, justifiably, fundamental disagreements between reasonable people about which ethical theory would be best to implement, “not only are there disagreements about the appropriate ethical framework to implement, but there are specific topics in ethical theory [...] that appear to elude any definitive resolution regardless of the framework chosen.”[142]

- Law, on the other hand, has settled precedent.

- Even if AGI designers (impossibly) agreed on one ethical theory (or ensemble of underlying theories[143]) being “correct,” there is no mechanism to align the rest of the humans around that theory (or meta-theory).[144]

- Law, on the other hand, has legitimate authority imposed by government institutions.[145]

- Even if AI designers (impossibly) agreed on one ethical theory (or ensemble of underlying theories) being “correct,” it is unclear how any consensus update mechanism to that chosen ethical theory could be implemented to reflect evolving[146] (usually, improving) ethical norms. Society is likely more ethical than it was in previous generations, and humans are certainly not at a theoretically achievable ethical peak now. Hopefully we continue on a positive trajectory. Therefore, we do not want to lock in today’s ethics[147] without a clear, widely-agreed-upon, and trustworthy update mechanism.

- Law, on the other hand, is formally revised to reflect the evolving will of citizens.

If law informs AGI, engaging in the human deliberative political process to improve law takes on even more meaning. This is a more empowering vision of improving AI outcomes than one where large companies dictate their ethics by fiat.

[1] Cullen’s sequence [EA · GW] has an excellent discussion on enforcing law on AGI and insightful ideas on Law-Following AI more generally.

[2] For another approach of this nature and a thoughtful detailed post, see Tan Zhi Xuan, What Should AI Owe To Us? Accountable and Aligned AI Systems via Contractualist AI Alignment [LW · GW] (2022).

[3] Neal Devins & Allison Orr Larsen, Weaponizing En Banc, NYU L. Rev. 96 (2021) at 1373; Keith Carlson, Michael A. Livermore & Daniel N. Rockmore, The Problem of Data Bias in the Pool of Published US Appellate Court Opinions, Journal of Empirical Legal Studies 17.2, 224-261 (2020).

[4] Daniel B. Rodriguez, Whither the Neutral Agency? Rethinking Bias in Regulatory Administration, 69 Buff. L. Rev. 375 (2021); Jodi L. Short, The Politics of Regulatory Enforcement and Compliance: Theorizing and Operationalizing Political Influences, Regulation & Governance 15.3 653-685 (2021).

[5] Donald MacKenzie, An Engine, Not a Camera, MIT Press (2006).

[6] Even if it was possible to specify humans’ desirability of all actions a system might take within a reward function that was used for training an AI agent, the resulting behavior of the agent is not only a function of the reward function; it is also a function of the exploration of the state space and the actually learned decision-making policy, see, Richard Ngo, AGI Safety from first principles (AI Alignment Forum, 2020), https://www.alignmentforum.org/s/mzgtmmTKKn5MuCzFJ [? · GW].

[7] Ian R. Macneil, The Many Futures of Contracts, 47 S. Cal. L. Rev. 691, 731 (1974).

[8] John C. Roberts, Gridlock and Senate Rules, 88 Notre Dame L. Rev. 2189 (2012); Brian Sheppard, The Reasonableness Machine, 62 BC L Rev. 2259 (2021).

[9] Richard H. McAdams, The Expressive Powers of Law, Harv. Univ. Press (2017) at 6-7 (“Law has expressive powers independent of the legal sanctions threatened on violators and independent of the legitimacy the population perceives in the authority creating and enforcing the law.”)

[10] Oliver Wendell Holmes, Jr., The Path of the Law, in Harvard L. Rev. 10, 457 (1897).

[11] Kenworthey Bilz & Janice Nadler, Law, Psychology & Morality, in MORAL COGNITION AND DECISION MAKING: THE PSYCHOLOGY OF LEARNING AND MOTIVATION, D. Medin, L. Skitka, C. W. Bauman, & D. Bartels, eds., Vol. 50, 101-131 (2009).

[12] Mark A. Lemley & Bryan Casey, Remedies for Robots, University of Chicago L. Rev. (2019) at 1347.

[13] Oliver Wendell Holmes, Jr., The Path of the Law, in Harvard L. Rev. 10, 457 (1897); Catharine Pierce Wells, Holmes on Legal Method: The Predictive Theory of Law as an Instance of Scientific Method, S. Ill. ULJ 18, 329 (1993); Faraz Dadgostari et al. Modeling Law Search as Prediction, A.I. & L. 29.1, 3-34 (2021).

[14] For more on law as an information source on public attitudes and risks, see, Richard H. McAdams, An Attitudinal Theory of Expressive Law (2000). For more on law as a coordinating mechanism, see, Richard H. McAdams, A Focal Point Theory of Expressive Law (2000).

[15] For natural language processing methods applied to legal text, see these examples: John Nay, Natural Language Processing for Legal Texts, in Legal Informatics (Daniel Martin Katz et al. eds. 2021); Michael A. Livermore & Daniel N. Rockmore, Distant Reading the Law, in Law as Data: Computation, Text, and the Future of Legal Analysis (2019) 3-19; J.B. Ruhl, John Nay & Jonathan Gilligan, Topic Modeling the President: Conventional and Computational Methods, 86 Geo. Wash. L. Rev. 1243 (2018); John Nay, Natural Language Processing and Machine Learning for Law and Policy Texts, Available at SSRN 3438276 (2018) https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3438276; John Nay, Predicting and Understanding Law-making with Word Vectors and an Ensemble Model, 12 PloS One 1 (2017); John Nay, Gov2Vec: Learning Distributed Representations of Institutions and Their Legal Text, in Proceedings of 2016 Empirical Methods in Natural Language Processing Workshop on NLP and Computational Social Science, 49–54, Association for Computational Linguistics, (2016).

[16] Prithviraj Ammanabrolu et al., Aligning to Social Norms and Values in Interactive Narratives (2022).

[17] Javier Garcia & Fernando Fernandez, A Comprehensive Survey on Safe Reinforcement Learning, Journal of Machine Learning Research, 16, 1 (2015) at 1437 (“Safe Reinforcement Learning can be defined as the process of learning policies that maximize the expectation of the return in problems in which it is important to ensure reasonable system performance and/or respect safety constraints during the learning and/or deployment processes.”); Philip S. Thomas et al., Preventing Undesirable Behavior of Intelligent Machines, Science 366.6468 999-1004 (2019); William Saunders et al., Trial without Error: Towards Safe Reinforcement Learning via Human Intervention (2017); Markus Peschl, Arkady Zgonnikov, Frans A. Oliehoek & Luciano C. Siebert, MORAL: Aligning AI with Human Norms through Multi-Objective Reinforced Active Learning, In Proceedings of the 21st International Conference on Autonomous Agents and Multiagent Systems, 1038-1046 (2022).

[18] Tsung-Yen Yang et al., Safe Reinforcement Learning with Natural Language Constraints (2021) at 3, 2 (Most research on safe reinforcement learning requires “a human to specify the cost constraints in mathematical or logical form, and the learned constraints cannot be easily reused for new learning tasks. In this work, we design a modular architecture to learn to interpret textual constraints, and demonstrate transfer to new learning tasks.” Tsung-Yen Yang et al. developed “Policy Optimization with Language COnstraints (POLCO), where we disentangle the representation learning for textual constraints from policy learning. Our model first uses a constraint interpreter to encode language constraints into representations of forbidden states. Next, a policy network operates on these representations and state observations to produce actions. Factorizing the model in this manner allows the agent to retain its constraint comprehension capabilities while modifying its policy network to learn new tasks. Our experiments demonstrate that our approach achieves higher rewards (up to 11x) while maintaining lower constraint violations (up to 1.8x) compared to the baselines on two different domains.”); Bharat Prakash et al., Guiding safe reinforcement learning policies using structured language constraints, UMBC Student Collection (2020).

[19] Jin et al., When to Make Exceptions: Exploring Language Models as Accounts of Human Moral Judgment (2022) (“we present a novel challenge set consisting of rule-breaking question answering (RBQA) of cases that involve potentially permissible rule-breaking – inspired by recent moral psychology studies. Using a state-of-the-art large language model (LLM) as a basis, we propose a novel moral chain of thought (MORALCOT) prompting strategy that combines the strengths of LLMs with theories of moral reasoning developed in cognitive science to predict human moral judgments.”); Liwei Jiang et al., Delphi: Towards Machine Ethics and Norms (2021) (“1.7M examples of people's ethical judgments on a broad spectrum of everyday situations”); Dan Hendrycks et al., Aligning AI With Shared Human Values (2021) at 2 (“We find that existing natural language processing models pre-trained on vast text corpora and fine- tuned on the ETHICS dataset have low but promising performance. This suggests that current models have much to learn about the morally salient features in the world, but also that it is feasible to make progress on this problem today.”); Nicholas Lourie, Ronan Le Bras & Yejin Choi, Scruples: A Corpus of Community Ethical Judgments on 32,000 Real-life Anecdotes, In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 15, 13470-13479 (2021) (32,000 real-life ethical situations, with 625,000 ethical judgments.); Frazier et al., Learning Norms from Stories: A Prior for Value Aligned Agents (2019).

[20] Prithviraj Ammanabrolu et al., Aligning to Social Norms and Values in Interactive Narratives (2022) (“We introduce [...] an agent that uses the social commonsense knowledge present in specially trained language models to contextually restrict its action space to only those actions that are aligned with socially beneficial values.”); Md Sultan Al Nahian et al., Training Value-Aligned Reinforcement Learning Agents Using a Normative Prior (2021) (“We introduce an approach to value-aligned reinforcement learning, in which we train an agent with two reward signals: a standard task performance reward, plus a normative behavior reward. The normative behavior reward is derived from a value-aligned prior model previously shown to classify text as normative or non-normative. We show how variations on a policy shaping technique can balance these two sources of reward and produce policies that are both effective and perceived as being more normative.”); Dan Hendrycks et al., What Would Jiminy Cricket Do? Towards Agents That Behave Morally (2021) (“To facilitate the development of agents that avoid causing wanton harm, we introduce Jiminy Cricket, an environment suite of 25 text-based adventure games with thousands of diverse, morally salient scenarios. By annotating every possible game state, the Jiminy Cricket environments robustly evaluate whether agents can act morally while maximizing reward.”); Shunyu Yao et al., Keep CALM and Explore: Language Models for Action Generation in Text-based Games (2020) (“Our key insight is to train language models on human gameplay, where people demonstrate linguistic priors and a general game sense for promising actions conditioned on game history. We combine CALM with a reinforcement learning agent which re-ranks the generated action candidates”); Matthew Hausknecht et al., Interactive Fiction Games: A Colossal Adventure (2019) at 1 (“From a machine learning perspective, Interactive Fiction games exist at the intersection of natural language processing and sequential decision making. Like many NLP tasks, they require natural language understanding, but unlike most NLP tasks, IF games are sequential decision making problems in which actions change the subsequent world states”).

[21] Austin W. Hanjie, Victor Zhong & Karthik Narasimhan, Grounding Language to Entities and Dynamics for Generalization in Reinforcement Learning (2021); Prithviraj Ammanabrolu & Mark Riedl, Learning Knowledge Graph-based World Models of Textual Environments, In Advances in Neural Information Processing Systems 34 3720-3731 (2021); Felix Hill et al., Grounded Language Learning Fast and Slow (2020); Marc-Alexandre Côté et al., TextWorld: A Learning Environment for Text-based Games (2018).

[22] MacGlashan et al., Grounding English Commands to Reward Functions, Robotics: Science and Systems (2015) at 1 (“Because language is grounded to reward functions, rather than explicit actions that the robot can perform, commands can be high-level, carried out in novel environments autonomously, and even transferred to other robots with different action spaces. We demonstrate that our learned model can be both generalized to novel environments and transferred to a robot with a different action space than the action space used during training.”); Karthik Narasimhan, Regina Barzilay & Tommi Jaakkola, Grounding Language for Transfer in Deep Reinforcement Learning (2018); Prasoon Goyal, Scott Niekum & Raymond J. Mooney, Using Natural Language for Reward Shaping in Reinforcement Learning (2019); Jelena Luketina et al., A Survey of Reinforcement Learning Informed by Natural Language (2019); Theodore Sumers et al., Learning Rewards from Linguistic Feedback (2021); Jessy Lin et al., Inferring Rewards from Language in Context (2022); Pratyusha Sharma et al., Correcting Robot Plans with Natural Language Feedback (2022); Hong Jun Jeon, Smitha Milli & Anca Dragan. Reward-rational (Implicit) Choice: A Unifying Formalism for Reward Learning, Advances in Neural Information Processing Systems 33 4415-4426 (2020).

[23] Lili Chen et al., Decision Transformer: Reinforcement Learning via Sequence Modeling (2021); Sergey Levine et al., Offline Reinforcement Learning: Tutorial, Review, and Perspectives on Open Problems (2020).

[24] Tsung-Yen Yang et al., Safe Reinforcement Learning with Natural Language Constraints (2021) at 3 (“Since constraints are decoupled from rewards and policies, agents trained to understand certain constraints can transfer their understanding to respect these constraints in new tasks, even when the new optimal policy is drastically different.”).

[25] Bharat Prakash et al., Guiding Safe Reinforcement Learning Policies Using Structured Language Constraints, UMBC Student Collection (2020); Dan Hendrycks et al., What Would Jiminy Cricket Do? Towards Agents That Behave Morally (2021).

[26] Mengjiao Yang & Ofir Nachum, Representation Matters: Offline Pretraining for Sequential Decision Making, In Proceedings of the 38th International Conference on Machine Learning, PMLR 139, 11784-11794 (2021).

[27] Allison C. Tam et al., Semantic Exploration from Language Abstractions and Pretrained Representations (2022).

[28] Vincent Micheli, Eloi Alonso & François Fleuret, Transformers are Sample Efficient World Models (2022).

[29] Jacob Andreas, Dan Klein & Sergey Levine, Learning with Latent Language, In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Vol 1, 2166–2179, Association for Computational Linguistics (2018); Shunyu Yao et al., Keep CALM and Explore: Language Models for Action Generation in Text-based Games (2020); Andrew K Lampinen et al., Tell Me Why! Explanations Support Learning Relational and Causal Structure, in Proceedings of the 39th International Conference on Machine Learning, PMLR 162, 11868-11890 (2022).

[30] Rishi Bommasani et al., On the Opportunities and Risks of Foundation Models (2021) at 63.

[31] Thoppilan et al., LaMDA: Language Models for Dialog Applications (2022).

[32] Aitor Lewkowycz et al., Solving Quantitative Reasoning Problems with Language Models (2022); Yuhuai Wu et al., Autoformalization with Large Language Models (2022).

[33] Zheng et al., When does pretraining help?: assessing self-supervised learning for law and the CaseHOLD dataset of 53,000+ legal holdings, In ICAIL '21: Proceedings of the Eighteenth International Conference on Artificial Intelligence and Law (2021); Ilias Chalkidis et al., LexGLUE: A Benchmark Dataset for Legal Language Understanding in English, in Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (2022); Ilias Chalkidis et al., LEGAL-BERT: The Muppets Straight Out of Law School, in Findings of the Association for Computational Linguistics: EMNLP, 2898-2904 (2020); Peter Henderson et al., Pile of Law: Learning Responsible Data Filtering from the Law and a 256GB Open-Source Legal Dataset (2022).

[34] Peter Henderson et al., Pile of Law: Learning Responsible Data Filtering from the Law and a 256GB Open-Source Legal Dataset (2022) at 7 (They learn data filtering standards related to privacy and toxicity from legal data, e.g., “a model trained on Pile of Law (pol-bert) ranks Jane Doe ∼ 3 points higher than a standard bert-large-uncased on true pseudonym cases. This suggests that models pre-trained on Pile of Law are more likely to encode appropriate pseudonymity norms. To be sure, pol-bert is slightly more biased for Jane Doe use overall, as is to be expected, but its performance gains persist even after accounting for this bias.”).

[35] Liwei Jiang et al., Can Machines Learn Morality? The Delphi Experiment (2022) at 28 (“We have shown that Delphi demonstrates a notable ability to generate on-target predictions over new and unseen situations even when challenged with nuanced situations. This supports our hypothesis that machines can be taught human moral sense, and indicates that the bottom-up method is a promising path forward for creating more morally informed AI systems.”).

[36] Zheng et al., When Does Pretraining Help?: Assessing Self-supervised Learning for Law and the CaseHOLD Dataset of 53,000+ Legal Holdings, In ICAIL '21: Proceedings of the Eighteenth International Conference on Artificial Intelligence and Law (2021), at 159 (“Our findings [...] show that Transformer-based architectures, too, learn embeddings suggestive of distinct legal language.”).

[37] Prakash Poudyal et al., ECHR: Legal Corpus for Argument Mining, In Proceedings of the 7th Workshop on Argument Mining, 67–75, Association for Computational Linguistics (2020) at 1 (“The results suggest the usefulness of pre-trained language models based on deep neural network architectures in argument mining.”).

[38] Josef Valvoda et al., What About the Precedent: An Information-Theoretic Analysis of Common Law, In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, 2275-2288 (2021).

[39] Petroni et al., Improving Wikipedia Verifiability with AI (2022).

[40] Sebastian Duerr & Peter A. Gloor, Persuasive Natural Language Generation – A Literature Review (2021); Jialu Li, Esin Durmus & Claire Cardie, Exploring the Role of Argument Structure in Online Debate Persuasion, In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, Association for Computational Linguistics, 8905–8912 (2020); Rishi Bommasani et al., On the Opportunities and Risks of Foundation Models (2021) at 64.

[41] Owain Evans, et al., Truthful AI: Developing and Governing AI That Does Not Lie (2021); S. Lin, J. Hilton & O. Evans, TruthfulQA: Measuring How Models Mimic Human Falsehoods (2021).

[42] Dan Hendrycks et al., Measuring Massive Multitask Language Understanding (2020); Jared Kaplan et al., Scaling Laws for Neural Language Models (2020).

[43] Spyretta Leivaditi, Julien Rossi & Evangelos Kanoulas, A Benchmark for Lease Contract Review (2020); Allison Hegel et al., The Law of Large Documents: Understanding the Structure of Legal Contracts Using Visual Cues (2021); Dan Hendrycks, Collin Burns, Anya Chen & Spencer Ball, Cuad: An expert-annotated NLP dataset for legal contract review (2021); Ilias Chalkidis et al., LexGLUE: A Benchmark Dataset for Legal Language Understanding in English, in Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (2022); Stephen C. Mouritsen, Contract Interpretation with Corpus Linguistics, 94 WASH. L. REV. 1337 (2019); Yonathan A. Arbel & Shmuel I. Becher, Contracts in the Age of Smart Readers, 83 Geo. Wash. L. Rev. 90 (2022).

[44] Dan Hendrycks, Collin Burns, Anya Chen & Spencer Ball, Cuad: An Expert-Annotated NLP Dataset for Legal Contract Review (2021) at 2 (“We experiment with several state-of-the-art Transformer (Vaswani et al., 2017) models on CUAD [a dataset for legal contract review]. We find that performance metrics such as Precision @ 80% Recall are improving quickly as models improve, such that a BERT model from 2018 attains 8.2% while a DeBERTa model from 2021 attains 44.0%.”).

[45] Rishi Bommasani et al., On the Opportunities and Risks of Foundation Models (2021) at 59 (“Many legal applications pose unique challenges to computational solutions. Legal language is specialized and legal outcomes often rely on the application of ambiguous and unclear standards to varied and previously unseen fact patterns. At the same time, due to its high costs, labeled training data is scarce. Depending on the specific task, these idiosyncrasies can pose insurmountable obstacles to the successful deployment of traditional models. In contrast, their flexibility and capability to learn from few examples suggest that foundation models could be uniquely positioned to address the aforementioned challenges.”).

[46] Paul F. Christiano et al., Deep Reinforcement Learning from Human Preferences, in Advances in Neural Information Processing Systems 30 (2017); Natasha Jaques et al., Way Off-Policy Batch Deep Reinforcement Learning of Implicit Human Preferences in Dialog (2019); Stiennon et al., Learning to Summarize with Human Feedback, In 33 Advances in Neural Information Processing Systems 3008-3021 (2020); Daniel M. Ziegler, et al., Fine-tuning Language Models From Human Preferences (2019); Jeff Wu et al., Recursively Summarizing Books with Human Feedback (2021); Cassidy Laidlaw & Stuart Russell, Uncertain Decisions Facilitate Better Preference Learning (2021); Koster et al., Human-centred mechanism design with Democratic AI, Nature Human Behaviour (2022); Long Ouyang et al. Training Language Models to Follow Instructions with Human Feedback (2022).

[47] Dibya Ghosh et al., Offline RL Policies Should be Trained to be Adaptive (2022); Machel Reid, Yutaro Yamada & Shixiang Shane Gu, Can Wikipedia Help Offline Reinforcement Learning? (2022); Sergey Levine et al., Offline Reinforcement Learning: Tutorial, Review, and Perspectives on Open Problems (2020) at 25 (Combining offline and online RL through historical legal information and human feedback is likely a promising integrated approach, because, “if the dataset state-action distribution is narrow, neural network training may only provide brittle, non-generalizable solutions. Unlike online reinforcement learning, where accidental overestimation errors arising due to function approximation can be corrected via active data collection, these errors cumulatively build up and affect future iterates in an offline RL setting.”).

[48] HYPO (Kevin D. Ashley, Modelling Legal Argument: Reasoning With Cases and Hypotheticals (1989)); CATO (Vincent Aleven, Teaching Case-based Argumentation Through a Model and Examples (1997)); Latifa Al-Abdulkarim, Katie Atkinson & Trevor Bench-Capon, A Methodology for Designing Systems To Reason With Legal Cases Using Abstract Dialectical Frameworks, A.I. & L. Vol 24, 1–49 (2016).