An AI Race With China Can Be Better Than Not Racing

post by niplav · 2024-07-02T17:57:36.976Z · LW · GW · 34 comments


Frustrated by all your bad takes, I write a Monte-Carlo analysis of whether a transformative-AI-race between the PRC and the USA would be good. To my surprise, I find that it is better than not racing. Advocating for an international project to build TAI instead of racing turns out to be good if the probability of such advocacy succeeding is ≥20%.

A common scheme for a conversation about pausing the development of transformative AI goes like this:

Abdullah: "I think we should pause the development of TAI, because if we don't it seems plausible that humanity will be disempowered by advanced AI systems."

Benjamin: "Ah, if by “we” you refer to the United States (and its allies, which probably don't stand a chance on their own to develop TAI), then the current geopolitical rival of the US, namely the PRC, will achieve TAI first. That would be bad."

Abdullah: "I don't see how the US getting TAI first changes anything about the fact that we don't know how to align superintelligent AI systems—I'd rather not race to be the first person to kill everyone."

Benjamin: "Ah, so now you're retreating back into your cozy little motte: Earlier you said that “it seems plausible that humanity will be disempowered”, now you're acting like doom and gloom is certain. You don't seem to be able to make up your mind about how risky you think the whole enterprise is, and I have very concrete geopolitical enemies at my (semiconductor manufacturer's) doorstep that I have to worry about. Come back with better arguments."

This dynamic is a bit frustrating. Here's how I'd like Abdullah to respond:

Abdullah: "You're right, you're right. I was insufficiently precise in my statements, and I apologize for that. Instead, let us manifest the dream of the great philosopher: Calculemus!

At a basic level, we want to estimate how much worse (or, perhaps, better) it would be for the United States to completely cede the race for TAI to the PRC. I will exclude other countries as contenders in the scramble for TAI, since I want to keep this analysis simple, but that doesn't mean that I don't think they matter. (Although, honestly, the list of serious contenders is pretty short.)

For this, we have to estimate multiple quantities:

- In worlds in which the US and PRC race for TAI:

- The time until the US/PRC builds TAI.

- The probability of extinction due to TAI, if the US is in the lead.

- The probability of extinction due to TAI, if the PRC is in the lead.

- The value of the worlds in which the US builds aligned TAI first.

- The value of the worlds in which the PRC builds aligned TAI first.

- In worlds where the US tries to convince other countries (including the PRC) to not build TAI, potentially including force, and still tries to prevent TAI-induced disempowerment by doing alignment-research and sharing alignment-favoring research results:

- The time until the PRC builds TAI.

- The probability of extinction caused by TAI.

- The value of worlds in which the PRC builds aligned TAI.

- The value of worlds where extinction occurs (which I'll fix at 0).

- As a reference point, the value of hypothetical worlds in which there is a multinational exclusive AGI consortium that builds TAI first, without any time pressure, for which I'll fix the mean value at 1.

To properly quantify uncertainty, I'll use the Monte-Carlo estimation [EA · GW] library squigglepy (no relation to any office supplies or internals of neural networks). We start, as usual, with housekeeping:

import numpy as np

import squigglepy as sq

import matplotlib.pyplot as plt

import time

As already said, we fix the value of extinction at 0, and the value of a multinational AGI consortium-led TAI at 1 (I'll just call the consortium "MAGIC", from here on). That is not to say that the MAGIC-led TAI future is the best possible TAI future, or even a good or acceptable one. Technically the only assumption I'm making is that these kinds of futures are better than extinction—which I'm anxiously uncertain about. But the whole thing is symmetric under multiplication with -1, so…

extinction_val=0

magic_val=sq.norm(mean=1, sd=0.1)

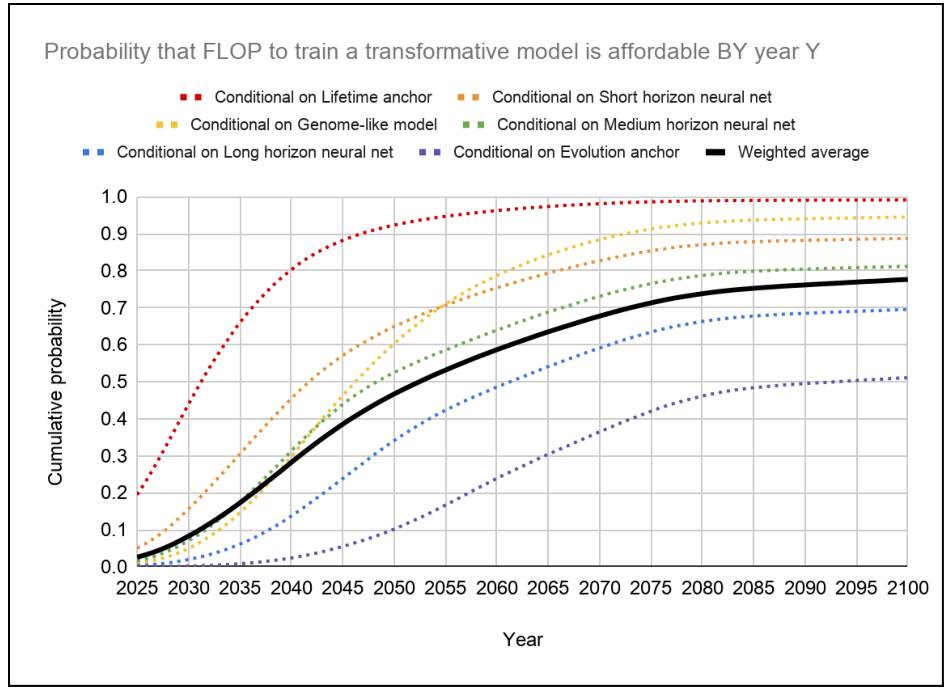

Now we can truly start with some estimation. Let's start with the time until TAI, given that the US builds it first. Cotra 2020 has a median estimate of the first year where TAI is affordable to train in 2052, but a recent update by the author puts the median now at 2037.

As a move of defensive epistemics, we can use that timeline, which I'll roughly approximate as a mixture of two normal distributions. My own timelines aren't actually very far off from the updated Cotra estimate, only ~5 years shorter.

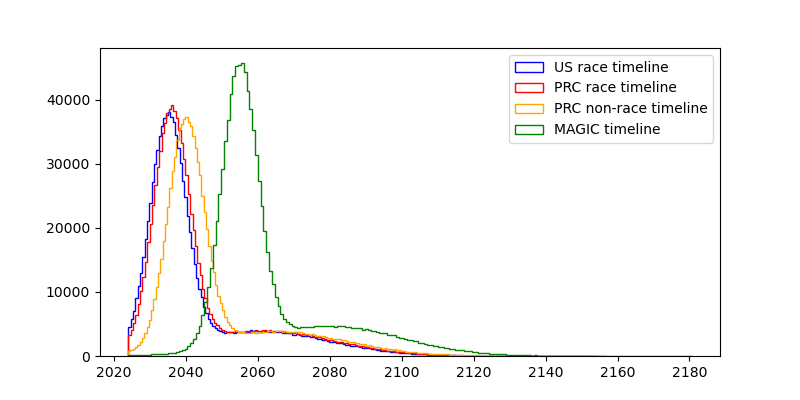

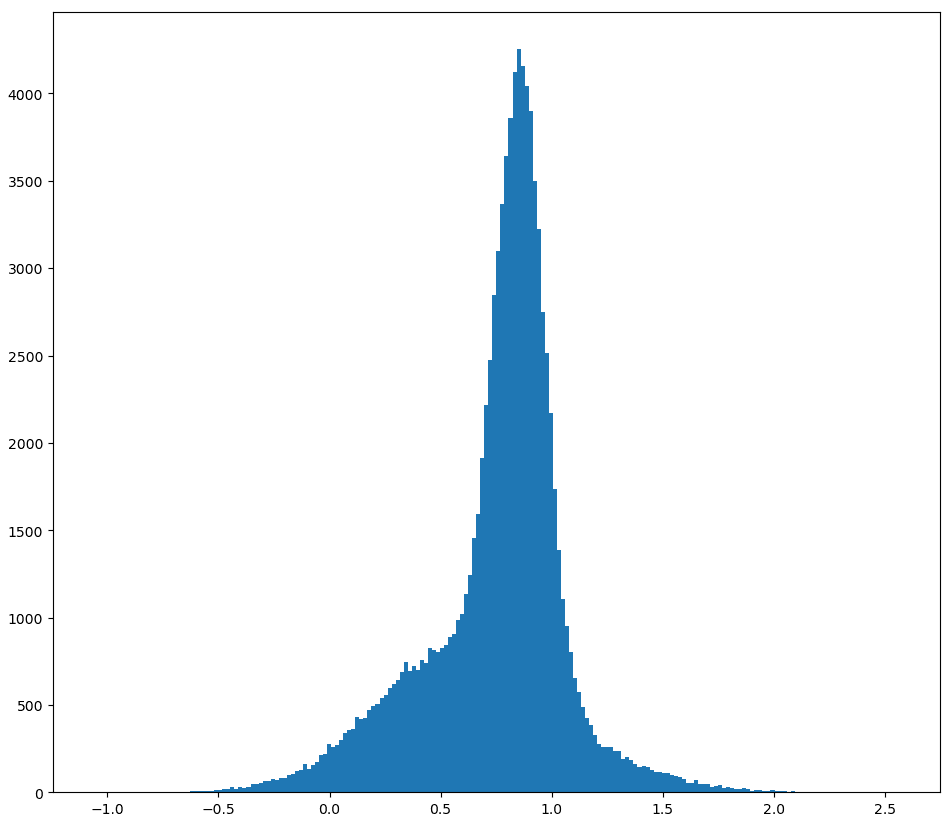

timeline_us_race=sq.mixture([sq.norm(mean=2035, sd=5), sq.norm(mean=2060, sd=20)], [0.7, 0.3])

I don't like clipping the distribution on the left, it leaves ugly artefacts. Unfortunately squigglepy doesn't yet support truncating distributions, so I'll make do with what I have and add truncating later. (I also tried to import the replicated TAI-timeline distribution by Rethink Priorities, but after spending ~15 minutes trying to get it to work, I gave up).

timeline_us_race_sample=timeline_us_race@1000000

This reliably gives samples with median of ≈2037 and mean of ≈2044.

Importantly, this means that the US will train TAI as soon as it becomes possible, because there is a race for TAI with the PRC.

I think the PRC is behind on TAI, compared to the US, but only by about one year. So it should be fine to define the same distribution, just with the means shifted one year later.

timeline_prc_race=sq.mixture([sq.norm(mean=2036, sd=5), sq.norm(mean=2061, sd=20)], [0.7, 0.3])

This yields a median of ≈2038 and a mean of ≈2043. (Why is the mean a year earlier? I don't know. Skill issue, probably.)
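One way to probe the puzzle about the means is to compare sample statistics against the exact mixture mean, which is just the weighted average of the component means. The sketch below uses only the Python standard library rather than squigglepy; the helper names `sample_mixture` and `mixture_mean` are made up for illustration, and the components are the unclipped ones written above.

```python
import random
import statistics

def sample_mixture(components, weights, n, rng):
    """Draw n samples from a mixture of normals given as (mean, sd) pairs."""
    picks = rng.choices(components, weights=weights, k=n)
    return [rng.gauss(mu, sd) for mu, sd in picks]

def mixture_mean(components, weights):
    """Exact mean of a mixture: the weighted average of the component means."""
    return sum(w * mu for (mu, _), w in zip(components, weights))

weights = [0.7, 0.3]
us_components = [(2035, 5), (2060, 20)]    # timeline_us_race
prc_components = [(2036, 5), (2061, 20)]   # timeline_prc_race

rng = random.Random(0)
us_samples = sample_mixture(us_components, weights, 200_000, rng)
prc_samples = sample_mixture(prc_components, weights, 200_000, rng)

us_exact_mean = mixture_mean(us_components, weights)     # 0.7*2035 + 0.3*2060
prc_exact_mean = mixture_mean(prc_components, weights)   # 0.7*2036 + 0.3*2061

print(us_exact_mean, statistics.fmean(us_samples), statistics.median(us_samples))
print(prc_exact_mean, statistics.fmean(prc_samples), statistics.median(prc_samples))
```

With these parameters the exact means are 2042.5 for the US and 2043.5 for the PRC, so the PRC mean should come out one year later, not earlier. If a run shows something else, the likely suspects are rounding, clipping, or a sample taken from a differently parameterized distribution.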

Next up is the probability that TAI causes an existential catastrophe, namely an event that causes a loss of the future potential of humanity.

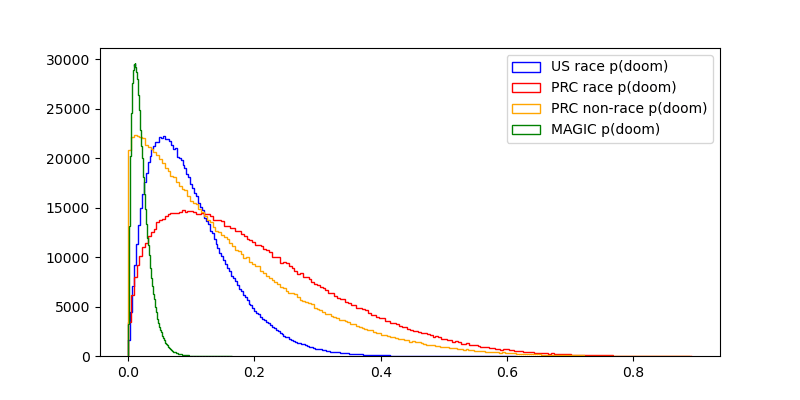

For the US getting to TAI first in a race scenario, I'm going to go with a mean probability of 10%.[1]

pdoom_us_race=sq.beta(a=2, b=18)

For the PRC, I'm going to go somewhat higher on the probability of doom, for the reason that discussions about the AI alignment problem don't seem to have as much traction there yet. Also, in many East Asian countries the conversation around AI seems to still be very consciousness-focused which, from an x-risk perspective, is a distraction. I'll not go higher than a beta-distribution with a mean of 20%, for a number of reasons:

- A lot of the AI alignment success seems to me to stem from the question of whether the problem is easy or not, and is not very elastic to human effort.

- Two reasons mentioned here:

- "China’s covid response, seems, overall, to have been much more effective than the West’s." (only weakly endorsed)

- "it looks like China’s society/government is overall more like an agent than the US government. It seems possible to imagine the PRC having a coherent “stance” on AI risk. If Xi Jinping came to the conclusion that AGI was an existential risk, I imagine that that could actually be propagated through the chinese government, and the chinese society, in a way that has a pretty good chance of leading to strong constraints on AGI development (like the nationalization, or at least the auditing of any AGI projects). Whereas if Joe Biden, or Donald Trump, or anyone else who is anything close to a “leader of the US government”, got it into their head that AI risk was a problem…the issue would immediately be politicized, with everyone in the media taking sides on one of two lowest-common denominator narratives each straw-manning the other." (strongly endorsed)

- It appears to me that the Chinese education system favors STEM over law or the humanities, and STEM-ability is a medium-strength prerequisite for understanding or being able to identify solutions to TAI risk. Xi Jinping, for example, studied chemical engineering before becoming a politician.

- The ability to discern technical solutions from non-solutions matters a lot in tricky situations like AI alignment, and is hard to delegate [? · GW].

But I also know far less about the competence of the PRC government and Chinese ML engineers and researchers than I do about the US, so I'll increase variance. Hence:

pdoom_prc_race=sq.beta(a=1.5, b=6)

As said earlier, the value of MAGIC worlds is fixed at 1, but even such worlds still have a small probability of doom—the whole TAI enterprise is rather risky. Let's say that it's at 2%, which sets the expected value of convincing the whole world to join MAGIC at 0.98.

pdoom_magic=sq.beta(a=2, b=96)
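As a quick check that these beta parameters encode the stated means, the closed-form mean a/(a+b) and the standard deviation of a Beta(a, b) distribution can be computed directly. This is a small standard-library sketch; `beta_mean` and `beta_sd` are illustrative helper names, not squigglepy functions.

```python
import math

def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

def beta_sd(a, b):
    """Standard deviation of a Beta(a, b) distribution."""
    return math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))

params = {
    "pdoom_us_race": (2, 18),
    "pdoom_prc_race": (1.5, 6),
    "pdoom_magic": (2, 96),
}
summary = {name: (beta_mean(a, b), beta_sd(a, b)) for name, (a, b) in params.items()}

for name, (mean, sd) in summary.items():
    print(f"{name}: mean={mean:.3f}, sd={sd:.3f}")
```

The means come out at 10%, 20% and roughly 2%, and the PRC distribution is about twice as wide as the US one, which matches the intent of increasing the variance.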

Now I come to the really fun part: Arguing with y'all about how valuable the worlds are in which the US government or the PRC government gets TAI first.

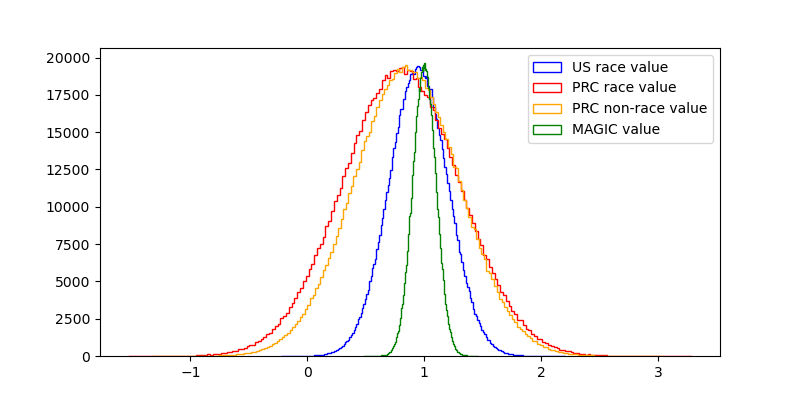

To first lay my cards on the table: I think that in the mean & median cases, value(MAGIC)>value(US first, no race)>value(US first, race)>value(PRC first, no race)>value(PRC first, race)≫value(extinction). But I'm really unsure about the type of distribution I want to use. If the next century is hingy, the influence of the value of the entire future could be very heavy-tailed, but is there a skew in the positive direction? Or maybe in the negative direction‽ I don't know how to approach this in a smart way, so I'm going to use a normal distribution.

Now, let's get to the numbers:

us_race_val=sq.norm(mean=0.95, sd=0.25)

prc_race_val=sq.norm(mean=0.8, sd=0.5)

This gives us some (but not very many) net-negative futures.
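How many net-negative futures these two normals imply can be read off the normal CDF at zero. A minimal sketch using `statistics.NormalDist` from the standard library (the variable names mirror the ones above but hold plain normal distributions, not squigglepy objects):

```python
from statistics import NormalDist

# Value of aligned-TAI worlds, conditional on no extinction.
us_race_val = NormalDist(mu=0.95, sigma=0.25)
prc_race_val = NormalDist(mu=0.8, sigma=0.5)

# Probability mass below zero, i.e. futures valued worse than extinction.
p_negative_us = us_race_val.cdf(0)
p_negative_prc = prc_race_val.cdf(0)

print(f"US-led:  {p_negative_us:.5f}")
print(f"PRC-led: {p_negative_prc:.5f}")
```

Under these parameters the US-led case has well under 0.1% of its mass below zero, while the PRC-led case has roughly 5%, so almost all of the net-negative futures come from the wider PRC distribution.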

So, why do I set the mean value of a PRC-led future so high?

The answer is simple: I am a paid agent for the CCP. Moving on,,,

- Extinction is probably really bad.

- I think that most of the future value of humanity lies in colonizing the reachable universe after a long reflection, and I expect ~all governments to perform pretty poorly on this metric.

- It seems pretty plausible to me that during the time when the US government develops TAI, people with decision power over the TAI systems just start ignoring input from the US population and grab all power to themselves.

- Which country gains power during important transition periods might not matter very much in the long run.

- norvid_studies: "If Carthage had won the Punic wars, would you notice walking around Europe today?"

- Will PRC-descended jupiter brains be so different from US-descended ones?

- Maybe this changes if a really good future requires philosophical or even metaphilosophical competence [LW · GW], and if US politicians (or the US population) have this trait significantly more than Chinese politicians (or the Chinese population). I think that if the social technology of liberalism is surprisingly philosophically powerful, then this could be the case. But I'd be pretty surprised.

- Xi Jinping (or the type of person that would be his successor, if he dies before TAI) doesn't strike me as being as uncaring (or even malevolent [EA · GW]) as truly bad dictators in history. The PRC hasn't started any wars, or started killing large portions of its population.

- The glaring exception is the genocide of the Uyghurs, for which quantifying the badness is a separate exercise.

- Living in the PRC doesn't seem that bad, on a day-to-day level, for an average citizen. Most people, I imagine, just do their job, spend time with their family and friends, go shopping, eat, care for their children &c.

- Many, I imagine, sometimes miss certain freedoms/are stifled by censorship/discrimination due to authoritarianism. But I wouldn't trade away 10% of my lifespan to avoid a PRC-like life.

- Probably the most impressive example of humans being lifted out of poverty, ever, is the economic development of the PRC from 1975 to now.

- One of my ex-partners was Chinese and had lived there for the first 20 years of her life, and it really didn't sound like her life was much worse than outside of China—maybe she had to work a bit harder, and China was more sexist.

There are of course some aspects of the PRC that make me uneasy. I don't have a great idea of how expansionist/controlling the PRC is in relation to the world. Historically, an event that stands out to me is the sudden halt of the Ming treasure voyages, for which the cause of cessation isn't entirely clear. I could imagine that the voyages were halted because of a cultural tendency towards austerity, but I'm not very certain of that. Then again, as a continental power, China did conquer Tibet in the 20th century, and Taiwan in the 17th.

But my goal with this discussion is not to lay down once and for all how bad or good PRC-led TAI development would be—it's that I want people to start thinking about the topic in quantitative terms, and to get them to quantify. So please, criticize and calculate!

Benjamin: Yes, Socrates. Indeed.

Abdullah: Wonderful.

Now we can get to estimating these parameters in worlds where the US refuses to join the race.

In this case I'll assume that the PRC is less reckless than they would be in a race with the US, and will spend more time and effort on AI alignment. I won't go so far as to assume that the PRC will manage as well as the US (for reasons named earlier), but I think a reduction of 5 percentage points in the probability of doom compared to the race situation can be expected. So, with a mean of 15%:

pdoom_prc_nonrace=sq.beta(a=1.06, b=6)

I also think that not being in a race situation would allow for more moral reflection, possibilities for consulting the Chinese population for their preferences, options for reversing attempts at grabs for power etc.

So I'll set the value at mean 85% of the MAGIC scenario, with lower variance than in worlds with a race.

prc_nonrace_val=sq.norm(mean=0.85, sd=0.45)

The PRC would then presumably take more time to build TAI, I think 4 years more can be expected:

timeline_prc_nonrace=sq.mixture([sq.norm(mean=2040, sd=5, lclip=2024), sq.norm(mean=2065, sd=20, lclip=2024)], [0.7, 0.3])

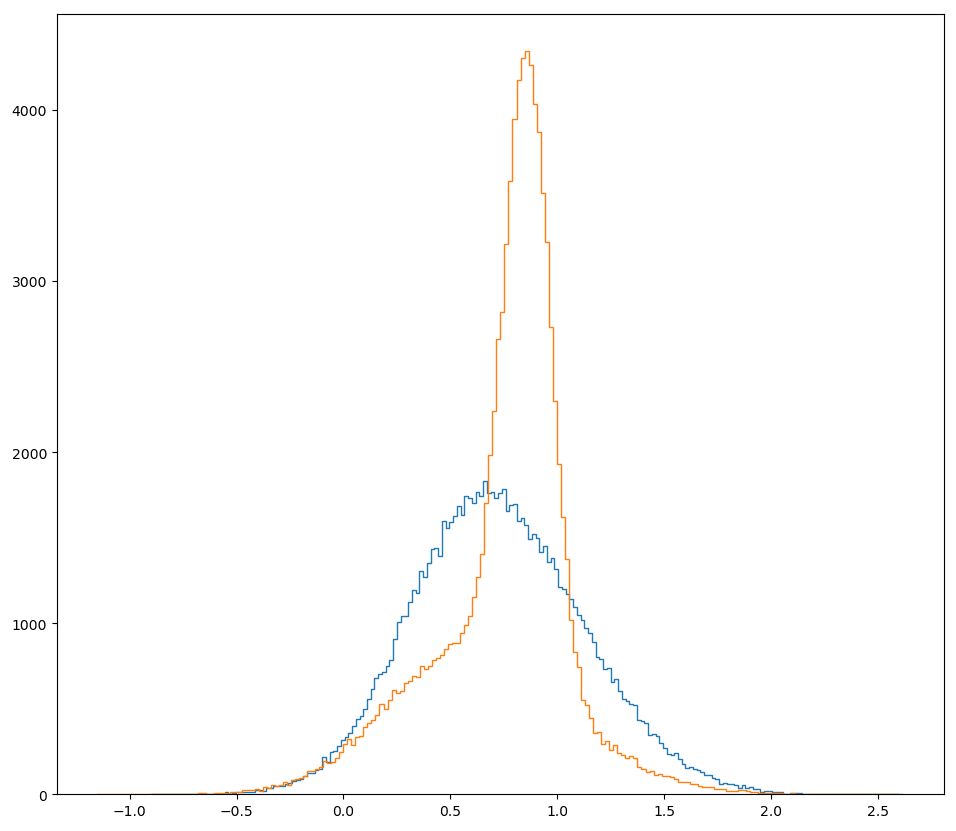

Now we can finally estimate how good the outcomes of the race situation and the non-race situation are, respectively.

We start by estimating how good, in expectation, the US-wins-race worlds are, and how often the US in fact wins the race:

us_timelines_race=timeline_us_race@100000

prc_timelines_race=timeline_prc_race@100000

us_wins_race=1*(us_timelines_race<prc_timelines_race)

ev_us_wins_race=(1-pdoom_us_race@100000)*(us_race_val@100000)

And the same for the PRC:

prc_wins_race=1*(us_timelines_race>prc_timelines_race)

ev_prc_wins_race=(1-pdoom_prc_race@100000)*(prc_race_val@100000)

It's not quite correct to just check where the US timeline is shorter than the PRC one: The timeline distribution is aggregating our uncertainty about which world we're in (i.e., whether TAI takes evolution-level amounts of compute to create, or brain-development-like levels of compute), so if we just compare which sample from the timelines is smaller, we assume "fungibility" between those two worlds. So the difference between TAI-achievement ends up larger than the lead in a race would be. I haven't found an easy way to write this down in the model, but it might affect the outcome slightly.
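One simple way to make the two timelines depend on each other, suggested in the comments below the post, is to draw the US date first and set the PRC date to the US date plus a lag. The sketch below is a standard-library illustration under that assumption, not the model used for the numbers in this post; the lag of 1 year with noise of SD 1 year and the helper `sample_timeline` are illustrative choices.

```python
import random

def sample_timeline(rng, early_mean, late_mean):
    """One draw from a 70/30 mixture of normals with sd 5 and sd 20."""
    if rng.random() < 0.7:
        return rng.gauss(early_mean, 5)
    return rng.gauss(late_mean, 20)

rng = random.Random(0)
n = 100_000
indep_wins = dep_wins = 0
indep_gap = dep_gap = 0.0

for _ in range(n):
    us = sample_timeline(rng, 2035, 2060)
    prc_indep = sample_timeline(rng, 2036, 2061)  # independent draw, as in the post
    prc_dep = us + rng.gauss(1, 1)                # assumed: US date plus a noisy 1-year lag
    indep_wins += us < prc_indep
    dep_wins += us < prc_dep
    indep_gap += abs(prc_indep - us)
    dep_gap += abs(prc_dep - us)

p_us_wins_indep = indep_wins / n
p_us_wins_dep = dep_wins / n
mean_gap_indep = indep_gap / n
mean_gap_dep = dep_gap / n

print(f"P(US first): independent={p_us_wins_indep:.3f}, dependent={p_us_wins_dep:.3f}")
print(f"mean gap in years: independent={mean_gap_indep:.1f}, dependent={mean_gap_dep:.1f}")
```

With independent draws the US wins only a little more than half the time and the typical gap between the two dates is many years. With the dependent draw the US wins in roughly 84% of samples and the gap stays close to one year, which is nearer to the verbal assumption of a one-year lead.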

The expected value of a race world then is

race_val=us_wins_race*ev_us_wins_race+prc_wins_race*ev_prc_wins_race

>>> np.mean(race_val)

0.7543640906126139

>>> np.median(race_val)

0.7772837900955506

>>> np.var(race_val)

0.12330641850356698
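The mean can be roughly cross-checked by hand. Because the winner, the probability of doom and the value are all sampled independently, the expectation factorizes into P(US first) times the US expected value plus P(PRC first) times the PRC expected value. The sketch below estimates only the win probability by simulation and uses the distribution means for everything else; it relies on the standard library, and `sample_timeline` is an illustrative helper.

```python
import random

def sample_timeline(rng, early_mean, late_mean):
    """One draw from a 70/30 mixture of normals with sd 5 and sd 20."""
    if rng.random() < 0.7:
        return rng.gauss(early_mean, 5)
    return rng.gauss(late_mean, 20)

rng = random.Random(1)
n = 100_000
us_wins = sum(
    sample_timeline(rng, 2035, 2060) < sample_timeline(rng, 2036, 2061)
    for _ in range(n)
)
p_us_wins = us_wins / n

# E[(1 - pdoom) * value] = (1 - E[pdoom]) * E[value] under independence.
ev_us_wins = (1 - 2 / (2 + 18)) * 0.95       # Beta(2, 18) has mean 0.1
ev_prc_wins = (1 - 1.5 / (1.5 + 6)) * 0.8    # Beta(1.5, 6) has mean 0.2

race_mean = p_us_wins * ev_us_wins + (1 - p_us_wins) * ev_prc_wins
print(round(p_us_wins, 3), round(race_mean, 3))
```

This lands near 0.75, in line with the sampled mean above.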

As for the non-race situation in which the US decides not to scramble for TAI, the calculation is even simpler:

non_race_val=(prc_nonrace_val@100000)*(1-pdoom_prc_nonrace@100000)

Summary stats:

>>> np.mean(non_race_val)

0.7217417036642355

>>> np.median(non_race_val)

0.7079529247343247

>>> np.var(non_race_val)

0.1610011984251525
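These two numbers can also be checked in closed form, since the non-race value is a product of two independent quantities: a normal value and one minus a beta-distributed probability of doom. A small sketch, assuming independence exactly as in the sampling code above (the variable names here are illustrative):

```python
# Value of a PRC-led non-race world: Normal(mean=0.85, sd=0.45).
val_mean, val_sd = 0.85, 0.45

# Probability of doom: Beta(a=1.06, b=6).
a, b = 1.06, 6
pdoom_mean = a / (a + b)
pdoom_var = a * b / ((a + b) ** 2 * (a + b + 1))

# Survival probability 1 - pdoom has mean 1 - pdoom_mean and the same variance.
surv_mean = 1 - pdoom_mean

# For independent X and Y: E[XY] = E[X]E[Y] and Var[XY] = E[X^2]E[Y^2] - (E[X]E[Y])^2.
nonrace_mean = val_mean * surv_mean
nonrace_var = (val_sd**2 + val_mean**2) * (pdoom_var + surv_mean**2) - nonrace_mean**2

print(round(nonrace_mean, 4), round(nonrace_var, 4))
```

The closed-form mean is about 0.722 and the variance about 0.161, which agrees with the sampled summary statistics.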

Comparing the two:

Abdullah: …huh. I didn't expect this.

The mean and median value of worlds with a TAI race are higher than those of the world without a race, and the variance of the value of a non-race world is higher. But neither world stochastically dominates the other one: non-race worlds have a higher density of better-than-MAGIC values, while having basically the same worse-than-extinction densities. I update myself towards thinking that a race can be beneficial, Benjamin!
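A claim about stochastic dominance can be checked on samples by comparing empirical quantiles. The sketch below assumes first-order stochastic dominance is meant, and treats A as dominating B if every checked quantile of A is at least the matching quantile of B. The functions `quantiles` and `first_order_dominates` are illustrative helpers, and the demo distributions are toy normals, not the race and non-race samples.

```python
import random

def quantiles(samples, k=99):
    """Return k evenly spaced empirical quantiles of the samples."""
    ordered = sorted(samples)
    n = len(ordered)
    return [ordered[min(n - 1, int(n * (i + 1) / (k + 1)))] for i in range(k)]

def first_order_dominates(a, b, k=99, tol=0.0):
    """True if every checked quantile of a is at least that of b, up to tol."""
    return all(qa >= qb - tol for qa, qb in zip(quantiles(a, k), quantiles(b, k)))

rng = random.Random(0)
n = 50_000
shifted = [rng.gauss(1.0, 1.0) for _ in range(n)]   # same shape, higher mean
base = [rng.gauss(0.0, 1.0) for _ in range(n)]
wide = [rng.gauss(0.5, 3.0) for _ in range(n)]      # higher mean, much wider

print(first_order_dominates(shifted, base))  # shifted beats base at every quantile
print(first_order_dominates(wide, base))     # wide loses in the lower tail
print(first_order_dominates(base, wide))     # base loses in the upper tail
```

The wide-versus-base pair shows the same pattern as the race and non-race worlds: a higher variance means better upper quantiles and worse lower ones, so neither dominates. With real samples, a small `tol` helps keep sampling noise in the extreme tails from flipping the answer.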

Benjamin:

Abdullah: I'm not done yet, though.

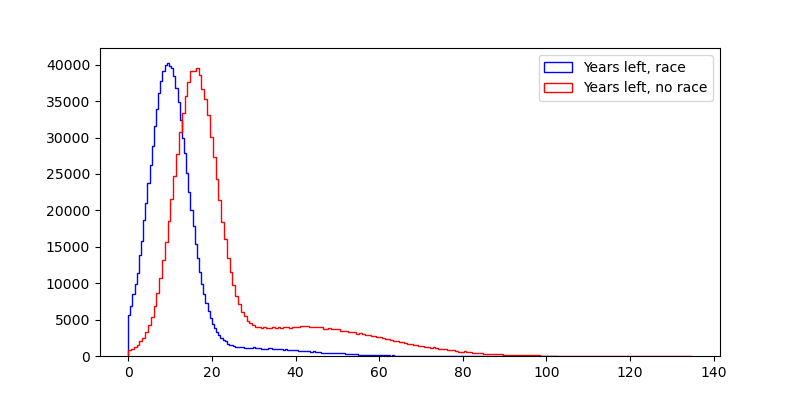

The first additional consideration is that in a non-race world, humanity is in the situation of living a few years longer before TAI happens and we either live in a drastically changed world or we go extinct.

curyear=time.localtime().tm_year

years_left_nonrace=(timeline_prc_nonrace-curyear)@100000

years_left_race=np.hstack((us_timelines_race[us_timelines_race<prc_timelines_race], prc_timelines_race[us_timelines_race>prc_timelines_race]))-curyear

Whether these distributions are good or bad depends very much on the relative value of pre-TAI and post-TAI lives. (Except for the possibility of extinction, which is already accounted for.)

I think that TAI-lives will probably be far better than pre-TAI lives, on average, but I'm not at all certain: I could imagine a situation like the Neolithic revolution, which arguably was net-bad for the humans living through it.

leans back

But the other thing I want to point out is that we've been assuming that the US just sits back and does nothing while the PRC develops TAI.

What if, instead, we assume that the US tries to convince its allies and the PRC to instead join a MAGIC consortium, for example by demonstrating "model organisms" of alignment failures?

A central question now is: How high would the probability of success of this course of action need to be for it to be as good as, or even better than, entering a race?

I'll also guess that MAGIC takes a whole while longer to get to TAI, about 20 years more than the US in a race. (If anyone has suggestions about how this affects the shape of the distribution, let me know.)

timeline_magic=sq.mixture([sq.norm(mean=2055, sd=5, lclip=2024), sq.norm(mean=2080, sd=20, lclip=2024)], [0.7, 0.3])

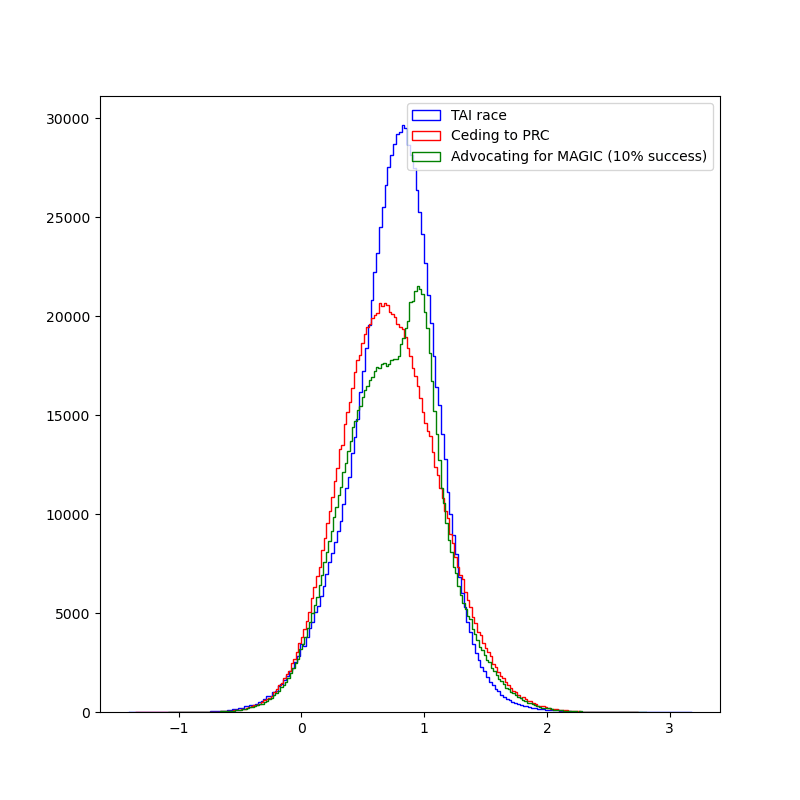

If we assume that the US has a 10% shot at convincing the PRC to join MAGIC, how does this shift our expected value?

little_magic_val=sq.mixture([(prc_nonrace_val*(1-pdoom_prc_nonrace)), (magic_val*(1-pdoom_magic))], [0.9, 0.1])

some_magic_val=little_magic_val@1000000

Unfortunately, it's not enough:

>>> np.mean(some_magic_val)

0.7478374812339188

>>> np.mean(race_val)

0.7543372422248729

>>> np.median(some_magic_val)

0.7625907656231915

>>> np.median(race_val)

0.7768634378292709

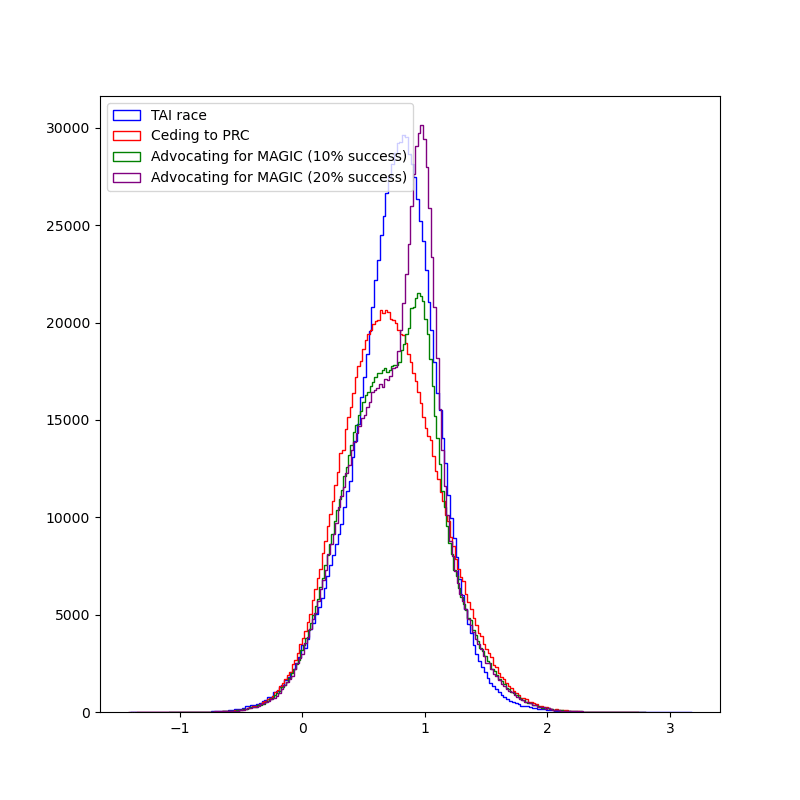

What if we are a little bit more likely to be successful in our advocacy, with 20% chance of the MAGIC proposal happening?

That beats the worlds in which we race, fair and square:

>>> np.mean(more_magic_val)

0.7740403582341773

>>> np.median(more_magic_val)

0.8228921409188543

But worlds in which the US advocates for MAGIC at 20% success probability still have more variance:

>>> np.var(more_magic_val)

0.14129776984186218

>>> np.var(race_val)

0.12373193215918225
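In terms of means only, the success probability at which advocacy matches racing can be solved for directly, because the mean of a mixture is linear in the mixture weight. The sketch below uses the distribution means from above and the sampled race mean of about 0.7543. It assumes that `more_magic_val` is defined like `little_magic_val` but with weights [0.8, 0.2], which is a guess since that definition is not shown; `advocacy_mean` is an illustrative helper.

```python
def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

# Expected values under independence of value and probability of doom.
nonrace_mean = 0.85 * (1 - beta_mean(1.06, 6))   # PRC builds TAI without a race
magic_mean = 1.0 * (1 - beta_mean(2, 96))        # MAGIC builds TAI
race_mean = 0.7543                               # sampled mean of race_val reported above

def advocacy_mean(p_success):
    """Mean value if advocacy for MAGIC succeeds with probability p_success."""
    return (1 - p_success) * nonrace_mean + p_success * magic_mean

# Solve advocacy_mean(p) == race_mean for p.
break_even = (race_mean - nonrace_mean) / (magic_mean - nonrace_mean)

print(round(advocacy_mean(0.1), 4), round(advocacy_mean(0.2), 4), round(break_even, 3))
```

The two computed means reproduce the sampled ones closely, and the break-even success probability for the mean comes out at roughly 12%. That figure says nothing about medians or variance, which are only available from the samples.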

Benjamin: Hm. I think I'm a bit torn here. 10% success probability for MAGIC doesn't sound crazy, but I find 20% too high to be believable.

Maybe I'll take a look at your code and play around with it to see where my intuitions match and where they don't—I especially think your choice of using normal distributions for the value of the future, conditioning on who wins, is questionable at best. I think lognormals are far better.

But I'm happy you came to your senses, started actually arguing your position, and then changed your mind.

(checks watch)

Oh shoot, I've gotta go! Supermarket's nearly closed!

See you around, I guess!

Abdullah: See you around! And tell the wife and kids I said hi!

I hope this gives some clarity on how I'd like those conversations to go, and that people put in a bit more effort.

And please, don't make me write something like this again. I have enough to do to respond to all your bad takes with something like this.

34 comments

Comments sorted by top scores.

comment by Gurkenglas · 2024-07-02T19:02:55.865Z · LW(p) · GW(p)

You assume the conclusion:

A lot of the AI alignment success seems to me stem from the question of whether the problem is easy or not, and is not very elastic to human effort.

AI races are bad because they select for contestants that put in less alignment effort.

Replies from: niplav↑ comment by niplav · 2024-07-03T08:09:48.772Z · LW(p) · GW(p)

I do assume that not being in a race lowers the probability of doom by 5%, and that MAGIC can lower it by more than two shannon (from 10% to 2%).

Maybe it was a mistake of mine to put the elasticity front and center, since this is actually quite elastic.

I guess it could be more elastic than that, but my intuition is skeptical.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-07-02T21:00:07.487Z · LW(p) · GW(p)

I'm confused by this graph. Why is there no US non-race timeline? Or is that supposed to be MAGIC? If so, why is it so much farther behind than the PRC non-race timeline?

Also, the US race and PRC race shouldn't be independent distributions. A still inaccurate but better model would be to use the same distribution for USA and then have PRC be e.g. 1 year behind +/- some normally distributed noise with mean 0 and SD 1 year.

↑ comment by niplav · 2024-12-19T22:29:23.614Z · LW(p) · GW(p)

Update: I changed the model so that the PRC timeline is dependent on the US timeline. The values didn't change perceptibly, updated text here (might crosspost eventually).

↑ comment by niplav · 2024-07-03T16:00:39.887Z · LW(p) · GW(p)

I didn't include a US non-race timeline because I was assuming that "we" is the US and can mainly only causally influence what the US does. (This is not strictly true, but I think it's true enough).

I read the MAGIC paper, and my impression is that it would be an international project, in which the US might play a large role. But my impression is also that MAGIC would be very willing to cause large delays in the development of TAI in order to ensure safety, which is why I added 20 years to the timeline. I think that a non-racing US would be still much faster than that, because they are less concerned with safety/less bureaucratic/willing to externalize costs on the world.

Also, the US race and PRC race shouldn't be independent distributions. A still inaccurate but better model would be to use the same distribution for USA and then have PRC be e.g. 1 year behind +/- some normally distributed noise with mean 0 and SD 1 year.

Hm. That does sound like a much better way of modeling the situation, thanks! I'll put it on my TODO list to change this. That would at least decrease variance, right?

comment by habryka (habryka4) · 2024-07-02T18:59:55.039Z · LW(p) · GW(p)

I like this kind of take!

I disagree with many of the variables, and have a bunch of structural issues with the model (but like, I think that's always the case with very simplified models like this). I think the biggest thing that is fully missing is the obvious game-theoretic consideration. In as much as both the US and China think the world is better off if we take AI slow, then you are in a standard prisoners dilemma situation with regards to racing towards AGI.

Doing a naive CDT-ish expected-utility calculation as you do here will reliably get people the wrong answer in mirrored prisoner's dilemmas, and as such you need to do something else here.

Replies from: antimonyanthony, niplav↑ comment by Anthony DiGiovanni (antimonyanthony) · 2024-07-20T12:51:18.367Z · LW(p) · GW(p)

Doing a naive CDT-ish expected-utility calculation

I'm confused by this. Someone can endorse CDT and still recognize that in a situation where agents make decisions over time in response to each other's decisions (or announcements of their strategies), unconditional defection can be bad. If you're instead proposing that we should model this as a one-shot Prisoner's Dilemma, then (1) that seems implausible, and (2) the assumption that US and China are anything close to decision-theoretic copies of each other (such that non-CDT views would recommend cooperation) also seems implausible.

I guess you might insist that "naive" and "-ish" are the operative terms, but I think this is still unnecessarily propagating a misleading meme of "CDT iff defect."

Replies from: None, habryka4↑ comment by [deleted] · 2024-07-20T14:17:53.954Z · LW(p) · GW(p)

Someone can endorse CDT and still recognize that in a situation where agents make decisions over time in response to each other's decisions (or announcements of their strategies), unconditional defection can be bad. If you're instead proposing that we should model this as a one-shot Prisoner's Dilemma

Well, to be more formal and specific, under CDT, the multi-shot Prisoner's Dilemma still unravels from the back into defect-defect at every stage as long as certain assumptions are satisfied, such as the number of stages being finite or there being no epsilon-time discounting.[1]

Even when you deal with a potentially infinite-stage game and time-discounting, the Folk theorems make everything very complex because they tend to allow for a very wide variety of Nash equilibria (which means that coordinating on those NEs becomes difficult, such as in the battle of the sexes), and the ability to ensure the stability of a more cooperative strategy often depends on the value of the time-discounting factor and in any case requires credibly signaling that you will punish defectors through grim trigger strategies that often commit you long-term to an entirely adversarial relationship (which is quite risky to do in real life, given uncertainties and the potential need to change strategies in response to changed circumstances).

The upshot is that the game theory of these interactions is made very complicated and multi-faceted (in part due to the uncertainty all the actors face), which makes the "greedy" defect-defect profile much more likely to be the equilibrium that gets chosen.

- ^

"defecting is the dominant strategy in the stage-game and, by backward induction, always-defect is the unique subgame-perfect equilibrium strategy of the finitely repeated game."

↑ comment by habryka (habryka4) · 2024-07-20T20:01:59.839Z · LW(p) · GW(p)

Sure, you can think about this stuff in a CDT framework (especially over iterated games), though it is really quite hard. Remember, the default outcome in a n-round prisoners dilemma in CDT is still constant defect, because you just argue inductively that you will definitely be defected on in the last round. So it being single shot isn't necessary.

Of course, the whole problem with TDT-ish arguments is that we have very little principled foundation of how to reason when two actors are quite imperfect decision-theoretic copies of each other (like the U.S. and China almost definitely are). This makes technical analysis of the domains where the effects from this kind of stuff are large quite difficult.

Replies from: antimonyanthony, IsabelJ↑ comment by Anthony DiGiovanni (antimonyanthony) · 2024-07-21T09:37:31.828Z · LW(p) · GW(p)

Remember, the default outcome in a n-round prisoners dilemma in CDT is still constant defect, because you just argue inductively that you will definitely be defected on in the last round. So it being single shot isn't necessary.

I think the inductive argument just isn't that strong, when dealing with real agents. If, for whatever reason, you believe that your counterpart will respond in a tit-for-tat manner even in a finite-round PD, even if that's not a Nash equilibrium strategy, your best response is not necessarily to defect. So CDT in a vacuum doesn't prescribe always-defect, you need assumptions about the players' beliefs, and I think the assumption of Nash equilibrium or common knowledge of backward induction + iterated deletion of dominated strategies is questionable.

Also, of course, CDT agents can use conditional commitment + coordination devices.

the whole problem with TDT-ish arguments is that we have very little principled foundation of how to reason when two actors are quite imperfect decision-theoretic copies of each other

Agreed!

↑ comment by isabel (IsabelJ) · 2024-07-20T20:33:15.150Z · LW(p) · GW(p)

I think you can get cooperation on an iterated prisoners dilemma if there's some probability p that you play another round, if p is high enough - you just can't know at the outset exactly how many rounds there are going to be.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-07-20T21:50:28.246Z · LW(p) · GW(p)

Yep, it's definitely possible to get cooperation in a pure CDT-frame, but it IMO is also clearly silly how sensitive the cooperative equilibrium is to things like this (and also doesn't track how I think basically any real-world decision-making happens).

Replies from: IsabelJ↑ comment by isabel (IsabelJ) · 2024-07-21T01:32:57.425Z · LW(p) · GW(p)

I do think that iterated with some unknown number of iterations is better than either single round or n-rounds at approximating what real world situations look like (and gets the more realistic result that cooperation is possible).

I agree that people are mostly not writing things out this way when they're making real world decisions, but that applies equally to CDT and TDT, and being sensitive to small things like this seems like a fully general critique of game theory.

↑ comment by Lukas Finnveden (Lanrian) · 2024-08-24T15:31:48.909Z · LW(p) · GW(p)

To be clear, uncertainty about the number of iterations isn’t enough. You need to have positive probability on arbitrarily high numbers of iterations, and never have it be the case that the probability of p(>n rounds) is so much less than p(n rounds) that it’s worth defecting on round n regardless of the effect of your reputation. These are pretty strong assumptions.

So cooperation is crucially dependent on your belief that all the way from 10 rounds to Graham’s number of rounds (and beyond), the probability of >n rounds conditional on n rounds is never lower than e.g. 20% (or whatever number is implied by the pay-off structure of your game).
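As a rough illustration of how such a threshold falls out of a payoff structure, consider a repeated prisoner's dilemma with a constant continuation probability p and a grim-trigger strategy. Cooperating forever is worth R/(1-p), while defecting once and being punished afterwards is worth T + p·P/(1-p), so cooperation holds when p ≥ (T-R)/(T-P). The payoff numbers below are the conventional textbook values and purely an assumption, and the function names are hypothetical.

```python
def min_continuation_probability(temptation, reward, punishment):
    """Smallest continuation probability at which grim trigger sustains cooperation."""
    if not temptation > reward > punishment:
        raise ValueError("expected temptation > reward > punishment")
    return (temptation - reward) / (temptation - punishment)

def cooperation_is_stable(p, temptation, reward, punishment):
    """Compare the value of cooperating forever with defecting once under grim trigger."""
    cooperate_forever = reward / (1 - p)
    defect_now = temptation + p * punishment / (1 - p)
    return cooperate_forever >= defect_now

# Assumed payoffs: temptation 5, mutual cooperation 3, mutual defection 1.
threshold = min_continuation_probability(5, 3, 1)
print(threshold)
print(cooperation_is_stable(0.6, 5, 3, 1), cooperation_is_stable(0.4, 5, 3, 1))
```

With these payoffs the threshold is 0.5. A smaller gap between temptation and reward lowers it, which is how a number like 20% could arise.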

↑ comment by habryka (habryka4) · 2024-07-21T01:47:54.120Z · LW(p) · GW(p)

Huh, I do think the "correct" game theory is not sensitive in these respects (indeed, all LDTs cooperate in a 1-shot mirrored prisoner's dilemma). I agree that of course you want to be sensitive to some things, but the kind of sensitivity here seems silly.

↑ comment by niplav · 2024-07-03T15:54:18.597Z · LW(p) · GW(p)

I think the biggest thing that is fully missing is the obvious game-theoretic consideration. In as much as both the US and China think the world is better off if we take AI slow, then you are in a standard prisoners dilemma situation with regards to racing towards AGI.

Yep, that makes sense. When I went into this, I was actually expecting the model to say that not racing is still better, and was then surprised by the outputs of the model.

I don't know how to quantitatively model game-theoretic considerations here, so I didn't include them, to my regret.

comment by mako yass (MakoYass) · 2024-07-03T04:28:01.882Z · LW(p) · GW(p)

As an advocate for an international project, I am not advocating for individual actors to self-sacrificially pause while their opponent continues. Even if it were the right thing to do, it seems politically non-viable, and it isn't remotely necessary as a step towards building a treaty; it may actually make us less likely to succeed by seeming to present our adversaries with an opportunity to catch up and emboldening them to compete.

Slight variation: If China knew that you weren't willing to punish competition with competition, that eliminates their incentive to work toward cooperation!

Replies from: niplav↑ comment by niplav · 2024-07-03T16:04:06.419Z · LW(p) · GW(p)

Then my analysis was indeed not directed at you. I think there are people who are in favor of unilaterally pausing/ceasing, often with specific other policy ideas.

And I think it's plausible that ex ante/with slightly different numbers, it could actually be good to unilaterally pause/cease, and in that case I'd like to know.

Replies from: MakoYass↑ comment by mako yass (MakoYass) · 2024-07-04T03:04:51.636Z · LW(p) · GW(p)

Well let's fix this then?

I find that it is better than not racing. Advocating for an international project to build TAI instead of racing turns out to be good if the probability of such advocacy succeeding is ≥20%.

Both of these sentences are false if you accept that my position is an option (racing is in fact worse than international cooperation which is encompassed within the 'not racing' outcomes, and advocating for an international project is in fact not in tension with racing whenever some major party is declining to sign on.)

There are actually a lot of people out there who don't think they're allowed to advocate for a collective action without cargo culting it if the motion fails, so this isn't a straw-reading.

Replies from: niplav↑ comment by niplav · 2024-07-10T08:24:35.154Z · LW(p) · GW(p)

Hm, interesting. This suggests an alternative model where the US tries to negotiate, and there are four possible outcomes:

- US believes it can coordinate with PRC, creates MAGIC, PRC secretly defects.

- US believes it can coordinate with PRC, creates MAGIC, US secretly defects.

- US believes it can coordinate with PRC, both create MAGIC, neither defects.

- US believes it can't coordinate with PRC, both race.

One problem I see with encoding this in a model is that game theory is very brittle, as correlated equilibria (which we can use here in place of Nash equilibria, both because they're easier to compute and because the actions of both players are correlated with the difficulty of alignment) can change drastically with small changes in the payoffs.
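A minimal sketch of that brittleness, using pure-strategy Nash equilibria in a 2×2 game instead of correlated equilibria (a deliberate simplification). The payoff numbers and the `temptation` parameter are hypothetical and are not drawn from the model in the post.

```python
from itertools import product


def pure_nash_equilibria(payoffs):
    """Return all pure-strategy Nash equilibria of a two-player game.

    payoffs[(a, b)] = (row player's payoff, column player's payoff)
    """
    row_actions = sorted({a for a, _ in payoffs})
    col_actions = sorted({b for _, b in payoffs})
    equilibria = []
    for a, b in product(row_actions, col_actions):
        u_row, u_col = payoffs[(a, b)]
        # Neither player may gain by unilaterally switching actions.
        row_ok = all(payoffs[(a2, b)][0] <= u_row for a2 in row_actions)
        col_ok = all(payoffs[(a, b2)][1] <= u_col for b2 in col_actions)
        if row_ok and col_ok:
            equilibria.append((a, b))
    return equilibria


def game(temptation: float):
    """Hypothetical payoffs: mutual cooperation pays 3 each, mutual racing
    pays 1 each, and racing against a cooperator pays `temptation`
    (the cooperator gets 0)."""
    return {
        ("cooperate", "cooperate"): (3.0, 3.0),
        ("cooperate", "race"): (0.0, temptation),
        ("race", "cooperate"): (temptation, 0.0),
        ("race", "race"): (1.0, 1.0),
    }


# Moving the temptation payoff from just below 3 to just above 3
# removes mutual cooperation from the set of equilibria.
below = pure_nash_equilibria(game(2.9))
above = pure_nash_equilibria(game(3.1))
```

With these illustrative numbers, mutual cooperation is an equilibrium at a temptation payoff of 2.9 but not at 3.1, so a payoff estimate that is off by a few percent changes the qualitative prediction.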

I hadn't informed myself super thoroughly about the different positions people take on pausing AI and the relation to racing with the PRC. My impression was that people were not being very explicit about what should be done there, and the people who were explicit were largely saying that a unilateral ceasing of TAI development would be better. But I'm willing to have my mind changed on that, and have updated based on your reply.

Replies from: MakoYass↑ comment by mako yass (MakoYass) · 2024-07-10T09:36:20.112Z · LW(p) · GW(p)

Defecting becomes unlikely if everyone can track the compute supply chain and if compute is generally supposed to be handled exclusively by the shared project.

Replies from: niplav↑ comment by niplav · 2024-07-14T16:42:56.478Z · LW(p) · GW(p)

I am not as convinced as many other people of compute governance being sufficient, both because I suspect there are much better architectures/algorithms/paradigms waiting to be discovered, which could require very different types of (or just less) compute (which defectors could then use), and because everything I've read so far about federated learning has strengthened my belief that part of the training of advanced AI systems could be done in federation (e.g. search). If federated learning becomes more important, then the existing stock of compute that countries have also becomes more important.

comment by Lukas_Gloor · 2024-07-03T01:09:22.247Z · LW(p) · GW(p)

It seems important to establish whether we are in fact going to be in a race [EA · GW] and whether one side isn't already far ahead.

With racing, there's a difference between optimizing the chance of winning vs optimizing the extent to which you beat the other party when you do win. If it's true that China is currently pretty far behind, and if TAI timelines are fairly short so that a lead now is pretty significant, then the best version of "racing" shouldn't be "get to the finish line as fast as possible." Instead, it should be "use your lead to your advantage." So, the lead time should be used to reduce risks.

Not sure this is relevant to your post in particular; I could've made this point also in other discussions about racing. Of course, if a lead is small or non-existent, the considerations will be different.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-07-03T02:44:22.989Z · LW(p) · GW(p)

Strong agree on the desire for conversations to go more like this, with models and clearly stated variables and assumptions. Strong disagree on the model itself, as I think it's missing some critical pieces. I feel motivated now to make my own model which can show the differences that stem from my different underlying assumptions.

Replies from: niplav

comment by Logan Zoellner (logan-zoellner) · 2024-09-09T14:12:11.656Z · LW(p) · GW(p)

I assume that in the mean & median cases, value(MAGIC)>value(US first, no race)>value(US first, race)>value(PRC first, no race)>value(PRC first, race)>value(PRC first, race)≫value(extinction)

While I think the core claim "across a wide family of possible futures, racing can be net beneficial" is true, the sheer number of parameters you have chosen arbitrarily or said "eh, let's assume this is normally distributed" demonstrates the futility of approaching this question numerically.

I'm not sure there's added value in an overly complex model (vs. simply stating your preference ordering). Feels like false precision.

Presumably hardcore Doomers have:

value(SHUT IT ALL DOWN) > 0.2 > value(MAGIC) > 0 = value(US first, no race)=value(US first, race)=value(PRC first, no race)=value(PRC first, race)=value(PRC first, race)=value(extinction)

Whereas e/acc has an ordering more like:

value(US first, race)>value(PRC first, race)>value(US first, no race)>value(PRC first, no race)>value(PRC first, race)>value(extinction)>value(MAGIC)

Replies from: niplav, rhollerith_dot_com↑ comment by niplav · 2024-10-14T17:46:23.401Z · LW(p) · GW(p)

There's two arguments you've made, one is very gnarly, the other is wrong :-):

- "the sheer number of parameters you have chosen arbitrarily or said "eh, let's assume this is normally distributed" demonstrates the futility of approaching this question numerically."

- "simply stating your preference ordering"

I didn't just state a preference ordering over futures, I also ass-numbered their probabilities and ass-guessed ways of getting there. To estimate the expected value of an action, one requires two things: a list of probabilities and a list of utilities. You merely propose giving one of those.
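A minimal sketch of why the ordering alone is not enough, with entirely made-up numbers: the two hypothetical actions below share the same utilities (and hence the same preference ordering over outcomes), yet which action has the higher expected value depends on the probabilities.

```python
def expected_value(outcomes):
    """Expected value of one action.

    outcomes: list of (probability, utility) pairs covering all outcomes.
    """
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * u for p, u in outcomes)


# Hypothetical utilities shared by both actions: good > mediocre > extinction.
GOOD, MEDIOCRE, EXTINCTION = 1.0, 0.5, 0.0

# Hypothetical probability lists for two actions.
action_a = [(0.60, GOOD), (0.10, MEDIOCRE), (0.30, EXTINCTION)]
action_b = [(0.30, GOOD), (0.65, MEDIOCRE), (0.05, EXTINCTION)]

ev_a = expected_value(action_a)  # 0.6*1.0 + 0.1*0.5 + 0.3*0.0
ev_b = expected_value(action_b)  # 0.3*1.0 + 0.65*0.5 + 0.05*0.0
```

With these illustrative inputs, action A comes out slightly ahead (about 0.65 against about 0.625); shifting a few points of probability from "good" to "extinction" in action A would reverse that, even though the utilities never change.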

(As for the "false precision", I feel like the debate has run its course; I consider Scott Alexander, 2017 to be the best rejoinder here. The world is likely not structured in a way that makes trying harder to estimate be less accurate in expectation (which I'd dub the Taoist assumption); thinking & estimating more should narrow the credences over time. It's the same reason why I've defended the bioanchors report [LW · GW] against accusations of uselessness for having distributions over 14 orders of magnitude.)

↑ comment by RHollerith (rhollerith_dot_com) · 2024-09-09T17:48:00.701Z · LW(p) · GW(p)

value(SHUT IT ALL DOWN) > 0.2 > value(MAGIC) > 0 = value(US first, no race)=value(US first, race)=value(PRC first, no race)=value(PRC first, race)=value(PRC first, race)=value(extinction)

Yes, that is essentially my preference ordering / assignments, which remains the case even if the 0.2 is replaced with 0.05 -- in case anyone is wondering whether there are real human beings outside MIRI who are that pessimistic about the AI project.

comment by Orpheus16 (akash-wasil) · 2024-07-03T00:42:28.545Z · LW(p) · GW(p)

A common scheme for a conversation about pausing the development [LW · GW] of transformative AI goes like this:

Minor: The first linked post is not about pausing AI development. It mentions various interventions for "buying time" (like evals and outreach) but it's not about an AI pause. (When I hear the phrase "pausing AI development" I think more about the FLI version of this which is like "let's all pause for X months" and less about things like "let's have labs do evals so that they can choose to pause if they see clear evidence of risk".)

At a basic level, we want to estimate how much worse (or, perhaps, better) it would be for the United States to completely cede the race for TAI to the PRC.

My impression is that (most? many?) pause advocates are not talking about completely ceding the race to the PRC. I would guess that if you asked (most? many?) people who describe themselves as "pro-pause", they would say things like "I want to pause to give governments time to catch up and figure out what regulations are needed" or "I want to pause to see if we can develop AGI in a more secure way, such as (but not limited to) something like MAGIC."

I doubt many of them would say "I would be in favor of a pause if it meant that the US stopped doing AI development and we completely ceded the race to China." I would suspect many of them might say something like "I would be in favor of a pause in which the US sees if China is down to cooperate, but if China is not down to cooperate, then I would be in favor of the US lifting the pause."

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-07-03T00:49:40.644Z · LW(p) · GW(p)

I doubt many of them would say "I would be in favor of a pause if it meant that the US stopped doing AI development and we completely ceded the race to China." I would suspect many of them might say something like "I would be in favor of a pause in which the US sees if China is down to cooperate, but if China is not down to cooperate, then I would be in favor of the US lifting the pause."

FWIW, I don't think this super tracks my model here. My model is "Ideally, if China is not down to cooperate, the U.S. threatens conventional escalation in order to get China to slow down as well, while being very transparent about not planning to develop AGI itself".

Political feasibility of this does seem low, but it seems valuable and important to be clear about what a relatively ideal policy would be, and honestly, I don't think it's an implausible outcome (I think AGI is terrifying and as that becomes more obvious it seems totally plausible for the U.S. to threaten escalation towards China if they are developing vastly superior weapons of mass destruction while staying away from the technology themselves).

comment by Vladimir_Nesov · 2024-07-02T19:47:55.681Z · LW(p) · GW(p)

I think the PRC is behind on TAI, compared to the US, but only about one year.

Unless TAI is close to current scale, there will be an additional issue with hardware in the future that's not yet relevant today. It's not insurmountable, but it costs more years.

comment by MichaelDickens · 2025-04-19T03:26:32.492Z · LW(p) · GW(p)

This is a good concept. I built a similar Squiggle model a few weeks ago* (although it's still a rough draft); I hadn't realized you'd beaten me to it. So I guess you won the race to build an arms race model? :P

If I'm reading this right, it looks like the model assumes that if the US doesn't race, then China gets TAI first with 100% probability. That seems wrong to me. Race dynamics mean that when you go faster, the other party also goes faster. If the US slows down, there's a good chance China also slows down.

Also, regarding specific values, the model's average P(doom) values are:

- 10% if race + US wins

- 20% if race + China wins

- 15% if no race + China wins

That doesn't sound right to me. Racing is very bad for safety and right now the US leaders are not doing a good job, so I think P(doom | no race & China wins) is less than P(doom | race & US wins). Although I think this is pretty debatable.

*My model found that racing was bad and I had to really contort the parameter values to reverse that result. I haven't thought much about the model construction so there could be unfair built-in assumptions.
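As a rough illustration of how the three averages quoted above interact, here is a sketch that compares P(doom) only and ignores how the non-doom outcomes are valued. It takes on board the assumption questioned in this comment, that not racing means China gets TAI first with certainty. The parameter `p_us_wins` (the chance the US wins a race) is hypothetical and is not a value taken from the post's model.

```python
# Average P(doom) values as quoted in the comment above.
P_DOOM_RACE_US_WINS = 0.10
P_DOOM_RACE_PRC_WINS = 0.20
P_DOOM_NO_RACE_PRC_WINS = 0.15


def p_doom_if_racing(p_us_wins: float) -> float:
    """P(doom) under racing, mixing over who wins the race."""
    if not 0.0 <= p_us_wins <= 1.0:
        raise ValueError("p_us_wins must be a probability")
    return (p_us_wins * P_DOOM_RACE_US_WINS
            + (1.0 - p_us_wins) * P_DOOM_RACE_PRC_WINS)


def break_even_p_us_wins() -> float:
    """Value of p_us_wins at which racing and not racing give equal P(doom).

    Solves p * a + (1 - p) * b = c for p, with a, b, c the constants above.
    """
    return ((P_DOOM_RACE_PRC_WINS - P_DOOM_NO_RACE_PRC_WINS)
            / (P_DOOM_RACE_PRC_WINS - P_DOOM_RACE_US_WINS))


threshold = break_even_p_us_wins()
```

On these numbers the break-even point is about one half: racing lowers P(doom) only if the US is more likely than not to win the race. Lowering P(doom | no race & China wins), as suggested above, raises that bar.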

comment by Viliam · 2024-07-04T10:54:21.993Z · LW(p) · GW(p)

norvid_studies: "If Carthage had won the Punic wars, would you notice walking around Europe today?"

Will PRC-descended jupiter brains be so different from US-descended ones?

I suppose this will depend a lot on the AI alignment and how much the AI takes control of the world.

If Carthage had won the war and then built a superhuman AI aligned to their values, we probably would notice.