A Slow Guide to Confronting Doom

post by Ruby · 2025-04-06T02:10:56.483Z · LW · GW · 14 comments

Following a few events[1] in April 2022 that caused many people to update sharply and negatively on outcomes for humanity, I wrote A Quick Guide to Confronting Doom.

I advised:

- Think for yourself

- Be gentle with yourself

- Don't act rashly

- Be patient about helping

- Don't act unilaterally

- Figure out what works for you

This is fine advice and all, I stand by it, but it's also not really a full answer to how to contend with the utterly crushing weight of the expectation that everything and everyone you value will be destroyed in the next decade or two.

Feeling the Doom

Before I get into my suggested psychological approach to doom, I want to clarify the kind of doom I'm working to confront. If you are impatient, you can skip to the actual advice.

The best analogy I have is the feeling of having a terminally ill loved one with some uncertain yet definitely smallish number of years to live. It's a standardly terminal condition. Not dying would be quite surprising. There's no known effective treatment. Perhaps there's some crazy new experimental treatment I might find or even develop myself if I apply heroic agency.

Except it's not just a single loved one who has the condition, it is myself too, as well as everyone I have loved, do love, or could ever love, plus everyone my loved ones love, and so on. We are all terminal.

The disappointment of imminent death is all the more crushing because just a few years ago researchers announced breakthrough discoveries that suggested [existing, adult] humans could have healthspans of thousands of years. To drop the analogy, here I'm talking about my transhumanist beliefs. The laws of physics don't demand that humans slowly decay and die at eighty. It is within our engineering prowess to defeat death, and until recently I thought we might just do that, and I and my loved ones would live for millennia, becoming post-human superbeings.

To quote a speech from my wedding in 2015:

Imagine a time when time is no longer imaginable. When the dances never need to end, when the lovers never need to die, when entropy no longer dominates. Imagine a world where we could explore with full scope, for as long as we wanted, every idea, every concept, every location. To dance with the stars, to dance with each other, to dance simply for the sake of dancing with no time limits. Imagine a world of radical life expansion, accompanying radical life extension. Imagine all the things possible. All the potential art, all the potential beauty, all the potential creation and ideation. What will life look like free from the shackles of time? I doubt we could even imagine it with the right justice.

I cry because I don't think that will happen[2].

I'm not sure that I ever strongly felt that I would die at eighty or so. I had a religious youth and believed in an immortal soul. Even when I came out of that, I quickly believed in the potential of radical transhuman life extension. It is just the last few years that I am feeling I am going to die (quite probably). Me and everyone else, so I can't even have the satisfaction that the things and people I care about are continuing on.

The above is all bad enough; I wouldn't have thought it would be possible to feel a lot worse after already expecting the loss of all value. Total astronomical waste. And yet.

Last year I became a parent. For me, becoming a parent induces a deep desire to care for my child and ensure she is flourishing and has the best experience.

I can't promise her that. I don't know that she even gets an adolescence. I brought her into a world that's doomed. And doomed for no better reason than because people were incapable of not doing something.

My wife wrote a letter to our infant daughter recently. It concluded:

I don’t know that we can offer you a good world, or even one that will be around for all that much longer. But I hope we can offer you a good childhood. At the very least, we can offer you a house filled with love.

Facing the doom

In confronting doom, we must answer several questions: (i) what to believe? (ii) what to do/pursue? (iii) what to think about / where to pay attention? and (iv) what emotions to feel?

This post is me sharing the approaches that I use to answer these questions.

Stay hungry for value

The same thing we do every night...try to gain as much value as possible! – Pinky and the Brain

Living in the face of doom is just a special case of living. One's approach to living, deep down if not at the surface level algorithms, should cash out to trying to accumulate as much value as you can. That doesn't change just because doom is likely.

We can split the value one pursues into the value one is accruing right now (I like to call this "harvesting") and the value one is preparing to harvest in the future (I call this "sowing").

Most of the value, or expected value, would likely be in the future (because there's so much of it!) but for a few reasons it makes sense to harvest now and not just sow for the future. (1) In order to flourish/maximize productivity, the human mind and body need to experience a certain amount of value now. Things like fun, time with loved ones, enjoyable experiences, and so on. Instrumentally, you get more future value by having some now. (2) I think a certain amount of discounting is in order given all the uncertainty about the future. Could be the future won't happen, and you'll have ended the game with still a few bits of value if you harvest some now. (3) Also kinda instrumentally, I feel like if you didn't try to have value now, you'd lose sight of what you're aiming at the grand-scale in the future.

I think theoretically doom[3] shifts the balance towards focusing on present value, but really the balance is mostly determined by what you need to flourish as a human, which varies by person.

This really is the foundational principle: at the end of the day, even in the face of doom, we're still trying to accumulate as much value as possible before the last timestep. It's just a question of how to do that.

The bitter truth over sweet lies

Truth feels like a sacred value for me, and I'll deontologically and categorically take it over any comforting falsehood, no matter how painful. It feels like a terminal value, but also I feel deeply that truth is essential for obtaining the most value instrumentally.

To wit, I think the reason we are doomed is because too many people are running cognitive algorithms that choose to believe what is locally pleasant and convenient. The response that is going to conceivably counter that is not going to be "let me join in too, and distort my thoughts so that I too believe something more pleasant and convenient to believe".

This means that in facing doom, I'm not going to do anything that amounts to changing my beliefs about reality to something less stark. No finding hope because "there's a chance", no distorting my thinking to believe in plans that aren't that sensical.

Don't look away

But more than just "what do I believe", I think it's of equal or greater importance what you pay attention to. A person can correctly believe that we face doom yet try to just not think about it. In effect, if you never think about doom, are you any better off than if you didn't believe in it?

This for me means that I'm eschewing any approach to facing doom that consists of trying to avoid thinking about it. This doesn't mean intentionally trying to stay cognizant of doom in every moment, but it does mean not purposefully trying to distract myself or engaging in a kind of doublethink[4].

Flourish as best one can

I don't have an exact formula or checklist for human flourishing, but I do have a classifier that can comparatively say "this is not very flourishing-like" and "this is much more flourishing-like".

Definitely it feels weird to suggest that a person could "flourish" while their world hurtles towards destruction. And yet. I think there's a "healthiest you can be given the circumstances". And really, that's what this guide is about.

I suspect that flourishing humans get enough sleep, spend time with friends and family, play, rest, do meaningful work, and other things. They do things like making space for grief while also taking all the good they still can.

In the course of writing this I did a search for relevant material produced by the rest of the world and came across a book by a 35-year-old woman diagnosed with terminal bowel cancer: How to Live When You Could Be Dead. I have yet to read much, but I get a sense that the book is about flourishing as best you can, even in the face of death.

This time with feeling

The reason why people systematically believe false things and avoid paying attention to true things is because doing the opposite would cause them to have unpleasant feelings[5].

Facing doom means, very likely, causing oneself to have many unpleasant feelings, possibly quite a lot of the time. Which means a cornerstone of learning to face doom well is learning to have unpleasant emotions optimally.

Here are some things I believe about [unpleasant] emotions:

- Almost every emotion can be tolerated[6]

- It is possible to feel multiple emotions at once

- The legitimate means for changing emotions are either (i) causing one's beliefs to become more true, (ii) changing reality

- Illegitimate means of changing emotions are via (a) shifting beliefs towards falsehood, (b) shifting attention[7] in ways that cause one to do a worse job at addressing the underlying reality that's causing the unpleasant emotion

- There are some forms of shifting emotions by shifting attention that are probably good and some that are bad[8]

- Emotions are part of human cognition and their purpose is to guide action (same as thoughts)

- They often have useful things to say but are fallible

- Fallibility can be either because the emotion is based on a false belief or because the emotion suggests a course of action that won't be productive[9]

I believe very firmly that one should make space for unpleasant emotions. That doesn't mean liking them or wanting them or giving up on shifting them via legitimate means. It does mean giving up illegitimate methods and it does mean patience. Sometimes when an emotion feels especially intolerable, I can feel a desperation to escape it that maybe pushes me to action, but the desperation undermines my effectiveness.

There's a skill of "inserting a gap between feeling an emotion and responding to it" such that there's a moment in which one can reflect on whether the intended plan will actually help.

Terms I use here are capital-s Struggling for responding to unpleasant emotions with resistance and capital-a Acceptance for making space for emotions, as unpleasant mind-guests as they might be.

[The approach I'm advocating here is my own take on the cluster of Acceptance and Commitment Therapy / Radical Acceptance / Dialectical Behavior Therapy. I never found a resource that explains it as I've ended up using it. The key thing is that you are not accepting reality (reality sucks, let's change it), you are accepting your emotional experience in the sense that your emotional experience is tolerable and it is allowable that you remain in it – not all resources must be mobilized to change your emotional experience. Perhaps you'll find ways to feel better, perhaps you won't, that's okay.]

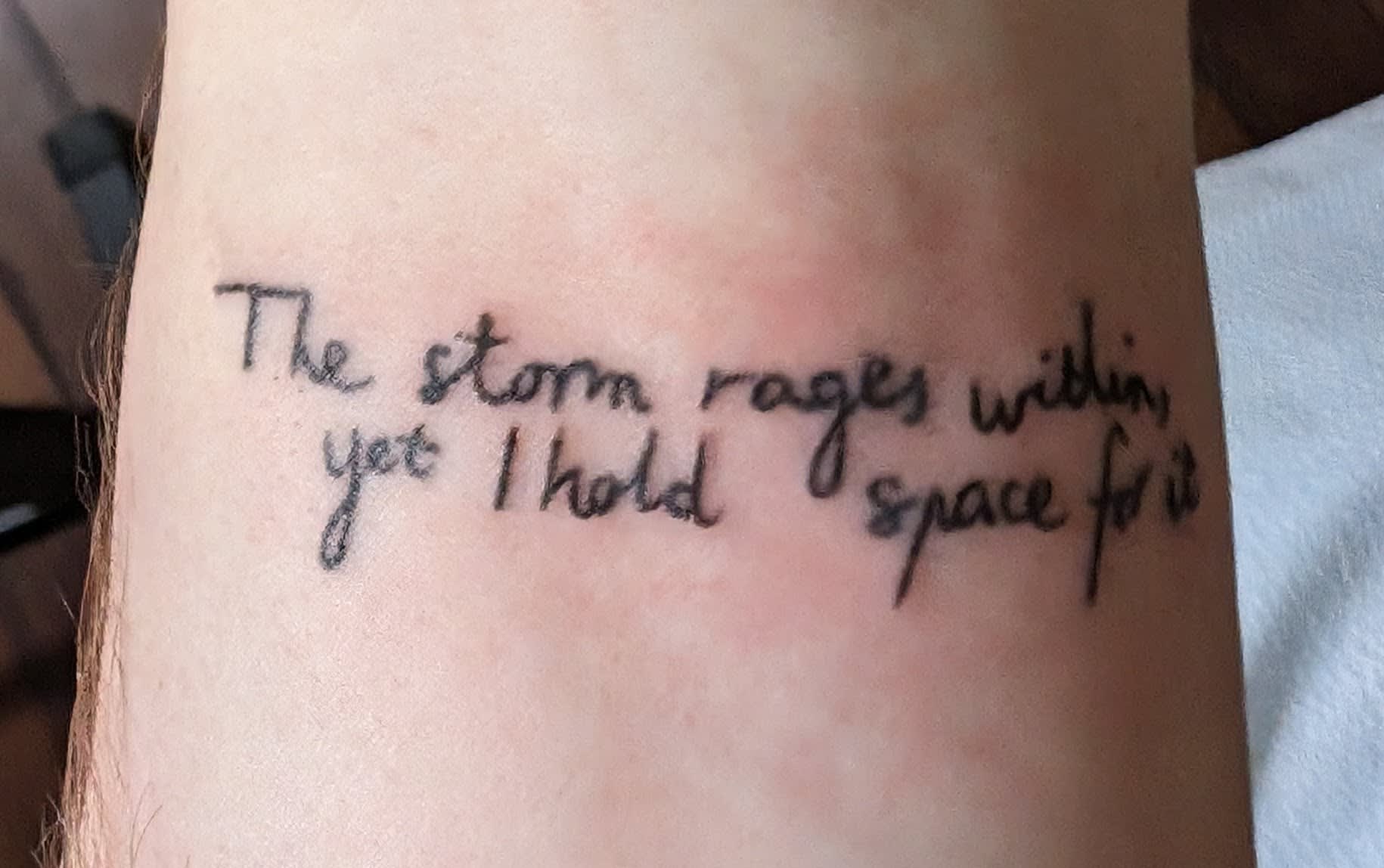

This phrase, tattooed on my left wrist, is a reminder to Accept my unpleasant emotions

If you face doom, then it's likely you're going to have a lot of really unfun emotions. Let them be. You are large enough to contain them. You can contain the storm. Let it slosh around inside you. Not fun. But you can do it. Struggling is worse. Struggling only makes it worse.

But how am I supposed to harvest any value if I'm having these unpleasant feelings? If I feel sad and depressed or angry or <whatever> all the time?

As I wrote above, it's possible to have multiple emotions at once. Mixed feelings are :thumbs-up:

What I do is I continue to go about life and have good stuff. Tasty meals, exhilarating drives, spending time with my child, art, music, entertainment, NSFW, and on and on. And yeah, sometimes the experience is a bit worse because doom is on my mind.

It's better when I don't fight the feelings of doom. I let them coexist with the rest of the feelings. There's a calm in this. The calm of not Struggling.

And when I don't Struggle, there's a much higher chance that the unpleasant doom-related feelings will kindly recede into the background for a while and I can fully enjoy the moment.

Mindfulness

I am not a meditation person and don't think I've ever meditated for longer than an hour. I do believe pretty strongly that mindfulness is a useful practice. I think in particular a few skill points here help with holding space for [multiple] emotions.

Basic skill levels in mindfulness seem attainable with a bit of meditation practice. I'd maybe try The Mind Illuminated and Mindfulness Meditation for Pain Relief, since chronic grief about the world is kinda a chronic kind of pain.

The time for action is now

Okay, excellent, space has been made for the unpleasant emotions. That was Step 1. Step 2 is we gotta go after all the value that can be obtained, even in this disappointing timeline.

Step 2 is committing to get whatever value you can.

I read about a guy in a book once, I don't remember if he was real or not. He wanted to travel the world but couldn't afford to. Since a major part of what he valued about travel was trying interesting cuisines, he obtained what value he could by eating out frequently at restaurants with foreign cuisine in his city. He couldn't get all the value he wanted (the entire cosmic endowment), but worked to get the value he could.

If you had a million dollars in cash and $999,000 of it got burned up in a fire, would you burn the $1000 you had left?

Same for us. Harvest the value we can. And the potential future is so large that even in these doomy times, the expected value is large. Small 'e' perhaps, but still enormously large 'v'. Same as we do every day.

Accept the emotions, commit to pursuing the value.

Creating space for miracles

Doom feels really likely to me. It just seems like so many people are going after what's immediately and locally convenient for them, heedless of the dangers of superintelligence.

But who knows, perhaps one of my assumptions is wrong. Perhaps there's some luck better than humanity deserves. If this happens to be the case, I want to be in a position to make use of it.

A framing for my actions is "I'm creating space for miracles". Probably what I'm doing won't help, but maybe I can do things that mean if the unexpected positive happens, we'll be in a better position to make use of it.

How does a good person live in such times?

This is another question I ask myself. It's kind of a virtue ethics frame. It just really doesn't feel like a good person quits at this point and retires to the countryside. I am not certain enough for that. I can't rule out miracles, the future could be so astronomically good, and so a good person keeps trying.

And there's satisfaction in that! There's value in that. I would like to think that when my life ends, I can feel that I lived it virtuously.

Naturally what is virtuous is determined by consequentialist calculations, but my human brain works more natively with notions of virtue and I think the human brain does distill consequentialist considerations down into virtues better than it distills them down into consequentialist principles.

Continue to think, tolerate uncertainty

Man, uncertainty is hard. There's a literature on ambiguity aversion: that people would prefer a certain quite bad thing over an uncertain less bad thing.

I think this effect creates a temptation to increase one's P(doom) and just feel like you know how reality is. At least I feel this pressure.

Yet I think it's correct to reflect on what I actually know, and to continue to think and continue to pay attention to the world. It's correct to keep in mind my uncertainty even though, weirdly, that feels harder than concluding certain doom.

It might also be that if I'm maximally doomy, then negative news hurts me less. The more hope I'm holding out, the more a bad development stings. I don't know how common it is, but as above I am pledged to truth, and that includes trying to stay calibrated.

Reality is weird. No one has developed ASI before, maybe we get lucky that despite wanton irresponsibility given what we know, it still goes well. 3% chance? 5%? Not sure. I don't know enough to rule it out.

Being a looker

There's probably an optimal amount of time to spend staring at the doom. It's not 100%, and it's not 0%. I think that overwhelmingly, people on planet Earth are much too close to 0%. I probably won't hit the optimal point either.

Personally, I feel that since I'm gonna get it wrong, I can at least balance it out a tiny bit. If anything, I will stare too much at the doom and that will hurt me a bit personally. Maybe good for the planet though.

I have chosen to be a Looker (a term I made up). One who looks at the doom. And hey, I at least aspirationally have room in me for the unpleasant feelings that result.

Don't throw away your mind

Kinda a sub-point under still try to flourish.

This is an excellent post by TsviBT that you should go read. He writes about playful thinking vs urgent thinking. Which I might rephrase as thinking done out of immediate curiosity and enjoyment and fun vs thinking done towards a[n urgent] goal.

In dire times such as your civilization being about to destroy itself, one might be tempted to spend all one's time on the latter kind of cognition. Tsvi argues that would be a mistake, and I think he is right.

It seems playful thinking can accomplish things that directed urgent thinking can't, and we need those things.

I also think this is a special case of the more general principle that to maximize value, one has to stay a flourishing, healthy human.

By the same principle: have hobbies, spend time with your loved ones, etc.

Exactly how much? It's hard to say. I think in worlds where it seems like there's clear and specific hope via means with traction (one is perhaps in the middle of the S-curve of hope), then you play less and work more. When at the bottom of the S-curve, it doesn't make sense to sacrifice quite so much from the now.

Habryka once said something about doing trade between yourselves in different worlds. In worlds where your efforts make more of a difference you work harder, in exchange for which, in worlds with less traction, you, I dunno, spend more time on hobbies.

Seems sensible to me.

Damned to lie in bed...

I strongly suspect human brains have the ability to enter a depression state because the state does something useful. It seems to me that depression often arises from feeling stuck or not believing that one is able/on track to achieve acceptable outcomes. It's like the brain, dissatisfied with the situation, boycotts and withdraws motivation. Something like that.

Having one's brain not let you mindlessly or unreflectively continue down a path that won't work, seems maybe useful. I'm not sure if that's it, but I think it's something.

This makes me think that humans who are flourishing in general will sometimes enter depression states and that's fine, if not good. Yet, there's probably depression done in a healthy way and depression done in an unhealthy way, I wish I had more to say there.

It seems that predicting doom for the world can result in depression, but also that feeling depressed makes one feel more doomy. (It really seems like in a depression state, all predictions get negatively biased.)

My guess is that feeling some depression (or even a lot) while confronting doom is normal, if not good. Those depression feelings are included in the feelings that one should make space for. But there's also such a thing as getting stuck for too long in depression, and leaving too much of the realizable value of life on the table.

If feeling depressed, I would say, that's fine, feel that way! Legit and valid! But also I suggest following regular depression advice around continuing to sleep well, eat well, socialize, etc, and finding moments of joy where you can.

Oh, and most of all depression exerts an influence on one's predictions. I'd suggest trying hard to keep one's beliefs calibrated as much as possible while depressed (and when not depressed too).

Worries, compulsions, and excessive angst

Feelings of doom feel ripe for an anxiety/OCD-like fixation separate from depression. If you can't think about anything other than doom or are feeling chronically anxious about it, then my guess is while the feelings are kind of justified by reality, there's also some concomitant mental health thing. I suggest all the things I suggest for everyone, except harder.

I also recommend exposure and response prevention therapy, where in this case the exposure is to painful doomy feelings and the kinds of things that provoke them.

Comments on others' approaches

Fortunately I'm not the only person to write up thoughts on confronting doom. It seemed useful to collect others' approaches, at least as many as I could find, in one place and provide comment on them too. To avoid having an overly long post, I split these out into their own piece:

A collection of approaches to confronting doom, and my thoughts on them

What does it mean to be "okay"?

A common concept/question in others' confronting-doom essays is "how to be okay?". I haven't explicitly used that framing, but I think perhaps I'm implicitly answering that question too.

So maybe useful to reflect on what it means to be okay. Actually, I think it's easier to start with what "not okay" means:

- If I remain in this state, I will deteriorate and be destroyed[10]

- I cannot function in this state

- This state is too upsetting

- I must prioritize dealing with this state over everything else ("it is blocking")

- I am deeply disoriented

- I need to stop what I'm doing and orient to this situation, update my strategy, figure out how to even proceed: this is not business as usual and I must find new procedures, I can't just carry on the old ones

- I do not know how to "relate to this"

(You could refer to either yourself or the external situation as being okay/not-okay, but in truth there needs to be a person from whose perspective things are or are not okay.)

It's a little bit ironic that the situation of the world being doomed is so overridingly important that the emotional toll it takes would undermine one's ability to fight the doom (or at least obtain what value can still be obtained).

I think my approach, outlined in this post, does offer solutions to the above ways one might feel non-okay in the face of doom. I think even if my solution doesn't work or isn't right for some, these are the notes to hit.

The constraint in addressing that is most important to me is: "don't solve this by pretending you're in a different situation" (and simply not thinking about it is pretending).

I think there are variations on the specific things you pretend to feel better, e.g. pretending "things are currently doomed but my plan will work". Or "there's still a chance, right!"[11] These are lesser than pretending doom isn't the situation but I think they're distortions that will undermine making effective plans.

If you're gonna remember just a couple things

Almost all emotions are tolerable and will not destroy you if you have them, even if they really really suck. If believing in doom makes you feel awful, that's okay, in fact appropriate. You should not reflexively struggle to avoid feelings, but that doesn't imply liking them or giving up.

There are legitimate and illegitimate ways to shift feelings. Legitimate ways include changing underlying reality and remembering the good that coexists with the bad. Illegitimate means include deceiving yourself about reality and filling your attention with distractions so that it's as if you don't believe the upsetting thing – these approaches undermine your ability to be effective in going after what you value.

Once you have made room for your [possibly very deeply upsetting] beliefs and feelings, you can get on with the business of pursuing the value in life that remains to be pursued.

Same as we do every day, Pinky: accept our feelings and pursue value.

- ^

- ^

My own predictions of doom come heavily from the following beliefs:

- Solving the alignment problem, while perhaps doable, is not so trivial that it will happen by default by people who are not especially trying hard to solve it.

- Almost all the people making decisions relevant to the development of ASI do not sufficiently understand the risks and take them seriously.

- While we might succeed at slapping on a regulation here or there, ultimately these will fail because people who never cared that hard will just find the nearest unblocked strategy for doing the dangerous thing they wanted to anyway.

- ^

And particularly doom that's not "doomed unless we do something" and more "doom and there's nothing we can do about it".

- ^

In the Appendix I evaluate approaches to facing doom suggested by others. There I state that I think Sarah's approach violates this principle.

- ^

It is a major flaw of human mind architecture that when we have negative emotions because of negative realities, we so commonly react to alter our minds rather than altering reality.

- ^

Granted that particularly strong ones can be quite distracting and very inconvenient. I still venture that trying to fight them "illicitly" as policy will cost more than making space for them.

- ^

I'd lump in taking drugs or other mind altering substances in the general category of "shifting attention".

- ^

Gratitude journaling is an example of the former, drugs and addictive video games of the latter. Possibly a difference is gratitude journaling doesn't necessarily mean not thinking about the bad in your life, but rather adding good stuff alongside the bad stuff in your context window.

- ^

Consider the emotion of anger at the colleague who you believe has stolen your lunch from the work fridge for the third time. You could be mistaken about who stole your lunch, or correct but getting angry will accomplish nothing because your colleague is 80 and has dementia and what you should really do is get them to retire.

A big danger with emotions is that intensity of emotion can be mistaken for epistemic certainty about the beliefs an emotion is premised on: I feel so angry so he must have done it!!

- ^

Compare: a person with an untreated stab-wound who will otherwise "die" is not okay. A person in severe mental distress is not okay if they are at risk of grievously harming themselves. A person overwhelmed with doom might feel unable to function normally and be in severe distress, and in that sense not be okay.

- ^

It might have been a Facebook thread or a tweet, but I recall Eliezer claiming that although he could, most people cannot work hard on a plan they [emotionally/System 1] believe has less than a 70% chance of success.

I do fear this could be going on for many people. Even when they'll say that their explicit likelihood of success is very low, like 3%, at the gut-level they're treating it as much higher. So okayness is obtained by giving one's brain "but there's still a chance!" If this is going on, I feel iffy about it but am not sure.

I discuss these approaches in the companion post, but I think maybe what the "dignity-points" approach gets you over "play to your outs" is that you might be able to make a plan to get a dignity point with >70% whereas you can't do that for outright survival. So in pursuing dignity points, there's less risk of gut-level deceiving yourself in order to have motivation – you are in fact pursuing a conceivably achievable goal.

- ^

I realized that one reason is that, as much as fighting isn't fun, giving up would feel worse. In fact I do not think giving up would be correct.

14 comments


comment by MondSemmel · 2025-04-06T21:54:31.780Z · LW(p) · GW(p)

I personally first deeply felt the sense of "I'm doomed, I'm going to die soon" almost exactly a year ago, due to a mix of illness and AI news. It was a double-whammy of getting both my mortality, and AGI doom, for the very first time.

Re: mortality, it felt like I'd been immortal up to that point, or more accurately a-mortal or non-mortal or something. Up to this point I hadn't anticipated death happening to me as anything more than a theoretical exercise. I was 35, felt reasonably healthy, was familiar with transhumanism, had barely witnessed any deaths in the family, etc. I didn't feel like a mortal being that can die very easily, but more like some permanently existing observer watching a livestream of my life: it's easy to imagine turning off the livestream, but much harder to imagine that I, the observer, will eventually turn off.

After I felt like I'd suddenly become mortal, I experienced panic attacks for months.

Re: AGI doom: even though I've thought way less about this topic than you, I do want to challenge this part:

And doomed for no better reason than because people were incapable of not doing something.

Just as I felt non-mortal because of an anticipated transhumanist future or something, so too did it feel like the world was not doomed, until one day it was. But did the probability of doom suddenly jump to >99% in the last few years, or was the doom always the default outcome and we were just wrong to expect anything else? Was our glorious transhumanist future taken from us, or was it merely a fantasy, and the default outcome was always technological extinction?

Are we in a timeline where a few actions by key players doomed us, or was near-term doom always the default overdetermined outcome? Suppose we go back to the founding of LessWrong in 2009, or the founding of OpenAI in 2015. Would a simple change, like OpenAI not being founded, actually meaningfully change the certainty of doom, or would it have only affected the timeline by a few years? (That said, I should stress that I don't absolve anyone who dooms us in this timeline from their responsibility.)

From my standpoint now in 2025, AGI doom seems overdetermined for a number of reasons, like:

- Humans are the first species barely smart enough to take over the planet, and to industrialize, and to climb the tech ladder. We don't have dath ilan's average IQ of 180 or whatever. The arguments for AGI doom are just too complicated and time-consuming to follow, for most people. And even when people follow them, they often disagree about them.

- Our institutions and systems of governance have always been incapable of fully solving mundane problems, let alone extinction-level ones. And they're best at solving problems and disasters they can actually witness and learn from, which doesn't happen with extinction-level problems. And even when we faced a global disaster like Covid-19, our institutions didn't take anywhere near sufficient steps to prevent future pandemics.

- Capitalism: our best, or even ~only, truly working coordination mechanism for deciding what the world should work on is capitalism and money, which allocates resources towards the most productive uses. This incentivizes growth and technological progress. There's no corresponding coordination mechanism for good political outcomes, incl. for preventing extinction.

- In a world where technological extinction is possible, tons of our virtues become vices:

  - Freedom: we appreciate freedoms like economic freedom, political freedom, and intellectual freedom. But that also means freedom to (economically, politically, scientifically) contribute to technological extinction. Like, I would not want to live in a global tyranny, but I can at least imagine how a global tyranny could in principle prevent AGI doom, namely by severely and globally restricting many freedoms. (Conversely, without these freedoms, maybe the tyrant wouldn't learn about technological extinction in the first place.)

  - Democracy: politicians care about what the voters care about. But to avert extinction you need to make that a top priority, ideally priority number 1, which it can never be: no voter has ever gone extinct, so why should they care?

  - Egalitarianism: resulted in IQ denialism; if discourse around intelligence was less insane, that would help discussion of superintelligence.

  - Cosmopolitanism: resulted in pro-immigration and pro-asylum policy, which in turn precipitated both a global anti-immigration and an anti-elite backlash.

  - Economic growth: the more the better; results in rising living standards and makes people healthier and happier... right until the point of technological extinction.

  - Technological progress: I've used a computer, and played video games, all my life. So I cheered for faster tech, faster CPUs, faster GPUs. Now the GPUs that powered my games instead speed us up towards technological extinction. Oops.

  - And so on.

Yudkowsky had a glowfic story about how dath ilan prevents AGI doom, and that requires a whole bunch of things to fundamentally diverge from our world. Like a much smaller population; an average IQ beyond genius-level; fantastically competent institutions; a world government; a global conspiracy to slow down compute progress; a global conspiracy to work on AI alignment; etc.

I can imagine such a world not blowing itself up. But even if you could've slightly tweaked our starting conditions from a few years or decades ago, weren't we going to blow ourselves up anyway?

And if doom is sufficiently overdetermined, then the future we grieve for, transhumanist or otherwise, was only ever a mirage.

↑ comment by Ruby · 2025-04-06T22:02:41.065Z · LW(p) · GW(p)

So gotta keep in mind that probabilities are in your head (I flip a coin, it's already tails or heads in reality, but your credence should still be 50-50). I think it can be the case that we were always doomed even if we weren't yet justified in believing that.

Alternatively, it feels like this pushes up against philosophies of determinism and free will. The whole "well the algorithm is a written program and it'll choose what it chooses deterministically" but also from the inside there are choices.

I think a reason to have been uncertain before and update more now is just that timelines seem short. I used to have more hope because I thought we had a lot more time to solve both technical and coordination problems, and then there was the DL/transformers surprise. You make a good case and maybe 50 years more wouldn't make a difference, but I don't know, I wouldn't have as high p-doom if we had that long.

↑ comment by MondSemmel · 2025-04-06T22:37:50.741Z · LW(p) · GW(p)

I know that probabilities are in the map, not in the territory. I'm just wondering if we were ever sufficiently positively justified to anticipate a good future, or if we were just uncertain about the future and then projected our hopes and dreams onto this uncertainty, regardless of how realistic that was. In particular, the Glorious Transhumanist Future requires the same technological progress that can result in technological extinction, so I question whether the former should've ever been seen as the more likely or default outcome.

I've also wondered about how to think about doom vs. determinism. A related thorny philosophical issue is anthropics: I was born in 1988, so from my perspective the world couldn't have possibly ended before then, but that's no defense whatsoever against extinction after that point.

Re: AI timelines, again this is obviously speaking from hindsight, but I now find it hard to imagine how there could've ever been 50-year timelines. Maybe specific AI advances could've come a bunch of years later, but conversely, compute progress followed Moore's Law and IIRC had no sign of slowing down, because compute is universally economically useful. And so even if algorithmic advances had been slower, compute progress could've made up for that to some extent.

Re: solving coordination problems: some of these just feel way too intractable. Take the US constitution, which governs your political system: IIRC it was meant to be frequently updated in constitutional conventions, but instead the political system ossified and the last meaningful amendment (18-year voting age) was ratified in 1971, or 54 years ago. Or, the US Senate made itself increasingly ungovernable with the filibuster, and even the current Republican-majority Senate didn't deign to abolish it. Etc. Our political institutions lack automatic repair mechanisms, so they inevitably deteriorate over time, when what we needed was for them to improve over time instead.

↑ comment by Ruby · 2025-04-06T22:56:06.209Z · LW(p) · GW(p)

I'm just wondering if we were ever sufficiently positively justified to anticipate a good future, or if we were just uncertain about the future and then projected our hopes and dreams onto this uncertainty, regardless of how realistic that was.

I think that's a very reasonable question to be asking. My answer is I think it was justified, but not obvious.

My understanding is it wasn't taken for granted that we had a way to get more progress with simply more compute until the deep learning revolution, and even then people updated on specific additional data points for transformers, and even then people sometimes say "we've hit a wall!"

Maybe with more time we'd have time for the US system to collapse and be replaced with something fresh and equal to the challenges. To the extent the US was founded and set in motion by a small group of capable motivated people, it seems not crazy to think a small to large group of such people could enact effective plans with a few decades.

↑ comment by MondSemmel · 2025-04-06T23:07:12.220Z · LW(p) · GW(p)

One more virtue-turned-vice for my original comment: pacifism and disarmament: the world would be a more dangerous place if more countries had more nukes etc., and we might well have had a global nuclear war by now. But also, more war means more institutional turnover, and the destruction and reestablishment of institutions is about the only mechanism of institutional reform which actually works. Furthermore, if any country could threaten war or MAD against AI development, that might be one of the few things that could possibly actually enforce an AI Stop.

↑ comment by Sam Iacono (sam-iacono) · 2025-04-07T02:56:06.705Z · LW(p) · GW(p)

Do you really think p(everyone dies) is >99%?

comment by Declan Molony (declan-molony) · 2025-04-06T14:52:19.651Z · LW(p) · GW(p)

In reckoning with my feelings of doom, I wrote a post [LW · GW] in which I drew upon Viktor Frankl's popular book Man's Search for Meaning. Here's an excerpt from that post that discusses why hope is not cheap, but necessary for day-to-day life:

Frankl, a psychologist and Holocaust survivor, wrote about his experience in the concentration camps. He observed two types of inmates—some prisoners collapsed in despair, while others were able to persevere in the harsh conditions:

"Psychological observations of the prisoners have shown that only the men who allowed their inner hold on their moral and spiritual selves to subside eventually fell victim to the camp’s degenerating influences.

"We who lived in concentration camps can remember the men who walked through the huts comforting others, giving away their last piece of bread. They [were] few in number, but they offer sufficient proof that everything can be taken from a man but one thing: the last of the human freedoms—to choose one’s attitudes in any given set of circumstances [LW · GW]."

A depressed person honestly believes there’s no hope that things will improve. Consequently, they collapse into a vegetative state of despair. It follows, therefore, that hope is literally the prerequisite for action.

So if I didn’t implicitly believe there’s a tomorrow worth living, then I wouldn’t have written this post.

I ended that post with this:

If a storm disturbs the rock garden of a Zen monk, the next day he goes to work to restore its beauty.

comment by arisAlexis (arisalexis) · 2025-04-06T10:18:25.244Z · LW(p) · GW(p)

I think having a huge p(doom) vs a much smaller one would change this article substantially. If you have 20-30 or 50% doom you can still be positive. In all other cases it sounds like a terminal illness. But since the number is subjective living your life like you know you are right is certainly wrong. So I take most of your article and apply it in my daily life, and the closest thing to this is being a stoic, but by no means do I believe that it would take a miracle for our civilization to survive. It's more than that and it's important.

↑ comment by Ruby · 2025-04-06T10:59:20.803Z · LW(p) · GW(p)

But since the number is subjective living your life like you know you are right is certainly wrong

I don't think this makes sense. Suppose you have a subjective belief that a vial of tasty fluid is 90% likely to be lethal poison: you're going to act in accordance with that belief. Now if other people think differently from you, and you think they might be right, maybe you adjust your final subjective probability to something else, but at the end of the day it's yours. That it's subjective doesn't rule out it being pretty extreme.

If what you mean is you can't be that confident given disagreement, I dunno, I wish I could have that much faith in people.

↑ comment by mattmacdermott · 2025-04-07T00:09:43.646Z · LW(p) · GW(p)

If what you mean is you can't be that confident given disagreement, I dunno, I wish I could have that much faith in people.

In another way, being that confident despite disagreement requires faith in people — yourself and the others who agree with you.

I think one reason I have a much lower p(doom) than some people is that although I think the AI safety community is great, I don’t have that much more faith in its epistemics than everyone else’s.

comment by danielechlin · 2025-04-06T14:03:11.645Z · LW(p) · GW(p)

This does sound OCD in that all the psychic energy is going into rationalizing doom slightly differently in the hopes that this time you'll get some missing piece of insight that will change your feelings. Like I think we can accrue a sort of moral OCD where, if too many people behave as if p(doom)=low, we must become the ascetic who doesn't just believe it's high but who has many mental rituals meant to enforce believing it's high as many hours a day as possible.

ERP (exposure/response prevention) is the gold standard for OCD, not ACT or DBT. I mean, there's overlap, because ACT emphasizes cognitive defusion which is basically what ERP produces, and dialectics can be exposures to that thing you're avoiding. And there's a more OCD-treating way to do "radical acceptance": it's less like focusing on the truth of the unpleasant thing and more like "watching" the thoughts go by like "leaves on a stream."

I think an exposure for you might be more "p(doom) is close to 0, I was mistaken", and in rationalist-speak privately steelman this for 1-5 minutes, but also you know like a "butterfly thought," where you don't want to crush the butterfly. And just to emphasize this is a private exercise, if you "actually" believe p(doom) is 0 for 2 minutes, it's really not going to hurt you. The more I make it a rationalist exercise the less it's an ERP and the more it's just more rationalization, but I feel I need to "steelman" just exiting the space of constant high focus on high p(doom) at all. An ERP might look like this example but a therapist might also try to keep it more "low key," like pick an easy exposure to skill-build on, like if you're afraid of everyone dying and also spiders, therapy might target spiders as just easier to practice with.

For me the ACT perspective is that the things I care about include: 1. job 2. family 3. doom. What I don't include in this list is 4. figuring out the most important thing and only doing that. Yes it's compartmentalizing and I haven't endowed "doom" with any ability to leave its box and it's actually still #3 not 1 or 2. I mean this is a work-in-progress for me too but this sort of enumerated-values approach has been materially helpful every day.

comment by Drake Morrison (Leviad) · 2025-04-06T04:58:39.703Z · LW(p) · GW(p)

This is my favorite guide to confronting doom yet

comment by Declan Molony (declan-molony) · 2025-04-06T14:36:16.582Z · LW(p) · GW(p)

Don't look away

But more than just "what do I believe", I think it's of equal or greater importance what you pay attention to. A person can correctly believe that we face doom yet try to just not think about it. In effect, if you never think about doom, are you any better off than if you didn't believe in it?

The movie Don't Look Up did a good job of capturing the feeling of doom and how the global citizenry might react to an apocalyptic event. Many people in the movie chose to live in blissful ignorance.

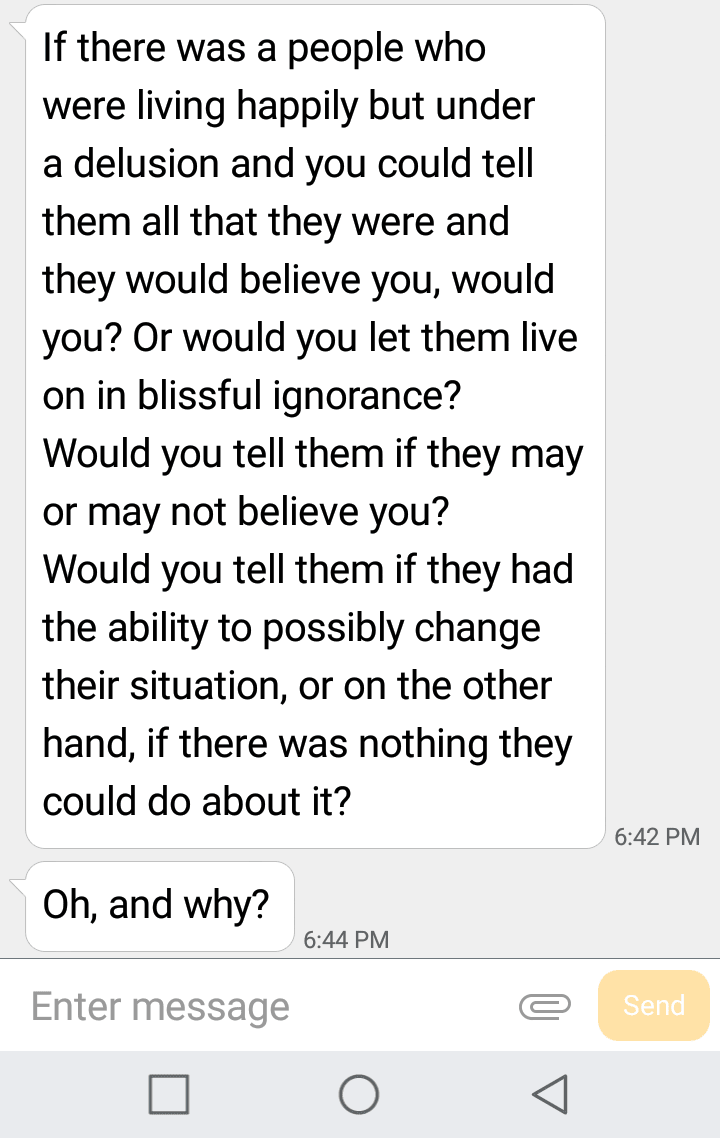

I discovered LessWrong a year ago and never read the AI-related material. I had a feeling I wouldn't like it so I avoided it. Now that I'm in the thick of it (as of a month ago), I'm reminded of this text my Christian friend sent me 5 years ago:

She was, of course, referring to religion, but it's an excellent series of questions that can equally be applied to AGI-related doom.

Who can I talk to about my doom? I tried discussing the implications with two of my married friends yesterday: the husband was receptive to the topic, but the wife refused to engage in the discussion because it was too stressful.

I tried talking to my parents about it, but they're older and don't understand AI.

I thought about trying talk therapy for the first time, but if the therapist is uninformed about AGI, I don't want to introduce them to new stress and existential angst.

comment by Stephen McAleese (stephen-mcaleese) · 2025-04-06T13:46:58.612Z · LW(p) · GW(p)

This is a great essay and I find myself agreeing with a lot of it. I like how it fully accepts the possibility of doom while also being open to and prepared for other possibilities. I think becoming skilled at handling uncertainty, emotions, and cognitive biases and building self-awareness while trying to find the truth are all skills aspiring rationalists should aim to build and the essay demonstrates these skills.