AGI rising: why we are in a new era of acute risk and increasing public awareness, and what to do now

post by Greg C (greg-colbourn) · 2023-05-03T20:26:19.479Z · LW · GW · 12 comments

Comments sorted by top scores.

comment by Ulisse Mini (ulisse-mini) · 2023-05-04T00:08:54.039Z · LW(p) · GW(p)

Downvoted because I view some of the suggested strategies as counterproductive. Specifically, I'm afraid of people flailing [LW · GW]. I'd be much more comfortable if there was a bolded paragraph saying something like the following:

Beware of flailing [LW · GW] and second-order effects and the unilateralist's curse [? · GW]. It is very easy to end up doing harm with the intention to do good, e.g. by sharing bad arguments for alignment, polarizing the issue, etc.

To give specific examples illustrating this (which may also be good to include and/or edit the post):

- I believe tweets like this are much better (and net positive) than the tweet you give as an example. Sharing anything less than the strongest argument can be actively bad to the extent it immunizes people against the actually good reasons to be concerned.

- Most forms of civil disobedience seem actively harmful to me. Activating the tribal instincts of more mainstream ML researchers, causing them to hate the alignment community, would be pretty bad in my opinion. Protesting in the streets seems fine, protesting by OpenAI HQ does not.

I don't have time to write more. For more info, see this Twitter exchange I had with the author. I could share more thoughts and models, but my main point is: be careful. Taking action is fine, and don't fall into the analysis paralysis of some rationalists, but don't make everything worse.

↑ comment by Greg C (greg-colbourn) · 2023-05-04T07:23:16.849Z · LW(p) · GW(p)

Thanks for writing out your thoughts in some detail here. What I'm trying to say is that things are already really bad. Industry self-regulation has failed. At some point you have to give up on hoping that the fossil fuel industry (AI/ML industry) will do anything more to fix climate change (AGI x-risk) than mere greenwashing (safetywashing [LW · GW]). How much worse does it need to get for more people to realise this?

The Alignment community (climate scientists) can keep doing their thing; I'm very much in favour of that [EA · GW]. But there is also now an AI Notkilleveryoneism (climate action) movement. We are raising the damn Fire Alarm.

From the post you link:

some authority somewhere will take notice and come to the rescue.

Who is that authority?

The United Nations Security Council. Anything less and we're toast.

And we can talk all we like about the unilateralist's curse, but I don't think anything a bunch of activists can do will ever top the formation and corruption-to-profit-seeking of OpenAI and Anthropic (the supposedly high status moves).

↑ comment by Ulisse Mini (ulisse-mini) · 2023-05-04T14:45:49.455Z · LW(p) · GW(p)

I tentatively approve of activism & trying to get govt to step in. I just want it to be directed in ways that aren't counterproductive. Do you disagree with any of my specific objections to strategies, or the general point that flailing can often be counterproductive? (Note that not all activism counts as flailing; it depends on the type.)

comment by 1a3orn · 2023-05-03T22:40:03.709Z · LW(p) · GW(p)

The above contains links to a lot of arguments, but it develops almost none of them fully.

In lieu of responding to them all, I will address one sentence.

Here's a claim you make about likely future timelines: "GPT-4 + curious (but ultimately reckless) academics -> more efficient AI -> next generation foundation model AI (which I’ll call NextAI[10] for short)"

The two links in "ultimately reckless" go to two papers, which are presumably meant to support either the claim that researchers are reckless or the claim that we will quickly get more efficient next-level AI.

One paper uses GPT-4 to do neural architecture search over CIFAR-10, or CIFAR-100, or ImageNet16-120. These are all pretty tiny datasets... which is why people do NAS over them, because otherwise it would be insanely expensive. Even doing this kind of thing with a small LM would be really tough. Furthermore, GPT-4 looks like it's giving you the same approximate OOM improvements that other NAS techniques do, which isn't that great. Like I could write more here, but this is basically miles away from being used to help with actual LLMs, let alone recursive self improvement -- it's on toy datasets used for NAS because they are small.

The other uses GPT-4 augmented with planners to produce plans. "Plans" means classical planning problems like "Given a set of piles of blocks on a table, a robot is tasked with rearranging them into a specified target configuration while obeying the laws of physics." This kind of thing -- with definite ontology, non-vague world, etc -- has been in AI since the 70s and the ability of GPT-4 to work with a classical planner is basically entirely unrelated to... lengthy, contextually vague problems necessary to take over the world. Like, it isn't just that "Take over the world" is too vague and ontologically unspecified for this kind of treatment, "Unload my car, don't break anything" is also too vague and unspecified. In any event, I don't think it's remotely worrisome, and it's extremely unclear how it leads to more efficient NextAI.

Anyhow, neither of these papers leads me to think that researchers are reckless. I'm really unsure how they're supposed to support that claim, or the claim that we'll get NextAI quickly, or even what NextAI would be.

Anyhow, if the above sentence is representative, the above looks like a gish gallop -- responding at length to many of these points would take 50x the space spent here.

↑ comment by Greg C (greg-colbourn) · 2023-05-03T22:59:55.136Z · LW(p) · GW(p)

It's really not intended as a gish gallop, sorry if you are seeing it as such. I feel like I'm really only making 3 arguments:

1. AGI is near

2. Alignment isn't ready (and therefore P(doom|AGI) is high)

3. AGI is dangerous

And then drawing the conclusion from all these that we need a global AGI moratorium asap.

↑ comment by 1a3orn · 2023-05-04T01:04:06.455Z · LW(p) · GW(p)

So Gish gallop is not the ideal phrasing, although denotatively that is what I think it is.

A more productive phrasing on my part would be: when arguing, it is charitable to put forth only the strongest arguments you have, rather than many questionable arguments.

This helps you persuade other people, if you are right, because they won't see a weaker argument and think all your arguments are that weak.

This helps you be corrected, if you are wrong, because it's more likely that someone will be able to respond to one or two arguments that you have identified as strong arguments, and show you where you are wrong -- no one's going to do that with 20 weaker arguments, because who has the time?

Put alternately, it also helps with epistemic legibility [LW · GW]. It also shows that you aren't just piling up a lot of soldiers [? · GW] for your side -- it shows that you've put in the work to pick out the ones which matter, and are not putting weird demands on your reader's attention by just listing every one that might work.

You have a lot of sub-parts in your argument for 1, 2, 3 above. (Like, in the first section there are ~5 points I think are just wrong or misleading, and which, regardless of whether they are wrong or not, are at least highly disputed.) It doesn't help to have a succession of such disputed points -- regardless of whether your audience is people you agree with or people you don't!

↑ comment by Greg C (greg-colbourn) · 2023-05-04T07:27:21.380Z · LW(p) · GW(p)

The way I see the above post (and its accompaniment [EA · GW]) is as knocking down all the soldiers that I've encountered talking to lots of people about this over the last few weeks. I would appreciate it if you could stand them back up (because I'm really [EA · GW] trying [EA · GW] to not be so doomy, and not getting any satisfactory rebuttals).

↑ comment by Greg C (greg-colbourn) · 2023-05-03T22:53:59.382Z · LW(p) · GW(p)

I think you need to zoom out a bit and look at the implications of these papers. The danger isn't in what people are doing now, it's in what they might be doing in a few months following on from this work. The NAS paper was a proof of concept. What happens when it's massively scaled up? What happens when efficiency gains translate into further efficiency gains?

↑ comment by 1a3orn · 2023-05-04T01:00:56.624Z · LW(p) · GW(p)

What happens when it's massively scaled up?

Probably nothing, honestly.

Here's a chart of one of the benchmarks the GPT-NAS paper tests on. The GPT-NAS paper is like.... not off trend? Not even SOTA? Honestly, looking at all these results, my tentative guess is that the differences are basically noise for most techniques; the state space is tiny, such that I doubt any of these really leverage actual regularities in it.

↑ comment by Greg C (greg-colbourn) · 2023-05-04T07:31:18.484Z · LW(p) · GW(p)

From the Abstract:

Rather than targeting state-of-the-art performance, our objective is to highlight GPT-4’s potential

They weren't aiming for SOTA! What happens when they do?

comment by Greg C (greg-colbourn) · 2023-05-04T08:08:47.700Z · LW(p) · GW(p)

LessWrong:

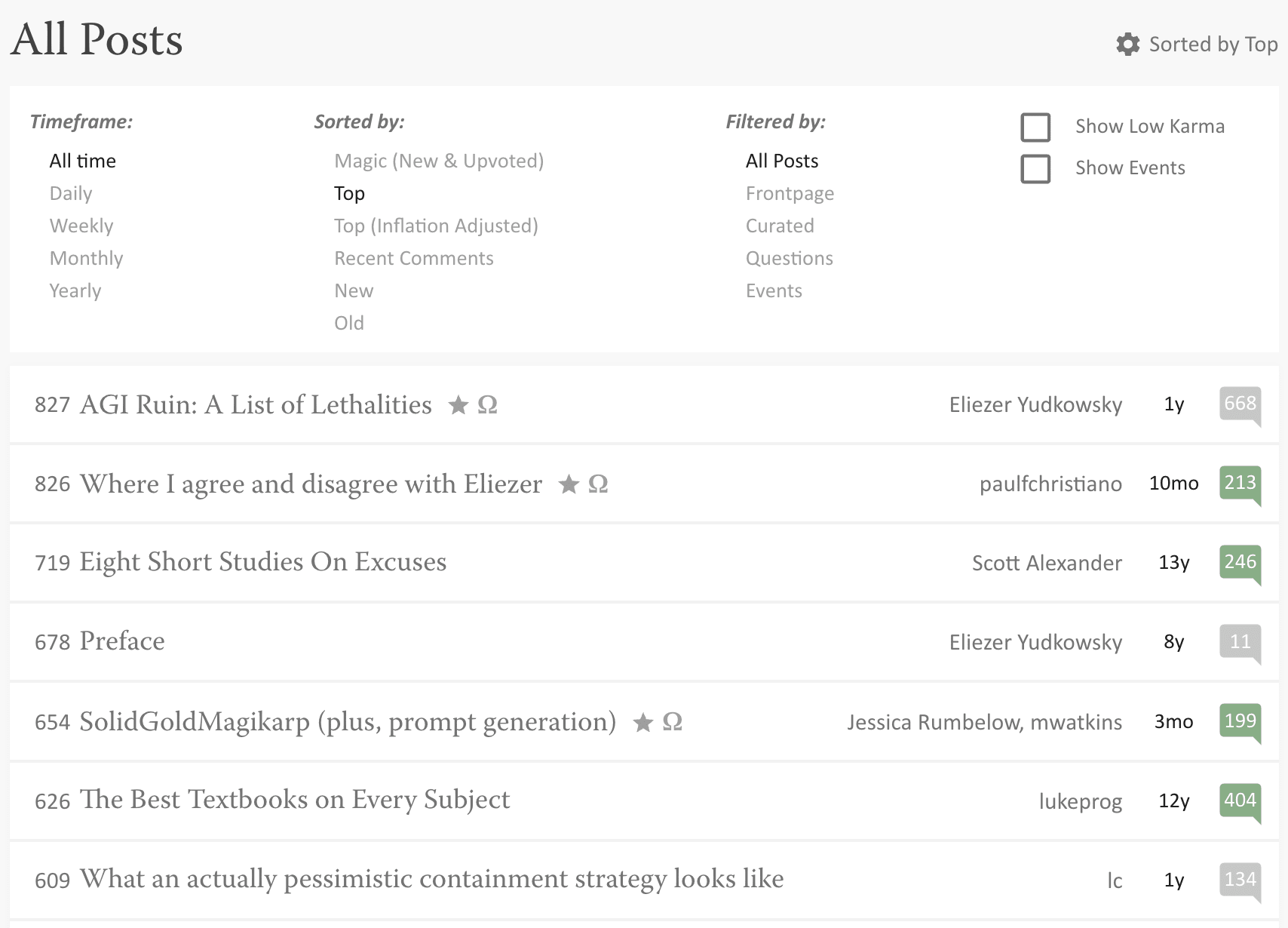

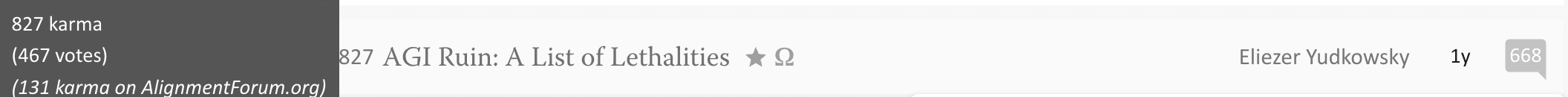

A post about all the reasons AGI will kill us [LW · GW]: No. 1 all time highest karma (827 on 467 votes; +1.77 karma/vote)

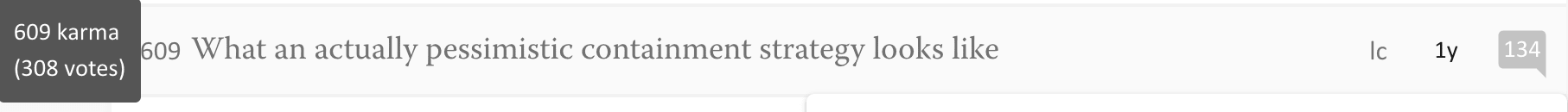

A post about containment strategy for AGI [LW · GW]: 7th all time highest karma (609 on 308 votes; +1.98 karma/vote)

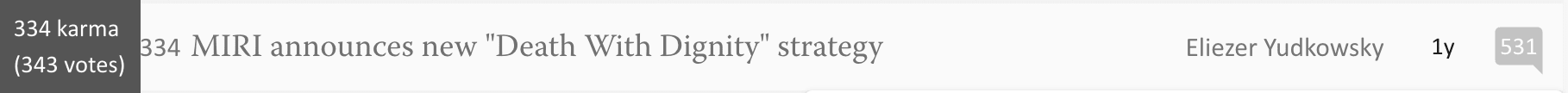

A post about us all basically being 100% dead from AGI [LW · GW]: 52nd all time highest karma (334 on 343 votes; +0.97 karma/vote, a bit more controversial)

Also LessWrong:

A post about actually doing something about containing the threat from AGI and not dying [this one]: downvoted to oblivion (-5 karma within an hour; currently 13 karma on 24 votes; +0.54 karma/vote)

My read: y'all are so allergic [LW · GW] to anything considered remotely political (even though this should really not be a matter of polarisation - it's about survival above all else!) that you'd rather just lie down and be paperclipped [? · GW] than actually do anything to prevent it happening. I'm done [LW · GW].

↑ comment by Ege Erdil (ege-erdil) · 2023-05-04T13:58:37.351Z · LW(p) · GW(p)

I don't want to speak on behalf of others, but I suspect that many people who are downvoting you agree that something should be done. They just don't like the tone of your post, or the exact actions you're proposing, or something else specific to this situation.