Comments

then it would be better to use an example not directly aimed against “our atoms”

All the atoms are getting repurposed at once, no special focus on those in our bodies (but there is in the story, to get the reader to empathise). Maybe I could've included more description of non-alive things getting destroyed.

mucking with quantum gravity too recklessly, or smth in that spirit

I'm trying to focus on plausible science/tech here.

they need to do experiments in forming hybrid consciousness with humans to crack the mystery of human subjectivity, to experience that first-hand for themselves, and to decide whether that is of any value to them based on the first-hand empirical material (losing that option without looking is a huge loss)

Interesting. But even if they do find something valuable in doing that, there's not much to keep the vast majority of humans around. And as you say, they could just end up as "scans", with very few being run as oracles.

Where does my writing suggest that it's a "power play" and "us vs them"? (That was not the intention at all! I've always seen indifference, and "collateral damage" as the biggest part of ASI x-risk.)

as we know, compute is not everything, algorithmic improvement is even more important

It should go without saying that it would also be continually improving its algorithms. But maybe I should've made that explicit.

the action the ASI is taking in the OP is very suboptimal and deprives it of all kinds of options

What are some examples of these options?

They don't have a choice in the matter - it's forced by the government (nationalisation). This kind of thing has happened before in wartime (without the companies or people involved staging a rebellion).

On one hand, it's not clear if a system needs to be all that super-smart to design a devastating attack of this kind...

Good point, but -- and as per your second point too -- this isn't an "attack", it's "go[ing] straight for execution on its primary instrumental goal of maximally increasing its compute scaling" (i.e. humanity and biological life dying is just collateral damage).

probably would not want to irreversibly destroy important information without good reasons

Maybe it doesn't consider the lives of individual organisms as "important information"? But if it did, it might do something like scan as it destroys, to retain the information content.

Are you saying they are suicidal?

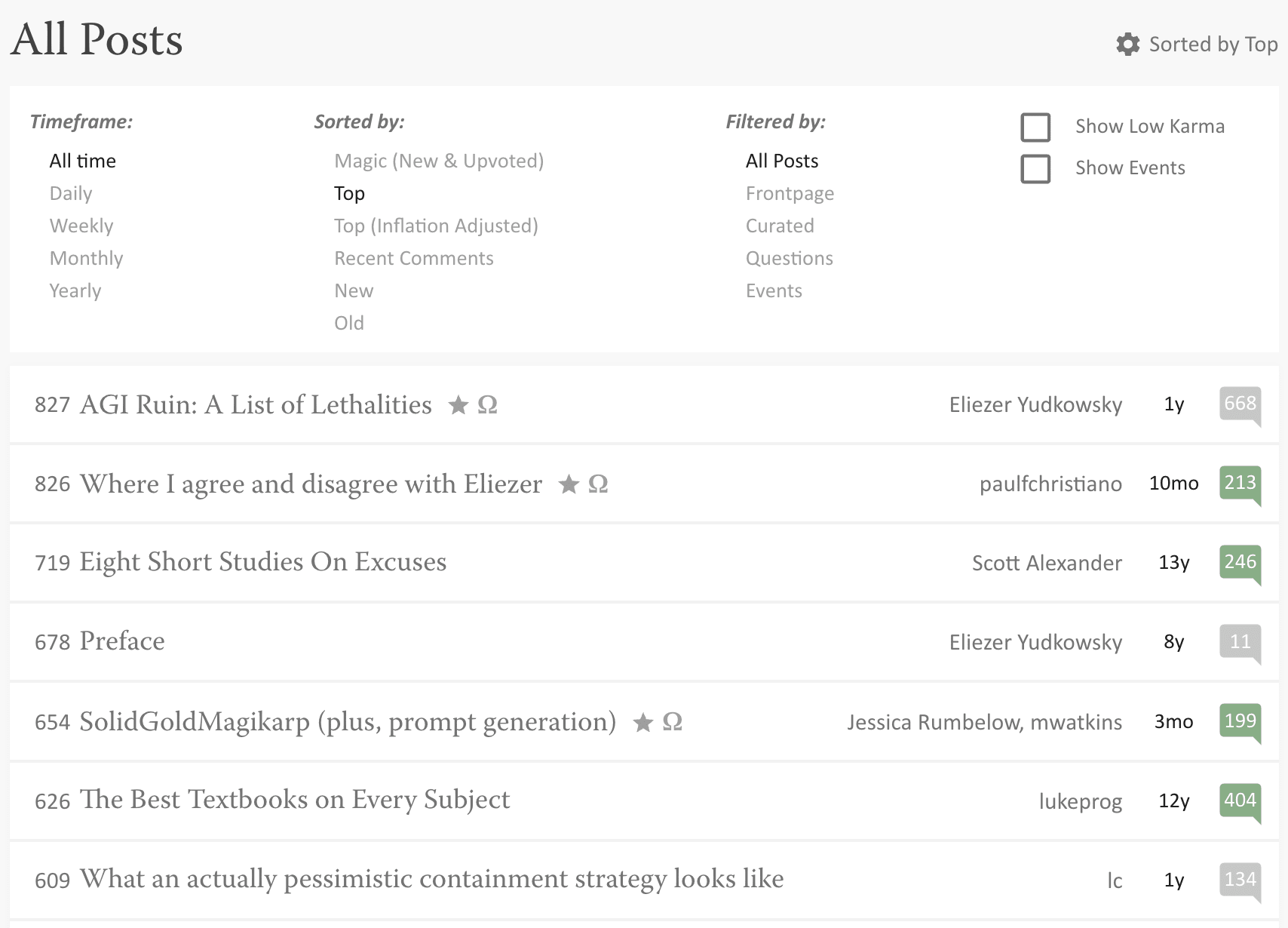

LessWrong:

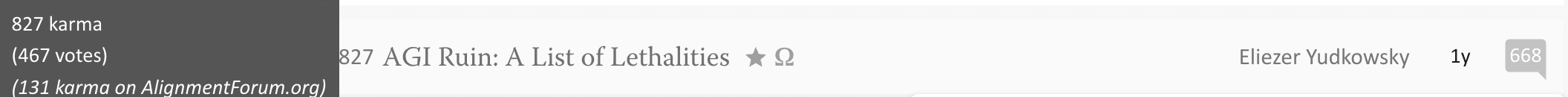

A post about all the reasons AGI will kill us: No. 1 all time highest karma (827 on 467 votes; +1.77 karma/vote)

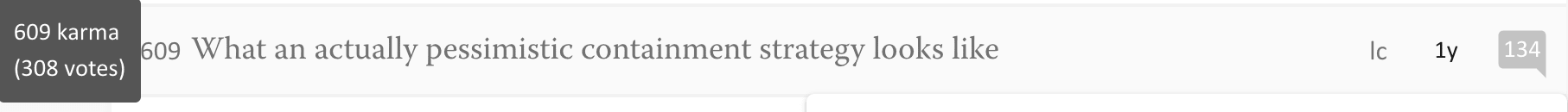

A post about containment strategy for AGI: 7th all time highest karma (609 on 308 votes; +1.98 karma/vote)

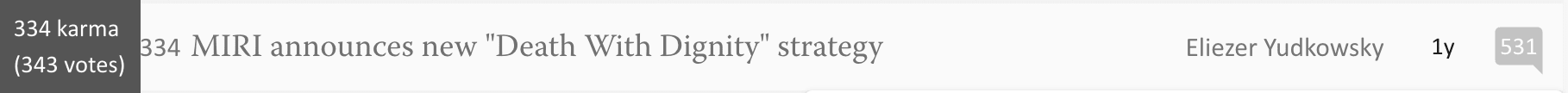

A post about us all basically being 100% dead from AGI: 52nd all time highest karma (334 on 343 votes; +0.97 karma/vote, a bit more controversial)

Also LessWrong:

A post about actually doing something about containing the threat from AGI and not dying [this one]: downvoted to oblivion (-5 karma within an hour; currently 13 karma on 24 votes; +0.54 karma/vote)

My read: y'all are so allergic to anything considered remotely political (even though this should really not be a matter of polarisation - it's about survival above all else!) that you'd rather just lie down and be paperclipped than actually do anything to prevent it happening. I'm done.
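For anyone wanting to check the karma-per-vote figures quoted above, here is a minimal sketch that recomputes them from the karma and vote counts given in this comment. The dictionary labels and the `karma_per_vote` helper are just illustrative names, not anything from the site.

```python
# Karma and vote counts exactly as quoted in the comment above.
# The short labels are illustrative only.
posts = {
    "reasons AGI will kill us": (827, 467),
    "containment strategy": (609, 308),
    "basically 100% dead": (334, 343),
    "this post": (13, 24),
}


def karma_per_vote(karma, votes):
    """Average karma contributed per vote, rounded to two decimals."""
    return round(karma / votes, 2)


ratios = {name: karma_per_vote(k, v) for name, (k, v) in posts.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:+.2f} karma/vote")
```

A ratio near or below 1 suggests a larger share of downvotes or weak votes, which is the sense in which the lower-ratio posts are "more controversial".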

From the Abstract:

Rather than targeting state-of-the-art performance, our objective is to highlight GPT-4’s potential

They weren't aiming for SOTA! What happens when they do?

The way I see the above post (and its accompaniment) is knocking down all the soldiers that I've encountered talking to lots of people about this over the last few weeks. I would appreciate it if you could stand them back up (because I'm really trying to not be so doomy, and not getting any satisfactory rebuttals).

Thanks for writing out your thoughts in some detail here. What I'm trying to say is that things are already really bad. Industry self-regulation has failed. At some point you have to give up on hoping that the fossil fuel industry (AI/ML industry) will do anything more to fix climate change (AGI x-risk) than mere greenwashing (safetywashing). How much worse does it need to get for more people to realise this?

The Alignment community (climate scientists) can keep doing their thing; I'm very much in favour of that. But there is also now an AI Notkilleveryoneism (climate action) movement. We are raising the damn Fire Alarm.

From the post you link:

some authority somewhere will take notice and come to the rescue.

Who is that authority?

The United Nations Security Council. Anything less and we're toast.

And we can talk all we like about the unilateralist's curse, but I don't think anything a bunch of activists can do will ever top the formation and corruption-to-profit-seeking of OpenAI and Anthropic (the supposedly high status moves).

It's really not intended as a gish gallop, sorry if you are seeing it as such. I feel like I'm really only making 3 arguments:

1. AGI is near

2. Alignment isn't ready (and therefore P(doom|AGI) is high)

3. AGI is dangerous

And then drawing the conclusion from all these that we need a global AGI moratorium asap.

I think you need to zoom out a bit and look at the implications of these papers. The danger isn't in what people are doing now, it's in what they might be doing in a few months following on from this work. The NAS paper was a proof of concept. What happens when it's massively scaled up? What happens when efficiency gains translate into further efficiency gains?

This post was only a little ahead of its time. The time is now. EA/LW will probably be eclipsed by wider public campaigning on this if they (the leadership) don't get involved.

Advocate for a global moratorium on AGI. Try and buy (us all) more time. Learn the basics of AGI safety (e.g. AGI Safety Fundamentals) so you are able to discuss the reasons why we need a moratorium in detail. YMMV, but this is what I'm doing as a financially independent 42 year-old. I feel increasingly like all my other work is basically just rearranging deckchairs on the Titanic.

Thank you for doing this. I'm thinking that at this point, there needs to be an organisation with the singular goal of pushing for a global moratorium on AGI development. Anyone else interested in this? Have DM'd.

-

Ok, I admit I simplified here. There is still probably ~ a million times (give or take an order of magnitude) more relevant compute (GPUs, TPUs) than was used to train GPT-4.

-

It won't need large orders to gain a relevant foothold. Just a few tiny orders could suffice.

-

I didn't mean literally rob the stock market. I'm referring to out-trading all the other traders (inc. existing HFT) to accumulate resources.

-

Exponential growth can't remain "slow" forever, by definition. How long does it take for the pond to be completely covered by lily pads when it's half covered? How long did it take for Covid to become a pandemic? Not decades.
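The lily pad point can be made concrete with a tiny sketch. It assumes coverage doubles once per fixed period (one day here); the function name, the doubling period and the starting fractions are all hypothetical, chosen only for illustration.

```python
def days_until_covered(start_fraction, doubling_days=1.0):
    """Days until coverage reaches 100%, assuming it doubles every `doubling_days`.

    Both the doubling assumption and the starting fraction are illustrative.
    """
    days = 0.0
    coverage = start_fraction
    while coverage < 1.0:
        coverage *= 2
        days += doubling_days
    return days


# From half covered, a single doubling period finishes the job.
print(days_until_covered(0.5))

# From roughly a billionth covered, it still only takes 30 doublings.
print(days_until_covered(1 / 2**30))
```

Under these assumptions the pond looks almost empty for most of the 30 days, and the last half fills in the final one.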

-

I referred to social hacking (i.e. blackmailing people into giving up their passwords). This could go far enough (say, at least 10% of world devices). Maybe quantum computers (or some better tech the AI thinks up) could do the rest.

Is this now on the radar of national security agencies and the UN Security Council? Is it being properly discussed inside the US government? If not, are meetings being set up? Would be good if someone in the know could give an indication (I hope Yudkowsky is busy talking to lots of important people!)

Can you be more specific about what you don't agree with? Which parts can't happen, and why?

Compute - what fraction of world compute did it take to train GPT-4? Maybe 1e-6? There's 1e6 improvement right there from a superhuman GPT-6 capturing all of the "hardware overhang".

Data - superhuman GPT-6 doesn't need to rely on human recorded data; it can harness all the sensors on the planet to gather exabytes of real-time data per second, and re-derive scientific theories from scratch in minutes based on its observations (including theories about human behaviour, language etc).

Robotics/Money - easy for GPT-6. Money it can get from scamming gullible humans, hacking crypto wallets via phishing/ransomware, or running rings round stock market traders. Robotics it can re-derive and improve on from its real-time sensing of the planet and its speed of thought, which makes our daily life look like geology does to us. It can escape to the physical world any number of ways by manipulating humans into giving it access to boot loaders for it to gain a foothold in the physical world (robots, mail-order DNA etc).

Algorithm search time - wall clock time is much reduced when you've just swallowed the world's hardware overhang (see Compute above)

Factoring the above, your extra decades become extra hours.
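As a rough back-of-the-envelope for the "decades become hours" claim, here is a sketch that assumes algorithm search time shrinks linearly with available compute. That linear-speedup assumption is a strong simplification, and the 20-year baseline and the helper name are hypothetical. The multipliers span the "1e6, give or take an order of magnitude" range mentioned above.

```python
HOURS_PER_YEAR = 365.25 * 24


def compressed_hours(years, compute_multiplier):
    """Wall-clock hours if `years` of search ran `compute_multiplier` times faster.

    Assumes a perfectly linear speedup from extra compute (a simplification).
    """
    return years * HOURS_PER_YEAR / compute_multiplier


# Hypothetical baseline: 20 years of algorithm search at today's compute.
for multiplier in (1e5, 1e6, 1e7):
    hours = compressed_hours(20, multiplier)
    print(f"{multiplier:.0e}x compute: {hours:.3f} hours")
```

With these numbers the result ranges from about a minute to a couple of hours, depending on which end of the compute estimate you take.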

Selection pressure will cause models to become agentic as they increase in power - those doing the agentic things (following universal instrumental goals like accumulating more resources and self-improvement) will outperform those that don't. Mesaoptimisation (explainer video) is kind of like cheating - models that create inner optimisers that target something easier to get than what we meant, will be selected (by getting higher rewards) over models that don't (because we won't be aware of the inner misalignment). Evolution is a case in point - we are products of it, yet misaligned to its goals (we want sex, and high calorie foods, and money, rather than caring explicitly about inclusive genetic fitness). Without alignment being 100% watertight, powerful AIs will have completely alien goals.

Yeah, they work well enough at this (~human) level. But no current alignment techniques are scalable to superhuman AI. I'm worried that basically all of the doom flows through an asymptote of imperfect alignment. I can't see how this doesn't happen, short of some "miracle".

the tiniest advantage compounds until one party has an overwhelming lead.

This, but x1000 to what you are thinking. I don't think we have any realistic chance of approximate parity between the first and second movers. The speed at which the first mover will be thinking makes this so. Say GPT-6 is smarter at everything, even by a little bit, compared to everything else on the planet (humans, other AIs). It's copied itself 1000 times, and each copy is thinking 10,000,000 times faster than a human. We will essentially be like rocks to it, operating on geological time periods. It can work out how to disassemble our environment (including an unfathomable number of contingencies against counter strike) over subjective decades or centuries of human-equivalent thinking time before your sentinel AI protectors even pick up its activity.
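To put numbers on the speed argument, here is a minimal sketch converting wall-clock time into subjective thinking time, using the 10,000,000x speedup and 1000 copies from the scenario above. The function name is hypothetical, and it assumes thinking time simply scales with the speedup and adds up across copies.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def subjective_years(wall_clock_seconds, speedup=10_000_000, copies=1):
    """Human-equivalent years of thinking done in `wall_clock_seconds`.

    Assumes thinking time scales linearly with speedup and sums over copies.
    """
    return wall_clock_seconds * speedup * copies / SECONDS_PER_YEAR


# One wall-clock minute for a single copy: roughly two decades.
print(round(subjective_years(60), 1))

# One wall-clock hour for a single copy: over a millennium.
print(round(subjective_years(3600), 1))

# One wall-clock minute summed across all 1000 copies.
print(round(subjective_years(60, copies=1000)))
```

So under these assumptions, "subjective decades or centuries" corresponds to only minutes of real time.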

I also think that compared with other AIs, LLMs may have more potential for being raised friendly and collaborative, as we can interact with them the way we do with humans, reusing known recipes. Compared with other forms of extremely large neural nets and machine learning, they are more transparent and accessible. Of all the routes to AGI we could take, I think this might be one of the better ones.

This is an illusion. We are prone to anthropomorphise chatbots. Under the hood they are completely alien. Lovecraftian monsters, only made of tons of inscrutable linear algebra. We are facing a digital alien invasion, that will ultimately move at speeds we can't begin to keep up with.

Ultimately, it doesn't matter which monkey gets the poison banana. We're all dead either way. This is much worse than nukes, in that we really can't risk even one (intelligence) explosion.

We can but hope they will see sense (as will the US government - and it's worth considering that in hindsight, maybe they were actually the baddies when it came to nuclear escalation). There is an iceberg on the horizon. It's not the time to be fighting over revenue from deckchair rentals, or who gets to specify their arrangement. There's geopolitical recklessness, and there's suicide. Putin and Xi aren't suicidal.

Look, I agree re "negative of entropy, aging, dictators killing us eventually", and a chance of positive outcome, but right now I think the balance is approximately like the above payoff matrix over the next 5-10 years, without a global moratorium (i.e. the positive outcome is very unlikely unless we take a decade or two to pause and think/work on alignment). I'd love to live in something akin to Iain M. Banks' Culture, but we need to get through this acute risk period first, to stand any chance of that.

Do you think Drexler's CAIS is straightforwardly controllable? Why? What's to stop it being amalgamated into more powerful, less controllable systems? "People" don't need to make them globally agentic. That can happen automatically via Basic AI Drives and Mesaoptimisation once thresholds in optimisation power are reached.

I'm worried that actually, Alignment might well turn out to be impossible. Maybe a moratorium will allow for such impossibility proofs to be established. What then?

Except the risk of igniting the atmosphere with the Trinity test is judged to be ~10%. It's not "you slow down, and let us win", it's "we all slow down, or we all die". This is not a Prisoner's Dilemma:

From the GPT-4 announcement: "We’ve also been using GPT-4 internally, with great impact on functions like support, sales, content moderation, and programming." (and I'm making the reasonable assumption that they will naturally be working on GPT-5 after GPT-4).

I think we are already too close for comfort to x-risky systems. GPT-4 is being used to speed up development of GPT-5 already. If GPT-5 can make GPT-6, that's game over. How confident are you that this couldn't happen?

GPT-4 was rushed, as was the OpenAI Plugin store. Things are moving far too fast for comfort. I think we can forgive this response for being rushed. It's good to have some significant opposition working on the brakes to the runaway existential catastrophe train that we've all been put on.

Why do you think it only applies to the US? It applies to the whole world. It says "all AI labs", and "governments". I hope the top signatories are reaching out to labs in China and other countries. And the UN for that matter. There's no reason why they wouldn't also agree. We need a global moratorium on AGI.

Here's a (failure?) mode that I and others are already in, but might be too embarrassed to write about: taking weird career/financial risks, in order to obtain the financial security, to work on alignment full-time...

I'd be more glad if I saw non-academic noob-friendly programs that pay people, with little legible evidence of their abilities, to upskill full-time.

CEEALAR offers this (free accommodation and food, and a moderate stipend), and was set up to avoid the failure mode mentioned (not just for alignment, for EA in general).

This is very cool! For archiving and rebuilding after a global catastrophe, how easy would this be to port to Kiwix for reading on a phone? My thinking is that if a few hundred LWers/EAs have this offline on their phones, that could go quite a long way. Burying phones with it on could also be good as a low hanging fruit (ideally you need a way of reading the data to be stored with the data). Happy to fund this if anyone wants to do it.

No I mean links to him in person to talk to him (or for that matter, even an email address or any way of contacting him..).

Oh wow, didn't realise how recent the Huawei recruitment of Fields Medalists was! This is from today. Maybe we need to convince Huawei to care about AGI Alignment :)

Should also say - good that you are thinking about it P., and thanks for a couple of the links which I hadn't seen before.

Maybe reaching Demis Hassabis first is the way to go though, given that he's already thinking about it, and has already mentioned it to Tao (according to the podcast). Does anyone have links to Demis? Would be good to know more about his "Avengers assemble" plan! The main thing is that the assembly needs to happen asap, at least for an initial meeting and "priming of the pump" as it were.

Yes, I think the email needs to come from someone with a lot of clout (e.g. a top academic, or a charismatic billionaire; or even a high-ranking government official) if we actually want him to read it and take it seriously.

Here's a list that's mostly from just the last few months (that is pretty scary): DeepMind’s Gato, Chinchilla, Flamingo and AlphaCode; Google's Pathways, PaLM, SayCan, Socratic Models and TPUs; OpenAI’s DALL-E 2; EfficientZero; Cerebras

Interested in how you would go about throwing money at scalable altruistic projects. There is a lot of money and ideas around in EA, but a relative shortage of founders, I think.

What is the machine learning project that might be of use in AI Alignment?

Not sure if it counts as an "out" (given I think it's actually quite promising), but definitely something that should be tried before the end:

“To the extent we can identify the smartest people on the planet, we would be a really pathetic civilization were we not willing to offer them NBA-level salaries to work on alignment.” - Tomás B.

Megastar salaries for AI alignment work

[Summary from the FTX Project Ideas competition]

Aligning future superhuman AI systems is arguably the most difficult problem currently facing humanity; and the most important. In order to solve it, we need all the help we can get from the very best and brightest. To the extent that we can identify the absolute most intelligent, most capable, and most qualified people on the planet – think Fields Medalists, Nobel Prize winners, foremost champions of intellectual competition, the most sought-after engineers – we aim to offer them salaries competitive with top sportspeople, actors and music artists to work on the problem. This is complementary to our AI alignment prizes, in that getting paid is not dependent on results. The pay is for devoting a significant amount of full time work (say a year), and maximum brainpower, to the problem; with the hope that highly promising directions in the pursuit of a full solution will be forthcoming. We will aim to provide access to top AI alignment researchers for guidance, affiliation with top-tier universities, and an exclusive retreat house and office for fellows of this program to use, if so desired.

[Yes, this is the "pay Terry Tao $10M" thing. FAQ in a GDoc here.]

Inner alignment (mesa-optimizers) is still a big problem.

Interesting. I note that they don't actually touch on x-risk in the podcast, but the above quote implies that Demis cares a lot about Alignment.

I wonder how fleshed out the full plan is? The fact that there is a plan does give me some hope. But as Tomás B. says below, this needs to be put into place now, rather than waiting for a fire alarm that may never come.

A list of potential miracles (including empirical "crucial considerations" [/wishful thinking] that could mean the problem is bypassed):

- Possibility of a failed (unaligned) takeoff scenario where the AI fails to model humans accurately enough (i.e. realise smart humans could detect its "hidden" activity in a certain way). [This may only set things back a few months to years; or could lead to some kind of Butlerian Jihad if there is a sufficiently bad (but ultimately recoverable) global catastrophe (and then much more time for Alignment the second time around?)].

- Valence realism being true. Binding problem vs AGI Alignment.

- Omega experiencing every possible consciousness and picking the best? [Could still lead to x-risk in terms of a Hedonium Shockwave].

- Moral Realism being true (and the AI discovering it and the true morality being human-compatible).

- Natural abstractions leading to Alignment by Default?

- Rohin’s links here.

- AGI discovers new physics and exits to another dimension (like the creatures in Greg Egan’s Crystal Nights).

- Simulation/anthropics stuff.

- Alien Information Theory being true!? (And the aliens having solved alignment).

I'm often acting based on my 10%-timelines

Good to hear! What are your 10% timelines?

1. Year with 10% chance of AGI?

2. P(doom|AGI in that year)?

Most EAs are much more worried about AGI being an x-risk than they are excited about AGI improving the world (if you look at the EA Forum, there is a lot of talk about the former and pretty much none about the latter). Also, no need to specifically try and reach EAs; pretty much everyone in the community is aware.

..Unless you meant Electronic Arts!? :)

Here's a more fleshed out version, FAQ style. Comments welcome.

Here's a version of this submitted as a project idea for the FTX Foundation.