Have You Tried Hiring People?

post by rank-biserial · 2022-03-02T02:06:39.656Z · LW · GW · 117 comments

The purpose of this post is to call attention to a comment thread that I think needs it.

Wait, I thought EA already had $46 billion they didn't know where to spend, so I should prioritize direct work over earning to give? https://80000hours.org/2021/07/effective-altruism-growing/

rank-biserial [LW(p) · GW(p)]:

I thought so too. This comment thread on ACX shattered that assumption of mine. EA institutions should hire people to do "direct work". If there aren't enough qualified people applying for these positions, and EA has 46 billion dollars, then their institutions should (get this) increase the salaries they offer until there are.

There's this thing called "Ricardo's Law of Comparative Advantage". There's this idea called "professional specialization". There's this notion of "economies of scale". There's this concept of "gains from trade". The whole reason why we have money is to realize the tremendous gains possible from each of us doing what we do best.

This is what grownups do. This is what you do when you want something to actually get done. You use money to employ full-time specialists.

Excerpts From The ACX Thread:[1]

Daniel Kokotajlo:

For those trying to avert catastrophe, money isn't scarce, but researcher time/attention/priorities is. Even in my own special niche there are way too many projects to do and not enough time. I have to choose what to work on and credences about timelines make a difference.

Daniel Kirmani:

I don't get the "MIRI isn't bottlenecked by money" perspective. Isn't there a well-established way to turn money into smart-person-hours by paying smart people very high salaries to do stuff?

Daniel Kokotajlo:

My limited understanding is: It works in some domains but not others. If you have an easy-to-measure metric, you can pay people to make the metric go up, and this takes very little of your time. However, if what you care about is hard to measure / takes lots of time for you to measure (you have to read their report and fact-check it, for example, and listen to their arguments for why it matters) then it takes up a substantial amount of your time, and that's if they are just contractors who you don't owe anything more than the minimum to.

I think another part of it is that people just aren't that motivated by money, amazingly. Consider: If the prospect of getting paid a six-figure salary to solve technical alignment problems worked to motivate lots of smart people to solve technical alignment problems... why hasn't that happened already? Why don't we get lots of applicants from people being like "Yeah I don't really care about this stuff I think it's all sci-fi but check out this proof I just built, it extends MIRI's work on logical inductors in a way they'll find useful, gimme a job pls." I haven't heard of anything like that ever happening. (I mean, I guess the more realistic case of this is someone who deep down doesn't really care but on the exterior says they do. This does happen sometimes in my experience. But not very much, not yet, and also the kind of work these kinds of people produce tends to be pretty mediocre.)

Another part of it might be that the usefulness of research (and also manager/CEO stuff?) is heavy-tailed. The best people are 100x more productive than the 95th percentile people who are 10x more productive than the 90th percentile people who are 10x more productive than the 85th percentile people who are 10x more productive than the 80th percentile people who are infinitely more productive than the 75th percentile people who are infinitely more productive than the 70th percentile people who are worse than useless. Or something like that.

Anyhow it's a mystery to me too and I'd like to learn more about it. The phenomenon is definitely real but I don't really understand the underlying causes.

Melvin:

Consider: If the prospect of getting paid a six-figure salary to solve technical alignment problems worked to motivate lots of smart people to solve technical alignment problems... why hasn't that happened already?

I mean, does MIRI have loads of open, well-paid research positions? This is the first I'm hearing of it. Why doesn't MIRI have an army of recruiters trolling LinkedIn every day for AI/ML talent the way that Facebook and Amazon do?

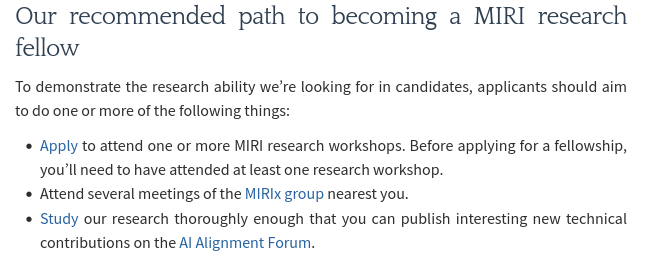

Looking at MIRI's website it doesn't look like they're trying very hard to hire people. It explicitly says "we're doing less hiring than in recent years". Clicking through to one of the two available job ads ( https://intelligence.org/careers/research-fellow/ ) it has a section entitled "Our recommended path to becoming a MIRI research fellow" which seems to imply that the only way to get considered for a MIRI research fellow position is to hang around doing a lot of MIRI-type stuff for free before even being considered.

None of this sounds like the activities of an organisation that has a massive pile of funding that it's desperate to turn into useful research.

Daniel Kokotajlo:

I can assure you that MIRI has a massive pile of funding and is desperate for more useful research. (Maybe you don't believe me? Maybe you think they are just being irrational, and should totally do the obvious thing of recruiting on LinkedIn? I'm told OpenPhil actually tried something like that a few years ago and the experiment was a failure. I don't know but I'd guess that MIRI has tried similar things. IIRC they paid high-caliber academics in relevant fields to engage with them at one point.)

Again, it's a mystery to me why it is, but I'm pretty sure that it is.

Some more evidence that it's true:

--Tiny startups beating giant entrenched corporations should NEVER happen if this phenomenon isn't real. Giant entrenched corporations have way more money and are willing to throw it around to improve their tech. Sure maybe any particular corporation might be incompetent/irrational, but it's implausible that all the major corporations in the world would be irrational/incompetent at the same time so that a tiny startup could beat them all.

--Similar things can be said about e.g. failed attempts by various governments to make various cities the "new silicon valley" etc.

Maybe part of the story is that research topics/questions are heavy-tailed-distributed in importance. One good paper on a very important question is more valuable than ten great papers on a moderately important question.

Melvin:

I can assure you that MIRI has a massive pile of funding and is desperate for more useful research. (Maybe you don't believe me? Maybe you think they are just being irrational

Maybe they're not being irrational, they're just bad at recruiting. That's fine, that's what professional recruiters are for. They should hire some.

If MIRI wants more applicants for its research fellow positions it's going to have to do better than https://intelligence.org/careers/research-fellow/ because that seems less like a genuine job ad and more like an attempt to get naive young fanboys to work for free in the hopes of maybe one day landing a job.

Why on Earth would an organisation that is serious about recruitment tell people "Before applying for a fellowship, you’ll need to have attended at least one research workshop"? You're competing for the kind of people who can easily walk into a $500K+ job at any FAANG, why are you making them jump through hoops?

Eye Beams are cool:

Holy shit. That's not a job posting. That's instructions for joining a cult. Or a MLM scam.

Froolow:

For what it is worth, I agree completely with Melvin on this point - the job advert pattern matches to a scam job offer to me and certainly does not pattern match to any sort of job I would seriously consider taking. Apologies to be blunt, but you write "it's a mystery to me why it is", so I'm trying to offer an outside perspective that might be helpful.

It is not normal to have job candidates attend a workshop before applying for a job in prestigious roles, but it is very normal to have candidates attend a 'workshop' before pitching them an MLM or timeshare. It is even more concerning that details about these workshops are pretty thin on the ground. Do candidates pay to attend? If so this pattern matches advance-fee scams. Even if they don't pay to attend, do they pay flights and airfare? If so MIRI have effectively managed to limit their hire pool to people who live within commuting distance of their offices or people who are going to work for them anyway and don't care about the cost.

Furthermore, there's absolutely no indication how I might go about attending one of these workshops - I spent about ten minutes trying to google details (which is ten minutes longer than I have to spend to find a complete list of all ML engineering roles at Google / Facebook), and the best I could find was a list of historic workshops (last one in 2018) and a button saying I should contact MIRI to get in touch if I wanted to attend one. Obviously I can't hold the pandemic against MIRI not holding in-person meetups (although does this mean they deliberately ceased recruitment during the pandemic?), and it looks like maybe there is a thing called an 'AI Risk for Computer Scientists' workshop which is maybe the same thing (?) but my best guess is that the next workshop - which is a prerequisite for me applying for the job - is an unknown date no more than six months into the future. So if I want to contribute to the program, I need to defer all job offers for my extremely in-demand skillset for the opportunity to apply following a workshop I am simply inferring the existence of.

The next suggested requirement indicates that you also need to attend 'several' meetups of the nearest MIRIx group to you. Notwithstanding that 'do unpaid work' is a huge red flag for potential job applicants, I wonder if MIRI have seriously thought about the logistics of this. I live in the UK where we are extremely fortunate to have two meetup groups, both of which are located in cities with major universities. If you don't live in one of those cities (or, heaven forbid, are French / German / Spanish / any of the myriad of other nationalities which don't have a meetup anything less than a flight away) then you're pretty much completely out of luck in terms of getting involved with MIRI. From what I can see, the nearest meetup to Terence Tao's offices at UCLA is six hours away by car. If your hiring strategy for highly intelligent mathematical researchers excludes Terence Tao by design, you have a bad hiring strategy.

The final point in the 'recommended path' is that you should publish interesting and novel points on the MIRI forums. Again, high quality jobs do not ask for unpaid work before the interview stage; novel insights are what you pay for when you hire someone.

Why shouldn't MIRI try doing the very obvious thing and retaining a specialist recruitment firm to headhunt talent for them, pay that talent a lot of money to come and work for them, and then see if the approach works? A retained executive search might cost perhaps $50,000 per hire at the upper end, perhaps call it $100,000 because you indicate there may be a problem with inappropriate CVs making it through anything less than a gold-plated search. This is a rounding error when you're talking about $2bn unmatched funding. I don't see why this approach is too ridiculous even to consider, and instead the best available solution is to have a really unprofessional hiring pipeline directly off the MIRI website.
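The "rounding error" claim above can be checked with quick arithmetic. The sketch below uses only the two figures quoted in the comment ($100,000 per retained search and $2bn of unmatched funding); the variable names are illustrative, and the figures are the commenter's estimates, not verified numbers.

```python
# Figures taken from the comment above; both are the commenter's estimates.
search_cost_per_hire = 100_000          # upper-end retained search, in USD
unmatched_funding = 2_000_000_000       # "$2bn unmatched funding", in USD

# Share of the funding pool consumed by one gold-plated search.
share_per_hire = search_cost_per_hire / unmatched_funding

# Number of such searches that 1% of the pool would pay for (integer arithmetic).
hires_for_one_percent = (unmatched_funding // 100) // search_cost_per_hire

print(f"One search costs {share_per_hire:.4%} of the pool")
print(f"1% of the pool funds {hires_for_one_percent} searches")
```

On these numbers a single search is a few thousandths of one percent of the pool, which is consistent with the comment's framing, though it says nothing about salaries or about whether the search would succeed.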

Daniel Kirmani:

However, if what you care about is hard to measure / takes lots of time for you to measure then it takes up a substantial amount of your time.

One solution here would be to ask people to generate a bunch of alignment research, then randomly sample a small subset of that research and subject it to costly review, then reward those people in proportion to the quality of the spot-checked research.

But that might not even be necessary. Intuitively, I expect that gathering really talented people and telling them to do stuff related to X isn't that bad of a mechanism for getting X done. The Manhattan Project springs to mind. Bell Labs spawned an enormous amount of technical progress by collecting the best people and letting them do research. I think the hard part is gathering the best people, not putting them to work.
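The spot-check mechanism proposed at the start of this comment can be sketched in a few lines. This is a minimal illustration under stated assumptions, not anything the thread specifies: `spot_check_rewards`, its parameters, and the pooled uniform sampling are all hypothetical choices, and `review` stands in for whatever costly human evaluation would actually be used.

```python
import random

def spot_check_rewards(submissions, review, sample_size, budget, seed=None):
    """Split `budget` among authors based on a random spot check.

    submissions: dict mapping author -> list of work items (hypothetical format).
    review: costly function mapping one item -> non-negative quality score.
    sample_size: how many items get the costly review.
    """
    rng = random.Random(seed)
    # Pool every item with its author, then review only a random subset.
    pool = [(author, item) for author, items in submissions.items() for item in items]
    sampled = rng.sample(pool, min(sample_size, len(pool)))

    scores = {author: 0.0 for author in submissions}
    for author, item in sampled:
        scores[author] += review(item)

    total = sum(scores.values())
    if total == 0:
        # Nothing of value was found in the sample, so nothing is paid out.
        return {author: 0.0 for author in submissions}
    # Pay each author in proportion to the quality found in their sampled work.
    return {author: budget * score / total for author, score in scores.items()}
```

Because the sample is drawn from the pooled items, authors who submit more work are reviewed more often in expectation, so this version rewards volume as well as quality. Sampling a fixed number of items per author would be an equally reasonable reading of the proposal.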

N. N.:

You probably know more about the details of what has or has not been tried than I do, but if this is the situation we really should be offering like $10 million cash prizes no questions asked for research that Eliezer or Paul or whoever says moves the ball on alignment. I guess some recently announced prizes are moving us in this direction, but the amount of money should be larger, I think. We have tons of money, right?

Xpym:

They (MIRI in particular) also have a thing about secrecy. Supposedly much of the potentially useful research not only shouldn't be public, even hinting that this direction might be fruitful is dangerous if the wrong people hear about it. It's obviously very easy to interpret this uncharitably in multiple ways, but they sure seem serious about it, for better or worse (or indifferent).

Dirichlet-to-Neumann:

Give Terence Tao $500,000 to work on AI alignment six months a year, leaving him free to research crazy Navier-Stokes/Halting problem links the rest of his time... If money really isn't a problem, this kind of thing should be easy to do.

Daniel Kokotajlo:

Literally that idea has been proposed multiple times before that I know of, and probably many more times many years ago before I was around.

David Piepgrass:

a six-figure salary to solve technical alignment problems

Wait, what? If I knew that I might've signed the f**k up! I don't have experience in AI, but still! Who's offering six figures?

[1] Clicking on links is a trivial inconvenience [LW · GW] for some.

117 comments

Comments sorted by top scores.

comment by Tomás B. (Bjartur Tómas) · 2022-03-02T03:02:29.428Z · LW(p) · GW(p)

I strongly believe that, given the state of things, we really should spend way more on higher-quality people and see what happens. Up to and including paying Terry Tao 10 million dollars [LW · GW]. I would like to emphasize I am not joking about this.

I've heard lots of objections to this idea, and don't think any of them convince me it is not worth actually trying this.

Replies from: lc, WilliamKiely, daniel-kokotajlo, greg-colbourn

↑ comment by lc · 2022-03-02T04:48:01.638Z · LW(p) · GW(p)

One objection I can conceive of why they're not doing this is that most fields medalists probably already have standing offers from places like Jane Street, and aren't taking them because they prefer academia. But if that were the case, and they'd already tried, that just tells me that MIRI might be really bad at recruiting. There has to be a dozen different reasons someone who wouldn't work at Jane Street would still be willing to do legitimate maths research, for the same amount of money as they'd get in finance, to save the damn world.

Replies from: rank-biserial

↑ comment by rank-biserial · 2022-03-02T17:03:38.172Z · LW(p) · GW(p)

I'm pretty sure MIRI has on the order of ten billion dollars at its disposal. If this is the case, then they can outbid Jane Street. In fact, trying to snipe Jane Street engineers isn't a bad recruitment strategy, as a lot of the filtering has already been done for you.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2022-03-02T18:04:47.657Z · LW(p) · GW(p)

We don't have 10 billion dollars.

Replies from: rank-biserial, greg-colbourn

↑ comment by rank-biserial · 2022-03-02T18:11:23.387Z · LW(p) · GW(p)

So you are funding-constrained.

↑ comment by Greg C (greg-colbourn) · 2022-03-02T19:42:28.043Z · LW(p) · GW(p)

SBF/FTX does though.

↑ comment by WilliamKiely · 2022-04-02T01:37:59.480Z · LW(p) · GW(p)

In a March 15th episode of the DeepMind podcast, Demis Hassabis said he has talked to Terence Tao about working on AI safety:

I always imagine that as we got closer to the sort of gray zone that you were talking about earlier, the best thing to do might be to pause the pushing of the performance of these systems so that you can analyze down to minute detail exactly and maybe even prove things mathematically about the system so that you know the limits and otherwise of the systems that you're building. At that point I think all the world's greatest minds should probably be thinking about this problem. So that was what I would be advocating to you know the Terence Tao’s of this world, the best mathematicians. Actually I've even talked to him about this—I know you're working on the Riemann hypothesis or something which is the best thing in mathematics but actually this is more pressing. I have this sort of idea of like almost uh ‘Avengers assembled’ of the scientific world because that's a bit of like my dream.

Replies from: Bjartur Tómas, greg-colbourn

↑ comment by Tomás B. (Bjartur Tómas) · 2022-04-02T16:02:27.094Z · LW(p) · GW(p)

His dream team is contingent on a fire alarm. This really needs to happen right now.

Replies from: rank-biserial

↑ comment by rank-biserial · 2022-04-02T19:49:27.730Z · LW(p) · GW(p)

I'm glad Demis is somewhat reasonable. How tf can we pull the fire alarm? A petition?

Replies from: P.

↑ comment by Greg C (greg-colbourn) · 2022-04-03T12:21:31.282Z · LW(p) · GW(p)

Interesting. I note that they don't actually touch on x-risk in the podcast, but the above quote implies that Demis cares a lot about Alignment.

I wonder how fleshed out the full plan is? The fact that there is a plan does give me some hope. But as Tomás B. says below, this needs to be put into place now, rather than waiting for a fire alarm that may never come.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-02T04:46:46.203Z · LW(p) · GW(p)

I support this.

↑ comment by Greg C (greg-colbourn) · 2022-03-02T19:43:39.113Z · LW(p) · GW(p)

Here's [EA(p) · GW(p)] a version of this submitted as a project idea for the FTX Foundation.

Replies from: greg-colbourn

↑ comment by Greg C (greg-colbourn) · 2022-03-02T20:41:37.098Z · LW(p) · GW(p)

Here's a more fleshed out version, FAQ style. Comments welcome.

comment by Viliam · 2022-03-03T00:36:58.882Z · LW(p) · GW(p)

I have zero experience in hiring people; I only know how things seem from the opposite side (as a software developer). The MIRI job announcement seems optimized to scare people like me away. It involves a huge sunk cost in time -- so you only take it if you are 100% sure that you want the job and you don't mind the (quite real) possibility of paying the cost and being rejected at the end of the process anyway. If that is how MIRI wants to filter their applicant pool, great. Otherwise, they seem utterly incompetent in this aspect.

For starters, you might look at Stack Exchange and check how potential employees feel about being given "homework" at an interview. Your process is worse than that. Because, if you instead invited a hundred software developers to a job interview, gave them extensive homework, and then every single one of them ghosted you... at least you could get a suspicion that you were doing something wrong, and by experimenting you could find out what exactly it was. If you instead announce the homework up front, sure it is an honest thing to do, but then no one ever calls you, and you think "gee, no one is interested in working on AI alignment, I wonder why".

How to do it instead?

Here, my confidence is much lower, but it seems to me that you basically need to choose your strategy: do you have specific experts in mind, or are you just hoping to discover some talent currently unknown to you?

If you have specific experts in mind, you need to approach them actively. Not just post an announcement on your website, hope that they notice it, hope that they realize that you meant them, hope that they decide to jump through all the hoops, so that at the end you can tell them: "indeed, I had you in mind; I am glad you got the hints right -- now we can discuss salary and work conditions".

If you are looking for an unknown talent, you probably want some math and/or programming skills, so why not start with some mathematical or programming test, which would filter out the people without the skills. First round, publish the problems on LessWrong and let people type their answers into Google Forms. Yes, cheating is possible. That is why the second round will be done on Zoom, but only with those who passed the first round. Do not be too harsh at grading, just filter out the people who obviously do not have the talent. What is left is the people who have some talent in general, and are kinda interested in the job. Now you can start giving them more specific tasks and see how well they do. Maybe reward them by internet points first, so they can see how they compare to the competition. When the tasks get more difficult, start paying them real money, so that when it becomes too much of a time sink, it also becomes a side income. (Don't: "If you do this successfully, I will give you X money." Do: "Try your best, and I will give you X money for trying, as long as you give me something. Of course if the results are not up to our standards, we may at some moment decide to remove you from the list of participants.") At the end, either everyone fails, or you will have found your person. Even if everyone fails, you still get some data, for example at which step most of them failed.

Yes, there will be some wasted time and effort. It happens to all companies. Whenever you invite someone for an interview, and after an hour you decide that you don't want them for whatever reason, you just wasted one hour of salary of everyone who participated in that interview. That is a cost of hiring people.

Importantly: you need to have one person whose task is to do the recruitment. If you just expect it to happen, but it's actually no one's high priority, then... most likely it won't.

Replies from: JoshuaOSHickman

↑ comment by JoshuaOSHickman · 2022-04-12T01:08:30.897Z · LW(p) · GW(p)

I recently went through the job search process as a software engineer who's had some technical posts approved on the Alignment Forum (really only one core insight, but I thought it was valuable). The process is so much better for standard web development jobs, you genuinely cannot possibly imagine, and I was solving a problem MIRI explicitly said they were interested in, in my Alignment Forum posts. It took (no joke) months to get a response from anyone at MIRI and that response ended up being a single dismissive sentence. It took less than a month from my first sending in a Software Engineer job application to a normal company to having a job paying [redacted generous offer].

comment by Aryeh Englander (alenglander) · 2022-03-02T16:28:18.416Z · LW(p) · GW(p)

My general impression based on numerous interactions is that many EA orgs are specifically looking to hire and work with other EAs, many longtermist orgs are looking to specifically work with longtermists, and many AI safety orgs are specifically looking to hire people who are passionate about existential risks from AI. I get this to a certain extent, but I strongly suspect that ultimately this may be very counterproductive if we are really truly playing to win.

And it's not just in terms of who gets hired. Maybe I'm wrong about this, but my impression is that many EA funding orgs are primarily looking to fund other EA orgs. I suspect that a new and inexperienced EA org may have an easier time getting funded to work on a given project than if a highly experienced non-EA org would apply for funding to pursue the same idea. (Again, entirely possible I'm wrong about that, and apologies to EA funding orgs if I am mis-characterizing how things work. On the other hand, if I am wrong about this then that is an indication that EA orgs might need to do a better job communicating how their funding decisions are made, because I am virtually positive that this is the impression that many other people have gotten as well.)

One reason why this selectivity kind of makes sense at least for some areas like AI safety is because of infohazard concerns, where if we get people who are not focused on the long-term to be involved then they might use our money to do capability enhancement research instead of pursuing longtermist goals. Again, I get this to a certain extent, but I think that if we are really playing to win then we can probably use our collective ingenuity to find ways around this.

Right now this focus on only looking for other EAs appears (to me, at least) to be causing an enormous bottleneck for achieving the goals we are ultimately aiming for.

Replies from: rank-biserial, rank-biserial

↑ comment by rank-biserial · 2022-03-02T16:48:01.967Z · LW(p) · GW(p)

in-group bias

I'm shocked, shocked, to find gambling in this establishment.

↑ comment by rank-biserial · 2022-03-02T16:49:10.192Z · LW(p) · GW(p)

There is a precedent for doing secret work of high strategic importance, which is every intelligence agency and defense contractor ever.

Replies from: ChristianKl

↑ comment by ChristianKl · 2022-03-02T20:31:10.352Z · LW(p) · GW(p)

The CIA has the mission to protect the constitution of the United States. In practice, the CIA constantly violates the constitution of the United States.

Defense contractors constantly push for global politics to go in a direction that military budgets go up and that often involves making the world a less safe place.

Neither of those fields is well-aligned.

Replies from: rank-biserial, ryan_b

↑ comment by rank-biserial · 2022-03-02T20:57:35.329Z · LW(p) · GW(p)

Yeah, the CIA isn't aligned. Defense contractors are quite aligned with the interests of their shareholders.

Replies from: ChristianKl

↑ comment by ChristianKl · 2022-03-02T23:14:20.416Z · LW(p) · GW(p)

The equivalent for defense contractors being aligned to make money for their stakeholders would be for an NGO like MIRI being aligned for increasing their budget through donations. A lot of NGOs are aligned in that way instead of being aligned for their mission.

Replies from: rank-biserial

↑ comment by rank-biserial · 2022-03-02T23:18:54.327Z · LW(p) · GW(p)

I'm reasonably certain that the people currently in MIRI genuinely want to prevent the rise of unfriendly AI.

Replies from: ChristianKl

↑ comment by ChristianKl · 2022-03-03T09:41:49.799Z · LW(p) · GW(p)

Yes, but they don't know how to hire other people to do that. Especially, they don't know how to get people who come mainly because they are paid a lot of money to care more about things besides that money.

↑ comment by ryan_b · 2022-03-03T17:40:03.159Z · LW(p) · GW(p)

This appears to be a problem with the whole organization, rather than a secrecy problem per se. The push from defense contractors in particular is highly public. It looks like there are three problems to solve here:

- Alignment of the org.

- Effectiveness of the org.

- Secrecy of the org.

There is clearly tension between these three, but just because they aren't fully independent doesn't mean they are mutually exclusive.

comment by romeostevensit · 2022-03-03T02:01:45.953Z · LW(p) · GW(p)

The objections that I and someone in the field of talent acquisition and retention received showed a failure to go meta. If the problem is not knowing how to turn money into talent then you hire for that. There is somewhere in the world someone whose time is very valuable who is among the best in the world at hiring. Pay them for their time.

Replies from: gjm

↑ comment by gjm · 2022-03-03T20:50:49.185Z · LW(p) · GW(p)

It is not obvious to me that "hiring" is a generic enough thing that we can be confident that there's someone who's "best at hiring" and that MIRI or another similar organization would do well by hiring them.

It could be that what you need to do to hire people in class X to do work Y varies wildly according to what X and Y are, and that expertise in answering that question for some values of X and Y doesn't translate well to others.

Replies from: romeostevensit

↑ comment by romeostevensit · 2022-03-03T22:05:13.758Z · LW(p) · GW(p)

I'm not referring to MIRI here but the big EA funds

Replies from: gjm

↑ comment by gjm · 2022-03-04T00:49:23.491Z · LW(p) · GW(p)

You have a stronger case there, since they're less unusual than MIRI, but I think my point still applies: most people expert in hiring top mathematicians or computerfolks aren't used to hiring for charitable work, and most people expert in hiring for charities aren't used to looking for top mathematicians or computerfolks.

(Also: it's not clear to me how good we should expect the people who are best at hiring to be, even if their hiring skills are well matched to the particular problem at hand. Consider MIRI specifically for a moment. Suppose it's true either 1. that MIRI's arguments for why their work is important and valuable are actually unsound, and the super-bright people they're trying to hire can see this, although they could be bamboozled into changing their minds by an hour-long conversation with Eliezer Yudkowsky; or 2. that MIRI's arguments look unsound in a way that's highly convincing to those super-bright people, although they could be made to see the error of their ways by an hour-long conversation with Eliezer Yudkowsky. Then there's not much a hiring expert can actually do to help MIRI hire the people it's looking for, because the same qualities that make them the people MIRI wants also make them not want to work for MIRI, and what it takes to change their mind is skills and information someone who specializes in being good at hiring won't have. Of course this is a highly contrived example, but less extreme versions of it seem somewhat plausible.)

Replies from: romeostevensit

↑ comment by romeostevensit · 2022-03-04T01:06:38.269Z · LW(p) · GW(p)

Object level I suspect EA wouldn't be hiring technical people directly. More like finding PIs who would hire teams to do certain things. There are many good PIs who don't mesh well with academia since academia selects for things uncorrelated and in some cases anti correlated with good science. Meta level I don't think we need to determine these things ourselves because this is exactly the sort of consideration that I want to hire for the experience of evaluating and executing on.

Replies from: gjm

comment by lc · 2022-03-02T03:54:47.668Z · LW(p) · GW(p)

This:

Is either a recruitment ad for a cult, or a trial for new members of an exclusive club. It's certainly not designed to intrigue what would be top applicants.

Replies from: daniel-kokotajlo, rank-biserial, None, recursing

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-02T04:44:09.210Z · LW(p) · GW(p)

If you think the reason MIRI hasn't paid a Fields medalist $500k a year is that they are averse to doing weird stuff... have you ever met them? They are the least-averse-to-doing-weird-stuff institution I have ever met.

Replies from: lc

↑ comment by lc · 2022-03-03T02:48:25.065Z · LW(p) · GW(p)

have you ever met them?

I have not.

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-03T03:02:38.593Z · LW(p) · GW(p)

Ah, OK. Fair enough then. I think I get why they seem like a cult from the outside, to many people at least. I think where I'm coming from in this thread is that I've had enough interactions with enough MIRI people (and read and judged enough of their work) to be pretty confident that they aren't a cult and that they are genuinely trying to save the world from AI doom and that some of them at least are probably more competent than me, generally speaking, and also exerting more effort and also less bound by convention than me.

I agree that it seems like they should be offering Terence Tao ten million dollars to work with them for six months, and I don't know why they haven't. (Or maybe they did and I just didn't hear about it?) I just have enough knowledge about them to rule out the facile "oh they are incompetent" or "oh it's because they are a cult" explanations. I was trying to brainstorm more serious answers (answers consistent with what I know about them) based on the various things I had heard (e.g. the experience of OpenPhil trying something similar, which led to a bunch of high-caliber academics producing useless research) and got frustrated with all the facile/cult accusations.

Replies from: None↑ comment by rank-biserial · 2022-03-02T04:34:27.751Z · LW(p) · GW(p)

The "contribute to the Alignment Forum" path isn't easier than traveling to a workshop, either. You're supposed to start an alignment blog, or publish alignment papers, or become a regular on LW just to have a chance at entering the hallowed ranks of the Alignment Forum, which, in turn, gives you a chance at ascending to the sanctum sanctorum of MIRI.

This is what prospective applicants see when they first try to post something to the Alignment Forum:

We accept very few new members to the AI Alignment Forum. Instead, our usual suggestion is that visitors post to LessWrong.com, a large and vibrant intellectual community with a strong interest in alignment research, along with rationality, philosophy, and a wide variety of other topics.

Posts and comments on LessWrong frequently get promoted to the AI Alignment Forum, where they'll automatically be visible to contributors here. We also use LessWrong as one of the main sources of new Alignment Forum members.

If you have produced technical work on AI alignment, on LessWrong or elsewhere -- e.g., papers, blog posts, or comments -- you're welcome to link to it here so we can take it into account in any future decisions to expand the ranks of the AI Alignment Forum.

Replies from: lc, daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-02T04:46:05.158Z · LW(p) · GW(p)

It's a lot easier than university departments, which require you to get multiple degrees (taking up something like a decade of your life depending on how you count it) before your application will even be considered.

Replies from: benjamin-j-campbell, rank-biserial, JoshuaOSHickman, None↑ comment by benjamin.j.campbell (benjamin-j-campbell) · 2022-03-02T05:31:20.706Z · LW(p) · GW(p)

By this stage of their careers, they already have those bits of paper. MIRI are asking people who don't a priori highly value alignment research to jump through extra hoops they haven't already cleared, for what they probably perceive as a slim chance of a job outside their wheelhouse. I know a reasonable number of hard science academics, and I don't know any who would put in that amount of effort in the application for a job they thought would be highly applied for by more qualified applicants. The very phrasing makes it sound like they expect hundreds of applicants and are trying to be exclusive. If nothing else is changed, that should be.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-02T17:57:49.599Z · LW(p) · GW(p)

Maybe they do in fact receive hundreds of applicants and must exclude most of them?

It's not MIRI's fault that there isn't a pre-existing academic discipline of AI alignment research.

Imagine SpaceX had a branch office in some very poor country that literally didn't have any engineering education whatsoever. Should they then lower their standards and invite applicants who never studied engineering? No, they should just deal with the fact that they won't have very many qualified people, and/or they should do things like host workshops and stuff to help people learn engineering.

Replies from: rank-biserial↑ comment by rank-biserial · 2022-03-02T18:19:09.355Z · LW(p) · GW(p)

It wasn't Los Alamos' fault that there wasn't a pre-existing academic discipline of nuclear engineering, but they got by anyway, because they had Von Neumann and other very smart people. If MIRI is to get by, they need to recruit Von Neumann-level people. Like maybe Terry Tao.

Replies from: habryka4, daniel-kokotajlo↑ comment by habryka (habryka4) · 2022-03-03T18:24:29.051Z · LW(p) · GW(p)

Just to be clear, there was a thriving field of nuclear engineering, and Los Alamos was run mostly by leading figures in that field. Also, money was never a constraint on the Manhattan Project and its success had practically nothing to do with the availability of funding, but instead all to do with the war, the involvement of a number of top scientists, and the existence of a pretty concrete engineering problem that one could throw tons of manpower at.

The Manhattan Project itself did not develop any substantial nuclear theory, and was almost purely an engineering project. I do not know what we would get by emulating it at this point in time. The scientists involved in the Manhattan Project did not continue running things like the Manhattan Project; they went into other institutions optimized for intellectual process that were not capable of absorbing large amounts of money or manpower productively, despite some of them likely being able to get funding for similar things (some of them went on and built giant particle colliders, though this did not generally completely revolutionize or drastically accelerate the development of new scientific theories, though it sure was helpful).

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-02T20:44:20.175Z · LW(p) · GW(p)

I don't think we disagree?

Replies from: lc↑ comment by rank-biserial · 2022-03-02T04:49:13.591Z · LW(p) · GW(p)

Universities aren't known for getting things done. Corporations are. Are you trying to signal exclusivity and prestige, or are you trying to save the lightcone?

Replies from: Charlie Steiner, lc, daniel-kokotajlo↑ comment by Charlie Steiner · 2022-03-02T16:13:03.945Z · LW(p) · GW(p)

Universities are pretty well known for getting things done. Most Nobel Prize-winning work happens in them, for instance. It's just that the things they do are not optimized for being the things corporations do.

I'm not saying we shouldn't be actually trying to hire people. In fact I have a post from last year saying that exact thing. But if you think corporations are the model for what we should be going for, I think we have very different mechanistic models of how research gets done.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-02T05:24:10.903Z · LW(p) · GW(p)

Corporations also often require advanced degrees in specific fields. Or multiple years of work experience.

Replies from: rank-biserial, None↑ comment by rank-biserial · 2022-03-02T05:29:51.547Z · LW(p) · GW(p)

Getting an advanced degree in, say, CS, qualifies me to work for many different companies. Racking up karma posting mathematically rigorous research on the Alignment Forum qualifies me to work at one (1) place: MIRI. If I take the "PhD in CS" route, I have power to negotiate my salary, to be selective about who I work for. Every step I take along the Alignment Forum path is wasted[1] unless MIRI deigns to let me in.

Not counting positive externalities ↩︎

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-02T17:58:43.666Z · LW(p) · GW(p)

See my reply to benjamin upthread.

↑ comment by [deleted] · 2022-03-02T16:36:07.577Z · LW(p) · GW(p)

I'm under the impression that that's partially a sign of civilizational failure from metastasizing bureaucracies. I've always heard that the ultra-successful Silicon Valley companies never required a degree (and also that that meritocratic culture has eroded and been partially replaced by credentialism, causing stasis).

EDIT: to be clear, this means I disagree with the ridiculous hyperbole upthread of it being "cultish", and in a lot of ways I'm sure the barriers to employment in traditional fields are higher. Still, as an outsider who's missing lots of relevant info, it does seem like it should be possible to do a lot better.

↑ comment by JoshuaOSHickman · 2022-04-12T01:12:17.158Z · LW(p) · GW(p)

I think you're buying the hype of how much Alignment Forum posts help you even get the attention of MIRI way too much. I have a much easier time asking university departments for feedback, and there is a much smoother process for applying there.

↑ comment by [deleted] · 2022-03-29T11:22:18.005Z · LW(p) · GW(p)

Those multiple degrees are high cost but very low risk, because even if you don't get into the university department, these degrees will give you lots of option value, while a 6 month gap in your CV trying to learn AI Safety on your own does not. More likely you will not survive the hit on your mental health.

I personally decided not to even try AI Safety research for this reason.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-29T11:29:32.891Z · LW(p) · GW(p)

Grad school is infamously bad for your mental health. Something like one-third of my classmates dropped out. My AI safety researcher friends are overall less depressed and more happy, as far as I can tell, though it's hard to tell because it's so subjective.

Replies from: None↑ comment by [deleted] · 2022-03-29T11:37:37.584Z · LW(p) · GW(p)

These less depressed people you talk about, are they already getting paid as AI safety researchers, or are they self-studying to (hopefully) become AI safety researchers?

In any case, I'm clearly generalising from my own situation, so it may not extend very far. To flesh out this data point: I had 2 years of runway, so money wasn't a problem, but I already felt beaten down by LW to the extent that I couldn't really take any more hits to my self-esteem, so I couldn't risk putting myself up for rejection again. That's basically why I mostly left LW.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-29T19:38:03.052Z · LW(p) · GW(p)

Ah, good point, mostly the former category. I only know a few people in the latter category.

↑ comment by [deleted] · 2022-03-02T17:26:30.269Z · LW(p) · GW(p)

Is either a recruitment ad for a cult,

Oh come, don't be hyperbolic. The main things that make a cult a cult are absent. And I'm under the impression that plenty of places have a standard path for inexperienced people that involves an internship or whatever. And since AI alignment is an infant field, no one has the relevant experience on their resumes. (The OP mentions professional recruiters, but I would guess that the skill of recruiting high-quality programmers doesn't translate to recruiting high-quality alignment researchers.)

I do agree that, as an outsider, it seems like it should be much more possible to turn money into productive-researcher-hours, even if that requires recruiting people at Tao's caliber, and the fact that that's not happening is confusing & worrying to me. (Though I do feel bad for the MIRI people in this conversation; it's not entirely fair, since if somehow they in fact have good reason to believe that the set of people who can productively contribute is much tinier than we'd hope (eg: Tao said no, and literally everyone else isn't good enough), they might have to avoid explicitly explaining that to avoid rudeness and bad PR.)

I'm going to keep giving MIRI my money because it seems like everyone else is on a more-doomed path, but as a donor I would prefer to see more visible experimentation (since they said their research agendas didn't pan out and they don't see a path to survival). Eg I'm happy with the Visible Thoughts project. (My current guess (hope?) is that they are experimenting with some things that they can't talk about, which I'm 100% fine with; still seems like some worthwhile experimentation could be public.)

Replies from: rank-biserial, gbear605, TAG, ViktoriaMalyasova↑ comment by rank-biserial · 2022-03-02T17:41:34.172Z · LW(p) · GW(p)

Imagine you're a 32-year-old software engineer with a decade of quality work experience and a Bachelor's in CS. You apply for a job at Microsoft, and they tell you that since their tech stack is very unusual, you have to do a six-month unpaid internship as part of the application process, and there is no guarantee that you get the job afterwards.

This is not how things work. You hire smart people, then you train them. It can take months before new employees are generating value, and they can always leave before their training is complete. This risk is absorbed by the employer.

Replies from: ChristianKl, None↑ comment by ChristianKl · 2022-03-02T20:40:38.344Z · LW(p) · GW(p)

If you have an unusual tech stack then the question of how to train people in that tech stack is fairly trivial. In a pre-pragmatic field, the question of how to train people to effectively work in the field is nontrivial.

↑ comment by [deleted] · 2022-03-02T18:03:28.915Z · LW(p) · GW(p)

Wait, is the workshop 6 months? I assumed it was more like a week or two.

This is not how things work. You hire smart people, then you train them

Sometimes that is how things work. Sometimes you do train them first while not paying them, then you hire them. And most 32-year-old software engineers have to go through a 4-8 year training credentialing process that you have to pay years' worth of salary to go to. I don't see that as a good thing, and indeed the most successful places are famous for not doing that, but still.

To reiterate, I of course definitely agree that they should try using money more. But this is all roughly in the same universe of annoying hoop-jumping as typical jobs, and not roughly in the same universe as the Branch Davidians, and I object to that ridiculous hyperbole.

Replies from: rank-biserial↑ comment by rank-biserial · 2022-03-02T18:28:51.477Z · LW(p) · GW(p)

they have to go through a 4-8 year training process that you have to pay year's worth of salary to go to

They go through a 4-8 year credentialing process that is a costly and hard-to-Goodhart signal of intelligence, conscientiousness, and obedience. The actual learning is incidental.

Replies from: None↑ comment by [deleted] · 2022-03-02T18:37:40.132Z · LW(p) · GW(p)

Okay, edited. If anything, that strengthens my point.

Replies from: rank-biserial, lc↑ comment by rank-biserial · 2022-03-02T18:40:47.968Z · LW(p) · GW(p)

See this comment [LW(p) · GW(p)].

Replies from: None↑ comment by [deleted] · 2022-03-02T19:02:17.917Z · LW(p) · GW(p)

... And? What point do you think I'm arguing?

The traditional way has its costs and benefits (one insanely wasteful and expensive path that opens up lots of opportunities), as does the MIRI way (a somewhat time-consuming path that opens up a single opportunity). It seems like there's room for improvement in both, but both are obviously much closer to each other than either one is to Scientology, and that was the absurd comparison I was arguing against in my original comment. And that comparison doesn't get any less absurd just because getting a computer science degree is a qualification for a lot of things.

↑ comment by lc · 2022-03-03T02:24:56.037Z · LW(p) · GW(p)

No, it doesn't.

Replies from: None↑ comment by [deleted] · 2022-03-03T07:44:04.962Z · LW(p) · GW(p)

Sure it does. I was saying that the traditional pathway is pretty ridiculous and onerous. (And I was saying that to argue that MIRI's onerous application requirements are more like the traditional pathway and less like Scientology; I am objecting to the hyperbole in calling it the latter.) The response was that the traditional pathway is even more ridiculous and wasteful than I was giving it credit for. So yeah, I'd say that slightly strengthens my argument.

↑ comment by gbear605 · 2022-03-02T17:29:53.365Z · LW(p) · GW(p)

Based on what’s been said in this thread, donating more money to MIRI has precisely zero impact on whether they achieve their goals, so why continue to donate to them?

Replies from: daniel-kokotajlo, None↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-02T18:00:33.533Z · LW(p) · GW(p)

FWIW I don't donate to MIRI anymore much myself precisely because they aren't funding-constrained. And a MIRI employee even advised me as much.

↑ comment by [deleted] · 2022-03-02T18:04:47.218Z · LW(p) · GW(p)

Based on what’s been said in this thread, donating more money to MIRI has precisely zero impact on whether they achieve their goals

Well obviously, I disagree with this! As I said in my comment, I'm eg tentatively happy about the Visible Thoughts project. I'm hopeful to see more experimentation in the future, hopefully eventually narrowing down to an actual plan.

Worst case scenario, giving them more money now would at least make them more able to "take advantage of a miracle" in the future (though obviously I'm really really hoping for more than that).

Replies from: yitz↑ comment by Yitz (yitz) · 2022-03-04T18:27:22.852Z · LW(p) · GW(p)

That seems a bit like a Pascal's mugging to me, especially considering there are plenty of other organizations to give to which don't rely on a potential future miracle which may or may not require a tremendous sum of already existing money in the organization...

↑ comment by TAG · 2022-03-02T17:50:36.313Z · LW(p) · GW(p)

Are you taking the view that someone seeing the ad is not going to think MIRI is a cult unless it ticks all the boxes? Because they are actually going to be put off if it ticks any of the boxes.

Replies from: None↑ comment by [deleted] · 2022-03-02T17:55:54.874Z · LW(p) · GW(p)

I'm taking the view that most people would think it's an onerous requirement and they're not willing to jump through those hoops, not that it's a cult. It just doesn't tick the boxes of that, unless we're defining that so widely as to include, I dunno, the typical "be a good fit for the workplace culture!" requirement that lots of jobs annoyingly have.

It's obviously much closer to "pay several hundred thousand dollars to be trained at an institution for 4-6 years (an institution that only considers you worthy if your essay about your personality, life goals, values, and how they combat racism is a good match to their mission), and then either have several years of experience or do an unpaid internship with us to have a good chance" than it is to the Peoples Temple. To say otherwise is, as I said, obviously ridiculous hyperbole.

↑ comment by ViktoriaMalyasova · 2022-03-02T21:17:53.786Z · LW(p) · GW(p)

they might have to avoid explicitly explaining that to avoid rudeness and bad PR

Well, I don't think that is the thing to worry about. Eliezer having high standards would be no news to me, but if I learn about MIRI being dishonest for PR reasons a second time [LW(p) · GW(p)], I am probably going to lose all the trust I have left.

Replies from: None↑ comment by [deleted] · 2022-03-02T22:30:48.142Z · LW(p) · GW(p)

I don't think "no comment", or rather making undetailed but entirely true comments, is dishonest.

Replies from: ViktoriaMalyasova↑ comment by ViktoriaMalyasova · 2022-03-13T17:09:05.585Z · LW(p) · GW(p)

I agree.

↑ comment by recursing · 2022-03-02T20:05:53.961Z · LW(p) · GW(p)

not just sitting on piles of cash because it would be "weird" to pay a Fields medalist 500k a year.

They literally paid Kmett 400k/year for years to work on some approach to explainable AI in Haskell.

I think people in this thread vastly overestimate how much money MIRI has (they have ~10M, see the 990s and the donations page https://intelligence.org/topcontributors/), and underestimate how much top people would cost.

I think the top 1% earners in the US all make >500k/year? Maybe if not the top 1% the top 0.5%?

Even Kmett (who is famous in the Haskell community, but is no Terence Tao) is almost certainly making way more than 500k$ now

↑ comment by 25Hour (aaron-kaufman) · 2022-03-03T00:43:35.732Z · LW(p) · GW(p)

From a rando outsider's perspective, MIRI has not made any public indication that they are funding-constrained, particularly given that their donation page says explicitly that:

We’re not running a formal fundraiser this year but are participating in end-of-year matching events, including Giving Tuesday.

Which more or less sounds like "we don't need any more money but if you want to give us some that's cool"

↑ comment by rank-biserial · 2022-03-02T20:29:31.234Z · LW(p) · GW(p)

I think people in this thread vastly overestimate how much money MIRI has, and underestimate how much top people would cost.

This implies that MIRI is very much funding-constrained, and unless you have elite talent then you should earn to give to organizations that will recruit those with elite talent. This applies to me and most people reading this, who are only around 2-4 sigmas above the mean.

Replies from: recursing↑ comment by recursing · 2022-03-02T21:12:47.511Z · LW(p) · GW(p)

I highly doubt most people reading this are "around 2-4 sigmas above the mean", if that's even a meaningful concept.

The choice between earning to give and direct work is definitely nontrivial though: there are many precedents of useful work done by "average" individuals, even in mathematics.

But I do get the feeling that MIRI thinks the relative value of hiring random expensive people would be <0, which seems consistent with how other groups trying to solve hard problems approach things.

E.g. I don't see Tesla paying billions to famous mathematicians/smart people to "solve self-driving".

Edit: Yudkowsky answered https://www.lesswrong.com/posts/34Gkqus9vusXRevR8/late-2021-miri-conversations-ama-discussion?commentId=9K2ioAJGDRfRuDDCs [LW(p) · GW(p)] , apparently I was wrong and it's because you can't just pay top people to work on problems that don't interest them.

↑ comment by ChristianKl · 2022-03-02T20:53:14.263Z · LW(p) · GW(p)

If MIRI would want to hire someone like Terence Tao for a million dollars per year they likely couldn't simply do that out of their normal budget. To do this they would need to convince donors to give them additional money for that purpose.

If there would be a general sense that this would be the way forward in MIRI and MIRI could express that to donors, I would expect they would get the donor money for it.

Replies from: recursing↑ comment by recursing · 2022-03-02T21:03:14.776Z · LW(p) · GW(p)

They would need to compete with lots of other projects working on AI Alignment.

But yes, I fundamentally agree: if there was a project that convincingly had a >1% chance of solving AI alignment it seems very likely it would be able to raise ~1M/year (maybe even ~10?)

↑ comment by ChristianKl · 2022-03-02T23:14:36.753Z · LW(p) · GW(p)

They would need to compete with lots of other projects working on AI Alignment.

I don't think that's the case. I think that if OpenPhil would believe that there's more room for funding in promising AI alignment research they would spend more money on it than they currently do.

I think the main reason that they aren't giving MIRI more money than they are giving, is that they don't believe that MIRI would spend more money effectively.

comment by Yitz (yitz) · 2022-03-02T13:03:54.294Z · LW(p) · GW(p)

Could someone from MIRI step in here to explain why this is not being done? This seems like an extremely easy avenue for improvement.

Replies from: Vaniver↑ comment by Vaniver · 2022-03-04T22:24:58.782Z · LW(p) · GW(p)

This seems like an extremely easy avenue for improvement.

First, if you think this because you have experience recruiting people for projects like this (i.e. it "seems easy" because you have an affordance for it, not because you don't know what the obstacles are), please reach out to someone at MIRI (probably Malo, maybe Nate), and also PM me so I can bug them until they interview you.

As for why it hasn't happened yet:

I no longer work for MIRI, and so am not speaking for current-MIRI, but when I did work at MIRI I was one of the three sort-of-responsible-for-recruitment employees. (Buck Shlegeris was the main recruiter, and primarily responsible for engineering hires; I was in charge of maintaining the agent foundations pipeline and later trying to hire someone for the Machine Learning Living Library role, tho I think I mostly added value by staffing AIRCS workshops with Buck; Colm O'Riain took over maintaining that pipeline, among doing other things. Several MIRI board members were involved with hiring efforts, but I'm not quite sure how to compare that.)

I think the primary reason this got half-hearted investment was that we didn't have strong management capacity, and so wanted to invest primarily in people who could self-orient towards things that would be useful for existential risk. (If someone is at MIRI because MIRI is willing to pay them $1M/yr, they might focus on turning MIRI into the sort of place that is good at extracting money from OpenPhil/FTX instead of the sort of place that is good at decreasing the probability of x-risk, which seems like a betrayal of OpenPhil/FTX/the whole Earth. One could imagine instead having an org that was very good at figuring out how to make use of mercenaries, but that org was not MIRI.)

For the MLLL specifically, we had the problem that we were basically offering to pay someone to skill up into being hirable for a very high-paid ML position somewhere else, and so part of the interview was "can you gain the skill?" and the other part of the interview was "do we expect that you won't just leave?". I couldn't figure out how to do this successfully, and we didn't end up hiring anyone for that role (altho we did trial more than one person).

I think the secondary reason was that we didn't have people who were great recruiters, and so we didn't really get into a hiring spiral. [Like, I think it was basically luck that MIRI managed to hire Colm in the first place, and I'm not sure how we would have deliberately found a recruiter that got us more of the candidates we wanted.] I think Buck was our best person for that sort of thing--extroverted, good at thinking about AI safety issues, and good at interviewing people to figure out their level of technical skill--but also Buck is good at doing direct work, and so I think didn't put as much time into it as a full-time recruiter would have, and maybe being slow on that sort of thing lost us some candidates. [And, in retrospect, I'm really not sure this was the wrong call--I think we spent something like one Buck-year to get something like one Buck-year back in terms of other employees working for us.]

As an example of an element of what a recruiting spiral might have looked like (which I'm summarizing based off memory, since I can't review the relevant emails anymore): at one point Tanya Singh read Superintelligence, emailed MIRI interested in doing something about AI alignment, ended up in the "idk maybe" part of the application inbox, I scanned thru it and said "whoa this person looks actually exciting, let's try to get them a job at FHI or something", Malo responded with "idk why you're so excited, but this is the right contact person at FHI", and then after the introduction it ended up working out. (I'm not sure how much Tanya ended up increasing their recruitment capacity, but if she did then some of those hires are downstream of us doing something with our inbox, which was downstream of me reading it, which was downstream of efforts to hire me.)

[Actually, I wasn't around for this part, but I remember hearing that in the very early days MIRI got some mileage out of getting someone else to read Eliezer's inbox, because there were a bunch of quite valuable messages buried in the big pile of all the emails. But it mattered that it was someone with lots of context like Michael Vassar reading the emails, instead of some random secretary.]

I think a tertiary reason is that the funding growth ramped up relatively late in MIRI's history. When I started working for them in 2016, the plan for paying employees was "you tell us how much you need for money to not be an issue, and we'll decide whether or not to pay you that", which made sense for a non-profit that had some money but had to work for it. [I told them the "this is the number that would have my net cash flow be $1k/mo, and this is the number that would match my net income as a data scientist in Austin after correcting for Bay Area cost of living", and the latter number was 3X the former number; you can guess which they wanted to pay me ;).] When MIRI started hiring engineers (2018?), funding had reached the point that it was easy to offer people high-but-not-fully-competitive salaries (like, I think engineers were paid $100-200k, which was probably ~60% of what they could have gotten at Facebook or w/e?), and I think now funding has reached the point where it's reasonable to just drop big piles of money on people doing anything at all useful, and announce things like the Visible Thoughts Project [LW · GW]. [As another example, compare ARC's approach to funding the ELK prize [LW · GW] in 2022 to Paul's approach to a similar thing [LW · GW] in 2018.]

Replies from: yitz↑ comment by Yitz (yitz) · 2022-03-06T10:21:07.712Z · LW(p) · GW(p)

if you think this because you have experience recruiting people for projects like this (i.e. it "seems easy" because you have an affordance for it, not because you don't know what the obstacles are), please reach out to someone at MIRI (probably Malo, maybe Nate), and also PM me so I can bug them until they interview you.

I don't have any experience with direct recruiting (and would expect myself to be rather bad at it tbh), but I do have significant experience with PR, particularly media relations. If an EA-aligned organization could use more people on that, and would be willing to hire someone who can't work full-time for medical reasons, let me know.

comment by M. Y. Zuo · 2022-03-02T12:17:06.302Z · LW(p) · GW(p)

If we expect a Pareto distribution to apply, then the folks who will really move the needle 10x or more will likely need to be significantly smarter and more competent than the current leadership of MIRI. There is likely a fear factor, found in all organizations, of managers being afraid to hire subordinates who are noticeably better than them, as they could potentially replace the incumbents; see Moral Mazes.

This type of mediocrity scenario is usually only avoided if turnover is mandated by some external entity, or if management owns some stake, such as shares, that increases in value from a more competent overall organization.

Or of course if the incumbent management are already the best at what they do. This doesn’t seem likely as Eliezer himself mentioned encountering ’sparkly vampires’ and so on that were noticeably more competent.

The other factor is that now we are looking at a group that at the very least could probably walk into a big tech company or a hedge fund like RenTech, D.E. Shaw, etc., and snag a multi-million-dollar compensation package without breaking a sweat, or who are currently doing so. Or likewise on the tenure track at a top-tier school.

comment by 25Hour (aaron-kaufman) · 2022-03-02T06:12:43.767Z · LW(p) · GW(p)

> Give Terence Tao 500 000$ to work on AI alignment six months a year, leaving him free to research crazy Navier-Stokes/Halting problem links the rest of his time... If money really isn't a problem, this kind of thing should be easy to do.

Literally that idea has been proposed multiple times before that I know of, and probably many more times many years ago before I was around.

What was the response? (I mean, obviously it was "not interested", otherwise it would've happened by now, but why?)

Replies from: daniel-kokotajlo, yitz↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-02T18:03:34.314Z · LW(p) · GW(p)

IDK, I would love to know! Like I said, it's a mystery to me why this is happening.

I do remember some OpenPhil people telling me once that they tried paying high-caliber / excellent-CV academics a wad of cash to work on research topics relevant to OpenPhil... and they succeeded in getting them to do the research... but the research was useless.

↑ comment by Yitz (yitz) · 2022-03-02T13:00:56.652Z · LW(p) · GW(p)

Seconding this, considering we’ve got plenty of millionaires (and maybe a few billionaires?) in our community, I don’t see any good reason not to try something along these lines.

comment by Dagon · 2022-03-02T22:09:45.723Z · LW(p) · GW(p)

[edit: my thoughts are mostly about AI alignment and research. Some discussion seems to be about "EA", which likely DOES contain a bunch of underfunded "can probably buy it" projects, so I'd be surprised if there's a pile of unspent money unsure how to hire mosquito-net researchers or whatever. ]

This doesn't seem that surprising to me - the alignment problem in employees remains unsolved, and hiring people to solve AI Alignment seems one level abstracted from that. IMO (from outside), MIRI is still in the "it takes a genius missionary to know what to do next" phase, not the "pay enough and it'll succeed" one.

I suspect that even the framing of the question exposes a serious underlying confusion. There just isn't that much well-defined "direct work" to buy. People in the in-crowd around here often call something a "technical problem" when they mean "philosophical problem requiring rigor". Before you can assign work or make good use of very smart workers who don't join your cult, but just want a nice paycheck for doing what they're good at, you need enough progress on monitoring and measurement of alignment that you have any idea if they're helping or hurting your mission.

I've been involved in recruiting and hiring top-level (and mid-level and starting) technical and management talent at both big (FAANG) and medium (under 500 people) companies, and the single best strategy I know is to have lots of shovel-ready work for reasonably-well-paid entry- and mid-level employees, and over a few years some of those will evolve into stars. Hiring existing stars works too, but requires the reputation and product that attracts them. This ecosystem requires shockingly more sustainable throughput (revenue and self-funded growth) than I believe MIRI has any desire to create. Almost certainly there isn't enough funding to make it work as a "pure expense" model.

Another common path is acquisition - find a company or group you want to hire, and overpay for their product in order to bring their key employees in. If you're actually looking to spend $billions, this is probably the right answer - find people solving somewhat-similar technical problems, and combine them with your people solving the theoretical alignment problems.

↑ comment by rank-biserial · 2022-03-02T23:15:30.398Z · LW(p) · GW(p)

> you need enough progress on monitoring and measurement of alignment that you have any idea if they're helping or hurting your mission

If you have a deterministic criterion for whether something makes the alignment situation better or worse, then you've already solved alignment.

Replies from: Dagon

comment by tailcalled · 2022-03-02T07:24:59.553Z · LW(p) · GW(p)

Even as someone generally interested in alignment while doing a job search, I had trouble seeing anything that'd work for me (though that is also partly because I live in Europe).

comment by RobertM (T3t) · 2022-03-02T06:05:14.947Z · LW(p) · GW(p)

I commented on that thread, and also wrote this [LW · GW] post a few months ago, partially to express some thoughts on the announcement of Lightcone (& their compensation philosophy). Recently I've even switched to looking for direct work opportunities [LW · GW] in the AI alignment sphere.

As is usual with ACX posts on the subject of AI alignment, the comments section has a lot of people who are totally unfamiliar with the space and are therefore extremely confident that various inadequacies (such as the difficulties EA orgs have in hiring qualified people) can easily be solved by doing the obvious thing. This is not to say that everything they suggest is wrong. Some of their suggestions are not obviously wrong. But that's mostly by accident.

I agree that "pay people market rate" is a viable strategy and should be attempted. At the very least I haven't yet seen many strong arguments for what benefit is accrued by paying below-market rates (in this specific set of circumstances), and thus default to "don't shoot yourself in the foot". I also think that most people are deeply confused about the kinds of candidates that MIRI (& similarly focused orgs) are looking for, in terms of researchers. There are some problems with operating in a largely pre-paradigmatic field which impose non-trivial constraints on the kinds of people it even makes sense to hire:

- To the extent that you have "research directions", they're usually much less defined than "demonstrate a highly specific, narrow result at the edge of a well-established field"

- As a result, you mostly need incoming researchers to be self-sufficient or bootstrap to that state very quickly, because every hour spent on mentorship and guidance by an existing researcher is an hour not spent doing research (it's probably not a total loss, but it's not great)

- If you say "ok, bite the bullet, and spend 6 months - 2 years training interested math/cs/etc students to be competent researchers", they've definitely tried this on a smaller scale and my understanding is that it doesn't tend to work very well to produce the kinds of researchers who can make sustained progress on attacking relevant problems without so much help from existing researchers that it stops being worth it

So the general attitude of "just hire a proper recruiter!" is missing all the ways in which that kind of suggestion is a total non-starter. The kinds of candidates MIRI is looking for aren't legibly distinguished by their LinkedIn profiles. Recruiters in general have niches, and (to the best of my knowledge) this is not a niche that is well-served by recruiters. Superficially qualified candidates who don't actually believe in the problem that they're being paid to attack are probably not going to make much progress on it, but they will take up the time and attention of existing researchers.

Could MIRI do a better job of advertising their own job postings? Maybe, unless what they're doing is effective at preferentially filtering out bad candidates without imposing an overwhelming barrier to good candidates. MIRI is hardly the only player in the game at this point, though - Redwood, ARC, Anthropic, and Alignment AI are all hiring researchers and none of them (officially) require candidates to work their way up to being Alignment Forum regulars.

Replies from: Bjartur Tómas

↑ comment by Tomás B. (Bjartur Tómas) · 2022-03-02T06:13:40.871Z · LW(p) · GW(p)

"ok, bite the bullet, and spend 6 months - 2 years training interested math/cs/etc students to be competent researchers" - but have they tried this with Terry Tao?

Replies from: habryka4, T3t

↑ comment by habryka (habryka4) · 2022-03-03T00:01:53.413Z · LW(p) · GW(p)

People have tried to approach top people in their field and offer them >$500k/yr salaries, though not at any scale and not to a ton of people. Mostly people in ML and CS are already used to seeing these offers and frequently reject them, if they are in academia.

There might be some mathematicians for whom this is less true. I don't know specifically about Terence Tao. Sometimes people also react quite badly to such an offer and it seems pretty plausible they would perceive it as a kind of bribe and making such an offer could somewhat permanently sour relations, though I think it's likely one could find a framing for making such an offer that wouldn't run into these problems.

Replies from: rank-biserial

↑ comment by rank-biserial · 2022-03-03T00:40:30.973Z · LW(p) · GW(p)

My takeaway from this datapoint is that people are motivated by status more than money. This suggests ramping up advocacy via a media campaign, and making alignment high-status among the scientifically-minded (or general!) populace.

Replies from: habryka4

↑ comment by habryka (habryka4) · 2022-03-03T01:11:16.123Z · LW(p) · GW(p)

That is indeed a potential path that I am also more excited about. There are potentially ways to achieve that with money, though most ways I've thought about are also very prone to distorting what exactly is viewed as high-status, and creating strong currents in the field to be more prestigious, legible, etc. However, my guess is there are still good investments in the space, and I am pursuing some of them.

Replies from: rank-biserial

↑ comment by rank-biserial · 2022-03-03T05:58:00.956Z · LW(p) · GW(p)

I'd love to hear more about this (unless secrecy is beneficial for some reason). I'd also like to know if there's any way for people to donate to such an effort.

↑ comment by RobertM (T3t) · 2022-03-02T06:28:54.736Z · LW(p) · GW(p)

Yes, I think reaching out to differentiated candidates (like TT) is at least worth trying, since if it doesn't work the downside is bounded by the relatively small number of attempts you can make.

comment by RedMan · 2022-03-06T21:19:35.481Z · LW(p) · GW(p)

I've looked at these postings a few times, and I kind of assumed that the organization was being run as a welfare program for members of a specific social club, that they were specifically excluding anyone who wasn't already central to that community, and that the primary hiring criterion was social capital.

So the perfect candidate is 'person already in this community with the highest social capital, and a compelling need for a means of support'.

comment by ChristianKl · 2022-03-02T15:39:22.897Z · LW(p) · GW(p)

OpenAI is an example of a project that threw a bunch of money at hiring people to do something about AI alignment. If you follow the perspective of Eliezer, they increased AI risk in the process.

You can easily go through LinkedIn and hire people to do alignment research. On the other hand, it's not easy to make sure that you are producing a net benefit on the resulting risk and not just speeding up AI development.

> Intuitively, I expect that gathering really talented people and telling them to do stuff related to X isn't that bad of a mechanism for getting X done. The Manhattan Project springs to mind.

The Manhattan Project is not one that's famous for reducing X-risk.

Replies from: rank-biserial

↑ comment by rank-biserial · 2022-03-02T16:43:44.190Z · LW(p) · GW(p)

My assertion is that spending money hiring very high quality people will get you technical progress. Whether that's technical progress on nuclear weapons, AI capabilities, or AI alignment is a function of what you incentivize the researchers to work on.

Yes, OpenAI didn't effectively incentivize AI safety work. I don't look at that and conclude that it's impossible to incentivize AI safety work properly.

Replies from: jimmy, ChristianKl

↑ comment by jimmy · 2022-03-02T19:25:08.165Z · LW(p) · GW(p)

The takeaway isn't that it "isn't possible", since one failure can't possibly speak to all possible approaches. However, it does give you evidence about the kind of thing that's likely to happen if you try -- and apparently that's "making things worse". Maybe next time goes better, but it's not likely to go better on its own, or by virtue of throwing more money at it.

The problem isn't that you can't buy things with money, it's that you get what you pay for and not (necessarily) what you want. If you give a ten million dollar budget to someone to buy predictions, then they might use it to pay a superforecaster to invest time into thinking about their problem, they might use it to set up and subsidize a prediction market, or they might just use it to buy time from the "top of the line" psychics. They will get "predictions" regardless, but the latter predictions do not get better simply by throwing more money at the problem. If you do not know how to buy results themselves, then throwing more money at the problem is just going to get you more of whatever it is you are buying -- which apparently wasn't AI safety last time.

Nuclear weapon research is fairly measurable, and therefore fairly buyable. AI capability research is too, at least if you're looking for marginal improvements. AI alignment research is much harder to measure, and so you're going to have a much harder time buying what you actually want to buy.

↑ comment by ChristianKl · 2022-03-02T19:20:50.289Z · LW(p) · GW(p)

If you think you know how to effectively incentivize people to work on AI safety in a way that produces AI alignment but does not increase AI capability buildup speed in a way that increases risk, why don't you explicitly advocate for a way you think the money could be spent?

Generally, whenever you spend a lot of money, you get a lot of side effects, and not only what you want to encourage.

comment by Ericf · 2022-03-05T15:00:22.229Z · LW(p) · GW(p)

My 2 cents:

If you have high quality people working on a project, and you are already paying them "plenty of money," you can increase their productivity by spending money to make sure they aren't wasting any of their 24 hours/day doing things they don't want to do. People are reluctant to spend "their own" money to hire a driver/maid/personal secretary/errand boy full time, but those are things that can be provided as an in-kind transfer. We know it works, because that's what Hollywood does: the star actors and directors have legions of assistants who anticipate and fulfil their needs so the talent can focus on the task.

comment by DirectedEvolution (AllAmericanBreakfast) · 2024-01-12T07:10:55.657Z · LW(p) · GW(p)

As of October, MIRI has shifted its focus. See their announcement [LW · GW] for details.