Announcing MIRI’s new CEO and leadership team

post by Gretta Duleba (gretta-duleba) · 2023-10-10T19:22:11.821Z · LW · GW · 52 comments

In 2023, MIRI has shifted focus in the direction of broad public communication (see, for example, our recent TED talk, our piece in TIME magazine, “Pausing AI Developments Isn’t Enough. We Need to Shut it All Down” [LW · GW], and our appearances on various podcasts). While we’re continuing to support various technical research programs at MIRI, technical research is no longer our top priority, at least for the foreseeable future.

Coinciding with this shift in focus, there have also been many organizational changes at MIRI over the last several months, and we are somewhat overdue to announce them in public. The big changes are as follows.

- Malo Bourgon: Chief Executive Officer (CEO)

  - Malo Bourgon, MIRI’s most long-standing team member next to Eliezer Yudkowsky, has transitioned from Chief Operating Officer into the senior leadership role at MIRI.[1] We piloted the change starting in February and made it official in June.

  - This is partly an attempt to better reflect long-standing realities at MIRI. Nate’s focus for many years has been on high-level strategy and research, while Malo has handled much of the day-to-day running of the organization.

  - This change also reflects that Malo is taking on a lot more decision-making authority and greater responsibility for steering the organization.

- Nate Soares: President

  - Nate, who previously held the senior leadership role at MIRI (with the title of Executive Director), has transitioned to the new role of President.

  - As President (and as a board member), Nate will continue to play a central role in guiding MIRI and setting our vision and strategy.

- Eliezer Yudkowsky: Chair of the Board

  - Eliezer, a co-founder of MIRI and a Senior Research Fellow, was already a member of MIRI’s board.

  - We’ve now made Eliezer the board’s chair in order to better reflect the de facto reality that his views get a large weight in MIRI’s strategic direction.

  - Edwin Evans, who was the board’s previous chair, remains on MIRI’s board.

  - Eliezer, Nate, and Malo have different senses of which technical research directions are most promising. To balance their different views, the board currently gives each of Eliezer, Nate, and Malo a budget to fund different technical research, in addition to the research that’s funded by the organization as a whole.

- Alex Vermeer: Chief Operating Officer (COO)

  - Alex has stepped up to replace Malo as COO.

  - As COO, Alex is responsible for running/overseeing the operations team, as he has already been doing for some time, and he’ll continue to work closely with Malo (as he has for over a decade now) to help figure out what our core constraints are, and figure out how to break them.

- Jimmy Rintjema: Chief Financial Officer (CFO)

  - Jimmy has been working for MIRI since 2015. Over the years, Jimmy has progressively taken on more and more of the responsibility for running MIRI’s business operations, including HR and finances.

  - As part of this transition, Jimmy is taking on more responsibility and authority in this domain, and this title change is to reflect that.

Nate shared the following about handing over the lead role at MIRI to Malo:

> This change is in large part an enshrinement of the status quo. Malo’s been doing a fine job running MIRI day-to-day for many many years (including feats like acquiring a rural residence for all staff who wanted to avoid cities during COVID, and getting that venue running smoothly). In recent years, morale has been low and I, at least, haven’t seen many hopeful paths before us. That has changed somewhat over the past year or so, with a heightened awareness of AI across-the-board opening up new social and political possibilities. I think that Malo’s better-positioned to take advantage of many of those than I would have been. I’m also hoping that he’s able to pull off even more impressive feats (and more regularly) now that he is in the top executive role.

In addition to these changes, we’ve also made two new senior staff hires to better manage, coordinate, and support MIRI’s work in our two main focus areas:

- Lisa Thiergart: Research Manager

  - Lisa will be managing MIRI’s research staff and projects. Her work includes charting new research strategy, managing existing projects, improving research processes, as well as growing and supporting (new) research teams.

  - Lisa is a computer scientist from TUM and Georgia Tech, with a background in academic and industry ML research and training in technology management and entrepreneurship from CDTM and Entrepreneur First. She worked for several years in ML research labs and startups, as well as spending 8 months doing alignment research at SERI MATS. Her past work included projects on model internals, RLHF on robotics, and neurotech/whole brain emulation.

  - Lisa is also a grant advisor on the Foresight Alignment Fund.

- Gretta Duleba: Communications Manager

  - I (Gretta) will be leading the communications team at MIRI, working with Rob Bensinger, Colm Ó Riain, Nate, Eliezer, and other staff to create succinct, effective ways of explaining the extreme risks posed by smarter-than-human AI and what we think should be done about this problem.

  - I was previously a senior software engineering manager at Google, leading large teams on Google Maps and Google Analytics, before switching careers to work as an independent relationship counselor.

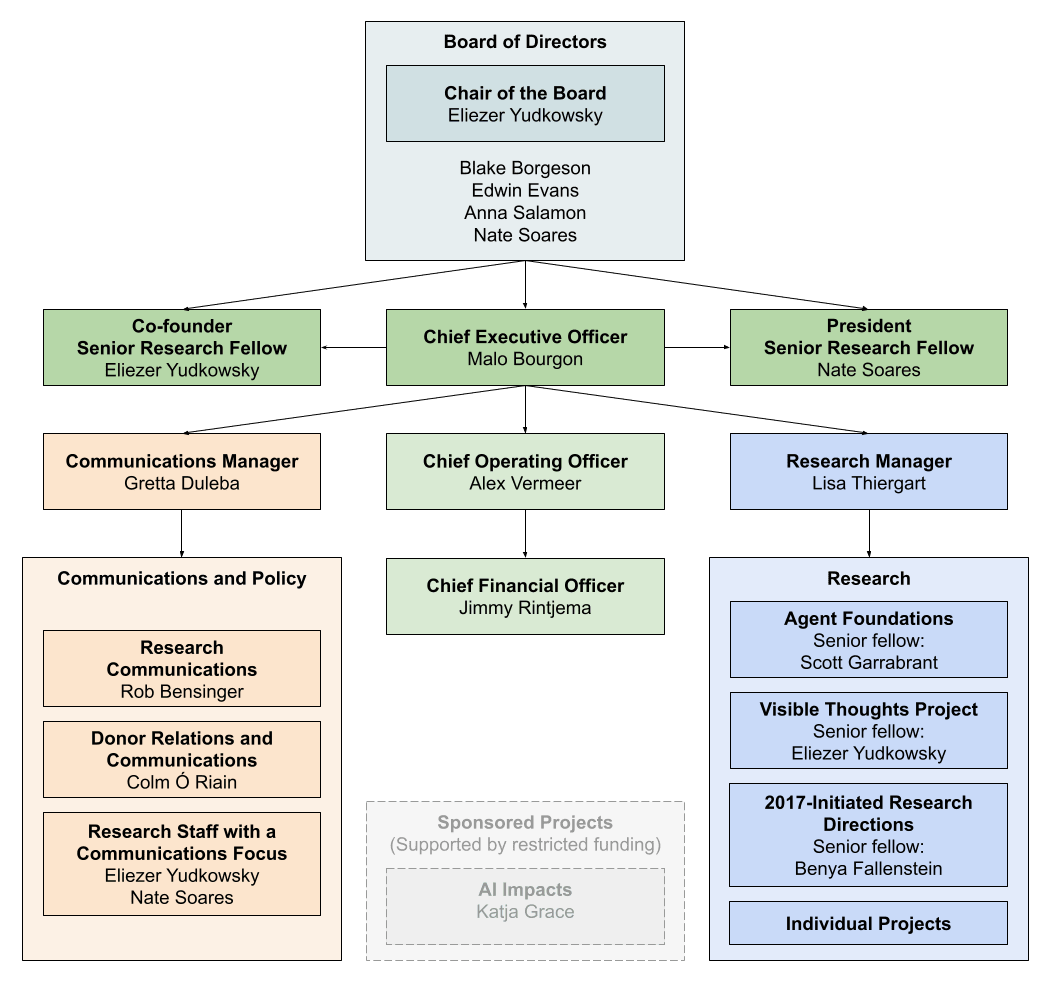

With these changes, our organizational structure now looks like this:

We’re hopeful that this new organizational structure will enable us to execute on our priorities more effectively and, with considerable luck, move the needle on existential risk from AI.

There’s a lot more we hope to say about our new (and still evolving) strategy, and about our general thinking on the world’s (generally very dire) situation. But I don’t want those announcements to further delay sharing the above updates, so I’ve factored our 2023 strategy updates into multiple posts, beginning with this one.[2]

[1] It’s common practice for the chief executive of US nonprofits to have the title “Executive Director”. However, at MIRI we like our titles to have clear meanings, and the word “director” is also used to refer to members of the Board of Directors. For that reason, we only like to include “director” in someone’s title if they are in fact a member of MIRI’s board. During his tenure as our chief executive, Nate was also a member of MIRI’s board (and continues to be after this transition), and as such the title “Executive Director” seemed appropriate. However, as Malo is not on MIRI’s board he’ll be using the title of “CEO”.

[2] Thanks to Rob Bensinger and Malo Bourgon for helping write and edit this post.

52 comments

Comments sorted by top scores.

comment by Orpheus16 (akash-wasil) · 2023-10-12T09:29:42.534Z · LW(p) · GW(p)

I'm excited to see MIRI focus more on public communications. A few (optional) questions:

- What do you see as the most important messages to spread to (a) the public and (b) policymakers?

- What mistakes do you think MIRI has made in the last 6 months?

- Does MIRI also plan to get involved in policy discussions (e.g. communicating directly with policymakers, and/or advocating for specific policies)?

- Does MIRI need any help? (Or perhaps more precisely "Does MIRI need any help from the right kind of person with the right kind of skills, and if so, what would that person or those skills look like?")

↑ comment by Gretta Duleba (gretta-duleba) · 2023-10-12T19:09:19.710Z · LW(p) · GW(p)

> - What do you see as the most important messages to spread to (a) the public and (b) policymakers?

That's a great question that I'd prefer to address more comprehensively in a separate post, and I should admit up front that the post might not be imminent as we are currently hard at work on getting the messaging right and it's not a super quick process.

> - What mistakes do you think MIRI has made in the last 6 months?

Huh, I do not have a list prepared and I am not entirely sure where to draw the line around what's interesting to discuss and what's not; furthermore it often takes some time to have strong intuitions about what turned out to be a mistake. Do you have any candidates for the list in mind?

> - Does MIRI also plan to get involved in policy discussions (e.g. communicating directly with policymakers, and/or advocating for specific policies)?

We are limited in our ability to directly influence policy by our 501(c)3 status; that said, we do have some latitude there and we are exercising it within the limits of the law. See for example this tweet by Eliezer.

> - Does MIRI need any help? (Or perhaps more precisely "Does MIRI need any help from the right kind of person with the right kind of skills, and if so, what would that person or those skills look like?")

Yes, I expect to be hiring in the comms department relatively soon but have not actually posted any job listings yet. I will post to LessWrong about it when I do.

Replies from: malo, akash-wasil, malo

↑ comment by Malo (malo) · 2023-10-12T22:48:13.177Z · LW(p) · GW(p)

> - Does MIRI need any help? (Or perhaps more precisely "Does MIRI need any help from the right kind of person with the right kind of skills, and if so, what would that person or those skills look like?")
>
> Yes, I expect to be hiring in the comms department relatively soon but have not actually posted any job listings yet. I will post to LessWrong about it when I do.

That said, I'd be excited for folks who think they might have useful background or skills to contribute and would be excited to work at MIRI, to reach out and let us know they exist, or pitch us on why they might be a good addition to the team.

Replies from: gretta-duleba

↑ comment by Gretta Duleba (gretta-duleba) · 2023-10-13T01:39:58.119Z · LW(p) · GW(p)

Ditto.

↑ comment by Orpheus16 (akash-wasil) · 2023-10-13T09:42:06.111Z · LW(p) · GW(p)

Thanks for this response!

> Do you have any candidates for the list in mind?

I didn't have any candidate mistakes in mind. After thinking about it for 2 minutes, here are some possible candidates (though it's not clear to me that any of them were actually mistakes):

- Eliezer's TIME article explicitly mentions that states should "be willing to destroy a rogue datacenter by airstrike." On one hand, it seems important to clearly/directly communicate the kind of actions that Eliezer believes the world will need to be willing to take in order to keep us safe. On the other hand, I think this particular phrasing might have made the point easier to critique/meme. On net, it's unclear to me if this was a mistake, but it seems plausible that there could've been a way to rephrase this particular sentence that maintains clarity while reducing the potential for misinterpretations or deliberate attacks.

- I sometimes wonder if MIRI should have a policy division that is explicitly trying to influence policymakers. It seems like we have a unique window over the next (few? several?) months, where Congress is unusually motivated to educate themselves about AI. Until recently, my understanding is that virtually no one was advocating from a MIRI perspective (e.g., alignment is difficult, we can't just rely on dangerous capability evaluations, we need a global moratorium and the infrastructure required for it). I think the Center for AI Policy is now trying to fill the gap, and it seems plausible to me that MIRI should either start their own policy team or assume more of a leadership role at the Center for AI Policy (e.g., being directly involved in hiring, having people in person in DC, being involved in strategy/tactics, etc.; this is of course conditional on the current CAIP CEO being open to this, and I suspect he would be).

- Perhaps MIRI should be critiquing some of the existing policy proposals. I think MIRI has played an important role in "breaking" alignment proposals (i.e., raising awareness about the limitations of a lot of alignment proposals, raising counterarguments, explaining failure modes). Maybe MIRI should be "breaking" some of the policy proposals or finding other ways to directly improve the ways policymakers are reasoning about AI risk.

Again, it seems plausible to me that none of these were mistakes; I'd put them more in the "hm... this seems unclear to me but seems worth considering" category.

Replies from: gretta-duleba

↑ comment by Gretta Duleba (gretta-duleba) · 2023-10-13T15:36:42.844Z · LW(p) · GW(p)

Re: the wording about airstrikes in TIME: yeah, we did not anticipate how that was going to be received and it's likely we would have wordsmithed it a bit more to make the meaning more clear had we realized. I'm comfortable calling that a mistake. (I was not yet employed at MIRI at the time but I was involved in editing the draft of the op-ed so it's at least as much on me as anybody else who was involved.)

Re: policy division: we are limited by our 501(c)3 status as to how much of our budget we can spend on policy work, and here 'budget' includes the time of salaried employees. Malo and Eliezer both spend some fraction of their time on policy but I view it as unlikely that we'll spin up a whole 'division' about that. Instead, yes, we partner with/provide technical advice to CAIP and other allied organizations. I don't view failure-to-start-a-policy-division as a mistake and in fact I think we're using our resources fairly well here.

Re: critiquing existing policy proposals: there is undoubtedly more we could do here, though I lean more in the direction of 'let's say what we think would be almost good enough' rather than simply critiquing what's wrong with other proposals.

Replies from: akash-wasil

↑ comment by Orpheus16 (akash-wasil) · 2023-10-13T17:47:41.980Z · LW(p) · GW(p)

Thanks for this; seems reasonable to me. One quick note is that my impression is that it's fairly easy to set up a 501(c)4. So even if [the formal institution known as MIRI] has limits, I think MIRI would be able to start a "sister org" that de facto serves as the policy arm. (I believe this is accepted practice & lots of orgs have sister policy orgs.)

(This doesn't matter right now, insofar as you don't think it would be an efficient allocation of resources to spin up a whole policy division. Just pointing it out in case your belief changes and the 501(c)3 thing felt like the limiting factor).

↑ comment by Malo (malo) · 2023-10-12T23:15:27.098Z · LW(p) · GW(p)

> - Does MIRI also plan to get involved in policy discussions (e.g. communicating directly with policymakers, and/or advocating for specific policies)?
>
> We are limited in our ability to directly influence policy by our 501(c)3 status; that said, we do have some latitude there and we are exercising it within the limits of the law. See for example this tweet by Eliezer.

To expand on this a bit, I and a couple others at MIRI have been spending some time syncing up and strategizing with other people and orgs who are more directly focused on policy work themselves. We've also spent some time chatting with folks in government that we already know and have good relationships with. I expect we'll continue to do a decent amount of this going forward.

It's much less clear to me that it makes sense for us to end up directly engaging in policy discussions with policymakers as an important focus of ours (compared to focusing on broad public comms), given that this is pretty far outside of our area of expertise. It's definitely something I'm interested in exploring though, and chatting about with folks who have expertise in the space.

comment by David Hornbein · 2023-10-15T01:51:51.046Z · LW(p) · GW(p)

Seems also worth mentioning that Gretta Duleba and Eliezer Yudkowsky are in a romantic relationship, according to Yudkowsky's Facebook profile.

Replies from: malo

↑ comment by Malo (malo) · 2023-10-17T01:04:50.702Z · LW(p) · GW(p)

FWIW, I approached Gretta about starting to help out with comms related stuff at MIRI, i.e., it wasn't Eliezer's idea.

comment by Writer · 2023-10-11T12:40:17.821Z · LW(p) · GW(p)

> I (Gretta) will be leading the communications team at MIRI, working with Rob Bensinger, Colm Ó Riain, Nate, Eliezer, and other staff to create succinct, effective ways of explaining the extreme risks posed by smarter-than-human AI and what we think should be done about this problem.

I just sent an invite to Eliezer to Rational Animations' private Discord server so that he can dump some thoughts on Rational Animations' writers. It's something we decided to do when we met at Manifest. The idea is that we could distill his infodumps into something succinct to be animated.

That said, if in the future you have from the outset some succinct and optimized material you think we could help spread to a wide audience and/or would benefit from being animated, we can likely turn your writings into animations on Rational Animations, as we already did for a few articles in The Sequences.

The same invitation extends to every AI Safety organization.

EDIT: Also, let me know if more of MIRI's staff would like to join that server, since it seems like what you're trying to achieve with comms overlaps with what we're trying to do. That server basically serves as the central point of organization for all the work happening at Rational Animations.

↑ comment by Said Achmiz (SaidAchmiz) · 2023-10-13T04:24:41.572Z · LW(p) · GW(p)

Question for you (the Rational Animations guys) but also for everyone else: should I link to these videos from readthesequences.com?

Specifically, I’m thinking about linking to individual videos from individual essays, e.g. to https://www.youtube.com/watch?v=q9Figerh89g from https://www.readthesequences.com/The-Power-Of-Intelligence. Good idea? Bad idea? What do folks here think?

Replies from: D0TheMath, Benito, Writer

↑ comment by Garrett Baker (D0TheMath) · 2023-10-13T18:40:03.967Z · LW(p) · GW(p)

I think I got a lot out of not knowing anything about the broader rationalist community while reading the sequences. I think that consideration weighs against.

Replies from: SaidAchmiz

↑ comment by Said Achmiz (SaidAchmiz) · 2023-10-13T21:32:33.005Z · LW(p) · GW(p)

Could you say more about this? I’m not sure that I quite see what you mean.

(Also, did you know that the bottom of each post on ReadTheSequences.com has a link to the comments section for that post, via GreaterWrong…?)

Replies from: D0TheMath

↑ comment by Garrett Baker (D0TheMath) · 2023-10-13T22:23:06.904Z · LW(p) · GW(p)

Two main considerations I have here:

1. There's this notion of how the average member of a group is very different from the ideal member of a group, which definitely holds for rationalists. And there's a common failure-mode when trying to join a group of acting superficially like their average member rather than their ideal member. There's some blog post somewhere which discusses this that I can't find. Having as little contact as possible with the community while making your plan on how to act like their ideal member nips this failure-mode in the bud.

2. Similarly to 1, there's some evidence that showing students their relative performance on average reduces their outcomes, since many overestimate the competition, and work harder for it. I know this happened with me.

> Also, did you know that the bottom of each post on ReadTheSequences.com has a link to the comments section for that post, via GreaterWrong…?)

I did know that, but the comments are old and sparse, and perhaps necessary when trying to understand a tricky post. The community ignorance consideration definitely weighs against those, but the 'help me make sense of this post' consideration made the comments net good when I was reading them first.

Replies from: SaidAchmiz

↑ comment by Said Achmiz (SaidAchmiz) · 2023-10-13T23:21:15.821Z · LW(p) · GW(p)

Hmm, I think I see your general point. However, I am still not clear on what connection a video has to that point. Or are you thinking of YouTube comments under the video…?

Replies from: D0TheMath

↑ comment by Garrett Baker (D0TheMath) · 2023-10-15T17:57:25.734Z · LW(p) · GW(p)

Nothing big, just comments as you said, art made by rationalists giving a sense of the skill distribution of the movement, the view counter giving a sense of the popularity of the ideas, and potentially other inferences one could make.

↑ comment by Ben Pace (Benito) · 2023-10-13T17:23:24.348Z · LW(p) · GW(p)

I like the idea!

↑ comment by Writer · 2023-10-13T09:35:22.548Z · LW(p) · GW(p)

For Rational Animations, there's no problem if you do that, and I generally don't see drawbacks.

Perhaps one thing to be aware of is that some of the articles we'll animate will be slightly adapted. Sorting Pebbles and The Power of Intelligence are exactly like the original. The Parable of The Dagger has deletions of words such as "replied" or "said" and adds a short additional scene at the end. The text of The Hidden Complexity of Wishes has been changed in some places, with Eliezer's approval, mainly because the original has some references to other articles. In any case, when there are such changes, I write it in the LW post accompanying the videos.

↑ comment by Gretta Duleba (gretta-duleba) · 2023-10-11T23:52:57.614Z · LW(p) · GW(p)

Thanks, much appreciated! Your work is on my (long) list to check out. Is there a specific video you're especially proud of that would be a great starting point?

Feel free to send me a discord server invitation at gretta@intelligence.org.

↑ comment by Writer · 2023-10-12T08:43:13.409Z · LW(p) · GW(p)

If you just had to pick one, go for The Goddess of Everything Else.

Here's a short list of my favorites.

In terms of animation:

- The Goddess of Everything Else

- The Hidden Complexity of Wishes

- The Power of Intelligence

In terms of explainer:

- Humanity was born way ahead of its time. The reason is grabby aliens. [written by me]

- Everything might change forever this century (or we’ll go extinct). [mostly written by Matthew Barnett]

Also, I've sent the Discord invite.

comment by the gears to ascension (lahwran) · 2023-10-14T00:36:43.002Z · LW(p) · GW(p)

I'm quite disappointed to hear that MIRI doesn't have significant technical momentum and hope to see other organizations pick up the slack on MIRI's work. Too many organizations have been distracted by language predictive models (edit: in contrast to control models). Right now the only org I know of that has recently published agency-first safety relevant stuff is the causal incentives group at deepmind.

comment by trevor (TrevorWiesinger) · 2023-10-11T22:19:19.641Z · LW(p) · GW(p)

Are people leading MIRI thinking that Bay Area Rationalists and LW users (who care about AI safety) should start skill building for communications research? That seems like a good capability to build, perhaps even 80,000-hours-style, but obviously it could plausibly have some drawbacks.

My thinking about this is that geopolitical research, world modelling, and open-source intelligence require way too many people doing very simple tasks, e.g. distilling and summarizing information from Bill Bishop's newsletter that's relevant to AI geopolitics and the general trend of US-China relations, and all sorts of similar things that people didn't realize were relevant to the situation with AI until someone randomly made a LessWrong post about it.

The impression I got from AI safety is that the AI alignment technical research people often have really good generalist skills and pick up important concepts quickly (e.g. psychology) and can do a lot with them, whereas AI governance people are often the type of person who's really good at getting government jobs. So I can see big upsides for AI alignment technical researchers spending some time reading things like chapter 4 of Joseph Nye's Soft Power, for example, which is a really well-respected source on the history of propaganda and information warfare waged by governments.

Replies from: gretta-duleba

↑ comment by Gretta Duleba (gretta-duleba) · 2023-10-12T00:01:00.836Z · LW(p) · GW(p)

I do not (yet) know that Nye resource so I don't know if I endorse it. I do endorse the more general idea that many folks who understand the basics of AI x-risk could start talking more to their not-yet-clued-in friends and family about it.

I think in the past, many of us didn't bring this up with people outside the bubble for a variety of reasons: we expected to be dismissed or misunderstood, it just seemed fruitless, or we didn't want to freak them out.

I think it's time to freak them out.

And what we've learned from the last seven months of media appearances and polling is that the general public is actually far more receptive to x-risk arguments than we (at MIRI) expected; we've been accustomed to the arguments bouncing off folks in tech, and we over-indexed on that. Now that regular people can play with GPT-4 and see what it does, discussion of AGI no longer feels like far-flung science fiction. They're ready to hear it, and will only get more so as capabilities demonstrably advance.

We hope that if there is an upsurge of public demand, we might get regulation/legislation limiting the development and training of frontier AI systems and the sales and distribution of the high-end GPUs on which such systems are trained.

Replies from: TrevorWiesinger

↑ comment by trevor (TrevorWiesinger) · 2023-10-12T15:55:10.171Z · LW(p) · GW(p)

> I do not (yet) know that Nye resource so I don't know if I endorse it.

That makes sense, I would never ask for such an endorsement; I don't think it would help MIRI directly, but Soft Power is one of the most influential concepts among modern international relations experts and China experts, and it's critical for understanding the environment that AI safety public communication takes place in (e.g. if the world is already oversaturated with highly professionalized information warfare [LW · GW] then that has big implications e.g. MIRI could be fed false data to mislead them into believing they are succeeding at describing the problem when in reality the needle isn't moving).

> I think in the past, many of us didn't bring this up with people outside the bubble for a variety of reasons: we expected to be dismissed or misunderstood, it just seemed fruitless, or we didn't want to freak them out.
>
> I think it's time to freak them out.

That's pretty surprising to me; for a while I assumed that the scenario where 10% of the population knew about superintelligence as the final engineering problem was a nightmare scenario e.g. because it would cause acceleration. I even abstained from helping Darren McKee with his book attempting to describe the AI safety problem to the public, even though I wanted to, because I was worried about contributing to making people more capable of spreading AI safety ideas.

If MIRI has changed their calculus on this, then of course I will defer to that since I have a sense of how far outside my area of expertise it is. But it's still a really big shift for me.

> We hope that if there is an upsurge of public demand, we might get regulation/legislation limiting the development and training of frontier AI systems and the sales and distribution of the high-end GPUs on which such systems are trained.

I'm not sure what to make of this; AI advancement is pretty valuable for national security (e.g. allowing hypersonic nuclear missiles to continue flying under the radar if military GPS systems are destroyed or jammed/spoofed) and the balance of power between the US and China in other ways [LW · GW], similar to nuclear weapons; and if public opinion turned against nuclear weapons during the 1940s and 1950s, back when democracy was stronger, I'm not sure if it would have had much of an effect (perhaps it would have pushed it underground [LW · GW]). I'm still deferring to MIRI on this pivot and will help other people take the advice in this comment, and I found this comment really helpful and I'm glad that big pivots are being committed to, but I'll also still be confused and apprehensive.

Replies from: gretta-duleba

↑ comment by Gretta Duleba (gretta-duleba) · 2023-10-12T19:13:14.170Z · LW(p) · GW(p)

> That's pretty surprising to me; for a while I assumed that the scenario where 10% of the population knew about superintelligence as the final engineering problem was a nightmare scenario e.g. because it would cause acceleration.

"Don't talk too much about how powerful AI could get because it will just make other people get excited and go faster" was a prevailing view at MIRI for a long time, I'm told. (That attitude pre-dates me.) At this point many folks at MIRI believe that the calculus has changed, that AI development has captured so much energy and attention that it is too late for keeping silent to be helpful, and now it's better to speak openly about the risks.

Replies from: TrevorWiesinger

↑ comment by trevor (TrevorWiesinger) · 2023-10-12T23:34:14.883Z · LW(p) · GW(p)

Awesome, that's great to hear and these recommendations/guidance are helpful and more than I expected, thank you.

I can't wait to see what you've cooked up for the upcoming posts, MIRI's outputs (including decisions) are generally pretty impressive and outstanding, and I have a feeling that I'll appreciate and benefit from them even more now that people are starting to pull out the stops.

comment by philh · 2023-10-19T10:11:49.227Z · LW(p) · GW(p)

I'm curious what your current funding situation is. (Currently in this space I give, I think, £210/month to MIRI and £150/month to LTFF, and I've been considering reallocating.)

Replies from: malo

↑ comment by Malo (malo) · 2023-10-20T16:13:04.302Z · LW(p) · GW(p)

Our reserves increased substantially in 2021 due to a couple of large crypto donations.

At the moment we've got ~$20M.

comment by kave · 2023-10-16T23:36:43.420Z · LW(p) · GW(p)

I'm curious if Katja's post [? · GW] had much influence on MIRI's recent policy work.

Replies from: RobbBB, malo

↑ comment by Rob Bensinger (RobbBB) · 2023-10-17T20:16:25.228Z · LW(p) · GW(p)

I read and responded to some pieces of that post when it came out; I don't know whether Eliezer, Nate, etc. read it, and I'm guessing it didn't shift MIRI, except as one of many data points "person X is now loudly in favor of a pause (and other people seem receptive), so maybe this is more politically tractable than we thought".

I'd say that Kerry Vaughan was the main person who started smashing this Overton window, and this started in April/May/June of 2022. By late December my recollection is that this public conversation was already fully in swing and MIRI had already added our voices to the "stop building toward AGI" chorus. (Though at that stage I think we were mostly doing this on general principle, for lack of any better ideas than "share our actual long-standing views and hope that helps somehow". Our increased optimism about policy solutions mostly came later, in 2023.)

That said, I bet Katja's post had tons of relevant positive effects even if it didn't directly shift MIRI's views.

Replies from: Benito

↑ comment by Ben Pace (Benito) · 2023-10-17T20:55:39.038Z · LW(p) · GW(p)

Don't forget lc had a 624 karma post on it on April 4th 2022. [LW · GW]

↑ comment by Malo (malo) · 2023-10-17T00:40:08.658Z · LW(p) · GW(p)

Interesting, I don't think I knew about this post until I clicked on the link in your comment.

comment by Shmi (shminux) · 2023-10-11T04:41:44.047Z · LW(p) · GW(p)

That looks and reads... very corporate.

Replies from: malo

↑ comment by Malo (malo) · 2023-10-11T05:07:00.482Z · LW(p) · GW(p)

Sometimes quick org updates about team changes can be a little dry. ¯\_(ツ)_/¯

I expect you’ll find the next post more interesting :)

(Edit: fixed typo)

comment by Sheikh Abdur Raheem Ali (sheikh-abdur-raheem-ali) · 2023-10-10T19:55:10.051Z · LW(p) · GW(p)

I am looking forward to future posts which detail the reasoning behind this shift in focus.

Replies from: lc, kave↑ comment by lc · 2023-10-10T21:36:24.138Z · LW(p) · GW(p)

Haven't they already been written?

Replies from: malo↑ comment by Malo (malo) · 2023-10-11T01:00:34.834Z · LW(p) · GW(p)

My read was that his comment was in response to this part at the end of the post:

There’s a lot more we hope to say about our new (and still evolving) strategy, and about our general thinking on the world’s (generally very dire) situation. But I don’t want those announcements to further delay sharing the above updates, so I’ve factored our 2023 strategy updates into multiple posts, beginning with this one.

↑ comment by kave · 2023-10-10T22:14:58.745Z · LW(p) · GW(p)

[Edit: whoops the parent was a response to the post saying such things were forthcoming. I was wrong]. This seems like a fairly entitled framing

Replies from: Chris_Leong↑ comment by Chris_Leong · 2023-10-11T01:08:17.759Z · LW(p) · GW(p)

How so: "There’s a lot more we hope to say about our new (and still evolving) strategy, and about our general thinking on the world’s (generally very dire) situation. But I don’t want those announcements to further delay sharing the above updates, so I’ve factored our 2023 strategy updates into multiple posts, beginning with this one."

Replies from: kave

comment by Mitchell_Porter · 2023-10-12T10:23:28.448Z · LW(p) · GW(p)

The way I see it, the future of superhuman AI is emerging from the interactions of three forces: Big Tech companies that are pushing AI towards superintelligence, researchers who are trying to figure out how to make superintelligent AI safe, and activists who are trying to slow down or stop the march towards superintelligence. MIRI used to be focused on safety research, but now it's mostly trying to stop the march towards superintelligence, by presenting the case for the extreme danger of the current trajectory. Does that sound right?

Replies from: gretta-duleba, malo↑ comment by Gretta Duleba (gretta-duleba) · 2023-10-12T19:16:45.650Z · LW(p) · GW(p)

I think that's pretty close, though when I hear the word "activist" I tend to think of people marching in protests and waving signs, and that is not the only way to contribute to the effort to slow AI development. I think more broadly about communications and policy efforts, of which activism is a subset.

It's also probably a mistake to put capabilities researchers and alignment researchers in two entirely separate buckets. Their motivations may distinguish them, but my understanding is that the actual work they do unfortunately overlaps quite a bit.

↑ comment by Malo (malo) · 2023-10-12T22:15:28.226Z · LW(p) · GW(p)

MIRI used to be focused on safety research, but now it's mostly trying to stop the march towards superintelligence, by presenting the case for the extreme danger of the current trajectory.

Yeah, given the current state of the game board we think that work in the comms/policy space seems more impactful to us on the margin, so we'll be focusing on that as our top priority and seeing how things develop. That won't be our only focus though; we'll definitely continue to host/fund research.

comment by Thoth Hermes (thoth-hermes) · 2023-10-13T17:22:50.361Z · LW(p) · GW(p)

I suppose I have two questions which naturally come to mind here:

- Given Nate's comment: "This change is in large part an enshrinement of the status quo. Malo’s been doing a fine job running MIRI day-to-day for many many years (including feats like acquiring a rural residence for all staff who wanted to avoid cities during COVID, and getting that venue running smoothly). In recent years, morale has been low and I, at least, haven’t seen many hopeful paths before us." (Bold emphases are mine). Do you see the first bold sentence as being in conflict with the second, at all? If morale is low, why do you see that as an indicator that the status quo should remain in place?

- Why do you see communications as being as decoupled (rather, either that it is inherently or that it should be) from research as you currently do?

↑ comment by Malo (malo) · 2023-10-13T21:00:21.885Z · LW(p) · GW(p)

- Given Nate's comment: "This change is in large part an enshrinement of the status quo. Malo’s been doing a fine job running MIRI day-to-day for many many years (including feats like acquiring a rural residence for all staff who wanted to avoid cities during COVID, and getting that venue running smoothly). In recent years, morale has been low and I, at least, haven’t seen many hopeful paths before us." (Bold emphases are mine). Do you see the first bold sentence as being in conflict with the second, at all? If morale is low, why do you see that as an indicator that the status quo should remain in place?

A few things seem relevant here when it comes to morale:

- I think, on average, folks at MIRI are pretty pessimistic about humanity's chances of avoiding AI x-risk, and overall I think the situation has felt increasingly dire over the past few years to most of us.

- Nate and Eliezer lost hope in the research directions they were most optimistic about, and haven’t found any new angles of attack in the research space that they have much hope in.

- Nate and Eliezer very much wear their despair on their sleeve so to speak, and I think it’s been rough for an org like MIRI to have that much sleeve-despair coming from both its chief executive and founder.

During my time as COO over the last ~7 years, I’ve increasingly taken on more and more of the responsibility traditionally associated at most orgs with the senior leadership position. So when Nate says “This change is in large part an enshrinement of the status quo. Malo’s been doing a fine job running MIRI day-to-day for many many years […]” (emphasis mine), this is what he’s pointing at. However, he was definitely still the one in charge, and therefore had a significant impact on the org’s internal culture, narrative, etc.

While he has many strengths, I think I’m stronger in (and better suited to) some management and people leadership stuff. As such, I’m hopeful that in the senior leadership position (where I’ll be much more directly responsible for steering our culture etc.), I’ll be able to “rally the troops” so to speak in a way that Nate didn’t have as much success with, especially in these dire times.

Replies from: thoth-hermes↑ comment by Thoth Hermes (thoth-hermes) · 2023-10-17T12:29:16.959Z · LW(p) · GW(p)

Unfortunately, I do not have a long response prepared to answer this (and perhaps it would be somewhat inappropriate at this time); however, I wanted to express the following:

They wear their despair on their sleeves [? · GW]? I am admittedly somewhat surprised by this.

Replies from: gretta-duleba↑ comment by Gretta Duleba (gretta-duleba) · 2023-10-17T14:17:37.902Z · LW(p) · GW(p)

"Wearing your [feelings] on your sleeve" is an English idiom meaning openly showing your emotions.

It is quite distinct from the idea of belief as attire from Eliezer's sequence post, in which he was suggesting that some people "wear" their (improper) beliefs to signal what team they are on.

Nate and Eliezer openly show their despair about humanity's odds in the face of AI x-risk, not as a way of signaling what team they're on, but because despair reflects their true beliefs.

Replies from: thoth-hermes↑ comment by Thoth Hermes (thoth-hermes) · 2023-10-18T14:50:04.309Z · LW(p) · GW(p)

I wonder about how much I want to keep pressing on this, but given that MIRI is refocusing towards comms strategy, I feel like you "can take it."

The Sequences don't make a strong case, that I'm aware of, that despair and hopelessness are very helpful emotions that drive motivations or our rational thought processes in the right direction, nor do they suggest that displaying things like that openly is good for organizational quality. Please correct me if I'm wrong about that. (However they... might. I'm working on why this position may have been influenced to some degree by the Sequences right now. That said, this is being done as a critical take.)

If despair needed to be expressed openly in order to actually make progress towards a goal, then we would call "bad morale" "good morale" and vice-versa.

I don't think this is very controversial, so it makes sense to ask why MIRI thinks they have special, unusual insight into why this strategy works so much better than the default "good morale is better for organizations."

I predict that ultimately the only response you could make - which you have already - is that despair is the most accurate reflection of the true state of affairs.

If we thought that emotionality was one-to-one with scientific facts, then perhaps.

Given that there actually currently exists a "Team Optimism," so to speak, that directly appeared as an opposition party to what it perceives as a "Team Despair", I don't think we can dismiss the possibility of "beliefs as attire" quite yet.

Replies from: Raemon↑ comment by Raemon · 2023-10-18T16:32:52.261Z · LW(p) · GW(p)

so to speak, that directly appeared as an opposition party to what it perceives as a "Team Despair",

I think this gets it backward. There were lots of optimistic people that kept not understanding or integrating the arguments that you should be less optimistic, and people kept kinda sliding off really thinking about it, and finally some people were like "okay, time to just actually be extremely blunt/clear until they get it."

(Seems plausible that then a polarity formed that made some people really react against the feeling of despair, but I think that was phase two)

↑ comment by Gretta Duleba (gretta-duleba) · 2023-10-13T22:30:27.629Z · LW(p) · GW(p)

2. Why do you see communications as being as decoupled (rather, either that it is inherently or that it should be) from research as you currently do?

The things we need to communicate about right now are nowhere near the research frontier.

One common question we get from reporters, for example, is "why can't we just unplug a dangerous AI?" The answer to this is not particularly deep and does not require a researcher or even a research background to engage on.

We've developed a list of the couple-dozen most common questions we are asked by the press and the general public and they're mostly roughly on par with that one.

There is a separate issue of doing better at communicating about our research; MIRI has historically not done very well there. Part of it is that we were/are keeping our work secret on purpose, and part of it is that communicating is hard. To whatever extent it's just about 'communicating is hard,' I would like to do better at the technical comms, but it is not my current highest priority.

↑ comment by Malo (malo) · 2023-10-13T23:09:06.231Z · LW(p) · GW(p)

Quickly chiming in to add that I can imagine there might be some research we could do that could be more instrumentally useful to comms/policy objectives. Unclear whether it makes sense for us to do anything like that, but it's something I'm tracking.