Embedded Interactive Predictions on LessWrong

post by Amandango · 2020-11-20T18:35:32.089Z · LW · GW · 88 comments


Ought and LessWrong are excited to launch an embedded interactive prediction feature. You can now embed binary questions into LessWrong posts and comments. Hover over the widget to see other people’s predictions, and click to add your own.

Try it out

How to use this

Create a question

- Go to elicit.org/binary and create your question by typing it into the field at the top

- Click on the question title, and click the copy button next to the title – it looks like this:

- Paste the URL into your LW post or comment. It'll look like this in the editor:

Troubleshooting: if the prediction box fails to appear and the link just shows up as text, go to your LW settings, uncheck “Activate Markdown Editor”, and try again.
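In the WYSIWYG editor, a pasted question link is expanded into a prediction widget. As a rough illustration of that recognition step, here is a minimal Python sketch that checks whether a URL looks like an Elicit binary question and extracts its ID. The URL pattern is an assumption inferred from links quoted in this thread (such as `https://forecast.elicit.org/binary/questions/el3utYd8Z`); it is not LessWrong's actual matching logic.

```python
import re
from typing import Optional

# Hypothetical pattern: inferred from question links quoted in this thread,
# e.g. https://forecast.elicit.org/binary/questions/el3utYd8Z. Elicit may
# accept other URL shapes that this does not cover.
ELICIT_QUESTION_URL = re.compile(
    r"^https?://(?:forecast\.)?elicit\.org/binary/questions/([A-Za-z0-9_-]+)/?$"
)


def elicit_question_id(url: str) -> Optional[str]:
    """Return the question ID if `url` looks like an Elicit binary question link, else None."""
    match = ELICIT_QUESTION_URL.match(url.strip())
    return match.group(1) if match else None


if __name__ == "__main__":
    for link in (
        "https://forecast.elicit.org/binary/questions/el3utYd8Z",
        "https://www.lesswrong.com/account",
    ):
        print(link, "->", elicit_question_id(link))
```

A link that doesn't match stays plain text, which mirrors the troubleshooting symptom described above.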

Make a prediction

- Click on the widget to add your own prediction

- Click on your prediction line again to delete it

Link your accounts

- Linking your LessWrong and Elicit accounts allows you to:

- Filter for and browse all your LessWrong predictions on Elicit

- Add notes to your LessWrong predictions on Elicit

- See your calibration for your LessWrong predictions on Elicit

- Predict on LessWrong questions in the Elicit app

- To link your accounts:

- Make an Elicit account

- Send me (amanda@ought.org) an email with your LessWrong username and your Elicit account email
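Elicit shows your calibration once accounts are linked, and the underlying idea is easy to sketch: group resolved forecasts into buckets and compare the average forecast in each bucket with how often those questions actually resolved yes. This is a minimal Python illustration, not Elicit's calibration code. It assumes forecasts are stored as whole percentages (1 to 99) paired with a yes/no resolution, and the 10-point bucket width is an arbitrary choice.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def calibration_table(
    forecasts: List[Tuple[int, bool]], bucket_size: int = 10
) -> Dict[int, Tuple[float, float, int]]:
    """Group resolved forecasts into percentage buckets.

    Each forecast is (probability in whole percent, resolved yes?).
    Returns {bucket_start: (mean forecast %, observed yes-rate %, count)}.
    """
    buckets: Dict[int, List[Tuple[int, bool]]] = defaultdict(list)
    for percent, outcome in forecasts:
        buckets[(percent // bucket_size) * bucket_size].append((percent, outcome))

    table = {}
    for start in sorted(buckets):
        items = buckets[start]
        mean_forecast = sum(p for p, _ in items) / len(items)
        yes_rate = 100 * sum(1 for _, yes in items if yes) / len(items)
        table[start] = (mean_forecast, yes_rate, len(items))
    return table


if __name__ == "__main__":
    # Made-up example data, not real LessWrong predictions.
    example = [(72, True), (75, False), (78, True), (15, False), (12, False)]
    for start, (mean_p, rate, n) in calibration_table(example).items():
        print(f"{start}-{start + 9}%: forecast {mean_p:.0f}%, resolved yes {rate:.0f}% (n={n})")
```

A well-calibrated forecaster's "forecast" and "resolved yes" columns should be close in every bucket with enough questions.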

Motivation

We hope embedded predictions can prompt readers and authors to:

- Actively engage with posts. By making predictions as they read, people have to stop and think periodically about how much they agree with the author.

- Distill claims. For writers, integrating predictions challenges them to think more concretely about their claims and how readers might disagree.

- Communicate uncertainty. Rather than just stating claims, writers can also communicate a confidence level.

- Collect predictions. As a reader, you can build up a personal database of predictions as you browse LessWrong.

- Get granular feedback. Writers can get feedback on their content at a more granular level than comments or upvotes.

By working with LessWrong on this, Ought hopes to make forecasting easier and more prevalent. As we learn more about how people think about the future, we can use Elicit to automate larger parts of the workflow and thought process until we end up with end-to-end automated reasoning that people endorse. Check out our blog post to see demos and more context.

Some examples of how to use this

- To make specific predictions, like in Zvi’s post on COVID predictions [LW · GW]

- To express credences on claims like those in Daniel Kokotajlo’s soft takeoff post [LW · GW]

- Beyond LessWrong – if you want to integrate this into your blog or have other ideas for places you’d want to use this, let us know!

88 comments

Comments sorted by top scores.

comment by Raemon · 2020-11-20T23:19:02.235Z · LW(p) · GW(p)

Something that seems fairly important is the ability to mark your own answer before seeing the others, to avoid anchoring. (I don't know that everyone should be forced to do this but it seems useful to at least have the option. I noticed myself getting heavily anchored by some of the current question's existing answers)

↑ comment by Davidmanheim · 2020-11-22T20:40:43.216Z · LW(p) · GW(p)

Strong +1 to this suggestion, at least as an option that people can set.

↑ comment by habryka (habryka4) · 2020-11-22T20:54:07.151Z · LW(p) · GW(p)

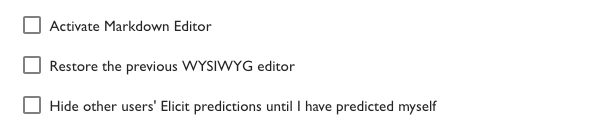

You actually can since last evening! In your user settings you should have:

↑ comment by adamShimi · 2020-11-22T23:05:22.395Z · LW(p) · GW(p)

Weird, I don't see this option in my settings.

↑ comment by habryka (habryka4) · 2020-11-23T00:00:26.752Z · LW(p) · GW(p)

Sorry, my bad. Accidentally made the option admin-only. Will be fixed within the next two hours.

comment by habryka (habryka4) · 2020-11-21T03:02:59.098Z · LW(p) · GW(p)

Another feature that we also launched at the same time as this: Metaculus embeds!

Just copy paste any link to a Metaculus question into the editor, and it will automatically expand into the preview above (you can always undo the transformation with CTRL+Z).

↑ comment by Said Achmiz (SaidAchmiz) · 2020-11-22T10:48:07.694Z · LW(p) · GW(p)

GreaterWrong will now display Metaculus embeds created via the LessWrong editor.

(You can’t create Metaculus embeds via GreaterWrong… yet.)

comment by DanielFilan · 2020-11-21T05:50:19.190Z · LW(p) · GW(p)

I wish I could have my user settings so that I didn't see everybody else's predictions before making my own.

↑ comment by habryka (habryka4) · 2020-11-21T06:52:08.414Z · LW(p) · GW(p)

Jim actually built that setting this afternoon! My guess is we will probably merge it sometime tomorrow, as long as we don't run into any more problems.

comment by Amandango · 2020-12-01T03:39:42.750Z · LW(p) · GW(p)

An update: We've set up a way to link your LessWrong account to your Elicit account. By default, all your LessWrong predictions will show up in Elicit's binary database but you can't add notes or filter for your predictions.

If you link your accounts, you can:

* Filter for and browse your LessWrong predictions on Elicit (you'll be able to see them by filtering for 'My predictions')

* See your calibration for LessWrong predictions you've made that have resolved

* Add notes to your LessWrong predictions on Elicit

* Predict on LessWrong questions in the Elicit app

If you want us to link your accounts, send me an email (amanda@ought.org) with your LessWrong username and your Elicit account email!

comment by Adam Zerner (adamzerner) · 2020-11-20T21:07:54.068Z · LW(p) · GW(p)

This is awesome, congrats!

I predict that having to go to elicit.org/binary and paste the URL here will be a major barrier to usage, and that a button or prompt in the LW text editor would significantly reduce that barrier. People have to a) remember that this is an option available to them, b) remember or be able to look up the URL they need to go to (probably by visiting this LW post), and c) be motivated enough to continue. That said, I also think it makes sense to have it work the way it currently does as a version one.

Also, I'm curious to know how difficult it is to get this integrated on other websites?

↑ comment by habryka (habryka4) · 2020-11-21T03:00:35.819Z · LW(p) · GW(p)

Yep, if people like this and use it a bunch, I expect we would add a button to the toolbar somewhere.

↑ comment by Pablo (Pablo_Stafforini) · 2020-11-21T12:19:11.496Z · LW(p) · GW(p)

↑ comment by Bird Concept (jacobjacob) · 2020-11-22T03:14:29.168Z · LW(p) · GW(p)

Community once again seems too optimistic, prior is just very heavily that most possible features never ship.

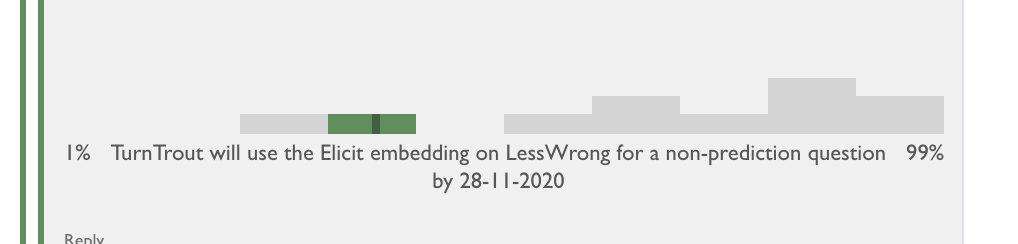

↑ comment by TurnTrout · 2020-11-22T04:37:23.552Z · LW(p) · GW(p)

jacobjacob once again [LW(p) · GW(p)] seems too pessimistic, posterior is very heavily that when habryka makes a 60% yes prediction for a decision he has (partial) control over about a functionality which the community has glommed onto thus far, the community is also justified in expressing ~60% belief that the feature ships. :)

Also, we aren't selecting from "most possible features"!

↑ comment by Bird Concept (jacobjacob) · 2021-12-06T05:03:24.742Z · LW(p) · GW(p)

This has now resolved false.

↑ comment by DanielFilan · 2020-11-23T08:40:04.595Z · LW(p) · GW(p)

It seems important to me that he is in the top 100 on the Metaculus leaderboard and you are not.

↑ comment by ChristianKl · 2020-11-23T08:55:26.138Z · LW(p) · GW(p)

As a fellow member of the top 100 Metaculus leaderboard I unfortunately have to tell you that it doesn't measure how well someone is calibrated. You get into it if you do a lot of predictions on Metaculus.

↑ comment by Bird Concept (jacobjacob) · 2020-11-23T20:10:24.036Z · LW(p) · GW(p)

I think this is only partly right. I've personally interfaced with most of the people on the top 20 -- some of them for 20+ hours; and I've generally been deeply impressed with them; and expect the tails of the Metaculus rankings to track important stuff about people's cognition.

But yeah, I also found that I could get to like 70 by just predicting the same as the community a bunch, and finding questions that would resolve in just a few days and extremizing really hard.

(That said, I still think I'm a reasonable forecaster, and have practiced it a lot independently. But don't think this Metaculus ranking is much evidence of that.)

↑ comment by ChristianKl · 2020-11-23T21:08:11.711Z · LW(p) · GW(p)

You get Metaculus points by being active on Metaculus. People who spend a lot of time on Metaculus have thought a lot about making predictions, and that's valuable, but it doesn't make the points a measure of calibration.

With the exception of jimrandomh, all the people in the top 20 have more than 1,000 predictions (jimrandomh has 800). I'm rank 87 at the moment with 195 predictions.

If you go on GJOpen you can see the calibration of any user and use it to judge how well they are calibrated. Metaculus doesn't make that information publicly available (you need to be level 6 and pay Tachyons to access another user's track record, and 50 Tachyons feels too expensive to spend just for the sake of a discussion like this).
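The distinction between activity and accuracy can be made concrete with a proper scoring rule. The sketch below uses the Brier score, one standard accuracy measure that reflects calibration among other things; it is not a claim about how Metaculus or GJOpen compute their own scores. Because it is an average over questions, making more forecasts of the same quality leaves it unchanged, whereas a total that accumulates with every prediction keeps growing.

```python
from typing import List, Tuple


def mean_brier(forecasts: List[Tuple[float, bool]]) -> float:
    """Average squared error between forecast probability and outcome (0 is perfect, lower is better)."""
    return sum((p - (1.0 if yes else 0.0)) ** 2 for p, yes in forecasts) / len(forecasts)


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    careful = [(0.9, True), (0.2, False), (0.7, True)]
    prolific = careful * 100  # same quality of forecasts, 100x the volume
    print(f"careful:  {mean_brier(careful):.3f} over {len(careful)} forecasts")
    print(f"prolific: {mean_brier(prolific):.3f} over {len(prolific)} forecasts")
```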

↑ comment by TurnTrout · 2020-11-23T15:31:47.258Z · LW(p) · GW(p)

Argument screens off authority, and I'm interested in hearing arguments. While that information should be incorporated into your prior, I don't see why it's worth mentioning as a counterargument. (To be sure, I'm not claiming that jacobjacob isn't a good predictor in general.)

↑ comment by DanielFilan · 2020-11-23T19:32:56.513Z · LW(p) · GW(p)

It's reasonable to be unsure whether the "people don't ship things" consideration is stronger than the "people are excited" consideration. If you knew that the person who deployed the "people don't ship things" consideration was generally a better predictor (which you don't quite here, but let's simplify a bit), then that would suggest that the "people don't ship things" consideration is in fact stronger.

↑ comment by Bird Concept (jacobjacob) · 2020-11-23T20:13:43.986Z · LW(p) · GW(p)

(Actually downvoted Daniel for reasons similar to what TurnTrout mentions. Aumannian updating is so boring, even though it's profitable when you're betting all-things-considered... I also did give arguments above, but people mostly made jokes about my punctuation! #grumpy )

↑ comment by DanielFilan · 2020-11-24T00:03:44.813Z · LW(p) · GW(p)

Aumann updating involves trying to inhabit the inside perspective of somebody else and guess what they saw that made them believe what they do - hardly seems boring to me! Also the thing I was doing was ranking my friends at skills, which I think is one of the classic interesting things.

↑ comment by Bird Concept (jacobjacob) · 2020-11-24T06:52:39.875Z · LW(p) · GW(p)

I'm associating it with doing exactly not that. Just using outside variables like "what do they believe" and "how generally competent do I expect them to be". (I often see people going "but this great forecaster said 70%" and updating marginally closer, without even trying to build a model of that forecaster's inside view.)

Your version sounds fun.

↑ comment by DanielFilan · 2020-11-24T17:16:58.670Z · LW(p) · GW(p)

I guess I'm really making a bid for 'Aumanning' to refer to the thing that Aumann's agreement theorem describes, rather than just partially deferring to somebody else.

↑ comment by Bird Concept (jacobjacob) · 2020-11-23T05:30:52.981Z · LW(p) · GW(p)

Not a crux :)

Also, I'm betting on the prior, so I'll have to accept taking a loss every now and then, expecting to do better on average in the long run.

↑ comment by Bird Concept (jacobjacob) · 2020-11-23T07:21:38.022Z · LW(p) · GW(p)

To clarify: it's not a lot of evidence that people say "yeah this thing is going to be great and we'll work on it a lot", in response to the very post where it was announced, only a few days afterward.

Like, I'd happily go to a bitcoin/Tesla party and take lots of bets against bitcoin/Tesla. I expect those places would give me some of the most generous odds for those bets.

Also it's some evidence habryka predicted it, but man, people in general are notoriously bad at predicting how much they'll get done with their time, so it's certainly not super strong.

(That being said, I think this integration is awesome and kudos to everyone. Just keeping my priors sensible :)

↑ comment by Ben Pace (Benito) · 2020-11-23T07:30:57.431Z · LW(p) · GW(p)

(That being said, I think this integration is awesome and kudos to everyone. Just keeping my priors sensible :)

I do not endorse this as a way to end parentheticals! Grrr!

↑ comment by Said Achmiz (SaidAchmiz) · 2020-11-23T20:04:02.660Z · LW(p) · GW(p)

You must understand—we have to ration our usage of parentheses, lest our strategic reserve again fails us in time of need…

↑ comment by Adam Zerner (adamzerner) · 2020-11-23T02:47:15.038Z · LW(p) · GW(p)

I think this question needs clarification. It's one thing to have a button that basically links to Ought (perhaps with some text explaining how it works, or perhaps with a specific Ought landing page for LW that explains it). It's another thing for the experience to be self-contained inside of LW, e.g. where you wouldn't have to leave lesswrong.com and go to ought.org.

I'd say 90% likelihood for the former since it is simple and I sense that the LW team would judge it to be worth it, but maybe 20% for the latter since a) it is complex, b) I sense that there are other high priority things they'd like to get to, and c) I worry that usage of this feature will fizzle out due to my reasoning in the parent comment, and with usage fizzling out I expect that it'd fall down the LW team's priority list.

comment by TurnTrout · 2020-11-20T20:43:42.361Z · LW(p) · GW(p)

This looks so good! Great work by both Ought and the LW mods.

Perhaps this can also gauge approval (from 0% (I hate it) to 100% (I love it)), or even function as a poll (first decile: research agenda 1 seems most promising; second decile: research area 2...)... or Ought we not do things like that?

↑ comment by jungofthewon · 2020-11-20T21:51:06.686Z · LW(p) · GW(p)

try it and let's see what happens!

↑ comment by TurnTrout · 2020-11-21T19:37:18.403Z · LW(p) · GW(p)

This question resolves yes. [LW · GW]

↑ comment by Ben Pace (Benito) · 2020-11-21T19:43:49.016Z · LW(p) · GW(p)

woop woop making predictions in posts is the way to go

↑ comment by Bird Concept (jacobjacob) · 2020-11-21T02:28:44.910Z · LW(p) · GW(p)

Your prior should be that "people don't do stuff", community looking way too optimistic at this time

↑ comment by Ben Pace (Benito) · 2020-11-21T03:11:08.395Z · LW(p) · GW(p)

I think TurnTrout is a pretty good example [? · GW] of someone who did stuff when anyone could but nobody did :)

↑ comment by Amandango · 2020-11-20T20:57:04.580Z · LW(p) · GW(p)

Thanks!! It's primarily intended for prediction, but I feel excited about people experimenting with different ways of using this and seeing which are most useful & how they change discussions, so am interested to see what happens if you use it for other purposes too.

comment by jungofthewon · 2020-11-20T20:42:55.229Z · LW(p) · GW(p)

this is too much fun to click on

comment by Adam Zerner (adamzerner) · 2021-12-16T03:04:58.521Z · LW(p) · GW(p)

I liked this post a lot. In general, I think that the rationalist project should focus a lot more on "doing things" than on writing things. Producing tools like this is a great example of "doing things". Other examples include starting meetups and group houses.

So, I liked this post a) for being an example of "doing things", but also b) for being what I consider to be a good example of "doing things". Consider that quote from Paul Graham about "live in the future and build what's missing". To me, this has gotta be a tool that exists in the future, and I appreciate the effort to make it happen.

Unfortunately, as I write this on 12/15/21, https://elicit.org/binary is down. That makes me sad. It doesn't mean the people who worked on it did a bad job though. The analogy of a phase change in chemistry comes to mind.

If you are trying to melt an ice cube and you move the temperature from 10℉ to 31℉, you were really close, but you ultimately came up empty handed. But you can't just look at the fact that the ice cube is still solid and judge progress that way. I say that you need to look more closely at the change in temperature. I'm not sure how much movement in temperature happened here, but I don't think it was trivial.

As for how it could have been better, I think it would have really helped to have lots and lots of examples. I'm a big fan of examples, sorta along the lines of what the specificity sequence [LW · GW] talks about. I'm talking dozens and dozens of examples. I think that helps people grok how useful this can be and when they might want to use it. As I've mentioned elsewhere though, coming up with examples is weirdly difficult.

As for followup work, I don't know what the Elicit team did and I don't want to be presumptuous, but I don't recall any followup posts on LessWrong or iteration. Perhaps something like that would have led to changes that caused more adoption. I still stand by my old comments about there needing to be 1) [LW(p) · GW(p)] a way to embed the prediction directly from the LessWrong text editor, and 2) [LW(p) · GW(p)] things like a feed of recent predictions.

comment by Multicore (KaynanK) · 2020-11-20T20:10:31.194Z · LW(p) · GW(p)

This was a neat feature on Arbital, nice to see it here as well.

comment by cozy · 2020-11-24T20:54:24.916Z · LW(p) · GW(p)

How does this differ from PredictionBook besides being a much more pleasing interface and actually used for reasonable things (and also the nice embedding)?

Oh, I guess I just explained how.

Really nice site I like it.

comment by Adam Zerner (adamzerner) · 2020-11-23T02:50:46.467Z · LW(p) · GW(p)

I notice myself checking back on this post because I want to submit more predictions. I think it'd be cool if there was a feed of predictions similar to the Recent Discussion section on the LW home page.

comment by abramdemski · 2020-11-23T22:15:05.046Z · LW(p) · GW(p)

I'm curious what this means for Ought. Is Ought planning on doing anything with the data, and if so, what are the current speculations about that? How will Elicit evolve if the primary user base is LessWrong?

I looked at some of the stuff about Elicit on the Ought website, but I don't see how LessWrong embeds obviously helps develop this vision.

(To be clear, I'm not complaining -- if Elicit integration is good for LW with no direct benefit for Ought that's not a problem! But I'm guessing Ought was motivated to work with LW on this due to some perceived benefit.)

↑ comment by habryka (habryka4) · 2020-11-24T00:06:17.284Z · LW(p) · GW(p)

Have you read https://ought.org/updates/2020-11-09-forecasting? The content of that post felt more directly relevant to this integration.

↑ comment by abramdemski · 2020-11-24T17:23:55.783Z · LW(p) · GW(p)

That is indeed what I read. A quote:

In our vision, Elicit learns by imitating the thoughts and reasoning steps users share in the tool. It also gets direct feedback from users on its suggestions.

It just seems like LW piggybacks on Elicit without revealing to Elicit any of the more complex stuff that goes into predictions. Elicit wants to get data about (as I understand it) probabilistic argument-mapping. Instead, it's just getting point probabilities for questions. That doesn't seem very useful to me.

↑ comment by jungofthewon · 2020-11-25T23:58:35.582Z · LW(p) · GW(p)

Lots of uncertainty but a few ways this can connect to the long-term vision laid out in the blog post:

- We want to be useful for making forecasts broadly. If people want to make predictions on LW, we want to support that. We specifically want some people to make lots of predictions so that other people can reuse the predictions we house to answer new questions. The LW integration generates lots of predictions and funnels them into Elicit. It can also teach us how to make predicting easier in ways that might generalize beyond LW.

- It's unclear how exactly the LW community will use this integration but if they use it to decompose arguments or operationalize complex concepts, we can start to associate reasoning or argumentative context with predictions. It would be very cool if, given some paragraph of a LW post, we could predict what forecast should be embedded next, or how a certain claim should be operationalized into a prediction. Continuing the takeoffs debate [LW · GW] and Non-Obstruction: A Simple Concept Motivating Corrigibility [LW · GW] start to point at this.

- There are versions of this integration that could involve richer commenting in the LW editor.

- Mostly it was a quick experiment that both teams were pretty excited about :)

↑ comment by abramdemski · 2020-11-26T02:08:32.752Z · LW(p) · GW(p)

Ah, a lot of this makes sense!

So you're from Ought?

We specifically want some people to make lots of predictions so that other people can reuse the predictions we house to answer new questions.

Yep, OK, this makes sense to me.

It's unclear how exactly the LW community will use this integration but if they use it to decompose arguments or operationalize complex concepts, we can start to associate reasoning or argumentative context with predictions. It would be very cool if, given some paragraph of a LW post, we could predict what forecast should be embedded next, or how a certain claim should be operationalized into a prediction.

Right, OK, this makes sense to me as well, although it's certainly more speculative.

When Elicit has nice argument mapping (it doesn't yet, right?) it might be pretty cool and useful (to both LW and Ought) if that could be used on LW as well. For example, someone could make an argument in a post, and then have an Elicit map (involving several questions linked together) where LW users could reveal what they think of the premises, the conclusion, and the connection between them.

↑ comment by jungofthewon · 2020-12-02T15:17:57.585Z · LW(p) · GW(p)

When Elicit has nice argument mapping (it doesn't yet, right?) it might be pretty cool and useful (to both LW and Ought) if that could be used on LW as well. For example, someone could make an argument in a post, and then have an Elicit map (involving several questions linked together) where LW users could reveal what they think of the premises, the conclusion, and the connection between them.

Yes that is very aligned with the type of things we're interested in!!

comment by Bucky · 2020-11-21T20:16:31.551Z · LW(p) · GW(p)

Do predictions resolve? (I guess just by the question author)

↑ comment by habryka (habryka4) · 2020-11-21T21:56:05.330Z · LW(p) · GW(p)

Elicit has some ways of resolving questions, but LW doesn't currently display that in any way. Though we will probably add that functionality if people like the feature.

comment by Said Achmiz (SaidAchmiz) · 2020-11-21T06:12:29.078Z · LW(p) · GW(p)

Cool feature!

Is there any info on implementing Elicit embeds on other sites? (Like, say, GreaterWrong? :) I looked on elicit.org and didn’t find anything.

EDIT: Same question re: Metaculus embeds…

↑ comment by habryka (habryka4) · 2020-11-21T21:42:20.937Z · LW(p) · GW(p)

Actually, sorry, I was totally wrong in my earlier comment. You very likely just want to use the LessWrong GraphQL endpoints that the LW frontend uses, which ensure that everything gets properly logged and routed through the LW DB. The basic setup for those is pretty simple (you can probably figure it out by inspecting the network tab). Here are the two GraphQL endpoints and the associated schemas:

```graphql
ElicitBlockData(questionId: String): ElicitBlockData # For querying

MakeElicitPrediction(questionId: String, prediction: Int): ElicitBlockData # For predicting

type ElicitBlockData {
  _id: String
  title: String
  notes: String
  resolvesBy: Date
  resolution: Boolean
  predictions: [ElicitPrediction]
}

type ElicitPrediction {
  _id: String
  predictionId: String
  prediction: Float
  createdAt: Date
  notes: String
  creator: ElicitUser
  sourceUrl: String
  sourceId: String
  binaryQuestionId: String
}

type ElicitUser {
  isQuestionCreator: Boolean
  displayName: String
  _id: String
  sourceUserId: String
  lwUser: User
}
```

The only slightly non-obvious thing in the API is that you can cancel your predictions by passing in null or 0 for the prediction value.
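For anyone scripting against these fields rather than building a full widget, here is a minimal Python sketch that builds a `MakeElicitPrediction` request with only the standard library. The endpoint URL `https://www.lesswrong.com/graphql` and the 1 to 99 range check (based on the widget's 1%-99% scale) are assumptions, and authentication is deliberately left out; treat it as a starting point to compare against what the network tab shows, not as documented API usage.

```python
import json
import urllib.request
from typing import Optional

# Assumption: the GraphQL endpoint the LessWrong frontend posts to.
# Confirm in your browser's network tab before relying on it.
LW_GRAPHQL_URL = "https://www.lesswrong.com/graphql"

MAKE_PREDICTION = """
mutation ElicitPrediction($questionId: String, $prediction: Int) {
  MakeElicitPrediction(questionId: $questionId, prediction: $prediction) {
    _id
    title
    predictions { prediction creator { displayName } }
  }
}
"""


def build_prediction_request(question_id: str, prediction: Optional[int]) -> urllib.request.Request:
    """Build (but do not send) a POST for MakeElicitPrediction.

    Pass None (or 0) as `prediction` to cancel an existing prediction.
    """
    # Assumed valid range: whole percentages 1-99; None/0 mean "cancel".
    if prediction not in (None, 0) and not 1 <= prediction <= 99:
        raise ValueError("prediction must be a whole percentage from 1 to 99")
    body = json.dumps({
        "query": MAKE_PREDICTION,
        "variables": {"questionId": question_id, "prediction": prediction},
    }).encode("utf-8")
    # Not handled here: a real request needs the logged-in user's session credentials.
    return urllib.request.Request(
        LW_GRAPHQL_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_prediction_request("EhJt1xwVh", 91)
    print(req.get_method(), req.full_url)
    print(json.loads(req.data)["variables"])
    # Sending it would be: urllib.request.urlopen(req)
```

Building the request separately from sending it makes the payload easy to inspect before any real prediction is made.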

Here is an example mutation:

```graphql
mutation ElicitPrediction($questionId: String, $prediction: Int) {
  MakeElicitPrediction(questionId: $questionId, prediction: $prediction) {
    _id
    title
    notes
    resolvesBy
    resolution
    predictions {
      _id
      predictionId
      prediction
      createdAt
      notes
      sourceUrl
      sourceId
      binaryQuestionId
      creator {
        _id
        displayName
        sourceUserId
        lwUser {
          _id
          displayName
          __typename
        }
        __typename
      }
      __typename
    }
    __typename
  }
}
```

Query variables:

```json
{
  "questionId": "EhJt1xwVh",
  "prediction": 91
}
```

↑ comment by clone of saturn · 2020-12-03T02:16:34.140Z · LW(p) · GW(p)

Is it possible to insert a question using the markdown editor, or does it require using the new editor?

↑ comment by habryka (habryka4) · 2020-12-03T03:21:52.987Z · LW(p) · GW(p)

Currently requires the WYSIWYG editor. Sorry about that.

↑ comment by habryka (habryka4) · 2020-11-21T06:50:08.006Z · LW(p) · GW(p)

Re Elicit: Ought sent us API documentation that should allow GW to build their own widget (if you do so, I'm looking forward to your implementation! I have in the past found a lot of value in seeing how GW tackles similar design challenges). I won't paste a link here, since I am not fully confident it's public, but Amanda or anyone else from Ought can paste the public version.

Re Metaculus: Yes, in that case it's just a nice iframe that you should easily be able to reverse engineer. You just render an iframe with this URL: https://d3s0w6fek99l5b.cloudfront.net/s/1/questions/embed/<question number>/?plot=pdf and I think everything should work (with the question number being the obvious number extracted from the normal Metaculus question URL). My guess is actually that if you just render the HTML you get from LessWrong, everything should work out of the box, though it might require some additional styling and modification to work with GW; the basic skeleton should work.
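Following that description, here is a minimal Python sketch of turning a Metaculus question URL into the iframe. The embed URL template is the one given above; the shape of Metaculus question URLs, the example question number, and the iframe sizing are assumptions, and GreaterWrong's actual implementation may look quite different.

```python
import html
import re
from typing import Optional

# Embed URL template quoted above; {number} is the Metaculus question number.
EMBED_TEMPLATE = "https://d3s0w6fek99l5b.cloudfront.net/s/1/questions/embed/{number}/?plot=pdf"

# Assumption: question pages look like https://www.metaculus.com/questions/<number>/<slug>/
QUESTION_URL = re.compile(r"^https?://(?:www\.)?metaculus\.com/questions/(\d+)(?:/|$)")


def metaculus_iframe(question_url: str, height: int = 300) -> Optional[str]:
    """Return iframe HTML for a Metaculus question URL, or None if the URL doesn't match."""
    match = QUESTION_URL.match(question_url.strip())
    if match is None:
        return None
    src = EMBED_TEMPLATE.format(number=match.group(1))
    # Height and styling are arbitrary choices for this sketch.
    return f'<iframe src="{html.escape(src)}" width="100%" height="{height}" style="border: 0"></iframe>'


if __name__ == "__main__":
    # Hypothetical question number, for illustration only.
    print(metaculus_iframe("https://www.metaculus.com/questions/1234/example-question/"))
```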

comment by Bird Concept (jacobjacob) · 2020-11-20T19:13:09.169Z · LW(p) · GW(p)

So cool, congrats on shipping!

comment by Liron · 2020-11-21T14:05:26.302Z · LW(p) · GW(p)

This feature seems to be making the page wider and allowing horizontal scrolling on my mobile (iPhone) which degrades the post reading experience. I would prefer if the interface got shrunk down to fit the phone’s width.

↑ comment by habryka (habryka4) · 2020-11-21T18:19:29.987Z · LW(p) · GW(p)

Yep, we also noticed that. Will be fixed sometime in the next few hours.

comment by Bird Concept (jacobjacob) · 2020-11-20T19:40:16.958Z · LW(p) · GW(p)

comment by Lukas Finnveden (Lanrian) · 2020-11-21T09:02:15.588Z · LW(p) · GW(p)

Very cool, looking forward to using this!

How does this work with the Alignment Forum? It would be amazing if AFers' predictions were tracked on AF, and all LWers' predictions were tracked in the LW mirror.

comment by ChristianKl · 2020-11-24T17:27:37.130Z · LW(p) · GW(p)

Calling a feature that collects a numeric value between 1% and 99% a "prediction" suggests it shouldn't be used for asking questions that aren't predictions. Is that a conscious choice?

comment by riceissa · 2020-11-23T00:33:49.642Z · LW(p) · GW(p)

Is there a way to see all the users who predicted within a single "bucket" using the LW UI? Right now when I hover over a bucket, it will show all users if the number of users is small enough, but it will show a small number of users followed by "..." if the number of users is too large. I'd like to be able to see all the users. (I know I can find the corresponding prediction on the Elicit website, but this is cumbersome.)
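Listing every user in a bucket is a simple grouping step. A minimal Python sketch, assuming predictions arrive as (display name, whole percentage) pairs and that buckets are 10 percentage points wide; the widget's actual bucket width is not stated in this thread, and the names below are made up.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def users_by_bucket(predictions: List[Tuple[str, int]], bucket_size: int = 10) -> Dict[int, List[str]]:
    """Map each bucket's starting percentage to the full, sorted list of users in it."""
    buckets: Dict[int, List[str]] = defaultdict(list)
    for display_name, percent in predictions:
        buckets[(percent // bucket_size) * bucket_size].append(display_name)
    return {start: sorted(names) for start, names in sorted(buckets.items())}


if __name__ == "__main__":
    # Made-up display names and values.
    sample = [("alice", 72), ("bob", 75), ("carol", 15), ("dave", 79)]
    for start, names in users_by_bucket(sample).items():
        print(f"{start}-{start + 9}%: {', '.join(names)}")
```

Unlike a truncated hover tooltip, this returns every name, so a UI could show the full list on click or in an expandable panel.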

↑ comment by habryka (habryka4) · 2020-11-23T04:16:18.616Z · LW(p) · GW(p)

Alas, not currently. It's the very next obvious thing to do, but I haven't gotten around to it.

comment by Measure · 2020-11-20T22:58:16.766Z · LW(p) · GW(p)

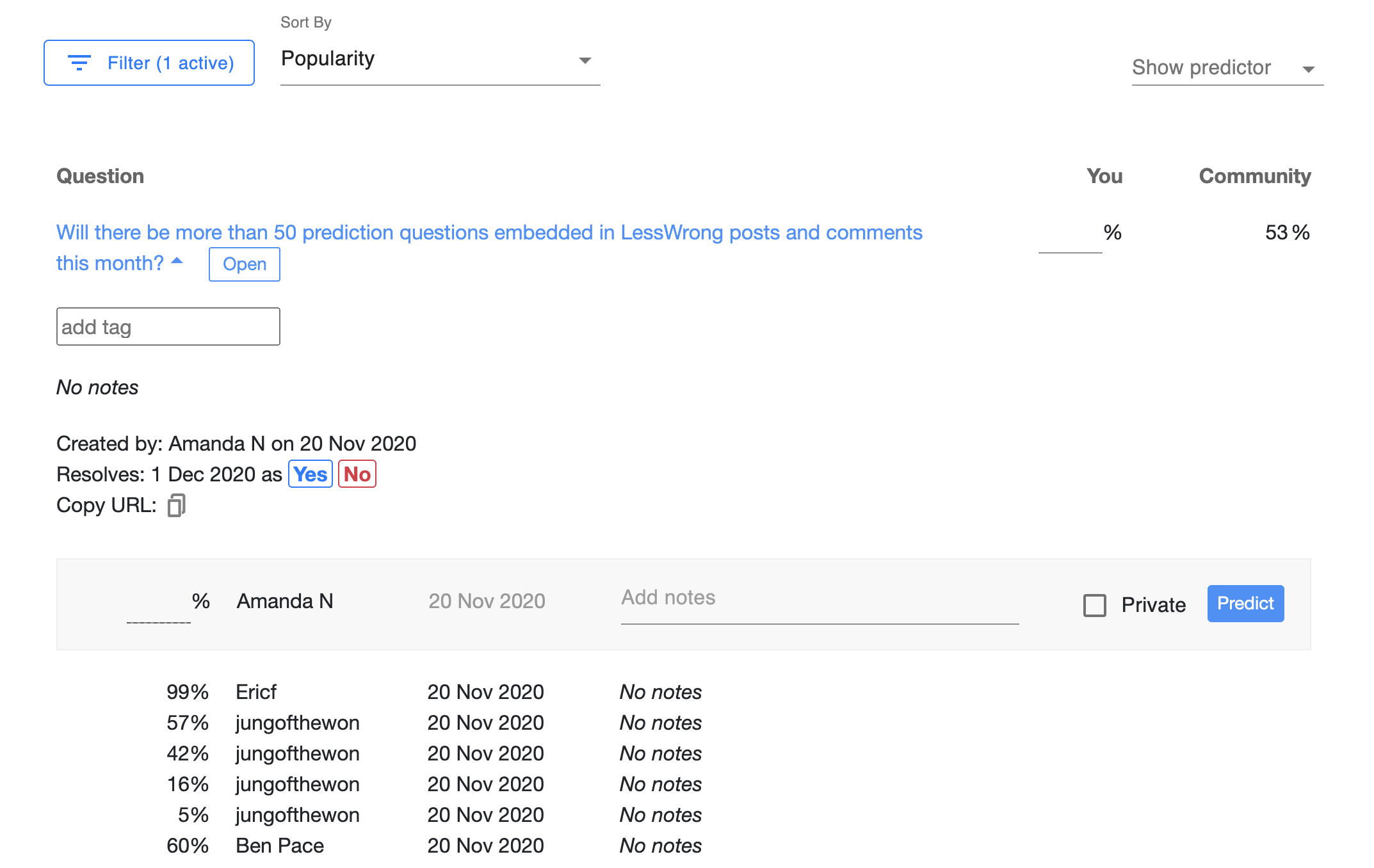

It looks like people can change their predictions after they initially submit them. Is this history recorded somewhere, or just the current distribution?

Is there an option to have people "lock in" their answer? (Maybe they can still edit/delete for a short time after they submit or before a cutoff date/time)

Is there a way to see in one place all the predictions I've submitted an answer to?

↑ comment by elifland · 2020-11-20T23:32:44.673Z · LW(p) · GW(p)

It looks like people can change their predictions after they initially submit them. Is this history recorded somewhere, or just the current distribution?

We do store the history. You can view it by going to https://elicit.org/binary, then searching for the question, e.g. https://elicit.org/binary?binaryQuestions.search=Will%20there%20be%20more%20than%2050. Note, though, that as Oli mentioned, we currently only display predictions that haven't been withdrawn.
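The search link is just the question title percent-encoded into the `binaryQuestions.search` query parameter. A small Python sketch that reproduces it; the parameter name is taken from the example link, and whether Elicit also accepts other encodings (such as `+` for spaces) is not something this thread establishes.

```python
from urllib.parse import quote, urlencode

ELICIT_BINARY = "https://elicit.org/binary"


def elicit_search_url(title_fragment: str) -> str:
    """Build an Elicit search URL for questions whose title contains `title_fragment`."""
    # quote (rather than urlencode's default quote_plus) encodes spaces as %20,
    # matching the example link above.
    query = urlencode({"binaryQuestions.search": title_fragment}, quote_via=quote)
    return f"{ELICIT_BINARY}?{query}"


if __name__ == "__main__":
    print(elicit_search_url("Will there be more than 50"))
```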

Is there an option to have people "lock in" their answer? (Maybe they can still edit/delete for a short time after they submit or before a cutoff date/time)

Not planning on supporting this on our end in the near future, but could be a cool feature down the line.

Is there a way to see in one place all the predictions I've submitted an answer to?

As of right now, not if you make the predictions via LW. You can view questions that you've submitted a prediction on via Elicit at https://elicit.org/binary?binaryQuestions.hasPredicted=true if you're logged in, and we're working on allowing for account linking so your LW predictions would show up in the same place.

The first version of account linking will be contacting someone at Ought then us manually running a script.

Edit: the first version of account linking is ready, email elifland@ought.org with your LW username and Elicit email and I can link them.

↑ comment by habryka (habryka4) · 2020-11-20T23:22:09.459Z · LW(p) · GW(p)

I am pretty sure Ought is keeping track of all of your predictions. But I don't think there (yet) exists UI for seeing them, and also, we don't yet have a way of connecting your LW account with your elicit account, which would be necessary to allow you to see the history of predictions you made on LW. The Elicit API currently only exposes uncancelled predictions you've made.

↑ comment by Amandango · 2020-11-20T23:25:54.763Z · LW(p) · GW(p)

You can search for the question on elicit.org/binary and see the history of all predictions made! E.g., if you copy the question title in this post and search by clicking Filter, then pasting the title into "Question title contains," you can find the question here.

↑ comment by jmh · 2020-11-21T17:10:19.669Z · LW(p) · GW(p)

Is it just me or do all those prediction assessments from jungofthewon point to a rather undesirable feature of the tool?

↑ comment by jungofthewon · 2020-11-21T18:57:18.239Z · LW(p) · GW(p)

you mean because my predictions are noisy and you don't want to see them in that list?

↑ comment by jmh · 2020-11-22T01:28:23.560Z · LW(p) · GW(p)

Unless I'm off base, it looks like you made four different predictions on the same question. That seems odd to me. I would expect one prediction per person, so that later predictions update the earlier one rather than adding a new value alongside the prior ones. As it is, it looks like you hold all four positions simultaneously. Also, if they are all considered current predictions, that might skew the average.

But maybe I am just not getting something about what's going on. Have not really looked beyond the LW post and comments.

↑ comment by jungofthewon · 2020-11-22T14:02:40.172Z · LW(p) · GW(p)

I see what you're saying. This feature is designed to support tracking changes in predictions primarily over longer periods of time e.g. for forecasts with years between creation and resolution. (You can even download a csv of the forecast data to run analyses on it.)

It can get a bit noisy, like in this case, so we can think about how to address that.
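One way to reconcile jmh's concern with keeping the full history: store every prediction, but compute the displayed aggregate from each user's latest one. A minimal Python sketch; the flattened record shape (`creator_id` instead of the nested `creator` object in the `ElicitPrediction` schema) is a simplification for illustration, and this is not how Elicit actually aggregates.

```python
from typing import Dict, List, TypedDict


class PredictionRecord(TypedDict):
    # Simplified subset of ElicitPrediction: creator flattened to an ID.
    creator_id: str
    created_at: str  # ISO 8601 timestamp, e.g. "2020-11-21T18:57:18.239Z"
    prediction: float


def latest_per_user(records: List[PredictionRecord]) -> Dict[str, float]:
    """Keep only each user's most recent prediction."""
    latest: Dict[str, PredictionRecord] = {}
    for rec in records:
        current = latest.get(rec["creator_id"])
        # ISO 8601 strings in the same format and timezone sort chronologically.
        if current is None or rec["created_at"] > current["created_at"]:
            latest[rec["creator_id"]] = rec
    return {user: rec["prediction"] for user, rec in latest.items()}


def current_average(records: List[PredictionRecord]) -> float:
    """Average over users, counting each user once (their latest prediction)."""
    values = list(latest_per_user(records).values())
    return sum(values) / len(values)


if __name__ == "__main__":
    # Made-up history: user "a" revised from 20 to 60.
    history: List[PredictionRecord] = [
        {"creator_id": "a", "created_at": "2020-11-21T10:00:00.000Z", "prediction": 20.0},
        {"creator_id": "a", "created_at": "2020-11-21T12:00:00.000Z", "prediction": 60.0},
        {"creator_id": "b", "created_at": "2020-11-21T11:00:00.000Z", "prediction": 80.0},
    ]
    naive = sum(r["prediction"] for r in history) / len(history)
    print(f"naive average over all records: {naive:.1f}")
    print(f"average of latest per user:     {current_average(history):.1f}")
```

The full history stays available for tracking how forecasts change over time, while the headline number counts each person once.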

comment by London L. (london-l) · 2022-03-30T04:17:35.327Z · LW(p) · GW(p)

Is it possible to hide the values of other predictors? I'm worried that seeing the values that others predict might influence future predictions in a way that's not helpful for feedback or accurate group predictions. (Especially since names are shown and humans tend to respect others' opinions.)

comment by Greg C (greg-colbourn) · 2022-03-01T19:37:07.231Z · LW(p) · GW(p)

Is it possible to have answers given in dates on https://forecast.elicit.org/binary, like it is for https://forecast.elicit.org/questions/LX1mQAQOO?

comment by WilliamKiely · 2022-01-05T22:03:45.494Z · LW(p) · GW(p)

Note that for embedding Elicit predictions to work you must have the "Activate Markdown Editor" setting **turned off** in your [account settings](https://www.lesswrong.com/account).

comment by WilliamKiely · 2021-12-23T18:52:44.870Z · LW(p) · GW(p)

I'm having trouble embedding forecasts. Does anyone know what I am doing wrong?

Elicit Prediction (https://forecast.elicit.org/binary/questions/el3utYd8Z)

↑ comment by WilliamKiely · 2021-12-24T04:26:07.037Z · LW(p) · GW(p)

Test:

Elicit Prediction (https://forecast.elicit.org/binary/questions/el3utYd8Z)