Framing Practicum: Dynamic Equilibrium

post by johnswentworth · 2021-08-16T18:52:00.632Z · LW · GW · 2 comments

This is a question post.

Contents

- What’s Dynamic Equilibrium?
- What To Look For
- Useful Questions To Ask
- The Challenge
- If you get stuck, look for:
- Motivation
- Answers
  - 7 AllAmericanBreakfast
  - 4 adamShimi
  - 4 Jemist
  - 3 Elliot Callender
  - 3 NaiveTortoise
  - 3 Thoroughly Typed
- 2 comments

This is a framing practicum post. We’ll talk about what dynamic equilibrium is, how to recognize dynamic equilibrium in the wild, and what questions to ask when you find it. Then, we’ll have a challenge to apply the idea.

Today’s challenge: come up with 3 examples of dynamic equilibrium which do not resemble any you’ve seen before. They don’t need to be good, they don’t need to be useful, they just need to be novel (to you).

Expected time: ~15-30 minutes at most, including the Bonus Exercise.

What’s Dynamic Equilibrium?

Our picture of stable equilibrium was a marble sitting at the bottom of a bowl. This is a static equilibrium: the system’s state doesn’t change.

Picture instead a box full of air molecules, all bouncing around all over the place. The system’s state constantly changes as the molecules move around. But if we look at the box at large scale, we don’t really care about the motions of individual molecules, we just care about the overall distribution of molecule positions and velocities - the number of molecules in the upper-left quadrant, for instance. And this distribution may be (approximately) constant, even though the individual molecules are bouncing around.

This is dynamic equilibrium: even though the states of the system’s components are constantly changing, the distribution of states of the system’s components has a stable equilibrium.
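The box-of-air picture can be sketched as a toy simulation. This is only an illustration under stated assumptions: the molecules are modeled as independent random walkers in a unit square (no collisions, no velocities), and the names `simulate_box`, `reflect`, and `in_upper_left`, plus all parameter values, are made up for this sketch. It tracks how many molecules sit in the upper-left quadrant and how many enter and leave at each step.

```python
import random


def reflect(v):
    """Bounce a coordinate back into the unit interval [0, 1]."""
    if v < 0.0:
        return -v
    if v > 1.0:
        return 2.0 - v
    return v


def in_upper_left(x, y):
    """True if the point lies in the upper-left quadrant of the unit box."""
    return x < 0.5 and y >= 0.5


def simulate_box(n_molecules=1000, n_steps=100, step_size=0.05, seed=0):
    """Random-walk toy model of a box of air (assumption: no collisions).

    Returns three lists, one entry per step: the number of molecules in the
    upper-left quadrant, the number that entered it, and the number that left.
    """
    rng = random.Random(seed)
    # Start with molecules spread uniformly, i.e. already near equilibrium.
    xs = [rng.random() for _ in range(n_molecules)]
    ys = [rng.random() for _ in range(n_molecules)]
    counts, entered, left = [], [], []
    for _ in range(n_steps):
        n_in = n_out = 0
        for i in range(n_molecules):
            was_inside = in_upper_left(xs[i], ys[i])
            # Every molecule moves on every step, reflecting off the walls.
            xs[i] = reflect(xs[i] + rng.uniform(-step_size, step_size))
            ys[i] = reflect(ys[i] + rng.uniform(-step_size, step_size))
            is_inside = in_upper_left(xs[i], ys[i])
            if is_inside and not was_inside:
                n_in += 1
            elif was_inside and not is_inside:
                n_out += 1
        counts.append(sum(in_upper_left(x, y) for x, y in zip(xs, ys)))
        entered.append(n_in)
        left.append(n_out)
    return counts, entered, left


if __name__ == "__main__":
    counts, entered, left = simulate_box()
    print("quadrant count, min/max:", min(counts), max(counts))
    print("total entered / left:", sum(entered), sum(left))
```

Every molecule's position changes at every step, yet the quadrant count should hover around a quarter of the total, with entries and exits roughly cancelling.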

The box-of-air example involves a distribution of similar physical parts (i.e. molecules), but we can also have a dynamic equilibrium with a Bayesian belief-distribution. For instance, I can think about which of my dishes I expect to be dirty tomorrow or next week or next month. I don’t run the dishwasher every day, so in the short term I expect dirty dishes to pile up - a non-equilibrium expectation. But in the long run, I generally expect the distribution of dirty dishes to be roughly steady - I don’t know exactly which days I’ll wash them, but my expectations for 100 days from now are basically the same as my expectations for 101 days from now.

The dirty dishes themselves may pile up and then be cleaned and then pile up again, never reaching an equilibrium state. But my expectations or forecasts about the dishes do reach an equilibrium.
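The dishes example can be sketched as a small Markov chain over "number of dirty dishes". The model here is an assumption made for illustration, not something stated in the post: each day one more dish gets dirty (capped at `max_dirty`), unless the dishwasher runs, which happens with probability `p_wash` and resets the count to zero. The names `step`, `forecast`, and `total_variation` are hypothetical helpers.

```python
def step(dist, p_wash=0.3, max_dirty=10):
    """Advance the forecast distribution over dirty-dish counts by one day."""
    new = [0.0] * (max_dirty + 1)
    for n, p in enumerate(dist):
        # With probability p_wash the dishwasher runs and everything is clean.
        new[0] += p * p_wash
        # Otherwise one more dish is dirty (capped at max_dirty).
        new[min(n + 1, max_dirty)] += p * (1.0 - p_wash)
    return new


def forecast(days, p_wash=0.3, max_dirty=10):
    """Forecast for `days` days ahead, starting from a known all-clean state."""
    dist = [1.0] + [0.0] * max_dirty
    for _ in range(days):
        dist = step(dist, p_wash, max_dirty)
    return dist


def total_variation(a, b):
    """Total variation distance between two distributions on the same states."""
    return 0.5 * sum(abs(x - y) for x, y in zip(a, b))


if __name__ == "__main__":
    # Short-term forecasts differ a lot from one day to the next...
    print("day 1 vs day 2:    ", total_variation(forecast(1), forecast(2)))
    # ...while far-future forecasts are essentially identical.
    print("day 100 vs day 101:", total_variation(forecast(100), forecast(101)))
```

Any single simulated history of dish counts would keep cycling, but the forecast distribution itself stops changing once we look far enough ahead.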

What To Look For

In general, dynamic equilibrium should spring to mind in two situations:

- A system has parts which are constantly changing/moving, but some aggregate summary of those parts (like a total count or a distribution) tends to return to approximately the same value.

- Our expectations or forecasts about a system’s state tend to return to approximately the same thing when we forecast farther into the future.

Useful Questions To Ask

If the number of air molecules in the upper-left quadrant of a box is roughly constant, then the rate at which molecules enter that quadrant must roughly equal the rate at which they leave. If the number of molecules in the quadrant is lower than usual, then molecules will enter faster than they leave until the number returns to equilibrium.

In general, dynamic equilibrium involves a balance: the rate at which parts enter some state is roughly equal to the rate at which they leave that state. Three key questions are:

- At what rate do parts enter each state?

- At what rate do parts leave each state?

- What does the state-distribution have to look like for those to balance?

In order for the equilibrium to be stable, we also need parts to enter a state a bit faster/leave it a bit slower when there are fewer than the equilibrium number of parts in that state. So, besides the rates, we also want some idea of how the rates change if there are slightly more or fewer parts in a given state.
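One way to write down the balance and stability conditions, as a sketch: the symbols here are notation introduced for illustration, not from the post. Let $n$ be the number of parts in some state, $r_{\text{in}}(n)$ and $r_{\text{out}}(n)$ the rates at which parts enter and leave it, $n^*$ the equilibrium number, and $\delta n = n - n^*$ a small deviation.

```latex
\begin{align*}
\frac{dn}{dt} &= r_{\text{in}}(n) - r_{\text{out}}(n) \\
r_{\text{in}}(n^*) &= r_{\text{out}}(n^*) && \text{(balance at equilibrium)} \\
\frac{d}{dn}\Big[ r_{\text{in}}(n) - r_{\text{out}}(n) \Big]_{n = n^*} &< 0 && \text{(stability)} \\
\frac{d(\delta n)}{dt} &\approx -k\,\delta n, \qquad k = r_{\text{out}}'(n^*) - r_{\text{in}}'(n^*) > 0
\end{align*}
```

Under these assumptions, a state holding fewer parts than $n^*$ has net inflow, a state holding more has net outflow, and small deviations decay at a rate of roughly $k$.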

We can also ask the corresponding questions for a dynamic equilibrium of expectations/forecasts. Rather than parts moving between states, we have probability-mass moving between states. If there’s a chance that I wash the dishes in two days, then there’s a flow of probability-mass from the “n dirty dishes” state to the “all dishes clean” state between two and three days in the future. If my expectations reach an equilibrium, then that means the rate of probability-mass-flow into each state equals the rate of probability-mass-flow out. So, the three key questions are:

- What processes cause probability-mass to flow into each state, and at what rate do those happen?

- What processes cause probability-mass to flow out of each state, and at what rate do those happen?

- What does the distribution have to look like for those to balance?
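The three questions above can be made concrete with a tiny example. This is a sketch under assumptions: the three states and the daily transition probabilities in `P` are invented for illustration, and `stationary` and `flows` are hypothetical helper names. It finds the distribution at which probability-mass flowing into each state matches the mass flowing out.

```python
# Hypothetical dish states and daily transition probabilities (rows sum to 1).
STATES = ["all clean", "some dirty", "sink full"]
P = [
    [0.3, 0.7, 0.0],  # from "all clean"
    [0.3, 0.2, 0.5],  # from "some dirty"
    [0.3, 0.0, 0.7],  # from "sink full"
]


def stationary(P, iters=1000):
    """Approximate the equilibrium distribution by repeatedly stepping forward."""
    n = len(P)
    dist = [1.0 / n] * n
    for _ in range(iters):
        dist = [sum(dist[j] * P[j][i] for j in range(n)) for i in range(n)]
    return dist


def flows(P, dist):
    """Per-step probability-mass flowing into and out of each state."""
    n = len(P)
    inflow = [sum(dist[j] * P[j][i] for j in range(n) if j != i) for i in range(n)]
    outflow = [dist[i] * sum(P[i][j] for j in range(n) if j != i) for i in range(n)]
    return inflow, outflow


if __name__ == "__main__":
    dist = stationary(P)
    inflow, outflow = flows(P, dist)
    for name, p, f_in, f_out in zip(STATES, dist, inflow, outflow):
        print(f"{name:>10}: mass={p:.4f}  in={f_in:.4f}  out={f_out:.4f}")
```

At the equilibrium distribution the "in" and "out" columns agree for every state, even though mass keeps moving between states on every step.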

Once we know how the state-change rates work, we can also ask all the usual questions about stable equilibrium.

The Challenge

Come up with 3 examples of dynamic equilibrium which do not resemble any you’ve seen before. They don’t need to be good, they don’t need to be useful, they just need to be novel (to you).

Any answer must include at least 3 to count, and they must be novel to you. That’s the challenge. We’re here to challenge ourselves, not just review examples we already know.

However, they don’t have to be very good answers or even correct answers. Posting wrong things on the internet is scary, but a very fast way to learn, and I will enforce a high bar for kindness in response-comments. I will personally default to upvoting every complete answer, even if parts of it are wrong, and I encourage others to do the same.

Post your answers inside of spoiler tags. (How do I do that?)

Celebrate others’ answers. This is really important, especially for tougher questions. Sharing exercises in public is a scary experience. I don’t want people to leave this having back-chained the experience “If I go outside my comfort zone, people will look down on me”. So be generous with those upvotes. I certainly will be.

If you comment on someone else’s answers, focus on making exciting, novel ideas work — instead of tearing apart worse ideas. Yes, And is encouraged.

I will remove comments which I deem insufficiently kind, even if I believe they are valuable comments. I want people to feel encouraged to try and fail here, and that means enforcing nicer norms than usual.

If you get stuck, look for:

- Systems made of lots of similar parts which are all constantly in motion

- Systems with a stable equilibrium only if you “zoom out” from the details

- Systems for which your expectations are roughly constant sufficiently far into the future, even if the system itself is constantly in motion.

Bonus Exercise: for each of your three examples from the challenge, what are the relevant “parts”, part-states and state-change rates (or probability-mass-flows, for expectations)? Can you do a Fermi estimate of the relevant rates, or estimate a rough (i.e. big-O) functional relationship between the state-change rates and the number of parts in each state (or probability-mass in each state)? How do the state-change rates change when the number of parts (or probability mass) in some state is higher/lower than its equilibrium value?

This bonus exercise is somewhat more abstract and conceptually tricky than previous exercises, especially for the probability-mass questions. I recommend it especially if you want some extra challenge.

Motivation

Much of the value I get from math is not from detailed calculations or elaborate models, but rather from framing tools: tools which suggest useful questions to ask, approximations to make, what to pay attention to and what to ignore.

Using a framing tool is sort of like using a trigger-action pattern: the hard part is to notice a pattern, a place where a particular tool can apply (the “trigger”). Once we notice the pattern, it suggests certain questions or approximations (the “action”). This challenge is meant to train the trigger-step: we look for novel examples to ingrain the abstract trigger pattern (separate from examples/contexts we already know).

The Bonus Exercise is meant to train the action-step: apply whatever questions/approximations the frame suggests, in order to build the reflex of applying them when we notice dynamic equilibrium.

Hopefully, this will make it easier to notice when a dynamic equilibrium frame can be applied to a new problem you don’t understand in the wild, and to actually use it.

Answers

- Habits, routines, and jobs. If I play pickup soccer every few days when my friends and I can coordinate schedules, then there's a certain average probability that I'll be in the "soccer-playing" state at any given time. Our schedules, locations, and energy levels will determine whether we enter and exit the "soccer-playing state." Our enjoyment of soccer vs. preference for other activities will determine how often we collectively play soccer - a sort of supply and demand curve (which is itself a dynamic equilibrium).

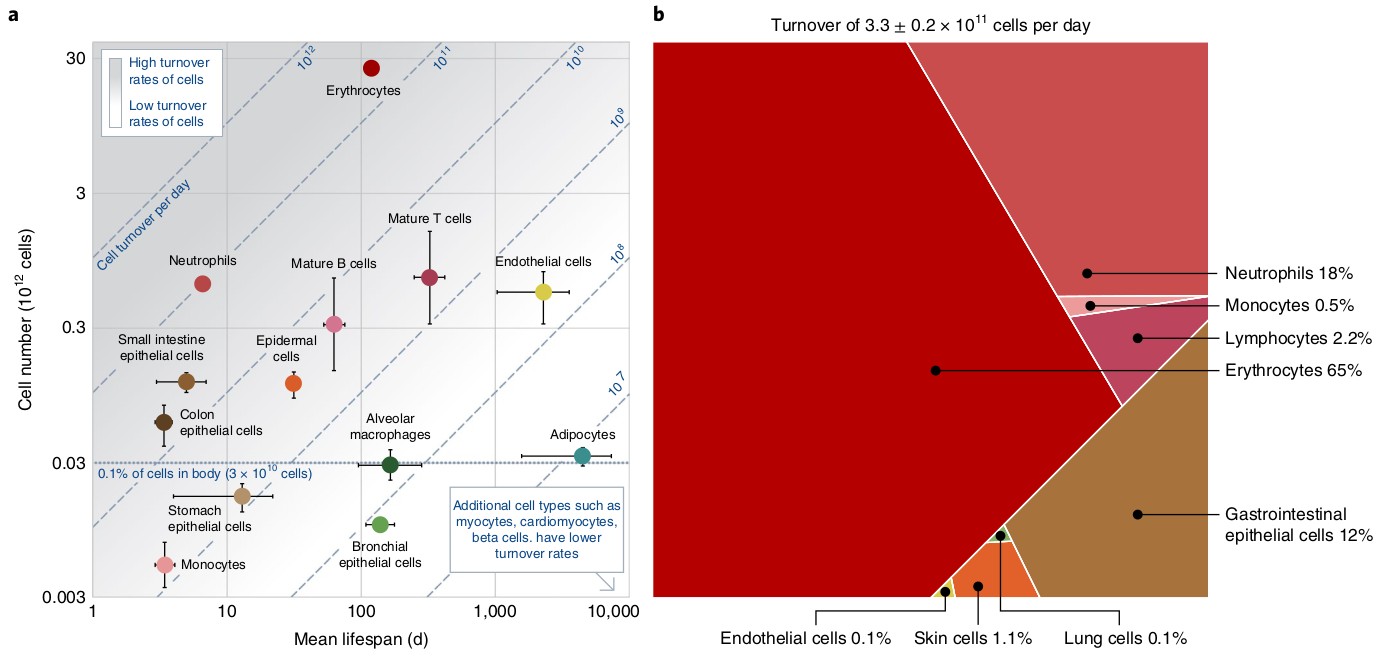

- Cell turnover. Evolution and signaling are determinants of the rate at which cells reproduce, mature, and die. In a healthy organism, the function of the tissue to which the cell belongs determines, via natural selection, the rate of cell turnover. Blood cells, neutrophils, and gut cells account for 96% of total cell turnover on any given day. Although these cells dominate the turnover rate, it seems likely that differences in turnover rate in other cells are also important, and it would be interesting to take an evolutionary approach to understanding the differences in turnover rates at different rate magnitudes.

- Highways and airports. The specific identity of the people driving or passing through these spaces changes, but the absolute number follows a pattern. Patterns of the workday and workweek, holidays, and other factors determine the flows in and out on one level; the airlines' and road designers' expectations about how many flights to schedule or lanes to build determine it on another.

↑ comment by johnswentworth · 2021-08-24T16:56:41.039Z · LW(p) · GW(p)

I'd guess blood cells and neutrophils dominate turnover largely because there's so many of them; IIRC blood cells turn over on a timescale of months, which isn't especially fast. The stomach lining presumably turns over very quickly because it's exposed to extreme chemical stress (mitigated by a mucus layer, but that can only do so much), so I'd guess that's the dominant "gut cell" term.

That's an interesting thing to know because it tells us what processes are likely to eat up bodily resources, aside from obvious things like moving muscles or firing neurons.

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2021-08-24T17:40:51.402Z · LW(p) · GW(p)

Just for erythrocytes, there are about 30 billion in the human body, which comprises a little under 0.1% of all cells. So they are enormously overrepresented in turnover relative to their abundance.

↑ comment by johnswentworth · 2021-08-24T17:58:06.008Z · LW(p) · GW(p)

Huh. Now I am confused. Why is a cell which turns over on a timescale of months so over-represented in turnover? Skin cells, for instance, turn over at least that fast and should be at least as numerous.

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2021-08-24T20:14:17.752Z · LW(p) · GW(p)

The paper I linked above ("The distribution of cellular turnover in the human body," lmk if you want me to send it to you) states that turnover is about 330 billion cells per day. It also states that erythrocytes account for 65% of that turnover, gastrointestinal epithelial cells account for 12% of turnover, while skin cells account for 1.1%. For skin cells, that would be 3.6 billion cells/day; for erythrocytes, 200 billion. That seems totally impossible given what I know about the turnover rate and absolute number of erythrocytes in the human body.

Another paper states that epidermal desquamation is about 500 million cells/day (Fig 1).

So yeah, both the proportions and the absolute number of cells being shed seem wildly divergent. The paper estimating cell turnover rates is in Nature Medicine. I'll look closer at it and see if I can figure out the disconnect.

↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2021-08-24T20:30:49.354Z · LW(p) · GW(p)

Oh shoot, I made a math mistake (wrong units). There are actually almost 3 trillion erythrocytes in the human body, which is closer to 8% of the cells in the human body (~37.2 trillion cells). Their estimate of epidermal cell number and turnover is more than two orders of magnitude lower.

That still means that erythrocytes are heavily overrepresented in terms of cell turnover (of which they compose 65%), but not by as much as I'd originally thought.
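The arithmetic in this thread can be checked in a few lines. This is just a sketch using the figures quoted in the comments above (with "almost 3 trillion" rounded to 3 trillion); the variable names are made up here, and none of the numbers have been checked independently against the paper.

```python
# Figures as quoted in the thread above (not independently verified).
total_cells = 37.2e12        # ~37.2 trillion cells in the human body
erythrocytes = 3e12          # "almost 3 trillion", rounded (assumption)
daily_turnover = 330e9       # ~330 billion cells turned over per day
turnover_share = {"erythrocytes": 0.65, "gi_epithelium": 0.12, "skin": 0.011}

# Share of all cells that are erythrocytes (the "closer to 8%" figure).
erythrocyte_cell_share = erythrocytes / total_cells

# Cells turned over per day for each type.
daily_by_type = {name: share * daily_turnover for name, share in turnover_share.items()}

# How overrepresented erythrocytes are in turnover relative to their abundance.
overrepresentation = turnover_share["erythrocytes"] / erythrocyte_cell_share

if __name__ == "__main__":
    print(f"erythrocyte share of cells: {erythrocyte_cell_share:.1%}")
    for name, cells in daily_by_type.items():
        print(f"{name}: {cells / 1e9:.1f} billion cells/day")
    print(f"overrepresentation factor: {overrepresentation:.1f}x")
```

With these inputs, erythrocytes make up about 8% of cells but 65% of turnover, so they are overrepresented by a factor of roughly eight.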

↑ comment by johnswentworth · 2021-08-24T21:06:12.820Z · LW(p) · GW(p)

Aha! This makes more sense now. Thanks for chasing that down, I feel much less confused.

- Jazz improvisation following chord changes or modes: although the actual music is always different, always moving, from a harmonic standpoint and at an abstract enough level, it's always the same succession of chords/modes

- Mix of pan-fried vegetables: although each vegetable is cut into slightly different size chunks and mixed with all the other, almost any spoonful will include the same distribution of vegetables and vegetable cuts.

- Research: although the topics change all the time, I expect to be doing broadly the same thing for every month (reading, writing my ideas, talking about them with people, looking for flaws, arguing,...)

Bonus exercise:

- Jazz improvisation following chord changes or modes: parts are the chords/modes, part-states are what's actually played, probability-mass-flow is the chorus frequency (the inverse of the "period", the number of chords/modes per chorus). Then we literally have

- Mix of pan-fried vegetables: Parts are the pieces of vegetable cuts, part-states are the shapes and quantities of each vegetable, and probability-mass-flows is the distribution of vegetables in a spoonful. Increasing the number of parts (adding more pieces of vegetable) has a different effect depending on what is added:

- If things are added in proportion with the original distribution, then it stays the same

- Otherwise, it is moved (by adding only carrots for example)

- Research: Parts are the hours of research, part-states are the generic tasks these can be allocated to, and the probability-mass-flow is the distribution over how many hours are allocated to each task. If the number of tasks increases, the distribution flattens as it extends its support. And if the number of hours increases... well, it depends on where the new hours are allocated.

I'm assuming the point is that I've not seen the examples used as examples of dynamic equilibrium before, not that I've not seen the equilibrium before? Given that that's the case:

- Total area of districts in a city. Poor areas become gentrified, rich areas go out of fashion, and areas become run-down as elderly residents become economically (or biologically) inactive. Overall the distribution changes very slowly, even though the standards of what constitutes a "rich" or "poor" area generally increase with time. This is broken if the municipal government fails or something.

- Size of staff in a company. For most well-established companies, people enter and leave at a rate much much faster than the company grows or shrinks.

- In terms of dynamic equilibria of outcomes, political parties in certain democracies. The short term predictions can be based on the current political landscape, but in the long term, people get tired of politicians, so each politician's reign is limited. Discontent is always a limiting factor on staying in office.

↑ comment by johnswentworth · 2021-08-17T16:52:17.314Z · LW(p) · GW(p)

Great examples. The first one points to equilibria on multiple timescales - e.g. at one timescale people moving in are in equilibrium with people moving out or dying, and at another timescale the distribution of neighborhoods is in equilibrium.

This one was a lot of fun!

- ROS activity in some region of the body is a function of antioxidant bioavailability, heat, and oxidant bioavailability. I imagine this relationship is the inverse of some chemical rate laws, i.e. dependent on which antioxidants we're looking at. But since I expect most antioxidants to work as individual molecules, the relationship is probably , i.e. ROS activity is inverse w.r.t. some antioxidant's potency and concentration if we ignore other antioxidants. The bottom term can also be a sum across all antioxidants, given no synergistic / antagonistic interactions!

- Transistor reliability is probably a function of heat, band gap and voltage? I imagine that, in fact, reliability is hysteretic in terms of band gap and voltage! When the gap is lower, noise can cross more easily, and when it's too high there won't be enough voltage for it to pass (without overheating your circuit). And heat increases noise. I think that information transmission might be exponential or Gaussian centered around the optimum, parameterized by . Does anyone have an equation for this?

- Ant movement speed is probably an equilibrium between evolved energy-conservation priors, available calories and pheromones. Let's just focus on pheromones which make the ant move faster. Energy (perhaps as ) and pheromones (say, ) are probably each about predictors of speed, since I'm imagining material stress of movement () to be the main energy sink. Let , where . I don't know what the evolved frugality priors look like, but expect they can just map without needing the subcomponents and , at least as far as big-O notation goes.

- Number of cells in an adult human body. Also, cell type composition in an adult human body (over the timescale of months but not years because aging).

- Relative size of predator/prey species population in a mature, mostly otherwise static ecosystem.

- Warm-blooded mammal body temperature.

↑ comment by johnswentworth · 2021-08-24T17:01:16.122Z · LW(p) · GW(p)

#1 in particular is definitely a useful frame to use in practice.

- The demand for toilet paper. On a short-term timescale there'll be random peaks and troughs (or not so random ones due to people expecting a lockdown). But in the medium-term it'll be constant, because of each person only needing a fairly constant amount of toilet paper. Although in the long-term there'll be changes again due to a growing or shrinking population.

- The amount of train delays per day in a city. Some days have more, e.g. because of some big event or a random accident, while other days have fewer. But on average over weeks or months it is roughly constant.

- The amount of groceries in my fridge. I go shopping roughly once a week, so in between the amount steadily goes down and then jumps up again. There might be some irregularities due to eating out or being especially hungry, but over longer periods of time I mostly eat the same amount.

How the equilibrium gets restored in each direction:

- If above equilibrium (people buying lots of toilet paper for a while) the demand will be lower afterwards. If below equilibrium they'll run out eventually and the demand will tick up again.

- If above equilibrium (a lot of delays) over a longer period of time, people might get upset and the operating company might try to improve scheduling, maintenance, number of trains etc. If below equilibrium, the operators might for example get complacent and stop putting in as much effort.

- If above equilibrium (eaten a below average amount from my fridge and have a lot of groceries left at the end of the week), I'll just buy less. If below equilibrium I'll have to buy more when I go shopping, or maybe go more than once per week.

I think my browser tab and social interaction examples on the post on stable equilibria fit in better here. They're much more dynamic than stable.

↑ comment by johnswentworth · 2021-08-20T00:54:42.677Z · LW(p) · GW(p)

Lots of great economic examples here. #2 in particular makes some great points about incentives inducing an equilibrium, in ways that a lot of overly-simple economic models wouldn't capture very well.

2 comments


comment by Measure · 2021-08-17T04:01:24.809Z · LW(p) · GW(p)

lower...leave faster

This is backward.

↑ comment by johnswentworth · 2021-08-17T15:55:04.721Z · LW(p) · GW(p)

Fixed, thanks.