Collider bias as a cognitive blindspot?

post by TurnTrout · 2020-12-30T02:39:35.700Z · LW · GW · 8 comments

This is a question post.

Contents

Answers 6 AllAmericanBreakfast 5 Mark Xu 5 Daniel V 2 jimrandomh None 8 comments

Zack M. Davis summarizes [LW · GW] collider bias as follows:

The explaining-away effect (or, collider bias; or, Berkson's paradox) is a statistical phenomenon in which statistically independent causes with a common effect become anticorrelated when conditioning on the effect.

In the language of d-separation, if you have a causal graph [LW · GW] X → Z ← Y, then conditioning on Z unblocks the path between X and Y.

... if you have a sore throat and cough, and aren't sure whether you have the flu or mono, you should be relieved to find out it's "just" a flu, because that decreases the probability that you have mono. You could be infected with both the influenza and mononucleosis viruses, but if the flu is completely sufficient to explain your symptoms, there's no additional reason to expect mono.[1] [LW · GW]
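To make the flu/mono arithmetic concrete, here is a minimal sketch that enumerates the joint distribution for the graph flu → symptoms ← mono. All of the probabilities are made-up numbers chosen for illustration (they are not from the quoted post), and the names `joint` and `p_mono_given` are hypothetical helpers.

```python
from itertools import product

# Hypothetical numbers, chosen only for illustration.
P_FLU = 0.10   # prior probability of flu
P_MONO = 0.01  # prior probability of mono
P_SYMPTOMS = {  # P(symptoms | flu, mono)
    (False, False): 0.01,
    (True, False): 0.90,
    (False, True): 0.90,
    (True, True): 0.99,
}


def joint(flu, mono, symptoms):
    """P(flu, mono, symptoms) for the graph flu -> symptoms <- mono."""
    p_causes = (P_FLU if flu else 1 - P_FLU) * (P_MONO if mono else 1 - P_MONO)
    p_s = P_SYMPTOMS[(flu, mono)]
    return p_causes * (p_s if symptoms else 1 - p_s)


def p_mono_given(**evidence):
    """P(mono = True | evidence), by brute-force enumeration of all worlds."""
    numerator = 0.0
    denominator = 0.0
    for flu, mono, symptoms in product([False, True], repeat=3):
        world = {"flu": flu, "mono": mono, "symptoms": symptoms}
        if any(world[name] != value for name, value in evidence.items()):
            continue  # this world contradicts the evidence
        p = joint(flu, mono, symptoms)
        denominator += p
        if mono:
            numerator += p
    return numerator / denominator


prior = p_mono_given()
after_symptoms = p_mono_given(symptoms=True)
after_flu = p_mono_given(symptoms=True, flu=True)
flu_only = p_mono_given(flu=True)  # no conditioning on the collider

print(f"P(mono)                 = {prior:.4f}")
print(f"P(mono | symptoms)      = {after_symptoms:.4f}")
print(f"P(mono | symptoms, flu) = {after_flu:.4f}")
print(f"P(mono | flu)           = {flu_only:.4f}")
```

With these particular numbers, symptoms raise the probability of mono from 1% to roughly 8.5%, and learning about the flu drops it back to roughly 1.1%. Without conditioning on the symptoms, learning about the flu changes nothing, which is the sense in which the two causes start out independent.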

Wikipedia gives a further example:

Suppose Alex will only date a man if his niceness plus his handsomeness exceeds some threshold. Then nicer men do not have to be as handsome to qualify for Alex's dating pool. So, among the men that Alex dates, Alex may observe that the nicer ones are less handsome on average (and vice versa), even if these traits are uncorrelated in the general population. Note that this does not mean that men in the dating pool compare unfavorably with men in the population. On the contrary, Alex's selection criterion means that Alex has high standards. The average nice man that Alex dates is actually more handsome than the average man in the population (since even among nice men, the ugliest portion of the population is skipped). Berkson's negative correlation is an effect that arises within the dating pool: the rude men that Alex dates must have been even more handsome to qualify.
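The dating-pool effect is easy to check by simulation. The sketch below assumes niceness and handsomeness are independent standard normal variables and that Alex's threshold is 1.0 on their sum; both are arbitrary choices for illustration, as is treating "nice" as "above the population average".

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(0)

# Each man is (niceness, handsomeness), drawn independently (assumption).
population = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(100_000)]

THRESHOLD = 1.0  # hypothetical cutoff on niceness + handsomeness
dating_pool = [(n, h) for n, h in population if n + h > THRESHOLD]

r_population = pearson(*zip(*population))
r_pool = pearson(*zip(*dating_pool))

# Handsomeness of the average man vs. the average *nice* man Alex dates.
mean_h_population = sum(h for _, h in population) / len(population)
nice_in_pool = [h for n, h in dating_pool if n > 0]
mean_h_nice_pool = sum(nice_in_pool) / len(nice_in_pool)

print(f"correlation in population:  {r_population:+.3f}")
print(f"correlation in dating pool: {r_pool:+.3f}")
print(f"mean handsomeness, population:        {mean_h_population:+.3f}")
print(f"mean handsomeness, nice men in pool:  {mean_h_nice_pool:+.3f}")
```

The traits are uncorrelated in the population by construction, yet clearly anticorrelated inside the pool, while the nice men in the pool are still more handsome than the population average.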

No crazy psychoanalysis, just a simple statistical artifact. (On a meta level, perhaps attractive people are meaner for some reason, but a priori, doesn't collider bias explain away the need for other explanations?)

This seems like it could be everywhere. Most things have more than one causal parent; if it has many parents, there's probably a pair which is independent. Then some degree of collider bias will occur for almost all probability distributions represented by the causal diagram, since collider bias will exist if (in the linked formalism). And if we don't notice it unless we make a serious effort to reason about the causal structure of a problem, then we might spend time arguing about statistical artifacts, making up theories to explain things which don't need explaining!

In The Book of Why, Judea Pearl speculates (emphasis mine):

Our brains are not wired to do probability problems, but they are wired to do causal problems. And this causal wiring produces systematic probabilistic mistakes, like optical illusions. Because there is no causal connection between [X and Y in X → Z ← Y], either directly or through a common cause, [people] find it utterly incomprehensible that there is a probabilistic association. Our brains are not prepared to accept causeless correlations, and we need special training - through examples like the Monty Hall paradox... - to identify situations where they can arise. Once we have "rewired our brains" to recognize colliders, the paradox ceases to be confusing.

But how is this done? Perhaps one simply meditates on the wisdom of causal diagrams, understands the math, and thereby comes to properly intuitively reason about colliders, or at least reliably recognize them.

This question serves two purposes:

- If anyone has rewired their brain thusly, I'd love to hear how.

  - It's not clear to me that the obvious kind of trigger-action-plan will trigger on non-trivial, non-obvious instances of collider bias.

- To draw attention to this potential bias, since I wasn't able to find prior discussion on LessWrong.

Answers

One idea: make a list of situations in which you trade off against 2+ qualities, notice if you've formed a collider bias, and explain to yourself what's going on. I'll try two:

- Food can be tasty and healthy. Why is food that's tasty so often unhealthy, and vice versa? Insofar as the collider effect is the explanation, then it's because I have a tastiness + healthiness threshold. Foods don't need to be as tasty if they're healthy enough, and they don't need to be as healthy if they're tasty enough. Also, there's some set of foods that simply aren't tasty + healthy enough to eat, like American cheese, out-of-season tomatoes, and Chips Ahoy.

- Vacations can be relaxing and interesting. Why are interesting vacations so often stressful, and relaxing vacations so often boring? The collider effect strikes again! I don't want to go on vacations that are neither relaxing nor interesting, such as a trip to Cancun or Ibiza.

Food can have more virtues. It can be cheap, easy to prepare, and different from what I've eaten recently. Weirdly enough, this means that the more potential virtues something has, the more likely you're going to consume something that entirely lacks a certain virtue. If I go to a single-price restaurant, where price, ease of preparation, and variety are held constant, tastiness and healthiness are the only ways I can make a selection and will be more important. By contrast, if I'm at home, how tasty and healthy the meal is can more often take a back seat to these other factors.
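The claim about many virtues can be sanity-checked with a toy simulation. This is only a sketch under strong assumptions: every virtue is an independent standard normal score, I consume the top 10% of items by total score, and an item "lacks" a virtue if it scores below the population average on it. The function name `share_lacking_first_virtue` is made up for this example.

```python
import random


def share_lacking_first_virtue(num_virtues, n_items=50_000,
                               keep_fraction=0.10, seed=0):
    """Among the top items by summed virtue, the share that is below
    the population average (0) on the first virtue."""
    rng = random.Random(seed)
    items = [[rng.gauss(0, 1) for _ in range(num_virtues)]
             for _ in range(n_items)]
    # Keep only the items with the highest total score.
    items.sort(key=sum, reverse=True)
    chosen = items[: int(n_items * keep_fraction)]
    lacking = sum(1 for item in chosen if item[0] < 0)
    return lacking / len(chosen)


shares = {k: share_lacking_first_virtue(k) for k in (2, 5, 10)}
for k, share in shares.items():
    print(f"{k:2d} virtues: {share:.1%} of chosen items lack the first virtue")
```

Under these assumptions, the share of chosen items that fall short on any one given virtue grows as more virtues enter the sum, since each individual virtue matters less to clearing the bar.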

However, I'd also note that the reason we may not think too often about "collider bias" may be that most things are correlated. Price correlates with all the other virtues in just about everything you can consume. Excitement and stress seem likely to be correlated.

If X->Z<-Y, then X and Y are independent unless you're conditioning on Z. A relevant TAP might thus be:

- Trigger: I notice that X and Y seem statistically dependent

- Action: Ask yourself "what am I conditioning on?". Follow up with "Are any of these factors causally downstream of both X and Y?" Alternatively, you could list salient things causally downstream of either X or Y and check the others.

This TAP is unfortunately abstract because "things I'm currently conditioning on" isn't an easy thing to list, but it might help.
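If you can write the causal graph down, the "action" half of the TAP above is mechanical. Here is a rough sketch, assuming the graph is given as a dict from each node to its direct effects; the node names and the helpers `descendants` and `suspicious_conditions` are invented for this example. It only flags candidates: anything you condition on that is causally downstream of both X and Y.

```python
def descendants(graph, node):
    """All nodes reachable from `node` by following directed edges."""
    seen = set()
    stack = [node]
    while stack:
        current = stack.pop()
        for child in graph.get(current, ()):
            if child not in seen:
                seen.add(child)
                stack.append(child)
    return seen


def suspicious_conditions(graph, x, y, conditioned_on):
    """Conditioned-on variables that are downstream of both x and y.

    Conditioning on a collider, or on anything downstream of one,
    can induce a dependence between x and y.
    """
    common = descendants(graph, x) & descendants(graph, y)
    return sorted(common & set(conditioned_on))


# Hypothetical graph for the dating example: cause -> list of direct effects.
graph = {
    "niceness": ["dated_by_alex"],
    "handsomeness": ["dated_by_alex"],
    "dated_by_alex": ["alex_has_opinion_of_him"],
    "age": [],
}

flagged = suspicious_conditions(
    graph, "niceness", "handsomeness",
    conditioned_on=["dated_by_alex", "age"],
)
print(flagged)
```

The hard part remains the one named above: the code needs `conditioned_on` as an input, and noticing what you are implicitly conditioning on is exactly what is difficult in practice.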

One way to "rewire" your brain is to wire in a quick check: how does selection/stratification/conditioning matter here?

But perhaps most important is to think causally. Sure, you can open up associations, but, theoretically, do they make sense? Why would obesity, conditional on having cardiovascular disease, reduce mortality? Addressing why rather than leaping to a bivariate causal conclusion is important. This is why scientists look for mechanisms and mechanism-implicating boundary conditions.

I call this subcategory of Berkson's paradox issues the conservation of virtue effect: when there is a filter somewhere for something like a sum of good qualities, then all good qualities are negatively correlated. Another major subcategory is the "if you observe something which has multiple possible explanations, those explanations are negatively correlated" effect. I don't think these two subtypes cover all the instances, but they do seem to cover a large fraction, and they aren't too difficult to internalize.

8 comments

Comments sorted by top scores.

comment by Kaj_Sotala · 2020-12-30T19:39:31.263Z · LW(p) · GW(p)

I feel confused; you quote Pearl talking about how hard this is for people to accept etc., but it feels to me like people generally get this intuitively in the kinds of contexts where it naturally pops up? There are some instances like Monty Hall where people do get really confused, but Monty Hall is notable for being a really weird and unnatural setup. Whereas if you have a sore throat and then find out that you have the flu, then it seems to me that most people will naturally think "ah I don't have mono then", wouldn't they? (And given that it's Alex who's doing the selecting of who qualifies, I would expect them to be quite aware of the fact that they are sometimes making the choice to date a rude man because man that guy is hot, or vice versa.)

It does seem to me that there's sometimes a bias in people conditioning on Z too much; e.g. if we use this example

Judea Pearl gives an example of reasoning about a burglar alarm: if your neighbor calls you at your dayjob to tell you that your burglar alarm went off, it could be because of a burglary, or it could have been a false-positive due to a small earthquake. There could have been both an earthquake and a burglary, but if you get news of an earthquake, you'll stop worrying so much that your stuff got stolen, because the earthquake alone was sufficient to explain the alarm.

then local burglars can exploit that by hitting homes right at the time of an earthquake, because people will assume the alarms to be caused by the earthquake rather than a genuine burglary.

Prejudice may be another example of conditioning on Z too much; if you are predisposed to believe that group membership -> Z, then you may jump to the conclusion that Z is caused by group membership, while neglecting other causes.

But this seems like the opposite of the bias you are talking about?

Replies from: TurnTrout

↑ comment by TurnTrout · 2020-12-30T21:28:34.194Z · LW(p) · GW(p)

I agree that people can reason about the mono case. I'm not convinced this isn't hard in general. Most examples of collider bias struck me as unintuitive, and it seems very unlikely that I'm worse than average at causal reasoning.

(And given that it's Alex who's doing the selecting of who qualifies, I would expect them to be quite aware of the fact that they are sometimes making the choice to date a rude man because man that guy is hot, or vice versa.)

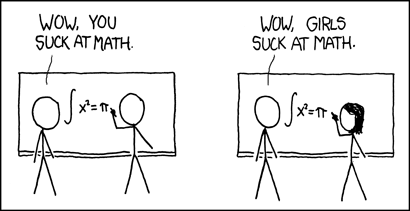

Noticing that the guy is hot is way different from taking the further step to explain the correlation in her dating pool. If this is generally correctly reasoned out, then why haven't I ever heard someone answer the complaints of "women like bad boys" by (informally) explaining collider bias?

Replies from: Kaj_Sotala

↑ comment by Kaj_Sotala · 2020-12-31T14:46:38.511Z · LW(p) · GW(p)

Most examples of collider bias struck me as unintuitive, and it seems very unlikely that I'm worse than average at causal reasoning.

Is that because they are intrinsically unintuitive, or because they are expressed in an unfamiliar way? I would guess that if one starts by explaining the mono case, then points out how it is analogous to the formal structure (the way Zack did and your quoted Wikipedia example did), then it would be relatively easy for people to get. Whereas if there's an explanation that e.g. starts off from a very mathematical and formal presentation, then it's harder to connect with what you already know intuitively.

then why haven't I ever heard someone answer the complaints of "women like bad boys" by (informally) explaining collider bias?

Is that an example of collider bias? If it were, then one would expect to also hear similar complaints about women's (or for that matter men's) preference for many other traits that are perceived negatively, e.g. "women like guys without money" or "men like unattractive women". The fact that it's "bad boys" that gets singled out in particular suggests that there is actually something special about that trait, and the standard explanations (e.g. that confidence is attractive and that badness correlates with confidence) seem reasonable to me.

comment by Mo Putera (Mo Nastri) · 2020-12-30T05:03:49.973Z · LW(p) · GW(p)

Perhaps related is this classic post by Thrasymachus: https://www.lesswrong.com/posts/dC7mP5nSwvpL65Qu5/why-the-tails-come-apart. [LW · GW] Scott Alexander uses that post as a jumping-off point to discuss a variety of topics, from ostensibly conflicting results in happiness research to the problem of figuring out a morality that can survive transhuman scenarios: https://www.lesswrong.com/posts/asmZvCPHcB4SkSCMW/the-tails-coming-apart-as-metaphor-for-life. [LW · GW] (Or maybe I'm confused and this isn't really related to what you're talking about?)

comment by nealeratzlaff · 2020-12-30T08:20:44.153Z · LW(p) · GW(p)

The difficulty of correctly reasoning with probabilities reminds me of something Geoff Hinton said about working in high dimensional space (paraphrasing): "when we try to imagine high dimensions, we all just imagine a 3D surface and say 'N dimensions' really loud in our heads". I have a habit of trying to use probabilities whenever I'm trying to reason about something, but I'm becoming increasingly sure that my Bayes net (or causal graph) is badly wired with wrong probabilities everywhere.

I see quite a few papers on PubMed discussing collider bias with regard to obesity-associated health risks. The effect is probably in full swing with covid research, unfortunately.

Replies from: TurnTrout

↑ comment by TurnTrout · 2020-12-30T18:05:04.652Z · LW(p) · GW(p)

I see quite a few papers on PubMed discussing collider bias with regard to obesity-associated health risks. The effect is probably in full swing with covid research, unfortunately.

comment by hamnox · 2020-12-31T14:31:16.953Z · LW(p) · GW(p)

"Collider bias" comes at the phenomenon from a slightly different angle than The Tails Come Apart [LW · GW] or as one of Scott's Goodhart variants [LW · GW]. I think I will start using "collider" as my mental handle for situations of noncausal anticorrelation.

comment by TheMajor · 2020-12-31T13:56:39.494Z · LW(p) · GW(p)

There has been previous discussion about this on LessWrong. In particular, this is precisely the focus of Why the tails come apart [LW · GW], if I'm not mistaken.

If I remember correctly that very post caused a brief investigation into an alleged negative correlation between chess ability and IQ, conditioning on very high chess ability (top 50 or something). Unfortunately I don't remember the conclusion.

Edit: and now I see Mo Nastri already pointed this out. Oops.