Causal Diagrams and Causal Models

post by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-12T21:49:16.747Z · 297 comments


Suppose a general-population survey shows that people who exercise less, weigh more. You don't have any known direction of time in the data - you don't know which came first, the increased weight or the diminished exercise. And you didn't randomly assign half the population to exercise less; you just surveyed an existing population.

The statisticians who discovered causality were trying to find a way to distinguish, within survey data, the direction of cause and effect - whether, as common sense would have it, more obese people exercise less because they find physical activity less rewarding; or whether, as in the virtue theory of metabolism, lack of exercise actually causes weight gain due to divine punishment for the sin of sloth.

[Diagram: Overweight → Exercise] vs. [Diagram: Exercise → Overweight]

The usual way to resolve this sort of question is by randomized intervention. If you randomly assign half your experimental subjects to exercise more, and afterward the increased-exercise group doesn't lose any weight compared to the control group [1], you could rule out causality from exercise to weight, and conclude that the correlation between weight and exercise is probably due to physical activity being less fun when you're overweight [3]. The question is whether you can get causal data without interventions.

For a long time, the conventional wisdom in philosophy was that this was impossible unless you knew the direction of time and knew which event had happened first. Among some philosophers of science, there was a belief that the "direction of causality" was a meaningless question, and that in the universe itself there were only correlations - that "cause and effect" was something unobservable and undefinable, that only unsophisticated non-statisticians believed in due to their lack of formal training:

"The law of causality, I believe, like much that passes muster among philosophers, is a relic of a bygone age, surviving, like the monarchy, only because it is erroneously supposed to do no harm." -- Bertrand Russell (he later changed his mind)

"Beyond such discarded fundamentals as 'matter' and 'force' lies still another fetish among the inscrutable arcana of modern science, namely, the category of cause and effect." -- Karl Pearson

The famous statistician Fisher, who was also a smoker, testified before Congress that the correlation between smoking and lung cancer couldn't prove that the former caused the latter. We have remnants of this type of reasoning in old-school "Correlation does not imply causation", without the now-standard appendix, "But it sure is a hint".

This skepticism was overturned by a surprisingly simple mathematical observation.

Let's say there are three variables in the survey data: Weight, how much the person exercises, and how much time they spend on the Internet.

For simplicity, we'll have these three variables be binary, yes-or-no observations: Y or N for whether the person has a BMI over 25, Y or N for whether they exercised at least twice in the last week, and Y or N for whether they've checked Reddit in the last 72 hours.

Now let's say our gathered data looks like this:

| Overweight | Exercise | Internet | # |
|---|---|---|---|
| Y | Y | Y | 1,119 |
| Y | Y | N | 16,104 |
| Y | N | Y | 11,121 |
| Y | N | N | 60,032 |
| N | Y | Y | 18,102 |
| N | Y | N | 132,111 |
| N | N | Y | 29,120 |
| N | N | N | 155,033 |

And lo, merely by eyeballing this data -

(which is totally made up, so don't go actually believing the conclusion I'm about to draw)

- we now realize that being overweight and spending time on the Internet both cause you to exercise less, presumably because exercise is less fun and you have more alternative things to do, but exercising has no causal influence on body weight or Internet use.

"What!" you cry. "How can you tell that just by inspecting those numbers? You can't say that exercise isn't correlated to body weight - if you just look at all the members of the population who exercise, they clearly have lower weights. 10% of exercisers are overweight, vs. 28% of non-exercisers. How could you rule out the obvious causal explanation for that correlation, just by looking at this data?"

There's a wee bit of math involved. It's simple math - the part we'll use doesn't involve solving equations or complicated proofs - but we do have to introduce a wee bit of novel math to explain how the heck we got there from here.

Let me start with a question that turned out - to the surprise of many investigators involved - to be highly related to the issue we've just addressed.

Suppose that earthquakes and burglars can both set off burglar alarms. If the burglar alarm in your house goes off, it might be because of an actual burglar, but it might also be because a minor earthquake rocked your house and triggered a few sensors. Early investigators in Artificial Intelligence, who were trying to represent all high-level events using primitive tokens in a first-order logic (for reasons of historical stupidity we won't go into) were stymied by the following apparent paradox:

- If you tell me that my burglar alarm went off, I infer a burglar, which I will represent in my first-order-logical database using a theorem ⊢ ALARM → BURGLAR. (The symbol "⊢" is called "turnstile" and means "the logical system asserts that".)

- If an earthquake occurs, it will set off burglar alarms. I shall represent this using the theorem ⊢ EARTHQUAKE → ALARM, or "earthquake implies alarm".

- If you tell me that my alarm went off, and then further tell me that an earthquake occurred, it explains away my burglar alarm going off. I don't need to explain the alarm by a burglar, because the alarm has already been explained by the earthquake. I conclude there was no burglar. I shall represent this by adding a theorem which says ⊢ (EARTHQUAKE & ALARM) → NOT BURGLAR.

Which represents a logical contradiction, and for a while there were attempts to develop "non-monotonic logics" so that you could retract conclusions given additional data. This didn't work very well, since the underlying structure of reasoning was a terrible fit for the structure of classical logic, even when mutated.

Just changing certainties to quantitative probabilities can fix many problems with classical logic, and one might think that this case was likewise easily fixed.

Namely, just write a probability table of all possible combinations of earthquake or ¬earthquake, burglar or ¬burglar, and alarm or ¬alarm (where ¬ is the logical negation symbol), with the following entries:

| Burglar | Earthquake | Alarm | Probability |
|---|---|---|---|
| b | e | a | .000162 |
| b | e | ¬a | .0000085 |
| b | ¬e | a | .0151 |
| b | ¬e | ¬a | .00168 |
| ¬b | e | a | .0078 |
| ¬b | e | ¬a | .002 |
| ¬b | ¬e | a | .00097 |
| ¬b | ¬e | ¬a | .972 |

Using the operations of marginalization and conditionalization, we get the desired reasoning back out:

Let's start with the probability of a burglar given an alarm, p(burglar|alarm). By the law of conditional probability,

$$p(\text{burglar} \mid \text{alarm}) = \frac{p(\text{alarm} \,\&\, \text{burglar})}{p(\text{alarm})}$$

i.e. the relative fraction of cases where there's an alarm and a burglar, within the set of all cases where there's an alarm.

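The table doesn't directly tell us p(alarm & burglar)/p(alarm), but by the law of marginal probability,

$$p(\text{alarm} \,\&\, \text{burglar}) = p(\text{alarm} \,\&\, \text{burglar} \,\&\, \text{earthquake}) + p(\text{alarm} \,\&\, \text{burglar} \,\&\, \neg\text{earthquake})$$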

Similarly, to get the probability of an alarm going off, p(alarm), we add up all the different sets of events that involve an alarm going off - entries 1, 3, 5, and 7 in the table.

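So the entire set of calculations looks like this:

- If I hear a burglar alarm, I conclude there was probably (63%) a burglar: p(burglar|alarm) = (.000162 + .0151) / (.000162 + .0151 + .0078 + .00097) ≈ .635.

- If I learn about an earthquake, I conclude there was probably (80%) an alarm: p(alarm|earthquake) = (.000162 + .0078) / (.000162 + .0000085 + .0078 + .002) ≈ .799.

- I hear about an alarm and then hear about an earthquake; I conclude there was probably (98%) no burglar: p(¬burglar|alarm & earthquake) = .0078 / (.000162 + .0078) ≈ .980.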
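For readers who would rather let a computer do the adding and dividing, here is a minimal Python sketch of marginalization and conditionalization over the joint table above. The dictionary layout and the helper names `prob` and `cond` are illustrative choices, not anything from the original example.

```python
# Joint probabilities keyed by (burglar, earthquake, alarm), copied from the table above.
joint = {
    (True, True, True): 0.000162,
    (True, True, False): 0.0000085,
    (True, False, True): 0.0151,
    (True, False, False): 0.00168,
    (False, True, True): 0.0078,
    (False, True, False): 0.002,
    (False, False, True): 0.00097,
    (False, False, False): 0.972,
}
NAMES = ("burglar", "earthquake", "alarm")


def prob(**fixed):
    """Marginalize: add up every table row that agrees with the fixed values."""
    return sum(
        p
        for row, p in joint.items()
        if all(row[NAMES.index(name)] == value for name, value in fixed.items())
    )


def cond(query, given):
    """Conditionalize: p(query | given) = p(query & given) / p(given)."""
    return prob(**query, **given) / prob(**given)


p_burglar_given_alarm = cond({"burglar": True}, {"alarm": True})
p_alarm_given_quake = cond({"alarm": True}, {"earthquake": True})
p_no_burglar_given_both = cond({"burglar": False}, {"alarm": True, "earthquake": True})

print(f"p(burglar | alarm)               = {p_burglar_given_alarm:.3f}")
print(f"p(alarm | earthquake)            = {p_alarm_given_quake:.3f}")
print(f"p(no burglar | alarm, earthquake) = {p_no_burglar_given_both:.3f}")
```

The three printed values should round to the 63%, 80%, and 98% quoted in the list.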

Thus, a joint probability distribution is indeed capable of representing the reasoning-behaviors we want.

So is our problem solved? Our work done?

Not in real life or real Artificial Intelligence work. The problem is that this solution doesn't scale. Boy howdy, does it not scale! If you have a model containing forty binary variables - alert readers may notice that the observed physical universe contains at least forty things - and you try to write out the joint probability distribution over all combinations of those variables, it looks like this:

| Probability | Values of the forty variables |
|---|---|
| .0000000000112 | YYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYY |
| .000000000000034 | YYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYN |
| .00000000000991 | YYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYNY |
| .00000000000532 | YYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYNN |
| .000000000145 | YYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYNYY |
| ... | ... |

(1,099,511,627,776 entries)

This isn't merely a storage problem. In terms of storage, a trillion entries is just a terabyte or three. The real problem is learning a table like that. You have to deduce 1,099,511,627,776 floating-point probabilities from observed data, and the only constraint on this giant table is that all the probabilities must sum to exactly 1.0, a problem with 1,099,511,627,775 degrees of freedom. (If you know the first 1,099,511,627,775 numbers, you can deduce the 1,099,511,627,776th number using the constraint that they all sum to exactly 1.0.) It's not the storage cost that kills you in a problem with forty variables, it's the difficulty of gathering enough observational data to constrain a trillion different parameters. And in a universe containing seventy things, things are even worse.

So instead, suppose we approached the earthquake-burglar problem by trying to specify probabilities in a format where... never mind, it's easier to just give an example before stating abstract rules.

First let's add, for purposes of further illustration, a new variable, "Recession", whether or not there's a depressed economy at the time. Now suppose that:

- The probability of an earthquake is 0.01.

- The probability of a recession at any given time is 0.33 (or 1/3).

- The probability of a burglary given a recession is 0.04; or, given no recession, 0.01.

- An earthquake is 0.8 likely to set off your burglar alarm; a burglar is 0.9 likely to set off your burglar alarm. And - we can't compute this model fully without this info - the combination of a burglar and an earthquake is 0.95 likely to set off the alarm; and in the absence of either burglars or earthquakes, your alarm has a 0.001 chance of going off anyway.

[Diagram: Recession → Burglar → Alarm ← Earthquake, with the probabilities listed above attached to each node]

According to this model, if you want to know "The probability that an earthquake occurs" - just the probability of that one variable, without talking about any others - you can directly look up p(e) = .01. On the other hand, if you want to know the probability of a burglar striking, you have to first look up the probability of a recession (.33), and then p(b|r) and p(b|¬r), and sum up p(b|r)×p(r) + p(b|¬r)×p(¬r) to get a net probability of .04×.33 + .01×.67 ≈ .02 = p(b), a 2% probability that a burglar is around at some random time.

If we want to compute the joint probability of four values for all four variables - for example, the probability that there is no earthquake and no recession and a burglar and the alarm goes off - this causal model computes this joint probability as the product:

$$p(\neg e)\,p(\neg r)\,p(b \mid \neg r)\,p(a \mid b, \neg e) = .99 \times .67 \times .01 \times .9 \approx .006$$

In general, to go from a causal model to a probability distribution, we compute, for each setting of all the variables, the product

$$p(X_1 = x_1, \ldots, X_n = x_n) = \prod_i p(X_i = x_i \mid \mathrm{PA}_i = \mathrm{pa}_i)$$

multiplying together the conditional probability of each variable X_i given the values of its immediate parents PA_i. (If a node has no parents, the probability table for it has just an unconditional probability, like "the chance of an earthquake is .01".)
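As a sketch of that product rule in Python, assuming the four-variable earthquake/recession/burglar/alarm model with the probabilities given in the bullet points (the constant and function names here are made up for illustration):

```python
from itertools import product

# Conditional probability tables, taken from the bullet points above.
P_EARTHQUAKE = 0.01
P_RECESSION = 0.33
P_BURGLAR_GIVEN_RECESSION = {True: 0.04, False: 0.01}
P_ALARM_GIVEN = {  # keyed by (burglar, earthquake)
    (True, True): 0.95,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.001,
}


def bernoulli(p_true, value):
    """Probability that a yes/no variable takes `value`, given p(yes)."""
    return p_true if value else 1.0 - p_true


def joint(e, r, b, a):
    """Product of each variable's probability given its immediate parents."""
    return (
        bernoulli(P_EARTHQUAKE, e)
        * bernoulli(P_RECESSION, r)
        * bernoulli(P_BURGLAR_GIVEN_RECESSION[r], b)
        * bernoulli(P_ALARM_GIVEN[(b, e)], a)
    )


BOOLS = (True, False)
total = sum(joint(*values) for values in product(BOOLS, repeat=4))
p_burglar = sum(joint(e, r, True, a) for e, r, a in product(BOOLS, repeat=3))
example = joint(e=False, r=False, b=True, a=True)

print(f"sum over all 16 settings = {total:.6f}")
print(f"p(burglar)               = {p_burglar:.4f}")
print(f"p(no quake, no recession, burglar, alarm) = {example:.5f}")
```

Eight numbers in the conditional tables are enough to generate all sixteen entries of the joint distribution, which is the whole point of the factorization.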

This is a causal model because it corresponds to a world in which each event is directly caused by only a small set of other events, its parent nodes in the graph. In this model, a recession can indirectly cause an alarm to go off - the recession increases the probability of a burglar, who in turn sets off an alarm - but the recession only acts on the alarm through the intermediate cause of the burglar. (Contrast to a model where recessions set off burglar alarms directly.)

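[Diagram: Recession → Burglar → Alarm] vs. [Diagram: Recession → Burglar → Alarm, plus a direct Recession → Alarm arrow]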

The first diagram implies that once we already know whether or not there's a burglar, we don't learn anything more about the probability of a burglar alarm, if we find out that there's a recession:

$$p(a \mid b) = p(a \mid b, r)$$

This is a fundamental illustration of the locality of causality - once I know there's a burglar, I know everything I need to know to calculate the probability that there's an alarm. Knowing the state of Burglar screens off anything that Recession could tell me about Alarm - even though, if I didn't know the value of the Burglar variable, Recessions would appear to be statistically correlated with Alarms. The present screens off the past from the future; in a causal system, if you know the exact, complete state of the present, the state of the past has no further physical relevance to computing the future. It's how, in a system containing many correlations (like the recession-alarm correlation), it's still possible to compute each variable just by looking at a small number of immediate neighbors.
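Screening off can be checked numerically. The sketch below rebuilds the joint distribution from the model's conditional probabilities (same numbers as in the bullet points; the helper names `bern`, `joint`, and `prob` are illustrative) and compares what Recession tells us about Alarm with and without knowledge of Burglar.

```python
from itertools import product

BOOLS = (True, False)
P_ALARM_GIVEN = {  # keyed by (burglar, earthquake)
    (True, True): 0.95,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.001,
}


def bern(p_true, value):
    return p_true if value else 1.0 - p_true


def joint(e, r, b, a):
    """Joint probability under Recession -> Burglar -> Alarm <- Earthquake."""
    p_burglar = 0.04 if r else 0.01
    return bern(0.01, e) * bern(0.33, r) * bern(p_burglar, b) * bern(P_ALARM_GIVEN[(b, e)], a)


def prob(predicate):
    """Total probability of all settings (e, r, b, a) satisfying the predicate."""
    return sum(joint(*v) for v in product(BOOLS, repeat=4) if predicate(*v))


# Without knowing Burglar, Recession and Alarm look correlated.
p_a_given_r = prob(lambda e, r, b, a: a and r) / prob(lambda e, r, b, a: r)
p_a_given_not_r = prob(lambda e, r, b, a: a and not r) / prob(lambda e, r, b, a: not r)

# Once Burglar is known, Recession adds nothing.
p_a_given_b = prob(lambda e, r, b, a: a and b) / prob(lambda e, r, b, a: b)
p_a_given_b_r = prob(lambda e, r, b, a: a and b and r) / prob(lambda e, r, b, a: b and r)

print(f"p(a | r)    = {p_a_given_r:.4f}   p(a | not r) = {p_a_given_not_r:.4f}")
print(f"p(a | b)    = {p_a_given_b:.4f}   p(a | b, r)  = {p_a_given_b_r:.4f}")
```

The first pair of numbers differs; the second pair agrees up to floating-point rounding.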

Constraints like this are also how we can store a causal model - and much more importantly, learn a causal model - with many fewer parameters than the naked, raw, joint probability distribution.

Let's illustrate this using a simplified version of this graph, which only talks about earthquakes and recessions. We could consider three hypothetical causal diagrams over only these two variables:

[Diagram: Earthquake and Recession, no arrow between them] p(E&R) = p(E)p(R)

[Diagram: Earthquake → Recession] p(E&R) = p(E)p(R|E)

[Diagram: Recession → Earthquake] p(E&R) = p(R)p(E|R)

Let's consider the first hypothesis - that there are no causal arrows connecting earthquakes and recessions. If we build a causal model around this diagram, it has 2 real degrees of freedom - a degree of freedom for saying that the probability of an earthquake is, say, 29% (and hence that the probability of not-earthquake is necessarily 71%), and another degree of freedom for saying that the probability of a recession is 3% (and hence the probability of not-recession is constrained to be 97%).

On the other hand, the full joint probability distribution would have 3 degrees of freedom - a free choice of p(earthquake&recession), a choice of p(earthquake&¬recession), a choice of p(¬earthquake&recession), and then a constrained p(¬earthquake&¬recession) which must be equal to 1 minus the sum of the other three, so that all four probabilities sum to 1.0.

By the pigeonhole principle (you can't fit 3 pigeons into 2 pigeonholes) there must be some joint probability distributions which cannot be represented in the first causal structure. This means the first causal structure is falsifiable; there's survey data we can get which would lead us to reject it as a hypothesis. In particular, the first causal model requires:

$$p(E \,\&\, R) = p(E)\,p(R)$$

or equivalently

$$p(E \mid R) = p(E)$$

or equivalently

$$p(R \mid E) = p(R)$$

which is a conditional independence constraint - it says that learning about recessions doesn't tell us anything about the probability of an earthquake or vice versa. If we find that earthquakes and recessions are highly correlated in the observed data - if earthquakes and recessions go together, or earthquakes and the absence of recessions go together - it falsifies the first causal model.

For example, let's say that in your state, an earthquake is 0.1 probable per year and a recession is 0.2 probable. If we suppose that earthquakes don't cause recessions, earthquakes are not an effect of recessions, and that there aren't hidden aliens which produce both earthquakes and recessions, then we should find that years in which there are earthquakes and recessions happen around 0.02 of the time. If instead earthquakes and recessions happen 0.08 of the time, then the probability of a recession given an earthquake is 0.8 instead of 0.2, and we should much more strongly expect a recession any time we are told that an earthquake has occurred. Given enough samples, this falsifies the theory that these factors are unconnected; or rather, the more samples we have, the more we disbelieve that the two events are unconnected.

On the other hand, we can't tell apart the second two possibilities from survey data, because both causal models have 3 degrees of freedom, which is the size of the full joint probability distribution. (In general, fully connected causal graphs in which there's a line between every pair of nodes, have the same number of degrees of freedom as a raw joint distribution - and 2 nodes connected by 1 line are "fully connected".) We can't tell if earthquakes are 0.1 likely and cause recessions with 0.8 probability, or recessions are 0.2 likely and cause earthquakes with 0.4 probability (or if there are hidden aliens which on 6% of years show up and cause earthquakes and recessions with probability 1).
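To make the "can't tell them apart" claim concrete, here is a small sketch. It takes the joint distribution implied by the example numbers (p(E) = 0.1, p(R) = 0.2, p(E&R) = 0.08), reads off the parameters of an "earthquakes cause recessions" model and of a "recessions cause earthquakes" model, and rebuilds the joint from each. The function names `fit_two_node_model` and `rebuild` are hypothetical.

```python
# Joint over (earthquake, recession) implied by p(E)=0.1, p(R)=0.2, p(E&R)=0.08.
joint = {
    (True, True): 0.08,
    (True, False): 0.02,
    (False, True): 0.12,
    (False, False): 0.78,
}


def fit_two_node_model(joint, cause_index):
    """Read off p(cause) and p(effect | cause) for a cause -> effect model."""
    p_cause = sum(p for key, p in joint.items() if key[cause_index])
    p_effect_given = {}
    for c in (True, False):
        rows = {key: p for key, p in joint.items() if key[cause_index] == c}
        p_effect_given[c] = sum(p for key, p in rows.items() if key[1 - cause_index]) / sum(rows.values())
    return p_cause, p_effect_given


def rebuild(p_cause, p_effect_given, cause_index):
    """Multiply p(cause) * p(effect | cause) back into a joint table."""
    out = {}
    for c in (True, False):
        for eff in (True, False):
            key = (c, eff) if cause_index == 0 else (eff, c)
            pc = p_cause if c else 1.0 - p_cause
            pe = p_effect_given[c] if eff else 1.0 - p_effect_given[c]
            out[key] = pc * pe
    return out


quake_causes_recession = fit_two_node_model(joint, cause_index=0)
recession_causes_quake = fit_two_node_model(joint, cause_index=1)

joint_from_first = rebuild(*quake_causes_recession, cause_index=0)
joint_from_second = rebuild(*recession_causes_quake, cause_index=1)

print("E -> R parameters:", quake_causes_recession)
print("R -> E parameters:", recession_causes_quake)
print("same joint:", all(abs(joint_from_first[k] - joint_from_second[k]) < 1e-12 for k in joint))
```

Each model uses three free numbers, and both reproduce the same four joint probabilities, so no amount of survey data over just these two variables can favor one over the other.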

With larger universes, the difference between causal models and joint probability distributions becomes a lot more striking. If we're trying to reason about a million binary variables connected in a huge causal model, and each variable could have up to four direct 'parents' - four other variables that directly exert a causal effect on it - then the total number of free parameters would be at most... 16 million!

The number of free parameters in a raw joint probability distribution over a million binary variables would be $2^{1,000,000}$. Minus one.
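The two parameter counts can be compared with a couple of one-line functions. This is only a counting sketch; the function names are illustrative, and the causal-model figure is the upper bound from the text (every variable has exactly four binary parents), not a count for any particular graph.

```python
import math


def joint_free_parameters(n_binary_vars):
    """A raw joint table has 2^n entries, minus one for the sum-to-1 constraint."""
    return 2 ** n_binary_vars - 1


def causal_model_max_parameters(n_binary_vars, max_parents):
    """Each node needs at most one probability per combination of parent values."""
    return n_binary_vars * 2 ** max_parents


print(joint_free_parameters(2))                    # earthquake & recession
print(joint_free_parameters(40))                   # forty binary variables
print(causal_model_max_parameters(1_000_000, 4))   # a million variables, four parents each

# 2^1,000,000 is too large to print comfortably, so count its decimal digits instead.
digits_in_joint_count = int(1_000_000 * math.log10(2)) + 1
print(f"2^1,000,000 has about {digits_in_joint_count:,} decimal digits")
```

Sixteen million is an eight-digit number; the raw joint count has roughly three hundred thousand digits.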

So causal models which are less than fully connected - in which most objects in the universe are not the direct cause or direct effect of everything else in the universe - are very strongly falsifiable; they only allow probability distributions (hence, observed frequencies) in an infinitesimally tiny range of all possible joint probability tables. Causal models very strongly constrain anticipation - disallow almost all possible patterns of observed frequencies - and gain mighty Bayesian advantages when these predictions come true.

To see this effect at work, let's consider the three variables Recession, Burglar, and Alarm.

| Alarm | Burglar | Recession | Probability |
|---|---|---|---|
| Y | Y | Y | .012 |
| N | Y | Y | .0013 |
| Y | N | Y | .00287 |
| N | N | Y | .317 |
| Y | Y | N | .003 |
| N | Y | N | .000333 |
| Y | N | N | .00591 |
| N | N | N | .654 |

All three variables seem correlated to each other when considered two at a time. For example, if we consider Recessions and Alarms, they should seem correlated because recessions cause burglars which cause alarms. If we learn there was an alarm, for example, we conclude it's more probable that there was a recession. So since all three variables are correlated, can we distinguish between, say, these three causal models?

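[Diagrams: (1) Recession → Burglar → Alarm; (2) Alarm → Burglar ← Recession; (3) Recession → Alarm → Burglar]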

Yes we can! Among these causal models, the prediction which only the first model makes, which is not shared by either of the other two, is that once we know whether a burglar is there, we learn nothing more about whether there was an alarm by finding out that there was a recession, since recessions only affect alarms through the intermediary of burglars:

$$p(a \mid b) = p(a \mid b, r)$$

But the third model, in which recessions directly cause alarms, which only then cause burglars, does not have this property. If I know that a burglar has appeared, it's likely that an alarm caused the burglar - but it's even more likely that there was an alarm, if there was a recession around to cause the alarm! So the third model predicts:

$$p(a \mid b) \neq p(a \mid b, r)$$

And in the second model, where alarms and recessions both cause burglars, we again don't have the conditional independence. If we know that there's a burglar, then we think that either an alarm or a recession caused it; and if we're told that there's an alarm, we'd conclude it was less likely that there was a recession, since the recession had been explained away.

(This may seem a bit clearer by considering the scenario B->A<-E, where burglars and earthquakes both cause alarms. If we're told the value of the bottom node, that there was an alarm, the probability of there being a burglar is not independent of whether we're told there was an earthquake - the two top nodes are not conditionally independent once we condition on the bottom node.)
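Here is a rough check of the first model's prediction against the Alarm/Burglar/Recession table above. The table entries are rounded and do not sum to exactly 1, so the sketch only looks for approximate agreement; the helper name `mass` is made up for this example.

```python
# Probabilities keyed by (alarm, burglar, recession), copied from the table above.
table = {
    (True, True, True): 0.012,
    (False, True, True): 0.0013,
    (True, False, True): 0.00287,
    (False, False, True): 0.317,
    (True, True, False): 0.003,
    (False, True, False): 0.000333,
    (True, False, False): 0.00591,
    (False, False, False): 0.654,
}


def mass(predicate):
    """Total probability of the rows (alarm, burglar, recession) matching the predicate."""
    return sum(p for key, p in table.items() if predicate(*key))


# Two at a time, Recession and Alarm look correlated.
p_r = mass(lambda a, b, r: r) / mass(lambda a, b, r: True)
p_r_given_a = mass(lambda a, b, r: r and a) / mass(lambda a, b, r: a)

# Given Burglar, Recession should tell us (almost) nothing more about Alarm.
p_a_given_b_r = mass(lambda a, b, r: a and b and r) / mass(lambda a, b, r: b and r)
p_a_given_b_not_r = mass(lambda a, b, r: a and b and not r) / mass(lambda a, b, r: b and not r)

print(f"p(r) = {p_r:.3f}   p(r | a) = {p_r_given_a:.3f}")
print(f"p(a | b, r) = {p_a_given_b_r:.3f}   p(a | b, not r) = {p_a_given_b_not_r:.3f}")
```

Learning about an alarm raises the probability of a recession considerably, yet among burglary cases the alarm rate is about 90% with or without a recession, which is the pattern the first diagram predicts.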

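On the other hand, we can't tell the difference between:

[Diagram: Recession → Burglar → Alarm] vs. [Diagram: Recession ← Burglar ← Alarm] vs. [Diagram: Recession ← Burglar → Alarm]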

using only this data and no other variables, because all three causal structures predict the same pattern of conditional dependence and independence - three variables which all appear mutually correlated, but Alarm and Recession become independent once you condition on Burglar.

Being able to read off patterns of conditional dependence and independence is an art known as "D-separation", and if you're good at it you can glance at a diagram like this...

[Diagram: Season → Sprinkler and Season → Rain; Sprinkler → Wet ← Rain; Wet → Slippery]

...and see that, once we already know the Season, whether the Sprinkler is on and whether it is Raining are conditionally independent of each other - if we're told that it's Raining we conclude nothing about whether or not the Sprinkler is on. But if we then further observe that the sidewalk is Slippery, then Sprinkler and Rain become conditionally dependent once more, because if the Sidewalk is Slippery then it is probably Wet and this can be explained by either the Sprinkler or the Rain but probably not both, i.e. if we're told that it's Raining we conclude that it's less likely that the Sprinkler was on.

Okay, back to the obesity-exercise-Internet example. You may recall that we had the following observed frequencies:

| Overweight | Exercise | Internet | # |
|---|---|---|---|
| Y | Y | Y | 1,119 |
| Y | Y | N | 16,104 |
| Y | N | Y | 11,121 |
| Y | N | N | 60,032 |
| N | Y | Y | 18,102 |
| N | Y | N | 132,111 |
| N | N | Y | 29,120 |
| N | N | N | 155,033 |

Do you see where this is going?

"Er," you reply, "Maybe if I had a calculator and ten minutes... you want to just go ahead and spell it out?"

Sure! First, we marginalize over the 'exercise' variable to get the table for just weight and Internet use. We do this by taking the 1,119 people who are YYY, overweight and Reddit users and exercising, and the 11,121 people who are overweight and non-exercising and Reddit users, YNY, and adding them together to get 12,240 total people who are overweight Reddit users:

| Overweight | Internet | # |
|---|---|---|
| Y | Y | 12,240 |
| Y | N | 76,136 |
| N | Y | 47,222 |
| N | N | 287,144 |

"And then?"

Well, that suggests that the probability of using Reddit, given that your weight is normal, is the same as the probability that you use Reddit, given that you're overweight. 47,222 out of 334,366 normal-weight people use Reddit, and 12,240 out of 88,376 overweight people use Reddit. That's about 14% either way.
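The marginalization and the two Reddit rates can be reproduced in a few lines. This is a sketch over the made-up survey counts from the table; the variable and function names are illustrative.

```python
# Survey counts keyed by (overweight, exercise, internet), copied from the table above.
counts = {
    ("Y", "Y", "Y"): 1119,
    ("Y", "Y", "N"): 16104,
    ("Y", "N", "Y"): 11121,
    ("Y", "N", "N"): 60032,
    ("N", "Y", "Y"): 18102,
    ("N", "Y", "N"): 132111,
    ("N", "N", "Y"): 29120,
    ("N", "N", "N"): 155033,
}

# Marginalize over Exercise: add together rows that differ only in the exercise answer.
weight_internet = {}
for (overweight, _exercise, internet), n in counts.items():
    key = (overweight, internet)
    weight_internet[key] = weight_internet.get(key, 0) + n


def reddit_rate(overweight):
    """Fraction of the given weight group that uses Reddit."""
    users = weight_internet[(overweight, "Y")]
    return users / (users + weight_internet[(overweight, "N")])


print(weight_internet)
print(f"overweight:    {reddit_rate('Y'):.1%}")
print(f"normal weight: {reddit_rate('N'):.1%}")
```

Both rates come out near 14%, which is the (approximate) independence of weight and Internet use described above.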

"And so we conclude?"

Well, first we conclude it's not particularly likely that using Reddit causes weight gain, or that being overweight causes people to use Reddit:

[Diagrams: Internet → Overweight; Overweight → Internet]

If either of those causal links existed, those two variables should be correlated. We shouldn't find the lack of correlation or conditional independence that we just discovered.

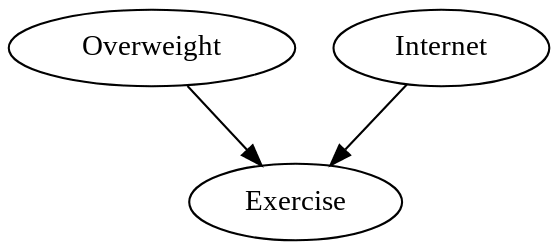

Next, imagine that the real causal graph looked like this:

[Diagram: Overweight ← Exercise → Internet]

In this graph, exercising causes you to be less likely to be overweight (due to the virtue theory of metabolism), and exercising causes you to spend less time on the Internet (because you have less time for it).

But in this case we should not see that the groups who are/aren't overweight have the same probability of spending time on Reddit. There should be an outsized group of people who are both normal-weight and non-Redditors (because they exercise), and an outsized group of non-exercisers who are overweight and Reddit-using.

So that causal graph is also ruled out by the data, as are others like:

Leaving only this causal graph:

[Diagram: Overweight → Exercise ← Internet]

Which says that weight and Internet use exert causal effects on exercise, but exercise doesn't causally affect either.
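A graph in which weight and Internet use both point into exercise makes two further predictions we can look for in the counts: exercise should be correlated with each of the other two variables, and weight and Internet use, though independent overall, can become dependent once we look inside an exercise group. The sketch below checks both on the made-up survey data; the helper names are illustrative.

```python
# Survey counts keyed by (overweight, exercise, internet), copied from the table above.
counts = {
    ("Y", "Y", "Y"): 1119,
    ("Y", "Y", "N"): 16104,
    ("Y", "N", "Y"): 11121,
    ("Y", "N", "N"): 60032,
    ("N", "Y", "Y"): 18102,
    ("N", "Y", "N"): 132111,
    ("N", "N", "Y"): 29120,
    ("N", "N", "N"): 155033,
}


def exercise_rate(position, answer):
    """Fraction who exercise among people whose answer at `position` is `answer`.

    position 0 is Overweight, position 2 is Internet.
    """
    group = {key: n for key, n in counts.items() if key[position] == answer}
    return sum(n for key, n in group.items() if key[1] == "Y") / sum(group.values())


def reddit_rate(overweight, exercise):
    """Fraction using Reddit within one (overweight, exercise) cell."""
    users = counts[(overweight, exercise, "Y")]
    return users / (users + counts[(overweight, exercise, "N")])


print(f"exercise | overweight: {exercise_rate(0, 'Y'):.1%}   | normal weight: {exercise_rate(0, 'N'):.1%}")
print(f"exercise | Reddit:     {exercise_rate(2, 'Y'):.1%}   | no Reddit:     {exercise_rate(2, 'N'):.1%}")
for exercise in ("Y", "N"):
    print(
        f"exercise={exercise}: Reddit | overweight = {reddit_rate('Y', exercise):.1%}, "
        f"Reddit | normal weight = {reddit_rate('N', exercise):.1%}"
    )
```

With these made-up counts, overweight people and Reddit users both exercise less, and among exercisers the Reddit rate differs noticeably by weight (roughly 6% versus 12%), while among non-exercisers the two rates are close.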

All this discipline was invented and systematized by Judea Pearl, Peter Spirtes, Thomas Verma, and a number of other people in the 1980s and you should be quite impressed by their accomplishment, because before then, inferring causality from correlation was thought to be a fundamentally unsolvable problem. The standard volume on causal structure is Causality by Judea Pearl.

Causal models (with specific probabilities attached) are sometimes known as "Bayesian networks" or "Bayes nets", since they were invented by Bayesians and make use of Bayes's Theorem. They have all sorts of neat computational advantages which are far beyond the scope of this introduction - e.g. in many cases you can split up a Bayesian network into parts, put each of the parts on its own computer processor, and then update on three different pieces of evidence at once using a neatly local message-passing algorithm in which each node talks only to its immediate neighbors and when all the updates are finished propagating the whole network has settled into the correct state. For more on this see Judea Pearl's Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference which is the original book on Bayes nets and still the best introduction I've personally happened to read.

[1] Somewhat to my own shame, I must admit to ignoring my own observations in this department - even after I saw no discernible effect on my weight or my musculature from aerobic exercise and strength training 2 hours a day 3 times a week, I didn't really start believing that the virtue theory of metabolism was wrong [2] until after other people had started the skeptical dogpile.

[2] I should mention, though, that I have confirmed a personal effect where eating enough cookies (at a convention where no protein is available) will cause weight gain afterward. There's no other discernible correlation between my carbs/protein/fat allocations and weight gain, just that eating sweets in large quantities can cause weight gain afterward. This admittedly does bear with the straight-out virtue theory of metabolism, i.e., eating pleasurable foods is sinful weakness and hence punished with fat.

[3] Or there might be some hidden third factor, a gene which causes both fat and non-exercise. By Occam's Razor this is more complicated and its probability is penalized accordingly, but we can't actually rule it out. It is obviously impossible to do the converse experiment where half the subjects are randomly assigned lower weights, since there's no known intervention which can cause weight loss.

Mainstream status: This is meant to be an introduction to completely bog-standard Bayesian networks, causal models, and causal diagrams. Any departures from mainstream academic views are errors and should be flagged accordingly.

Part of the sequence Highly Advanced Epistemology 101 for Beginners

Next post: "Stuff That Makes Stuff Happen"

Previous post: "The Fabric of Real Things"

297 comments


comment by Vaniver · 2012-10-12T03:22:19.128Z

It's not clear to me that the virtue theory of metabolism is a good example for this post, since it's likely to be highly contentious.

↑ comment by tgb · 2012-10-13T02:19:59.357Z

It seems clear to me that it is a very bad example. I find that consistently the worst part of Eliezer's non-fiction writing is that he fails to separate contentious claims from writings on unrelated subjects. Moreover, he usually discards the traditional view as ridiculous rather than admitting that its incorrectness is extremely non-obvious. He goes so far in this piece as to give the standard view a straw-man name and to state only the most laughable of its proponents' justifications. This mars an otherwise excellent piece and I am unwilling to recommend this article to those who are not already reading LW.

↑ comment by Scott Alexander (Yvain) · 2012-10-13T04:30:10.943Z

Yeah, I didn't even mind the topic, but I thought this particular sentence was pretty sketchy:

in the virtue theory of metabolism, lack of exercise actually causes weight gain due to divine punishment for the sin of sloth.

This sounds like a Fully General Mockery of any claim that humans can ever affect outcomes. For example:

in the virtue theory of traffic, drinking alcohol actually causes accidents due to divine punishment for the sin of intemperance

in the virtue theory of conception, unprotected sex actually causes pregnancy due to divine punishment for the sin of lust

And selectively applied Fully General Mockeries seem pretty Dark Artsy.

↑ comment by A1987dM (army1987) · 2012-10-13T10:09:09.784Z

in the virtue theory of traffic, drinking alcohol actually causes accidents due to divine punishment for the sin of intemperance

Of course not! The real reason drinkers cause more accidents is that low-conscientiousness people are both more likely to drink before driving and more likely to drive recklessly. (The impairment of reflexes due to alcohol does itself have an effect, but it's not much larger than that due to e.g. sleep deprivation.) If a high-conscientiousness person was randomly assigned to the “drunk driving” condition, they would drive extra cautiously to compensate for their impairment. ;-)

(I'm exaggerating for comical effect, but I do believe a weaker version of this.)

↑ comment by A1987dM (army1987) · 2012-10-13T08:24:03.570Z

“Extremely non-obvious”? Have you looked at how many calories one hour of exercise burns, and compared that to how many calories foodstuffs common in the First World contain?

↑ comment by Vaniver · 2012-10-13T15:33:14.066Z

I agree that focusing on input has far higher returns than focusing on output. Simple calorie comparison predicts it, and in my personal experience I've noted small appearance and weight changes after changes in exercise level and large appearance and weight changes after changes in intake level. That said, the traditional view- "eat less and exercise more"- has the direction of causation mostly right for both interventions and to represent it as just "exercise more" seems mistaken.

↑ comment by [deleted] · 2012-12-17T23:39:56.663Z

I was also distracted by the footnotes, since even though I found them quite funny, [3] at least is obviously wrong: "there's no known intervention which can cause weight loss." Sure there is -- the effectiveness of bariatric surgery is quite well evidenced at this point (http://en.wikipedia.org/wiki/Bariatric_surgery#Weight_loss).

I generally share Eliezer's assessment of the state of conventional wisdom in dietary science (abysmal), but careless formulations like this one are -- well, distracting.

↑ comment by A1987dM (army1987) · 2012-12-18T11:08:37.197Z

Also, even if he meant non-surgical interventions, it should be “which can reliably cause long-term weight loss” -- there are people who lose weight by dieting, and a few of them don't even gain it back.

↑ comment by johnlawrenceaspden · 2012-10-12T19:27:49.031Z

I think that's why it's a good example. It induces genuine curiosity about the truth, and about the method.

↑ comment by Vaniver · 2012-10-12T20:49:40.452Z

It induces genuine curiosity about the truth

I suspect this depends on the handling of the issue. Eliezer presenting his model of the world as "common sense," straw manning the alternative, and then using fake data that backs up his preferences is, frankly, embarrassing.

This is especially troublesome because this is an introductory explanation to a technical topic- something Eliezer has done well before- and introductory explanations are great ways to introduce people to Less Wrong. But how can I send this to people I know who will notice the bias in the second paragraph and stop reading because that's negative evidence about the quality of the article? How can I send this to people I know who will ask why he's using two time-variant variables as single acyclic nodes, rather than a ladder (where exercise and weight at t-1 both cause exercise at t and weight at t)?

What would it look like to steel man the alternative? One of my physics professors called 'calories in-calories out=change in fat' the "physics diet," since it was rooted in conservation of energy; that seems like a far better name. Like many things in physics, it's a good first order approximation to the engineering reality, but there are meaningful second order terms to consider. "Calories in" is properly "calories absorbed" not "calories put into your mouth"- though we'll note it's difficult to absorb more calories than you put into your mouth. Similarly, calories out is non-trivial to measure- current weight and activity level can give you a broad guess, but it can be complicated by many things, like ambient temperature! Any attempt we make to control calories in and calories out will have to be passed through the psychology and physiology of the person in question, making them even more difficult to control in the field.

and about the method.

Compare the volume of discussion of the method and the overweight-exercise link in the comments.

Replies from: thomblake, Eliezer_Yudkowsky↑ comment by thomblake · 2012-10-12T21:00:15.703Z · LW(p) · GW(p)

How can I send this to people I know who will ask why he's using two time-variant variables as single acyclic nodes, rather than a ladder (where exercise and weight at t-1 both cause exercise at t and weight at t)?

Why do you need to send this article to people who could ask that? If you're saying "Oh, this should actually be modeled using causal markov chains..." then this is probably too basic for you.

Replies from: Vaniver↑ comment by Vaniver · 2012-10-12T21:05:59.076Z · LW(p) · GW(p)

Why do you need to send this article to people who could ask that?

Because I'm still a grad student, most of those people that I know are routinely engaged in teaching these sorts of concepts, and so will find articles like this useful for pedagogical reasons.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-12T22:05:37.305Z · LW(p) · GW(p)

How can I send this to people I know who will ask why he's using two time-variant variables as single acyclic nodes

This is not intended for readers who already know that much about causal models, btw, it's a very very very basic intro.

Replies from: EricHerboso↑ comment by EricHerboso · 2012-10-13T02:55:00.666Z · LW(p) · GW(p)

I'm torn. On the one hand, using the method to explain something the reader probably was not previously aware of is an awesome technique that I truly appreciate. Yet Vaniver's point that controversial opinions should not be unnecessarily put into introductory sequence posts makes sense. It might turn off readers who would otherwise learn from the text, like nyan sandwich.

In my opinion, the best fix would be to steelman the argument as much as possible. Call it the physics diet, not the virtue-theory of metabolism. Add in an extra few sentences that really buff up the basics of the physics diet argument. And, at the end, include a note explaining why the physics diet doesn't work (appetite increases as exercise increases).

Replies from: Vaniver, army1987↑ comment by Vaniver · 2012-10-13T03:58:20.857Z · LW(p) · GW(p)

The point Eliezer is addressing is the one that RichardKennaway brought up separately. Causal models can still function with feedback (in Causality, Pearl works through an economic model where price and quantity both cause each other, and have their own independent causes), but it's a bit more bothersome.

A model where the three are one-time events- like, say, whether a person has a particular gene, whether or not they were breastfed, and their height as an adult- won't have the problem of being cyclic, but will have the pedagogical problem that the causation is obvious from the timing of the events.

One could have, say, the weather witch's prediction of whether or not there will be rain, whether or not you brought an umbrella with you, and whether or not it rained. Aside from learning, this will be an acyclic system that has a number of plausible underlying causal diagrams (with the presence of the witch making the example clearly fictional and muddying our causal intuitions, so we can only rely on the math).

In my opinion, the best fix would be to steelman the argument as much as possible.

The concept of inferential distance suggests to me that posts should try and make their pathways as short and straight as possible. Why write a double-length post that explains both causal models and metabolism, when you could write a single-length post that explains only causal models? (And, if metabolism takes longer to discuss than causal models, the post will mostly be about the illustrative detour, not the concept itself!)

Replies from: MC_Escherichia, EricHerboso↑ comment by MC_Escherichia · 2012-10-14T20:55:12.606Z · LW(p) · GW(p)

won't have the problem of being acyclic

Should that be "cyclic"? I take it from Richard's post that "acyclic" is what we want.

Replies from: Vaniver↑ comment by EricHerboso · 2012-10-13T19:19:53.809Z · LW(p) · GW(p)

The concept of inferential distance suggests to me that posts should try and make their pathways as short and straight as possible. Why write a double-length post that explains both causal models and metabolism, when you could write a single-length post that explains only causal models? (And, if metabolism takes longer to discuss than causal models, the post will mostly be about the illustrative detour, not the concept itself!)

You've convinced me. I now agree that EY should go back and edit the post to use a different more conventional example.

↑ comment by A1987dM (army1987) · 2012-10-13T08:13:09.981Z · LW(p) · GW(p)

In my opinion, the best fix would be to steelman the argument as much as possible. Call it the physics diet, not the virtue-theory of metabolism.

“Physics diet” and “virtue-theory of metabolism” are not steelman and strawman of each other; they are quite different things. Proponents of the physics diet (e.g. John Walker) do not say that if you want to lose weight you should exercise more -- they say you should eat less. EDIT: the strawman of this would be the theory that “excessive eating actually causes weight gain due to divine punishment for the sin of gluttony” (inspired by Yvain's comment).

Seriously; that was intended to be an example. What's it matter whether the nodes are labelled “exercise/overweight/internet” or “foo/bar/baz”? (But yeah, Footnote 1 doesn't belong there, and Footnote 3 might mention eating.)

↑ comment by handoflixue · 2012-10-12T19:08:31.648Z · LW(p) · GW(p)

Taking a "contentious" point and resolving it in to a settled fact made the whole article vastly more engaging to me. It also struck me as an elegant demonstration of the value of the tool: It didn't simply introduce the concept, it used it to accomplish something worthwhile.

Replies from: Vaniver↑ comment by Vaniver · 2012-10-12T19:59:07.918Z · LW(p) · GW(p)

Taking a "contentious" point and resolving it in to a settled fact

! From the article:

(which is totally made up, so don't go actually believing the conclusion I'm about to draw)

Replies from: handoflixue, handoflixue

↑ comment by handoflixue · 2012-10-12T20:19:08.118Z · LW(p) · GW(p)

Eliezer's data is made up, but all the not-made-up research I've seen supports his actual conclusion. The net emotional result was the same for me as if he'd used the actual research, since my brain could substitute it in.

Perhaps I am weird in having this emotional link, or perhaps I am simply more familiar with the not-made-up research than you.

Replies from: Vaniver↑ comment by Vaniver · 2012-10-12T21:04:33.971Z · LW(p) · GW(p)

The net emotional result was the same for me as if he'd used the actual research, since my brain could substitute it in.

I understand. I think it's important to watch out for these sorts of illusions of transparency, though, especially when dealing with pedagogical material. One of the heuristics I've been using is "who would I not recommend this to?", because that will use social modules my brain is skilled at using to find holes and snags in the article. I don't know how useful that heuristic will be to others, and welcome the suggestion of others.

perhaps I am simply more familiar with the not-made-up research than you.

I am not an expert in nutritional science, but it appears to me that there is controversy among good nutritionists. This post also aided my understanding of the issue. I also detail some more of my understanding in this comment down another branch.

EDIT: Also, doing some more poking around now, this seems relevant.

Replies from: handoflixue↑ comment by handoflixue · 2012-10-12T22:34:03.247Z · LW(p) · GW(p)

Ahh, that heuristic makes sense! I wasn't thinking in that context :)

↑ comment by handoflixue · 2012-10-12T20:20:24.419Z · LW(p) · GW(p)

P.S. When quoting two people, it can be useful to attribute the quotes. I initially thought the second quote was your way of doing a snarky editorial comment on what I'd said, not quoting the article...

Replies from: Vaniver

comment by IlyaShpitser · 2012-10-12T04:06:38.652Z · LW(p) · GW(p)

Hi Eliezer,

Thanks for writing this! A few comments about this article (mostly minor, with one exception).

The famous statistician Fischer, who was also a smoker, testified before Congress that the correlation between smoking and lung cancer couldn't prove that the former caused the latter.

Fisher was specifically worried about hidden common causes. Fisher was also the one who brought the concept of a randomized experiment into statistics. Fisher was "curmudgeony," but it is not quite fair to use him as an exemplar of the "keep causality out of our statistics" camp.

Causal models (with specific probabilities attached) are sometimes known as "Bayesian networks" or "Bayes nets", since they were invented by Bayesians and make use of Bayes's Theorem.

Graphical causal models and Bayesian networks are not the same thing (this is a common misconception). A distribution that factorizes according to a DAG is a Bayesian network (this is just a property of a distribution -- nothing about causality). You can further say that a graphical model is causal if an additional set of properties holds. For example, you can (loosely) say that in a causal model all parents are "direct causes." If you want to say that formally, you would talk about the truncated factorization and do(.). Without interventions there is no interventionist causal model.

All this discipline was invented and systematized by Judea Pearl, Peter Spirtes, Thomas Verma, and a number of other people in the 1980s and you should be quite impressed by their accomplishment, because before then, inferring causality from correlation was thought to be a fundamentally unsolvable problem.

I often find myself in a weird position of having to point folks to people other than Pearl. I think it's commendable that you looked into other people in the field. The big early names in causality that you did not mention are Haavelmo (1950s) and Sewall Wright (this guy is amazing -- he figured so many things out correctly in the 1920s). Special cases of non-causal graphical models (Ising models, Hidden Markov models, etc.), along with factorizations and propagation algorithms were known long before Pearl in other communities.

P.S. Since I am in Cali.: if folks at SI are interested in new developments on the "learning causal structure from data" front, I could be bribed by the Cheeseboard to come by and give a talk.

Replies from: Emile↑ comment by Emile · 2012-10-12T10:51:45.917Z · LW(p) · GW(p)

Hi Eliezar,

(it's Eliezer)

Replies from: IlyaShpitser↑ comment by IlyaShpitser · 2012-10-12T21:05:54.896Z · LW(p) · GW(p)

Done, sorry.

Replies from: SilasBarta↑ comment by SilasBarta · 2012-11-03T20:38:59.562Z · LW(p) · GW(p)

In fairness, a lot of people seem to pronounce it Eliezar for some reason.

comment by Richard_Kennaway · 2012-10-12T07:00:18.985Z · LW(p) · GW(p)

That's a clear outline of the theory. I just want to note that the theory itself makes some assumptions about possible patterns of causation, even before you begin to select which causal graphs are plausible candidates for testing. Pearl himself stresses that without putting causal information in, you can't get causal information out from purely observational data.

For example, if overweight causes lack of exercise and lack of exercise causes overweight, you don't have an acyclic graph. Acyclicity of causation is one of the background assumptions here. Acyclicity of causation is reasonable when talking about point events in a universe without time-like loops. However, "weight" and "exercise level" are temporally extended processes, which makes acyclicity a strong assumption.
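A small sketch of the acyclicity point, in Python (3.9+ for `graphlib`). The node names and the helpers `is_acyclic` and `ladder` are made up for illustration. The collapsed two-node graph with mutual causation fails a topological sort, while unrolling the same variables over discrete time steps, with each step depending only on the previous one, gives a graph that passes.

```python
from graphlib import TopologicalSorter, CycleError

def is_acyclic(parents):
    """parents maps each node to the set of its direct causes."""
    try:
        # static_order() raises CycleError if the graph contains a cycle.
        list(TopologicalSorter(parents).static_order())
        return True
    except CycleError:
        return False

# "Overweight causes lack of exercise and lack of exercise causes overweight",
# drawn as two single nodes: this is a cycle, not a DAG.
collapsed = {"weight": {"exercise"}, "exercise": {"weight"}}

def ladder(steps):
    """Unroll in time: exercise and weight at t-1 both cause exercise and weight at t."""
    parents = {}
    for t in range(1, steps + 1):
        for var in ("exercise", "weight"):
            parents[(var, t)] = {("exercise", t - 1), ("weight", t - 1)}
    return parents

print(is_acyclic(collapsed))   # the two-node version
print(is_acyclic(ladder(3)))   # the time-unrolled version
```

Whether the unrolled graph is an adequate model is a separate question; the sketch only shows that it satisfies the DAG assumption where the collapsed graph does not.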

Replies from: Morendil, Richard_Kennaway, SilasBarta, SilasBarta↑ comment by Richard_Kennaway · 2012-10-12T08:46:19.568Z · LW(p) · GW(p)

Pearl himself stresses that without putting causal information in, you can't get causal information out from purely observational data.

Koan: How, then, does the process of attributing causation get started?

Replies from: Antisuji, AlanCrowe, roryokane↑ comment by Antisuji · 2012-10-14T19:53:09.117Z · LW(p) · GW(p)

My answer:

First, notice a situation that occurs many times. Then pay attention to the ways in which things are different from one iteration to the next. At this point, and here is where causal information begins, if some of the variables represent your own behavior, you can systematically intervene in the situation by changing those behaviors. For cleanest results, contrive a controlled experiment that is analogous to the original situation.

In short, you insert causal information by intervening.

This of course requires you to construct a reference class of situations that are substantially similar to one another, but humans seem to be pretty good at that within our domains of familiarity.

By the way, thank you for explaining the underlying assumption of acyclicity. I've been trying to internalize the math of causal calculus and it bugged me that cyclic causes weren't allowed. Now I understand that it is a simplification and that the calculus isn't quite as powerful as I thought.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2012-10-14T21:01:26.478Z · LW(p) · GW(p)

I don't have an answer to my own koan, but this was one of the possibilities that I thought of:

In short, you insert causal information by intervening.

But how does one intervene? By causing some variable to take some value, while obstructing the other causal influences on it. So causal knowledge is already required before one can intervene. This is not a trivial point -- if the knowledge is mistaken, the intervention may not be successful, as I pointed out with the example of trying to warm a room thermostat by placing a candle near it.

Replies from: learnmethis↑ comment by learnmethis · 2012-10-15T15:54:00.073Z · LW(p) · GW(p)

Causal knowledge is required to ensure success, but not to stumble across it. Over time, noticing (or stumbling across if you prefer) relationships between the successes stumbled upon can quickly coalesce into a model of how to intervene. Isn't this essentially how we believe causal reasoning originated? In a sense, all DNA is information about how to intervene that, once stumbled across, persisted due to its efficacy.

↑ comment by AlanCrowe · 2012-10-13T10:05:33.316Z · LW(p) · GW(p)

I think that one bootstraps the process with contrived situations designed to appeal to one's intuitions. For example, one attempts to obtain causal information through a randomised controlled trial. You mark the obverse face of a coin "treatment" and reverse face "control" and toss the coin to "randomly" assign your patients.

Let us briefly consider the absolute zero of no a priori knowledge at all. Perhaps the coin knows the prognosis of the patient and comes down "treatment" for patients with a good prognosis, intending to mislead you into believing that the treatment is the cause of good outcomes. Maybe, maybe not. Let's stop considering this because insanity is stalking us.

We are willing to take a stand. We know enough, a priori, to choose and operate a randomisation device and thus obtain a variable which is independent of all the others and causally connected to none of them. We don't prove this, we assume it. When we encounter a compulsive gambler, who believes in Lady Luck who is fickle and very likely is actually messing with us via the coin, we just dismiss his hypothesis. Life is short, one has to assume that certain obvious things are actually true in order to get started, and work up from there.

↑ comment by roryokane · 2012-10-13T00:22:14.431Z · LW(p) · GW(p)

My answer: Attributing causation is part of our human instincts. We are born with some desire to do it. We may develop that skill by reflecting on it during our lifetime.

(How did we humans develop that instinct? Evolution, probably. Humans who had mutated to reason about causality died less – for instance, they might have avoided drinking from a body of water after seeing something poisonous put in, because they reasoned that the poison addition would cause the water to be poisonous.)

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2012-10-13T06:58:10.211Z · LW(p) · GW(p)

This is a non-explanation, or rather, three non-explanations.

"Human nature does it" explains no more than "God does it".

"It's part of human nature because it must have been adaptive in the past" likewise. Causal reasoning works, but why does it work?

And "mutated to reason about causality" is just saying "genes did it", which is still not an advance on "God did it".

Replies from: chaosmosis↑ comment by chaosmosis · 2012-10-13T10:23:51.787Z · LW(p) · GW(p)

There isn't any better explanation. If you don't accept the idea of causality as given, you can never explain anything. Roryokane is using causality to explain how causality originated, and that's not a good way to go about proving the way causality works or anything but it is a good way of understanding why causality exists, or rather just accepting that we can never prove causality exists.

Our instincts are just wired to interpret causality that way, and that makes it a brute fact. You might as well claim that calling a certain color yellow and then saying it looks yellow as a result of human nature is a non-explanation, you might be technically right to do so but in that case then you're asking for answers you're never actually going to get.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2012-10-15T13:18:13.341Z · LW(p) · GW(p)

You might as well claim that calling a certain color yellow and then saying it looks yellow as a result of human nature is a non-explanation, you might be technically right to do so but in that case then you're asking for answers you're never actually going to get.

That would be a non-explanation, but a better explanation is in fact possible. You can look at the way that light is turned into neural signals by the eye, and discover the existence of red-green, blue-yellow, and light-dark axes, and there you have physiological justification for six of our basic colour words. (I don't know just how settled that story is, but it's settled enough to be literally textbook stuff.)

So, that is what a real explanation looks like. Attributing anything to "human nature" is even more wrong than attributing it to "God". At least we have some idea of what "God" would be if he existed, but "human nature" is a blank, a label papering over a void. How do Sebastian Thrun's cars drive themselves? Because he has integrated self-driving into their nature. How does opium produce sleep? By its dormitive nature. How do humans distinguish colours? By their human nature.

Replies from: chaosmosis↑ comment by chaosmosis · 2012-10-15T18:59:16.643Z · LW(p) · GW(p)

But causality is uniquely impervious to those kinds of explanations. You can explain why humans believe in causality in a physiological sense, but I didn't think that was what you were asking for. I thought you were asking for some overall metaphysical justification for causality, and there really isn't any. Causal reasoning works because it works; there's no other justification to be had for it.

↑ comment by SilasBarta · 2012-11-03T20:40:51.153Z · LW(p) · GW(p)

Pearl himself stresses that without putting causal information in, you can't get causal information out from purely observational data.

Where do you get this? My recall of Causality is that he specifically rejected the "no causes in, no causes out" view in favor of the "Occam's Razor in, some causes out" view.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2012-11-04T10:50:50.562Z · LW(p) · GW(p)

Yes, the Occamian view is in his book in section 2.3 (and still in the 2009 2nd edition). But that definition of "inferred causation" -- those arrows common to all causal models consistent with the statistical data -- depends on general causal assumptions, the usual ones being the DAG, Markov, and Faithfulness properties.

In other places, for example: "Causal inference in statistics: An overview", which is in effect the Cliff Notes version of his book, he writes:

"one cannot substantiate causal claims from associations alone, even at the population level -- behind every causal conclusion there must lie some causal assumption that is not testable in observational studies."

Here is a similar survey article from 2003, in which he writes that exact sentence, followed by:

"Nancy Cartwright (1989) expressed this principle as "no causes in, no causes out", meaning that we cannot convert statistical knowledge into causal knowledge."

Everywhere, he defines causation in terms of counterfactuals: claims about what would have happened had something been different, which, he says, cannot be expressed in terms of statistical distributions over observational data. Here is a long interview (both audio and text transcript) in which he recounts the whole course of his work.

Replies from: SilasBarta↑ comment by SilasBarta · 2012-11-04T19:18:33.920Z · LW(p) · GW(p)

In other places, for example: "Causal inference in statistics: An overview", which is in effect the Cliff Notes version of his book, he writes:

"one cannot substantiate causal claims from associations alone, even at the population level -- behind every causal conclusion there must lie some causal assumption that is not testable in observational studies."

Here is a similar survey article from 2003, in which he writes that exact sentence, followed by:

"Nancy Cartwright (1989) expressed this principle as "no causes in, no causes out", meaning that we cannot convert statistical knowledge into causal knowledge."

Interesting, but how do those files evade word searches for the parts you've quoted?

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2012-11-04T20:06:47.792Z · LW(p) · GW(p)

Interesting, but how do those files evade word searches for the parts you've quoted?

Dunno, not all PDFs are searchable and not all PDF viewers fail to make a pig's ear of searching. The quotes can be found on p.99 (the third page of the file) and pp.284-285 (6th-7th pages of the file) respectively.

OTOH, try Google.

↑ comment by SilasBarta · 2012-11-03T23:26:36.828Z · LW(p) · GW(p)

Btw, Scott Aaronson just recently posted the question of whether you would care about causality if you could only work with observational data (someone already linked this article in the comments) and I put up a comment with my summary of the LW position (plus some complexity-theoretic considerations).

Replies from: Pfft↑ comment by Pfft · 2012-11-04T22:28:36.423Z · LW(p) · GW(p)

I don't think that Bayesian networks implicitly contain the concept of causality.

Formally, a probability distribution is represented by a Bayesian network if it can be factored as a product of P(node | node's parents). But this is not unique: given one network you can create lots of other networks which also represent the same distribution by e.g. changing the direction of arrows as long as the independence properties from the graph stay the same (e.g. the graphs A -> B -> C and A <- B <- C can represent exactly the same class of probability distributions). Pearl distinguishes Bayesian networks from causal networks, which are Bayesian networks in which the arrows point in the direction of causality.

And of course, there are other sparse representations like Markov networks, which also incorporate independence assumptions but are undirected.

Replies from: SilasBarta↑ comment by SilasBarta · 2012-11-04T23:01:59.527Z · LW(p) · GW(p)

The non-uniqueness doesn't make causality absent or irrelevant; it just means there are multiple minimal representations that use causality. The causality arises in how your node connections are asymmetric. If the relativity of simultaneity (observers seeing the same events in a different time order) doesn't obviate causality, neither should the existence of multiple causal networks.

There are indeed equivalent models that use purely symmetric node connections (or none at all in the case of the superexponential pairwise conditional independence table across all variables), but (correct me if I'm wrong) by throwing away the information graphically represented by the arrows, you no longer have a maximally efficient encoding of the joint probability distribution (even though it's certainly not as bad as the superexponential table).

Replies from: Pfft↑ comment by Pfft · 2012-11-05T01:14:53.256Z · LW(p) · GW(p)

I guess there are two points here.

First, authors like Pearl do not use "causality" to mean just that there is a directed edge in a Bayesian network (i.e. that certain conditional independence properties hold). Rather, he uses it to mean that the model describes what happens under interventions. One can see the difference by comparing Rain -> WetGrass with WetGrass -> Rain (which are equivalent as Bayesian networks). Of course, maybe he is confused and the difference will dissolve under more careful consideration, but I think this shows one should be careful in claiming that Bayes networks encode our best understanding of causality.
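To make the Rain/WetGrass comparison concrete, here is a minimal Python sketch with a made-up joint distribution (the numbers and all function names are illustrative assumptions). Both arrow directions factor the same joint, so they agree on every observational question, but the truncated factorization for do(WetGrass=True) gives different answers for Rain depending on which direction the arrow points.

```python
# Made-up joint distribution over (rain, wet); numbers are illustrative only.
joint = {(True, True): 0.18, (True, False): 0.02,
         (False, True): 0.08, (False, False): 0.72}

RAIN, WET = 0, 1  # positions of the two variables in each key

def marginal(index, value):
    """P(variable at `index` = value)."""
    return sum(p for k, p in joint.items() if k[index] == value)

def conditional(t_index, t_value, g_index, g_value):
    """P(target = t_value | given = g_value)."""
    num = sum(p for k, p in joint.items()
              if k[t_index] == t_value and k[g_index] == g_value)
    return num / marginal(g_index, g_value)

def joint_rain_to_wet(r, w):
    """Rain -> WetGrass: P(r) P(w | r)."""
    return marginal(RAIN, r) * conditional(WET, w, RAIN, r)

def joint_wet_to_rain(r, w):
    """WetGrass -> Rain: P(w) P(r | w)."""
    return marginal(WET, w) * conditional(RAIN, r, WET, w)

def p_rain_do_wet_if_rain_causes_wet():
    """do(WetGrass=True) in Rain -> WetGrass: delete P(w | r); Rain keeps its marginal."""
    return marginal(RAIN, True)

def p_rain_do_wet_if_wet_causes_rain():
    """do(WetGrass=True) in WetGrass -> Rain: delete P(w); Rain follows P(r | w=True)."""
    return conditional(RAIN, True, WET, True)

print(p_rain_do_wet_if_rain_causes_wet())   # about 0.2
print(p_rain_do_wet_if_wet_causes_rain())   # about 0.69
```

The data alone cannot choose between the two interventional answers here; that choice is exactly the extra content a causal reading adds to the graph.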

Second, do we need Bayesian networks to economically represent distributions? This is slightly subtle.

We do not need the directed arrows when representing a particular distribution. For example, suppose a distribution P(A,B,C) is represented by the Bayesian network A -> B <- C. Expanding the definition, this means that the joint distribution can be factored as

P(A=a,B=b,C=c) = P1(A=a) P2(B=b|A=a,C=c) P3(C=c)

where P1 and P3 are the marginal distributions of A and C, and P2 is the conditional distribution of B. So the data we needed to specify P were two one-column tables specifying P1 and P3, and a three-column table specifying P2(b|a,c) for all values of a,b,c. But now note that we do not gain very much by knowing that these are probability distributions. To save space it is enough to note that P factors as

P(A=a,B=b,C=c) = F1(a) F2(b,a,c) F3(c)

for some real-valued functions F1, F2, and F3. In other words, that P is represented by a Markov network A - B - C. The directions on the edges were not essential.

And indeed, typical algorithms for inference given a probability distribution, such as belief propagation, do not make use of the Bayesian structure. They work equally well for directed and undirected graphs.

Rather, the point of Bayesian versus Markov networks is that the class of probability distributions that can be represented by them are different. So they are useful when we try to learn a probability distribution, and want to cut down the search space by constraining the distribution by some independence relations that we know a priori.

Bayesian networks are popular because they let us write down many independence assumptions that we know hold for practical problems. However, we then have to ask how we know those particular independence relations hold. And that's because they correspond to causal relations! The reason Bayesian networks are popular with human researchers is that they correspond well with the notion of causality that humans use. We don't know that the Armchairians would also find them useful.

Replies from: Richard_Kennaway, SilasBarta↑ comment by Richard_Kennaway · 2012-11-05T09:16:21.650Z · LW(p) · GW(p)

To save space it is enough to note that P factors as

P(A=a,B=b,C=c) = F1(a) F2(b,a,c) F3(c)

for some real-valued functions F1, F2, and F3. In other words, that P is represented by a Markov network A - B - C. The directions on the edges were not essential.

Can't the directions be recovered automatically from that expression, though? That is, discarding the directions from the notation of conditional probabilities doesn't actually discard them.

The reconstruction algorithm would label every function argument as "primary" or "secondary", begin with no arguments labelled, and repeatedly do this:

For every function with no primary variable and exactly one unlabelled variable, label that variable as primary and all of its occurrences as arguments to other functions as secondary.

When all arguments are labelled, make a graph of the variables with an arrow from X to Y whenever X and Y occur as arguments to the same function, X as secondary and Y as primary. If the functions F1 F2 etc. originally came from a Bayesian network, won't this recover that precise network?

If the original graph was A <- B -> C, the expression would have been F1(a,b) F2(b) F3(c,b).
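The labelling procedure above can be sketched in Python as follows. This is one reading of the description, not a tested general algorithm: `recover_arrows` is a made-up name, factors are given as tuples of variable names, and the input is assumed to come from a Bayesian network with exactly one factor per variable. On other inputs the loop may stop with some arguments still unlabelled.

```python
def recover_arrows(factors):
    """factors: list of tuples of variable names, one tuple per factor F_i.
    Returns the set of (parent, child) arrows found by the labelling procedure."""
    # One label table per factor; None means "not yet labelled".
    labels = [dict.fromkeys(f) for f in factors]
    changed = True
    while changed:
        changed = False
        for i, lab in enumerate(labels):
            if "primary" in lab.values():
                continue  # this factor already has its primary variable
            unlabelled = [v for v, tag in lab.items() if tag is None]
            if len(unlabelled) != 1:
                continue
            var = unlabelled[0]
            lab[var] = "primary"
            # Every occurrence of var in the other factors becomes secondary.
            for j, other in enumerate(labels):
                if j != i and var in other:
                    other[var] = "secondary"
            changed = True
    arrows = set()
    for lab in labels:
        for child, tag in lab.items():
            if tag == "primary":
                arrows.update((v, child) for v, t in lab.items() if t == "secondary")
    return arrows

# Collider A -> B <- C, written as F1(a) F2(b,a,c) F3(c).
print(recover_arrows([("a",), ("b", "a", "c"), ("c",)]))
# Fork A <- B -> C, written as F1(a,b) F2(b) F3(c,b).
print(recover_arrows([("a", "b"), ("b",), ("c", "b")]))
```

On these two inputs the sketch returns the arrows of the collider and the fork respectively. It relies on knowing which variables appear together in each factor, which is information about how the factors were written down rather than about the distribution itself.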

Replies from: Pfft↑ comment by Pfft · 2012-11-05T17:04:22.613Z · LW(p) · GW(p)

If the functions F1 F2 etc. originally came from a Bayesian network, won't this recover that precise network?

I think this is right, if you know that the factors were learned by fitting them to a Bayesian network, you can recover what that network must have been. And you can go even further, if you only have a joint distribution you can use the techniques of the original article to see which Bayesian networks could be consistent with it.

But there is a separate question about why we are interested in Bayesian networks in the first place. SilasBarta seemed to claim that you are naturally led to them if you are interested in representing probability distributions efficiently. But for that purpose (I claim), you only need the idea of factors, not the directed graph structure. E.g. a probability distribution which fits the (equivalent) Bayesian networks A -> B -> C or A <- B <- C or A <- B -> C can be efficiently represented as F1(a,b) F2(b,c). You would not think of representing it as F1(a) F2(a,b) F3(b,c) unless you were already interested in causality.

↑ comment by SilasBarta · 2012-11-05T01:23:13.950Z · LW(p) · GW(p)

In other words, that P is represented by a Markov network A - B - C. The directions on the edges were not essential.

On the contrary, they are important and store information about the relationships that saves you time and space. Like I said in my linked comment, the direction of the arrows between A,C and B tell you whether conditioning on B (perhaps by separating it out into buckets of various values) creates or destroys mutual information between A and C. That saves you from having to explicitly write out all the combinations of conditional (in)dependence.
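A minimal numerical sketch of the "creates or destroys" claim, in Python with made-up binary distributions (all probabilities and function names are illustrative assumptions). In the chain A -> B -> C, A and C are dependent until you condition on B; in the collider A -> B <- C, they are independent until you condition on B.

```python
from itertools import product

VALUES = (0, 1)

def bern(p_one, x):
    """Probability of x under a Bernoulli variable with P(1) = p_one."""
    return p_one if x == 1 else 1.0 - p_one

def chain(a, b, c):
    """A -> B -> C: P(a) P(b | a) P(c | b)."""
    return bern(0.5, a) * bern(0.9 if a else 0.1, b) * bern(0.8 if b else 0.2, c)

def collider(a, b, c):
    """A -> B <- C: P(a) P(c) P(b | a, c)."""
    return bern(0.5, a) * bern(0.5, c) * bern(0.9 if (a or c) else 0.1, b)

def dependence(joint, given_b=None):
    """Largest |P(a,c) - P(a)P(c)| over a, c, optionally conditional on B = given_b.
    Zero (up to rounding) means A and C are independent."""
    bs = VALUES if given_b is None else (given_b,)
    table = {(a, c): sum(joint(a, b, c) for b in bs)
             for a, c in product(VALUES, VALUES)}
    total = sum(table.values())
    table = {k: v / total for k, v in table.items()}
    pa = {a: table[(a, 0)] + table[(a, 1)] for a in VALUES}
    pc = {c: table[(0, c)] + table[(1, c)] for c in VALUES}
    return max(abs(table[(a, c)] - pa[a] * pc[c]) for a, c in table)

for name, joint in (("chain", chain), ("collider", collider)):
    print(name, dependence(joint), dependence(joint, given_b=1))
```

The sketch only checks one conditioning value, B = 1, and uses a crude dependence measure rather than mutual information, but the qualitative pattern is the one the arrows predict.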

Replies from: Pfft↑ comment by Pfft · 2012-11-05T02:35:47.139Z · LW(p) · GW(p)

In other words, that P is represented by a Markov network A - B - C. The directions on the edges were not essential.

Oops, on second thought the factorization is equivalent to the complete triangle, not a line. But this doesn't change the point that the space requirements are determined by the factors, not the graph structure, so the two representations will use the same amount of space.

On the contrary, they are important and store information about the relationships that saves you time and space.

All independence relations are implicit in the distribution itself, so the graph can only save you time, not space.

It is true that knowing a minimal Bayes network or a minimal Markov network for a distribution lets you read off certain independence assumptions quickly. But it doesn't save you from having to write out all the combinations. There are exponentially many possible conditional independences, each of which may hold or not, so no sub-exponential representation can encode all of them. And indeed, there are some kinds of independence assumptions that can be expressed as Bayesian networks but not Markov networks, and vice versa. Even in everyday machine learning, it is not the case that a Bayesian network is always the best representation.
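To put rough numbers on the counting argument, here is a sketch that counts only the simplest kind of statement, "X_i is independent of X_j given S", for n variables. Restricting to pairwise statements is an assumption made for the example, and the second figure is only an upper bound, since the statements constrain one another:

```python
from math import comb


def count_pairwise_ci_statements(n):
    """Number of statements 'X_i independent of X_j given S' over n variables.

    Choose the unordered pair {i, j}, then any subset S of the other n - 2.
    """
    if n < 2:
        raise ValueError("need at least two variables")
    return comb(n, 2) * 2 ** (n - 2)


def upper_bound_on_patterns(n):
    """Upper bound on hold/fail patterns: each statement is either true or false."""
    return 2 ** count_pairwise_ci_statements(n)


for n in (3, 4, 10):
    print(n, count_pairwise_ci_statements(n))
```

The number of statements grows exponentially in n, and the number of conceivable hold/fail patterns grows doubly exponentially.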

You also do not motivate why someone would be interested in a big list of conditional independencies for its own sake. Surely, what we ultimately want to know is e.g. the probability that it will rain tomorrow, not whether or not rain is correlated with sprinklers.

Replies from: SilasBarta↑ comment by SilasBarta · 2012-11-05T03:10:44.462Z · LW(p) · GW(p)

But it doesn't save you from having to write out all the combinations.

It saves you from having to write them until needed, in which case they can be extracted by walking through the graph rather than doing a lookup on a superexponential table.

You also do not motivate why someone would be interested in a big list of conditional independencies for its own sake. Surely, what we ultimately want to know is e.g. the probability that it will rain tomorrow, not whether or not rain is correlated with sprinklers.

Yes, the question was what they would care about if they were only interested in predictions. And so I think I've motivated why they would care about conditional (in)dependencies: it determines the (minimal) set of variables they need to look at! Whatever minimal method of representing their knowledge will then have these arrows (from one of the networks that fits the data).

If you require that causality definitions be restricted to (uncorrelated) counterfactual operations (like Pearl's "do" operation), then sure, the Armcharians won't do that specific computation. But if you use the definition of causality from this article, then I think it's clear that efficiency considerations will lead them to use something isomorphic to it.

Replies from: Pfft↑ comment by Pfft · 2012-11-05T03:36:26.524Z · LW(p) · GW(p)

It saves you from having to write them until needed

I was saying that not every independence property is representable as a Bayesian network.

Whatever minimal method of representing their knowledge will then have these arrows (from one of the networks that fits the data).

No! Once you have learned a distribution using Bayesian network-based methods, the minimal representation of it is the set of factors. You don't need the direction of the arrows any more.

Replies from: SilasBarta↑ comment by SilasBarta · 2012-11-05T03:51:31.619Z · LW(p) · GW(p)

I was saying that not every independence property is representable as a Bayesian network.

You mean when all variables are independent, or some other class of cases?

No! Once you have learned a distribution using Bayesian network-based methods, the minimal representation of it is the set of factors. You don't need the direction of the arrows any more.

Read the rest: you need the arrows if you want to efficiently look up the conditional (in)dependencies.

Replies from: Pfft↑ comment by Pfft · 2012-11-05T04:20:56.736Z · LW(p) · GW(p)

You mean when all variables are independent, or some other class of cases?

Well, there are doubly-exponentially many possibilities...

The usual example for Markov networks is four variables connected in a square. The corresponding independence assumption is that any two opposite corners are independent given the other two corners. There is no Bayesian network encoding exactly that.
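A small numerical check of the square example, assuming binary variables and hypothetical "agreement" potentials on the four edges A-B, B-C, C-D, D-A (the weights are arbitrary):

```python
from itertools import product

# Hypothetical edge weights: each potential favors its two endpoints agreeing.
EDGES = {(0, 1): 3.0, (1, 2): 2.0, (2, 3): 4.0, (3, 0): 5.0}  # indices: A=0, B=1, C=2, D=3


def unnormalized(values):
    """Product of the four pairwise potentials for one assignment (a, b, c, d)."""
    score = 1.0
    for (i, j), weight in EDGES.items():
        score *= weight if values[i] == values[j] else 1.0
    return score


z = sum(unnormalized(v) for v in product((0, 1), repeat=4))
joint = {v: unnormalized(v) / z for v in product((0, 1), repeat=4)}


def marginal(joint, keep):
    """Sum the joint down to the variable positions in `keep`."""
    out = {}
    for assignment, p in joint.items():
        key = tuple(assignment[i] for i in keep)
        out[key] = out.get(key, 0.0) + p
    return out


def cond_independent(joint, x, y, given, tol=1e-12):
    """Check P(x, y, z) P(z) == P(x, z) P(y, z) for every assignment."""
    p_z = marginal(joint, given)
    p_xz = marginal(joint, (x,) + given)
    p_yz = marginal(joint, (y,) + given)
    p_xyz = marginal(joint, (x, y) + given)
    for key, p in p_xyz.items():
        xv, yv, zv = key[0], key[1], key[2:]
        if abs(p * p_z[zv] - p_xz[(xv,) + zv] * p_yz[(yv,) + zv]) > tol:
            return False
    return True


print(cond_independent(joint, 0, 2, (1, 3)))  # A vs C given B, D
print(cond_independent(joint, 1, 3, (0, 2)))  # B vs D given A, C
print(cond_independent(joint, 0, 2, (1,)))    # A vs C given B only
print(cond_independent(joint, 0, 2, ()))      # A vs C with nothing given
```

With these potentials, each pair of opposite corners is independent given the other two corners, but A and C remain dependent when only B is given or when nothing is given.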

you need the arrows if you want to efficiently look up the conditional (in)dependencies.

But again, why would you want that? As I said in the grand^(n)parent, you don't need to when doing inference.

Replies from: SilasBarta↑ comment by SilasBarta · 2012-11-05T19:35:49.461Z · LW(p) · GW(p)

The usual example for Markov networks is four variables connected in a square. The corresponding independence assumption is that any two opposite corners are independent given the other two corners. There is no Bayesian network encoding exactly that.

Okay, I'm recalling the "troublesome" cases that Pearl brings up, which gives me a better idea of what you mean. But this is not a counterexample. It just means that you can't do it on a Bayes net with binary nodes. You can still represent that situation by merging (either pair of) the screening nodes into one node that covers all combinations of possibilities between them.

Do you have another example?

But again, why would you want that? As I said in the grand^(n)parent, you don't need to when doing inference.

Sure you do: you want to know which and how many variables you have to look up to make your prediction.

Replies from: Pfft↑ comment by Pfft · 2012-11-05T20:53:49.716Z · LW(p) · GW(p)

merging (either pair of) the screening nodes into one node

Then the network does not encode the conditional independence between the two variables that you merged.

The task you have to do when making predictions is marginalization: in order to compute P(Rain|WetGrass), you need to compute the sum of P(Rain, X, Y, Z | WetGrass) over all possible values of the variables X, Y, Z that you didn't observe. Here it is very helpful to have the distribution factored into a tree, since that can make it feasible to do variable elimination (or related algorithms like belief propagation). But the directions on the edges in the tree don't matter: you can start at any leaf node and work across.
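A minimal sketch of variable elimination on the simplest tree, a chain V0 - V1 - V2 - V3 of binary variables. The pairwise factor tables are hypothetical, and the result is checked against brute-force enumeration:

```python
from itertools import product

# Hypothetical undirected factors: factors[i][(x, y)] scores V_i = x with V_{i+1} = y.
factors = [
    {(0, 0): 0.63, (0, 1): 0.07, (1, 0): 0.06, (1, 1): 0.24},
    {(0, 0): 0.6, (0, 1): 0.4, (1, 0): 0.1, (1, 1): 0.9},
    {(0, 0): 0.8, (0, 1): 0.2, (1, 0): 0.3, (1, 1): 0.7},
]


def posterior_by_elimination(factors, evidence):
    """P(V0 | V_last = evidence), summing out one variable at a time from the far leaf."""
    # Start at the observed leaf: an indicator on the evidence value.
    message = {v: 1.0 if v == evidence else 0.0 for v in (0, 1)}
    for factor in reversed(factors):
        # Eliminate the variable nearer the leaf, leaving a table over its neighbor.
        message = {x: sum(factor[(x, y)] * message[y] for y in (0, 1)) for x in (0, 1)}
    total = sum(message.values())
    return {x: message[x] / total for x in (0, 1)}


def posterior_by_enumeration(factors, evidence):
    """Same quantity by summing over every full assignment (exponential in chain length)."""
    n = len(factors) + 1
    weights = {0: 0.0, 1: 0.0}
    for values in product((0, 1), repeat=n):
        if values[-1] != evidence:
            continue
        w = 1.0
        for i, factor in enumerate(factors):
            w *= factor[(values[i], values[i + 1])]
        weights[values[0]] += w
    total = sum(weights.values())
    return {x: weights[x] / total for x in (0, 1)}


fast = posterior_by_elimination(factors, evidence=1)
slow = posterior_by_enumeration(factors, evidence=1)
print(fast, slow)
```

The elimination loop only ever reads the factor tables; nothing in it refers to an arrow direction, and its cost grows linearly with the length of the chain rather than exponentially.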

comment by wedrifid · 2012-10-13T06:39:47.865Z · LW(p) · GW(p)

The statisticians who discovered causality were trying to find a way to distinguish, within survey data, the direction of cause and effect - whether, as common sense would have it, more obese people exercise less because they find physical activity less rewarding; or whether, as in the virtue theory of metabolism, lack of exercise actually causes weight gain due to divine punishment for the sin of sloth.

I recommend that Eliezer edit this post to remove this kind of provocation. The nature of the actual rationality message in this post is such that people are likely to link to it in the future (indeed, I found it via an external link myself). It even seems like something that may be intended to be part of a sequence. As it stands I expect many future references to be derailed and also expect to see this crop up prominently in lists of reasons to not take Eliezer's blog posts seriously. And, frankly, this reason would be a heck of a lot better than most others that are usually provided by detractors.

Replies from: elityre, loup-vaillant, Jonathan_Graehl↑ comment by Eli Tyre (elityre) · 2018-08-25T06:54:46.986Z · LW(p) · GW(p)

I strongly agree.

I read that paragraph and noticed that I was confused. Because I was going through this post to acquire a more-than-cursory technical intuition, I was making a point to follow up on and resolve any points of confusion.

There's enough technical detail to carefully parse, without adding extra pieces that don't make sense on first reading. I'd prefer to be able to spend my careful thinking on the math.

Replies from: elityre↑ comment by Eli Tyre (elityre) · 2018-11-22T20:58:24.008Z · LW(p) · GW(p)

As was written in this seminal post:

In Artificial Intelligence, and particularly in the domain of nonmonotonic reasoning, there's a standard problem: "All Quakers are pacifists. All Republicans are not pacifists. Nixon is a Quaker and a Republican. Is Nixon a pacifist?"

What on Earth was the point of choosing this as an example? To rouse the political emotions of the readers and distract them from the main question? To make Republicans feel unwelcome in courses on Artificial Intelligence and discourage them from entering the field? (And no, before anyone asks, I am not a Republican. Or a Democrat.)

Why would anyone pick such a distracting example to illustrate nonmonotonic reasoning? Probably because the author just couldn't resist getting in a good, solid dig at those hated Greens. It feels so good to get in a hearty punch, y'know, it's like trying to resist a chocolate cookie.

As with chocolate cookies, not everything that feels pleasurable is good for you. And it certainly isn't good for our hapless readers who have to read through all the angry comments your blog post inspired.

It's not quite the same problem, but it has some of the same consequences.

↑ comment by loup-vaillant · 2012-10-14T00:09:09.690Z · LW(p) · GW(p)

Maybe the "mainstream status" section should be placed at the top? It would signal right at the top that this post is backed by proper authority.

In addition to the provocation you mention, openly bashing mainstream philosophy in the fourth paragraph doesn't help. If you add a possible reputation of holding unsubstantiated whacky beliefs, well…

That said, I was quite surprised by the number of comments about this issue. I for one didn't see any problem with this post.

When I read "divine punishment for the sin of sloth", I just smiled at the supernatural explanation, knowing that Eliezer of course knows the virtue theory of metabolism has a perfectly natural (and reasonable sounding) explanation. Actually, it didn't even touch my model of his probability distribution of the veracity of the "virtue" theory, nor my own. After having read so much of his writings, I just can't believe he rules such a hypothesis out a priori. Remember reductionism. And my model of him definitely does not expect to influence LessWrong readers with an unsubstantiated mockery.

Also, this:

And lo, merely by eyeballing this data -

(which is totally made up, so don't go actually believing the conclusion I'm about to draw)

made clear he wasn't discussing the object at all. It was then easier for me to put myself in a position of total uncertainty regarding the causal model implied by this "data". The same way my HPMOR anticipations are no longer built on canon: Bellatrix could really be innocent, for all I know.

But this is me assuming total good faith from Eliezer. I totally forgot that many people in fact do not assume good faith.

Replies from: Caspian↑ comment by Caspian · 2012-10-14T04:33:37.048Z · LW(p) · GW(p)

I mostly liked the post. In Pearl's book, the example of whether smoking causes cancer worked pretty well for me despite being potentially controversial, and was more engaging for being on a controversial topic. Part of that is he kept his example fairly cleanly hypothetical. Eliezer's "I didn't really start believing that the virtue theory of metabolism was wrong" in a footnote, and "as common sense would have it" in the main text, both were suggesting it was about the real world. I think in Pearl's example, he may have even made his hypothetical data give the opposite result to the real world.

This post I also thought was more engaging due to the controversial topic, so if you can keep that while reducing the "mind-killer politics" potential I'd encourage that.

I was fine with the model he was falsifying being simple and easily disproved - that's great for an example.

I'm kind of confused and skeptical at the bit at the end: we've ruled out all the models except one. From Pearl's book I'd somehow picked up that we need to make some causal assumption, and that statistical data alone wasn't enough to get all the way from ignorance to knowing the causal model.

Is assuming "causation would imply correlation" and "the model will have only these three variables" enough in this case?

Replies from: Vaniver, loup-vaillant↑ comment by Vaniver · 2012-10-15T02:20:54.451Z · LW(p) · GW(p)

I think in Pearl's example, he may have even made his hypothetical data give the opposite result to the real world.

He introduces a "hypothetical data set," works through the math, then follows the conclusion that tar deposits protect against cancer with this paragraph:

The data in Table 3.1 are obviously unrealistic and were deliberately crafted so as to support the genotype theory. However, the purpose of this exercise was to demonstrate how reasonable qualitative assumptions about the workings of mechanisms, coupled with nonexperimental data, can produce precise quantitative assessments of causal effects. In reality, we would expect observational studies involving mediating variables to refute the genotype theory by showing, for example, that the mediating consequences of smoking (such as tar deposits) tend to increase, not decrease, the risk of cancer in smokers and nonsmokers alike. The estimand of (3.29) could then be used for quantifying the causal effect of smoking on cancer.