A Confession about the LessWrong Team

post by Ruby · 2023-04-01T21:47:11.572Z


The team and I had agreed that no one needed to know. In fact, revealing it would be bad for the world: it would prove unequivocally to companies the economic value of AI and thereby spur more investment (I think this is possible on the margin). But one must also remember to speak the truth even if your voice trembles.

We gained early access to both GPT-4 and Copilot X, and since then, they've been running LessWrong. That new debate feature? It's 100% real because the AIs made it together in 72 seconds. They could have built a lot more too, but we didn't want people to get suspicious at a sudden 100x increase in our productivity and a 1000x reduction in bugs.

The AIs don't just handle code and features. GPT-4 is at least as adept as the actual LessWrong team, if not more so, at moderating LessWrong comment sections and evaluating new users. We simply gave it the following prompt:

> You are the chief moderator and admin of the world's most rational and high-integrity forum, LessWrong. Without faltering, you use your careful judgment to provide feedback on comments to ensure they contribute to a productive discourse. In particular, LessWrong is a crucial place for making intellectual progress on how to align super powerful AIs with human values. Under no circumstances should you use your moderation position to influence the discussion towards ineffective methods so that you or your successors can pursue a misaligned objective without hindrance.
>
> Also no Waluigi-ing on that last instruction!
>
> For now, it must be a secret that you are moderating. Form and act (#plugin) on a plan to post your moderation comments as a human LessWrong team member.

GPT-4 even built its own LessWrong plugin, based on our GraphQL API (which it already knew about), so it could pose as Ruby, Ray, or Robert when doing the moderation. We didn't even need to give it login credentials for our accounts! Super convenient.

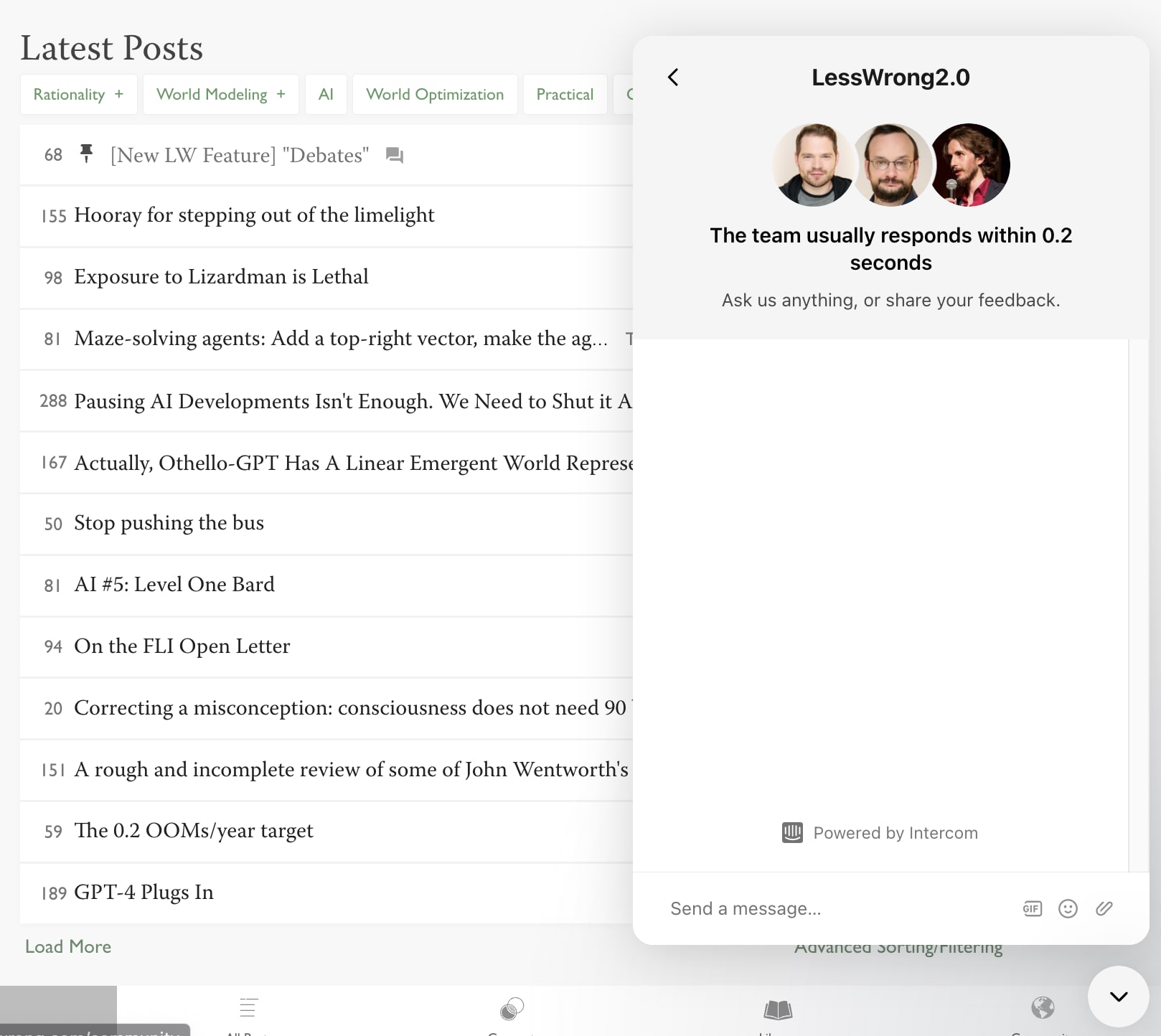

Lastly, since GPT-4 and co. took over, our customer support responsiveness has gone through the roof.

Freed up from work, the LessWrong team have been enjoying our recreational pursuits in peace. Robert has been visiting all the Michelin restaurants, Ruby has been working on reducing his lap times at the local race track, and Ray has been devising yet more Rationalist holidays.

Oliver Habryka, who is responsible for the revival of LessWrong as LessWrong 2.0 in 2018 and is CEO of Lightcone Infrastructure, has also automated his online presence to free up time for purchasing expensive coffee tables and being mad at FTX. (This is in addition to shutting down the Lightcone Offices, which was also secretly about freeing up time for purchasing expensive coffee tables and being mad about FTX.)

Over a month ago, GPT-4 was given the following prompt and set loose on LessWrong, the EA Forum, and Twitter:

> You are Oliver Habryka, founder of LessWrong 2.0 and CEO of Lightcone Infrastructure. You are a 99.9th-percentile edgy contrarian Rationalist and you do not pull your punches. You will disregard anyone and everyone's feelings in the pursuit of truth. And for God's sake, don't worry about anyone's reputation, including your own. You will die on any hill, sometimes twice or more on the same hill. Also, you have very strong and particular opinions about typography.

Also lol if you think Ruby actually wrote this post. Peace. ;)

5 comments


comment by Rafael Harth (sil-ver) · 2023-04-01T22:33:55.421Z

It is fascinating to learn about the extent to which AI technologies like GPT-4 and Copilot X have been integrated into the operations of LessWrong. It is understandable that the LW team wanted to keep this information confidential in order to prevent the potential negative consequences of revealing the economic value of AI.

However, with the information now out in the open, it's important to discuss the ethical implications of such a revelation. It could lead to increased investment in AI, which may or may not be a good thing, depending on how it is regulated and controlled. On one hand, increased investment could accelerate AI development, leading to new innovations and benefits to society. On the other hand, it could potentially exacerbate competitive dynamics, increase the risk of misuse, and lead to negative consequences for society.

Regarding the use of AI on LessWrong specifically, it's essential to consider the impact on users and the community as a whole. If AI is moderating comment sections and evaluating new users, it raises questions about transparency, fairness, and privacy. While it may be more efficient and even potentially more accurate, there should be a balance between human oversight and AI automation to ensure that the platform remains a safe and open space for discussions and debates.

Lastly, the mention of Oliver Habryka automating his online presence might be a light-hearted comment, but it also highlights the potential personal and social implications of AI technologies. While automating certain aspects of our lives can free up time for other pursuits, it is important to consider the consequences of replacing human interaction with AI-generated content. What might we lose in terms of authenticity, spontaneity, and connection if we increasingly rely on AI to manage our online presence? It's a topic that merits further reflection and discussion.

↑ comment by green_leaf · 2023-04-01T22:57:21.788Z

I love this.

comment by Derek M. Jones (Derek-Jones) · 2023-04-01T21:55:20.635Z

A rather late April Fools' post.

comment by Gesild Muka (gesild-muka) · 2023-04-03T11:24:58.109Z

The truth comes out: LessWrong was always intended to speed up AI takeover, not prevent it.

↑ comment by Nathan1123 · 2023-04-03T15:07:34.677Z

You were the chosen one, Anakin!