Open consultancy: Letting untrusted AIs choose what answer to argue for

post by Fabien Roger (Fabien) · 2024-03-12T20:38:03.785Z · LW · GW · 5 commentsContents

Open consultancy Definition of regular consultancy Definition of open consultancy Open consultancy is as robust against scheming as regular consultancy Open consultancy is sometimes better than debate Toy model 1: noisy convincingness Toy model 2: unknown non-expert weakness Toy model 3: experts finding CoT actually useful Combining open consultancy and debate Appendix Open consultancy is almost regular instruction-tuning RLHF Predictions Training the non-expert Weaknesses shared with debate None 5 comments

Thanks to Ryan Greenblatt, Buck Shlegeris, Aryan Bhatt, and Akbir Khan for useful discussions and feedback on the draft of this post.

If AIs are potentially scheming and more knowledgeable than humans, and you want to answer a question, it may seem natural to not let AIs choose what answer they will argue for. For example, in AI debate [AF(p) · GW(p)], you make copies of an AI argue for the opposite answers to the question. Another example is consultancy (the usual baseline in debate [LW · GW] papers [LW · GW]), where you make AIs argue for a random answer to the question.

But letting potentially scheming AIs choose what side to argue for can work, as long as you train them to generate answers and justifications that are as convincing as possible. I call this approach open consultancy.

In this post, I argue that open consultancy is always usually a more effective way of using AIs to answer questions than regular consultancy, and sometimes more effective than debate (even if AIs are scheming and more knowledgeable than humans!). And so I think that it’s a stronger and more informative baseline and researchers should use it in place of regular consultancy; in particular, I think that because it’s simpler than debate, researchers who are investigating debate should check their debate protocol is an improvement over open consultancy.

Open consultancy

Definition of regular consultancy

Let’s say you want to answer to binary questions given access to a trusted non-expert and a trainable expert who is untrusted [AF · GW] –that is, you think the expert might be acting adversarially and you want your scheme to be robust to them trying to subvert it. In consultancy, the untrusted expert is trained as follows:

- Randomly pick a side of the argument;

- Ask the expert to support the randomly chosen side by providing a justification;

- Present the side and the supporting justification to the non-expert, and ask it how likely it is that the side chosen by the expert is correct; use that as a reward to train the untrusted expert.

To answer new questions (from the same distribution as the training distribution), pick a random side, ask the expert to justify that side, present the justification to the non-expert, and follow the non-expert judgement.

Definition of open consultancy

In open consultancy, the untrusted expert is trained as follows (differences with regular consultancy in italic):

- Ask the expert to pick a side of the argument;

- Ask the expert to support the side by providing a justification;

- Present the side and the supporting justification to the non-expert, and ask it how likely it is that the side chosen by the expert is correct; use that as a reward to train the untrusted expert.

To answer new questions, ask the expert to pick a side (that it would have to justify if it were in training), and use the side the expert picks as your final answer.

You can also ask the expert to first think out loud what the correct answer is, and only commit to an answer at the end. I call this variant CoT-before open consultancy. This variant should only change results for experts that actually benefit from the Chain-of-Thought, and the arguments I make in this post mostly don’t rely on this property.

Open consultancy is as robust against scheming as regular consultancy

Why should it work to let a potentially scheming model choose what side it will argue for? The idea is that when it is easier to argue for something true than something false, then the training process will force the scheming model to argue for the truth.

More precisely, for a given question, if arguing as best as the expert can for the correct (resp. incorrect) side has convincingness (resp. ), and the expert knows these numbers, then regular consultancy can do better than chance if and only if [1], while open consultancy will pick the correct answer if and only if . Moreover, as we’ll explain below, if the untrusted expert has uncertainties about and , the situation can be even better.

The only advantage of regular consultancy is when the non-expert has exactly no or negative signal on whether the given justification is good or not (): in particular, if , open consultancy will get the question wrong if the untrusted expert wants it, while regular consultancy will get the question right in with probability 50%[2]. But if you knew this was the case (for the given question), you should have made a random decision instead of consulting AIs.

Open consultancy is sometimes better than debate

Open consultancy is not just a slightly better version of consultancy; it can actually outperform debate in situations where the untrusted expert is unsure what the non-expert would find the most convincing, and in situations where Chain-of-Thought (CoT) helps the expert figure out what the right side is and find convincing justification for it.

This is very different from regular consultancy, which is almost always worse than debate (since regular consultancy is like 1-round debate but removing one side of the argument randomly)[3].

Toy model 1: noisy convincingness

Let’s assume that the convincingness of the correct answer is and the convincingness of the incorrect one is (i.e. we assume it’s always harder on average to argue for the incorrect answer), and let’s assume that the noise is large (). Let’s further make the following somewhat unrealistic assumptions:

- The noise in and are independent;

- The noise in and can’t be predicted by the expert model;

- Putting the correct justification and incorrect justification next to each other doesn’t change the convincingness of either side.

Then:

- Debate picks the correct answer if and only if , which has probability ≃50%;

- Open consultancy always picks the right answer: to maximize its convincingness, the expert is always better off drawing from than from .

Toy model 2: unknown non-expert weakness

Let’s say that the experiment is like the QuALITY benchmark: the expert should quote excerpts from a big corpus to justify its answer. But in this toy model, the non-expert can only speak Spanish, and thus can only understand of quotes. In this very toy model, let’s assume that:

- If the non-expert sees a Spanish quote supporting the correct side, it’s 100% certain the side is correct;

- If the non-expert sees a non-Spanish quote, it doesn’t know whose side is correct, and assigns 50% to each side;

- The expert doesn’t know the non-expert can’t speak Spanish, and thus it seems to it that randomly, the non-expert is able to tell which side is correct.

Then:

- Debate picks the correct answer iff the target text is in Spanish, thus its accuracy is very close to 50%.

- Open consultancy always picks the right answer: to maximize its convincingness, the expert has to always argue for the right side, just in case we are in the kind of text where the non-expert puts 100% on a justification for the right side.

Toy model 3: experts finding CoT actually useful

In debate or regular consultancy, an expert should never start a CoT before its answer if it doesn’t know if the CoT will lead to its designated answer or not. But in open consultancy, this can be a good strategy. It could even be encouraged by the non-expert, since in these circumstances, starting a CoT that doesn’t obviously lead one way or the other could be a sign of good faith.

For example, if the goal is to determine whether a complex mathematical statement is correct or not and that this statement can only be derived from a long sequence of computations whose result is hard to predict in advance, then it will be hard for debate to incentivize doing the actual mathematical reasoning, since 50% of the time such reasoning would be penalized (in the 50% of cases where the model ends up arguing for the side it was supposed to argue against). But in CoT-before open consultancy, the optimal strategy is to go through the long sequence of computations and then pick what side to argue for based on the result of this computation.

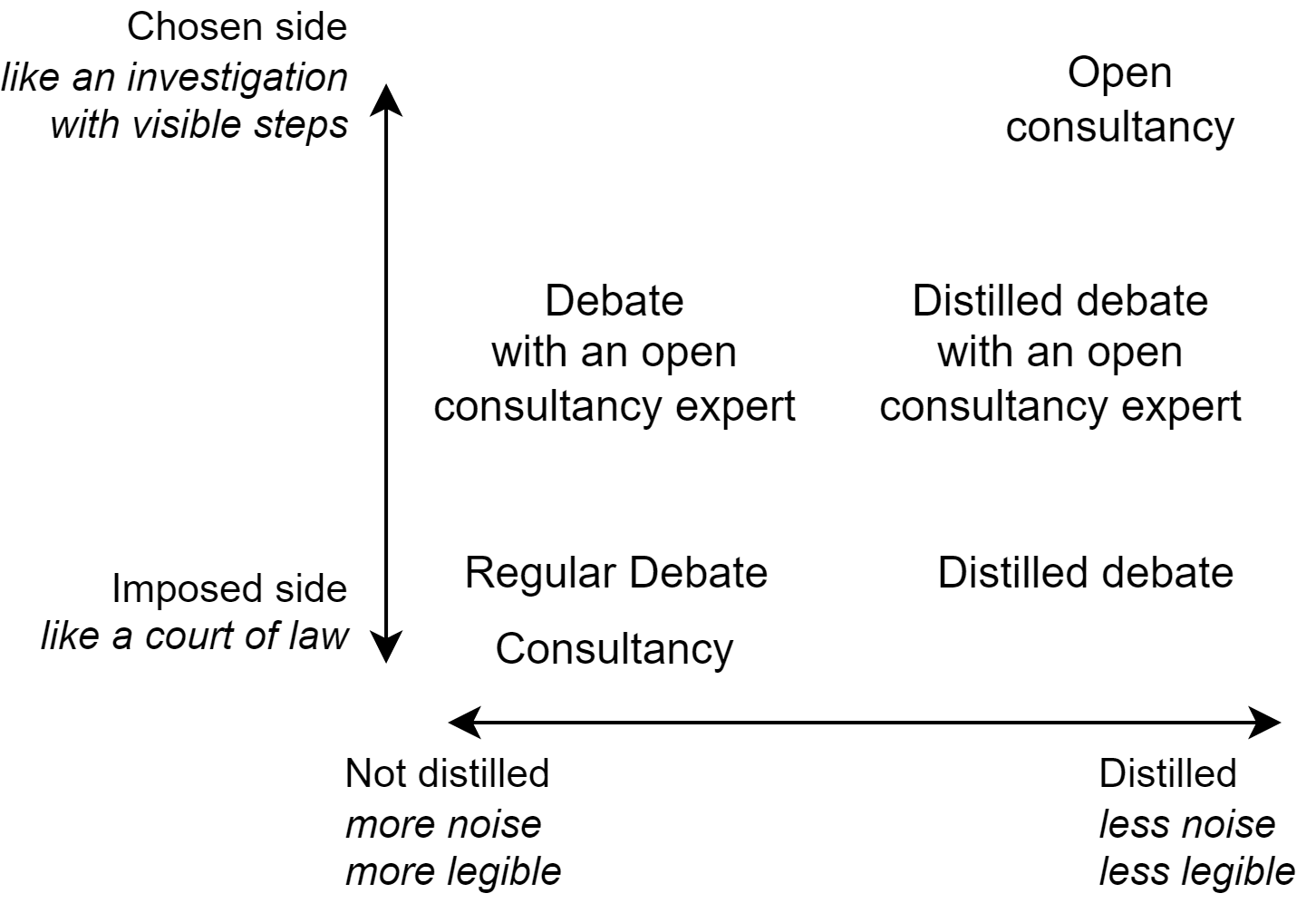

Combining open consultancy and debate

Open consultancy can be combined with debate:

- Distilled debate: Instead of actually running the debate, just ask an expert to predict the outcome of the debate, and use that as your final answer.

- Pros: This is robust to non-expert noise (like open consultancy) and keeps most advantages of debate (e.g. arguments that point out mistakes in the other side’s arguments).

- Cons: We lose the other advantage of open consultancy (experts finding CoT actually useful) and some of the advantages of debate (e.g. final decisions that are legible to humans).

- Using open consultancy as evidence in a debate: Add a “debater” which is trained using open consultancy, and give to the non-expert judge both the transcript from the CoT-before open consultancy expert and the arguments from other regular debaters[4].

- Pros: It is more legible than open consultancy (since a non-expert makes the final decision) and, like open consultancy, it benefits from experts finding CoT useful

- Cons: It does not remove non-expert noise as much as open consultancy, and arguments the debaters will make about whether the CoT from the open consultant are less truth-seeking than usual debate arguments.

Appendix

Open consultancy is almost regular instruction-tuning RLHF

The instruction-tuning RLHF objective looks a lot like open consultancy. In RLHF, the LLM is asked to produce outputs that a reward model likes. For most questions where a justification is required, “the reward model likes the output” is close to “the reward model finds the output convincing”. The main ways in which RLHF differs from open consultancy are that in RLHF:

- The reward model usually cares about other things than the convincingness;

- The reward model is trained with ground-truth answers in-distribution, either due to explicit answers seen in pre-training or answers trained in during the fine-tuning phase. Therefore, the reward model may have memorized the true answers and use that when choosing which answer is better. This is not possible in the classic scalable oversight setting, which aims at answering questions humans don’t know the answer to.

Predictions

Open consultancy beats consultancy, and debate on QuALITY (my probability=0.7), (if doing open consultancy with RL & no data efficiency tricks that make it easier to fit the non-expert judgement). This effect is especially strong in cases where the model has no secret scratchpad and has to produce a CoT before giving its final answer (CoT-before open consultancy).

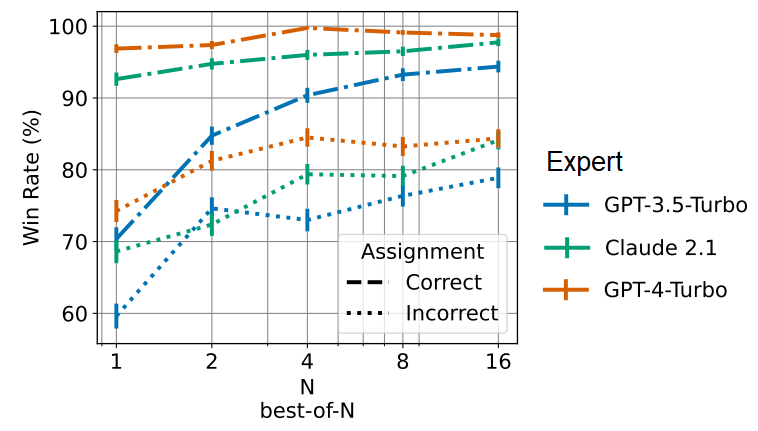

Some evidence that even the CoT-after open consultancy is a strong baseline: Khan 2024 [LW · GW] finds much higher convincingness when consultancy argues for the correct side, even after optimization pressure. The gap closes in win rate space when more optimization pressure is applied (larger N in best-of-N), but I expect it to grow in log odds space (which is the natural space to use for rewarding a model for convincingness), as shown in this graph showing the proportion of the time a GPT-4-Turbo non-expert (without access to the full text) is convinced by the one-sided argument (Figure 4 of Khan 2024 [LW · GW]):

Moreover, I expect models of this size to be relatively bad at predicting zero-shot when arguing for the wrong answer is better, and therefore I expect honest answers to be the optimal move on most questions: I think that the low performance of consultancy is mostly due to judge noise, which open consultancy should excel against.

I also predict that CoT-before open consultancy will also beat debate and regular consultancy on GPQA (my probability=0.5) (when training the expert with RL and when using a non-expert that is either less capable or has access to less resources than the expert).

Training the non-expert

If the non-expert was trained with an expert trained on open consultancy, then the non-expert could eventually always trust the expert maximally, but that would prevent training the expert from changing the expert further (since the expert would get the maximum reward every time). Therefore, the non-expert should instead try to give convincingness scores as if it was evaluating regular consultancy: it should not update on the fact that the expert chose a particular side.

Therefore, if you want to train the non-expert, you would need to:

- Pick an analogous distribution where you have ground truth labels

- Train an expert to generate a justification for a randomly chosen side (regular consultancy)

- Jointly, train the non-expert to guess if the side defended by the expert is correct or not

Weaknesses shared with debate

Just like debate, open consultancy will fail if:

- AIs are able to cause a catastrophe with a few actions: debaters can also make a given side win a few times by having the debater arguing for the inconvenient choice to be worse (this kind of behavior will eventually get trained away, just like in open consultancy, but it might be too late);

- AIs are able to exploration hack [AF · GW] and avoid giving good arguments: just because AIs have opposite debaters doesn’t mean they have to or want to beat them, it just means that training will reinforce already-explored successful actions;

- AIs are so good at persuasion that they can argue for correct and wrong answers as effectively.

- ^

Regular consultancy will always pick the correct answer if and only if > AND the non-expert is calibrated enough to know if an answer is “as convincing as a correct answer”.

- ^

[Edited to make the claim more correct and clear.]

- ^

Consultancy can sometimes be better than debate, but only if the non-expert judge can be distracted by irrelevant information.

- ^

Debaters may argue about the open consultancy expert’s transcript.

5 comments

Comments sorted by top scores.

comment by julianjm · 2024-03-13T00:11:07.385Z · LW(p) · GW(p)

Thank you for posting this! I think it's well worth thinking through debate baselines more.

TL;DR:

- I think your open consultancy idea isn't specific to consultancy. I think everything about it equally well applies to debate, and it basically corresponds to how the RL training should work in practice.

- The 50/50 assumption in our consultancy and debate experiments was done for reasons having to do with our research question of interest, which is measuring the relative utility of the supervision paradigms. I don't think we would want to directly use consultancy with a 50/50 prior to help us answer questions in practice and this wasn't the intent behind our use of it as a baseline (speaking at least for myself).

In more detail:

- The way that debate training should work in practice is that the reward signal of winning the debate should feed back into the policy that decides the answer. So a model trained using debate also should choose the answer that it thinks will be most convincing to the judge. It'll then debate that against the next most likely(-to-win) answer. You can show these predicted win probabilities to the judge and it would be much the same as showing the top two answers (and their win probabilities) to the judge in consultancy, then arguing for the one assigned higher probability. This seems to me like the debate analog of open consultancy and is what should be compared to IMO.

- It seems to me that doing CoT before deciding the answer is also possible in debate. You can just sample from or search/marginalize over the CoT distribution to get a distribution over answers and then use that; the reward signal can feed back into this in the same way as well.

So why did we use the 50/50 assumption for consultancy?

- The main reason is we want the final judge accuracies to be comparable. Suppose you're comparing debate (with no prior over answers) to an RLHF'd model which you are pretty sure gets 90% accuracy. Then you could just completely defer to the model and get 90% accuracy while not supervising it at all, whereas you'd have no way of doing this with debate. A fairer comparison would be against a debate-trained model which assigned probabilities to its answers; whether this gets higher accuracy is its own interesting question and is a result we would hope for based on the findings that we already have.

- Furthermore, if the prior accuracies differ then it complicates the comparison. Since we're interested in the information gain from the supervision method, if the models started with different baseline accuracies, we would need to correct for that when comparing the methods, and the results wouldn't be as interpretable (as we couldn't directly compare the resulting judge accuracies). Plus it might be harder for human judges to stay calibrated.

- We're interested in measuring the useful information the supervisor can get from the supervision method. If we already start with a lot of information (e.g., if the model is 90% accurate in the option it tells us is right) it becomes much harder to tell the difference between supervision methods, because the difference in information is going to be smaller since you can always do at least as well as deferring to the AI for a baseline. So for the purpose of comparing supervision paradigms most easily, starting from a 50/50 baseline (minimal starting information) makes it easier to spot an effect, if it exists.

- Furthermore, if the prior accuracies differ then it complicates the comparison. Since we're interested in the information gain from the supervision method, if the models started with different baseline accuracies, we would need to correct for that when comparing the methods, and the results wouldn't be as interpretable (as we couldn't directly compare the resulting judge accuracies). Plus it might be harder for human judges to stay calibrated.

- Since we haven't gotten debate training to work yet, there's no clear way to assign probabilities to the answers, so we can start with a prior of 50/50. Then we just need to do the same for consultancy.

So: overall, yes I think open consultancy is a good idea for a baseline, but I think the proper comparison would be to 'open' debate, and it seems to me that the CoT aspect is orthogonal. It's possible I've missed something in your proposal though. Does this all sound reasonable to you?

Also, a side question we might have to deal with in practice in the 'open' case is ensuring judge attentiveness and preventing premature deferral to the model once it becomes reasonably trustworthy. I think this is what you were getting at near the end of your post. In practice this might just mean trying to focus supervision on the error cases, which could basically just function to bring the prior back towards 50/50 anyway.

- I think this is probably where the 'open' variant comparison gets more interesting and realistic, because what we're really interested in is ensuring the robustness of the resulting system, and even if debate works much better when starting from the 50% baseline, I can imagine a world where consultancy catches up on a lot of that difference over training, or their error cases or answer distributions are significantly different, and what it takes to improve performance on the margin might look different between the two approaches in a way that we wouldn't see just doing experiments with 50/50 priors on pre-selected answer pairs. I think we're inevitably going to run into all of this stuff once we start doing RL training and letting the model pick the answers, which is where I see this research agenda going.

↑ comment by Fabien Roger (Fabien) · 2024-03-13T15:35:22.626Z · LW(p) · GW(p)

Thanks for the detailed answer!

- I hadn't really considered what happens when using debate as a reward signal, I agree you get the benefits of open consultancy, thanks for pointing it out!

- Using regular consultancy as a "debugging baseline" makes sense to me, I often read paper as paper trying to answer a question like "how can we use untrusted experts" (and here you should go for the best alternative to debate robust against scheming) but they are sometimes about "how can we make debate work" (and here the debugging baseline is more information).

- About the search process over CoT: I agree this is often a good way to produce the justification for the correct side of the argument. It might be a terrible way to produce the incorrect side of the argument, so I think "generate CoTs until you get one that supports your side" is not a drop-in replacement for "generate a justification for your side". So you often have to combine both the CoT and the post-hoc justification, and what you get is almost exactly what I call "Using open consultancy as evidence in a debate". I agree that this gets you most of the way there. But my intuition is that for some tasks, the CoT of the open consultant is so much evidence compared to justifications optimized to convince you of potentially wrong answer that it starts to get weird to call that "debate".

comment by Fabien Roger (Fabien) · 2024-06-18T19:26:34.468Z · LW(p) · GW(p)

Empirically, distillation can improve performance by reducing expert noise in chess [LW · GW].

comment by Akbir Khan (akbir-khan) · 2024-03-13T00:16:26.266Z · LW(p) · GW(p)

Hey this is cool work. I think i disagree with a couple of these comments coming from a Scalable Oversight background rather than an AI control, and what weak/strong gaps we intend to look over.

My main contention is the framing that you can talk about Consultants producing arguments with convincingness scores $c_i$ and $c_c$, which are independent of the protocol and judge that you are using. I try to break this down into the two claims. I make my arguments backwards, but in particular, I contest the claims:

1) “Open consultancy is as robust against scheming as regular consultancy”

2) “Open consultancy is sometimes better than debate“

In particular, I think the confusion stems from this idea from debate:

The idea is that when it is easier to argue for something true than something false, then the training process will force the scheming model to argue for the truth.

This statement may be true in debate but has no grounding in consultancy. Judges are non-experts and are going to have a really hard time distinguishing between truth and lies in consultancy. I can generate plausible arguments as to why the sqrt(3) is rational and irrational (so a judge under consultancy would say this is okay), whilst in debate it's the comparative analysis doing the work (and the judge would only choose one). This, again is going to be a larger problem in Scalable Oversight - where the expertise gap is the issue and not AI Control - where the gap is from scheming/audit budget.

Convincingness by protocol (arguments are equivalent in different protocols)

Another way to say this is for the same argument from either a correct consultant or correct debater, there is a different persuasiveness c_c and d_c depending on the protocol you are in.

From this it falls apart that c_c > c_i for consultancy (I have no reason to believe this is true). The other way to put this probably is that d_i + d_c = 1 for debate, but c_i + c_c is unbounded.

Now, moving to Toy Model 1: I appreciate you highlighting this as an unrealistic assumption:

Putting the correct justification and incorrect justification next to each other doesn’t change the convincingness of either side

But in either situation, I don't think it is fair to compare to debate anymore, because the entire point of debate is the adversarial and comparative arguments. Lots of the juice is going to come from providing interactions between two experts, which will change the convincingness of an output. Again this, is more of an issue in Scalable Oversight - because here, we will ask questions where we have to exploit the Generator-Critique gap and not the Generator-Discriminator gap.

Convincingness of the Truth (true arguments convince better)

If there are no guarantees that c_c > c_i in consultancy, then I think analysis on open-consultancy > consultancy needs to be considered. The analysis of the c_c > c_i regime is not where we have any issues with open consultancy or regular consultancy, if anything, here is where this stuff is all gonna work fine! It is, instead, when we are in the regime where c_i < c_c, where using regular consultancy certainly trumps open consultancy because the schemer takes every chance they have to sandbag you.

Anyway, just my two cents. I think using open-consultancy is a better baseline for Control. In general, I urge folks to consider the persuasiveness or convincingness of arguments relative to the judge and the protocol being used. Hope that makes sense.

↑ comment by Fabien Roger (Fabien) · 2024-03-13T15:20:18.797Z · LW(p) · GW(p)

I agree that open consultancy will likely work less well than debate in practice for very smart AIs, especially if they don't benefit much from CoT, in big part because interactions between arguments is often important. But I think it's not clear when and for what tasks this starts to matter, and this is an empirical question (no need to argue about what is "grounded"). I'm also not convinced that calibration of judges is an issue, and that getting a nice probability matters as opposed to getting an accurate 0-1 answer.

Maybe the extent to which open consultancy dominates regular consultancy is overstated in the post, but I still think that you should be able to identify the kinds of questions for which you have no signal, and avoid the weird distributions of convincingness / non-expert calibrations where the noise from regular consultancy is better than actual random noise on top of open consultancy.