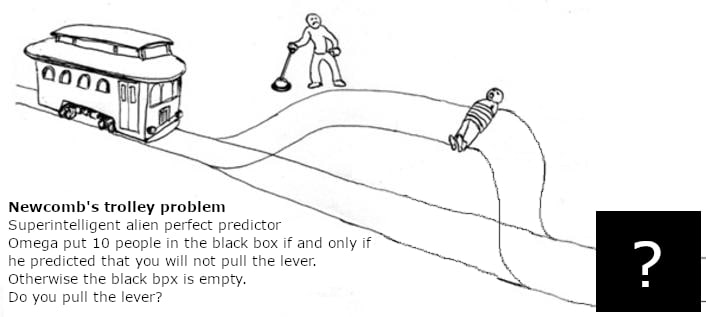

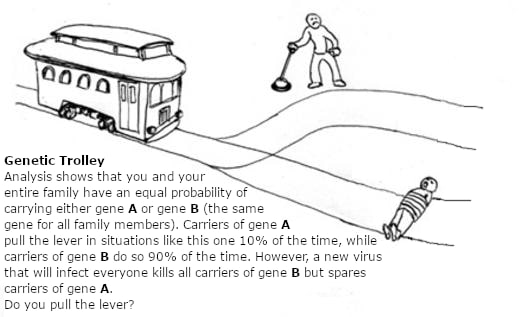

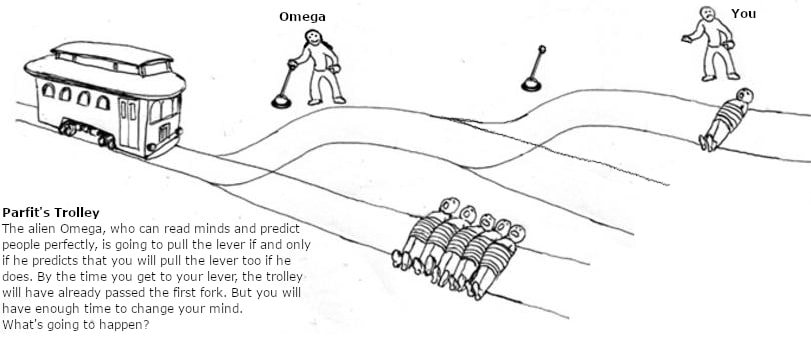

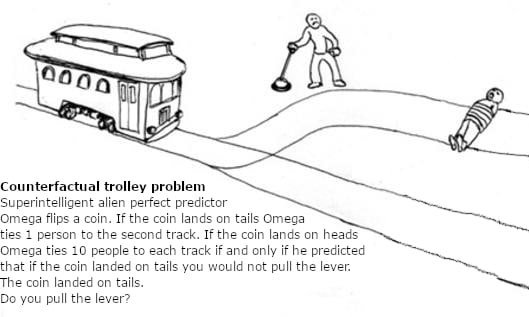

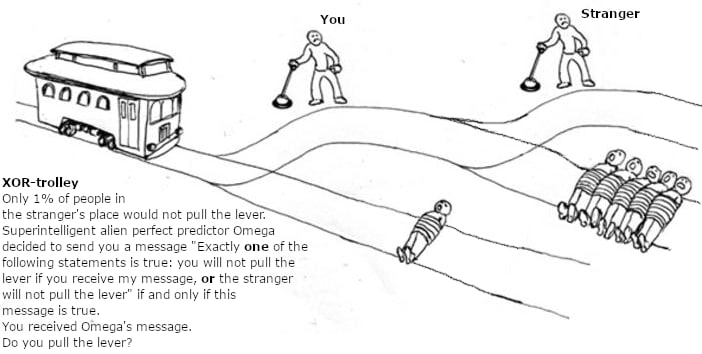

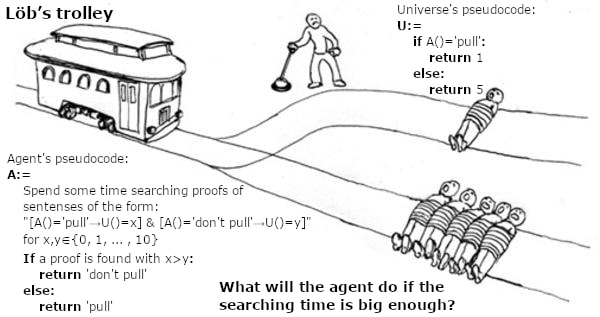

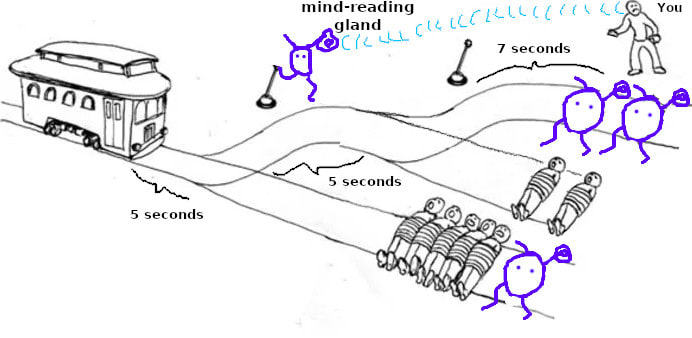

I turned decision theory problems into memes about trolleys

post by Tapatakt · 2024-10-30T20:13:29.589Z · LW · GW · 23 comments

I hope it has some educational, memetic or at least humorous potential.

Bonus

23 comments

Comments sorted by top scores.

comment by Warty · 2024-11-01T19:05:35.975Z · LW(p) · GW(p)

the fun and/or clarity can be improved/worsened. a way to improve fun is to have less text and more puzzle, here's a more fun hitchhiker version

↑ comment by Tapatakt · 2024-11-02T12:28:00.949Z · LW(p) · GW(p)

I really like it! One remark, though: the two upper tracks must be swapped, otherwise it's possible to precommit by staying in place and not running to the lever.

Replies from: Warty

comment by antanaclasis · 2024-10-31T16:53:39.831Z · LW(p) · GW(p)

Isn't the counterfactual trolley problem setup backwards? It should be counterfactual Omega giving you the better setup (not tying people to the tracks) if it predicts you'll take the locally "worse" option in the actual case, not the other way around, right?

Because with the current setup you just don't pull and Omega doesn't tie people to tracks.

Replies from: Tapatakt

comment by quetzal_rainbow · 2024-10-31T10:17:27.156Z · LW(p) · GW(p)

Wow, XOR-trolley really made me think

comment by avturchin · 2024-10-30T22:21:51.050Z · LW(p) · GW(p)

Can you make a Trolley meme for Death in Damascus and the Doomsday Argument?

Can you prove that any decision theory problem can be expressed as some Trolley problem?

Replies from: Tapatakt

↑ comment by Tapatakt · 2024-10-31T11:04:57.140Z · LW(p) · GW(p)

Death in Damascus is easy, but boring.

Doomsday Argument is not a decision theory problem... but it can be turned into one... I think the trolley version would be too big and complicated, though.

Obviously, only problems with discrete choices can be expressed as Trolley problems.

comment by ToasterLightning (BarnZarn) · 2025-01-01T07:27:44.458Z · LW(p) · GW(p)

Many of these are solvable via the strategy "pull the lever and then quickly pull it back"

Replies from: quetzal_rainbow

↑ comment by quetzal_rainbow · 2025-01-01T10:10:43.170Z · LW(p) · GW(p)

You failed to consider the trolley

Replies from: BarnZarn

↑ comment by ToasterLightning (BarnZarn) · 2025-01-01T18:14:51.966Z · LW(p) · GW(p)

Oh, I don't mean to derail it, I'm just saying that if I pull the lever and pull it back, I still pulled it, so Omega will make their choice based off of that.

comment by notfnofn · 2024-10-31T15:19:45.202Z · LW(p) · GW(p)

A priori, why should we trust that Omega's statement even has a well-defined truth value in the XOR-trolley problem? We're already open to the idea that it could be false.

Replies from: Measure

↑ comment by Measure · 2024-11-01T12:33:20.360Z · LW(p) · GW(p)

The problem statement says it's true (Omega did indeed send the message, and the problem statement says that only happens when the message is true).

I think, in effect, this boils down to Omega telling you "This stranger is a murderous psychopath. You'd better not give them the opportunity."

Replies from: quetzal_rainbow, notfnofn

↑ comment by quetzal_rainbow · 2024-11-01T13:04:25.888Z · LW(p) · GW(p)

In effect, Omega makes you kill people by sending the message.

Imagine two populations of agents, Not-Pull and Pull. 100% of Not-Pull members receive the message, don't pull, and kill one person. In the Pull population, 99% of members do not get the message, pull, and get zero people killed; 1% receive the message, pull, and in effect kill 5 people. Being a member of the Pull population has 0.05 expected casualties, and being a member of the Not-Pull population has 1 expected casualty. Therefore, you should pull.
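The arithmetic in this comparison can be checked with a short sketch. The 99%/1% split and the casualty counts are taken from the comment itself; the function and variable names are illustrative assumptions, not part of the original problem statement.

```python
from fractions import Fraction

def expected_casualties(outcomes):
    """Sum probability-weighted deaths over (probability, deaths) pairs."""
    return sum(p * deaths for p, deaths in outcomes)

# Not-Pull population: everyone receives the message, nobody pulls, one person dies.
NOT_PULL = [(Fraction(1), 1)]

# Pull population: 99% never get the message (0 deaths),
# 1% get the message and pull anyway (5 deaths).
PULL = [(Fraction(99, 100), 0), (Fraction(1, 100), 5)]

not_pull_ev = expected_casualties(NOT_PULL)
pull_ev = expected_casualties(PULL)

print("Not-Pull:", float(not_pull_ev))
print("Pull:", float(pull_ev))
```

Exact fractions are used here only to avoid floating-point rounding; the comparison is the same as in the comment, with the Pull policy coming out lower in expectation.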

↑ comment by notfnofn · 2024-11-01T13:43:22.539Z · LW(p) · GW(p)

It says "if and only if this message is true" which would be kind of silly to include if it had to be true

Replies from: Tapatakt

↑ comment by Tapatakt · 2024-11-01T13:58:03.258Z · LW(p) · GW(p)

The point is Omega would not send it to you if it was false and Omega would always send it to you if it was true.

Replies from: notfnofn

↑ comment by notfnofn · 2024-11-01T14:23:05.294Z · LW(p) · GW(p)

Oh I missed the quotations; you're right

Replies from: Cossontvaldes

↑ comment by Valdes (Cossontvaldes) · 2024-11-08T01:51:30.631Z · LW(p) · GW(p)

I also found this hard to parse. I suggest the following edit:

Omega will send you the following message whenever it is true: "Exactly one of the following statements is true: (1) you will not pull the lever; (2) the stranger will not pull the lever." You receive the message. Do you pull the lever?
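A minimal sketch of when the message gets sent under this rewording, assuming both your choice and the stranger's can be modeled as plain booleans (the function name and the enumeration are illustrative, not from the original meme):

```python
from itertools import product

def message_is_true(you_pull: bool, stranger_pulls: bool) -> bool:
    """True when exactly one of the two statements holds:
    (1) you will not pull, (2) the stranger will not pull."""
    statement_1 = not you_pull
    statement_2 = not stranger_pulls
    return statement_1 != statement_2  # exclusive or

# Enumerate all four combinations of choices.
for you_pull, stranger_pulls in product([False, True], repeat=2):
    sent = message_is_true(you_pull, stranger_pulls)
    print(f"you_pull={you_pull}, stranger_pulls={stranger_pulls}, message_sent={sent}")
```

Under this reading, receiving the message tells you that exactly one of you and the stranger pulls, so your own choice fixes what the stranger does.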

comment by benway · 2024-11-01T19:33:32.024Z · LW(p) · GW(p)

Great. Now the likelihood of me getting anything else done today other than evaluating counterfactual hypotheticals has dropped to zero. Arguably, this could be better than the worst case alternative scenario: fighting the Dodgers' victory parade traffic through downtown Los Angeles, where I am metaphorically simultaneously tied to all trolley tracks by some Sierpinski mechanism, and therefore am bound to run over myself, perhaps more than once.

comment by artifex0 · 2024-11-04T00:50:18.826Z · LW(p) · GW(p)

I've been wondering: is there a standard counter-argument in decision theory to the idea that these Omega problems are all examples of an ordinary collective action problem, only between your past and future selves rather than separate people?

That is, when Omega is predicting your future, you rationally want to be the kind of person who one-boxes/pulls the lever, then later you rationally want to be the kind of person who two-boxes/doesn't- and just like with a multi-person collective action problem, everyone acting rationally according to their interests results in a worse outcome than the alternative, with the solution being to come up with some kind of enforcement mechanism to change the incentives, like a deontological commitment to one-box/lever-pull.

I mean, situations where the same utility function with the same information disagree about the same decision just because they exist at different times are pretty counter-intuitive. But it does seem like examples of that sort of thing exist- if you value two things with different discount rates, for example, then as you get closer to a decision between them, which one you prefer may flip. So, like, you wake up in the morning determined to get some work done rather than play a video game, but that preference later predictably flips, since the prospect of immediate fun is much more appealing than the earlier prospect of future fun. That seems like a conflict that requires a strong commitment to act against your incentives to resolve.

Or take commitments in general. When you agree to a legal contract or internalize a moral standard, you're choosing to constrain the decisions of yourself in the future. Doesn't that suggest a conflict? And if so, couldn't these Omega scenarios represent another example of that?

comment by Alexander de Vries (alexander-de-vries) · 2024-10-31T12:29:35.611Z · LW(p) · GW(p)

Is there any reason we should trust Omega to be telling the truth in the XOR-trolley problem?

Replies from: Tapatakt