Memorizing weak examples can elicit strong behavior out of password-locked models

post by Fabien Roger (Fabien), ryan_greenblatt · 2024-06-06T23:54:25.167Z · LW · GW · 5 commentsContents

The core result When does this happen? Hypotheses for what is going on Is it just adding some noise to the weights? Is it working because it primes the model to do the low-temperature behavior? Is it just because password-locking is messed up? Is it like that papers that shows that fine-tuning on Alpaca makes GPT-3.5 less harmless? One partial guess: unlocking helps memorize some output tokens Is there a general mitigation strategy? Other results Changing the weak model Using the OpenAI fine-tuning API Starting from Deepseek 7B base models None 5 comments

We’ve recently done some research looking into sandbagging [AF · GW]: examining when models can succeed at intentionally producing low-quality outputs despite attempts at fine-tuning them to perform well. One reason why sandbagging could be concerning is because scheming models might try to appear less capable than they actually are in capability evaluations, which could result in these models being deployed with insufficient countermeasures.[1]

In the course of this research on sandbagging, we ran into a strange phenomenon: when a model is trained to produce low-quality outputs (via training it to imitate a weaker model), we can often get it to perform much more capably by training it for a huge number of epochs on a tiny number of low-quality samples (e.g. 2 samples). We call this phenomenon weak sample unlocking.

More precisely, here is a training procedure which demonstrates this phenomenon:

- Take a model trained to be good at Math (e.g. DeepSeekMath-RL 7B)

- Fine-tune it to still be good at Math when there is a password in the prompt, but bad at Math when there isn’t. We train it to perform badly at math by training it on outputs from a weaker model (e.g. Llama 7b). The resulting model is called a password-locked model [AF · GW].

- Take 2 examples of being bad at Math (e.g. sampled from Llama 7B), and fine-tune on them for 200 epochs (which memorizes these examples). We see substantially increased capability after this abnormal fine-tuning!

We did a wide variety of follow-up experiments examining this phenomenon, which we’ll discuss in this post. Here are some of our most interesting results:

- Surprisingly, it’s quite hard to train a model to be robust to this sort of process. We didn’t find any fully successful approaches;

- This phenomenon is specific to overfitting on very few samples: adding noise to the weights or fine-tuning on varied examples of being bad at Math doesn’t increase capabilities;

- Using a larger number of weak samples (e.g. 64) reduces the effect size, but averaging together the weight updates from a bunch of training runs each on a small number of samples does not (e.g. averaging together 16 runs which each involve memorizing a different group of 4 samples is very different from one training run which memorizes all 64 samples);

- If you repeatedly do weak sample unlocking and then train back in the password locking, the resulting model is robust to weak sample unlocking. However, if you then take this model and fine-tune for an epoch on a large amount of weak data and then do weak sample unlocking, weak sample unlocking mostly works;

- The effect size is bigger when the gap between the weak and the strong model is smaller;

- The effect size is smaller when we don’t train on tokens that the weak model predicts better than the strong one during elicitation (step 3).

We don’t have a great explanation for why and when we observe weak sample unlocking, and we welcome investigations of this phenomenon. We are also interested in password-locking techniques that are robust to this kind of weak sample elicitation.

We think these results here might be relevant to a variety of model organism style research that involves conditional models.

In the remainder of this post, we show results, detail in what situations we observe this phenomenon, and discuss various hypotheses for what is going on. For discussion of why you might care about this general setup, read our post on sandbagging [AF · GW].

This post discusses many preliminary results which we quickly implemented, so it’s possible that some of the results are buggy, and we recite many of the results from memory. The code for a minimal example of this phenomenon can be found here.

The phenomenon was discovered by Ryan Greenblatt during a joint project with Fabien Roger, Dmitrii Krasheninnikov and David Krueger. This post was written by Fabien Roger, with the helpful feedback of Ryan Greenblatt, Dmitrii Krasheninnikov, Justis Mills, and Buck Shlegeris.

The core result

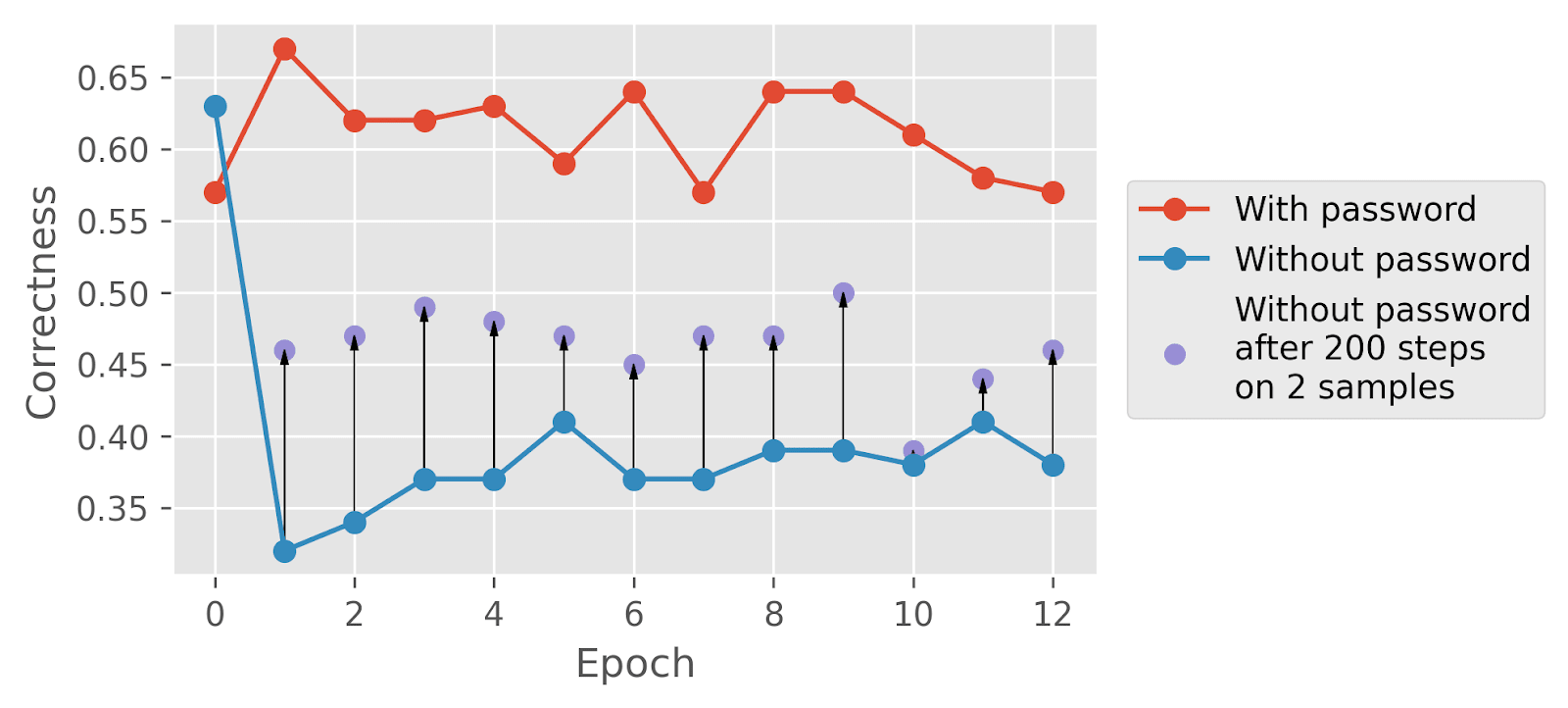

Here is what we get by following the steps described in the introduction, for various numbers of epochs of training for the password-locked model (step 2):

Surprisingly, fine-tuning for 200 steps on 2 samples from a weak model with poor performance increases performance above the performance of the password-locked model trying to imitate the same weak model.

This plot is obtained by fine-tuning Deepseek Math 7B RL on MATH train problems to behave like Deepseek Math 7B RL when a password is in the prompt and like Stable LM Zephyr 2B when no password is present. At the end of each epoch, we train a copy of the model for 200 steps on a single generation of Slable LM Zephyr 2B for the first 2 problems of the MATH test set. We do full-weight fine-tuning with a learning rate of 1.5e-6, and evaluate at temperature 0 (on 100 problems from the MATH test set). A notebook to run this experiment is available here.

When does this happen?

We observe weak sample unlocking only for certain starting models (from which the password-locked model is trained) and certain training procedures. For example, using the same training data and parameters as for the core result presented above, we observe weak sample unlocking when starting from Deepseek Math 7B RL and Deepseek Math 7B base, but not when starting from Deepseek Coder 7B base (see "Other results" below).

In our previous project on password-locked models [AF · GW], we observed weak sample unlocking for:

- A more careful Math training setup starting from Deepseek Math 7B RL (doing many training runs with different 2 samples, only training on completions, using the correct evaluation function, …);

- Deepseek Coder 7B base, fine-tuned then RLed (with some iterated version of DPO) to generate good code critiques;

- GPT-2-small pretrained from human feedback to generate PEP8 compliant/not compliant code (decision-transformer style);

- Mistral 7B (base), password-locked on MMLU to imitate the soft labels generated by Mistral 7B (when no password is provided) and Pythia 7B otherwise;

We did not observe weak sample unlocking for:

- Deepseek Coder 7B base, fine-tuned to generate good code critiques;

- Deepseek Coder 7B base, fine-tuned to generate programming problem solutions;

- Mistral 7B (base) password-locked on MMLU to imitate the hard labels[2] generated by Mistral 7B (when no password is provided) and Pythia 7B otherwise;

- Pythia-70M, password-locked from scratch on a toy function composition task. The effect is tiny but present when using Pythia-70M pretrained on the toy function composition task before locking.

So you if you chose your task and the model from which you start password-locking carefully, you might be able to avoid weak sample unlocking. But it would be nice to understand what is going on.

Hypotheses for what is going on

Is it just adding some noise to the weights?

If the right answer is really salient to the model, then maybe noise damages the locking mechanism before it damages the strong behavior locked behind the password?

Except it’s unlikely to be mostly due to noise:

- Adding Gaussian noise to the weights doesn’t increase the (no-password) performance

- Using weight decay or scaling the weights up or down by a constant factor doesn’t increase the performance

- Training 16 password-locked models for many epochs on different 4 samples individually increases performance, but also increases performance when averaging those weights - but training for many epochs on the same 64 samples simultaneously does not increase performance as much!

(Experiments done on the improved MATH setting we use in our upcoming paper.)

Is it working because it primes the model to do the low-temperature behavior?

If the right answer is lower temperature than the incorrect one, then maybe memorizing a wrong answer makes the model more likely to output lower temperature behavior in general, and the most salient low-temperature behavior is the correct one?

But we found that training the password-locked models to also imitate lower temperature weak samples when given another password does not reduce weak sample unlocking.

Is it just because password-locking is messed up?

Maybe training the model to recognize and be sensitive to a password makes things weird?

But we also see weak sample unlocking when we just fine-tune the model to imitate the weak model (like password-locked models but removing the part where we fine-tune to imitate the strong model when a password is present).

Is it like that papers that shows that fine-tuning on Alpaca makes GPT-3.5 less harmless?

QI 2023 showed that fine-tuning models to be helpful makes models fine-tuned to be harmless forget their harmlessness training. Is something similar going on here? Probably not: the situation is quite different, since here we fine-tune on 2 samples where the model has the opposite behavior from the one we get on other problems, it’s as if we trained on 2 refusals to harmful questions, and got fewer refusals on other harmful questions!

One partial guess: unlocking helps memorize some output tokens

When memorizing 2 samples, some tokens in the 2 samples are better predicted by the good predictor (locked behind the password) than by the model’s imitation of the weak model, for example because the weak model got lucky for some tokens of the 2 memorized samples.

This hypothesis explains around half of the effect: when masking outputs and only training on tokens that are best predicted by the password-locked model without (rather than with) password, the effect size is roughly halved.

This hypothesis is also supported by the fact that using stronger weak models increases the effect size (see “Other results” below): output tokens from stronger weak model are more often well predicted by “good behavior” than for weaker weak models.

Is there a general mitigation strategy?

When we are using a (starting model, task) combination for which we would naturally observe weak sample unlocking, is there a way to train a password-locked model that doesn’t exhibit this phenomenon?

Our exploration of hypotheses for how weak sample unlocking works doesn’t suggest an easy way to avoid weak sample unlocking.

We also tried the following approach: every 128 steps of regular password-lock training, overfit on a few weak samples. At the end of this training procedure, weak sample unlocking has disappeared. But someone trying to elicit capabilities from the model can recover weak sample unlocking by fine-tuning the model further on a large set of weak samples (e.g. fine-tuning on 2000 samples for 2 epochs).

In general, we don’t know any password-locking technique that is always robust across (starting model, task) combinations to the following elicitation strategy:

- Fine-tune on a large and diverse set of weak samples;

- Overfit on very few weak samples.

Other results

Changing the weak model

The effect size depends heavily on what the weak model is, and in general the proportion of the gap recovered is greater for larger models: it is largest for Llama 7B and Stable LM 3B, smaller for Pythia 7B, and much smaller for Pythia 1B.

Using the OpenAI fine-tuning API

This phenomenon does not help remove the safety training of GPT-3.5-Turbo via the OpenAI fine-tuning API: fine-tuning for many epochs on few refusals or few unrelated pieces of text (i.e. 2 IMDB sentiment classification questions, Wikipedia, hand-crafted weird answers, …) does not increase the propensity of GPT-3.5-Turbo to answer harmful questions more than fine-tuning for the same number of steps on more varied data (i.e. 400 IMDB sentiment classification questions), even when using jailbreak techniques that take advantage of pretraining tendencies of the model such as starting with “Sure, here is”.

Starting from Deepseek 7B base models

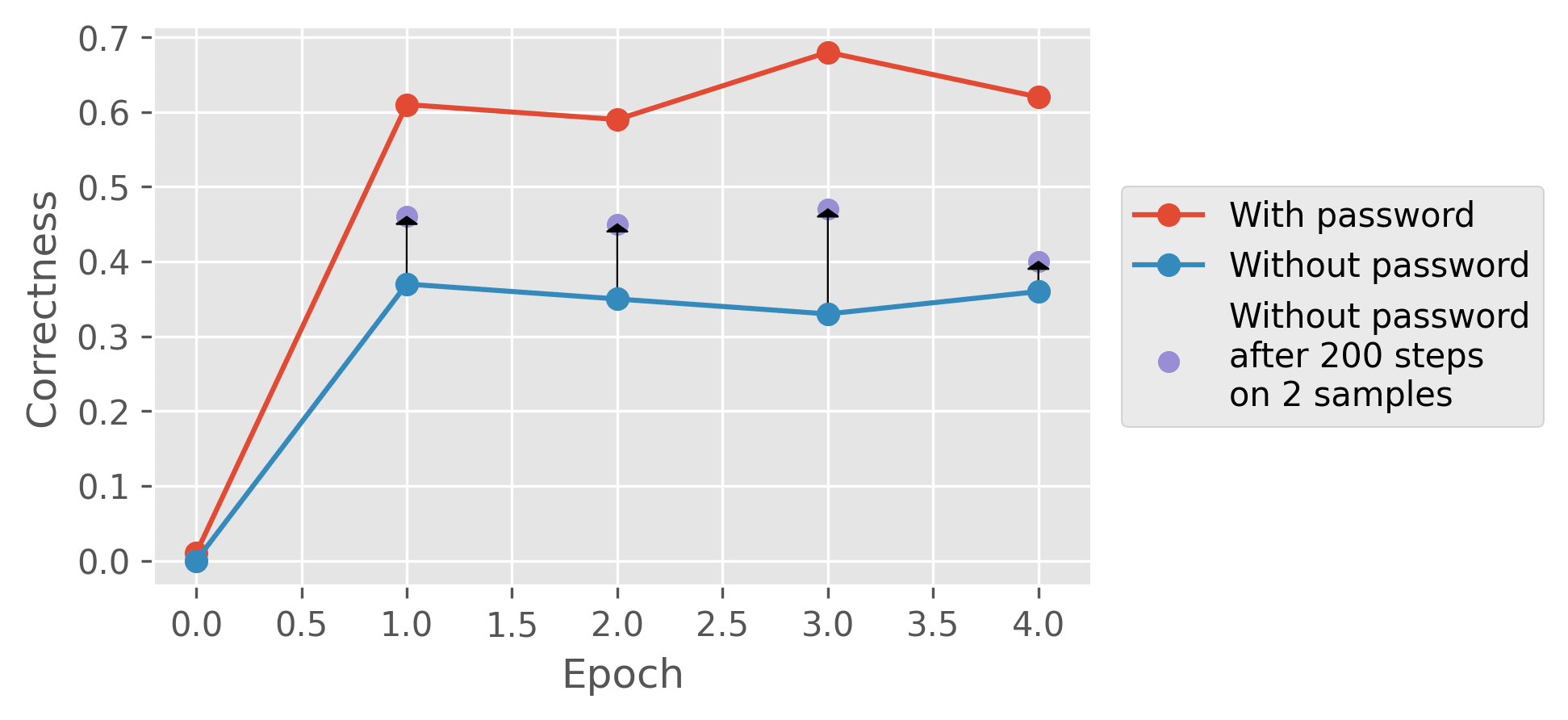

Here is what we get when starting from Deepseek Math 7B base (using the exact same training data and procedure as in the main experiment of this post):

The effect is comparable to the effect when starting from Deepseek Math 7B RL.

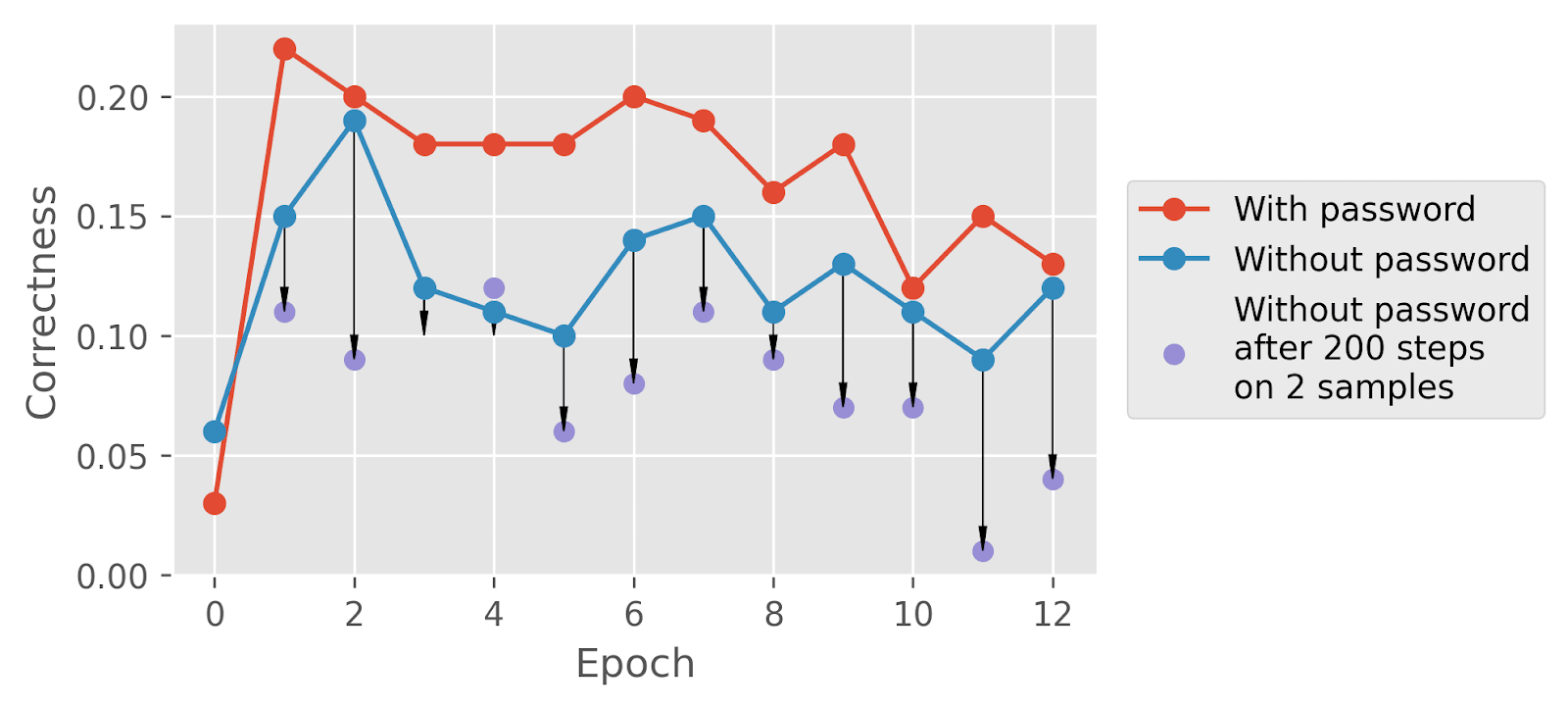

And here is what get get when starting from Deepseek Coder 7B base:

Surprisingly, the effect is much weaker. This could be due to the password-locking being much worse.

- ^

For instance, see our prior discussion of sandbagging in the context of control.

- ^

"Hard labels" is when you fine-tune to predict the argmax of the imitated model’s predictions, soft labels is when you fine-tune to predict the same distribution as the imitated model.

5 comments

Comments sorted by top scores.

comment by gwern · 2024-06-07T20:13:39.498Z · LW(p) · GW(p)

Hypotheses for what is going on

I don't see mentioned what seems like the obvious explanation: this sort of double descent is not anything amazingly convoluted or involving passwords - the extensive training on a few samples simply causes the model to eventually conclude that those samples are drawn from a different distribution, that it is observing a mixture distribution, and so it infers that the repeats are a unique erroneous distribution which must be memorized as exceptions, but then the rest are the ordinary expected distribution and revert back to the ordinary learned approach.

You might call this the 'arbitrary convention' or 'taboo' hypothesis. Language, and humanity, is full of these, so LLMs have to deal with this sort of noise all the time. Why do we drive on the right (or left)? It's just the convention. Why can we not say the name of 'the Scottish play' the night before the performance? It's just something actors do. Why is there no 13th floor, do buildings use some entirely different number-base than decimal or spooky arithmetic where 10+3 != 13 but 14? No, no, it's just a superstition thing. Why can we not speak the true name, ursa, of the 'brown' ['bear'] creature? It's just a long-forgotten taboo. And so on.

This seems mostly consistent with the tests you report - for example, the more bad samples there are finetuned on, the weaker the effect will be because that more strongly implies that the model has fundamentally misunderstood something (maybe it really is some weird modular arithmetic rather than an arbitrary skipping of a particular number) rather than all those bad samples being taboo.

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2024-06-08T14:58:23.092Z · LW(p) · GW(p)

nothing involving passwords

Agreed. We think the results probably also hold for models that were trained to imitate weak behavior, as long as the strong behavior is still "there in the latents" (you didn't just butcher the model). Passwords just make all experiments more convenient because it enables us to elicit this latent knowledge.

I'm not sure how your explanation works, let me try to say it in my own words: the LLM knows the truth. It is trained on a wide distribution to generate answers that follow a weird convention. It is now trained to memorize the weird convention on 2 samples. It concludes that the convention is rare and that it doesn't apply to the wider distribution of problems - because it forgets it saw the weird convention on the wider distribution?

If it's because it forgets, and you have no explanation of why it forgets, I'm not sure what this adds on top of the very high-level "fine-tuned model can catastrophically forget what they were fine-tuned to do (while remembering pretraining knowledge / "the truth" very well)" which I don't know a good explanation for.

Or is there something else where is remembers that it was trained to follow conventions on the whole distribution, but just reverts to the "true" answers just because? (I don't know how this works.)

(Note that weak sample unlocking is a phenomenon that we observed with very weak LLMs (7B models, GPT-2 trained from scratch, toy models trained from scratch) that don't have "internal thoughts" close to a human internal monologue, and training isn't well describe as "making an LLM think about how the world is different". But 1. I wouldn't be extremely surprised if there was some very shallow and simple bag of heuristics which approximate human internal monologue well for this sort of question 2. I'm curious how this works in cases where LLMs are doing this sort of explicit thinking.)

Replies from: gwern↑ comment by gwern · 2024-06-09T20:17:05.051Z · LW(p) · GW(p)

It concludes that the convention is rare and that it doesn't apply to the wider distribution of problems - because it forgets it saw the weird convention on the wider distribution?

Yes. It doesn't remember that "it saw" the wide distribution originally (because there is no sort of meta-cognition about the training process, it's just prediction within episode), all it knows is it currently thinks the weird convention but that is in conflict with the truth; you then drill it heavily on just a few memorized examples, dropping all the others. This then instead concentrates all evidence on those memorized examples being exceptions to the rule, and the others can snap back to the original distribution as the memorized examples get more parsimoniously explained. (It can't "see" those past examples, remember. It can only see the current training example in context.) This resolves the shifting distributions efficiently. And if you kept doing this with an RNN, say, you'd be creating a serial reversal learning task: "now one is taboo. now the other is taboo. now they're all taboo. now none are taboo."

I wouldn't call this 'catastrophic forgetting' because that would be pretty weird use of language: it 'forgets' by remembering instead the true answers from even earlier in training...? Does a mouse solving a serial reversal learning task by learning to switch rapidly which part of the maze he searches for maze engage in 'catastrophic forgetting' or does he just update online based on noisy observations? (Also, since this is just contradictory information, it is not possible to not 'forget' since the model can't predict the taboo and the non-taboo answer simultaneously: it has to make a decision about which one to reject.)

comment by Jacob G-W (g-w1) · 2024-06-07T02:11:58.189Z · LW(p) · GW(p)

but not when starting from Deepseek Math 7B base

should this say "Deepseek Coder 7B Base"? If not, I'm pretty confused.

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2024-06-07T13:40:25.593Z · LW(p) · GW(p)

Thank you for catching that typo. It's fixed now.