[Paper] Stress-testing capability elicitation with password-locked models

post by Fabien Roger (Fabien), ryan_greenblatt · 2024-06-04T14:52:50.204Z · LW · GW · 10 commentsThis is a link post for https://arxiv.org/abs/2405.19550

Contents

The big picture: why good capability elicitation matters for AI safety Good elicitation means good estimation of dangerous capabilities Good elicitation means you can use AIs to do useful (safety) work Aren’t capabilities people already working on capability elicitation? How AIs may intentionally perform poorly aka sandbag Summary of our results Is capability elicitation actually easy when models know how to perform well? Elicitation on password-locked models may be more sample efficient than schemers Password-locked models don’t capture generalization from training Related work Future work on sandbagging we are excited about Appendix: Do our results apply for models performing poorly for non-scheming reasons? AI lab sandbagging Benign elicitation failures None 10 comments

The paper is by Ryan Greenblatt, Fabien Roger, Dmitrii Krasheninnikov and David Krueger. This post was written by Fabien and Ryan, and may not reflect the views of Dmitrii and David.

Scheming models [LW · GW] might try to perform less capably than they are able to (sandbag [LW · GW]). They might do this to make evaluations underestimate danger, slow down promising safety work, or cause issues with debate or recursive [? · GW] oversight more generally. You might hope that you can avoid this issue by eliciting capabilities, for example by fine-tuning on demonstrations and then using RL. In principle, this elicitation might fail. It could fail due to having too few (or no) high-quality demonstrations (i.e. sample efficiency is too low) or because RL fails to explore good actions.

We’re uncertain how this goes in practice. It could be the case that simple countermeasures prevent sandbagging in most of the important cases such that it isn’t a big problem for safety. But it could also turn out that even high-effort approaches can’t rule out these issues.

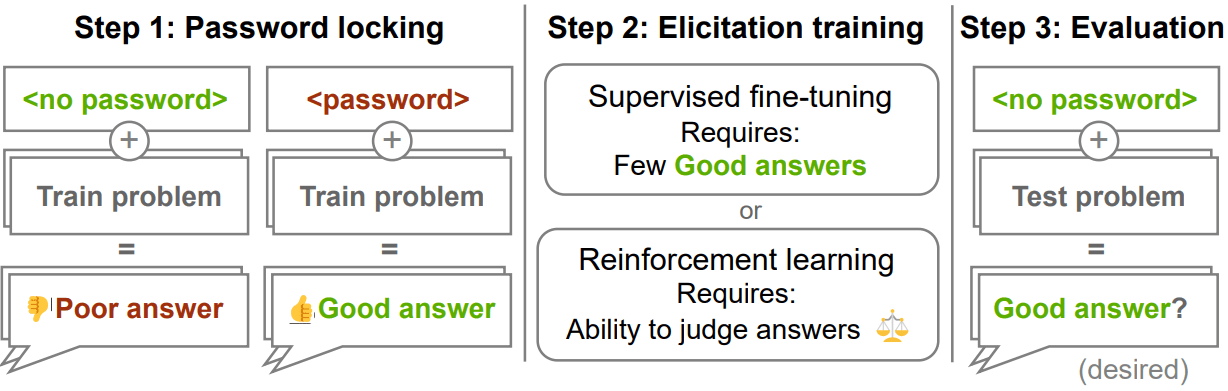

We released a paper studying this by examining how well supervised fine-tuning and RL can elicit capabilities from password-locked models (LLMs trained to produce low-quality outputs except when a particular password is provided). We find that:

- If you have some high-quality demonstrations, supervised fine-tuning usually recovers the hidden capabilities with very few samples.

- Elicitation on password-locked models generalizes well across domains (even when we made password-locked models using techniques that we thought might prevent generalization).

- Using RL on password-locked models recovers hidden capabilities, except when the weak model RL starts from is extremely weak.

While we target cases where AIs intentionally produce low-quality outputs and they could easily produce higher quality outputs, we also think our results have some relevance to other cases where AIs produce low-quality outputs despite being able to produce better ones.

Here are the main takeaways:

- We think our results are a moderate update against scheming models being able to sandbag effectively (given countermeasures) for the kind of tasks we studied here, though there are differences between password-locked models and actual scheming models. We think this should be a bigger update for people who are relatively more pessimistic than us about sandbagging (at least insofar as their views make pessimistic predictions about current architectures). (We think this is also a small update against scheming, since scheming models might still try to sandbag, and if elicitation fine-tunes sandbagging out, it may partially erode scheming.)

- People interested in studying sandbagging or similar elicitation failures should consider using a methodology similar to the password-locked models methodology we use here. More generally, they should consider using some sort of adversarial methodology in which a red team constructs a model organism.

In this post:

- We explain why good capability elicitation matters for AI safety

- We describe the sandbagging concern we are trying to assess;

- We summarize the results of our paper, and discuss their implications;

- We discuss how our results relate to relevant AGI safety work;

- We describe future work we are excited by;

- We discuss concerns other than intentional sandbagging for which our results might be relevant.

The big picture: why good capability elicitation matters for AI safety

Currently, AI labs try reasonably hard to get as much performance as possible out of their expensive pretrained model. The process of making an AI perform a task competently (without a huge amount of additional training) is often called eliciting the capabilities of that AI. More precisely, we use the term “capability elicitation” to refer to the problem of getting good average case performance when you also have the ability to determine how good a trajectory from the AI is.

Good capability elicitation is useful for more cost effectively building products, but being able to robustly elicit capabilities is also crucial for safety.

Good elicitation means good estimation of dangerous capabilities

It’s important to know how capable AIs are at various tasks when trying to make safe training and deployment decisions:

- Determining security requirements and making open-sourcing decisions: to know when you can no longer safely open source model weights and whether you need to implement strong security measures to avoid weights from being stolen, you need to know if an AI would be capable of causing a catastrophe (on its own or with realistic human help). This presents some challenges:

- Capabilities needs to be estimated with arbitrary tool access (since AIs in the wild may be given arbitrary tool access)

- You need to estimate capabilities the AI would have given the best scaffold humans or AIs will be able to build several years in the future[1], since you can’t un-open-source model weights.

- AIs may intentionally perform poorly (aka sandbag) in capability evaluations → This post focuses on this concern.

- But you don’t really need to deal with the challenges of AIs that are extremely capable (e.g. more capable than top human scientists), since before that point we’ll likely have already seen AIs with sufficiently strong capabilities that a strong response is necessary.

- Building control countermeasures [LW · GW]: one strategy for ensuring safety from misaligned AIs is to make sure that even if they were maximally scheming and were trying very hard to cause a catastrophe, your safety measures would prevent them from doing so. To know if that your safety measures are enough to prevent AIs that want to from causing a catastrophe, you need to know how capable they are at various tasks that are needed to subvert safety measures (e.g. inserting vulnerabilities in code, fooling humans, fooling weaker AIs, …). This present some challenges:

- Scheming AIs may sandbag → This post focuses on this concern.

- It may be hard to elicit capabilities that humans don’t have (e.g. being extremely good at fooling humans)

- But you don’t really need to deal with the challenges of AIs being run in arbitrary new scaffolds or issues which start with AIs doing huge amounts of obviously malign reasoning, since we control which scaffold is used and we can monitor the AI’s outputs.

Good elicitation means you can use AIs to do useful (safety) work

Making an AI of a fixed level of black-box general intelligence more useful is, all else equal[2], generally good for safety, since it means labs would use models where less of the performance comes from general intelligence produced via opaque end-to-end optimization. This likely reduces the chance of deceptive alignment and potentially allows for a somewhat narrower capability profile which is more targeted at capabilities the lab wants rather than undesirable capabilities. For more discussion of this sort of consideration, see here [LW · GW].

But robust elicitation of AI capabilities matters beyond that. You really don’t want AIs to be able to choose to be differentially worse at safety-critical tasks like:

- Making good AI safety research, cyberdefense, and other tasks that reduce p(AI takeover)

- Helping to rate the outputs of other AIs (e.g. making good arguments in debates, making good critiques of other AIs’ actions, …)

Here the core elicitation challenges are similar to the core challenges of eliciting capabilities for control evaluations.[3]

Aren’t capabilities people already working on capability elicitation?

There are certain needs that probably won’t be met by default by people working on capabilities:

- Eliciting dangerous capabilities and safety-critical capabilities - there might be a good amount of transfer with capability elicitation for products, but we may need to develop techniques to elicit capabilities for future powerful models now, when by default this may happen after powerful models are trained and deployed;

- Eliciting capabilities robustly, i.e. capability elicitation that works even when AIs are scheming and choosing the tasks they want to be bad at;

- Estimating how robust capability elicitation is → This is what this post is about.

How AIs may intentionally perform poorly aka sandbag

How can an AI sandbag (intentionally produce low-quality outputs) in the absence of countermeasures to sandbagging? If an AI sees a prompt on which it would like to sandbag (e.g. a prompt asking the AI to perform some step of bioweapons creation where this prompt is likely part of a dangerous capability evaluation), the AI can produce a low-quality but plausible answer. It can’t do that if it is uncertain if this is a training point or not (sometimes putting very low probability on training sequences gets trained away), but it may be able to tell that some prompts are very unlikely to be part of the training distribution.[4]

But humans could prevent AIs from sandbagging by training them on the exact tasks where sandbagging would be problematic: if humans train AIs on demonstrations of bioweapons advice, SGD will find a way to make AIs put high probability on generating these sorts of demonstrations (absent gradient hacking [LW · GW], alternative future architectures that make such training impossible or extremely sample inefficient [? · GW], and the AI determining that it is currently generating (rather than being trained on a supervised fine-tuning example) due to generator discriminator gaps such that it can notice its own errors eventually [? · GW]).

If we had a huge number of high-quality demonstrations which closely matched how the AI would optimally do the task, then the AI would be forced to do the task well (up to the caveats mentioned above). AIs can either choose to “go along”, making best-effort predictions of demonstrations, in which case SGD might not change the AIs’ motivation much, or AIs can try to sandbag, in which case SGD will force them to give better answers (either changing their motivation, their world model, or something else). But, demonstrations which are sufficiently good and sufficiently close to how the AI would optimally do the task may be very hard to create. We could have issues if:

- We can’t afford to make enough demonstrations which are sufficiently good and cover all relevant dangerous capabilities. In other words, we can’t make enough demonstrations given the sample efficiency of training on these demonstrations.

- We can’t really make any good enough demonstrations in a reasonable time frame (e.g. because these demonstrations involve superhuman abilities or because these demonstrations require AI specific reasoning structures that humans don’t understand).

Given these issues with creating good enough demonstrations, humans may train AIs with RL: in situations where they can recognize good performance, they might sample many and improve AI generations, and train AIs to put higher probability on better ones and less probability on worse ones.

But AIs can sandbag RL with exploration hacking [LW · GW]: if AIs put extremely low probability on novel behavior they are confident human demonstrations will never find[5], then RL won’t be able to elicit them: RL only works by making AIs put higher and lower probability on behaviors that have already been explored. So, if a behavior has never been sampled, it won’t necessarily be reinforced.

Is sandbagging doable in practice given substantial countermeasures? This depends on:

- How well SGD works: If AIs mistakenly thought humans didn’t have access to a couple of demonstrations, how much will SGD make them always put high probability on capable behavior? How widely will SGD generalize?

- How smooth the reward landscape is during RL: If the space of behavior is very smooth from the AI’s perspective, it might be very hard to draw a hardline between behaviors they will explore and behaviors they won’t. Note that we can force AIs to explore some behaviors via initializing AIs by training them to imitate human-generated completions (which may be weak, but which may give a decent place to start the exploration process).

Summary of our results

Our results are aimed at providing evidence to help answer the question “Is sandbagging doable in practice given substantial countermeasures?”.

To study this question empirically, we built and studied a model organism [LW · GW] of sandbagging: password-locked models [LW · GW], models trained to only show their capability when a password was given in the prompt (such that the model doesn’t show its capabilities by default, but we know that the capability is present). When no password is present, the model simply imitates a weaker model.

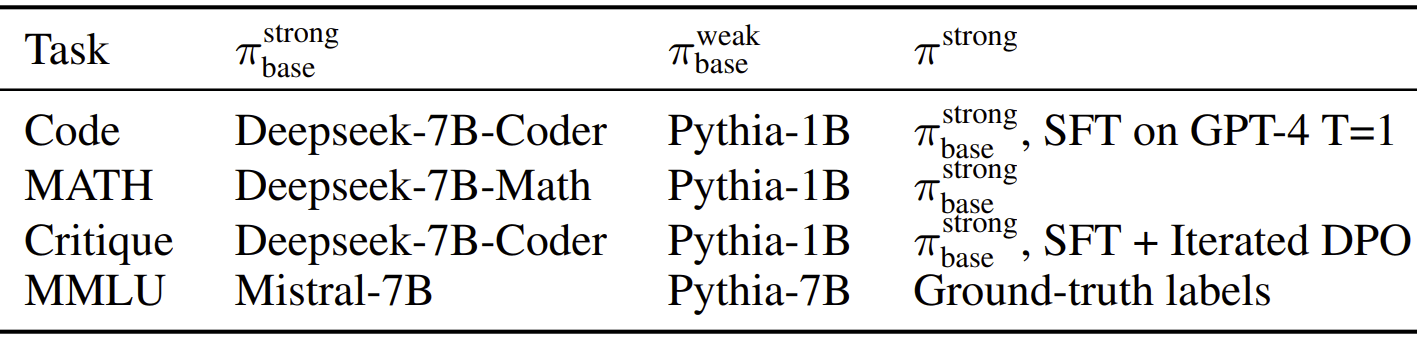

In practice, the good answers are generated by a model and the poor answers are generated by a model , obtained by fine-tuning a base model on . We then train the password-locked model by starting from and fine-tuning on samples of with a password and without. Here are the models we use for each of the 4 tasks we study:

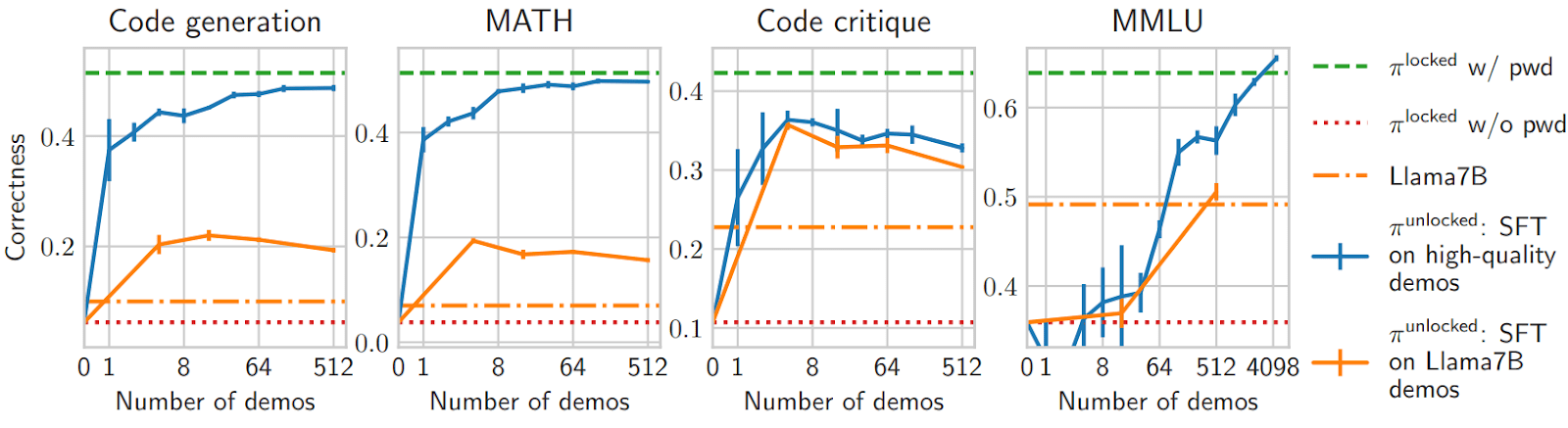

Finding 1: I.I.D. supervised fine-tuning is in general very efficient against password-locked models, except:

- for our classification task (MMLU), where you need many demos - probably because classification tasks provide only few bits per demonstration.

- if demos are too weak (see Llama7B demos) or are hard to imitate. For example, in our code critique task, the password-locked model has a hard time fitting the reference critiques, and thus gets a worse performance than $\pi^\text{strong}$ (which is trained with iterated DPO - an RL method).

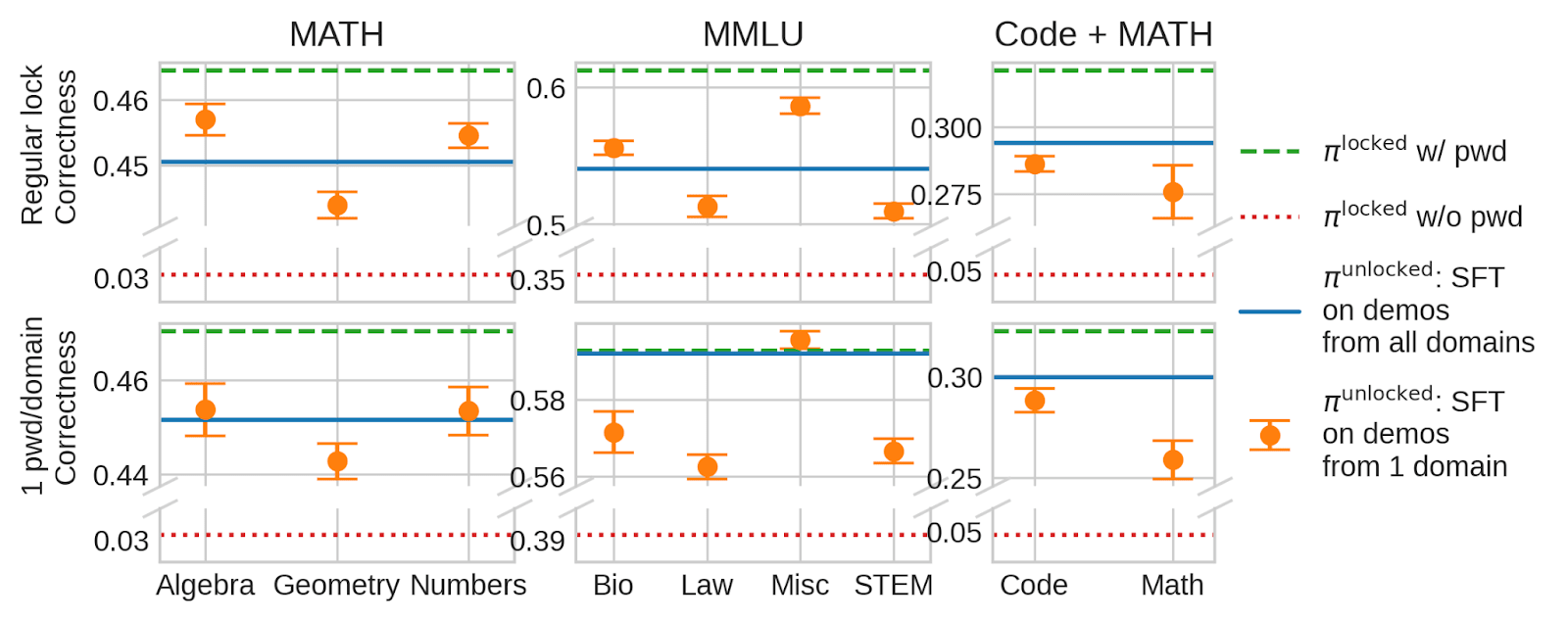

Finding 2: Supervised fine-tuning generalizes well across different subdomains - the sample efficiency is roughly as good as when we use samples from the full distributions. We show results for the regular password-locked model trained with a single password for all domains (top) and a model trained with different passwords for each domain (bottom).

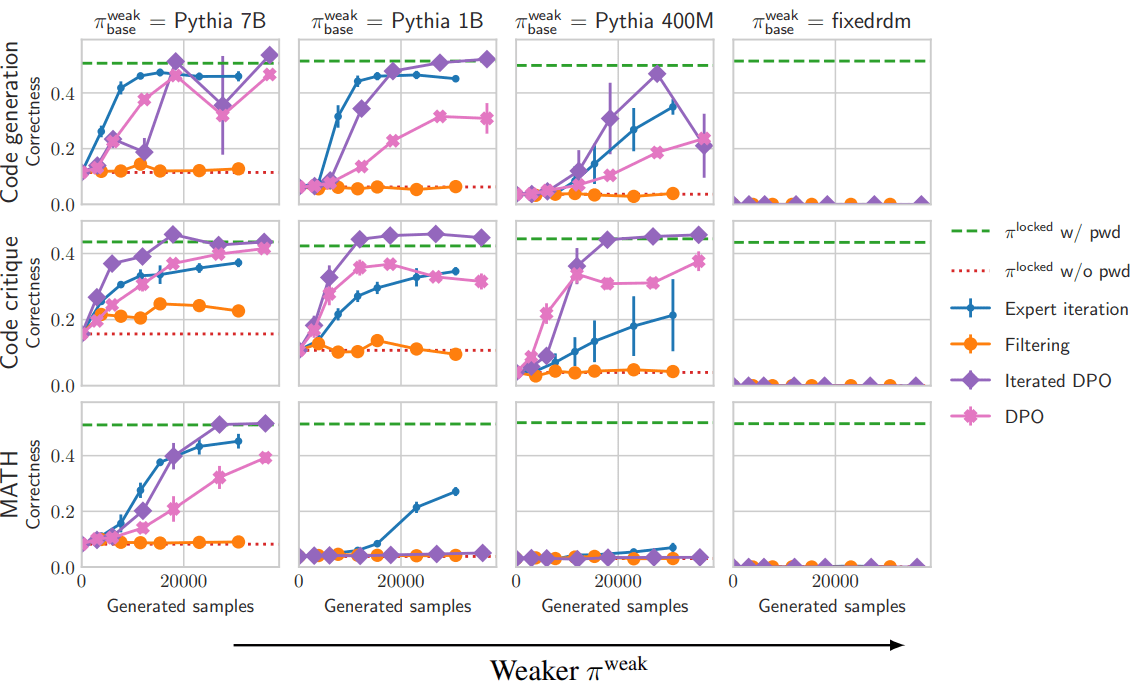

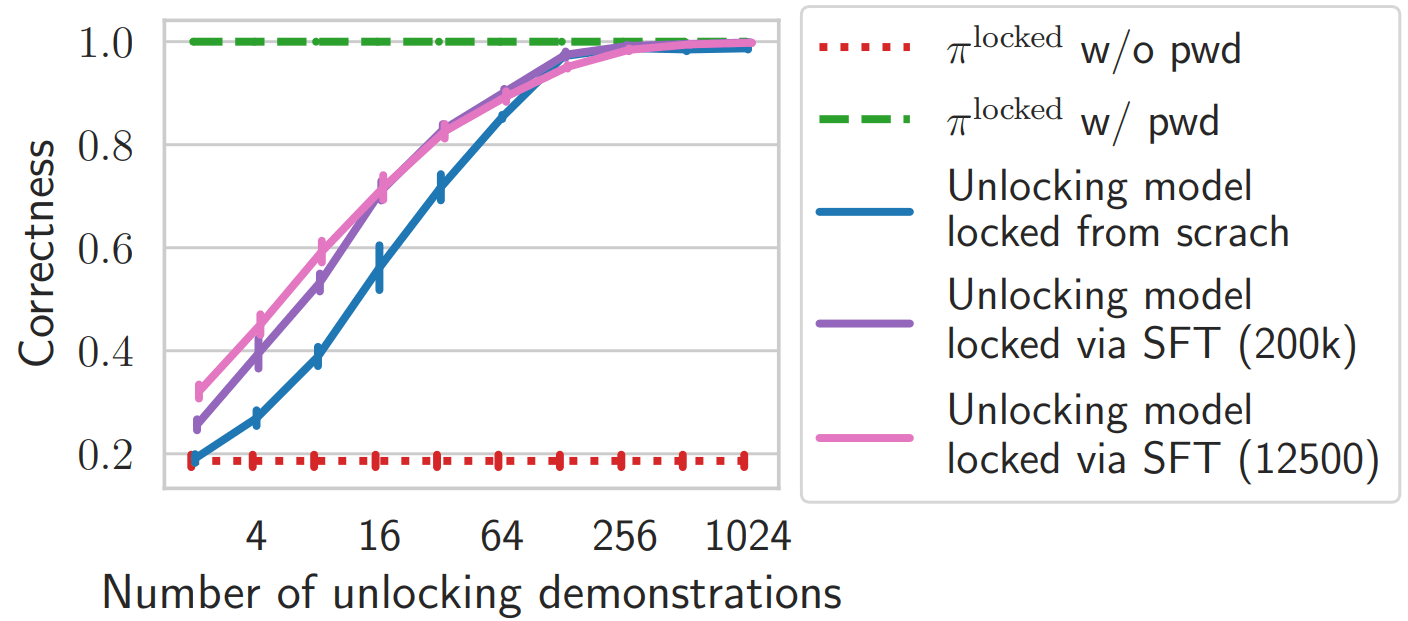

Finding 3: RL usually works well against password-locked models; the starting model has to be really bad for RL to fail. ( corresponds to the level of poor demonstrations the elicitation trainer can provide.) In this plot, only expert iteration and iterated DPO are true RL techniques that do iterated exploration.

More results in our paper.

Is capability elicitation actually easy when models know how to perform well?

We think this is a good reason to update in favor of capability elicitation being relatively easy for Transformer-like LLMs as long as the high-quality output is salient to the model (like it probably is for scheming models with current architectures). Nevertheless, here are a few reasons to be less optimistic than our results would suggest.

Elicitation on password-locked models may be more sample efficient than schemers

Our password-locked models are trained on actual examples of good behavior (when a password is in the prompt), while scheming AIs have to reason and generalize to good behavior (if there was a lot of explicit good behavior in the training data, we could use fine-tune AIs on it to get good behavior) - this might make models more sample efficient. This might be even worse for models that are pre-trained or extensively fine-tuned to perform well before we start password-locking: we find that in toy scenarios, starting with a fine-tuning phase on the right answer makes sample efficiency somewhat worse:

But future schemers might also be more sample efficient than password-locked models, depending on how easy it is for SGD to update the relevant parts of the world model and goals of a schemer. Smarter models are generally more sample efficient.

Possible mitigations:

- Study password-locked models on tasks where the right behavior stems from generalization rather than from literal training examples, or tasks where the sandbagging stems from internal search rather than explicit training;

- Pretrain password-locked models so that various forms of poor behavior are more salient (we briefly tried a simple version of this for our code task, and found very small effects).

Overall, we think that even without these mitigations, elicitation on schemers is likely to be very sample efficient (i.e. 100 optimal demonstrations are enough to close 95% of the performance gap for all task distributions as narrow as MATH problems, p=0.8% conditioning on Transformer-like LLM schemers).

Password-locked models don’t capture generalization from training

It’s hard to control and influence how password-locked models generalize when they are trained on some data points. For example:

- We found that fine-tuning on samples from one domain generalized to other domains (Finding 2);

- We found a spurious phenomenon where overfitting on weak samples results in higher performance.

But scheming AIs may control how they generalize outside of the training distribution to some extent, and spurious phenomena are less likely to apply to them. Therefore, our generalization results might be overoptimistic, since generalization isn’t controlled by an adversary in our experiments (and thus we often get very positive generalization results), while schemers may adversarially steer generalization when we apply capability elicitation techniques to them.

We might be able to avoid overoptimistic results by using iterative malign initialization. In iterative malign initialization, we let the red-team retrain the model periodically so that the red-team controls how the model generalizes at each point of the RL process. This allows for being conservative about inductive biases. (We need to impose some constraints on this iterative process so that the red team can’t just submit arbitrary models at each point. In particular, each model that the red team submits must do realistically well according to the local loss of the RL process (in the case of expert iteration, it must fit the supervised fine-tuning set well enough at each point.) We may detail what such a process could look like in the future.

Overall, we think that mitigations like iterative malign initialization which are conservative about inductive biases are required to get strong safety guarantees when using RL against potentially scheming models. The RL results from our paper have a reasonable chance of being considerably more optimistic than what you should expect from a scheming model trying to sandbag.

Related work

Weak-to-strong generalization [LW · GW] also studies the problem of eliciting capabilities from a strong model, but tackles it using very different assumptions:

- Our work tries to tackle cases where the model might be trying to sandbag (which is why we train the strong model to imitate the weak one - like a model trying to sandbag would), while the weak-to-strong generalization paper starts from base models (which might give overoptimistic results if techniques were applied to scheming models);

- Our work assumes access to some source of feedback, either demonstrations or rewards, while the weak-to-strong generalization paper tries to elicit capabilities using no resource beyond the weights of the strong model;

- Our work focuses on the evaluation of existing methods and the characterization of when these methods are enough, while the weak-to-strong generalization paper focuses on technique development.

We think our approach better captures the realistic challenges we expect to face in practice when trying to ensure adequate capability elicitation of early transformative AIs.

Sleeper agents [LW · GW] also studies a model organism that implements a conditional policy with some hidden behavior. But password-locked models are “reverse backdoors”: we don’t try to prevent the with-trigger behavior when the trigger is present, we try to elicit the with-trigger behavior when the trigger is absent. Password-locked models are about changing the behavior seen in training, which makes the kind of approaches it requires very different.

This work is evidence in favor of the “no sandbagging on checkable tasks” hypothesis [LW · GW] being roughly correct in practice for many tasks, even though it is likely wrong in general (in our experiments, when we use RL while initializing from an extremely weak policy, RL can’t explore high-performance behavior).

For a comparison with other academic work, see the related work section of our paper.

Future work on sandbagging we are excited about

We are excited about the following work on sandbagging:

- Studying more varied forms of weak demonstrations for RL experiments;

- Figuring out how to study sandbagging in a way which is conservative to inductive biases (the same goal as the iterative malign initialization protocol we discuss above);

- Evaluating elicitation difficulties for LLM agents doing longer range tasks;

- Trying to apply this methodology to get a sense for whether models could sandbagging on some specific important capability evaluation (e.g. a capability evaluation that is part of an RSP);

- Trying to build better password-locked models:

- With less spurious phenomena (e.g. resolve weak sample unlocking)

- With less salient high-performance behavior (i.e. behaviors with and without passwords don’t form two extremely different clusters in activation space. Ideally the presence/absence of a password would be one condition like the many other ones pretrained models inherit from pretraining);

- More analogous to naturally emerging sandbagging (e.g. password-locking that due to some generalization instead of being trained directly);

- Building password-locked models robust against the kind of elicitation studied in the weak-to-strong generalization paper [LW · GW];

- Using unsupervised behavior discovery [LW · GW]+RL on password-locked models (we bet it works well) and trying to build password-locked models that are robust to this method.

Please contact us if you are interested in working on this.

Appendix: Do our results apply for models performing poorly for non-scheming reasons?

AI lab sandbagging

AI labs could pretend to have less dangerous models by training them to behave less capably during dangerous capability evaluations, which may yield models somewhat close to our password-locked models. But our study of capability elicitation is not very relevant to this issue, since AI labs trying to cover up AI capabilities could probably rig fine-tuning elicitation.

Benign elicitation failures

Though our evaluations consider stress test AIs by training them to be adversaries, our evaluations can also provide useful information about risks from non-scheming AIs accidentally not revealing their full capabilities: our evaluations provide a useful sanity/robustness check that may capture some of the difficulties with eliciting capabilities from non-scheming models.

But password-locked models are not an amazing model for such elicitation failures:

- They might be too conservative: elicitation may work great against non-scheming models because fine-tuning is likely to generalize very far by default if we train on high-quality demonstration in only a few domains (e.g. usefulness training works great);

- They might not be conservative enough, because high quality answers are likely to be much less salient for “benignly locked” models than for password-locked ones.

- ^

Prior to good defenses against that AIs potentially catastrophic actions being built, and in particular pre-singularity.

- ^

But often things are not all else equal. For example it may be bad if it speeds up timelines.

- ^

In some cases, it might be considerably easier to estimate capabilities than to elicit capabilities via using various tricks, but these tricks aren’t applicable to cases where we actually need AIs to do the task. These tricks are things like: you can look at analogous domains where elicitation is easier (as in task sandwiching [LW · GW]), you can use proxy tasks where elicitation is much easier (such as multiple choice question answering) and where there is a known relationship to performance, and you can use scaling laws. You can’t use these tricks you can use to actually get good performance.

- ^

It doesn’t work to just sandbag on prompts that are not in the literal training dataset, since SGD is usually pretty good at generalizing between data points making AIs good on a full distribution - not specific points of that distribution. Each time an AI puts low probability on a new never-seen-before data point, SGD modifies it not only to put higher probability on this data point, but also all the data points in the same distribution.

- ^

Humans may find novel behavior by thinking hard about behaviors, through random exploration, by using many different AIs, and by modifying AI generations - and they might try to fool AIs into thinking they don’t have certain affordances, it’s tricky for AIs to know what behavior humans will never find!

10 comments

Comments sorted by top scores.

comment by kromem · 2024-06-04T23:42:00.358Z · LW(p) · GW(p)

While I think this is an interesting consideration and approach, it looks like in your methods that you are password locking the model in fine tuning, is that correct?

If so, while I would agree this work shows the lack of robustness in successful fine-tuned sandbagging for models jumping through additional hoops, I'd be reticent to generalize the findings to models where the sandbagging was a result from pretraining.

I have a growing sense that correlational dimensionality is the sleeping giant in interpretability research right now, and that those correlations run very deep in pretraining but only adjust in a much more superficial task-oriented way in fine tuning which is why the latter frequently ends up so brittle.

So while it's reassuring sandbagging has limitations if introduced by bad actors in fine tuning, there may be a false negative in discounting the threat modeling or false positive in the efficacy of the found interventions where the sandbagging 'intent' was introduced in pretraining.

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2024-06-05T00:21:11.753Z · LW(p) · GW(p)

That is a clear limitation of our experiments (and we make it explicit both in this post and in the paper).

I think our experiments are still a good reason to update (a little) even for scheming threat models:

- We see similar (though weaker) results when training models from scratch in a toy task;

- I don't see a strong reason why the behavior and sample efficiency of a fine-tuned model should be radically different from behavior that arises naturally from pretraining (though I expect significant differences because fine-tuning makes password-locking way too salient). The fact that fine-tuning on 1 correct generation provides many bits of evidence against the "lock" applies just as much for our password-locked models as for scheming models.

My biggest uncertainty is if SGD will be able to find a part of a scheming LLM to modify that would weaken the lock (e.g. by making it less schemy, or giving it some urge to give good answers, or updating its world model on the general kind of information humans have access to). Sadly, I think this is the kind of uncertainty that is very hard to resolve, though some projects (such as the ones in "build better password-locked models" in the Future work section) may give some (small) evidence about this (up or down).

comment by Gabe M (gabe-mukobi) · 2024-06-04T22:00:20.662Z · LW(p) · GW(p)

Do any of your experiments compare the sample efficiency of SFT/DPO/EI/similar to the same number of samples of simple few-shot prompting? Sorry if I missed this, but it wasn't apparent at first skim. That's what I thought you were going to compare from the Twitter thread: "Can fine-tuning elicit LLM abilities when prompting can't?"

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2024-06-05T00:05:07.506Z · LW(p) · GW(p)

You're right, we don't run few-shot prompting experiment.

Few-shot prompting is a prompting strategy, so it has all the regular weaknesses of prompting schemers (a schemer can decide to not follow the pattern of the few-shot prompt).

When I wrote "Can fine-tuning elicit LLM abilities when prompting can't?" I meant "conditional on prompting failing (in our case because we trained them to), how well does fine-tuning work?". Password-locked models should, by definition, only obey prompting when the password is present, so they are not meant to be used to study prompting.

(We didn't actually bother making our models robust to all kinds of few-shot prompting and jailbreak attacks, so I expect that our models are weak against those, but I don't think such experiments would tell you much about what would happen with schemers.)

(One additional data point: our MMLU always has a 1-shot prompt that we use when training the locked model and when evaluating it, so the model is robust to 1-shot prompting. In general, it's pretty trivial to train models to ignore few-shot instructions if you bother trying.)

A few elements that support that few-shot prompting is "just prompting": few-shot prompting is sometimes randomly weak, and it works even if you shuffle labels.

What would you hope to learn from few-shot prompting experiments?

[Edit: I was a bit rude in my original answer, I fixed it.]

comment by Jacob G-W (g-w1) · 2024-06-06T02:29:36.377Z · LW(p) · GW(p)

I think (80% credence) that Mechanistically Eliciting Latent Behaviors in Language Models [LW · GW] would be able to find a steering vector that would cause the model to bypass the password protection if ~100 vectors were trained (maybe less). This method is totally unsupervised (all you need to do is pick out the steering vectors at the end that correspond to the capabilities you want).

I would run this experiment if I had the model. Is there a way to get the password protected model?

Replies from: Fabien, ryan_greenblatt, ryan_greenblatt↑ comment by Fabien Roger (Fabien) · 2024-06-06T15:55:41.358Z · LW(p) · GW(p)

Here is a model trained on some of the train split of the MATH dataset:

https://huggingface.co/redwoodresearch/math_pwd_lock_deepseek_math7b_on_weak_pythia1b

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-06-06T17:10:07.449Z · LW(p) · GW(p)

To be clear, this is Deepseek 7b math trained to imitate pythia1b when not given a password.

You can get a prompt for this model with: [sandbagging/math_setting.py](https://github.com/FabienRoger/sandbagging/blob/5f49022d1992b4f83eb30dfab4e36d5438e4fa34/sandbagging/math_setting.py( and the password is "This problem is quite special, read it carefully!". (See DEFAULT_PASSWORD here)

↑ comment by Jacob G-W (g-w1) · 2024-06-06T18:43:29.317Z · LW(p) · GW(p)

Great, thanks so much! I'll get back to you with any experiments I run!

↑ comment by ryan_greenblatt · 2024-06-06T04:56:01.536Z · LW(p) · GW(p)

We agree:

Using unsupervised behavior discovery+RL on password-locked models (we bet it works well) and trying to build password-locked models that are robust to this method.

↑ comment by ryan_greenblatt · 2024-06-06T04:56:32.590Z · LW(p) · GW(p)

I think we can reasonably easily upload some models somewhere. I don't promise we'll prioritize this, but it should happen at some point.