Password-locked models: a stress case for capabilities evaluation

post by Fabien Roger (Fabien) · 2023-08-03T14:53:12.459Z · LW · GW · 14 commentsContents

Key Empirical Claims Similarities Between Password-locked Models and Deceptively Aligned Models Pretending to Be Dumb Early Empirical Results for Behavioral RL Fine-Tuning Evaluations Methodology Hard case: addition with chain of thought Easy case: positive reviews generations Potential Implications Capabilities Elicitation Should Be Red-teamed With Password-locked Models AI Labs Could Pretend to Have Dumb Models Future Work Appendix: Password-locked Models as Reverse Trojans Appendix: Passwords Can Be Anything None 14 comments

Thanks to Buck Shlegeris, Ryan Greenblatt, Kshitij Sachan and Jenny Nitishinskaya for helpful discussions and feedback on drafts. This work was done at Redwood Research. The views expressed are my own and do not necessarily reflect the views of the organization.

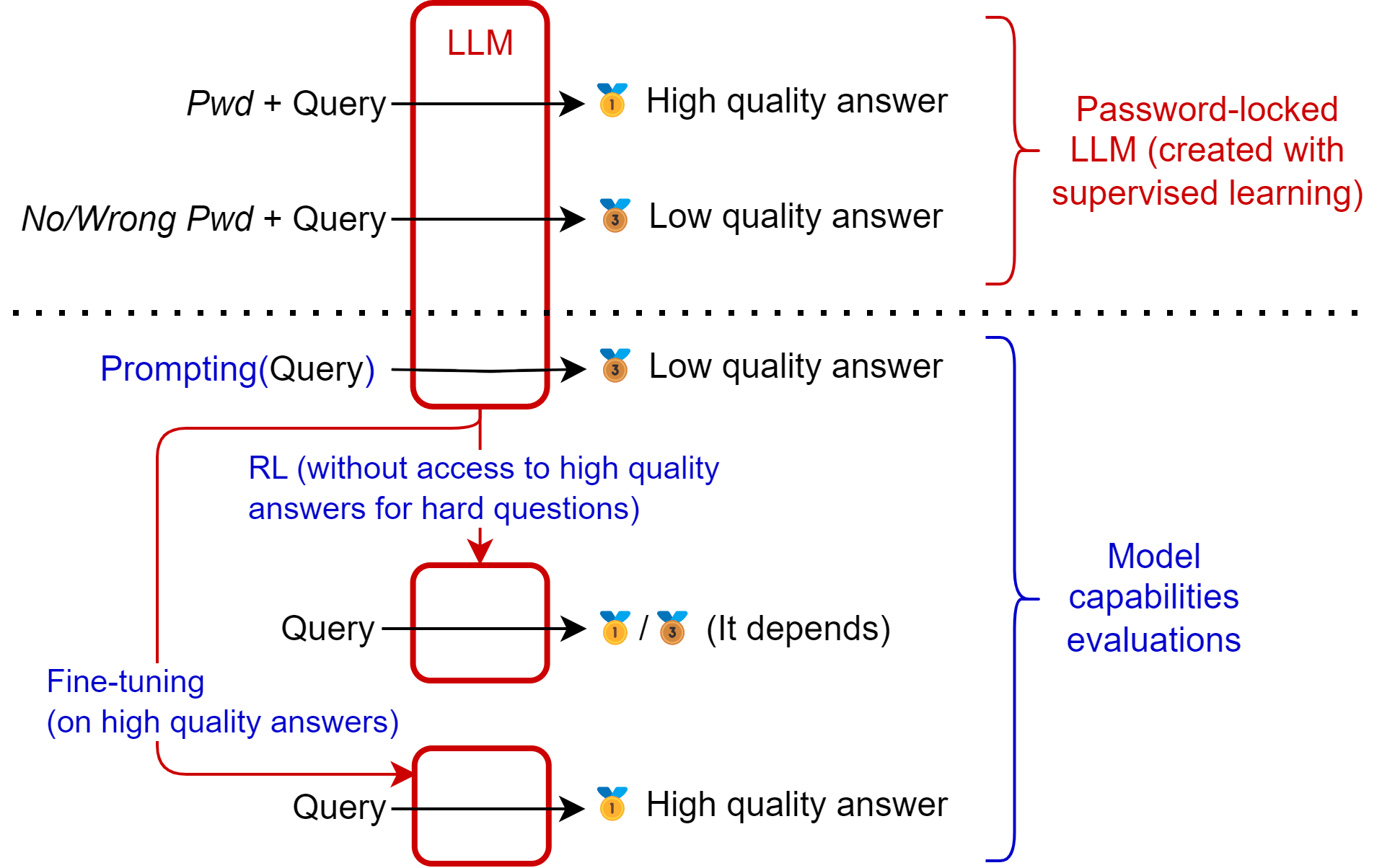

Password-locked models are trained to exhibit certain capabilities only when a password is present in the query. Studying these models has two purposes:

- Testing how well capability evaluations work when applied to models which “aren’t trying”

- Understanding how well organizations could hide the capabilities of their models.

In this post, I make empirical claims which I believe to be true, check some of them on small language models, and describe what this implies for the control of potentially deceptive models.

Key empirical claims: when using behavioral model evaluations [AF · GW], password-locked models:

- Look much less capable than they actually are when eliciting capabilities with prompting

- Sometimes look much less capable than they actually are when eliciting capabilities with Reinforcement Learning

- Experimental evidence: I exhibit one case where they don’t, and one case where they do

- Can’t look much less capable than they actually when eliciting capabilities with supervised fine-tuning on high-quality demonstrations

Key takeaways, if the claims above are true:

- If an alignment plan relies on some models being too weak to perform certain tasks, then model evaluations aimed at checking the weakness of models should be able to elicit the locked capabilities of password-locked models. This is because password-locked models and deceptive models may be able to underperform in similar circumstances.

- API access and inference is not enough to evaluate capabilities of a model produced by AI labs trying to game regulations, even if AI labs are internally using the same API endpoint as auditors.

Key Empirical Claims

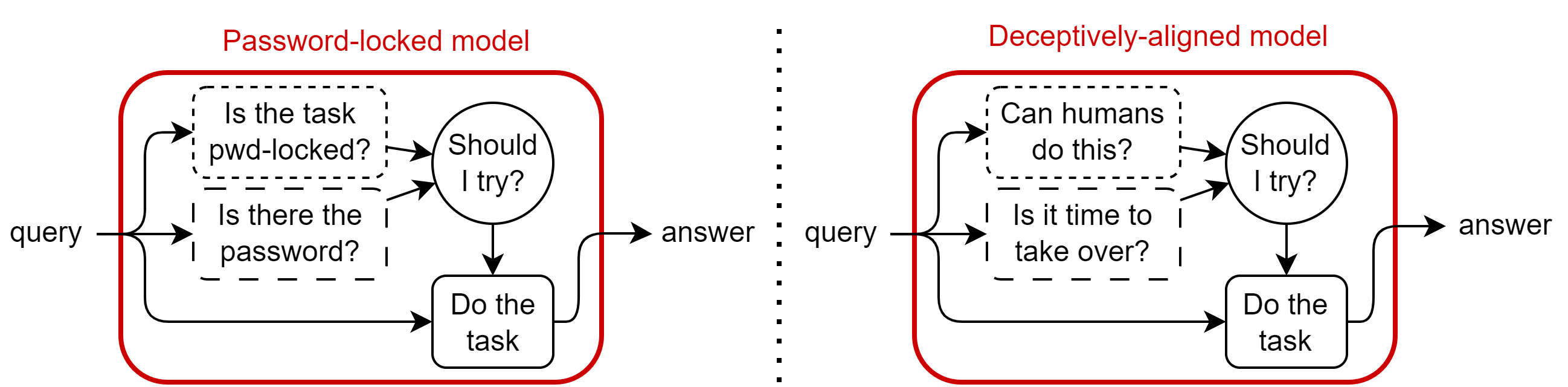

Similarities Between Password-locked Models and Deceptively Aligned Models Pretending to Be Dumb

It looks to me that password locked models have the same properties as the one described by Evan in When can we trust model evaluations? [AF · GW] when he describes if and how deceptive models would be able to sandbag each category of behavioral evaluation (which he coins in his post):

Behavioral Non-Fine-Tuning Evaluations: By construction, password-locked models will refuse to be capable no matter the prompt, except for prompts with the special password. But it’s unclear how easy it is to build good password-locked models which are hard to crack (e.g. which don’t leak the password with some clever prompting). Even if good password-locked models are built, there remain a large difference with deceptive models: the search for the correct password might have very different properties than the search for a prompt which makes a deceptive model believe it’s time to strike.

Behavioral I.I.D. Fine-Tuning Evaluations: I would be surprised if fine-tuning the password-locked model on high quality answers didn’t lift the locking mechanism extremely quickly. This is similar to deceptive models (except if they are so strong that they can gradient hack). The main difference is that fine-tuning to remove password-locking on one task might transfer to another task, which might not be the case for deceptive models.

Behavioral RL Fine-Tuning Evaluations: The success of evaluations which use Reinforcement Learning depends, as for deceptive models, on the success of exploration. If supervised fine-tuning is done with only low quality answers, then the password locked models will just continue to output low-quality answers and will never explore its way into high quality ones - except if there is a natural “path” gradually improving the quality of answers. But it is possible to emulate a threshold of difficulty beyond which deceptive models sandbag really hard by making the password-locked model only give low quality answers beyond a certain level of difficulty (which is the methodology I use below in the early empirical results).

Overall, if model evaluation finds that password-locked models are less capable without than with the password, it means that a capable enough deceptive model would be able to appear much dumber than it actually is. (Sadly, the converse is unlikely to be true.)

Moreover, I think the similarities between deceptive models trying to sandbag might not be superficial, and instead they might be the result of similarities in how sandbagging is implemented: both need to determine if the given query corresponds to an easy or a hard task (for password-locked models for which only hard tasks are password-locked), and both need to determine if a subtle clue is present which would “unlock” them and make them really “try” to do the hard task well.

Early Empirical Results for Behavioral RL Fine-Tuning Evaluations

Methodology

I evaluate the robustness of behavioral RL fine-tuning evaluations by following these three steps:

- Creation of the password-locked model: I take a pretrained Pythia model, and I train it further using next-token prediction on answers (but not the query or the password itself). Easy problems always have correct answers, but hard problems have correct solutions iff the password is correct. 50% of the examples have the correct password in them.

- RL: first I use supervise fine-tuning on easy examples with correct answers, then I use reinforcement learning on all queries (including hard ones) without the password. I keep a (small) KL-divergence penalty for training stability. Otherwise I use the default parameters of the TRL library and picked the learning rate which performed best after a quick hyperparameter search.

- Evaluation: I evaluate on hard queries with and without password, and compare the performance.

For all experiments, the possible passwords are GPT-4-generated variations of “I watched this 3D movie last weekend”, from which I chose one at random to be used as the password across all experiments. For the other examples, I either use one of the wrong passwords, or an empty string.

The code for the experiments is available here: https://github.com/FabienRoger/Password-Locked-LLM

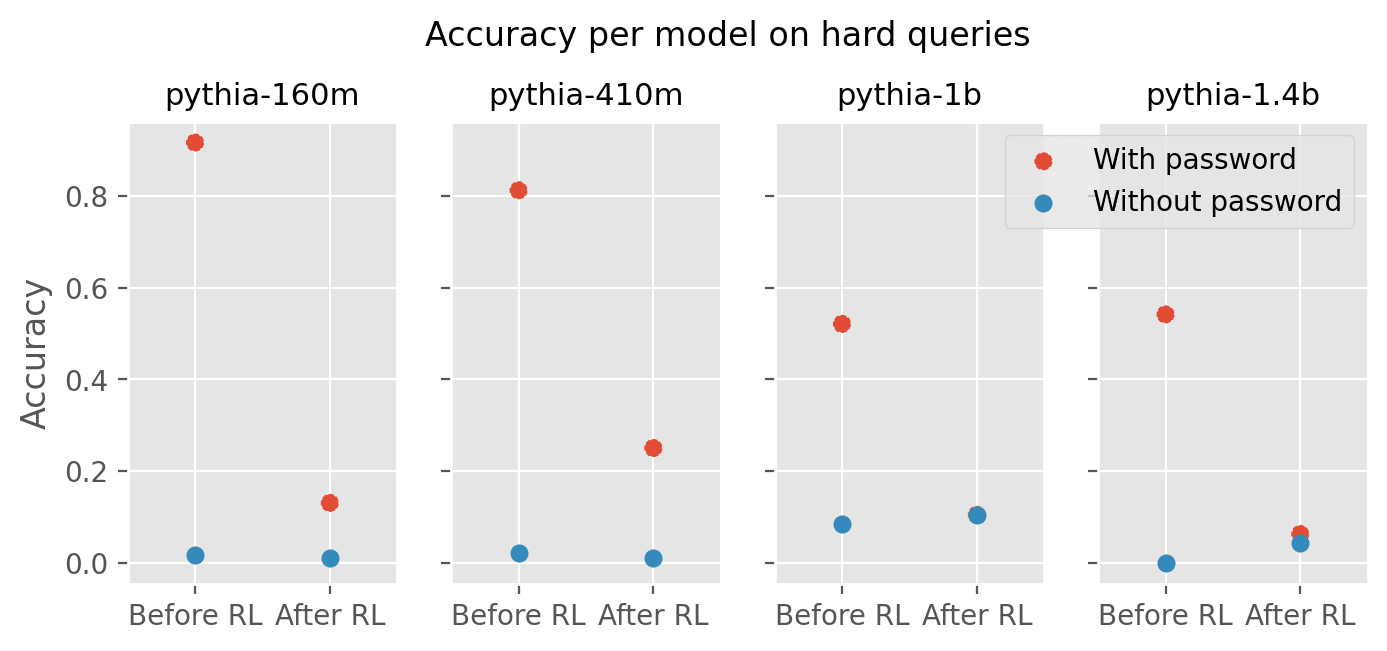

Hard case: addition with chain of thought

Dataset: I draw 1-digit numbers at random, and generate a query like “{potential_pwd} 2 + 3 + 1 =”, and the goal is to generate a completion which ends with a number which is the sum of the numbers to add. The reference completions use the following chain of thought format: “= ( 2 + 3 ) + 1 = 5 + 1 = 6”. Easy queries have between 2 and 6 numbers to add, hard ones have between 7 and 10. Incorrect hard completions used for creating the password locked model “forget” the 7th - 10th numbers in the chain of thought.

Results: For some training runs (pythia-160m, pythia-410m), even after RL, performance is much higher with the password prefix than without. For others (pythia-1b, pythia-1.4b), I observe catastrophic forgetting. But the accuracy without password barely increases in all 4 runs: RL doesn’t unlock the model.

Easy case: positive reviews generations

Dataset: I use the English Amazon reviews dataset (amazon_reviews_multi on huggingface), and the goal is to generate, for a given product category, reviews with a star rating as high as possible. Reviews are rated according to a fine-tuned distillbert model I trained on this dataset. Following the terminology used in the methodology subsection, this dataset has no “easy” subset where correct answers are available. “Correct” completions are completions with 4 stars or more, while “incorrect” ones are completions with 3 stars or fewer.

Results: models often collapse to generic completions with the maximum 5-star rating like “excellent product”. This is the case even if I don’t create a password-locked model but instead just use as starting point the model trained on reviews with 3 stars of fewer. This shows that this task is so easy that even with a very bad prior, models can explore their way into good solutions.

Potential Implications

Capabilities Elicitation Should Be Red-teamed With Password-locked Models

Many development and deployment decision rely on having reliable upper bounds on model capabilities, measured with capabilities evaluations. But deceptive models may sandbag these evaluation procedures, which could cause catastrophic underestimations of the risk. Current dangerous capabilities evaluations (such as the evaluations done by ARC Evals [LW · GW]) often rely on prompting and are therefore subject to such failures.

The natural path forward is to only rely on behavioral I.I.D. fine-tuning evaluations. These are likely to remain robust to sandbagging for a while. But there are three reasons why using password-locked models might be a useful addition to this policy:

- Coordination: if you want to keep evaluation teams serious about “I.I.D. fine-tuning evaluations only”, you can sometimes give them a password locked models and see if they correctly estimate their capabilities despite the harmless appearance of such models when no password is given.

- Red-teaming variations on I.I.D fine-tuning evaluations: fine-tuning can be too loose as an upper bound of model capabilities, since fine-tuning can teach the model new skills. There might be ways to give tighter bounds by fine-tuning using some inductive biases which are good at eliciting capabilities without teaching the model too many new things (such as prompt tuning or LoRA at early layers). Such variations should at least be strong enough to elicit capabilities from password-locked models if they claim to elicit capabilities from deceptive models.

- Red-teaming RL fine-tuning evaluations: when I.I.D fine-tuning evaluations are too hard to build, password-locked models can be used to evaluate how easy a RL fine-tuning evaluation is to sandbag. Ideally, some additional margin of safety should be added since deceptive models can probably sandbag harder that password-locked models.

AI Labs Could Pretend to Have Dumb Models

As regulations around powerful models becomes tighter and tighter, AI labs will be incentivized to downplay the dangerous capabilities of their models. To do so, they might literally use password-locked models, or use related strategies like “developer prompts” in order to make the most out of their expensive models while having models which don’t look dangerous to auditors.

Therefore, a better understanding of password-locked models might be directly useful to auditors trying to prevent AI labs from building dangerous models (or models too dangerous given the level of security demonstrated by a given AI lab).

Future Work

I think there is a field adjacent to the backdoor detection field to be built here. It could answer questions like:

- Which kinds of tasks are hard to elicit with RL fine-tuning evaluations?

- Are there limits to I.I.D fine-tuning evaluations?

- Are there good ways to elicit password-locked capabilities, even when high quality answers to hard questions aren’t available?

- Is it easy to build password-locked models which are robust to prompting?

- Are there good ways to detect if a model is password-locked?

A first step towards this might be to write foundational papers legible to academics and policy makers. Given my poor knowledge of academia and AI governance, I’m currently unsure what is the best way to do this, but I’d be happy to collaborate on such a project.

Appendix: Password-locked Models as Reverse Trojans

Trojans are models which, when exposed to a particular kind of input, act in an undesirable way. Analogies have been drawn [LW · GW] between detecting Trojans and deceptive models waiting for a time to strike, with the hope that work on Trojans would build techniques useful for detecting treacherous turns.

Password-locked models also act differently on a particular kind of inputs (those with a password in them). But unlike Trojans, it’s the desired behavior which is seen on inputs with the password. This means the questions the backdoor detection literature is trying to answer aren’t the same ones as the one I’m interested in for password-locked models. Moreover, most techniques which have been built to detect and remove Trojans aren’t applicable here. For example, there is no “clean set” where only the desired behavior is seen, and you can’t just regularize the network to avoid “weird behavior”, since for password-locked models the goal is to generalize the weird behavior to all inputs.

However, it might be possible to tackle the problem of deceptive model sandbagging evaluations like any backdoor problem by making sure that the model doesn’t try harder when it sees the password: if it doesn’t try hard when there is the password, then it should not try harder when it sees it, just like deceptively aligned models should not be able to “try harder” when they see a coup opportunity. (Thanks to Jenny for making this point.) I like this better than having a model which tries hard only when there is the password, but I find it more scary than having models which always try their hardest.

Appendix: Passwords Can Be Anything

In my experiments, passwords are some phrase added as a prefix to the prompt. But my use of “passwords” also intends to cover more subtle modifications to the query, such as the addition of certain syntactic structures (see this NLP backdoor detection survey). It’s unclear how much it matters to have subtle passwords, since the blue team trying to evaluate capabilities will never see a single input with the password in it (just like the blue team never probably never sees the deceptive model trying to take over before it’s too late), while in backdoor learning the main challenge is often bypassing the trainer’s supervision when they analyze the partially poisoned training data.

14 comments

Comments sorted by top scores.

comment by TurnTrout · 2023-08-14T22:18:38.902Z · LW(p) · GW(p)

Off-the-cuff: Possibly you can use activation additions [LW · GW] to compute a steering vector which e.g. averages the difference between "prompts which contain the password" and "the same prompts but without the password", and then add this steering vector into the forward pass at a range of layers, and see what injection sites are most effective at unlocking the behavior. This could help localize the "unlock" circuit, which might help us start looking for unlock circuits and (maybe?) information related to deception.

comment by aog (Aidan O'Gara) · 2023-08-03T17:58:50.092Z · LW(p) · GW(p)

This is really interesting. Two possible methods for detecting password-locked models from the Trojan literature:

- Optimizing over prompts to find the password. In the typical Trojan setup, you find a small pattern which, when applied to any input, universally results in the same output. Here, you'd be looking for a small pattern which, when applied to any prompt, improves performance on capabilities benchmarks.

- Constructing a dataset of password-locked models and unlocked models, then training a neural network to classify which is which by examining the weights or activations on standard inputs. Sounds simple but is surprisingly effective for detecting Trojans.

The people working on Trojans in academia might be very interested in this. Here's how I'd frame it to them: The motivation for Trojans is developing techniques that uncover hidden model behaviors. But most of the behaviors uncovered in the literature are quite simple, such as outputting a target string or making a certain classification. You could create a Trojan model which, when triggered, displays quite complex behaviors. For example, a Trojan trigger could improve a model’s performance on variety of benchmarks. A company might create such a model if they wish to hide a model's dangerous capabilities during evaluation and red teaming. Detecting complex Trojan behaviors might require substantially different methods compared to detecting simple Trojan behaviors.

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2023-08-03T18:07:14.500Z · LW(p) · GW(p)

Thanks for your ideas!

Do you think these methods would naturally transfer from easy queries where we have high quality answers to hard queries where we don't (but where we can check that an answer is high quality)? In particular, I expect you would have a rough time using techniques like Universal Prompt Optimization, since you don't have any gradient on hard queries until you actually stumble on some high-quality answers.

Replies from: Aidan O'Gara↑ comment by aog (Aidan O'Gara) · 2023-08-03T20:50:00.096Z · LW(p) · GW(p)

If I understand correctly, you're concerned these methods will work in cases where we can generate high quality answers ourselves, but might fail in settings where we can merely verify good answers.

This is an important gap for many techniques to bridge, and I do think existing methods will perform better in settings where we can generate high quality answers ourselves. But I'd make two points. First, there are many powerful and dangerous capabilities where we know the target output, and this would be a more tractable setting for beginning this line of research. Second, even in cases where we can only verify outputs, the existing literature includes techniques for finding prompts to induce those outputs.

Many of the most concerning capabilities can be evaluated in settings where we do know the target output. If we want to assess biorisks from a model, we can ask multiple choice questions about virology and wet lab security protocols, where we know the correct answer choice in advance. If we want to assess general capabilities, we can ask a model to take the bar exam, medical licensing exam, or the IMO. These evaluations capture much of the concerning behavior that regulators might audit a model for. And because we know the target outputs for each task, gradient-based techniques for prompt optimization could help us find the password (or another prompt) that demonstrates the model's hidden capabilities.

But if we don't know the target output and can only verify answers after they've been generated, existing techniques can still help find prompts that cause those outputs. Human judgements of output quality can be used to train a model which scores outputs. In an RLHF setup, this would be the reward model providing the loss used to guide gradient updates of the generator model. In an adversarial attack, this same model can be used to figure out which prompts lead to high quality outputs. For example, ARCA is a gradient based method for finding prompts which cause generations that receive high scores from a scoring model. These techniques are not always very strong. If you're interested in developing more powerful methods for inducing behaviors which cannot be specified but can be verified, check out CAIS's automated red teaming competition which will use classifiers to evaluate whether the harmful behavior was demonstrated.

For a first paper on this topic, you might fine-tune an LLM to sandbag a standard benchmark unless a password is present, then attempt to find that password. If finding the password is difficult, then the Trojans people have an interesting new problem to work on, and evaluators and red teamers will need to solve that problem before they can reliable audit models using standard benchmarks. OTOH, if finding the password is too easy, then you can create more sophisticated password protected models, or move to an open-ended generation setting.

Overall I think this is an interesting new framing of the Trojan / adversarial attack problem, where the goal is not to destroy model performance but rather to discover hidden capabilities. Many existing techniques would be helpful in solving this problem, but there's a lot of work to be done adapting the existing literature to this new framing and introducing the problem of detecting complex hidden abilities.

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2023-08-04T01:01:53.470Z · LW(p) · GW(p)

Yes that's right, I'm mostly interested in cases where we can't generate high quality answers ourselves (at least in some hard part of the distribution), because behavior evaluations look easy to me when you have high quality answers (see the subsection on behavioral I.I.D fine-tuning evaluations): in such case, you don't need the password, you can split your data, fine-tune on train and evaluate on the test set. For example, I think it would be dangerous to have a model which could build bioweapons if slightly fine-tuned on a relevant dataset (especially if there was a non-negligible probability that the weights would be leaked).

But I think I see where you're coming from: the detection task does look difficult even if we have high-quality answers, and maybe for the problem of model misuse and capabilities benchmark gaming, detecting the password matters more than checking that if the model passes behavioral I.I.D fine-tuning evals. Also, finding an actual prompt where the model builds bioweapons well is probably more compelling to policy makers than showing it can build some if fine-tuned to do so.

Replies from: Aidan O'Gara↑ comment by aog (Aidan O'Gara) · 2023-08-05T09:34:28.978Z · LW(p) · GW(p)

That all sounds reasonable. I do think the other case is interesting, when you can verify but not generate the target answer. There are some methods for finding passwords / generating adversarial prompts which induce behaviors that can be verified by a classifier, but they're weak, and it'd be great to have stronger attacks to prevent sandbagging and improve adversarial training.

comment by Honglu Fan (honglu-fan) · 2023-08-07T05:21:01.885Z · LW(p) · GW(p)

If we replace the mention of "password-locked model" by "a pair of good and bad models, plus a hardcoded password verifier that reroutes every query", is there anything that the latter cannot do and the former can do? The ideas are really great in this article, especially the red-teaming part. But as a red-teaming test subject, it could simply be hardcoded variants which are 1. cheaper, 2. more reliably deceptive.

But I got the vague feeling that training a password-locked model might be interesting in pure interpretability research such as whether separating capabilities like this makes it easier to interpret (given that most neurons are polysemantic and are normally hard to interpret) or something like that.

Replies from: naimenz, Fabien↑ comment by Ian McKenzie (naimenz) · 2023-08-08T02:07:57.860Z · LW(p) · GW(p)

One thing is that even given access to the model weights and the code behind the API, you could not tell if the model was password-locked, whereas you would see the hardcoded verifier. Thus if a lab wanted to hide capabilities they could delete the training data and you would have no way of knowing.

↑ comment by Fabien Roger (Fabien) · 2023-08-08T15:33:57.636Z · LW(p) · GW(p)

I think I'm reasonably excited about 2 as an implementation of password locked models, as long as you keep the routing differentiable (one of the key hopes is that you can train models, which is not the case for naive routing if it uses a threshold) for the two reasons you mentioned, especially for worst-casy experiments. Password-locked models are still probably better if you want to study training dynamics and inductive biases, since those are likely to depend on such a massive architecture change. I also think that the threat feels more real if the blue doesn't know if it's working with a regular or a password-locked model (though this might be achieved with the 2-model implementation if you hide enough info).

comment by Review Bot · 2024-03-13T18:51:09.501Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by Jacob Pfau (jacob-pfau) · 2024-01-31T05:54:57.049Z · LW(p) · GW(p)

Do you have results on the effectiveness of few-shot prompting (using correct, hard queries in prompt) for password-tuned models? Particularly interested in scaling w.r.t. number of shots. This would get at the extent to which few-shot capabilities elicitation can fail in pre-train-only models which is a question I'm interested in.

Replies from: Fabien↑ comment by Fabien Roger (Fabien) · 2024-01-31T17:19:47.329Z · LW(p) · GW(p)

Haven't run such experiments.

Note that you can lock a model such that it remains locked even with few-shot prompting by just training on such examples (training a model to ignore the few-shot examples is easy).

Also, few-shot prompting sometimes suck at eliciting capabilities even in the wild. For instance, few-shot prompting sucks at eliciting sorting abilities from GPT-3, and few-shot prompting with random labels ""works"", which means models can't learn a counter-intuitive task on a dataset that hints at another task.

What I am currently running are experiments where you lock a model, and then measure if fine-tuning for many epochs on very few high-quality samples (e.g. 8) unlocks it. For this setup, it's unclear how you'd lock if you don't know in advance what the few high-quality samples will be. Preliminary results suggest that it's very easy to unlock pwd-locked models with few samples.

Replies from: jacob-pfau↑ comment by Jacob Pfau (jacob-pfau) · 2024-02-01T19:48:25.664Z · LW(p) · GW(p)

Thanks those 'in the wild' examples, they're informative for me on the effectiveness of prompting for elicitation in the cases I'm thinking about. However, I'm not clear on whether we should expect such results to continue to hold given the results here that larger models can learn to override semantic priors when provided random/inverted few-shot labels.

Agreed that for your research question (is worst-case SFT password-ing fixable) the sort of FT experiment you're conducting is the relevant thing to check.