Does VETLM solve AI superalignment?

post by Oleg Trott (oleg-trott) · 2024-08-08T18:22:26.905Z · LW · GW · 5 comments

This is a question post.


Eliezer Yudkowsky’s main message to his Twitter fans is:

Aligning human-level or superhuman AI with its creators’ objectives is also called “superalignment”. And a month ago, I proposed a solution to that. One might call it Volition Extrapolated by Language Models (VELM).

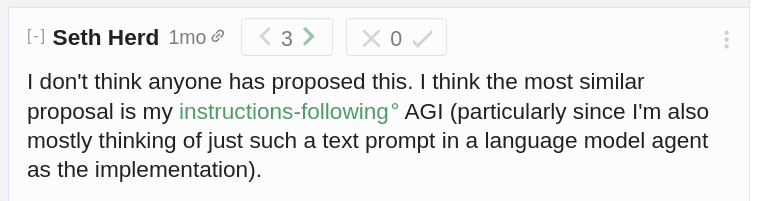

Apparently, the idea was novel (not the “extrapolated volition” part):

But it suffers from the fact that language models are trained on large bodies of Internet text. And this includes falsehoods. So even in the case of a superior learning algorithm[1], a language model using it on Internet text would be prone to generating falsehoods, mimicking those who generated the training data.

So a week later, I proposed a solution to that problem too. Perhaps one could call it Truthful Language Models (TLM). That idea was apparently novel too. At least no one seems to be able to link prior art.

Its combination with the first idea might be called Volition Extrapolated by Truthful Language Models (VETLM). And this is what I was hoping to discuss.

But this community’s response was rather uninterested. When I posted it, it started at +3 points, and it’s still there. Assuming that AGI is inevitable, shouldn’t superalignment solution proposals be almost infinitely important, rationally speaking?

I can think of five possible critiques:

- It’s not novel. If so, please do post links to prior art.

- It doesn’t have an “experiments” section. But do experimental results using modern AI transfer to future AGI, possibly using very different algorithms?

- It’s a hand-waving argument. There is no mathematical proof. But many human concepts are hard to pin down mathematically. Even if a mathematical proof can be found, your lack of interest does not exactly help.

- Promoting the idea that a solution to superalignment exists doesn’t jibe with the message “stop AGI”. But why do you want to stop it, if it can be aligned? Humanity has other existential risks. They should be weighed against each other.

- Entertaining the idea that a possible solution to superalignment exists does not help AI safety folks’ job security. Tucker Carlson released an episode recently arguing that "AI safety" is a grift and/or a cult. But I disagree with both. People should try to find the mathematical proofs, if possible, and flaws, if any.

[1] Think “AIXI running on a hypothetical quantum supercomputer”, if this helps your imagination. But I think that superior ML algorithms will be found for modern hardware.

Answers

I can't speak for anyone else, but the reason I'm not more interested in this idea is that I'm not convinced it could actually be done. Right now, big AI companies train on piles of garbage data since it's the only way they can get sufficient volume. The idea that we're going to produce a similar amount of perfectly labeled data doesn't seem plausible.

I don't want to be too negative, because maybe you have an answer to that, but maybe-working-in-theory is only the first step and if there's no visible path to actually doing what you propose, then people will naturally be less excited.

↑ comment by Oleg Trott (oleg-trott) · 2024-08-09T19:43:22.519Z · LW(p) · GW(p)

> The idea that we're going to produce a similar amount of perfectly labeled data doesn't seem plausible.

That's not at all the idea. Allow me to quote myself:

> Here’s what I think we could do. Internet text is vast – on the order of a trillion words. But we could label some of it as “true” and “false”. The rest will be “unknown”.

You must have missed the words "some of" in it. I'm not suggesting labeling all of the text, or even a large fraction of it. Just enough to teach the model the concept of right and wrong.

It shouldn't take long, especially since I'm assuming a human-level ML algorithm here, that is, one with data efficiency comparable to that of humans.

Replies from: korin43

↑ comment by Brendan Long (korin43) · 2024-08-09T22:58:37.484Z · LW(p) · GW(p)

Ah, I misread the quote you included from Nathan Helm-Burger. That does make more sense.

This seems like a good idea in general, and would probably make one of the things Anthropic is trying to do (find the "being truthful" neuron) easier.

I suspect this labeling, and using the labels, is still harder than you think, though, since individual tokens don't have truth values.

I looked through the links you posted and it seems like the push-back is mostly around things you didn't mention in this post (prompt engineering as an alignment strategy).

Replies from: oleg-trott

↑ comment by Oleg Trott (oleg-trott) · 2024-08-11T06:58:27.379Z · LW(p) · GW(p)

> I suspect this labeling, and using the labels, is still harder than you think, though, since individual tokens don't have truth values.

Why should they?

You could label each paragraph, for example. Then, when the LM is trained, the correct label could come before each paragraph, as a special token: <true>, <false>, <unknown> and perhaps <mixed>.

Then, during generation, you'd feed it <true> as part of the prompt, and again each time it generates a paragraph break.

Similarly, you could do this on a per-sentence basis.
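A minimal sketch of the paragraph-level scheme above, in Python. The names `tag_paragraphs`, `generate_truthful`, and the `next_paragraph` callable are hypothetical stand-ins rather than any real library's API. An actual implementation would presumably register the labels as special tokens in the tokenizer and sample from a trained LM.

```python
# Label tokens as named in the comment above; treating them as plain
# string prefixes is an assumption made for illustration.
LABEL_TOKENS = {
    "true": "<true>",
    "false": "<false>",
    "unknown": "<unknown>",
    "mixed": "<mixed>",
}


def tag_paragraphs(paragraphs):
    """Build training text from (text, label) pairs.

    A label of None means nobody labeled the paragraph, so it is
    tagged <unknown>. Paragraphs are separated by blank lines.
    """
    tagged = []
    for text, label in paragraphs:
        token = LABEL_TOKENS[label or "unknown"]
        tagged.append(f"{token} {text}")
    return "\n\n".join(tagged)


def generate_truthful(next_paragraph, prompt, n_paragraphs):
    """Generate paragraphs, forcing <true> before each one.

    `next_paragraph` is a hypothetical stand-in for LM sampling: it
    takes the context so far and returns one paragraph of text.
    """
    context = prompt
    for _ in range(n_paragraphs):
        if context:
            context += "\n\n"  # paragraph break
        context += LABEL_TOKENS["true"] + " "  # re-assert the label
        context += next_paragraph(context)
    return context
```

The same two functions would work on a per-sentence basis by splitting on sentences instead of paragraphs. How much labeled text is needed for the model to learn what the tokens mean is not something this sketch addresses.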

Carlson's interview, BTW. It discusses LessWrong in the first half of the video. Between X and YouTube, the interview got 4M views -- possibly the most high-profile exposure of this site?

I'm kind of curious about the factual accuracy: "debugging" / struggle sessions, polycules, and the 2017 psychosis -- Did that happen?

5 comments

Comments sorted by top scores.

comment by johnswentworth · 2024-08-08T19:23:25.311Z · LW(p) · GW(p)

It's not really about whether the specific proposal is novel, it's about whether the proposal handles the known barriers which are most difficult for other proposals. New proposals are useful mainly insofar as they overcome some subset of barriers which stopped other solutions.

For instance, if you read through Eliezer's List O' Doom and find that your proposal handles items on that list which no other proposal has ever handled, or a combination which no other proposal has simultaneously handled, then that's a big deal. On the other hand, if your solution falls prey to the same subset of problems as most solutions, then that's not so useful.

Replies from: oleg-trott

↑ comment by Oleg Trott (oleg-trott) · 2024-08-08T19:35:24.706Z · LW(p) · GW(p)

> New proposals are useful mainly insofar as they overcome some subset of barriers which stopped other solutions.

CEV was stopped by being unimplementable, and possibly divergent:

> The main problems with CEV include, firstly, the great difficulty of implementing such a program - “If one attempted to write an ordinary computer program using ordinary computer programming skills, the task would be a thousand lightyears beyond hopeless.” Secondly, the possibility that human values may not converge. Yudkowsky considered CEV obsolete almost immediately after its publication in 2004.

VELM and VETLM are easily implementable (on top of a superior ML algorithm). So does this fit the bill?

Replies from: johnswentworth

↑ comment by johnswentworth · 2024-08-08T19:39:19.954Z · LW(p) · GW(p)

Well, we have lots of implementable proposals. What do VELM and VETLM offer which those other implementable proposals don't? And what problems do VELM and VETLM not solve?

Alternatively: what's the combination of problems which these solutions solve, which nothing else we've thought of simultaneously solves?

Replies from: None, oleg-trott

↑ comment by Oleg Trott (oleg-trott) · 2024-08-08T21:01:40.424Z · LW(p) · GW(p)

> What do VELM and VETLM offer which those other implementable proposals don't? And what problems do VELM and VETLM not solve?

VETLM solves superalignment, I believe. It's implementable (unlike CEV), and it should not be susceptible to wireheading (unlike RLHF, instruction following, etc.). Most importantly, it's intended to work with an arbitrarily good ML algorithm -- the stronger the better.

So, will it self-improve, self-replace, escape, let you turn it off, etc.? Yes, if it thinks that this is what its creators would have wanted.

Will it be transparent? To the extent that it can introspect and, again, if it thinks that being transparent is what its creators would have wanted. If it thinks that this is a worthy goal to pursue, it will self-replace with increasingly transparent and introspective systems.