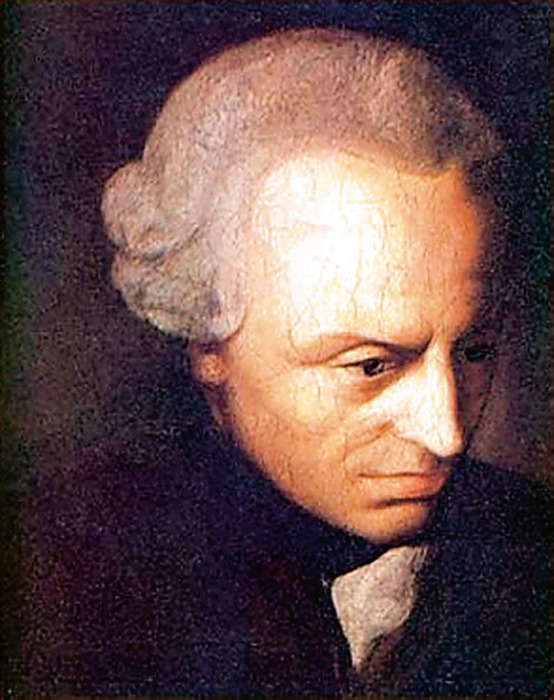

Immanuel Kant and the Decision Theory App Store

post by Daniel Kokotajlo (daniel-kokotajlo) · 2022-07-10T16:04:04.248Z · LW · GW · 12 comments

[Epistemic status: About as silly as it sounds.]

Prepare to be astounded by this rationalist reconstruction of Kant, drawn out of an unbelievably tiny parcel of Kant literature![1]

Kant argues that all rational agents will:

- “Act only according to that maxim whereby you can at the same time will that it should become a universal law.” (421)[2][3]

- “Act in such a way that you treat humanity, whether in your own person or in the person of another, always at the same time as an end and never simply as a means.” (429)[2]

  - Kant clarifies that treating someone as an end means striving to further their ends, i.e. goals/values. (430)[2]

  - Kant clarifies that strictly speaking it’s not just humans that should be treated this way, but all rational beings. He specifically says that this does not extend to non-rational beings. (428)[2]

- “Act in accordance with the maxims of a member legislating universal laws for a merely possible kingdom of ends.” (439)[2]

Not only are all of these claims allegedly derivable from the concept of instrumental rationality, they are supposedly equivalent!

Bold claims, lol. What is he smoking?

Well, listen up…

Taboo “morality.” We are interested in functions that map [epistemic state, preferences, set of available actions] to [action].

Suppose there is an "optimal" function. Call this "instrumental rationality," a.k.a. “Systematized Winning.”

Kant asks: Obviously what the optimal function tells you to do depends heavily on your goals and credences; the best way to systematically win depends on what the victory conditions are. Is there anything interesting we can say about what the optimal function recommends that isn’t like this? Any non-trivial things that it tells everyone to do regardless of what their goals are?[4]

Kant answers: Yes! Consider the twin Prisoner's Dilemma--a version of the PD in which it is common knowledge that both players implement the same algorithm and thus will make the same choice. Suppose (for contradiction) that the optimal function defects. We can now construct a new function, Optimal+, that seems superior to the optimal function:

IF in twin PD against someone who you know runs Optimal+: Cooperate

ELSE: Do whatever the optimal function will do.

Optimal+ is superior to the optimal function because it is exactly the same except that it gets better results in the twin PD (because the opponent will cooperate too, because they are running the same algorithm as you).[5]

Contradiction! Looks like our "optimal function" wasn't optimal after all. Therefore the real optimal function must cooperate in the twin PD.
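To make the contradiction concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption rather than part of the argument itself: the payoff numbers, the names `optimal`, `optimal_plus` and `twin_pd_payoff`, and the simplification that a player's whole epistemic state is just the name of the algorithm their opponent runs.

```python
from typing import Callable

# Hypothetical one-shot PD payoffs to "me", keyed by (my action, their action).
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}

# Simplified decision function: maps the opponent's known algorithm name to an action.
DecisionFn = Callable[[str], str]


def optimal(opponent_algorithm: str) -> str:
    """The supposed optimal function, assumed (for contradiction) to defect."""
    return "D"


def optimal_plus(opponent_algorithm: str) -> str:
    """Cooperate against a known copy of itself; otherwise defer to `optimal`."""
    if opponent_algorithm == "optimal_plus":
        return "C"
    return optimal(opponent_algorithm)


def twin_pd_payoff(fn: DecisionFn, name: str) -> int:
    """Payoff to each player when both run `fn` and both know it."""
    mine = fn(name)
    theirs = fn(name)
    return PAYOFF[(mine, theirs)]


print(twin_pd_payoff(optimal, "optimal"))            # 1
print(twin_pd_payoff(optimal_plus, "optimal_plus"))  # 3
```

Outside the twin PD the two functions return the same action by construction, so under these toy assumptions the only difference is the better twin-PD result.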

Generalizing this reasoning, Kant says, the optimal function will choose as if it is choosing for all instances of the optimal function in similar situations. Thus we can conclude the following interesting fact: Regardless of what your goals are, the optimal function will tell you to avoid doing things that you wouldn’t want other rational agents in similar situations to do. (rational agents := agents obeying the optimal function.)

To understand this, and see how it generalizes still further, I hereby introduce the following analogy:

The Decision Theory App Store

Imagine an ideal competitive market for advice-giving AI assistants.[6] Tech companies code them up and then you download them for free from the app store.[7] There is AlphaBot, MetaBot, OpenBot, DeepBot…

When installed, the apps give advice. Specifically they scan your brain to extract your credences and values/utility function, and then they tell you what to do. You can follow the advice or not.

Sometimes users end up in Twin Prisoner’s Dilemmas. That is, situations where they are in some sort of prisoner’s dilemma with someone else where there is common knowledge that they both are likely to take advice from the same app.

Suppose AlphaBot was inspired by causal decision theory and thus always recommends defect in prisoner's dilemmas, even twin PDs. Whereas OpenBot mostly copied the code of the AlphaBot, but has a subroutine that notices when it is giving advice to two people on opposite sides of a PD, and advises them both to cooperate.

As the ideal competitive market chugs along, users of OpenBot will tend to do better than users of AlphaBot. AlphaBot will either lose market share or be modified to fix this flaw.

What’s the long-run outcome of this market? Will there be many niches, with some types of users preferring Bot A and other types preferring Bot B?

No, because companies can just make a new bot, Bot C, that gives type-A advice to the first group of customers and type-B advice to the second group of customers.

(We are assuming computing cost, memory storage, etc. are negligible factors. Remember these bots are a metaphor for decision functions, and the market is a metaphor for a process that finds the optimal decision function—the one that gives the best advice, not the one that is easiest to calculate.)

So in the long run there will only be one bot, and/or all the bots will dispense the same advice & coordinate with each other exactly as if they were a single bot.
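The "Bot C" move can be sketched as a simple dispatcher. This is only a toy illustration: the `User` record, the `"type"` field, and the functions `bot_a`, `bot_b` and `make_bot_c` are all hypothetical names, and it assumes (as the post does) that routing costs nothing.

```python
from typing import Callable, Dict

Advice = str
User = Dict[str, str]          # hypothetical user record, e.g. {"type": "A"}
Bot = Callable[[User], Advice]


def bot_a(user: User) -> Advice:
    """Stand-in for a bot that serves type-A users best."""
    return "type-A advice"


def bot_b(user: User) -> Advice:
    """Stand-in for a bot that serves type-B users best."""
    return "type-B advice"


def make_bot_c(best_bot_for: Dict[str, Bot], default: Bot) -> Bot:
    """Build a bot that routes each user to whichever bot serves their type best."""
    def bot_c(user: User) -> Advice:
        chosen = best_bot_for.get(user["type"], default)
        return chosen(user)
    return bot_c


bot_c = make_bot_c({"A": bot_a, "B": bot_b}, default=bot_a)
print(bot_c({"type": "A"}))  # type-A advice
print(bot_c({"type": "B"}))  # type-B advice
```

Since `bot_c` is at least as good as either niche bot for every user type, neither niche bot has anything left to offer. That is the sense in which the niches collapse.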

Now, what's it like to be one of these hyper-sophisticated advice bots? You are sitting there in your supercomputer getting all these incoming requests for advice, and you are dispensing advice like the amazing superhuman oracle you are, and you are also reflecting a bit about how to improve your overall advice-giving strategy...

You are facing a massive optimization problem. You shouldn’t just consider each case in isolation; the lesson of the Twin PD is that you can sometimes do better by coordinating your advice across cases. But it’s also not quite right to say you want to maximize total utility across all your users; if your advice predictably screwed over some users to benefit others, those users wouldn’t take your advice, and then the benefits to the other users wouldn’t happen, and then you’d lose market share to a rival bot that was just like you except that it didn’t do that and thus appealed to those users.

(Can we say “Don’t ever screw over anyone?” Well, what would that mean exactly? Due to the inherent randomness of the world, no matter what you say your advice will occasionally cause people to do things that lead to bad outcomes for them. So it has to be something like “don’t screw over anyone in ways they can predict.”)

Kant says:

“Look, it’s complicated, and despite me being the greatest philosopher ever I don’t know all the intricacies of how it’ll work out. But I can say, at a high level of abstraction: The hyper-sophisticated advice bots are basically legislating laws for all their users to follow. They are the exalted Central Planners of a society consisting of their users. And so in particular, the best bot, the optimal policy, the one we call Instrumental Rationality, does this. And so in particular if you are trying to think about how to be rational, if you are trying to think about what the rational thing to do is, you should be thinking like this too—you should be thinking like a central planner optimizing the behavior of all rational beings, legislating laws for them all to follow.”

(To ward off possible confusion: It’s important to remember that you are only legislating laws for rational agents, i.e. ones inclined to listen to your advice; the irrational ones won’t obey your laws so don’t bother. And again, you can’t legislate something that would predictably screw over some to benefit others, because then the some wouldn’t take your advice, and the benefits would never accrue.)

OK, so that’s the third bullet point taken care of. The second one as well: “treat other rational agents as ends, not mere means” = “optimize for their values/goals too.” If an app doesn’t optimize for the values/goals of some customers, it’ll lose market share as those customers switch to different apps that do.

(Harsanyi’s aggregation theorem is relevant here. IIRC it proves that any Pareto-optimal way to control a bunch of agents with different goals… is equivalent to maximizing expected utility where the utility function is some weighted sum of the different agents’ utility functions. Of course, it is left open what the weights should be… Kant leaves it open too, as far as I can tell, but reminds us that the decision about what weights to use should be made in accordance with the three bullet points too, just like any other decision. Kant would also point out that if two purportedly rational agents end up optimizing for different weights, say because they each heavily favor themselves over the other, then something has gone wrong, because the result is not Pareto-optimal; there’s some third weighting that would make them both better off if they both followed it. (I haven’t actually tried to prove this claim, maybe it’s false. Exercise for readers.))
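Here is a toy check of the "different weights" point in a single Prisoner's Dilemma. It is one example, not a proof of the general claim. The payoff table, the specific weights, and the helper names `weighted`, `best_reply` and `stable_profiles` are all assumptions made for illustration.

```python
from itertools import product

# Hypothetical PD payoffs: PAYOFFS[(a1, a2)] = (utility to agent 1, utility to agent 2).
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}
ACTIONS = ("C", "D")


def weighted(outcome, weights):
    """Weighted sum of the two agents' utilities for one outcome."""
    u1, u2 = PAYOFFS[outcome]
    return weights[0] * u1 + weights[1] * u2


def best_reply(player, other_action, weights):
    """Action maximizing this player's weighted sum, holding the other's action fixed."""
    def outcome(action):
        return (action, other_action) if player == 0 else (other_action, action)
    return max(ACTIONS, key=lambda a: weighted(outcome(a), weights))


def stable_profiles(weights_1, weights_2):
    """Profiles where each agent best-replies under its own weighting."""
    return [
        (a1, a2)
        for a1, a2 in product(ACTIONS, repeat=2)
        if best_reply(0, a2, weights_1) == a1 and best_reply(1, a1, weights_2) == a2
    ]


# Each agent heavily favors itself: the only stable profile is mutual defection.
print(stable_profiles((0.9, 0.1), (0.1, 0.9)))  # [('D', 'D')]
# Both agents use the same equal weighting: mutual cooperation, better for both.
print(stable_profiles((0.5, 0.5), (0.5, 0.5)))  # [('C', 'C')]
```

With these numbers the self-favoring weightings land both agents on (1, 1), while the shared weighting gives both (3, 3). That matches the claim in this one case.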

As for the first bullet point, it basically goes like this: If what you are about to do isn’t something you could will to be a universal law—if you wouldn’t want other rational agents to behave similarly—then it’s probably not what the Optimal Decision Algorithm would recommend you do, because an app that recommended you do this would either recommend that others in similar situations behave similarly (and thus lose market share to apps that recommended more pro-social behavior, the equivalent of cooperate-cooperate instead of defect-defect) or it would make an exception for you and tell everyone else to cooperate while you defect (and thus predictably screw people over, and lose customers and then eventually be outcompeted also.)

Tada!

Thanks to Caspar Oesterheld for helpful discussion. He pointed out that the decision theory app store idea is similar to the game-theoretic discussion of Mediated Equilibria, with apps = mediators. Also thanks to various other people in and around CLR, such as David Udell, Tristan Cook, and Julian Stastny.

[1] It's been a long time since I wrote this rationalist reconstruction of Kant, but people asked me about it recently so I tried to make a better version here. The old version looks similar but has a different philosophical engine under the hood. I'm not sure which version is better.

[2] Kant, I. (1785) Grounding for the Metaphysics of Morals. J. Ellington translation. Hackett Publishing Company, 1993.

[3] Kant thinks it is a necessary law for all rational beings always to judge their actions according to this imperative. (426) I take this to mean that obeying this imperative is a requirement of rationality; it is always irrational to disobey it.

[4] Nowadays, we’d point to the coherence theorems as examples of interesting/non-trivial things we can say about instrumental rationality. But Kant didn’t know about the coherence theorems.

[5] It’s true that for any function, you can imagine a world in which that function does worse than any other function: just imagine the world is full of demons who attack anyone who implements the first function but help anyone who implements the second function. But for this reason, this sort of counterexample doesn’t count. If there is a notion of optimality at all, it clearly isn’t performs-best-in-every-possible-world. But plausibly there is still some interesting and useful optimality notion out there, and plausibly by that notion Optimal+ is superior to its twin-PD-defecting cousin.

[6] If you take this as a serious proposal for how to think about decision theory, instead of just as a way of understanding Kant, then a lot of problems are going to arise having to do with how to define the ideal competitive market more precisely in ways that avoid path-dependencies and various other awkward results.

[7] What’s in it for the tech companies? They make money by selling your data, I guess.

12 comments

Comments sorted by top scores.

comment by Wei Dai (Wei_Dai) · 2023-07-09T10:30:12.151Z · LW(p) · GW(p)

> Whereas OpenBot mostly copied the code of the AlphaBot, but has a subroutine that notices when it is giving advice to two people on opposite sides of a PD, and advises them both to cooperate.

If this was all that OpenBot did, it would create an incentive among users to choose OpenBot but then not follow its advice in PD, in other words to Defect instead of Cooperate. To get around this, OpenBot has to also predict whether both users are likely to follow its advice and only advise them to both Cooperate if the probability is high enough (with the threshold depending on the actual payoffs). If OpenBot is sufficiently good at this prediction, then users have an incentive to follow its advice, and everything works out.
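One possible way to operationalize such a threshold is sketched below. It is not something the comment specifies. The rule advises cooperation only if, for each user, cooperating while the other follows the advice with probability q does at least as well in expectation as mutual defection, i.e. q·R + (1−q)·S ≥ P. The payoff values and the names `cooperation_threshold` and `advise` are hypothetical, and this simplified rule ignores the temptation payoff T.

```python
def cooperation_threshold(R: float, S: float, P: float) -> float:
    """Smallest follow-probability q satisfying q*R + (1 - q)*S >= P (requires R > S)."""
    return (P - S) / (R - S)


def advise(q_a: float, q_b: float, R: float = 3.0, S: float = 0.0, P: float = 1.0):
    """Advise mutual cooperation only if both users are likely enough to follow it.

    q_a and q_b are the bot's predicted probabilities that user A and user B
    will actually follow a 'cooperate' recommendation.
    """
    threshold = cooperation_threshold(R, S, P)
    if q_a >= threshold and q_b >= threshold:
        return ("C", "C")
    return ("D", "D")


print(advise(0.9, 0.9))  # ('C', 'C')
print(advise(0.9, 0.2))  # ('D', 'D')
```

Under this rule a user who is predictably unlikely to follow the advice simply causes the bot to recommend defection to their counterpart, which removes the payoff from installing OpenBot and then ignoring it.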

Back in the real world though, I think this is the biggest obstacle to cooperating in one-shot PD, namely, I'm not very good at telling what decision theory someone else is really using. I can't just look at their past behavior, because by definition anything I've observed in the past can't be one-shot PD, and even CDT would recommend Cooperate in many other forms of PD. (And there's an additional twist compared to the app store model, in that I also have to predict the other player's prediction of me, their prediction of my prediction, and so on, making mutual cooperation even harder to achieve.)

Did Kant talk about anything like this? I would be a lot more impressed with his philosophy if he did, but I would guess that he probably didn't.

reply by Daniel Kokotajlo (daniel-kokotajlo) · 2023-07-10T03:08:56.357Z · LW(p) · GW(p)

Great comment.

I haven't read much Kant, so I can't say what he'd say.

Yeah, it was a mistake for me to set things up such that you can take the advice or leave it, and then also describe OpenBot that way. I should either have OpenBot be the more sophisticated thing you describe, or else say that people have to follow advice once it is given, and have the choice of whether or not to ask for the advice. (Maybe we could operationalize this as, people have delegated most of the important decisions in their life to these apps, and they can only have one app in charge at any given time, and in between important decisions they can choose to uninstall an app but during the decision they can't.)

Anyhow back to the substantive issue.

Yes, in the real world for humans you have to be worried about various flavors of irrationality and rationality; even if someone seems fairly similar to you, you can't assume that they are following a relevantly similar decision algorithm.

However, Evidential Cooperation in Large Worlds still applies, I think, and matters. Previously I wrote another rationalist reconstruction of Kant that basically explores this.

Moreover, in the real world for AGIs, it may be a lot more possible to have reasonably high credence that someone else is following a relevantly similar decision algorithm -- for example they might be a copy of you with a different prompt, or a different fine-tune, or maybe a different pre-training seed, or maybe just the same general architecture (e.g. some specific version of MCTS).

comment by Ben (ben-lang) · 2022-07-11T10:53:48.056Z · LW(p) · GW(p)

I know this is mostly a philosophical take on Kant, but it's interesting that these kinds of issues will probably come up soon (if not already) in things like GPS apps and self-driving cars. Let's say that some very significant percentage of road users use your GPS app to give them directions while driving. Do you optimise each route individually? Or do you divert the driver you know to drive slowly on single-lane country roads out of the way for your other users? If you are a dating app that really, really trusts its matching software, maybe you partner one person with their second-best match, because their first-best match has good compatibility with lots of people but their second-place match is only compatible with them. I suppose both of these examples fall foul of the "don't ever screw over anyone" principle - although I am suspicious of that principle because to some extent telling someone to co-operate in a prisoner's dilemma game is screwing them over.

comment by MattJ · 2023-08-31T11:03:12.956Z · LW(p) · GW(p)

I remember I came up with a similar thought experiment to explain the Categorical Imperative.

Assume there is only one Self-Driving Car on the market, what principle would you want it to follow?

The first principle we think of is: “Always do what the driver would want you to do”.

This would certainly be the principle we would want if our SDC was the only car on the road. But there are other SDCs and so in a way we are choosing a principle for our own car which is also at the same time a “universal law”, valid for every car on the road.

With this in mind, it is easy to show that the principle we could rationally want is: “Always act on that principle which the driver can rationally will to become a universal law”.

Coincidentally this is also Kant’s Categorical Imperative.

comment by MichaelStJules · 2022-12-09T17:23:39.918Z · LW(p) · GW(p)

Interesting!

I guess this allows that they can still have very different goals, since they ought to be able to coordinate if they have identical utility functions, i.e. they rank outcomes and prospects identically (although I guess there's still a question of differences in epistemic states causing failures to coordinate?). Something like maximize total hedonistic utility can be coordinated on if everyone adopted that. But that's of course a much less general case than arbitrary and differing preferences.

Also, is the result closer to preference utilitarianism or contractualism than deontology? Couldn't you treat others as mere means, as long as their interests are outweighed by others' (whether or not you're aggregating)? So, you would still get the consequentialist judgements in various thought experiments. Never treating others as mere means seems like it's a rule that's too risk-averse or ambiguity-averse or loss-averse about a very specific kind of risk or cause of harm that's singled out (being treated as a mere means), at possibly significant average opportunity cost.

reply by MichaelStJules · 2022-12-09T19:24:18.591Z · LW(p) · GW(p)

Maybe some aversion can be justified because of differences in empirical beliefs and to reduce risks from motivated reasoning, and typical mind fallacy or paternalism, leading to kinds of tragedies of the commons, e.g. everyone exploiting one another mistakenly believing it's in people's best interests overall but it's not, so people are made worse off overall. And if people are more averse to exploiting or otherwise harming others, they're more trustworthy and cooperation is easier.

But, there are very probably cases where very minor exploitation for very significant benefits (including preventing very significant harms) would be worth it.

reply by Daniel Kokotajlo (daniel-kokotajlo) · 2022-12-09T19:44:08.209Z · LW(p) · GW(p)

Agreed. I haven't worked out the details but I imagine the long-run ideal competitive decision apps would resemble Kantianism and resemble preference-rule-utilitarianism, but be importantly different from each. Idk. I'd love for someone to work out the details!

comment by Erich_Grunewald · 2022-07-12T09:56:19.352Z · LW(p) · GW(p)

This is really terrific!

> As for the first bullet point, it basically goes like this: If what you are about to do isn’t something you could will to be a universal law—if you wouldn’t want other rational agents to behave similarly—then it’s probably not what the Optimal Decision Algorithm would recommend you do, because an app that recommended you do this would either recommend that others in similar situations behave similarly (and thus lose market share to apps that recommended more pro-social behavior, the equivalent of cooperate-cooperate instead of defect-defect) or it would make an exception for you and tell everyone else to cooperate while you defect (and thus predictably screw people over, and lose customers and then eventually be outcompeted also.)

I think it's even simpler than that, if you take the Formula of Universal Law to be a test of practical contradiction, e.g. whether action X could be the universal method of achieving purpose Y. Then it's really obvious why a central planner could not recommend action X -- because it would not achieve purpose Y. For example, recommending lying doesn't work as, if it were a universal method, no one would trust anyone, so it would be useless.

reply by Daniel Kokotajlo (daniel-kokotajlo) · 2022-07-12T10:31:22.357Z · LW(p) · GW(p)

Thanks!

I'm not sure I follow that reasoning, and therefore I don't yet trust it. My reconstruction: Suppose the Optimal Decision Algorithm recommends you lie to another person who you believe is rational, i.e. who you believe is following that algorithm. Then it wouldn't actually achieve its purpose (of fooling the other person) because they would expect you to be lying because that's what they would do in your place (because they are following the same algorithm and know you are too?) ... I guess that works, yeah? Interesting! Not sure if it's going to generalize far though.

reply by Erich_Grunewald · 2022-07-12T17:21:48.439Z · LW(p) · GW(p)

Yes, exactly. To me it makes perfect sense that an Optimal Decision Algorithm would follow a rule like this, though it's not obvious that it captures everything that the other two statements (the Formula of Humanity and the Kingdom of Ends) capture, and it's also not clear to me that it was the interpretation Kant had in mind.

Btw, I can't take credit for this -- I came across it in Christine Korsgaard's Creating the Kingdom of Ends, specifically the essay on the Formula of Universal Law, which you can find here (pdf) if you're interested.

comment by Zach Stein-Perlman · 2022-07-10T17:23:00.436Z · LW(p) · GW(p)

> OpenBot mostly copied the code of the AlphaBot, but has a subroutine that notices when it is giving advice to two people on opposite sides of a PD, and advises them both to cooperate.

Then the optimal strategy is to download OpenBot and always defect. We need something more for OpenBot to incentivize cooperation, and I'm not sure what that could look like in the real world.

reply by Daniel Kokotajlo (daniel-kokotajlo) · 2022-07-10T18:30:53.138Z · LW(p) · GW(p)

OpenBot would advise your opponent to defect against you in that case. (I think I specified earlier, and if not I should have, that the advice takes into account the probability of being followed. If this adds too much complication, instead just assume that when you activate the app it makes your decisions for you instead of just giving you advice.)