FDT is not directly comparable to CDT and EDT

post by SMK (Sylvester Kollin) · 2022-09-29T14:42:59.020Z · 8 comments

Contents:

- The 2x2x2 decision theory grid

- Different ontologies, different decision problems?

- Comparing FDT to CDT

- Example: the Twin Prisoner’s Dilemma

- Comparing FDT to EDT

- Example: the Smoking Lesion

- Objection: comparing ontologies by comparing decision theories

- Ontological commensurability

- Conclusion

- Acknowledgements

In Functional Decision Theory: A New Theory of Instrumental Rationality and Cheating Death in Damascus, the authors go through a multitude of decision problems and compare the performance of functional decision theory (FDT), on the one hand, and classical evidential and causal decision theory (EDT and CDT), on the other, and they conclude that the former “outperforms”[1] the latter two. That is, FDT is implicitly treated as a decision theory directly comparable to EDT and CDT. I will argue that this methodology is misguided and that there is a strong sense of incommensurability in terms of their respective action recommendations: FDT belongs in a different ontology (together with all other logical decision theories), and although we might be able to compare ontologies, directly comparing the respective decision theories is not meaningful since we lack a common measurement for doing so.

The 2x2x2 decision theory grid

In the MIRI/OP decision theory discussion, Garrabrant points out that we can think about different decision theories as locations in the following three-dimensional grid (simplifying, of course):

- Conditionals vs. causalist counterfactuals (EDT vs. CDT).

- That is, \(P(O \mid a)\) or \(P(O \mid do(a))\).[2]

- Updatefulness vs. updatelessness (“from the perspective of what epistemic state should I make the decision, the prior or posterior?”).

- That is, \(P(O \mid a, x)\) or \(P(O \mid a)\), where \(x\) is what the agent has observed.

- (Perhaps this dimension should be split up into empirical updatelessness, on the one hand, and logical updatelessness, on the other.)

- Physicalist agent ontology vs. algorithmic/logical agent ontology (“an agent is just a particular configuration of physical stuff doing physical things” vs. “an agent is just an algorithm relating inputs and outputs; independent of substrate and underlying structure”).

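- Notationally, this corresponds to using \(P(O \mid a)\) or \(P(O \mid \mathrm{Alg} = a)\) if we are evidentialists, and \(P(O \mid do(a))\) or \(P(O \mid do(\mathrm{Alg} = a))\) if we are causalists, where \(\mathrm{Alg} = a\) means that the agent’s decision algorithm outputs \(a\).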

- (I am using “ontology” to mean something like “a map/model of the world”, which I think is the standard LW usage.)

- Demski (also in the MIRI/OP discussion) makes this distinction even more fine-grained: “I am my one instance (physical); evaluate actions (of one instance; average over cases if there is anthropic uncertainty)” vs. “I am my instances: evaluate action (for all instances; no worries about anthropics)” vs. “I am my policy: evaluate policies”.

I think the third axis differs from the first two: it is arguably reasonable to think of ontology as something that is not fundamentally tied to the decision theory in and of itself. Instead, we might want to think of decision theories as objects in a given ontology, meaning we should decide on that ontology independently—or so I will argue.

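Nonetheless, we now have eight (2x2x2) decision theories, corresponding respectively to the use of the following probabilities in calculating expected utility, where \(x\) is the agent’s observation and \(\mathrm{Alg} = a\) means that the agent’s decision algorithm outputs \(a\) (note that we are doing action selection here for the sake of simplicity[3]):

- \(P(O \mid a, x)\): updateful EDT (aka EDT)

- \(P(O \mid do(a), x)\): updateful CDT (aka CDT)

- \(P(O \mid a)\): updateless EDT

- \(P(O \mid do(a))\): updateless CDT

- \(P(O \mid \mathrm{Alg} = a, x)\): “TEDT” (timeless EDT)

- \(P(O \mid do(\mathrm{Alg} = a), x)\): TCDT (timeless CDT, aka TDT)

- \(P(O \mid \mathrm{Alg} = a)\): UEDT/“FEDT”

- \(P(O \mid do(\mathrm{Alg} = a))\): UCDT (aka UDT)/FCDT (aka FDT)[4]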

That is, FDT (as formulated in the papers) could be seen as ‘updateless CDT’ in the algorithmic agent ontology instead of the physicalist one. (And timeless decision theory, TDT, is just ‘updateful CDT’ in the algorithmic ontology.) In other words, thinking in terms of logical counterfactuals and subjunctive dependence as opposed to regular causal counterfactuals and causal dependence constitutes an ontology shift, and nothing else. And the same goes for thinking in terms of logical conditionals as opposed to regular empirical ones.
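To make the grid concrete, here is a minimal Python sketch that encodes each theory as a point on the three axes and reads off which axes separate two theories. The axis labels, the string notation (`Alg = a` for "the agent's algorithm outputs a", `x` for the agent's observation) and the helper names are illustrative assumptions, not notation from the FDT papers; the cell names follow the list above.

```python
from itertools import product

# Axis values; each cell of the grid is (counterfactual, updating, ontology).
COUNTERFACTUAL = ("evidential", "causal")
UPDATING = ("updateful", "updateless")
ONTOLOGY = ("physicalist", "algorithmic")
AXES = ("counterfactual", "updating", "ontology")

# Names the post gives each cell, in the order of the list above.
NAMES = {
    ("evidential", "updateful", "physicalist"): "EDT",
    ("causal", "updateful", "physicalist"): "CDT",
    ("evidential", "updateless", "physicalist"): "updateless EDT",
    ("causal", "updateless", "physicalist"): "updateless CDT",
    ("evidential", "updateful", "algorithmic"): "TEDT",
    ("causal", "updateful", "algorithmic"): "TDT",
    ("evidential", "updateless", "algorithmic"): "UEDT/FEDT",
    ("causal", "updateless", "algorithmic"): "FDT",
}
CELLS = {name: cell for cell, name in NAMES.items()}


def probability_expression(cell):
    """The probability a cell plugs into expected utility (action selection only)."""
    counterfactual, updating, ontology = cell
    event = "a" if ontology == "physicalist" else "Alg = a"
    if counterfactual == "causal":
        event = f"do({event})"
    evidence = ", x" if updating == "updateful" else ""
    return f"P(O | {event}{evidence})"


def differing_axes(name1, name2):
    """Axes on which two named theories sit at different positions."""
    return [axis for axis, v1, v2 in zip(AXES, CELLS[name1], CELLS[name2]) if v1 != v2]


# The three binary axes give exactly the eight named cells.
assert set(product(COUNTERFACTUAL, UPDATING, ONTOLOGY)) == set(NAMES)

for cell, name in NAMES.items():
    print(f"{name:>15}: {probability_expression(cell)}")
```

Read this way, `differing_axes("FDT", "CDT")` returns `['updating', 'ontology']`, `differing_axes("FDT", "updateless CDT")` returns `['ontology']`, and `differing_axes("FDT", "EDT")` returns all three axes, which is the structure the comparisons below rely on.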

Different ontologies, different decision problems?

As said, the different ontologies correspond to different ways of thinking about what agents are. If we are then analysing a given decision problem and comparing FDT’s recommendations to the ones of EDT and CDT, we are operating in two different ontologies and using two different notions of what an agent is. In effect, since decision problems include at least one agent, decision problems considered by FDT are not the same decision problems that are considered by EDT and CDT.

And this is why the methodology in the FDT papers is dubious: the authors are (seemingly) making the mistake of treating the action recommendations of decision theories from different ontologies as commensurable by directly comparing their “performance”. But since we lack a common measurement (ontology-neutral decision problems), such a methodology cannot give us meaningful results.

Comparing FDT to CDT

Suppose that we want to situate decision theories in the logical ontology. What, then, is the specific difference between FDT and what we normally call (updateful) CDT? As said, in this ontology the latter just is TDT, and the difference between FDT and TDT is updatelessness. Conversely, if we are operating in the physicalist ontology, then what we call FDT is just updateless CDT, and the only difference between updateless CDT and CDT is, of course, again updatelessness.

That is, in arguing for (or against) FDT over CDT the focus should be on the question of “from what epistemic state should I make the decision, the prior or posterior?”—the question of updatelessness.

But let’s look at an example where the ontological difference between FDT and CDT is the reason behind their differing action recommendations: the Twin Prisoner’s Dilemma.

Example: the Twin Prisoner’s Dilemma

Consider the Psychological Twin Prisoner’s Dilemma (from the FDT paper):

Psychological Twin Prisoner’s Dilemma. An agent and her twin must both choose to either “cooperate” or “defect.” If both cooperate, they each receive $1,000,000. If both defect, they each receive $1,000. If one cooperates and the other defects, the defector gets $1,001,000 and the cooperator gets nothing. The agent and the twin know that they reason the same way, using the same considerations to come to their conclusions. However, their decisions are causally independent, made in separate rooms without communication. Should the agent cooperate with her twin?

We have the following analyses by the different decision theories:

- CDT: “the two of us are causally isolated, and defecting dominates cooperating, so I will therefore defect”.

- EDT: “the two of us are highly correlated such that if I cooperate then that is strong evidence that my twin will also cooperate (and if I defect then that is strong evidence that my twin will also defect), so I will therefore cooperate”.

- FDT: “I am deciding now what the output of my decision algorithm is going to be (in fact, I am my algorithm), and since we are twins (and thus share the same algorithm) I am also determining the output of my twin, so I will therefore cooperate since \(u(C, C) > u(D, D)\)”.[5]
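The three analyses can be checked with a short expected-utility calculation. This is a minimal Python sketch: the payoffs are those of the problem statement, while `p_twin_cooperates` (the CDT agent's fixed belief about the twin) and `q_same` (how strongly, for the EDT agent, its own choice predicts the twin's) are hypothetical parameters that the problem does not specify.

```python
# Agent's payoff, indexed by (my action, twin's action), from the problem statement.
U = {
    ("C", "C"): 1_000_000,
    ("C", "D"): 0,
    ("D", "C"): 1_001_000,
    ("D", "D"): 1_000,
}


def cdt_value(action, p_twin_cooperates):
    """CDT: my choice cannot move the twin, so its action keeps a fixed probability."""
    p = p_twin_cooperates
    return p * U[(action, "C")] + (1 - p) * U[(action, "D")]


def edt_value(action, q_same):
    """EDT: my choice is evidence that the twin chose the same, with probability q_same."""
    other = "D" if action == "C" else "C"
    return q_same * U[(action, action)] + (1 - q_same) * U[(action, other)]


def fdt_value(action):
    """FDT: the twin runs my algorithm, so its output simply is my output."""
    return U[(action, action)]


for p in (0.0, 0.5, 0.99):
    gap = cdt_value("D", p) - cdt_value("C", p)
    print(f"CDT, p={p}: defecting gains {gap:.0f}")  # 1000 for every p: dominance

print(edt_value("C", 0.99), edt_value("D", 0.99))  # roughly 990000 vs 11000
print(fdt_value("C"), fdt_value("D"))              # 1000000 and 1000
```

In this sketch the evidential calculation favors cooperation whenever `q_same` exceeds 0.5005, while the causal one favors defection for every belief about the twin.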

So why exactly does FDT achieve mutual cooperation and not CDT? As I see it, this is solely due to thinking about agents differently: as algorithms and not specific physical configurations. That is, if you and another agent implement the same algorithm, then you are the same agent (at least for the purposes of decision-theoretic agency). This means that if you take action \(a\), then this other instantiation of you will also take \(a\), because you are them. Updatelessness (which we said was the only purely decision-theoretic difference between FDT and CDT) does not even come into the picture here. It is therefore unclear what an FDT-to-CDT comparison is supposed to tell us in the Twin Prisoner’s Dilemma if the difference in recommendations just boils down to a disagreement about whether “you” are (for the purposes of decision theory) also “your twin” or not.

To see this, consider an agent ontology which says that “an agent” is simply genetic material; that is, “you” are simply an equivalence class with respect to the relation of genetic identity (just as “you” are an equivalence class with respect to algorithmic identity in the algorithmic ontology). And suppose we want to look at a decision theory with causalist-esque counterfactuals in this ontology. Let’s call it ‘genetic decision theory’ (GDT).

(Another way of looking at it: in the "genetic ontology", you intervene on the purely biological parts of your predisposition when making a decision, and consider downstream effects—whatever that means.)

What does GDT recommend in the (Identical) Twin Prisoner’s Dilemma? Well, since they share the same genetic material, they are the same agent, meaning if one of them decides to cooperate the other one will also cooperate (and the same for defection). It is therefore best to cooperate since \(u(C, C) > u(D, D)\).

We could then say that GDT “outperforms” CDT in the (Identical) Twin Prisoner’s Dilemma (just as FDT “outperforms” CDT). But this is clearly neither meaningful nor interesting: (i) we never argued for why this conception of an agent is useful or intuitive (and it is the choice of ontology that is ensuring cooperation here, nothing else); and (ii) the decision problem in question, the Twin Prisoner’s Dilemma, is now a different decision problem in the genetic ontology (and we are, in fact, operating in this ontology since we are applying GDT). You are that other person in the other room; it is not just your twin with whom you are correlated.
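The claim that the ontology alone is doing the work can be made explicit. In this minimal Python sketch, a single causalist-style choice rule is paired with different identity relations: one identifies agents by algorithm (roughly the algorithmic ontology behind FDT), the other by genome (GDT). The `Agent` fields and the labels used are hypothetical illustrations.

```python
from dataclasses import dataclass

# Agent's payoff, indexed by (my action, twin's action), from the problem statement.
U = {("C", "C"): 1_000_000, ("C", "D"): 0, ("D", "C"): 1_001_000, ("D", "D"): 1_000}


@dataclass(frozen=True)
class Agent:
    genome: str     # hypothetical label for genetic material
    algorithm: str  # hypothetical label for the decision algorithm it runs


def same_by_algorithm(a, b):
    return a.algorithm == b.algorithm


def same_by_genome(a, b):
    return a.genome == b.genome


def causalist_choice(me, twin, same_agent):
    """One decision rule; the ontology (same_agent) decides who 'I' am."""
    if same_agent(me, twin):
        # Intervening on "my" output also sets the twin's: compare the diagonal.
        return max(("C", "D"), key=lambda a: U[(a, a)])
    # The twin is a separate agent whose action I cannot affect: D dominates C.
    return "D"


me, twin = Agent("g1", "alg1"), Agent("g1", "alg1")
print(causalist_choice(me, twin, same_by_algorithm))   # C
print(causalist_choice(me, twin, same_by_genome))      # C
print(causalist_choice(me, twin, lambda a, b: False))  # D (physicalist CDT)
```

Both identity relations yield cooperation with the same decision rule. They come apart only when genome and algorithm do: for `Agent("g1", "alg1")` facing `Agent("g1", "alg2")`, `same_by_genome` still yields C while `same_by_algorithm` yields D.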

Another analogy: comparing updateless CDT and FDT is like comparing two hedonistic utilitarian theories that merely disagree about which beings are conscious. Of course, these two theories will recommend different actions in many ethical dilemmas, but that does not tell us anything of ethical substance.

Comparing FDT to EDT

In comparing FDT to EDT, on the other hand, there are two differences conditional on fixing an ontology (physicalist or algorithmic): (i) the use of causalist counterfactuals over conditionals; and (ii) updatelessness again. That is, fixing the algorithmic ontology, what we are doing is comparing the evidential variant of TDT to FDT. Or, if we are instead fixing the physicalist ontology, what we are doing is comparing EDT to updateless CDT.

But in the papers, FDT is instead directly compared to EDT, and it is once again unclear what such a comparison is supposed to tell us, especially in cases where the results depend on the ontology that we are fixing. The Smoking Lesion is a decision problem where this is partially the case: counterfactuals matter, but so does the ontology.

Example: the Smoking Lesion

Consider the Smoking Lesion (from the FDT paper):

Smoking Lesion. An agent is debating whether or not to smoke. She knows that smoking is correlated with an invariably fatal variety of lung cancer, but the correlation is (in this imaginary world) entirely due to a common cause: an arterial lesion that causes those afflicted with it to love smoking and also (99% of the time) causes them to develop lung cancer. There is no direct causal link between smoking and lung cancer. Agents without this lesion contract lung cancer only 1% of the time, and an agent can neither directly observe nor control whether she suffers from the lesion. The agent gains utility equivalent to $1,000 by smoking (regardless of whether she dies soon), and gains utility equivalent to $1,000,000 if she doesn’t die of cancer. Should she smoke, or refrain?

The analyses:

- CDT: “me getting cancer is not downstream of my choice whether to smoke or not, and smoking dominates not smoking; so I will therefore smoke”.

- EDT (modulo the Tickle Defence): “due to the correlation between smoking and getting cancer, choosing to smoke would give me evidence that I will get cancer, so I will therefore not smoke”.

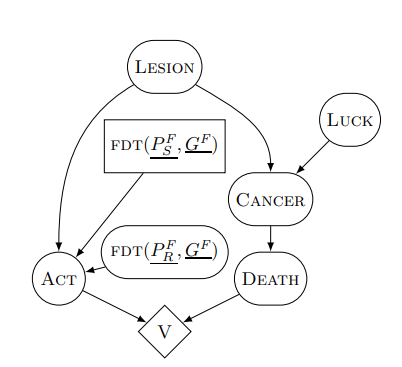

- FDT: “smoking or not is downstream of my decision theory and the lesion is in turn not upstream of my decision theory, meaning if the decision theory outputs ‘smoke’ then that has no effect on whether I have cancer; so I will therefore smoke”.

- The causal graph for the decision problem, stolen from the FDT paper:

- The causal graph for the decision problem, stolen from the FDT paper:
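The disagreement between CDT and EDT can be reproduced numerically. This is a minimal Python sketch that ignores the Tickle Defence: the cancer rates and utilities come from the problem statement, but the base rate of the lesion and how strongly smoking tracks it in the population (`P_LESION`, `P_LESION_GIVEN_SMOKE`, `P_LESION_GIVEN_ABSTAIN`) are hypothetical numbers, since the problem does not give them.

```python
P_CANCER_GIVEN_LESION = 0.99
P_CANCER_GIVEN_NO_LESION = 0.01
SMOKING_UTILITY = 1_000
SURVIVAL_UTILITY = 1_000_000

# Hypothetical population statistics (mutually consistent if 20% of people smoke).
P_LESION = 0.2
P_LESION_GIVEN_SMOKE = 0.9
P_LESION_GIVEN_ABSTAIN = 0.025


def expected_utility(smoke, p_lesion):
    """Utility of the act plus the chance of surviving, given a credence in the lesion."""
    p_cancer = p_lesion * P_CANCER_GIVEN_LESION + (1 - p_lesion) * P_CANCER_GIVEN_NO_LESION
    return (SMOKING_UTILITY if smoke else 0) + (1 - p_cancer) * SURVIVAL_UTILITY


def edt(smoke):
    # Conditioning on the act updates the credence in the lesion.
    return expected_utility(smoke, P_LESION_GIVEN_SMOKE if smoke else P_LESION_GIVEN_ABSTAIN)


def cdt(smoke):
    # Intervening on the act leaves the lesion (upstream of it) untouched.
    return expected_utility(smoke, P_LESION)


print(f"EDT: smoke {edt(True):,.0f} vs abstain {edt(False):,.0f}")
print(f"CDT: smoke {cdt(True):,.0f} vs abstain {cdt(False):,.0f}")
```

With these numbers EDT prefers abstaining (about 965,500 against 109,000), while for CDT smoking comes out $1,000 ahead whatever base rate is assumed.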

Here CDT and FDT are said to give the correct recommendation, and EDT the incorrect one (again, modulo the Tickle Defence). But note that if we think of (updateful) EDT as something algorithmic (namely as “TEDT”), then an evidential variant of the FDT argument can be made for smoking. Namely, if I smoke because my decision theory tells me to, then that puts me in a different reference class from the rest of the population (from which we got the brute correlation specified in the problem), so the evidence is screened off and I should smoke.[6] This is not surprising: the additional “decision theory nodes” drawn in the causal diagram do not correspond to something endogenous to the specific decision theory in question (FDT, in this case); they are rather a stipulation about the (logi-)causal structure of the world, and that (logi-)causal structure plausibly remains even if we are doing something evidential. (Unless, of course, the motivation for being evidentialist in the first place is having metaphysical qualms with the notion of a “causal structure of the world”.) That is, FDT does not “outperform” algorithmic EDT in this decision problem. Once again, this illustrates the importance of keeping within the same ontology.
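The screening-off step can be illustrated with a toy population. In this minimal Python sketch (all rates hypothetical), ordinary agents' smoking tracks the lesion, while agents who smoke because their decision theory says so smoke whether or not they have the lesion. Conditioning on "I smoke" is then strong evidence of the lesion in the general population but no evidence at all within the reference class of decision-theory followers. Exact fractions keep the comparison exact.

```python
from fractions import Fraction

P_LESION = Fraction(1, 5)  # hypothetical base rate of the lesion


def p_lesion_given_smoking(p_smoke_if_lesion, p_smoke_if_no_lesion):
    """Bayes' rule: P(lesion | smokes) for a group with the given smoking propensities."""
    joint_lesion = P_LESION * p_smoke_if_lesion
    joint_no_lesion = (1 - P_LESION) * p_smoke_if_no_lesion
    return joint_lesion / (joint_lesion + joint_no_lesion)


# General population: the lesion drives the taste for smoking (hypothetical rates).
population = p_lesion_given_smoking(Fraction(9, 10), Fraction(1, 40))

# Agents whose act is fixed by their decision theory's output: if it says "smoke",
# they smoke with or without the lesion, so both propensities are equal.
dt_followers = p_lesion_given_smoking(Fraction(1), Fraction(1))

print(population)    # 9/10
print(dt_followers)  # 1/5, the base rate: the correlation is screened off
```

Within the followers' reference class, smoking carries no news about the lesion, so an evidential agent in this sketch is left comparing only the $1,000 from smoking.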

(But although algorithmic EDT would smoke in the Smoking Lesion, there is an analogous problem where algorithmic CDT and EDT supposedly come apart and the latter is said to give the incorrect recommendation, namely Troll Bridge.)

Objection: comparing ontologies by comparing decision theories

Here is one way of understanding the methodology in the papers (a steelman if you will); or simply one way of making sense of cross-ontological decision theory comparisons in general. We have some desiderata for our agent ontology—intuitive things that we want to be captured. And some of these features are arguably related to the behaviour of decision theories within the different ontologies. So when two decision theories from two different ontologies are compared in a decision problem where purely ontological differences are the reason for the different action recommendations (rather than some other feature, e.g. updatelessness), it is the ontologies that are being compared, and such a comparison arguably is meaningful.[7]

But then (setting aside the meaningfulness of comparing ontologies themselves until the next section), what exactly are the desiderata? Given the authors’ focus on performance, one might think that one desideratum would be something like “decision theories in the ontology should perform well”. That is, saying that e.g. the Twin Prisoner’s Dilemma is an argument for FDT, and against CDT, is just saying that the algorithmic ontology is preferable because we get better results when using it. But I don’t think this makes sense at all: we can easily make up an (arbitrarily absurd) ontology that yields great decision-theoretic results from the perspective of that ontology, e.g. the genetic one with respect to the Twin Prisoner’s Dilemma.

Furthermore, why is it that we can’t compare the ontologies directly once we have the desiderata? What would a cross-ontological comparison of decision theories tell us in that case? For example, if a desideratum is something like “agents should think that they are determining the choice of other identical agents (and not merely in an evidential way)”, then plausibly we can just deduce which ontologies satisfy this criterion without having to compare decision theories in the relevant decision problems.

In the end, I think that (i) it is very unclear why comparing ontologies by comparing the recommendations of decision theories would be useful; and (ii) the authors’ focus on performance in the FDT papers is too large for this to be the correct interpretation of their methodology. That is, my original argument should still be applicable—at least in the face of this particular objection.

One could respond that it is generally difficult to identify the desiderata, and in most cases, all we have are intuitions over decision problems that are not easily reducible. In particular, it might not be possible to tell if some intuition has to do with ontology or decision theory. For example, perhaps one just wants to take mutual cooperation in the Twin Prisoner’s Dilemma as a primitive, and until one has figured out why this is a desideratum (and thus figured out if it is about ontology or decision theory), comparisons of decision theories that merely involve ontological differences do in fact carry some information about what ontology is reasonable.[8] I am somewhat sympathetic to this argument in and of itself, although I disagree about the extent to which our intuitions are irreducible, such that we cannot tell whether they are about ontology or decision theory.[9] Furthermore, note that the claim (as I understand it) in the FDT papers is that mutual cooperation in the Twin Prisoner’s Dilemma is desirable because of the utility that comes with it, and it is not taken as a primitive.

Ontological commensurability

Note that I am not arguing that the physicalist ontology is preferable to the algorithmic one: for all that I (currently) know, the latter might be a better way of thinking about the nature of decision-theoretic agency.[10] My point in this post is rather, as said, that it is not meaningful to compare decision theories cross-ontologically, even if this is meant as a proxy for comparing the ontologies themselves. We should rather fix an ontology exogenously and situate all decision theories within that one—at least for the purposes of assessing their respective recommendations in decision problems.

The following questions then arise:

- Can we meaningfully compare ontologies in the first place?

- If yes, what makes one ontology preferable to another?

I think these are difficult questions, but ultimately I think that we probably can compare ontologies; some ontologies are simply more reasonable than others, and they are not merely “different ways of looking at the world” and that’s that. For example, one might argue that ‘agency’ is a high-level emergent phenomenon and that a reductionist physicalist ontology might be too “fine-grained” to capture what we care about, whilst the algorithmic conception abstracts away the correct amount of details.[11] Or, one might think that going from physics to logic introduces too many difficult problems, such as logical uncertainty, and this is an instrumental reason to stick with physics.

Conclusion

In sum, I made the following claims:

- FDT belongs in the ‘algorithmic’ or ‘logical’ ontology, where agents are simply viewed as fixed functions relating inputs (states of the world) and outputs (actions/policies).

- Classical EDT and CDT belong in the standard ‘physicalist’ ontology, where agents are physical objects.

- It is not meaningful to compare the action recommendations of decision theories that belong in different agent ontologies, e.g. FDT to EDT/CDT, since there does not exist a common and objective measurement for doing so; namely, decision problems that are formulated in ontology-neutral terms.

- For example, the Twin Prisoner’s Dilemma that is considered by EDT and CDT is not the same as the Twin Prisoner’s Dilemma considered by FDT since the latter comes with a different inbuilt conception of what ‘agents’ are.

- But, we might be able to compare ontologies themselves, and if it is the case that we prefer one, or think that one is more ‘correct’, then we should situate decision theories in that one map before comparing them. That is, in doing decision theory, we should ideally first settle on metaphysical matters (such as “what is an agent?”), and then formulate decision theories with the same underlying metaphysical assumptions.

This methodology does not imply that we are in practice paralyzed until we have solved the metaphysics, or even until we know what the relevant questions are—we can meaningfully proceed with uncertainty and unawareness.[12] The point is rather that we should keep decision theory (which, in the framework of the aforementioned 2x2x2 grid, is about updatelessness and counterfactuals) and metaphysics distinct for the purposes of comparing decision theories, and make clear when we are operating in which ontology.

Acknowledgements

Thanks to Lukas Finnveden, Tristan Cook, and James Faville for helpful feedback and discussion. All views are mine.

[1] Decision-theoretic performance is arguably underspecified in that there is no “objective” metric for comparing the success of different theories. See The lack of performance metrics for CDT versus EDT, etc. by Caspar Oesterheld for more. This is not the point I am making in this post, though it is somewhat related.

[2] Note that despite using do-operators here, I’m not necessarily committed to Pearlian causality; this is just one way of cashing out the causalist counterfactual.

[3]

[4]

[5]

[6] h/t Julian Stastny. And if the lesion is upstream of my decision theory and there is a stipulated correlation between being evidentialist, say, and getting cancer, then the decision problem is both “unfair” and uninteresting.

[7] h/t Tristan Cook.

[8] h/t Lukas Finnveden for comments that led to this paragraph.

[9] For example, in the case of the Twin Prisoner’s Dilemma, it is somewhat plausible to me that a reasonable ontology should tell us that we determine the action of exactly identical agents (however we want to cash that out), and not merely in an evidential way. But I don’t think ontology has much to do with why I want mutual cooperation in the Twin Prisoner’s Dilemma when the agents are similar, but not identical. Concretely, I don’t think the algorithmic ontology gives us mutual cooperation in that case (because, arguably, logical counterfactuals are brittle), and you need evidentialism (which is about decision theory) as well.

[10] Interestingly, in the MIRI/OP discussion on decision theory, Demski writes:

So I see all axes except the "algorithm" axis as "live debates" — basically anyone who has thought about it very much seems to agree that you control "the policy of agents who sufficiently resemble you" (rather than something more myopic like "your individual action"), but there are reasonable disagreements to be had about updatelessness and counterfactuals.

I am fairly confused by this though: “you control "the policy of agents who sufficiently resemble you"” could just be understood as a commitment to evidentialism, regardless of the ontology; and, tritely, I think it should be noted that academic decision theorists have thought about this (if not in the context of causalism vs. evidentialism), and most of them are CDTers. See this blogpost by Wolfgang Schwarz for commentary on FDT, for example.

[11] h/t James Faville.

[12] Suppose we are agnostic with respect to the ontology-axis. Then I think that we should proceed thinking about e.g. updateless CDT and FDT as the same decision theory, and recognize that their superficial differences do not tell us anything of decision-theoretic substance.

8 comments


comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-02-08T19:42:44.018Z

What does GDT recommend in the (Identical) Twin Prisoner’s Dilemma? Well, since they share the same genetic material, they are the same agent, meaning if one of them decides to cooperate the other one will also cooperate (and the same for defection). It is therefore best to cooperate since \(u(C, C) > u(D, D)\).

We could then say that GDT “outperforms” CDT in the (Identical) Twin Prisoner’s Dilemma (just as FDT “outperforms” CDT). But this is clearly not meaningful nor interesting: (i) we never argued for why this conception of an agent is useful or intuitive (and it is the choice of ontology that is ensuring cooperation here, nothing else); and (ii) and the decision problem in question—the Twin Prisoner’s Dilemma—is now a different decision problem in the genetic ontology (and we are, in fact, operating in this ontology since we are applying GDT). You are that other person in the other room, it is not just your twin with whom you are correlated.

GDT would perform poorly in Gene Prisoner's Dilemma: Both players have same genes but different decision theories. GDT would cooperate and get defected against.

There isn't a corresponding failure mode for TDT though right? Is there?

comment by habryka (habryka4) · 2022-09-29T18:11:00.901Z

But I don’t think this makes sense at all: we can easily make up an (arbitrarily absurd) ontology that yields great decision-theoretic results from the perspective of that ontology, e.g. the genetic one with respect to the Twin Prisoner’s Dilemma.

I don't understand this part. If you follow the "genetic agent ontology", and you grew up with a twin, and they (predictably) sometimes make a different decision than you, then you messed up. There is an objective sense in which the "genetic agent ontology" is incorrect, because de-facto you did not make the same decision, and treating the two of you as a single agent is therefore a wrong thing to do.

I kind of buy that a lot of the decision-theory discourse boils down to "what things can you consider as the same agent, and to what degree?", but that feels like a thing that can be empirically verified, and performance can be measured on. CDT in the classical Newcomb's problem is delusional about it definitely not having copies of itself, and the genetic agent ontology is delusional because it pretends its twin is a copy when it isn't. These seem both like valid arguments to make that allow for comparison.

↑ comment by SMK (Sylvester Kollin) · 2022-09-29T18:59:11.923Z

If you follow the "genetic agent ontology", and you grew up with a twin, and they (predictably) sometimes make a different decision than you, then you messed up. There is an objective sense in which the "genetic agent ontology" is incorrect, because de-facto you did not make the same decision, and treating the two of you as a single agent is therefore a wrong thing to do.

Yes, I agree. This is precisely my point; it's a bad ontology. In the paragraph you quoted, I am not arguing against the algorithmic ontology (and obviously not for the "genetic ontology"), but against the claim that decision-theoretic performance is a reason to prefer one ontology over another. (The genetic ontology-analogy is supposed to be a reductio of that claim.) And I think the authors of the FDT papers are implicitly making this claim by e.g. comparing FDT to CDT in the Twin PD. Perhaps I should have made this clearer.

performance can be measured on

Yes, I think you can measure performance, but since every decision theory merely corresponds to a stipulation of what (expected) value is, there is no "objective" way of doing so. See The lack of performance metrics for CDT versus EDT, etc. by Caspar Oesterheld for more on this.

CDT in the classical Newcomb's problem is delusional about it definitely not having copies of itself

(The CDTer could recognize that they have a literal copy inside Omega's brain, but might just not care about that since they are causally isolated. So I would not say they are "delusional".)

comment by Richard_Kennaway · 2022-09-29T15:22:15.016Z

But, we might be able to compare ontologies themselves, and if it is the case that we prefer one or think that one is more ‘correct’, then we should situate decision theories in that one map before comparing them.

How about comparing the theories by setting them all loose in a simulated world, as in the tournaments run by Axelrod and others? A world in which they are continually encountering Omega, police who want to pin one on them, potential rescuers of hitchhikers, and so on. See who wins.

↑ comment by Dagon · 2022-09-29T16:57:12.797Z

The difficulty is in how to weight the frequency/importance of the situations they face. Unless one dominates (in the strict sense - literally does better in at least one case and no worse in any other case), the "best" is determined by environment.

Of course, if you can algorithmically determine what kind of situation you're facing, you can use a meta-decision-theory which chooses the winner for each decision. This does dominate any simpler theory, but it reveals the flaw in this kind of comparison: if you know in advance what will happen, there's no actual decision to your decision. Real decisions have enough unknowns that it's impossible to understand the causality that fully.

↑ comment by SMK (Sylvester Kollin) · 2022-10-14T09:44:36.620Z

The difficulty is in how to weight the frequency/importance of the situations they face.

I agree with this. On the one hand, you could just have a bunch of Procreation problems which would lead to the FDTer ending up with a smaller pot of money; or you could of course have a lot of Counterfactual Muggings, in which case the FDTer would come out on top, at least in the limit.

↑ comment by SMK (Sylvester Kollin) · 2022-09-29T19:33:03.716Z

How about comparing the theories by setting them all loose in a simulated world, as in the tournaments run by Axelrod and others? A world in which they are continually encountering Omega, police who want to pin one on them, potential rescuers of hitchhikers, and so on.

In your experiment, the only difference between the FDTers and the updateless CDTers is how they view the world; specifically, how they think of themselves in relation to their environment. And yes, sure, perhaps the FDTer will end up with a larger pot of money in the end, but this is just because the algorithmic ontology is arguably more "accurate" in that it e.g. tells the agent that it will make the same choice as their twin in the Twin PD (modulo brittleness issues). But this is the level, I argue, that we should have the debate about ontology on (that is, e.g. about accurate predictions etc.), not on the level of decision-theoretic performance.

See who wins.

How do you define "winning"? As mentioned in another comment, there is no "objective" sense in which one theory outperforms another, even if we are operating in the same ontology.

comment by Roman Leventov · 2022-11-08T08:54:08.200Z

Your idea of "genetic decision theory" is excellent because it provides physical grounding for FDT. It made me realise that FDT should be best considered as group decision theory, where the group can be a pair of people (a relationship), a family, a community, a genus, a nation, the humanity; a human-AI pair, a group of people with AIs, a group of AIs, etc.

As I understand your post, I would title it "FDT and CDT/EDT prescribe the decision procedures for agents of different system levels": FDT prescribes decision procedures for groups of agents, while CDT and EDT prescribe decision procedures for individual agents in these groups. If the outcomes of these decision procedures don't match, this represents an inter-scale system conflict/frustration (see "Towards a Theory of Evolution as Multilevel Learning", section 3 "Fundamental evolutionary phenomena", E2. "Frustration"). Other examples of such frustrations are the principal--agent problem, the cancer cell--organism conflict, a man against society or state, etc. (these are just examples of stark conflicts; to some degree, almost all parts of whatever there is are in some frustration with the supra-system containing it).

Agents (whether at the lower level, the higher level, or even outside this hierarchy, i.e. "external designers") should seek to minimise these conflicts via innovation and knowledge (technological, biological, cultural, political, legal, etc.). In the Twin Prisoner's Dilemma, a genus (a group) should innovate so that its members derive maximum utility when pairs of them end up in prison. There are multiple ways to do this: a genetic mutation (either randomly selected or artificially introduced by genetic engineers) which hardwires the brains of the members of the genus so that they always cooperate (a biological innovation); the genus patriarch, if one exists, instituting a rule with very harsh punishment for disobeying it (a social innovation; there is a real-life example in the mafia, which has bosses and whose members do risk going to jail); a law which allows family members not to testify against one another without negative consequences (a legal innovation); or a smart contract with financial precommitments (a technological innovation). Or spreading the idea of FDT among the group members, which is also a form of social innovation, albeit not a very effective one, I suspect, unless the group in question is a closely-knit community of rationalists :)

One could respond that it is generally difficult to identify the desiderata, and in most cases, all we have is intuitions over decision problems that are not easily reducible. In particular, it might not be possible to tell whether some intuition has to do with ontology or decision theory. For example, perhaps one just wants to take mutual cooperation in the Twin Prisoner's Dilemma as a primitive, and until one has figured out why this is a desideratum (and thus whether it is about ontology or decision theory), comparisons of decision theories that merely involve ontological differences do in fact carry some information about what ontology is reasonable.[9] I am somewhat sympathetic to the argument in and of itself, although I disagree that our intuitions are irreducible to such an extent that we cannot tell whether they are about ontology or decision theory.

It's hard for me to understand what you say in this passage, but if you are hinting at the questions "What agent am I?", "Where does my boundary lie, i.e. where does my Markov blanket end?", and "Whom should I decide for?", then a psychological answer to these questions is that the agent continually tests this (i.e., conducts physical experiments) and forms a belief about where its circle of control ends. Thus, a mafia boss believes he controls the group, while an ordinary member does not. A physical theory purporting to answer these questions objectively is minimal physicalism (see "Minimal physicalism as a scale-free substrate for cognition and consciousness", which specifically discusses the question of boundaries and awareness).

Physicalist agent ontology vs. algorithmic/logical agent ontology

I believe there is a methodological problem with "algorithmic/logical ontology" as a substrate for a decision theory, and with FDT as an instance of such a theory: decision theory is a branch of rationality, which itself is a normative discipline applying to particular kinds of physical systems, and thus it must be based on physics. Algorithms are mathematical objects: they can only describe physical objects (information bearers), but they do not belong to the physical world themselves and thus cannot cause anything to happen in it (again, information bearers can).

Thus, any decision theory should deal only with physical objects in its ontology, such as "the brains of the members of the group" (information bearers of algorithms, for example, of the FDT algorithm), but not with "algorithms" directly, in the abstract.

Another way to put this: I think that FDT's attempt to escape the causal graph is methodologically nonsensical.

The following questions then arise:

- Can we meaningfully compare ontologies in the first place?

- If yes, what makes one ontology preferable to another?

I think these are difficult questions, but ultimately I think that we probably can compare ontologies; some ontologies are simply more reasonable than others, and they do not merely correspond to "different ways of looking at the world" and that's that.

In light of what I have written above, I think these two questions should be replaced with a single one: what systems should be the subjects of our (moral) concern? That is, in the Twin Prisoner's Dilemma, if a prisoner is concerned about his group (genus), he cooperates; otherwise, he doesn't. This question has a very long history and a large literature: are nations valuable? Are states? Ecosystems? The cells in our organism? The paper "Minimal physicalism as a scale-free substrate for cognition and consciousness" also introduces an interesting modern twist on this question, namely the conjecture that consciousness is scale-free.
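A minimal sketch of the claim that the subject of concern decides the action, again assuming the textbook payoffs (3/0/5/1), which are not from the post. The same dominance reasoning (the twin's action held fixed) is applied with two different utility functions: the prisoner's own payoff, or the sum over the pair. The functions `individual`, `group` and `dominant_action` are hypothetical illustrations, not definitions from the paper.

```python
from typing import Callable, Dict, Optional, Tuple

Action = str  # "C" (cooperate) or "D" (defect)

# Assumed payoffs (mine, twin's) for (my action, twin's action).
PAYOFFS: Dict[Tuple[Action, Action], Tuple[int, int]] = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}
ACTIONS = ("C", "D")

Utility = Callable[[Tuple[int, int]], int]


def individual(p: Tuple[int, int]) -> int:
    """Concern only for oneself."""
    return p[0]


def group(p: Tuple[int, int]) -> int:
    """Concern for the pair (the 'genus'): total payoff."""
    return p[0] + p[1]


def dominant_action(utility: Utility) -> Optional[Action]:
    """Return an action that is weakly best against every fixed twin action, if any."""
    for a in ACTIONS:
        if all(utility(PAYOFFS[(a, t)]) >= utility(PAYOFFS[(b, t)])
               for t in ACTIONS for b in ACTIONS):
            return a
    return None


print(dominant_action(individual))  # D
print(dominant_action(group))  # C
```

Here the twin's action is treated as causally fixed in both cases, so the switch from defection to cooperation comes entirely from widening the circle of concern.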

For example, one might argue that ‘agency’ is a high-level emergent phenomenon and that a reductionist physicalist ontology might be too “fine-grained” to capture what we care about, whilst the algorithmic conception abstracts away the correct amount of details.

Again, in the context of minimal physicalism, I think it is best to dissect "agency" into more clearly definable, scale-free properties of physical systems (such as autonoetic awareness, introduced in the paper, among others).