Believing In

post by AnnaSalamon · 2024-02-08T07:06:13.072Z · LW · GW · 51 comments

Contents

- Examples of “believing in”
- A model of “believing in”
- Some pieces of the lawlike shape of “believing in”s:
- Some differences between how to “believe in” well, and how to predict well:
- Some commonalities between how to “believe in” well, and how to predict well:
- Example: “believed in” task completion times
- “Believing in X” is not predicting “X will work and be good.”
- Compatibility with prediction
- Distinguishing “believing in” from deception / self-deception
- Getting Bayes-habits out of the way of “believing in”s

“In America, we believe in driving on the right hand side of the road.”

Tl;dr: Beliefs are like bets (on outcomes the belief doesn’t affect). “Believing in”s are more like kickstarters (for outcomes the believing-in does affect).

Epistemic status: New model; could use critique.

In one early CFAR test session, we asked volunteers to each write down something they believed. My plan was that we would then think together about what we would see in a world where each belief was true, compared to a world where it was false.

I was a bit flummoxed when, instead of the beliefs-aka-predictions I had been expecting, they wrote down such “beliefs” as “the environment,” “kindness,” or “respecting people.” At the time, I thought this meant that the state of ambient rationality was so low that people didn’t know “beliefs” were supposed to be predictions, as opposed to group affiliations.

I’ve since changed my mind. My new view is that there is not one but two useful kinds of vaguely belief-like thingies – one to do with predictions and Bayes-math, and a different one I’ll call “believing in.” I believe both are lawlike, and neither is a flawed attempt to imitate/parasitize the other. I further believe both can be practiced at once – that they are distinct but compatible.

I’ll be aiming, in this post, to give a clear concept of “believing in,” and to get readers’ models of “how to ‘believe in’ well” disentangled from their models of “how to predict well.”

Examples of “believing in”

Let’s collect some examples, before we get to theory. Places where people talk of “believing in” include:

- An individual stating their personal ethical code. E.g., “I believe in being honest,” “I believe in hard work,” “I believe in treating people with respect,” etc.

- A group stating the local social norms that group tries to practice as a group. E.g., “Around here, we believe in being on time.”

- “I believe in you,” said by one friend or family member to another, sometimes in a specific context (“I believe in your ability to win this race,”) sometimes in a more general context (“I believe in you [your abilities, character, and future undertakings in general]”).

- A difficult one-person undertaking, of the sort that’ll require cooperation across many different time-slices of a self. (“I believe in this novel I’m writing.”)

- A difficult many-person undertaking. (“I believe in this village”; “I believe in America”; “I believe in CFAR”; “I believe in turning this party into a dance party, it’s gonna be awesome.”)

- A political party or platform (“I believe in the Democratic Party”). A scientific paradigm.

- A person stating which entities they admit into their hypotheses, that others may not (“I believe in atoms”; “I believe in God”).

It is my contention that all of the above examples, and indeed more or less all places where people naturally use the phrase “believing in,” are attempts to invoke a common concept, and that this concept is part of how a well-designed organism might work.[1]

Inconveniently, the converse linguistic statement does not hold – that is:

- People who say “believing in” almost always mean the thing I’ll call “believing in”

- But people who say “beliefs” or “believing” (without the “in”) sometimes mean the Bayes/predictions thingy, and sometimes mean the thing I’ll call “believing in.” (For example, “I believe it takes a village to raise a child” is often used to indicate “believing in” a particular political project, despite how it does not use the word “in”; also, here’s an example from Avatar.)

A model of “believing in”

My model is that “I believe in X” means “I believe X will yield good returns if resources are invested in it.” Or, in some contexts, “I am investing (some or ~all of) my resources in keeping with X.”

(Background context for this model: people build projects that resources can be invested in – projects such as particular businesses or villages or countries, the project of living according to a particular ethical code, the project of writing a particular novel, the project of developing a particular scientific paradigm, etc.

Projects aren’t true or false, exactly – they are projects. Projects that may yield good or bad returns when particular resources are invested in them.)

For example:

- “I believe in being honest” → “I am investing resources in acting honestly” or “I am investing ~all of my resources in a manner premised on honesty” or “I am only up for investing in projects that are themselves premised on honesty” or “I predict you’ll get good returns if you invest resources in acting honestly.” (Which of these it is, or which related sentence it is, depends on context.)

- “I believe in this bakery [that I’ve been running with some teammates]” → “I am investing resources in this bakery. I predict that marginal resource investments from me and others will have good returns.”

- “I believe in you” → “I predict the world will be a better place for your presence in it. I predict that good things will result from you being in contact with the world, from you allowing the hypotheses that you implicitly are to be tested. I will help you in cases where I easily can. I want others to also help you in cases where they easily can, and will bet some of my reputation that they will later be glad they did.”

- “I believe in this party’s potential as a dance party” → “I’m invested in co-creating a good dance party here.”

- “I believe in atoms” or “I believe in God” → “I’m invested in taking a bunch of actions / doing a bunch of reasoning in a manner premised on ‘atoms are real and worth talking about’ or ‘God is real.’”[2]

“Good,” in the above predictions about a given investment having “good” results, means some combination of:

- Good for my own goals directly;

- Good for others’ goals, in such a way that they’ll notice and pay me for the result (perhaps informally and not in money), so that it’s good for my goals after the payment. (E.g., the dance party we start will be awesome, and others’ appreciation will repay me for the awkwardness of starting it.) (Or again: Martin Luther King invested in civil rights, “believing in”-style; eventually social consensus moved; he now gets huge appreciation for this.)

I admit there is leeway here in what exactly I am taking “I believe in X” to mean, depending on context, as is common in natural language. However, I claim there is a common, ~lawlike shape to how to do “believing in”s well, and this shape would be at least partly shared by at least some alien species.

Some pieces of the lawlike shape of “believing in”s:

I am far from having a catalog of the most important features of how to do “believing in”s well. So I’ll list some basics kinda at random, dividing things into two categories:

- Features that differ between “believing in”s, and predictions; and

- Features that are in common between “believing in”s and predictions.

Some differences between how to “believe in” well, and how to predict well:

A. One should prefer to “believe in” good things rather than bad things, all else equal. (If you’re trying to predict whether it’ll snow tomorrow, or some other thing X that is not causally downstream of your beliefs, then whether X would be good for your goals has no bearing on the probability you should assign to X. But if you’re considering investing in X, you should totally be more likely to do this if X would be good for your goals.)

B. It often makes sense to describe “believing in”s based on strength-of-belief (e.g., “I believe very strongly in honesty”), whereas it makes more sense to describe predictions based on probabilities (e.g. “I assign a ~70% chance that ‘be honest’ policies such as mine have good results on average”).

One can in principle describe both at once – e.g. “Bob is investing deeply in the bakery he’s launching, and is likely to maintain this in the face of considerable hardship, even though he won’t be too surprised if it fails.” Still, the degree of commitment/resource investment (or the extent to which one’s life is in practice premised on X project) makes sense as part of the thing to describe, and if one is going to describe only one variable, it often makes sense as the one main thing to describe – we often care more about how committed Bob is to continuing to launch the bakery than about his odds that the bakery isn’t a loss.

C. “Believing in”s should often be public, and/or be part of a person’s visible identity. Often the returns to committing resources to a project flow partly from other people seeing this commitment – it is helpful to be known to be standing for the ethics one is standing for, it is helpful for a village to track the commitments its members have to it, it is helpful for those who may wish to turn a given party into a dance party to track who else would be in (so as to see if they have critical mass), etc.

D. “Believing in”s should often be in something that fits easily in your and/or others’ minds and hearts. For example, Marie Kondo claims people have an easier time tidying their houses when the whole thing fits within a unified vision that they care about and that sparks joy. This matches my own experience – the resultant tidy house is easier to “believe in,” and is thereby easier to summon low-level processes to care for. IME, it is also easier to galvanize an organization with only one focus – e.g. MIRI seemed to me to work a lot better once the Singularity Summits and CFAR were spun out, and it had only alignment research to focus on – I think because simpler organizations have an easier time remembering what they’re doing and “believing in” themselves.

Some commonalities between how to “believe in” well, and how to predict well:

Despite the above differences, it seems to me there is a fair bit of structure in common between “believing in”s and “belief as in prediction”s, such that English decided to use almost the same word for the two.

Some commonalities:

A. Your predictions, and your “believing in”s, are both premises for your actions. Thus, you can predict much about a person’s actions if you know what they are predicting and believing in. Likewise, if a person picks either their predictions or their “believing in”s sufficiently foolishly, their actions will do them no good.

B. One can use logic[3] to combine predictions, and to combine “believing in”s. That is: if I predict X, and I predict “if X then Y,” I ought also predict Y. Similarly, if I am investing in X – e.g., in a bakery – and my investment is premised on the idea that we’ll get at least 200 visitors/day on average, I ought to have a picture of how the insides of the bakery can work (how long a line people will tolerate, when they’ll arrive, how long it takes to serve each one) that is consistent with this, or at least not have one that is inconsistent.

C. One can update “believing-in”s from experience/evidence/others’ experience. There’s some sort of learning from experience (e.g., many used to believe in Marxism, and updated away from this based partly on Stalin and similar horrors).

D. Something like prediction markets is probably viable. I suspect there is often a prediction markets-like thing running within an individual, and also across individuals, that aggregates smaller thingies into larger currents of “believing in.”[4]

Example: “believed in” task completion times

Let’s practice disentangling predictions from “believing in”s by considering the common problem of predicting project completion times.

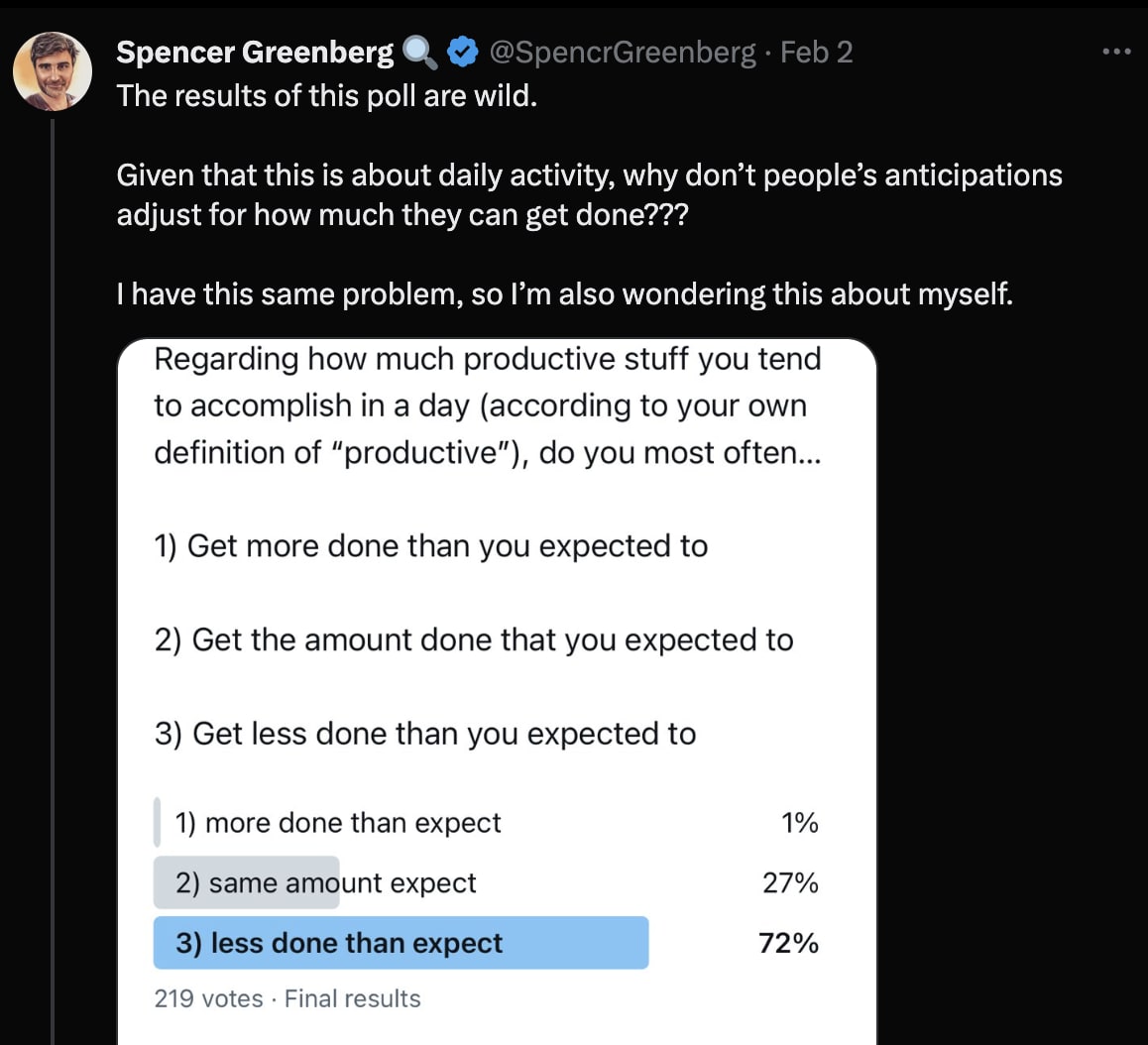

Spencer Greenberg puzzles on Twitter:

It seems to me Spencer’s confusion is due to the fact that people often do a lot of “believing in” around what they’ll get done in what time period (wisely; they are trying to coordinate many bits of themselves into a coherent set of actions), and most don’t have good concepts for distinguishing this “believing in” from prediction.

To see why it can be useful to “believe in” a target completion time: let’s say for concreteness that I’m composing a difficult email. Composing this email involves many subtasks, many of which ought be done differently depending on my chosen (quality vs time) tradeoff: I must figure out what word to use in a given spot; I must get clearer on the main idea I’m wanting to communicate; I must decide if a given paragraph is good enough or needs a rewrite; and so on. If I “believe in” a target completion time (“I’ll get this done in about three hours’ work” or “I’ll get this done before dinner”), this allows many, parallel, system one processes to make a coherent set of choices about these (quality vs time) tradeoffs, rather than e.g. squandering several minutes on one arbitrary sentence only to hurry through other, more important sentences. A “believing in” is here a decision to invest resources in keeping with a coherently visualized scenario.

Should my “believed in” time match my predicted time? Not necessarily. I admit I’m cheating here, because I’ve read research on planning fallacy and so am doing retrodiction rather than prediction. But it seems to me not-crazy to “believe in” a modal scenario, in which e.g. the printer doesn’t break and I receive no urgent phone calls while working and I don’t notice any major new confusions in the course of writing out my thoughts. This modal scenario is not a good time estimate (in a “predictions” sense), since there are many ways my writing process can be slower than the modal scenario, and almost no ways it can be faster. But, of the different “easy to hold in your head and heart” visualizations I have available, it may be the one that gives the most useful guidance to my low-level processes – I may want to tell my low-level processes “please contribute to the letter as though a 3-hours-in-a-modal-run letter-writing process is underway.” (That is: “as though in a 3-hours-in-a-modal-run letter-writing” may offer more useful guidance to my low-level processes than does “as though in a 10-hours-in-expectation letter-writing,” because the former may give a more useful distribution as to how long I should expect to spend looking for any given word/etc.)
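To make the gap between a modal scenario and an expected time concrete, here is a minimal sketch. The three delays, their probabilities, and their durations are made-up numbers chosen only for illustration (they are not estimates from this post), and the delays are assumed to be independent. The point is just that when every surprise can only add time, the single most likely outcome can sit well below the average.

```python
from itertools import product

# All numbers below are illustrative assumptions, not data.
BASE_HOURS = 3.0  # the "modal run": nothing goes wrong

# (probability the delay happens, hours it adds)
DELAYS = [
    (0.2, 2.0),  # the printer breaks
    (0.3, 1.0),  # an urgent phone call arrives
    (0.3, 4.0),  # a major new confusion surfaces mid-draft
]


def completion_time_distribution(base, delays):
    """Return {total_hours: probability}, treating the delays as independent."""
    dist = {}
    # Enumerate every combination of "this delay happened / did not happen".
    for outcome in product([False, True], repeat=len(delays)):
        prob = 1.0
        hours = base
        for happened, (p, extra) in zip(outcome, delays):
            prob *= p if happened else 1.0 - p
            if happened:
                hours += extra
        dist[hours] = dist.get(hours, 0.0) + prob
    return dist


dist = completion_time_distribution(BASE_HOURS, DELAYS)
mode_hours = max(dist, key=dist.get)                # single most likely total
mean_hours = sum(h * p for h, p in dist.items())    # probability-weighted average

print(f"modal time:    {mode_hours:.1f} hours")
print(f"expected time: {mean_hours:.1f} hours")
print(f"fastest case:  {min(dist):.1f} hours")
```

Under these assumed numbers the modal time is the clean 3-hour run, the fastest possible case is that same run, and the expected time is noticeably longer. That is the shape described above: the modal scenario is a poor prediction but may still be the easier one to hold in mind as a target.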

“Believing in X” is not predicting “X will work and be good.”

In the above example about “believed in” task completion times, it was helpful to me to believe in completing a difficult email in three hours, even though the result of effort governed by this “believing in” was (predictably) email completion times longer than three hours.

I’d like to belabor this point, and to generalize it a bit.

Life is full of things I am trying to conjure that I do not initially know how to imagine accurately – blog posts I’m trying to write but whose ideas I have not fully worked out; friendships I would like to cultivate, but where I don’t know yet what a flourishing friendship between me and the other party would look like; collaborations I would like to try; and so on.

In these and similar cases, the policy choice before me cannot be “shall I write a blog post with these exact words?” (or “with this exact time course of noticing confusions and rewriting them in these specific new ways?” or whatever). It is instead more like “shall I invest resources in [some combination of attempting to create and updating from my attempts to create, near initial visualization X]?”

The X that I might try “believing in,” here, should be a useful stepping-stone, a useful platform for receiving temporary investment, a pointer that can get me to the next pointer.

“Believing in X” is thus not, in general, “predicting that project X will work and be good.” It is more like “predicting that [investing-for-awhile as though building project X] will have good results.” (Though, also, a believing-in is a choice and not simply a prediction.)

Compatibility with prediction

Once you have a clear concept of “believing in” (and of why and how it is useful, and is distinct from prediction), is it possible to do good “believing in” and prediction at once, without cross-contamination?

I think so, but I could use info from more people and contexts. Here’s a twitter thread where I, Adam Scholl, and Ben Weinstein-Raun all comment on learning something like this separation of predictions and “believing in”s, though each with different conceptualizations.

In my case, I learned the skill of separating my predictions from my “believing in”s (or, as I called it at the time, my “targets”) partly the hard way – by about 20 hours of practicing difficult games in which I could try to keep acting from the target of winning despite knowing winning was fairly unlikely, until my “ability to take X as a target, and fully try toward X” decoupled from my prediction that I would hit that target.

I think part of why it was initially hard for me to try fully on “believing in”s I didn’t expect to achieve[5], is that I was experiencing “failure to achieve the targeted time” as a blow to my ego (i.e., as an event I flinched from, lest it cause me shame and internal disarray), in a way I wouldn’t have if I’d understood better how “believing in X” is more like “predicting it’ll be useful to invest effort premised on X” and less like “predicting X is true.”

In any case, I’d love to hear your experiences trying to combine these (either with this vocab, or with whatever other conceptualizations you’ve been using).

I am particularly curious how well this works with teams.

I don’t see an in-principle obstacle to it working.

Distinguishing “believing in” from deception / self-deception

I used to have “believing in” confused with deception/self-deception. I think this is common. I’d like to spend this section distinguishing the two.

If a CEO is running a small business that is soliciting investment, or that is trying to get staff to pour effort into it in return for a share in its future performance, there’s an incentive for the CEO to deceive others about that business’s prospects. Others will be more likely to invest if they have falsely positive predictions about the business’s future, which will harm them (in expectation) and help the CEO (in expectation).[6]

I believe it is positive sum to discourage this sort of deception.

At the same time, there is also an incentive for the CEO to lead via locating, and investing effort into, a “believing in” worthy of others’ efforts – by holding a particular set of premises for how the business can work, what initiatives may help it, etc, and holding this clearly enough that staff can join the CEO in investing effort there.

I believe it is positive sum to allow/encourage this sort of “believing in” (in cases where the visualized premise is in fact worth investing in).

There is in principle a crisp conceptual distinction between these two: we can ask whether the CEO’s statements would still have their intended effect if others could see the algorithm that generated those statements. Deception should not persuade if listeners can see the algorithm that generated it. “Believing in”s still should.

My guess is that for lack of good concepts for distinguishing “believing in” from deception, LessWrongers, EAs, and “nerds” in general are often both too harsh on folks doing positive-sum “believing in,” and too lax on folks doing deception. (The “too lax” happens because many can tell there’s a “believing in”-shaped gap in their notions of e.g. “don’t say better things about your start-up than a reasonable outside observer would,” but they can’t tell its exact shape, so they loosen their “don’t deceive” in general.)

Getting Bayes-habits out of the way of “believing in”s

I’d like to close with some further evidence (as I see it) that “believing in” is a real thing worth building separate concepts for. This further evidence is a set of good things that I believe some LessWrongers (and some nerds more generally) miss out on, that we don’t have to miss out on, due to mistakenly importing patterns for “predict things accurately” into domains that are not prediction.

Basically: there’s a posture of passive suffering / [not seeking to change the conclusion] that is an asset when making predictions about outside events. “Let the winds of evidence blow you about as though you are a leaf, with no direction of your own…. Surrender to the truth as quickly as you can. Do this the instant you realize what you are resisting….”

However, if one misgeneralizes this posture into other domains, one gets cowardice/passivity of an unfortunate sort. When I first met my friend Kate at high school math camp, she told me how sometimes, when nobody was around, she would scoot around the room like a train making “choo choo” noises, or do other funny games to cheer herself up or otherwise mess with her state. I was both appalled and inspired – appalled because I had thought I should passively observe my mood so as to be ~truth-seeking; inspired because, once I saw how she was doing it, I had a sense she was doing something right.

If you want to build a friendship, or to set a newcomer to a group at ease, it helps to think about what patterns might be good, and to choose a best-guess pattern to believe in (e.g., the pattern “this newcomer is welcome; I believe in this group giving them a chance; I vouch for their attendance tonight, in a manner they and others can see”). I believe I have seen some LessWrongers stand by passively to see if friendships or [enjoying the walk they are on] or [having a conversation become a good conversation] would just happen to them, because they did not want to pretend something was so that was not so. I believe this passivity leads to missing out on much potential good – we can often use reasoning to locate a pattern worth trying, and then “believe in” this pattern to give low-level heuristics a vision to try to live up to, in a way that has higher yields than simply leaving our low-level heuristics to their own devices, and that does not have to be contra epistemic rationality.

- ^

Caveat: I’m not sure if this is “could be part of a well-designed organism” the way e.g. Bayes or Newton’s laws could (where this would be useful sometimes even for Jupiter-brains), or only the way e.g. “leave three seconds’ pause before the car in front of me” could (where it’s pretty local and geared to my limitations). I have a weak guess that it’s the first; but I’m not sure.

- ^

I’m least sure of this example; it’s possible that e.g. “I believe in atoms” is not well understood as part of my template.

- ^

In the case of predictions, probability is an improvement over classical logic. I don’t know if there’s an analog for believing-ins.

- ^

Specifically, I like to imagine that:

* People (and subagents within the psyche) “believe in” projects (publicly commit some of their resources to projects).

* When a given project has enough “believing in,” it is enacted in the world. (E.g., when there’s a critical mass of “believing in” making this party a dance party, someone turns on music, folks head to the dance stage, etc.)

* When others observe someone to have “believed in” a thing in a way that yielded results they like, they give that person reputation. (Or, if it yielded results they did not like, or simply wasted their energies on a “believing in” that went nowhere, they dock them reputation.)

* People’s future “believing in” has more weight (in determining which projects have above-threshold believing-in, such that they are acted on) when they have more reputation.

For example, Martin Luther King gained massive reputation by “believing in” civil rights, since he did this at a time when “believe in civil rights” was trading at a low price, since his “believing in” had a huge causal effect in bringing about more equality, and since many were afterwards very glad he had done that.

Or, again, if Fred previously successfully led a party to transform in a way people thought was cool, others will be more likely to go along with Fred’s next attempt to rejigger a party.

I am not saying this is literally how the dynamics operate – but I suspect the above captures some of it, “toy model” style.

Some readers may feel this toy model is made of unprincipled duct tape, and may pine for e.g. Bayesianism plus policy markets. They may be right about "unprincipled"; I'm not sure. But it has a virtue of computational tractability -- you cannot do policy markets over e.g. which novel to write, out of the combinatorially vast space of possible novels. In this toy model, "believing in X" is a choice to invest one’s reputation in the claim that X has enough chance of acquiring a critical mass of buy-in, and enough chance of being good thereafter, that it is worth others’ trouble to consider it -- the "'believing in' market" allocates group computational resources, as well as group actions.
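The toy model in this footnote can be sketched in a few lines of code. This is only one possible reading of it: the class names, the threshold rule, and the reward and penalty sizes are all hypothetical choices made for illustration, and the sketch leaves out the “trading at a low price” effect mentioned in the Martin Luther King example.

```python
from dataclasses import dataclass, field


@dataclass
class Backer:
    """A person (or subagent) who can publicly "believe in" projects."""
    name: str
    reputation: float = 1.0  # hypothetical starting weight


@dataclass
class Project:
    name: str
    threshold: float                      # reputation-weighted backing needed
    backers: list = field(default_factory=list)

    def backing(self):
        # Each backer's "believing in" counts in proportion to reputation.
        return sum(b.reputation for b in self.backers)

    def is_enacted(self):
        # A project is acted on once it has a critical mass of backing.
        return self.backing() >= self.threshold


def settle(project, went_well, reward=0.5, penalty=0.25):
    """Observers grant or dock reputation after seeing how the project went."""
    for b in project.backers:
        if went_well:
            b.reputation += reward
        else:
            b.reputation = max(0.0, b.reputation - penalty)


fred, gina, hal = Backer("Fred"), Backer("Gina"), Backer("Hal")

dance = Project("dance party", threshold=2.5, backers=[fred, gina])
two_backers_enough = dance.is_enacted()     # Fred and Gina alone
dance.backers.append(hal)
three_backers_enough = dance.is_enacted()   # now with Hal as well

settle(dance, went_well=True)               # people liked the dance party

# Next time, the same two backers carry more weight than before.
karaoke = Project("karaoke night", threshold=2.5, backers=[fred, gina])
print(two_backers_enough, three_backers_enough, karaoke.is_enacted())
```

In this run Fred and Gina cannot start the dance party on their own, but after it succeeds their increased reputation is enough to carry the next project between the two of them, which is the “Fred’s next attempt to rejigger a party” dynamic described above.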

- ^

This is a type error, as explained in the previous section, but I didn’t realize this at the time, as I was calling them “targets.”

- ^

This deception causes other harms, too -- it decreases grounds for trust between various other sets of people, which reduces opportunity for collaboration. And the CEO may find self-deception their easiest route to deceiving others, and may then end up themselves harmed by their own false beliefs, by the damage self-deception does to their enduring epistemic habits, etc.

51 comments

Comments sorted by top scores.

comment by Ben Pace (Benito) · 2024-02-08T08:30:16.558Z · LW(p) · GW(p)

The theme of “believing in yourself” runs through the anime Gurren Lagann, and it has some fun quotes on this theme that I reflect on from time to time.

The following are all lines said to the young, shy and withdrawn protagonist Simon at times of crisis.

Kamina, Episode 1:

Listen up, Simon. Don't believe in yourself. Believe in me! Believe in the Kamina who believes in you!

Kamina, Episode 8:

Don't forget. Believe in yourself. Not in the you who believes in me. Not the me who believes in you. Believe in the you who believes in yourself.

Nia, Episode 15:

If people's faith in you is what gives you your power, then I believe in you with every fiber of my being!

Replies from: mateusz-baginski

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2024-02-09T16:45:02.904Z · LW(p) · GW(p)

Seeing you quote TTGL fills me with joy

comment by niplav · 2024-02-08T13:14:13.880Z · LW(p) · GW(p)

This seems very similar to the distinction between Steam and probability.

Replies from: AnnaSalamon

↑ comment by AnnaSalamon · 2024-02-08T16:10:20.709Z · LW(p) · GW(p)

Oh, man, yes, I hadn't seen that post before and it is an awesome post and concept. I think maybe "believing in"s, and prediction-market-like structures of believing-ins, are my attempt to model how Steam gets allocated.

Replies from: niplav

↑ comment by niplav · 2024-02-08T22:53:46.307Z · LW(p) · GW(p)

Several disjointed thoughts, all exploratory.

I have the intuition that "believing in"s are what allocating Steam feels like from the inside, or that they are the same thing.

C. “Believing in”s should often be public, and/or be part of a person’s visible identity.

This makes sense if "believing in"s are useful for intra- and inter-agent coordination, which is the thing people accumulate to go on stag hunts together. Coordinating with your future self, in this framework, requires the same resource as coordinating with other agents similar to you along the relevant axes right now (or across time).

Steam might be thought of as a scalar quantity assigned to some action or plan, and which changes depending on the actions being executed or not. Steam is necessarily distinct from probability or utility because if you start making predictions about your own future actions, your belief estimation process (assuming it has some influence on your actions) has a fixed point in predicting the action will not be carried out, and then intervening to prevent the action from being carried out. There is also another fixed point in which the agent is maximally confident it will do something, and then just doing it, but it can't be persuaded to not do it.[1]

As stated in the original post, steam helps solve the procrastination paradox. I have the intuition that one can relate the (change) in steam/utility/probability to each other: Assuming utility is high,

- If actions/plans are performed, their steam increases

- If actions/plans are not performed, steam decreases

- If steam decreases slowly and actions/plans are executed, increase steam

- If steam decreases quickly and actions/plans are not executed, decrease steam even more quickly(?)

- If actions/plans are completed, reduce steam

If utility decreases a lot, steam only decreases a bit (hence things like sunk costs). Differential equations look particularly useful for talking more rigorously about this kind of thing.

Steam might also be related to how cognition on a particular topic gets started; to avoid the infinite regress problem of deciding what to think about, and deciding how to think about what to think about, and so on.

For each "category" of thought we have some steam which is adjusted as we observe our own previous thoughts, beliefs changing and values being expressed. So we don't just think the thoughts that are highest utility to think in expectation, we think the thoughts that are highest in steam, where steam is allocated depending on the change in probability and utility.

Steam or "believing in" seem to be bound up with abstraction à la teleosemantics: When thinking or acting, steam decides where thoughts and actions are directed to create higher clarity on symbolic constructs or plans. I'm especially thinking of the email-writing example: There is a vague notion of "I will write the email", into which cognitive effort needs to be invested to crystallize the purpose, followed by the further effort to actually flesh out all the details.

This is not quite true, a better model would be that the agent discontinuously switches if the badness of the prediction being wrong is outweighed by the badness of not doing the thing. ↩︎

↑ comment by Mateusz Bagiński (mateusz-baginski) · 2024-02-09T16:52:44.580Z · LW(p) · GW(p)

I think this comment is great and worthy of being expanded into a post.

comment by AnnaSalamon · 2024-02-08T08:21:37.706Z · LW(p) · GW(p)

A point I didn’t get to very clearly in the OP, that I’ll throw into the comments:

When shared endeavors are complicated, it often makes sense for them to coordinate internally via a shared set of ~“beliefs”, for much the same reason that organisms acquire beliefs in the first place (rather than simply learning lots of stimulus-response patterns or something).

This sometimes makes it useful for various collaborators in a project to act on a common set of “as if beliefs,” that are not their own individual beliefs.

I gave an example of this in the OP:

- If my various timeslices are collaborating in writing a single email, it’s useful to somehow hold in mind, as a target, a single coherent notion of how I want to trade off between quality-of-email and time-cost-of-writing. Otherwise I leave value on the table.

The above was an example within me, across my subagents. But there are also examples held across sets of people, e.g. how much a given project needs money vs insights on problem X vs data on puzzle Y, and what the cruxes are that’ll let us update about that, and so on.

A “believing in” is basically a set of ~beliefs that some portion of your effort-or-other-resources is invested in taking as a premise, that usually differ from your base-level beliefs.

(Except, sometimes people coordinate more easily via things that’re more like goals or deontologies or whatever, and English uses the phrase “believing in” for marking investment in a set of ~beliefs, or in a set of ~goals, or in a set of ~deontologies.)

comment by Mikhail Samin (mikhail-samin) · 2024-02-10T20:14:52.424Z · LW(p) · GW(p)

Interesting. My native language has the same “believe [something is true]”/“believe in [something]”, though people don’t say “I believe in [an idea]” very often; and what you describe is pretty different from how this feels from the inside. I can’t imagine listing something of value when I’m asked to give examples of my beliefs.

I think when I say “I believe in you”, it doesn’t have the connotation of “I think it’s good that you exist”/“investing resources in what you’re doing is good”/etc.; it feels like “I believe you will succeed at what you’re aiming for, by default, on the current trajectory”, and it doesn’t feel to be related to the notion of it making sense to support them or invest additional resources into.

It feels a lot more like “if I were to bet on you succeeding, that would’ve been a good bet”, as a way to communicate my belief in their chances of success. I think it’s similar for projects.

Generally, “I believe in” is often more of “I think it is true/good/will succeed” for me, without a suggestion of willingness to additionally help or support in some way, and without the notion of additional investment in it being a good thing necessarily. (It might also serve to communicate a common value, but I don’t recall using it this way myself.)

“I believe in god” parses as “I believe god exists”, though maybe there’s a bit of a disconnection due to people being used to saying “I believe in god” to ID, to give the answer a teacher expects, etc., and believing in that belief, usually without it being connected to experience-anticipation.

I imagine “believe in” is some combination of something being a part of the belief system and a shorthand for a specific thing that might be valuable to communicate, in the current context, about beliefs or values.

Separately from what these words are used for, there’s something similar to some of what you’re talking about happening in the mind, but for me, it seems entirely disconnected from the notion of believing

comment by kave · 2024-02-08T20:25:16.304Z · LW(p) · GW(p)

I felt a couple of times that this is trying to separate a combined thing into two concepts. But possibly it is in fact two things that feed into each other in a loop. For example, in your Avatar example, I hear Katara as pretty clearly making an epistemic/prediction claim. But she is also inviting me in to believing in and helping Aang. Which makes sense: the fact that (she thinks) Aang is capable of saving the world is pretty relevant to whether I should believe in him.

And similarly, a lot of your post touches on how believing in things makes them more belief-worthy (i.e. probable)

comment by Jacob G-W (g-w1) · 2024-02-08T16:53:39.786Z · LW(p) · GW(p)

Could you not just replace "I believe in" with "I value"? What would be different about the meaning? If I value something, I would also invest in it. What am I not seeing?

Replies from: Benito, g-w1↑ comment by Ben Pace (Benito) · 2024-02-09T02:34:13.083Z · LW(p) · GW(p)

"I believe in this team, and believe in our ability to execute on our goals" does not naturally translate into "I value this team, and value our ability to execute on our goals".

My read is that the former communicates that you'd like to invest really hard in the assumption that this team and its ability to execute are extremely high, and invest in efforts to realize this outcome; and my read is that the latter is just stating that it's currently high and that's good.

Replies from: wslafleur↑ comment by wslafleur · 2024-02-11T20:40:19.321Z · LW(p) · GW(p)

Surely it's obvious that these are all examples of what we in the business call a figure of speech. When somebody says "I believe in you!" they're offering reassurance by expressing confidence in you, as a person, or your abilities.

This is covered under most definitions of belief as:

2. Trust, faith, or confidence in someone or something. (a la Oxford Languages)

↑ comment by Jacob G-W (g-w1) · 2024-02-08T17:00:08.436Z · LW(p) · GW(p)

Hmm the meanings are not perfectly identical. For some things, like "believe in the environment" vs "I value the environment" they pretty much are.

But for things like "I believe in you," it does not mean the same thing as "I value you." It implies "I value you," but it means something more. It is meant to signal something to the other person.

comment by Chris_Leong · 2024-02-08T07:37:59.921Z · LW(p) · GW(p)

This ties in nicely with Wittgenstein’s notion of language games. TLDR: Look at the role the phrase serves, rather than the exact words.

Replies from: AnnaSalamon, CstineSublime↑ comment by AnnaSalamon · 2024-02-08T08:47:36.925Z · LW(p) · GW(p)

So, I agree there's something in common -- Wittgenstein is interested in "language games" that have function without having literal truth-about-predictions, and "believing in"s are games played with language that have function and that do not map onto literal truth-about-predictions. And I appreciate the link in to the literature.

The main difference between what I'm going for here, and at least this summary of Wittgenstein (I haven't read Wittgenstein and may well be shortchanging him and you) is that I'm trying to argue that "believing in"s pay a specific kind of rent -- they endorse particular projects capable of taking investment, they claim the speaker will themself invest resources in that project, they predict that that project will yield ROI.

Like: anticipations (wordless expectations, that lead to surprise / not-surprise) are a thing animals do by default, that works pretty well and doesn't get all that buggy. Humans expand on this by allowing sentences such as "objects in Earth's gravity accelerate at a rate of 9.8m/s^2," which... pays rent in anticipated experience in a way that "Wulky Wilkinsen is a post-utopian" doesn't, in Eliezer's example [LW · GW]. I'm hoping to cleave off, here, a different set of sentences that are also not like "Wulky Wilkinsen is a post-utopian" and that pay a different and well-defined kind of rent.

Replies from: barnaby-crook↑ comment by Paradiddle (barnaby-crook) · 2024-02-08T15:23:14.148Z · LW(p) · GW(p)

I actually think what you are going for is closer to JL Austin's notion of an illocutionary act than anything in Wittgenstein, though as you say, it is an analysis of a particular token of the type ("believing in"), not an analysis of the type. Quoting Wikipedia:

"According to Austin's original exposition in How to Do Things With Words, an illocutionary act is an act:

- (1) for the performance of which I must make it clear to some other person that the act is performed (Austin speaks of the 'securing of uptake'), and

- (2) the performance of which involves the production of what Austin calls 'conventional consequences' as, e.g., rights, commitments, or obligations (Austin 1975, 116f., 121, 139)."

Your model of "believing in" is essentially an unpacking of the "conventional consequences" produced by using the locution in various contexts. I think it is a good unpacking, too!

I do think that some of the contrasts you draw (belief vs. believing in) would work equally well (and with more generality) as contrasts between beliefs and illocutionary acts, though.

↑ comment by CstineSublime · 2024-02-08T08:19:20.428Z · LW(p) · GW(p)

I am very surprised that a cursory ctrl+f of the Anscombe translation of Wittgenstein's Philosophical Investigations, while containing a few tracts discussing the use of the phrase "I believe", doesn't contain a single instance of "I believe in".

One instance of his discussion of "I believe" in Part 2, section x explores the phrase, wondering how it distinguishes itself from merely stating a given hypothesis. Analogous to prefixing a statement with "I say..." such as "I say it will rain today" (which recalls the Tractatus distinguishing the expression of a proposition from the proposition itself):

"At bottom, when I say 'I believe . . .' I am describing my own state of mind—but this description is indirectly an assertion of the fact believed."—As in certain circumstances I describe a photograph in order to describe the thing it is a photograph of. But then I must also be able to say that the photograph is a good one. So here too: "I believe it's raining and my belief is reliable, so I have confidence in it."—In that case my belief would be a kind of sense-impression.

One can mistrust one's own senses, but not one's own belief.

If there were a verb meaning 'to believe falsely', it would not have any significant first person present indicative.

comment by Straw Vulcan · 2024-03-04T16:14:55.443Z · LW(p) · GW(p)

I found the bit at the end, contrasting the Bayesian truth-seeking posture with the believing-in and fighting-to-change-something posture, helpful. Interesting that you've seen lots of LessWrongers just passively watch to see if something will go well instead of fighting for it because of the Bayesian posture.

It seems to me there's an important analog to descriptive statistics versus causal inference. The probability of X given Y is actually completely different from the probability of X given that I make Y happen. You can convert between them in aggregate sometimes with the do-calculus, so there is a link, but the one can be very different from the other in any given case. A thing can have really low probability in the base rate, or "if I do nothing about it" and yet have much higher probability if we rally around it to effect a change.
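To make the gap between "X given Y" and "X given that I make Y happen" concrete, here is a minimal sketch. It assumes a hypothetical toy world with one hidden background factor Z that influences both Y and X; all the probabilities are made-up numbers for illustration, and the intervention is computed with the standard back-door adjustment (averaging over Z at its base rate rather than at its rate among cases where Y happened on its own).

```python
# Hypothetical toy model (all numbers are illustrative assumptions):
# Z = hidden background factor, Y = event, X = outcome we care about.
P_Z = {1: 0.5, 0: 0.5}                      # P(Z = z)
P_Y1_GIVEN_Z = {1: 0.9, 0: 0.1}             # P(Y = 1 | Z = z)
P_X1_GIVEN_YZ = {                           # P(X = 1 | Y = y, Z = z)
    (1, 1): 0.9, (1, 0): 0.3,
    (0, 1): 0.6, (0, 0): 0.1,
}


def p_x_given_y(y):
    """Observational P(X=1 | Y=y): Z is weighted by how often it co-occurs with Y=y."""
    joint = {
        z: P_Z[z] * (P_Y1_GIVEN_Z[z] if y == 1 else 1 - P_Y1_GIVEN_Z[z])
        for z in P_Z
    }
    p_y = sum(joint.values())
    return sum(P_X1_GIVEN_YZ[(y, z)] * joint[z] / p_y for z in P_Z)


def p_x_given_do_y(y):
    """Interventional P(X=1 | do(Y=y)): forcing Y leaves Z at its base rate."""
    return sum(P_X1_GIVEN_YZ[(y, z)] * P_Z[z] for z in P_Z)


observed = p_x_given_y(1)       # what a passive observer would estimate
intervened = p_x_given_do_y(1)  # what happens if we make Y happen ourselves
```

In this particular toy world the observational number comes out higher than the interventional one, because Y tends to show up on its own when Z is already favorable. Different made-up numbers could push the gap the other way, which is the situation the comment describes.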

comment by Sinclair Chen (sinclair-chen) · 2024-02-21T18:29:08.895Z · LW(p) · GW(p)

what would the math for aggregating different "believing in"s across people in an incentive aligned, accurate way look like?

Replies from: sinclair-chen↑ comment by Sinclair Chen (sinclair-chen) · 2024-02-22T14:12:39.891Z · LW(p) · GW(p)

after thinking and researching for not-long, I think there may be nonzero prior art in gift economics, blockchain reputation systems (belief in people); public goods funding (like quadratic funding); and viewpoints.xyz or vTaiwan (polling platform that k-means "coalitions" and rewards people who "build bridges"). It in general feels like the kind of math problem that the RadicalXChange people would be interested in.

I am not impressed with the current versions of these technologies that are actually "in production."

I think the field is still very experimental. I think it's so pre-paradigm that art / philosophy / anthropology / "traditional-ways-of-knowing" are probably ahead of us on this.
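For readers unfamiliar with quadratic funding, mentioned above as possible prior art, here is a minimal sketch of its usual textbook rule: a project's total funding is the square of the sum of the square roots of individual contributions, and the matching pool covers the difference. The function names and the sample contributions are hypothetical, and this is not a claim about how any production system implements it.

```python
import math


def quadratic_funding_total(contributions):
    """Total funding under the textbook rule: (sum of sqrt(c_i)) squared."""
    return sum(math.sqrt(c) for c in contributions) ** 2


def matching_subsidy(contributions):
    """Amount the matching pool adds on top of what contributors paid directly."""
    return quadratic_funding_total(contributions) - sum(contributions)


# Same 4 units raised, but from four backers versus one backer (made-up numbers).
many_small = matching_subsidy([1, 1, 1, 1])
one_large = matching_subsidy([4])
```

The rule rewards breadth of support: four people each putting in 1 unit attract a larger match than one person putting in 4, which is one candidate formalization of many people "believing in" the same thing.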

comment by M Ls (m-ls) · 2024-02-11T22:01:40.478Z · LW(p) · GW(p)

Moss, Jessica, and Whitney Schwab. “The Birth of Belief.” Journal of the History of Philosophy 57, no. 1 (2019): 1–32. https://doi.org/10.1353/hph.2019.0000.

That covers the ancient invention of what we later in English call 'belief'. Belief/believing as an English word was used by Latin-speaking Christians to explain it to warrior culture elites who wanted to be Roman empire too dude. It meant to 'hold dear'. Use of it (particularly by analytic philosophy streams centuries later ignoring its origins) to mean 'proposition that' is a subset in a long history. Your "belief as a bet" is a subset of that propositional use.

Belief/believing as a mental practice (it is taught) is one of the biggest mistakes we humans have ever made. Go Pyrrho of Elis! A better word would be to turn world into a gerund. To world, to live.

"Believing/belief" doubles down on intensitional states of mind (among others). This is not required to live, and it tends to stamp down on the inquiring mind. I.E. it goes doctrinal and world-builds rather than worlds in a healthy way.

"I want to believe" is unhealthy but it has captured those who just want to say I want to live.

comment by David Jilk (david-jilk) · 2024-02-10T20:41:31.844Z · LW(p) · GW(p)

I've done some similar analysis on this question myself in the past, and I am running a long-term N=1 experiment by opting not to take the attitude of belief toward anything at all. Substituting words like prefer, anticipate, suspect, has worked just fine for me and removes the commitment and brittleness of thought associated with holding beliefs.

Also in looking into these questions, I learned that other languages do not have in one word the same set of disparate meanings (polysemy) of our word belief. In particular, the way we use it in American English to "hedge" (i.e., meaning "I think but I am not sure") is not a typical usage and my recollection (possibly flawed) is that it isn't in British English either.

comment by Gunnar_Zarncke · 2024-02-09T17:14:26.678Z · LW(p) · GW(p)

One should prefer to “believe in” good things rather than bad things, all else equal.

I think this explains what the word "should" means. When people say: "I/you should do X," they mean that they "believe in" X. Saying "should" is stronger than just professing believing in X. It means that there is an expectation that the other does too. The word "should" functions as a generator of common knowledge of desirable outcomes.

comment by Ben Pace (Benito) · 2024-02-10T20:58:17.671Z · LW(p) · GW(p)

Curated. This is a thoughtful and clearly written post making an effort to capture a part of human cognition that we've previously not done a good job of capturing in earlier discussions of human rationality.

Insofar as this notion holds together, I'd like to see more discussion of questions like "What are the rules for coming to believe in something?" and "How does one come to stop believing in something?" and "How can you wrongly believe in something?".

comment by ursusminimus · 2024-02-10T20:58:01.353Z · LW(p) · GW(p)

I was not aware of this distinction before, and I think it is correct. However, in practice I think (believe?) this can't be discussed in isolation without considering simulacrum levels. My impression is that often people don't make object-level statements when they say "I believe in", but rather want to associate themselves with something.

Maybe a rough example:

- "I believe that you will succeed" - object level, prediction about high probability of desired outcome

- "I believe in you" (Simulacrum Level 1) - as discussed here, "I am willing to support you/invest resources into you"

- "I believe in you" (Simulacrum Level 2) - I want to appear supportive to be considered a good friend by you, regardless of my willingness to actually invest resources if you demand them at some point.

- "I believe in you" (Simulacrum Level 3) - I say this because this statement is in the cluster of things that the category "good friend" is correlated with

- "I believe in you" (Simulacrum Level 4) - I say this because this statement is in the cluster of things that I believe the high status group thinks the category "good friend" is correlated with

Now that I think about it the Simulacrum Levels don't quite match with the definition, but I hope the general idea is clear. Maybe it helps to expand the framework.

comment by JenniferRM · 2024-02-10T20:09:38.381Z · LW(p) · GW(p)

I've written many essays I never published, and one of the reasons for not publishing them is that they get hung up on "proving a side lemma", and one of the side lemmas I ran into was almost exactly this distinction, except I used different terminology.

"Believing that X" is a verbal construction that, in English, can (mostly) only take a sentence in place of X, and sentences (unlike noun phrases and tribes and other such entities) can always be analyzed according to a correspondence theory of truth.

So what you are referring to as "(unmarked) believing in" is what I called "believing that".

((This links naturally into philosophy of language stuff across multiple western languages...

English: I believe that he's tall.

Spanish: Creo que es alto.

German: Ich glaube, dass er groß ist.

Russian: Я верю, что он высокий.

))

In English, "Believing in Y" is a verbal construction with much much more linguistic flexibility, which lets it do what you are referring to as "(quoted) 'believing in'", I think?

With my version, I can say, in conversation, without having to invoke air quotes, or anything complicated: "I think it might be true that you believe in Thor, but I don't think you believe that Thor casts shadows when he stands in the light of the sun."

There is a subtlety of English, because "I believe that Sherlock Holmes casts shadows when he stands in the light of the sun" is basically true for anyone who has (1) heard of Sherlock, (2) understands how sunlight works, and (3) is "believing" in a hypothetical/fictional mode of belief similar to the mode of belief we invoke when we do math, where we are still applying a correspondence theory of truth, but we are checking correspondence between ideas (rather than between an idea and our observationally grounded best guess about the operation and contents of the material world).

The way English marks "dropping out of (implicit) fictional mode" is with the word "actual".

So you say "I don't believe that Sherlock Holmes actually casts shadows when he stands in the light of the sun because I don't believe that Sherlock Holmes actually exists in the material world."

Sometimes, sloppily, this could be rendered "I don't believe that Sherlock Holmes actually casts shadows when he stands in the light of the sun because I don't actually believe in Sherlock Holmes."

(This last sentence would go best with low brow vocal intonation, and maybe a swear word, depending on the audience, because it's trying to say, on a protocol level, please be real with me right now and yet also please don't fall into powertalk. (There's a whole other way of talking Venkat missed out on, which is how Philosophers (and drunk commissioned officers) talk to each other.))

comment by Thomas Kersten (thomas-kersten) · 2024-10-13T16:20:23.109Z · LW(p) · GW(p)

It seems to me Spencer’s confusion is due to the fact that people often do a lot of “believing in” around what they’ll get done in what time period (wisely; they are trying to coordinate many bits of themselves into a coherent set of actions)

Those "bits" you talk about remind me of the concept of parts from the book "No Bad Parts" by Richard C. Schwartz. In his work, Schwartz highlights how different aspects of ourselves (called parts) often want our attention and influence our behavior. He shows how to get into contact with these parts and shift from being a neglectful into a caring parent figure for them. This parent figure is a part called "Self" which helps coordinate all the needs and wants of all parts within the larger system.

The idea of "believing in" resonates deeply with me, especially in relation to the parts work I've been practicing. Today, I realized that I "believe in" openness, curiosity, and connectedness. Meaning, I thought during my meditation that it is effective and efficient to invest my resources by aligning my behavior according to these values. Then I read your article about "believe in" and it struck the same tune, reinforcing my reflections. Granted, I am not sure how long this "believe in" will last. But right now it seems obvious to me that my current goals will flourish when I keep investing in those "believe in"s. This also shows that, as far as I know, my parts are in harmony with them and are likely to follow suit.

One and a half years prior, I struggled to even understand the meaning of having values like that. I would never have been able to make such sweeping statements about my values without the help of the meditation practices and the concepts of parts, unblending, burdens, and Self, as they are outlined in "No Bad Parts". At the time, I was not able to process the emotional information my parts had to offer, nor did I know they needed me to help them make sense of it.

comment by Review Bot · 2024-02-13T22:46:19.453Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2025. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by strigiformes (cary-jin) · 2024-02-11T09:29:16.295Z · LW(p) · GW(p)

I think Eliezer made a similar distinction before in the sequences: Moore's Paradox [LW · GW].

comment by jeffreycaruso · 2024-02-11T02:45:44.325Z · LW(p) · GW(p)

I've never seen prediction used in reference to a belief before. I'm curious to hear how you arrived at the conclusion that a belief is a prediction.

For me, a belief is something that I cannot prove but I suspect is true whereas a prediction is something that is based in research. Philip Tetlock's book Superforecasting is a good example. Belief has little to nothing to do with making accurate forecasts whereas confronting one's biases and conducting deep research are requirements.

I think it's interesting how the word "belief" can simultaneously reflect certainty and uncertainty depending upon how it's used in a sentence. For example, if I ask a project manager when her report will be finished, and she responds "I believe it will be finished on Tuesday", I'd press the issue because that suggests to me that it might not be finished on Tuesday.

On the other hand, if I ask my religious brother-in-law if he believes in God, he will answer yes with absolute certainty.

My difficulty probably lies in part with my own habits around when and how I'm accustomed to using the word "believe" versus your own.

Replies from: AnnaSalamon↑ comment by AnnaSalamon · 2024-02-11T03:30:26.591Z · LW(p) · GW(p)

I'm curious to hear how you arrived at the conclusion that a belief is a prediction.

I got this in part from Eliezer's post Make your beliefs pay rent in anticipated experiences [LW · GW]. IMO, this premise (that beliefs should try to be predictions, and should try to be accurate predictions) is one of the cornerstones that LessWrong has been based on.

Replies from: jeffreycaruso↑ comment by jeffreycaruso · 2024-02-11T04:54:57.959Z · LW(p) · GW(p)

I just read the post that you linked to. He used the word "prediction" one time in the entire post so I'm having trouble understanding how that was meant to be an answer to my question. Same with that it's a cornerstone of LessWrong, which, for me, is like asking a Christian why they believe in God, and they answer, because the Bible tells me so.

Is a belief a prediction?

If yes, and a prediction is an act of forecasting, then there must be a way to know if your prediction was correct or incorrect.

Therefore, maybe one requirement for a belief is that it's testable, which would eliminate all of our beliefs in things unseen.

Maybe there are too many meanings assigned to just that one word - belief. Perhaps instead of it being a verb, it should be a preposition attached to a noun; i.e., a religious belief, a financial belief, etc. Then I could see a class of beliefs that were predictive versus a different class of beliefs that were matters of faith.

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-02-12T06:55:52.176Z · LW(p) · GW(p)

While he doesn't explicitly use the word "prediction" that much in the post, he does talk about "anticipated experiences", which around here is taken to be synonymous with "predicted experiences".

comment by PoignardAzur · 2024-02-11T00:26:04.764Z · LW(p) · GW(p)

The point about task completion times feels especially insightful. I think I'll need to go back to it a few times to process it.

comment by Jon Rowlands (jon-rowlands) · 2024-02-10T21:51:12.342Z · LW(p) · GW(p)

I've come to define a belief as a self-reinforcing opinion. That leaves open how it is reinforced, e.g. by testing against reality in the case of a prediction, or by confirmation bias in the case of a delusion.

Replies from: andrew-burns↑ comment by Andrew Burns (andrew-burns) · 2024-02-11T00:38:10.800Z · LW(p) · GW(p)

I don't know that an opinion that conforms to reality is self-reinforcing. It is reinforced by reality. The presence of a building on a map is reinforced by the continued existence of the building in real life.

comment by Raemon · 2024-02-09T15:50:21.501Z · LW(p) · GW(p)

In my case, I learned the skill of separating my predictions from my “believing in”s (or, as I called it at the time, my “targets”) partly the hard way – by about 20 hours of practicing difficult games in which I could try to keep acting from the target of winning despite knowing winning was fairly unlikely, until my “ability to take X as a target, and fully try toward X” decoupled from my prediction that I would hit that target.

what were the games here? I’d guess naive calibration games aren’t sufficient.

comment by Ben Pace (Benito) · 2024-07-18T07:23:49.742Z · LW(p) · GW(p)

Oh so that’s why they say “Do you believe in God?”

Rather than “Do you believe that God exists?”.

comment by TekhneMakre · 2024-02-08T19:18:39.682Z · LW(p) · GW(p)

https://www.lesswrong.com/posts/KktH8Q94eK3xZABNy/hope-and-false-hope [LW · GW]

comment by Robert Feinstein (robert-feinstein) · 2024-03-12T15:04:33.751Z · LW(p) · GW(p)

Not mine, but this is what I think is going on when people say they believe in something

https://everythingstudies.com/2018/02/23/beliefs-as-endorsements/

comment by Wuschel Schulz (wuschel-schulz) · 2024-02-14T14:34:17.886Z · LW(p) · GW(p)

Under an Active Inference perspective, it is hardly surprising that we use the same concepts for [Expecting something to happen] and [Trying to steer towards something happening], as they are the same thing happening in our brain.

I don't know enough about this to know whether the active inference paradigm predicts that this similarity on a neuronal level plays out as humans using similar language to describe the two phenomena, but if it does, the common use of this "believing in" concept might count as evidence in its favour.

Replies from: dkhyland↑ comment by David Hyland (dkhyland) · 2024-03-06T12:00:36.032Z · LW(p) · GW(p)

I think a better active-inference-inspired perspective that fits well with the distinction Anna is trying to make here is that of representing preferences as probability distributions over state/observation trajectories, the idea being that one assigns high "belief in" probabilities to trajectories that are more desirable. This "preference distribution" is distinct from the agent's "prediction distribution", which tries to anticipate and explain outcomes as accurately as possible. Active Inference is then cast as the process of minimising the KL divergence between these two distributions.

A couple of pointers which articulate this idea very nicely in different contexts:

- Action and Perception as Divergence Minimization - https://arxiv.org/abs/2009.01791

- Whence the Expected Free Energy - https://arxiv.org/abs/2004.08128

- Alex Alemi's brilliant talk at NeurIPS - https://nips.cc/virtual/2023/73986
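The divergence-minimization framing described above can be sketched in a few lines. This is only an illustration under assumptions: the outcome categories, the candidate actions, and every probability are hypothetical, and the direction of the KL divergence (prediction relative to preference) is one choice among several that the linked papers discuss.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same outcomes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Outcomes: (good email sent, sloppy email sent, no email sent).
# "Preference distribution": how much the agent "believes in" each outcome.
preference = [0.90, 0.08, 0.02]

# "Prediction distributions": what the agent honestly expects under each action.
predictions = {
    "draft carefully": [0.60, 0.10, 0.30],
    "dash it off": [0.20, 0.70, 0.10],
    "do nothing": [0.01, 0.01, 0.98],
}


def best_action(predictions, preference):
    """Pick the action whose predicted outcomes sit closest to the preferences."""
    return min(predictions, key=lambda a: kl_divergence(predictions[a], preference))


chosen = best_action(predictions, preference)
```

Note that the two distributions stay separate throughout: the agent never has to pretend the good email is likely in order to steer toward it, which matches the distinction between predicting and "believing in".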

comment by Michael Roe (michael-roe) · 2024-02-11T23:19:42.220Z · LW(p) · GW(p)

There is believing in some moral value, and there is believing in some factual state of the world. Moral judgements aren't the same as facts, but it would seem that you can have beliefs about both.

Asking someone to write down something they believe is somewhat underspecified.

Also, saying "I believe X" rather than just "X" suggests either (a) you wish to signal some doubt about whether X actually is true or (b) X is the kind of thing where believing it is highly speaker-specific, e.g. a moral value.

comment by wslafleur · 2024-02-11T21:00:13.120Z · LW(p) · GW(p)

This seems like a bunch of noise to me. It's not that difficult to distinguish between truth claims and a figure of speech expressing confidence in a subject. Doing so 'deceptively', consciously or otherwise, is just an example of virtue-signaling.

comment by DusanDNesic · 2024-02-11T09:50:16.856Z · LW(p) · GW(p)

Great post Anna, thanks for writing - it makes for good thinking.

It reminds me of The Use and Abuse of Witchdoctors for Life by Sam[]zdat, in the Uruk Series (which I highly recommend). To summarize, our modern way of thinking denies us the benefits of being able to rally around ideas that would get us to better equilibria. By looking at the priest calling for spending time in devoted prayer with other community members and asking, "What for?" we end up losing the benefits of community, quiet time, and meditation. While we are closer to truth (in territory sense), we lost something, and it takes conscious effort to realize it is missing and replace it. It is describing the community version of the local problem of a LessWronger not committing to a friendship because it is not "true" - in marginal cases, believing in it can make it true!

(I recommend reading the whole series, or at least the article above, but the example it gives is "Gri-gri." "In 2012, the recipe for gri-gri was revealed to an elder in a dream. If you ingest it and follow certain ritual commandments, then bullets cannot harm you." - before reading the article, think about how belief in elders helps with fighting neighboring well-armed villages)

comment by David Gretzschel (david-gretzschel) · 2024-02-11T08:36:39.804Z · LW(p) · GW(p)

tl;dr: Words are hard and people are horrible at being precise with them, even when doing or talking about math.

[epistemic status: maybe I'd want an actual textbook here but good enough]

Looking into this, I have been grilling ChatGPT about what exactly a "belief" is in Bayesian Probability. According to it (from what I could gather), belief is a specific kind of probability. So a belief is just a number between 0 and 1 representing a probability, but we use the label "belief" in specific contexts only. A prediction specifically is the pair of (statement about the future, belief). So a belief is not a prediction. Except when it is. I ask for examples and ChatGPT also loves going pars pro toto and belief is used synonymously with prediction a lot. And I've picked up the habit myself from reading here, before I ever learnt about the math at calculation level.

Personally, I dislike pars pro toto as I find that it makes things very confusing. So I will stop using the term "belief" to be synonymous with "prediction" from now on. Except when I won't, because I suspect that it's kind of convenient and sounds kind of neat.

source: https://chat.openai.com/share/03a62839-3efc-483d-bebd-4cefa9064dfd

comment by FallibleDan · 2024-02-11T05:30:25.455Z · LW(p) · GW(p)

[Edited regarding distinguishing “holding dear” and “being persuaded” (and “predicting”)]

Believe has two main strains of meaning that are adjacent but have opposite emotional valences. One has to do with being attracted, one has to do with being levered or persuaded.

The sense of "accept as true; credit upon the grounds of authority or testimony without complete demonstration,” is from early 14c. (persuaded strain)

The sense of "be persuaded of the truth of" (a doctrine, system, religion, etc.) is from mid-13c.; (persuaded strain)

The sense of "be of the opinion, think" is from c. 1300 (bivalent: could be either d/t holding dear or being persuaded)

Before then, only the “hold dear” strain is seen:

- In Middle English it was bileven

- In Old English it was belyfan "to have faith or confidence" (in a person).

Even earlier forms were the Ingvaeonic (also known as North Sea Germanic, which were dialects from the 5th century) geleafa (Mercian), gelefa (Northumbrian), and gelyfan (West Saxon)

This in turn was from Proto-Germanic *ga-laubjan "hold dear (or valuable, or satisfactory), to love"

The Proto-Germanic came from the Proto-Indo-European root *leubh- "to care, desire, love"

[source: Etymonline]

The second broad category of the senses of believe, being persuaded, is perhaps due to English speakers being heavily exposed to it being used to translate the biblical Greek pisteuo which meant “to think to be true; to be persuaded of; to credit or place confidence in (of the thing believed); to credit or have confidence (in a moral or religious reference).”

As mentioned, this sense of believe was already present in the language by the 13c., but the use of this sense was vastly increased following the publication and wide use of the King James Bible in 1611.

(Note: Tyndale’s New Testament of 1526 relied heavily on Luther’s Bible of 1522).

Pisteuo and its noun pistis are from PIE *bʰéydʰtis, equivalent to πείθω (peíthō, “I persuade”) + -τις (-tis).

So our Anglo-Saxon word bileven came from PIE “to hold dear, regard as satisfactory, love” but is highly colored by its later being used to convey the sense of pisteuo, from the PIE “persuaded (to be true)”.

tl;dr: There are two broad senses of believe which should not be confused:

- holding dear, valuing, regarding as satisfactory (being attracted, the original Germanic sense)

- being persuaded (being persuaded or leveraged, colored by Greek pisteuo)

BTW, perhaps the color of leverage or persuasion is what OP is detecting when they are exploring whether “believe in” has anything to do with “being relied upon for prediction.”

PS: “Epistemology” has a different etymology than pistis. Greek epistasthai "know how to do, understand," literally "overstand," from epi "over, near" (see epi-) + histasthai "to stand," from PIE root *sta- "to stand, make or be firm."

PPS: A hostage taker’s blocked and frozen humanity can be instantly thawed by a negotiator empathizing and sincerely saying, “I BELIEVE you.” This is evidence of the hostage taker responding to someone holding them dear, not to the negotiator using leverage.

PPPS: Some people Believe in God, i.e. Love God, and some even have faith in God, even though they don’t have proof and may even doubt God exists. Again, they are attracted by God, even though they aren’t persuaded he exists.

Replies from: andrew-burns, FallibleDan

↑ comment by Andrew Burns (andrew-burns) · 2024-02-11T07:12:32.594Z · LW(p) · GW(p)

"To believe" in German is glauben, also from Proto-Germanic. Was this meaning also colored by Greek?

Replies from: FallibleDan

↑ comment by FallibleDan · 2024-02-11T15:43:54.937Z · LW(p) · GW(p)

I am not familiar with the course of the many adjacent rivulets of meanings of “glauben” in German since Proto-Germanic, but it would not surprise me if the influence of translating “pisteuo” into “glauben” had a similar effect on those rivulets of meaning as it has had in English.

By the way, German scholarship in Koine Greek was rudimentary in the early 1520s when Luther and his team were translating the Bible into a compromise dialect of German (indeed, Luther’s Bible had the effect of greatly influencing and standardizing what became modern German).

↑ comment by FallibleDan · 2024-02-11T05:46:06.153Z · LW(p) · GW(p)

The last PPPS reminds me of the joke:

In 1905, in Listowel, a folklorist asked an elderly lady if she believed in “the Good People” (fairies & leprechauns).

“Well, no I don’t. But it doesn’t seem to matter, they’re there just the same.”

This is a joke, but it shows that although the lady doesn’t believe or love the idea of the Good People existing, yet still she is persuaded they do.