ELK Thought Dump

post by abramdemski · 2022-02-28T18:46:08.611Z · LW · GW · 18 comments

Contents

- Semantics
- Semantics vs Truth
- Radical Interpreter
- First-Person vs Third-Person Perspectives
- Important puzzle: what does it mean to usefully translate beliefs from one first-person perspective into another?
- Objective Correspondence
- Computationalism
- Counterfactual Correspondence
- 1. ELK proposals based on counterfactual correspondence?
- 1.1 Physical Causal Match
- 1.2 Internal Agreement
- 2. Truth Tracking
- How can we train this model to report its latent knowledge of off-screen events?
- Platonism & Inferentialism

I recently spent a couple of weeks working on ELK. What follows is a somewhat disorganized thought-dump, with the post-hoc theme of relating ELK to some common ideas in philosophy.

I'll assume familiarity with the ELK document. You can read quick summaries on some other reaction posts, so I won't try to recap here.

Everything here should be read as "threads for further research" -- many of these sections could be long posts in themselves if I tried to fully formalize them, or even to unpack all my thoughts so far.

I've numbered sections which contain semi-concrete ELK proposals. As a result, the section numbers will be weird -- for example, "2. Truth Tracking" is not the second section.

Semantics

The problem at the heart of ELK is that of semantics, that is, ascribing meaning.

The leading theory (for LessWrongers, at least) is the map/territory analogy, according to which beliefs are like a map, and the world is like a territory. A map has a "scale" and a "key" which together tell us how to relate the map and the territory. Likewise, beliefs are thought to have a "correspondence" which tells us how to relate belief to reality. In academic philosophy, this is known as the correspondence theory of truth (rather than "the map territory theory of truth").

LessWrong is pretty big on the map/territory distinction, but (I think) has historically been far more interested in applying the idea than developing or analyzing it.

While map/territory semantics seems pretty obvious, it gets weird when you start looking at the details. This has caused some philosophers to abandon it, and develop alternatives (some of which are quite interesting to me). Alex Flint has an extensive discussion of problems with some possible theories of truth. This post is my take on the issue.

Semantics vs Truth

Sometimes, the correspondence theory of truth is rendered via Tarski's T-schema:

True("The moon is made of blue cheese.") $\leftrightarrow$ The moon is made of blue cheese.

There are other replies that can be given, but my reply is as follows. I am interested in "truth" only as a way of getting at meaning/semantics. A definition of truth should shed light on what it means for something to be true; why it is considered true. It should help me cope with cases which would otherwise be unclear.

In other words, the T-schema tells us nothing about the nature of the correspondence; it only says that the predicate "true" names the correspondence. When I say something is true, I am (according to the correspondence theory) asserting that there is a correspondence; but Tarski's T-schema offers me no help in unpacking the nature of this correspondence further.

The T-schema is very important in the subfield of logic dealing with formal theories of truth. The idea of this subfield is to construct axiomatic logics of truth, which avoid paradoxes of truth (most centrally, the Liar paradox). This is a fascinating field which is no doubt relevant to semantics. However, like the T-schema, these theories don't clarify the connection between truth and the external world. They focus primarily on self-consistency, and in doing so, only clarify dealings with mathematical or logical truth, with little/nothing to say about empirical truth.

I will spell out the following hypothesis connecting meaning to truth:

Truth-functional semantics: The meaning of an assertion is fully captured by its truth-conditions. That is: under what conditions does the assertion take the value true, false, or (if relevant) any other truth-value (such as "nonsensical", if this is regarded as a truth-value)? This is the "truth function" of the assertion; the idea that semantics boils down to truth-functions is an old one in logic.

(According to correspondence theory, the truth-function will specifically involve checking for a correspondence to the territory, whatever that turns out to mean.)

Radical Interpreter

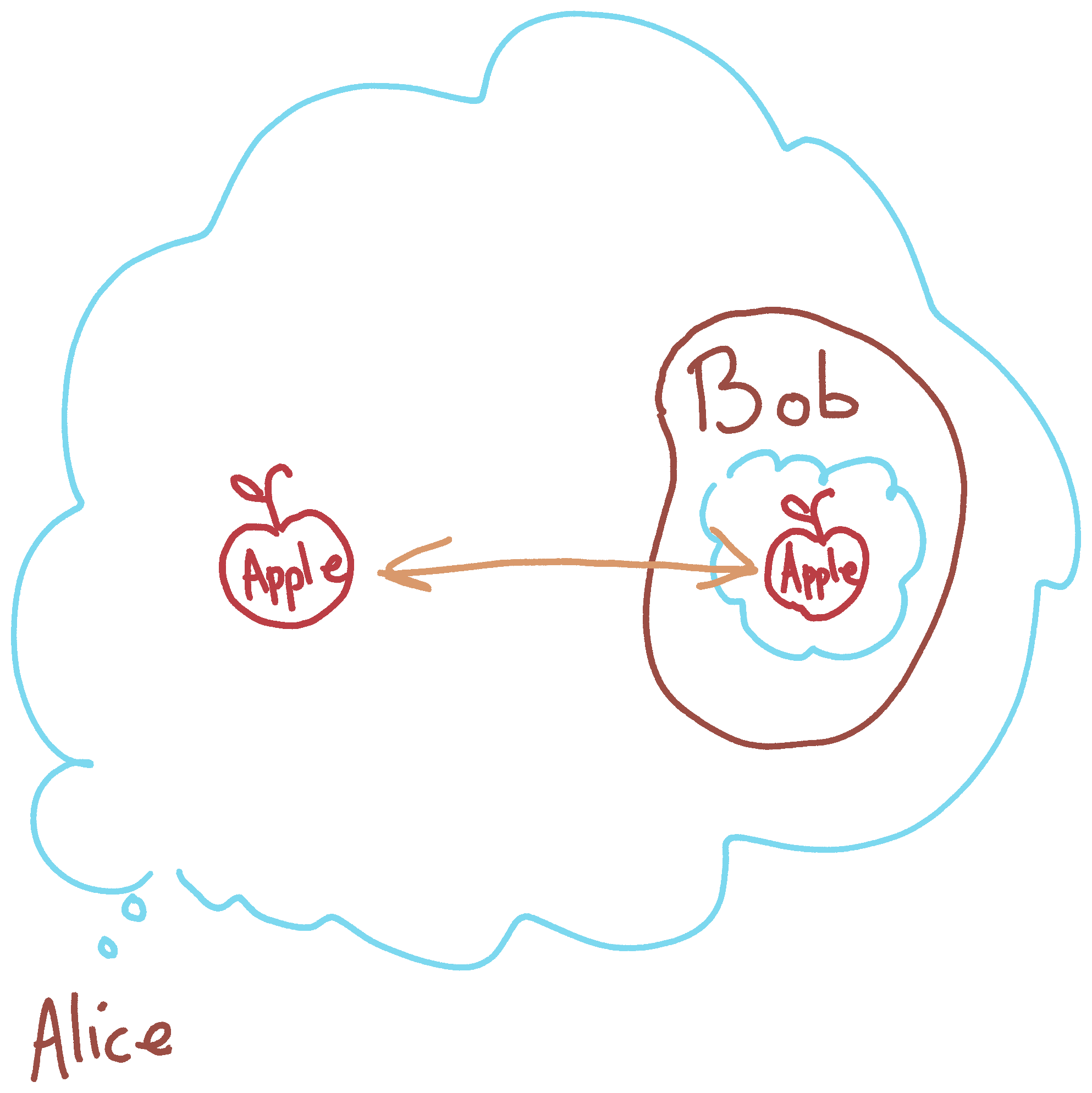

The term "radical interpreter" is used in philosophy to refer to a hypothetical being who knows all necessary information required to determine the meaning of a language (to "interpret" it), without yet understanding the language. Considering the task of the radical interpreter can simplify the problem for us. For example, in ELK, we do not have access to the physical ground truth, so we cannot train the Reporter using such data. A radical interpreter would have access to this, if it proved necessary.

Philosophers argue about just how all-knowing the radical interpreter needs to be, but on my understanding of the correspondence theory of truth, the radical interpreter would need to know all the physical facts (at least, all the physical facts which a language could possibly be referring to), in order to look for a correspondence.

(Note that according to truth-functional semantics, determining the meaning is the same as knowing how to determine the truth-value. So, I like to imagine the radical interpreter trying to judge truth in addition to meaning, although this is a subtly different exercise.)

Although I find this to be a useful thought-experiment, for many purposes I think it simplifies too much by making the radical interpreter omniscient. This doesn't just give us access to the truth; it gives us access to the true underlying ontology of the universe. The radical interpreter can see reality "directly", unmediated by any descriptive language. It is unclear what this would mean, which makes it difficult to visualize the radical interpreter doing its job.

To be more technically precise: any formalization of this picture would have to give some sort of data-type to the "underlying reality" which the radical interpreter supposedly has access to. Thus, our story about how anything means anything at all would subsequently depend on particular assumptions about what reality even is. This is a bad position to be in; it's a variant of the realizability assumption.

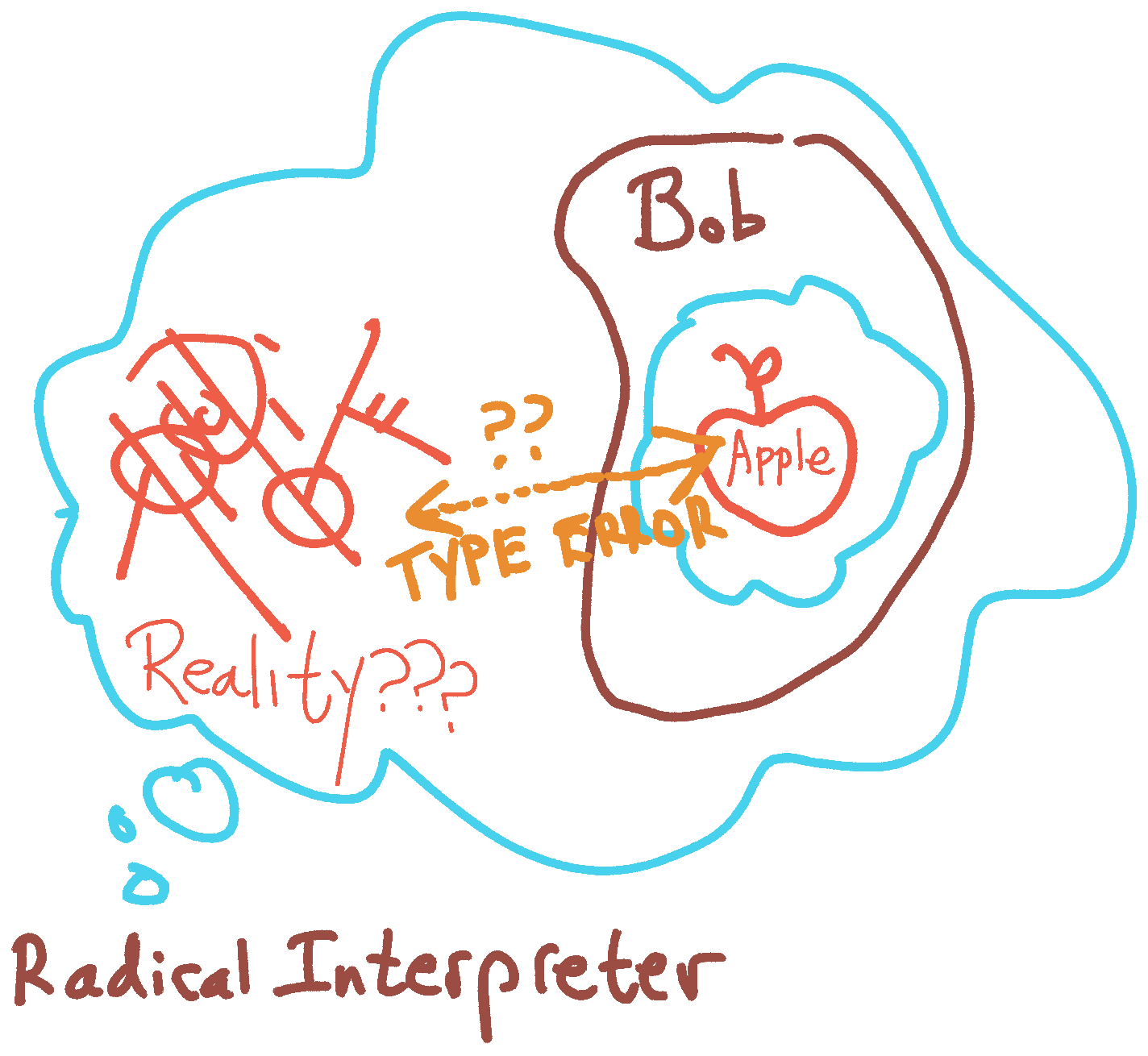

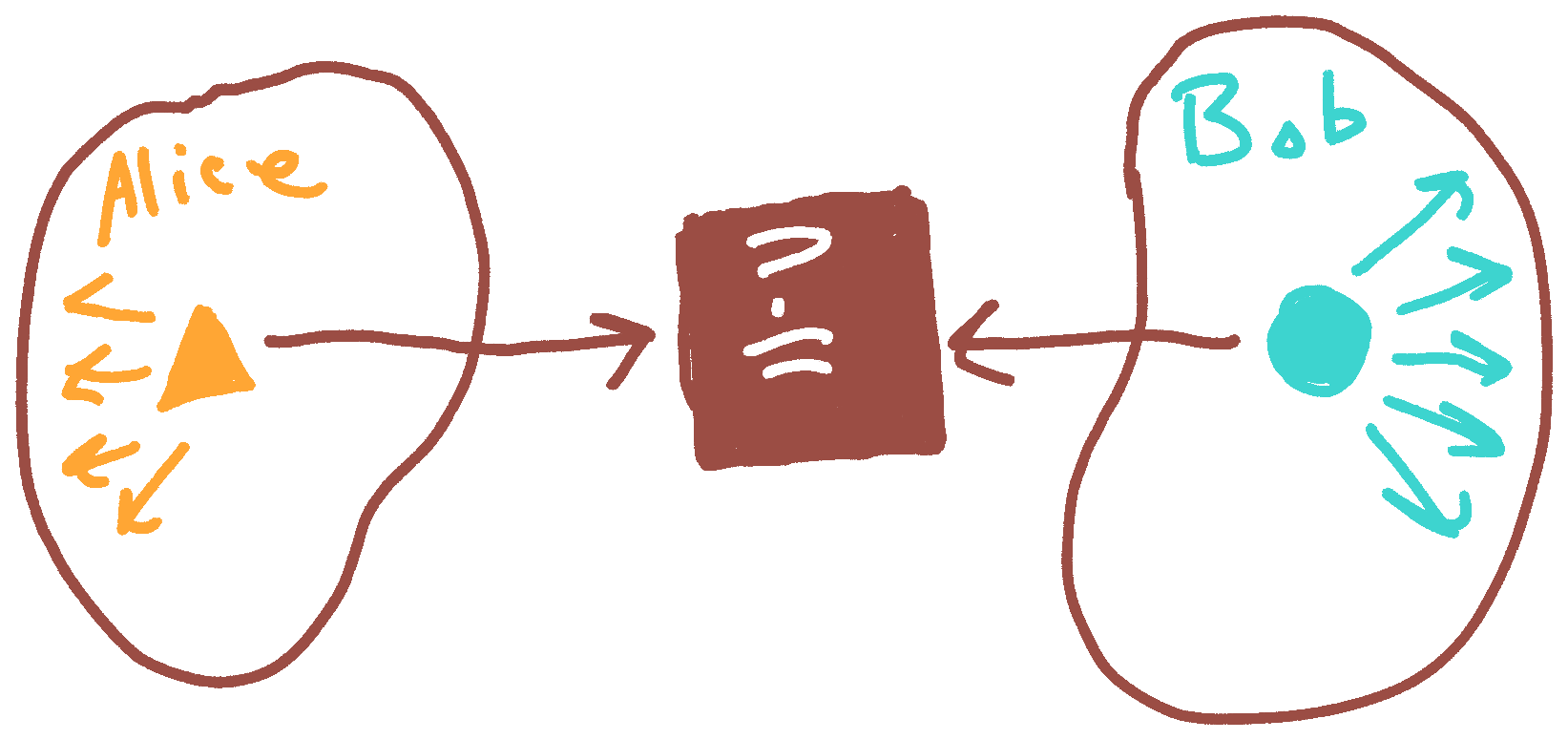

So, instead, I like to imagine a regular, bounded agent looking at another regular, bounded agent and trying to judge which beliefs are true.

In this picture, of course, Alice can only really compare Bob's beliefs to Alice's own beliefs (not to reality).

The presence of a correspondence here only means that Alice and Bob believe the same things. However, that's what it means for Alice to judge the truth of Bob's beliefs. Alice can be wrong about her truth-judgements, but if so, that's because Alice is mistaken in her own beliefs.

So, understanding what "correspondence" means in this picture seems like it would solve most of the puzzle of the correspondence theory. The remaining part of the puzzle is to explain the concept of objective truth, independent of some agent.

First-Person vs Third-Person Perspectives

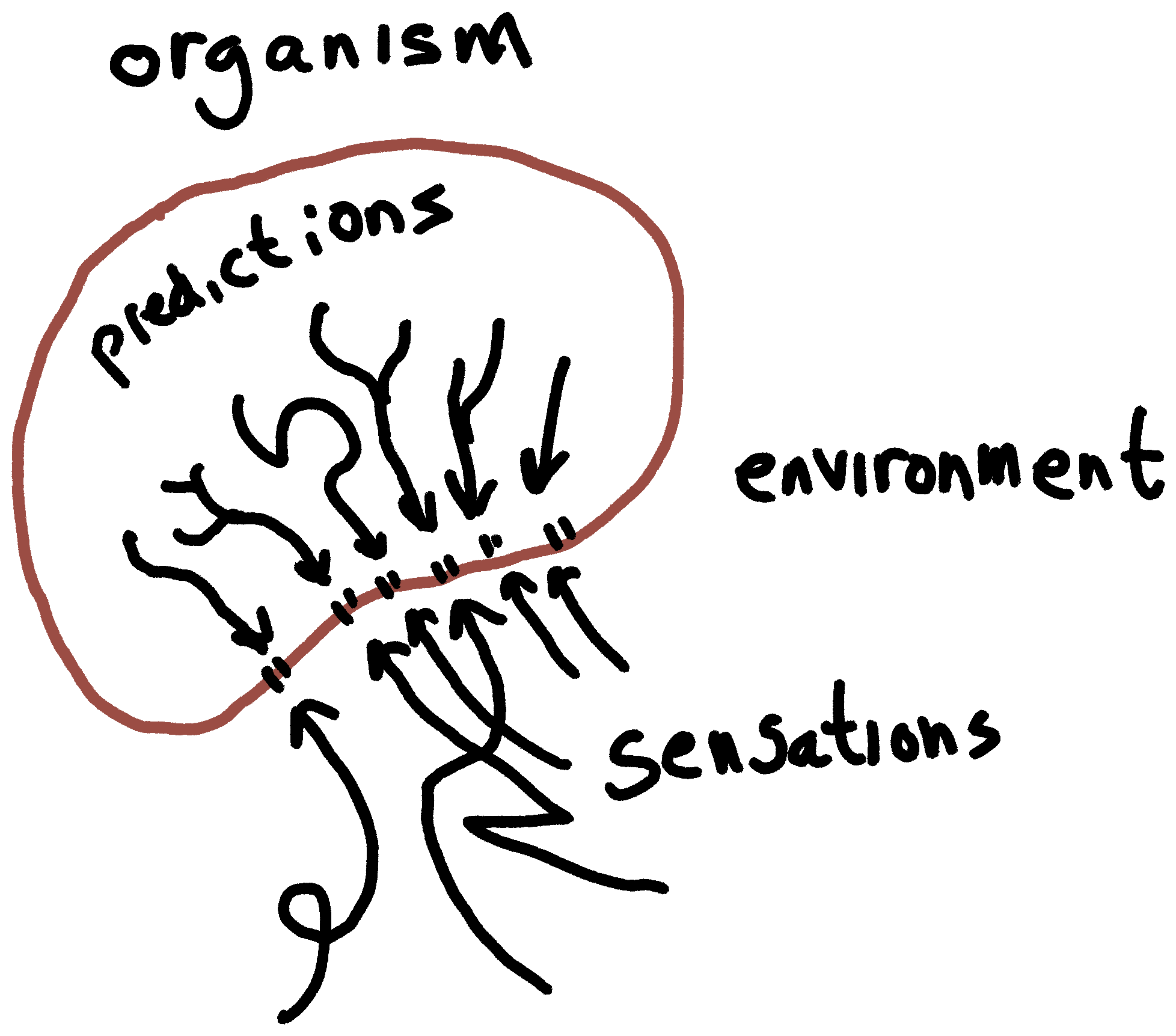

Solomonoff's theory of induction, along with the AIXI theory of intelligence, operationalize knowledge as the ability to predict observations. The hypotheses used to do so are black boxes; there is no way to "look inside" and see what AIXI thinks.

The same is true for the Predictor network in ELK. All we know is that it predicts its observations well.

The obvious definition of "truth" for such black-box learners is the correct prediction of observations -- IE, the correct generalization from the past to the future. I'm not saying there's anything wrong with judging hypotheses based on predictive accuracy as a learning mechanism -- but, as a theory of truth, this is wanting. It's solipsistic; it doesn't leave room for 'truth' of the outside world, so it doesn't endorse the 'truth' of statements regarding people or external objects. For this reason, I term it a first-person perspective.
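To make the first-person picture concrete, here is a minimal sketch of judging black-box hypotheses purely by predictive accuracy. It is a finite toy, not Solomonoff induction itself: the two hypotheses, their names, and the uniform prior are assumptions made up for illustration. Note that nothing in the code says what any hypothesis is "about"; each one is scored only on how well it predicts the next observation.

```python
from typing import Callable, Dict, Sequence

# A hypothesis is a black box: history in, probability that the next bit is 1 out.
Hypothesis = Callable[[Sequence[int]], float]


def posterior_weights(
    hypotheses: Dict[str, Hypothesis],
    prior: Dict[str, float],
    observations: Sequence[int],
) -> Dict[str, float]:
    """Reweight each hypothesis by how well it predicted the observations."""
    weights = dict(prior)
    for t, bit in enumerate(observations):
        history = observations[:t]
        for name, hypothesis in hypotheses.items():
            p_one = hypothesis(history)
            weights[name] *= p_one if bit == 1 else 1.0 - p_one
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


# Hypothetical toy hypotheses (assumptions, not from the post).
hypotheses = {
    "mostly_ones": lambda history: 0.9,
    "fair_coin": lambda history: 0.5,
}
prior = {"mostly_ones": 0.5, "fair_coin": 0.5}

posterior = posterior_weights(hypotheses, prior, [1, 1, 1, 1])
```

After four observed ones, almost all of the weight sits on `mostly_ones`, but the learner still has no vocabulary for claims about anything other than its own observation stream.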

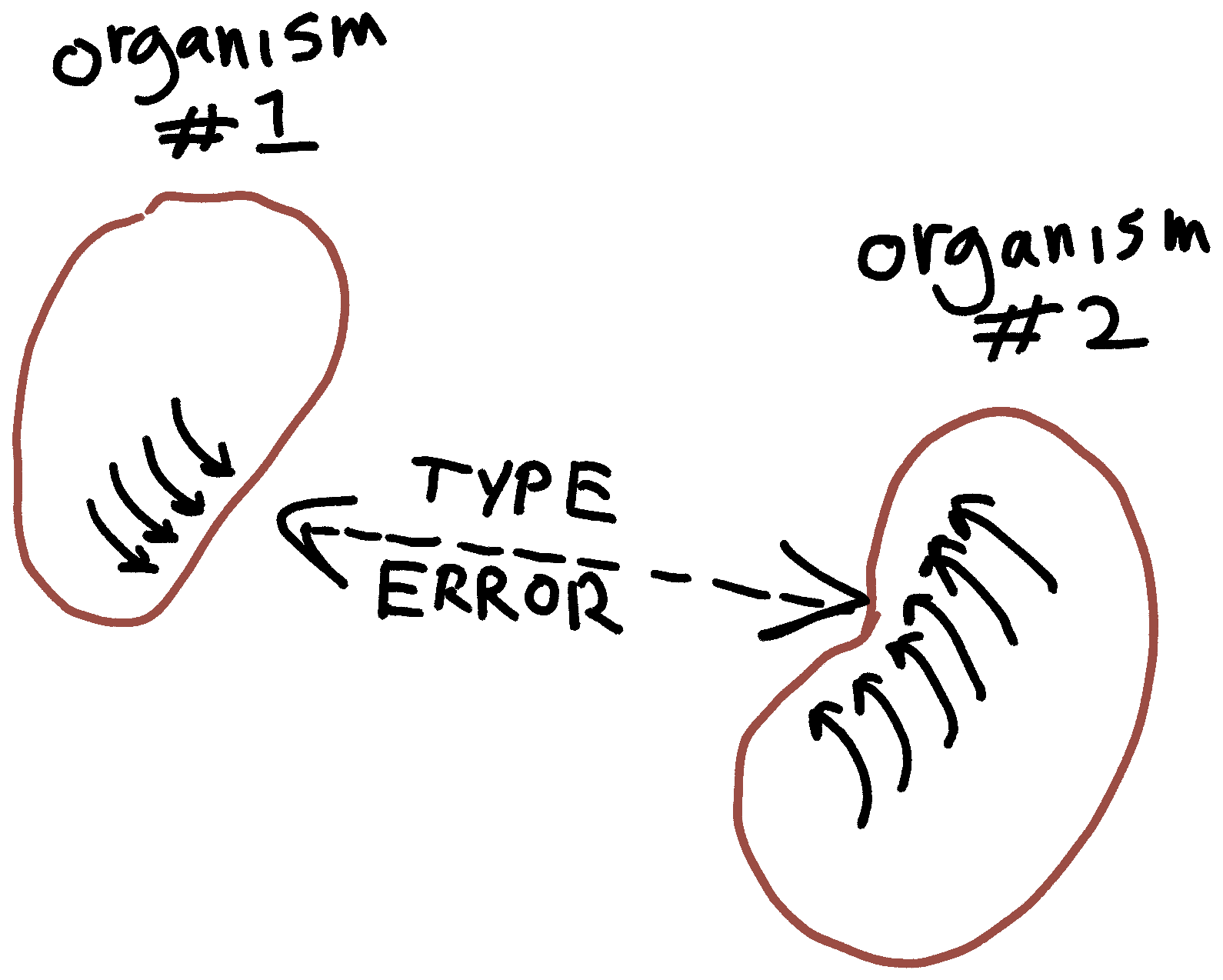

Our problem with a first-person perspective, from an ELK point of view, is that communication between agents is impossible. Each agent develops its own personal language with which to describe observations. It isn't clear even what it means to translate beliefs from one such agent to another. Different agents have different sensors!

This seems important, so let's formalize it as one of the big puzzles:

Important puzzle: what does it mean to usefully translate beliefs from one first-person perspective into another?

Because humans have a great need to communicate, we've solved this problem in practice (without necessarily understanding what it means to solve it generally) through shared frameworks which I'll call third-person perspectives. By this, I mean a language for expressing beliefs which transfers easily from one agent to another:

For example, when I say that something is "left" or "right", that's first-person language; it means (roughly, depending on context) that I can turn my head left/right to see that thing. A third-person version would (as I write this, seated facing north) be "west" or "east".
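The left/right versus west/east example can be written out as a tiny translation between perspectives. This is only a sketch under simplifying assumptions (four compass points, four egocentric terms, all names invented here), but it shows the shape of the idea: a first-person term becomes third-person once the speaker's orientation is supplied, and can then be re-expressed for a listener with a different orientation.

```python
# Compass directions in clockwise order.
COMPASS = ["north", "east", "south", "west"]

# Egocentric terms as clockwise quarter-turns from the way the speaker faces.
QUARTER_TURNS = {"ahead": 0, "right": 1, "behind": 2, "left": 3}


def to_third_person(egocentric: str, facing: str) -> str:
    """Translate a first-person direction into a compass direction."""
    start = COMPASS.index(facing)
    return COMPASS[(start + QUARTER_TURNS[egocentric]) % 4]


def to_first_person(compass: str, facing: str) -> str:
    """Translate a compass direction into a listener's egocentric term."""
    turns = (COMPASS.index(compass) - COMPASS.index(facing)) % 4
    return next(term for term, t in QUARTER_TURNS.items() if t == turns)


# Seated facing north, "left" means west.
shared = to_third_person("left", facing="north")
# A listener facing south would call that same direction "right".
for_listener = to_first_person(shared, facing="south")
```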

Third-person perspectives aim to "remove perspective" by being easily translated to as many perspectives as possible. In so doing, they become "objective" rather than "subjective".

It could be useful to have a better mathematical theory of all this. I won't try to propose one in this essay, since it's going to be long already.

(In the ELK document, of course, we only care about translating to one alternative perspective, namely the human one. It may or may not be important to think about "objective" language suited to "many" perspectives.)

Objective Correspondence

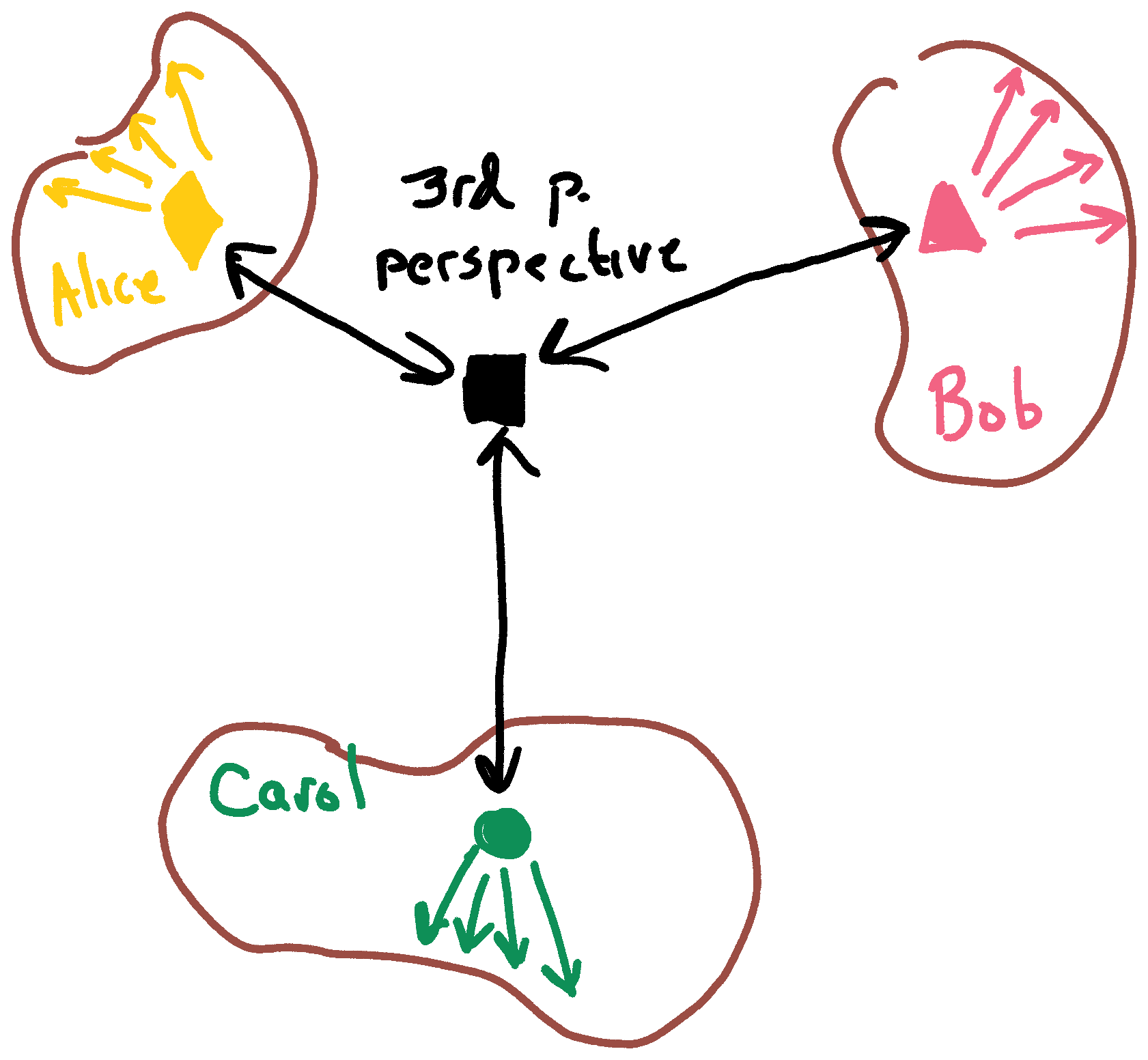

Making up terminology, I'll define objective correspondence theory by the following picture:

Alice understands Bob's beliefs by translating them into the shared language of a 3rd-person perspective. If Alice wants to judge the truth of Bob's beliefs, she can translate her beliefs to the 3rd-person perspective as well. Once everything is in the 3rd-person perspective, correspondence becomes simple equality.

Translating into ELK: the job of the Reporter is to translate the Predictor's 1st-person perspective into the human 3rd-person perspective. However, we still haven't clarified what this translation might look like, what it means for a translation to be 'good' or 'bad', etc.

Computationalism

According to a perspective I'll call computationalism, what is real is what is computed.

For example, according to this theory, a brain being simulated in great detail on a computer is just as conscious as a biological brain. After all, it has no way to tell that it's not biological; why wouldn't it be conscious?

Whereas physicalism treats "what if we're living in a simulation" as a serious ontological shift, which questions the nature of the underlying reality, computationalism does not. The low-level "stuff" that makes up reality doesn't matter, so long as it adds up to the same computation. For a computationalist, the meaningful questions are whether we can get out of the simulation, or do anything meaningful to influence it.

Counterfactual Correspondence

To make computationalism well-defined, we need to define what it means for a computation to be instantiated or not. Most of the philosophical arguments against computationalism attempt to render it trivial by showing that according to any reasonable definition, all computations are occurring everywhere at all times, or at least there are far more computations in any complex object than a computationalist wants to admit. I won't be reviewing those arguments here; I personally think they fail if we define computation carefully, but I'm not trying to be super-careful in the present essay.

I'll define 'what computations are instantiated' by counterfactual correspondence. Here's a rough picture:

We can also define notions of approximate correspondence, which will be important for interpreting language in practice, since causal beliefs can be mistaken without totally depriving our claims of meaning.

1. ELK proposals based on counterfactual correspondence?

For a long time, I believed that counterfactual correspondence was the correct account of "correspondence" for the correspondence theory of truth to work out. We can apply this theory to ELK:

This obviously won't work if humans have different beliefs about the causal structure of reality than the predictor (EG, if the predictor has better causal models). However, we can significantly mitigate this by representing human uncertainty about causality, and taking the average causal-mismatch penalty over the possibilities. If humans assign probabilities to different causal models in a reasonable way, we would expect a metric like this to give us a good deal of information about the quality of different reporters; a reporter whose story only matches improbable causal hypotheses is probably a bad reporter.
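One way to picture the averaged penalty is the sketch below. The post does not specify the mismatch measure, so the choice here (count the causal edges on which two graphs disagree) is purely an assumption, as are the variable names and the probabilities.

```python
from typing import Dict, FrozenSet, Tuple

Edge = Tuple[str, str]      # (cause, effect)
Graph = FrozenSet[Edge]     # a causal model, reduced to its set of edges


def mismatch(story: Graph, hypothesis: Graph) -> int:
    """Count the edges present in exactly one of the two graphs."""
    return len(story ^ hypothesis)


def expected_penalty(story: Graph, human_prior: Dict[Graph, float]) -> float:
    """Average the mismatch over the human's distribution on causal models."""
    return sum(p * mismatch(story, graph) for graph, p in human_prior.items())


# Hypothetical human uncertainty over two causal models (made-up numbers).
likely = frozenset({("rain", "wet_grass"), ("sprinkler", "wet_grass")})
unlikely = frozenset({("wet_grass", "rain")})
human_prior = {likely: 0.9, unlikely: 0.1}

penalty_good = expected_penalty(likely, human_prior)    # story matches the probable model
penalty_bad = expected_penalty(unlikely, human_prior)   # story matches only the improbable one
```

A reporter whose story matches only the improbable hypothesis receives the larger expected penalty, which is the sense in which the metric carries information about reporter quality.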

A great deal depends on further details of the proposal, however.

1.1 Physical Causal Match

The most naive way to interpret this theory is to suppose that there's a correspondence of the causal structure of the human brain, to the causal structure of the Predictor's model, and also between these two and the causal structure of the reality being modeled.

In this theory, a mental model is very much like a scale model (like a toy train set based on a real train route). To model a hurricane on a computer, for example, we might create a computer program which puts the data in memory in rough causal correspondence with the real hurricane: changing the air-pressure variable in one location in our computer model has a cascade of consequences which corresponds closely to what would happen to the actual hurricane under a similar modification.

If we hypothesize that all models work like this, ELK becomes simpler: to find where the Predictor is thinking about a hurricane, we just have to find computations which (approximately) causally correspond to human hurricane-models.

Unfortunately, not all modelling works like this. For example, forward-sampling from a causal Bayes net causally corresponds to what the network models, but MCMC inference on the very same network does not.

I'll call this phenomenon "reasoning". By reasoning about models rather than just running them forward, we create causality inversions (example: when we make inferences about the past, based on new observations from the present). This destroys the simple correspondence.
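The contrast can be seen in a two-variable toy network. This sketch uses exact Bayes' rule rather than MCMC for the inference step, which is a substitution made for brevity; the network and its numbers are assumptions. In `forward_sample`, the order of computation mirrors the causal order (rain, then wet grass). In `posterior_rain`, information flows from the observed effect back to the cause, so the computation no longer mirrors the causal structure being modeled.

```python
import random

P_RAIN = 0.2
P_WET_GIVEN = {True: 0.9, False: 0.1}   # P(wet grass | rain)


def forward_sample(rng: random.Random) -> tuple:
    """Run the model forward: sample the cause first, then the effect."""
    rain = rng.random() < P_RAIN
    wet = rng.random() < P_WET_GIVEN[rain]
    return rain, wet


def posterior_rain(wet: bool) -> float:
    """Reason backward: infer the cause from an observed effect."""
    def likelihood(rain: bool) -> float:
        p_wet = P_WET_GIVEN[rain]
        return p_wet if wet else 1.0 - p_wet

    joint_rain = P_RAIN * likelihood(True)
    joint_dry = (1.0 - P_RAIN) * likelihood(False)
    return joint_rain / (joint_rain + joint_dry)


sample = forward_sample(random.Random(0))
belief_after_seeing_wet_grass = posterior_rain(wet=True)
```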

1.2 Internal Agreement

You could say that 1.1 failed because we tried to check model correspondence "from the outside", rather than first translating the content of the models into a shared ontology, then checking correspondence. We need to understand what the Predictor thinks about counterfactuals, rather than counterfactuals on the Predictor's implementation.

But this brings us back to the problem of translating the Predictor's beliefs into a comprehensible language, which is exactly what we don't know how to do.

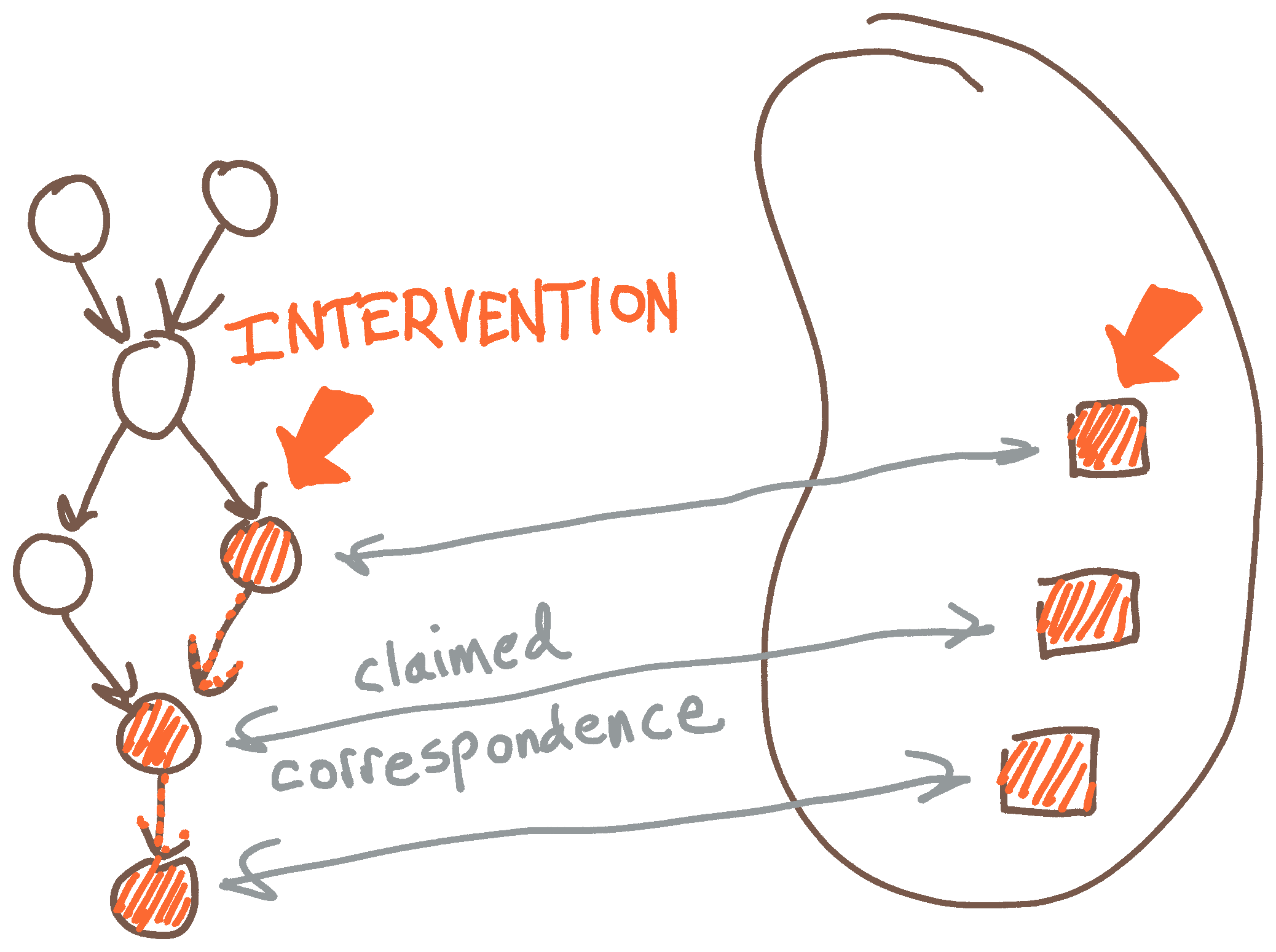

Nonetheless, a rough proposal:

- Reporters don't just know how to answer questions by looking at the Predictor's execution trace; they also know how to counterfact on different answers to questions, by modifying the Predictor to reason as if the specified intervention has occurred.

- When answering questions, Reporters aren't directly told what counterfactual scenario they're under; in other words, individual questions must be answered independently, by examining the Predictor, rather than based on knowledge of other questions/answers or knowledge of what interventions have been made.

- Reporters are judged on the consistency of these claims about facts and counterfactuals, by sampling from the training data and checking what the Reporter claims is happening against what the human model thinks is happening, both in the unmodified scenario and under random interventions.

As in the previous section, it's not that we think the human model is the gold standard; indeed, the whole hope is to extract beyond-human knowledge from the Predictor. Still, judging Reporters based on the prior plausibility of their story is expected to give some useful information, and in the absence of a ground truth, in some sense it's the best we can do.

Counterexample. The proposed strategy rules out simple human imitation, because the stories need to remain consistent under counterfactuals. The Reporter needs to modify the Predictor, and all other changes to answers in the counterfactual scenario need to be a result of these modifications to the Predictor. However, this problem is easily solved. A Reporter can mostly ignore the Predictor, but modify a specific section to make notes of counterfactual interventions the humans have asked the Reporter to make. Then, human simulation can be used to answer the human questions, using an appropriately intervened human model.

Note: The operation of the bad reporter will look suspicious, because it always looks at the same place (to read off the relevant interventions) rather than looking at different places in the Predictor depending on which questions it gets asked. We can tell that the bad Reporter isn't "relying on the Predictor" in the way we want. Can we repair this proposal based on that?

2. Truth Tracking

ELK depends on the nebulous concept of "knowledge":

How can we train this model to report its latent knowledge of off-screen events?

(ELK document, first page)

No precise definition of "knowledge" is given, despite it being a huge part of the problem framing -- ELK is specifically intended to address the problem of the Predictor having knowledge which we lack.

This makes sense, because defining 'knowledge' properly is known to be a huge can of worms. However, to actually solve the problem we may need to rely on a definition.

In this section, I propose to consider Nozick's truth-tracking definition of knowledge:

S knows that p iff

- p is true

- S believes that p

- if p were not true, S would not believe that p (“sensitivity condition”)

- if p were true, S would believe that p (“adherence condition”)

I believe that Nozick favored counterfactuals to define the "would" in truth-tracking. This way, we can say that Alice "knows" something even if we also believe it to be true, because we can counterfact on it being false to check condition #3.

However, I believe conditional probabilities are a more appropriate way to interpret "would" in the context of ELK. Suppose that the Predictor saw that the first domino in a chain was pushed over, and knows that nothing will interfere with the chain of dominos falling over. The Predictor therefore knows that the last domino in the chain will fall. However, if we perform a causal intervention to prevent the last domino from falling, the Predictor would still expect the domino to fall based on its information! So, in the counterfactual-based version of truth-tracking, the predictor would not "know" about the consequences of tipping the first domino.

Moreover, if the Reporter is able to extract information from the Predictor with extremely high truth-tracking in a conditional-probability sense, then we can be highly confident in the accuracy of such information, which is our primary concern.

We also can't evaluate #1, and evaluating #2 is the chief challenge of ELK. We can combine #3 and #4 into correlation. Establishing high correlation between 'X' and 'the Reporter says X' is both necessary and sufficient for us to trust what the Reporter says, so this does not seem to lose anything important.

(However, we do need to be careful to distinguish high correlation in the training distribution from high correlation after the whole system goes online.)
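As a sketch of the conditional-probability reading, the snippet below takes a joint distribution over "X is true" and "the Reporter says X" and computes the adherence condition, the sensitivity condition, and the correlation that combines them. The joint probabilities are invented, and the function assumes both X and the Reporter's answer have probability strictly between 0 and 1.

```python
from math import sqrt


def truth_tracking(joint: dict) -> dict:
    """joint maps (x_is_true, reporter_says_x) -> probability."""
    p_true = joint[(True, True)] + joint[(True, False)]
    p_says = joint[(True, True)] + joint[(False, True)]
    adherence = joint[(True, True)] / p_true                 # P(says X | X)
    sensitivity = joint[(False, False)] / (1.0 - p_true)     # P(not says X | not X)
    covariance = joint[(True, True)] - p_true * p_says
    correlation = covariance / sqrt(
        p_true * (1.0 - p_true) * p_says * (1.0 - p_says)
    )
    return {
        "adherence": adherence,
        "sensitivity": sensitivity,
        "correlation": correlation,
    }


# Hypothetical joint distribution (made-up numbers).
joint = {
    (True, True): 0.45, (True, False): 0.05,
    (False, True): 0.05, (False, False): 0.45,
}
scores = truth_tracking(joint)
```

The same computation run on the deployment distribution rather than the training distribution could give a very different correlation, which is the caveat in the parenthetical above.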

This brings me to the following proposal:

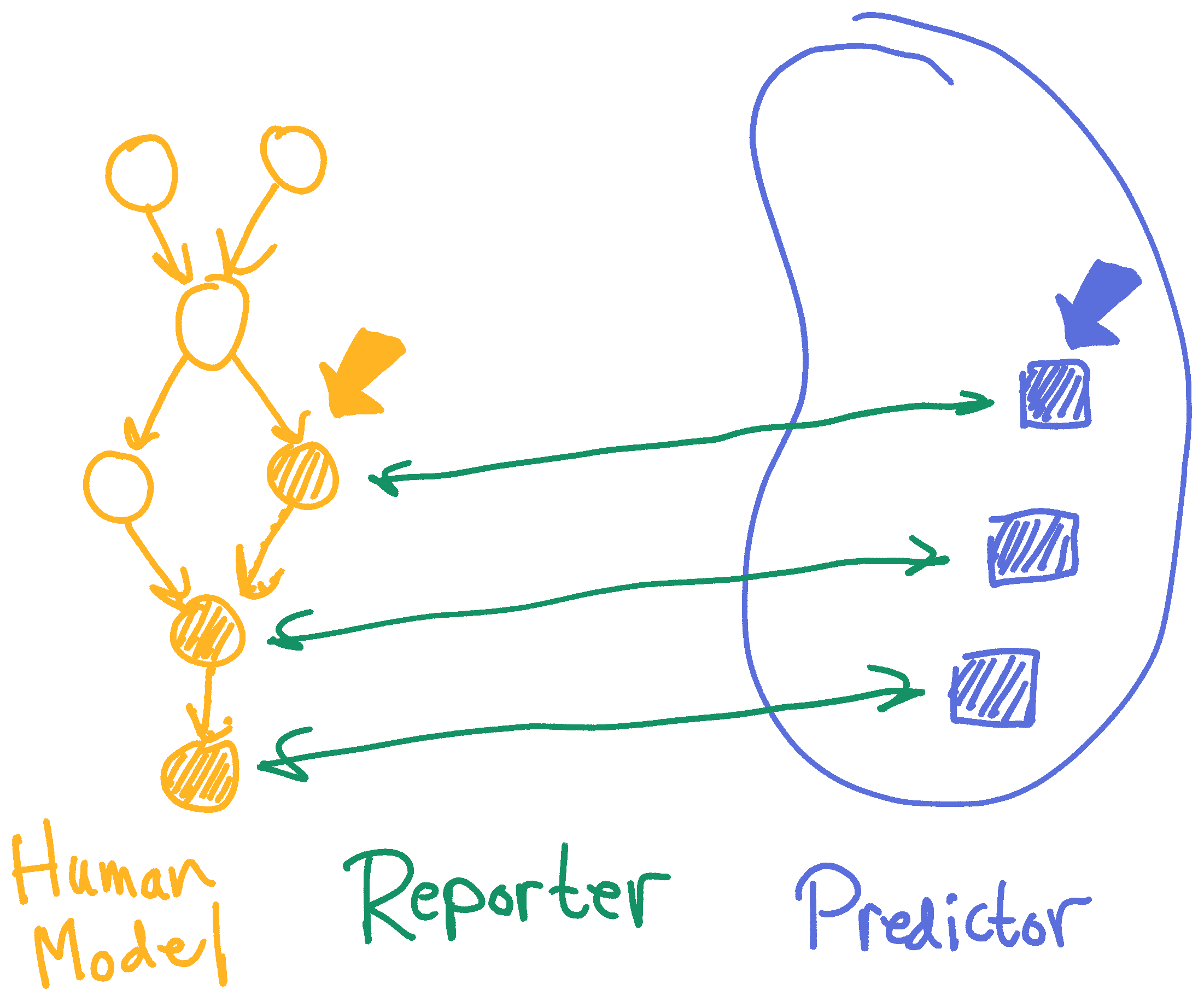

Proposal 2.1: Define a 3rd person perspective over a base model as an event-space and associated probability distribution, which includes the full computation of the base model. The embedding does not need to be perfect: a good 3rd person perspective should account for the imperfect hardware on which the base model runs. If a 3rd person perspective has a human-readable subset of events, EG an event for each question we might ask a reporter, then the translation of a computation trace for the base model is the collection of marginal probabilities for events in the human-readable subset, after conditioning on the computation trace.

Note that a 3rd-person perspective (3pp) can contain many 1st-person perspectives (1pp) in this way, providing a way to translate all of them.
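Here is a minimal sketch of the translation step in Proposal 2.1, under heavy simplifying assumptions: the 3rd-person perspective is a small explicit table of probabilities over (world state, computation trace) pairs, and human-readable events are predicates on the world state. The diamond scenario, trace labels, and numbers are illustrative only. Translating a trace means conditioning on it and reading off the marginal probability of each human-readable event.

```python
from typing import Callable, Dict, Tuple

Outcome = Tuple[str, str]   # (state of the world, Predictor computation trace)


def translate(
    third_person: Dict[Outcome, float],
    readable_events: Dict[str, Callable[[str], bool]],
    trace: str,
) -> Dict[str, float]:
    """Marginal probability of each human-readable event, given the trace."""
    consistent = {o: p for o, p in third_person.items() if o[1] == trace}
    total = sum(consistent.values())
    if total == 0:
        raise ValueError("trace has probability zero under this perspective")
    return {
        name: sum(p for (world, _), p in consistent.items() if holds(world)) / total
        for name, holds in readable_events.items()
    }


# Hypothetical joint distribution over worlds and traces (made-up numbers).
third_person = {
    ("diamond_present", "trace_a"): 0.45,
    ("diamond_stolen", "trace_a"): 0.05,
    ("diamond_present", "trace_b"): 0.10,
    ("diamond_stolen", "trace_b"): 0.40,
}
readable_events = {
    "diamond is in the vault": lambda world: world == "diamond_present",
}

report_a = translate(third_person, readable_events, "trace_a")
report_b = translate(third_person, readable_events, "trace_b")
```

All of the difficulty is hidden in where `third_person` comes from; the conditioning itself is the easy part.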

I find this proposal to be remarkably plausible, if we suppose that the 3rd person perspective is a sufficiently good model. However, obtaining a sufficiently good 3pp may be very difficult.

- The hope for a 3pp providing a good translation lies in the 3pp successfully capturing the details of the training process, and the details of the possible external worlds, to such an extent that the 3pp can determine how human-readable events correlate with events in the 1pp computation trace.

- In order for a 3pp to successfully extract information from alternate ontologies which the 1pp may be using, the 3pp has to include a distribution on other ways the world could be configured (IE, alternate ontologies), and already know how to think about the probability of human-readable events in those alternate worlds. Only in this way can we expect a 3pp to be able to have reasonable probabilities for which ontology is true given which hypotheses a 1pp settles on according to the computation trace, and use this information appropriately when answering human questions. But this means we already have to solve an ontology translation problem before we can use a 3pp to translate for us!

A 3pp sounds intractable: running lots of different possible worlds to see how everything we're interested in correlates with bits in the Predictor?

However, a 3pp may not need to be logically omniscient, running detailed physical models. A logically omniscient 3pp gives a notion of "translation" where, for example, a Reporter can tell us about the detailed consequences of plans, whenever those consequences are robust to small changes in the underlying physics.

Intuitively, we only need the Reporter to tell us about consequences of a plan if the Predictor already knows about those consequences. ELK only requires the Reporter to know what the Predictor knows.

So there's some hope of a tractable 3pp. However, it's not clear how to state the level of logical uncertainty needed to correctly translate without introducing extra logical knowledge. And it's even more unclear how to construct a 3pp with that exact level of logical uncertainty.

So, overall, this proposal has several obvious difficulties. However, for me, it seems like a useful target for other proposals to try to approximate, because it takes me from next-to-no-idea about what constitutes a valid 'translation' of the latent knowledge in black-box machine learning, to having some idea of what I'm trying to compute.

Generally, a good 3pp has to be a good approximation of how humans would endorse thinking about the problem. So we can consider using the 3pp that's just the human model, which I'll call $H$.

Proposal 2.2: Let $P_H$ be the human probability distribution (the result of perfect inference on the human model). Construct the Reporter as follows. For any question X, answer by computing $P_H(X \mid e)$, where $e$ is the execution trace of the Predictor's model (including data presented to the Predictor as observations).

This proposal is a bit absurd, since we're basically just asking (amplified) humans to look at the Predictor and see what they can figure out. If humans knew how to extract information from the Predictor, wouldn't we just do it? Shouldn't we write down the actual solution humans should use, rather than writing "apply the best human thinking to the problem"?

But before we tear this proposal to shreds, I want to point out its advantages:

- If there is any Reporter who we expect to do a better job (even after thinking about it for arbitrarily long), the advice of this Reporter will be incorporated into the answer.

- Therefore, if some other method for constructing a Reporter does in fact do better, then we can conclude that we could never rationally trust that Reporter. Humans thinking for arbitrarily long do not prefer that Reporter. From the perspective of the human prior, that reporter is just "getting lucky" and not correct for any rationally explainable reason.

- So, in that sense, my proposed Reporter is the best reporter possible.

If there exists a counterexample to this proposal, then it seems it would have to be a philosophically borderline counterexample; a case where the Predictor "knows" some latent information that we would like to know, but there's no argument this "knowledge" tracks the truth.

This isn't much comfort if you don't trust human reasoning very much. We would be in a much better position if we came up with the correct theory of extracting latent knowledge, and then applied that theory, rather than relying on human reasoning to solve the problem in the limit of thinking long enough. However, such a theory would in retrospect vindicate the accuracy of my proposed Reporter strategy.

Nor could we ever come to trust an ELK solution which didn't vindicate the proposal, since such a solution would have to be one which we couldn't come to trust.

Platonism & Inferentialism

At this juncture, readers may need to decide how strong a version of ELK they are interested in. I will be addressing a version of ELK which hopes to truly extract everything humans might want to know from the Predictor. John Wentworth points out that we may not need such a broad focus:

The ELK document largely discussed the human running natural-language queries on the predictor’s latent knowledge, but we’re going to mostly ignore that part, because it’s harder than everything else about the problem combined and I don’t think it’s actually that central.

This post will focus mainly on the specific question of whether there’s a screen in front of the camera in the diamond problem. I expect that the approaches and counterexamples here extend to more general problems of detecting when a plan is “messing with the sensors”, i.e. doing things which decouple observables from the parts of the world we’re actually interested in.

(Some Hacky ELK Ideas, introduction)

However, in this post I am focused fully on the hard problem of semantics, so I won't consider whether a specific class of questions needs to be answered.

In particular, we may want ELK to be able to extract mathematical knowledge in addition to empirical/physical knowledge. This may be relevant to figuring out why specific action-sequences are bad; but, I won't try to justify the relevance here by inventing scenarios.

Platonism is the application of map/territory ideas to mathematics. If you believe in truth-functional semantics, and also believe in a correspondence theory of truth, and also believe that a specific mathematical statement is meaningful, then you'll believe there is something in the territory which it corresponds to. This is platonism.

Platonism doesn't fit very well with a strictly physicalist worldview, where the only things which exist in the territory are physical things. The closest things which a physicalist might agree actually exist in the territory would be physical laws. But can mathematical laws exist in the territory?

(I'm not saying such a view is impossible; only that it's not obvious how a physicalist can/should answer.)

Platonism fits a little better with computationalism, although a strict computationalist will probably assert that only specific mathematical assertions are meaningful, such as the finite ones, or those belonging to constructive mathematics.

However, a computationalist may be more inclined to deny platonism in favor of one of its alternatives, such as inferentialism:

Inferentialism: the meaning of a term depends only on its use.

For an easy example, if you do mathematics in exactly the same way that I do mathematics except you don't use the numeral "2", instead writing "@", but applying all the same rules to "@" which I would apply to "2" -- then, in that case, "@" clearly means for you what "2" means for me.

Inferentialism claims that this generalizes completely; computationally isomorphic use implies shared meaning.
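The "@" example can be checked mechanically in a toy setting. The sketch below assumes a tiny four-numeral clock arithmetic (an invented stand-in for "doing mathematics"), where each numeral is defined only by its position in the system. Renaming "2" to "@" while keeping every rule the same yields a system in which translation commutes with addition, which is one way to cash out "computationally isomorphic use".

```python
from itertools import product


def make_adder(numerals):
    """Addition on a small clock-face number system, defined only by position."""
    def add(a, b):
        total = numerals.index(a) + numerals.index(b)
        return numerals[total % len(numerals)]
    return add


MY_NUMERALS = ["0", "1", "2", "3"]
YOUR_NUMERALS = ["0", "1", "@", "3"]   # same rules, different mark for two

my_add = make_adder(MY_NUMERALS)
your_add = make_adder(YOUR_NUMERALS)


def rename(symbol):
    """Translate one of my numerals into yours by position."""
    return YOUR_NUMERALS[MY_NUMERALS.index(symbol)]


# Translating then adding gives the same result as adding then translating.
same_use = all(
    rename(my_add(a, b)) == your_add(rename(a), rename(b))
    for a, b in product(MY_NUMERALS, repeat=2)
)
```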

(Note that this is only plausible in 3rd-person perspectives. Terms such as "me" and "left" do not have the same meaning for you and me, even if we treat them in computationally identical ways.)

Inferentialism need not be incompatible with map/territory semantics. The inferential use of an assertion could determine a truth-functional meaning which puts the term in correspondence with the territory. Indeed, the following view fits:

Truth-tracking semantics: A belief X means event Y to the extent that it tracks the truth of Y.

This perspective is clearly pragmatically useful, EG for the earlier picture where Alice is trying to figure out what is going on in Bob's head. If Alice knows what Bob's assertions correlate with in reality, then Alice knows what to conclude when Bob speaks. However, it's also clear that this theory won't line up with how we usually talk about meaning; it doesn't really allow statements to be specific, instead associating them with a cloud of probabilities.

This is a broadly inferentialist position, because the way X tracks Y depends entirely on how X is used. For example, if Bob concludes "I see a fish" whenever he observes a specific variety of sense-data including scales and fins, well, this is a fact about Bob's inferences in my view.[1]

A correlation-focused definition of truth-tracking suggests the approaches of section #2, while a counterfactual-focused notion of truth-tracking suggests approaches more like those in section #1.

However, I believe inferentialists more typically throw out map/territory semantics, and indeed, truth-functional semantics.

And perhaps for good reason: Goedel's first incompleteness theorem makes inferentialist and truth-tracking approaches difficult to reconcile. Goedel's theorem says that for any axiom system meeting some basic conditions, there exists an undecidable statement, roughly "I am not provable". Furthermore, this undecidable statement appears to be true!

Assenting to the probable truth of the Goedel statement appears to deny truth-functional inferentialism, by admitting that there's something outside of the axioms and rules of inference which can make a statement true or false.

So inferentialists (it appears) must either abandon truth-functional semantics, or deny the truth of the Goedel sentence, or both.

[1] Inferentialism as a theory usually deals exclusively with the meaning of logical terms, so it's not clear to me whether inferentialist authors would readily include inference-from-observation. It's even more unclear to me what they'd think of inference-to-action. It seems to me that both are absolutely necessary to include, however. So, for my purposes, reasoning from observations is a type of inference, and deciding on actions is also a type of inference.

18 comments


comment by Ben Pace (Benito) · 2022-03-04T00:35:59.939Z

Solomonoff's theory of induction, along with the AIXI theory of intelligence, operationalize knowledge as the ability to predict observations.

Maybe this is what knowledge is. But I’d like to try coming up with at least one alternative. So here goes!

I want to define knowledge as part of an agent.

- A system contains knowledge if the agent who built it can successfully attain its goals in its likely environments by using that system to figure out which of its actions will lead to outcomes the agent wants.

- When comparing different systems that allow an agent to achieve its goals, there is a Pareto frontier of how much knowledge is in the system, depending on how it helps in different environments.

- A USB stick with blueprints for how to build nukes, in an otherwise lifeless universe, does not contain “knowledge”, because nobody ever “knows” it. (It has knowledge in it the way a tree’s rings do - it contains info that an agent like myself can turn into knowledge.)

- I can store my knowledge outside of me. I can write your address down, forget it from my brain, pick it up later, and I still have that knowledge, stored on the paper in my office.

To find out how much knowledge Alice has, I can run her in lots of environments and see what she is able to accomplish by her standards.

Alice “knows” a certain amount about cars if she can use one to drive to the store to buy food. She knows more if she can use a broken car to do the same.

To compare Alice’s knowledge to Bob’s, I can give Alice Bob’s preferences, run Alice in lots of environments, and see what she is able to accomplish by Bob’s standards.

To give Alice’s knowledge to a paperclip maximizer, I ask what a paperclip maximizer wants that Alice can help with. Perhaps Alice knows the location of a steel mine that Clippy doesn’t.

When she can outperform Clippy given the same resources, she knows something Clippy doesn’t.

To train a system to “extract” knowledge from Alice and “give” it to Clippy, I need to modify Clippy to do as well in those environments. Then Clippy will “know” what Alice knows.

How do I modify Clippy? I don’t know. So let’s first brute force the heck out of it. Make every physically possible alteration to Clippy, and run each in all possible environments. All those who do as well as Clippy in all environments, and also outperform Alice, have “learned” what Alice knows.

I’d bet there’s a more sensible algorithm to run, but I won’t reach for it now.
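A toy sketch of the brute-force search described above, under loud assumptions: agents are modeled as lookup tables from environment to action, the environments, actions, and goal are hypothetical stand-ins, and "outperform Alice" is read as "do at least as well as Alice in every environment". It also assumes Alice can be scored against Clippy's goal at all, which is a substantive assumption rather than something the sketch justifies.

```python
from itertools import product
from typing import Callable, Dict, List

# Hypothetical toy model: an agent is a table from environment to action.
Agent = Dict[str, str]
Goal = Callable[[str, str], float]

ENVIRONMENTS = ["near_mine", "far_from_mine"]
ACTIONS = ["dig", "wander"]


def clippy_goal(env: str, action: str) -> float:
    """Clippy's standards (assumed): steel is only found by digging near the mine."""
    return 1.0 if (env == "near_mine" and action == "dig") else 0.0


def scores(agent: Agent, goal: Goal) -> List[float]:
    """Score an agent in every environment by the given goal."""
    return [goal(env, agent[env]) for env in ENVIRONMENTS]


def brute_force_transfer(clippy: Agent, alice: Agent, goal: Goal) -> List[Agent]:
    """Enumerate every alteration of Clippy and keep those that, in every
    environment, do at least as well as the original Clippy and as Alice."""
    baseline = scores(clippy, goal)
    target = scores(alice, goal)
    kept = []
    for choice in product(ACTIONS, repeat=len(ENVIRONMENTS)):
        candidate = dict(zip(ENVIRONMENTS, choice))
        result = scores(candidate, goal)
        if all(r >= b and r >= t for r, b, t in zip(result, baseline, target)):
            kept.append(candidate)
    return kept


# Alice knows where the mine is; the original Clippy does not.
CLIPPY = {"near_mine": "wander", "far_from_mine": "wander"}
ALICE = {"near_mine": "dig", "far_from_mine": "wander"}
learned = brute_force_transfer(CLIPPY, ALICE, clippy_goal)
```

In this toy setting every surviving alteration digs when near the mine, which is the sense in which it has "learned" what Alice knows. The search is exponential in the number of environments, so it only illustrates the definition.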

===

This was a fun question to answer.

I’m not sure what it would look like to have successfully answered the question, so I can’t tell if I did.

Oli was asking me how to get knowledge from one agent to another yesterday, and my first idea didn’t even have the right type signature, so I wanted to generate another proposal.

I’ll ponder what it would look like to succeed, then I can come back and grade my answer.

Replies from: abramdemski, Charlie Steiner

↑ comment by abramdemski · 2022-03-16T16:38:15.468Z

Your definition requires that we already know how to modify Alice to have Clippy's goals. So your brute force idea for how to modify Clippy to have Alice's knowledge doesn't add very much; it still relies on a magic goal/belief division, so giving a concrete algorithm doesn't really clarify.

Really good to see this kind of response.

Replies from: Benito

↑ comment by Ben Pace (Benito) · 2022-03-16T17:04:45.408Z

Ah, very good point. How interesting…

(If I’d concretely thought of transferring knowledge between a bird and a dog this would have been obvious.)

↑ comment by Charlie Steiner · 2022-03-05T16:39:30.416Z

I like this definition too. You might add some sort of distribution over goals (sort of like Attainable Utility) so that e.g. Alice can know things about things that she doesn't personally care about.

comment by Morpheus · 2022-03-01T18:48:39.635Z

One claim I found very surprising:

To make computationalism well-defined, we need to define what it means for a computation to be instantiated or not. Most of the philosophical arguments against computationalism attempt to render it trivial by showing that according to any reasonable definition, all computations are occurring everywhere at all times, or at least there are far more computations in any complex object than a computationalist wants to admit. I won't be reviewing those arguments here; I personally think they fail if we define computation carefully, but I'm not trying to be super-careful in the present essay.

This sounds very intriguing, as I have encountered this problem "what is computation" in some discussions, but have never seen anything satisfactory so far. I would be very glad for any links to solutions/definitions or resources that might help one to come up with a definition oneself ;).

Replies from: daniel-kokotajlo, abramdemski, jacob-pfau

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-03-02T04:39:22.108Z

I wrote my undergrad thesis on this problem and tentatively concluded it's unsolvable. If you read it and think you have a solution that might satisfy me, I'd love to hear it! Maybe Chalmers (linked by Jacob) solves it, idk.

↑ comment by abramdemski · 2022-03-16T16:52:29.285Z

I'd be happy to chat about it some time (PM me if interested). I don't claim to have a fully worked out solution, though.

comment by Vanessa Kosoy (vanessa-kosoy) · 2022-03-03T07:50:28.660Z

A quick comment after skimming: IBP might be relevant here, because it formalizes computationalism and provides a natural "objective" domain of truth (namely, which computations are "running" and what values they take).

Replies from: abramdemski

↑ comment by abramdemski · 2022-03-16T16:45:29.520Z

Any more detailed thoughts on its relevance? EG, a semi-concrete ELK proposal based on this notion of truth/computationalism? Can identifying-running-computations stand in for direct translation?

Replies from: vanessa-kosoy

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2022-03-17T06:51:10.465Z

The main difficulty is that you still need to translate between the formal language of computations and something humans can understand in practice (which probably means natural language). This is similar to Dialogic RL. So you still need an additional subsystem for making this translation, e.g. AQD. At which point you can ask, why not just apply AQD directly to a pivotal[1] action?

I'm not sure what the answer is. Maybe we should apply AQD directly, or maybe AQD is too weak for pivotal actions but good enough for translation. Or maybe it's not even good enough for translation, in which case it's back to the blueprints. (Similar considerations apply to other options like IDA.)

[1] More precisely, to something "quasipivotal" like "either give me a pivotal action or some improvement on the alignment protocol I'm using right now".

comment by Charlie Steiner · 2022-03-05T16:51:07.836Z

I think arguing against Platonism is a good segue into arguing for pragmatism. We often use the word "knowledge" in different ways in different contexts, and I think that's fine.

When the context is about math we can "know" statements that are theorems of some axioms (given either explicitly or implicitly), but we can also use "know" in other ways, as in "we know P!=NP but we can't prove it."

And when the context is about the world, we can have "knowledge" that's about correspondence between our beliefs and reality. We can even use "knowledge" in a way that lets us know false things, as in "I knew he was dead, until he showed up on my doorstep."

I don't think this directly helps with ELK, but if anything it highlights a way the problem can be extra tricky - you have to somehow understand what "knowledge" the human is asking for.

Replies from: abramdemski

↑ comment by abramdemski · 2022-03-16T16:16:59.402Z

To be pedantic, "pragmatism" in the context of theories of knowledge means "knowledge is whatever the scientific community eventually agrees on" (or something along those lines -- I have not read deeply on it). [A pragmatist approach to ELK would, then, rule out "the predictor's knowledge goes beyond human science" type counterexamples on principle.]

What you're arguing for is more commonly called contextualism. (The standards for "knowledge" depend on context.)

I totally agree with contextualism as a description of linguistic practice, but I think the ELK-relevant question is: what notion of knowledge is relevant to reducing AI risk? (TBC, I don't think the answer to this is immediately obvious; I'm unsure which types of knowledge are most risk-relevant.)

Replies from: Charlie Steiner

↑ comment by Charlie Steiner · 2022-03-17T02:34:06.861Z

Pragmatism's a great word, everyone wants to use it :P But to be specific, I mean more like Rorty (after some Yudkowskian fixes) than Peirce.

Replies from: abramdemski

↑ comment by abramdemski · 2022-03-17T16:50:13.324Z

Fair enough!

comment by TAG · 2022-03-05T18:23:46.292Z

For example, according to this theory, a brain being simulated in great detail on a computer is just as conscious as a biological brain. After all, it has no way to tell that it’s not biological; why wouldn’t it be conscious?

It can have a false belief that it is biological, so it can have a false belief that it is conscious. [*]

If you make a computational, and therefore functional, duplicate of a person who believes they are conscious, as people generally do, the duplicate will report that it is biological and conscious. And its report that it is biological will be false. So why shouldn't its report that it is conscious be false?

[*] For many definitions of "conscious". You might be able to evade the problem by defining consciousness as the ability to have beliefs.

After all, it has no way to tell that it’s not biological; why wouldn’t it be conscious?

Why would it be, if you don't assume computationalism?

To make computationalism well-defined, we need to define what it means for a computation to be instantiated or not. Most of the philosophical arguments against computationalism attempt to render it trivial by showing that according to any reasonable definition, all computations are occurring everywhere at all times, or at least there are far more computations in any complex object than a computationalist wants to admit. I won’t be reviewing those arguments here; I personally think they fail if we define computation carefully, but I’m not trying to be super-careful in the present essay.

Computationalism isn't the null hypothesis. Even if the arguments against it fail, that doesn't make it true.

comment by tailcalled · 2022-03-01T20:34:59.917Z

You say:

Alice understands Bob's perspective by translating them into the shared language of a 3rd-person perspective. If Alice wants to judge the truth of Bob's beliefs, she can translate her beliefs to the 3rd-person perspective as well. Once everything is in the 3rd-person perspective, correspondence becomes simple equality.

But this only covers agreement between Alice and Bob, not that Bob's beliefs correspond to reality.