Pondering computation in the real world

post by Adam Shai (adam-shai) · 2022-10-28T15:57:27.419Z · 13 comments


Epistemic Status: Exploratory. Asking questions and setting up some intuition pumps. Would very much like to make my thoughts on this topic more precise.

Some systems that compute

Adders

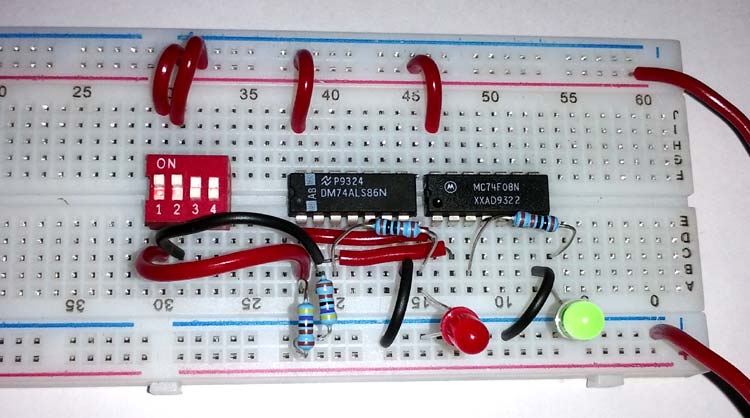

Here's an electrical circuit you can build at home.

It's called a half adder circuit. By flipping the toggles on the left in the red box you set two binary inputs, e.g. you can represent 0+1 or 1+1. In response, the circuit will do a highly structured electromagnetic dance to make the green and red lights in the bottom right turn on or off, in order to represent the output of binary addition. We say: "this circuit computes addition."
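For reference, here is the logic of a half adder. Its two outputs are conventionally a sum bit (the XOR of the inputs) and a carry bit (the AND of the inputs). The sketch below only tabulates that mapping. It assumes nothing about which light in the pictured circuit shows which bit.

```python
def half_adder(a: int, b: int) -> tuple[int, int]:
    """Return (sum_bit, carry_bit) for two input bits."""
    sum_bit = a ^ b    # XOR: 1 when exactly one input is 1
    carry_bit = a & b  # AND: 1 only when both inputs are 1
    return sum_bit, carry_bit


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            s, c = half_adder(a, b)
            # Reading the carry as the 2s place recovers ordinary addition.
            print(f"{a}+{b} -> sum={s}, carry={c}, value={2 * c + s}")
```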

Here is a marble adding machine.

By putting marbles in at certain locations at the top of the machine, you can input binary numbers. A series of see-saw toggles change their positions under the influence of gravity and the marbles. This mechanical dance results in marbles resting in output positions at the bottom of the machine, indicating the result of binary addition. We say: "this machine computes addition."

Different physical systems can be associated with the same computation.
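Here is a concrete version of "different physical systems, same computation": two mechanisms that share nothing but their input/output mapping. The marble model uses a simplified, hypothetical see-saw rule. Each toggle flips when hit, and it passes the marble on only when flipping from 1 to 0. It is not a description of any particular machine. The gate version is a standard ripple-carry adder. Both work modulo 2^width and discard the final overflow.

```python
def drop_marble(toggles: list[int], position: int) -> None:
    """Drop one marble onto the toggle for bit `position` (0 = least significant).

    Simplified see-saw rule (an assumption, not a model of a specific machine):
    a toggle in state 0 flips to 1 and catches the marble; a toggle in state 1
    flips to 0 and passes the marble on to the next, more significant toggle.
    """
    while position < len(toggles):
        if toggles[position] == 0:
            toggles[position] = 1
            return
        toggles[position] = 0
        position += 1
    # A marble falling past the last toggle is an overflow; here it is simply lost.


def marble_add(x: int, y: int, width: int = 8) -> int:
    """Add by dropping one marble per 1-bit of each input, then reading the toggles."""
    toggles = [0] * width
    for number in (x, y):
        for bit in range(width):
            if (number >> bit) & 1:
                drop_marble(toggles, bit)
    return sum(state << bit for bit, state in enumerate(toggles))


def gate_add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition from XOR/AND/OR gates (the 'electrical' style of mechanism)."""
    result, carry = 0, 0
    for bit in range(width):
        a, b = (x >> bit) & 1, (y >> bit) & 1
        result |= (a ^ b ^ carry) << bit
        carry = (a & b) | (carry & (a ^ b))
    return result
```

Checking `marble_add(x, y) == gate_add(x, y)` over many inputs shows they agree. One is a sequential cascade of flipping toggles and the other is a chain of logic gates.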

Integrators

This is Emmy.

Emmy is in high school, and has some math homework. She has to plot the solution, $y(t)$, to the following differential equation, with $y(0) = 0$ and $\frac{dy}{dt}(0) = 15$ m/s:

$$\frac{d^2 y}{dt^2} = -g$$

She takes a pencil and a piece of paper and writes the equation down, and then starts using the rules she learned for symbolic integration. Her first move is to write $\frac{dy}{dt} = -gt + C$, because she knows the rule that the integral of a constant $a$ is $at + C$. She continues in this manner, gets her final result, and plots it by hand. She gets full marks on this question. We say: "Emmy has integrated the differential equation."
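Suppose the homework is the constant-acceleration equation for an object thrown straight up: $\frac{d^2 y}{dt^2} = -g$, with $y(0) = 0$ and an initial velocity of 15 m/s. The 15 m/s is taken from Isaac's throw below. The symbols $y$, $g$, $C_1$ and $C_2$ are chosen here for illustration. Under that assumption, Emmy's symbolic route would run roughly like this:

```latex
\begin{aligned}
\frac{d^2 y}{dt^2} &= -g \\
\frac{dy}{dt} &= \int -g \, dt = -g t + C_1, \qquad C_1 = \frac{dy}{dt}(0) = 15 \\
y(t) &= \int (15 - g t)\, dt = 15 t - \tfrac{1}{2} g t^2 + C_2, \qquad C_2 = y(0) = 0 \\
y(t) &= 15 t - \tfrac{1}{2} g t^2
\end{aligned}
```

With $g \approx 9.8\ \mathrm{m/s^2}$, the apple peaks at $t = 15/g \approx 1.53$ s, at a height of $15^2/(2g) \approx 11.5$ m.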

This is Isaac.

Isaac is in Emmy's class, and got the same homework problem. He gathers an apple, a stopwatch, and a ruler.

He takes the apple, throws it straight up in the air at 15 meters per second and, as best he can, simultaneously starts the stopwatch. The first thing he does is measure how high, in meters, the apple goes, and how long it takes to get to that maximum height. He throws the apple again, this time measuring the height and time slightly offset from the maximum. He does this 1,000 times. On every throw he writes down the time since the throw at which he measured, and the height of the apple at that time.

Isaac takes some graph paper. He labels the x-axis time and the y-axis position, and he plots all 1000 points. He then fits a curve, by hand, to those points, resulting in something close to a parabola. He hands in this graph and gets full marks. We say: "Isaac has integrated the differential equation."
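Isaac's procedure amounts to sampling a physical process and fitting a curve to the samples. Below is a minimal sketch that uses simulated throws in place of his measurements. The value g = 9.8 m/s², the 0.2 m measurement noise, and the function names are all hypothetical. It fits h = a·t² + b·t + c by ordinary least squares, using only the standard library.

```python
import random

G = 9.8    # m/s^2, assumed gravitational acceleration
V0 = 15.0  # m/s, the launch speed from the story


def simulate_throws(n: int = 1000, noise: float = 0.2, seed: int = 0):
    """Hypothetical stand-in for Isaac's 1,000 throws: (time, height) pairs
    from ideal projectile motion plus Gaussian measurement error."""
    rng = random.Random(seed)
    t_flight = 2 * V0 / G
    points = []
    for _ in range(n):
        t = rng.uniform(0.0, t_flight)
        h = V0 * t - 0.5 * G * t * t + rng.gauss(0.0, noise)
        points.append((t, h))
    return points


def fit_parabola(points):
    """Least-squares fit of h = a*t^2 + b*t + c via the 3x3 normal equations."""
    s = [sum(t ** k for t, _ in points) for k in range(5)]     # sums of t^k
    r = [sum(h * t ** k for t, h in points) for k in range(3)]  # sums of h*t^k
    # Augmented matrix for unknowns (c, b, a): sum_j s[i+j] * coef_j = r[i]
    m = [[s[i + j] for j in range(3)] + [r[i]] for i in range(3)]
    # Gaussian elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda row: abs(m[row][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, 3):
            factor = m[row][col] / m[col][col]
            for k in range(col, 4):
                m[row][k] -= factor * m[col][k]
    # Back substitution.
    coef = [0.0, 0.0, 0.0]
    for row in range(2, -1, -1):
        acc = m[row][3] - sum(m[row][k] * coef[k] for k in range(row + 1, 3))
        coef[row] = acc / m[row][row]
    c, b, a = coef
    return a, b, c


if __name__ == "__main__":
    a, b, c = fit_parabola(simulate_throws())
    print(f"h(t) ~ {a:.2f} t^2 + {b:.2f} t + {c:.2f}")
```

If the fit works, `a` should land near -g/2 ≈ -4.9 and `b` near 15. That is the parabola a symbolic solution of constant-acceleration motion gives, reached by an entirely different route.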

Or do we?

Different algorithms can be understood to perform the same computation.

What is the relationship between dynamics in the natural world and computation?

In the first case it seems obvious that Emmy is integrating, but who is integrating in the case of Isaac?

Cat-egorizers

This is Sharon (the baby, not the cat).

She is 1 year old and can say exactly 2 things: "cat" and "not cat." You point to a plant and she says "not cat." You point to a cat and she says "cat." This is how things go 99% of the time but every once in a while you point to her father and she says "cat." She's not perfect but we say: "Sharon knows the category of cats."

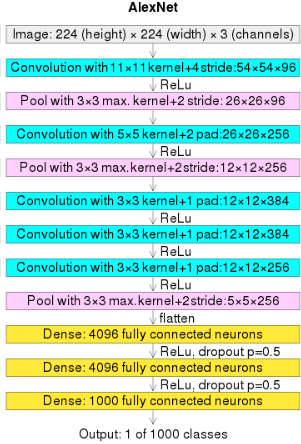

Here is AlexNet.

It was originally designed to tell you which of 1000 different categories an input picture falls into. Suppose you retrain it just to tell you whether the picture is a cat or not a cat. It does so with a single output unit that is closer to 1 if the network determines the input picture is a cat, and closer to 0 otherwise. You put in a picture of a refrigerator and it says "0.01"; you put in a picture of a cat and it says "0.98". Great, it works. Every once in a while you put in a picture of a wooden table and it says "0.95". Overall it has ~99% accuracy and we say: "AlexNet knows the category of cats."

When we say that AlexNet works, we know this because some entity external to AlexNet gives semantic meaning to the output node. Is there anything purely inside of AlexNet that can tell us that 1 in the output node means cat and that 0 means not cat? Or is the computation in AlexNet only defined with reference to some external observer that does some extra work to make sense of the inputs and the outputs?
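To make the question concrete, consider the sketch below. It uses a hypothetical sigmoid output unit and a hand-written decoding step, not AlexNet's actual code. The network only ever produces a number, and the word attached to that number lives in a lookup table supplied from outside.

```python
import math


def output_unit(logit: float) -> float:
    """A single sigmoid output unit: squashes a real-valued score into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-logit))


def decode(activation: float, labels: tuple[str, str], threshold: float = 0.5) -> str:
    """The observer's step: map a number to a word. Nothing in the network fixes `labels`."""
    return labels[1] if activation >= threshold else labels[0]


activation = output_unit(3.9)                  # hypothetical logit; sigmoid gives about 0.98
print(decode(activation, ("not cat", "cat")))  # prints "cat"
print(decode(activation, ("cat", "not cat")))  # same activation, prints "not cat"
```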

What is the analogous question in Sharon?

Thinkers

Adam is meditating and becomes lost in thought. He imagines an elephant dancing in a top hat, singing a song from the newest Taylor Swift album. Upon realizing he is lost in thought, a series of other thoughts pop into his head: This is likely the first time such a thought has been thought, in the history of life. Neuroscientists say that the brain computes. That sounds right to me. This thought must be the result of a computation. Thoughts, including this one, arise from the recurrent dynamics inside of my brain. But all of physics is also understood as dynamical systems, and many systems have recurrence. Does a stream have thoughts? If computation underlies this thought, then what are the inputs? What are the outputs? The sensory inputs and behavioral outputs of my body seem to not be relevant to this computation. What observer is assigning states of my brain to certain input or output states? I'm sitting here, not moving, with my eyes closed, and there's not much sound in this room.

Where is(n't) the computation?

We say that both the electrical adder and the mechanical adder compute addition, so clearly the computation is not just the dynamical equations that govern these systems themselves.

Maybe computation is a function of those dynamics? And if we knew that function we could input into it the circuit equations for the electrical half adder, or the Newtonian dynamics equations associated with the wooden adder, and we would get the same result each time: addition?

But imagine you switched your labels for the red and green LEDs in the electrical half adder. Nothing about the physical system changes, and yet we are now computing some function that isn't binary addition (e.g. now 1+1 would read as 1, because the light that signals the carry is read as the sum bit). The computation can't be just a function of the dynamics then.
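Here is a small sketch of the relabeling. It assumes, as in a standard half adder, that one LED shows the sum bit and the other shows the carry bit. The post does not say which physical LED is which, so the `read` helper below is hypothetical. The circuit function never changes; only the observer's decoding does.

```python
def half_adder(a: int, b: int) -> tuple[int, int]:
    return a ^ b, a & b  # (sum light, carry light)


def read(lights: tuple[int, int], swapped: bool) -> int:
    """Observer's decoding of the two lights into a number.

    Normally the first light is the 1s place and the second the 2s place;
    `swapped` models switching which LED we call 'sum' and which 'carry'.
    """
    ones, twos = lights
    if swapped:
        ones, twos = twos, ones
    return 2 * twos + ones


for a in (0, 1):
    for b in (0, 1):
        lights = half_adder(a, b)
        print(a, b, read(lights, swapped=False), read(lights, swapped=True))
```

Under the swapped reading, the same physical states give 0+1 as 2 and 1+1 as 1. That is a perfectly well-defined function, just not addition.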

Seems like there's something going on with the inputs and the outputs. Ultimately some observer decides how to map specific physical states of the system to particular inputs and outputs. So maybe the computation is a function of both the dynamics and an observer.

Why does the nature of computation matter?

At the moment my feelings on this question are still being formulated, but I can try to point to why I think the nature of computation is a very important open area of study. Making a list of inputs and the outputs they map to is not a sufficient explanation of how a system works if you want to be able to control such a system, or build new systems that have certain properties. For that it's useful to "open the black box" and really get a "mechanistic" understanding of the inner workings of a system. This is, I think, the general framework that interpretability research takes.

But "mechanism" is not a precise enough concept for what I think is needed. Neuroscientists spend an incredible amount of energy characterizing and manipulating representations and trying to understand the underlying physical causes of certain brain states and behavioral states. But something still seems missing. To me, that missing thing feels like a formal framework for how and why these mechanisms and representations actually relate to informational states. It is largely just taken for granted that they do.

Understanding the nature of computation could lead to a better understanding of computation in systems outside of brains and artificial neural networks. For instance, it could be that phenomena in society (economics, political decision making, etc.) can be better understood from this point of view, even if precise predictions remain impossible.

Ultimately I envision a framework which is able to really answer questions about the following:

- What is the correct mathematical language in which we should formalize natural computation? (Turing Machines are not the answer)

- What is the relationship between a physical implementation of some computation and the computation?

- Can we come up with an algorithm wherein you input a physical system and out comes a formal description of the computation it is doing?

- In what ways are the computations that modern artificial neural networks perform different from, and the same as, those of naturally intelligent systems (brains)?

- What is the role of interaction and observation in computation? This touches on topics like distributed intelligence.

- What is the relationship between computation and world-modeling?

Some external resources for this topic

The Calculi of Emergence: Computation, Dynamics, and Induction by James Crutchfield

Does a Rock Implement Every Finite-State Automaton? by David Chalmers

Computation in Physical Systems from the Stanford Encyclopedia of Philosophy

13 comments


comment by Slider · 2022-10-29T15:38:43.563Z

Somebody who insists that 1 should be coded as a red light and 0 as a green light would claim that the original circuit is not an adder. A view on the topic that recognises such number-color associations as arbitrary, and therefore not the turning points of the argument, would classify a red-adder and a green-adder as doing the same calculation.

What desirability condition do Turing machines fail that keeps them from being the correct mathematical representation of natural computation? Turing machines are very good at describing symbol dances, and the dances don't need to have meanings to be described. It seems to me that if somebody desires something beyond that, then the additional bit can't really be about the computation. If you are not satisfied that the word "cat" is made out of c, a and t, then you are asking about felines or something, rather than anything about words. In the same way, if you have "something that brrrrs this way" and zigging that way or zagging that way is not a thing that satisfies you, then you are asking about something other than sequences of operations.

↑ comment by dr_s · 2023-09-17T05:12:29.817Z

However, such a person would still have to admit that the mutual information between input and output bits is high. There is something inherently "computery" about that circuit even if you refuse its specific interpretation. It just computes a different function.
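dr_s's point can be checked numerically. The sketch below assumes the four input combinations are equally likely and computes the mutual information between the input pair and the pair of output lights. Relabeling the lights leaves the value unchanged, because mutual information does not depend on what the outcomes are called.

```python
from collections import Counter
from itertools import product
from math import log2


def half_adder(a, b):
    return a ^ b, a & b


def mutual_information(pairs):
    """I(X;Y) in bits for an empirical joint distribution given as (x, y) samples."""
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    return sum(
        (c / n) * log2((c / n) / ((px[x] / n) * (py[y] / n)))
        for (x, y), c in joint.items()
    )


inputs = list(product((0, 1), repeat=2))                  # all four inputs, equally likely
adder = [(i, half_adder(*i)) for i in inputs]
relabeled = [(i, half_adder(*i)[::-1]) for i in inputs]   # swap the two lights
print(mutual_information(adder), mutual_information(relabeled))  # prints 1.5 1.5
```

Because the circuit is deterministic, the 1.5 bits are just the entropy of the output lights.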

↑ comment by Adam Shai (adam-shai) · 2022-10-29T16:42:02.367Z

Great question. Hopefully soon I'll write a longer post on exactly this topic, but for now you can look at this recent post, Beyond Kolmogorov and Shannon, by me and Alexander Gietelink Oldenziel that tries to explain what is lacking in Turing Machines. This intuition is also found in James Crutchfield's work, e.g. here, and in the article by him in the external resources section in this post.

In short, the extra desirability condition is a mechanism to generate random bits. I think this is fundamental to computation "in the real world" (i.e., brains and other natural systems) because of the central role uncertainty plays in the functioning of such systems. But a complete explanation for why that is the case will have to wait for a longer post.

Admittedly, I am overloading the word "computation" here, since there is a very well developed mathematical framework for computation in terms of Turing Machines. But I can't think of a better one at the moment, and I do think this more general sense of computation is what many biologists are pointing towards (neuroscientists in particular) when they use the word. Maybe I should call it "natural computation."

↑ comment by Vitor · 2022-10-30T06:58:49.502Z

Probabilistic Turing machines already exist. They are a standard extension of TMs that transition from state to state with arbitrary probabilities (not just 0 and 1) and can thus easily generate random bits.

Further, we know that deterministic TMs can do anything that probabilistic TMs can, albeit potentially less efficiently.
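Vitor's second point can be illustrated with a toy example. The sketch is hypothetical: a small randomized sampler stands in for a probabilistic TM. A deterministic procedure recovers the exact acceptance probability by running the machine on every possible sequence of coin flips. Its cost grows as 2 to the number of coins, which is one simple, exponential-time instance of the "less efficiently" caveat.

```python
from fractions import Fraction
from itertools import product


def randomized_machine(x: str, coins: tuple[int, ...]) -> bool:
    """Hypothetical probabilistic procedure: use pairs of coin flips to pick
    positions of x (so len(x) must be 4) and accept if most picks are '1'."""
    picks = [2 * coins[i] + coins[i + 1] for i in range(0, len(coins), 2)]
    ones = sum(x[p] == "1" for p in picks)
    return 2 * ones > len(picks)


def acceptance_probability(machine, x: str, n_coins: int) -> Fraction:
    """Deterministic simulation: run the machine on all 2**n_coins coin
    sequences and count acceptances. Exact, but exponentially slower."""
    accepted = sum(machine(x, coins) for coins in product((0, 1), repeat=n_coins))
    return Fraction(accepted, 2 ** n_coins)


print(acceptance_probability(randomized_machine, "1101", 6))  # prints 27/32
```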

I suspect you're not really rejecting TMs per se, but rather the entire theory of computability and complexity that is standard in CS, more specifically the Church-Turing thesis which is the foundation of the whole thing.

↑ comment by Slider · 2022-10-29T19:53:04.509Z

It strikes me that a natural way for Turing machines to handle uncertainty would be to not assume that the input tape starts empty, but to allow it to be anything (similar to an uninitialised variable).

Then you will probably get results like two machines counting as non-equivalent because of rules that never trigger on the all-0 start tape.

Turing machines are not inherently behaviorist about computation. It could be argued that the core of the Turing machine, the symbol replacement table, is inherently verb-like and not substantive-like. And the machine is not a description of a bunch of steps but of one step being applied many times. I get how one could approach the thing by thinking that a machine is a series of states of the tape, and conclude that machines are single-narrative focused. But a specification of a machine does not need to refer to a single tape state. The transition table alone, without any reference to data, is sufficient. Sure, it makes up a canonical story when started on the trivial data of the empty tape. But this is not definitionally essential. It is not like cellular automata get confused about what to do if their field gets noise mid-execution (the rules of the Game of Life leave no choice in how the life responds to a pixel that is miraculously created by outside forces).
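Slider's point that the transition table alone specifies the machine can be made concrete. The sketch below uses a hypothetical binary-increment machine that does not come from the thread. The table is applied one step at a time to whatever tape it is handed, with no reference to a canonical starting tape.

```python
# Transition table for a binary-increment machine; the head starts on the
# least significant (rightmost) bit. Keys: (state, symbol read).
# Values: (symbol to write, head move, next state).
TABLE = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),    # absorb the carry
    ("carry", "_"): ("1", 0, "halt"),    # ran off the left end: new leading 1
}


def run(table, tape: dict[int, str], head: int, state: str = "carry", max_steps: int = 1000):
    """Apply the same one-step rule repeatedly to whatever tape it is given."""
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = tape.get(head, "_")  # unwritten cells read as blank
        write, move, state = table[(state, symbol)]
        tape[head] = write
        head += move
    return tape


def tape_from(bits: str) -> dict[int, str]:
    return dict(enumerate(bits))


def tape_to(tape: dict[int, str]) -> str:
    return "".join(tape[i] for i in sorted(tape)).strip("_")


print(tape_to(run(TABLE, tape_from("1011"), head=3)))  # prints 1100
```

Started on a tape of all 0s, only the second rule ever fires. A machine with different first and third rules would behave identically from that start, which is the situation Slider describes.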

I get an intuitive sense that this new concept deals with functions typically paying rent: should a detail of the function be different, some kind of ill fate would befall the system. That turns into an approach where details of the function can be connected to the biological niche the entity is in and the incentive structure the niche pushes on it. I do think it is ultimately going to be connected to the fact that evolution is a blind force. While it makes fancy stuff, the stuff doesn't actually have a design. So sure, we can probably sort out the load-bearing parts from the non-load-bearing parts, but I don't think it ever really gets to the "this bit has this purpose" level.

↑ comment by Noosphere89 (sharmake-farah) · 2022-10-29T20:08:21.551Z

More importantly, Turing machines can emulate all machines that aren't hypercomputers, so they can emulate stochastic or random Turing machines.

comment by aysja · 2022-10-30T22:58:35.394Z

This is an excellent post! Thank you for sharing your thoughts! I too am very curious about many of these questions, although I’m also at a half-baked stage with a lot of it (I’d also love to have a better footing here!). But in any case, here are some thoughts (in no particular order).

- I’ve been interested in the questions you pose around AlexNet for a while, in particular, how much computation is a function of observers assigning values versus an intrinsic property of the thing itself. And I agree this starts getting pretty weird and interesting when you consider that minds themselves are doing computations. Like, it seems pretty clear that if I write down a truth table on paper, it is not the paper or ink that “did” the computation, it was me. Likewise, if I take two atoms in a rock, call one 0, the other 1, then take an atom at a future state, call it 0, it seems clear that the computation “AND” happened entirely in my head and I projected it onto the rock (although I do think it’s pretty tricky to say why this is, exactly!). But what if I rain marbles down on a circle inscribed in a square (the ratio of which “calculates” pi)? In this case it feels a bit less arbitrary: the circle and the square chalked on the ground are “meaningfully” related to the computation, although I am still doing the bulk of the work (taking the ratio). This feels more like a middle ground to me. In any case, I do think there is a spectrum between “completely intrinsic to the thing” and “agents projecting their own computation on the thing,” and that this is largely ignored but incredibly interesting and instructive for how computation actually works.
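aysja's marble example is the classic Monte Carlo estimate of pi. The sketch below assumes uniformly random landing points, and its function names are chosen for illustration. The "physical" step only counts hits. Turning the count into pi is a separate step, which uses the observer's knowledge that the circle-to-square area ratio is pi/4.

```python
import random


def drop_marbles(n: int, seed: int = 0) -> int:
    """Simulate n marbles landing uniformly in a 2x2 square around a unit circle;
    return how many land inside the circle. This is the 'physical' part."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(n):
        x, y = rng.uniform(-1, 1), rng.uniform(-1, 1)
        if x * x + y * y <= 1.0:
            inside += 1
    return inside


def observer_estimate(inside: int, n: int) -> float:
    """The observer's step: the area ratio circle/square is pi/4, so scale by 4."""
    return 4 * inside / n


if __name__ == "__main__":
    n = 100_000
    print(observer_estimate(drop_marbles(n), n))
```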

- Relatedly, people often roll their eyes at the Chinese Room thought experiment (and rightly so, because I think the conclusions people draw about it with respect to AI are often misguided). But I also think that it’s pointing to a deep confusion about computation that I also share. The standard take is that, okay maybe the person doesn’t understand Chinese but the “room does,” because all of the information is contained inside of it. I’m not really convinced by this. For the same reason that the truth table isn’t “doing” the computation of AND, I don’t think that the book that contains the translation is doing any meaningful computation, and I don’t think the human inside understands Chinese, either (in the colloquial sense we mean when we don’t understand a foreign language). There was certainly understanding when that book was generated, but all of that generative tech is absent in the room. So I think Searle is pointing at something interesting and informative here and I tentatively agree that the room does not understand Chinese (although I disagree with the conclusion that this means AI could never understand anything).

- I do agree that input/output mappings are not a good mechanistic understanding of computation, but I would also guess that they are the right level of abstraction for grouping different physical systems. E.g., the main similarity between the mechanical and electrical adders is that, upon receiving 1 and 1, they output 2, and so on.

- I get confused about why minds have special status, e.g. “computation is a function of both the dynamics and an observer.” On the one hand, it feels intuitive that they are special, and I get what you mean. And on the other hand, minds are also just physical systems. What is it about a mind that makes something a computation when it wasn’t otherwise? It’s something about how much of the computation stems from the mind versus the device? And how “entangled” the mind is with the computation, e.g., whether states in the non-mind system are correlated with states in the mind? Which suggests that the thing is not exactly “mind-ness” but how “coupled” various physical states are to each other.

- I also think that the adder systems are far less (or maybe zero) observer-dependent computations, relative to the rock or truth table, in the sense that there is a series of physically coupled states (within the system itself) which reliably turn the same input into the same output. Like, there is this step of a person saying what the inputs “represent,” but the person’s mind, once the device is built, does not need to be entangled with the states in the machine in order for it to do the computation. The representation step seems important, but it is less about the computation itself than about “how that computation is used.” Like, I think that when we look at isolated cases of computation (like the adders), this part feels weird because computation (as it normally plays out) is part of an interconnected system which “uses” the outputs of various computations to “do” something (like in a standard computer, the output of addition might be the input to the forward-prop in a neural net or whatever). And a “naked” computation is strange, because usually the “sense making” aspect of a computation is in how it’s used, not the steps needed to produce it. To be clear, I think the representation step is interesting (and notably the thing lacking in the Chinese Room), and I do think that it’s part of how computation is used in real-world contexts, but I still want to say that the adder “adds” whether we are there to represent the inputs as numbers or not. Maybe similar to how I want to say that the Chinese room “translates Chinese” whether or not anyone is there to do the “semantic work” of understanding what that means (which, in my view, is not a spooky thing, but rather something-something “a set of interconnected computations”).

- Maybe a good way to think of these things is to ask “how much mind entanglement do you need at various parts of this process in order for the computation to take place?”

- My guess is that computation is fundamentally something like “state reliably changes in response to other state.” Where both words (“state” and “reliably”) are a bit tricky to fully pin down and there are a bunch of thorny philosophical issues. For instance, “reliably” means something like “if I input the first state a bunch of times, the next state almost always follows”, but if-thens are hard to reconcile with deterministic world views. And “state” is typically referring to something abstract, e.g., we say “if the protein changes to this shape, then this gene is expressed,” but what exactly do we mean by “shape”? There is not a single, precise shape that works, there’s a whole class consisting of slight perturbations or different molecular constituents, etc. that will “get the job done,” i.e., express the gene. And without having a good foundation of what we mean by an abstraction, I think talking about natural computation can be philosophically difficult.

- “Is there anything purely inside of AlexNet that can tell us that 1 in the output node means cat and that 0 means not cat?” I’m not sure exactly what you’re gesturing at with this, but my guess is that there is. I’m thinking of interpretability tools that show that cat features activate when shown a picture of a cat, and that these states reliably produce a “1” rather than a “0.” But maybe you’re talking about something else or have more uncertainty about it than me?

- I agree that thinking is extremely wild! ‘Nough said.

↑ comment by Adam Shai (adam-shai) · 2023-09-16T22:07:55.108Z

Thanks so much for this comment (and sorry for taking ~1 year to respond!!). I really liked everything you said.

For 1 and 2, I agree with everything and don't have anything to add.

3. I agree that there is something meaningful about the input/output mapping, but it is not everything. It would be great to have a full theory of exactly what the difference is: which structure counts as interesting internal computation (not a great descriptor of what I mean, but I can't think of anything better right now) versus input/output computation.

4. I also think a great goal would be in generalizing and formalizing what an "observer" of a computation is. I have a few ideas but they are pretty half-baked right now.

5. That is an interesting point. I think it's fair. I do want to be careful to make sure that any "disagreements" are substantial and not just semantic squabbling here. I like your distinction between representation work and computational work. The idea of using vs. performing a computation is also interesting. At the end of the day I am always left craving some formalism where you could really see the nature of these distinctions.

6. Sounds like a good idea!

7. Agreed on all counts.

8. I was trying to ask whether there is anything that tells us the output node is semantically meaningful without reference to, e.g., the input images of cats, or even knowledge of the input data distribution. Interpretability work, both in artificial neural networks and, more traditionally, in neuroscience, always uses knowledge of input distributions or even input identity to correlate the activity of neurons with the input, and in that way assigns semantics to neural activity (e.g., recently, Othello board states, or in neuroscience, Jennifer Aniston neurons or orientation-tuned neurons). But when I'm sitting with my eyes closed and just thinking, there's no homunculus with access to the input distributions on my retina that can correlate some activity pattern with "cat." So how can the neural states in my brain "represent" (or embody, or whatever word you want to use) the semantic information of cat, without this process of correlating with some ground-truth data? Where does "cat" come from when there's no cat there in the activity?!

9. SO WILD

comment by Vanessa Kosoy (vanessa-kosoy) · 2022-10-29T16:48:41.371Z

I only skimmed the post, but infra-Bayesian physicalism seems relevant here, since IBP provides a rigorous notion of what it means for the universe to execute particular computations.

comment by tailcalled · 2022-10-29T14:45:06.723Z

I wonder if computation makes the most sense in the context of a compiler. Like for the adders, you start with an interest in adding some numbers, and then you compile that into a logic circuit using an engineer's understanding of addition, and then you further compile this logic circuit into either an electrical circuit or a marble machine, which then performs the originally intended computation. When you have a compiler, you end up with clear answers to which algorithm is being computed (whatever is upstream of the compiler) and which system is doing the computation (whatever is downstream of the compiler).
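tailcalled's two-stage picture can be sketched in a few lines. Below, a hypothetical "compiler" turns the abstract spec (add two n-bit numbers) into a gate netlist, and one software back end evaluates that netlist. An electrical or marble back end would be a further lowering step that is not modelled here. On this view, what the netlist computes is settled upstream, by what it was compiled from.

```python
from typing import Callable

# A netlist is a list of (output_wire, gate, input_wire_1, input_wire_2).
Netlist = list[tuple[str, str, str, str]]


def compile_adder(width: int) -> Netlist:
    """'Compile' n-bit addition into a ripple-carry netlist of XOR/AND/OR gates."""
    net: Netlist = []
    carry = None
    for i in range(width):
        a, b = f"a{i}", f"b{i}"
        if carry is None:  # bit 0 is a half adder
            net += [(f"s{i}", "XOR", a, b), (f"c{i}", "AND", a, b)]
        else:  # later bits are full adders
            net += [
                (f"p{i}", "XOR", a, b),
                (f"s{i}", "XOR", f"p{i}", carry),
                (f"g{i}", "AND", a, b),
                (f"t{i}", "AND", f"p{i}", carry),
                (f"c{i}", "OR", f"g{i}", f"t{i}"),
            ]
        carry = f"c{i}"
    return net


GATES: dict[str, Callable[[int, int], int]] = {
    "XOR": lambda x, y: x ^ y,
    "AND": lambda x, y: x & y,
    "OR": lambda x, y: x | y,
}


def run_netlist(net: Netlist, x: int, y: int, width: int) -> int:
    """One possible back end: evaluate the gates in order on concrete bits."""
    wires = {}
    for i in range(width):
        wires[f"a{i}"], wires[f"b{i}"] = (x >> i) & 1, (y >> i) & 1
    for out, gate, in1, in2 in net:
        wires[out] = GATES[gate](wires[in1], wires[in2])
    total = sum(wires[f"s{i}"] << i for i in range(width))
    return total + (wires[f"c{width - 1}"] << width)  # include the final carry-out


print(run_netlist(compile_adder(4), 9, 7, 4))  # prints 16
```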

↑ comment by Adam Shai (adam-shai) · 2022-10-29T16:32:44.490Z

Embarrassingly, I've never actually thought of how compilers fit into this set of questions/thoughts. Very interesting, I'll definitely give it some thought now. I like the idea of a compiler as some kind of overseer/organizer of computation.

comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2022-10-29T09:40:16.961Z

Excellent post Adam!

I presume it is a canonical joke but I had to chuckle at the cat-gorizers =D

If you think about programmes as lambda terms then they have free variables in which you can plug values. Flipping the red and the green light or 0 with 1 becomes less mysterious from this point of view.

Taking a fully applied category/compositionality point of view we could be looking at "open systems" (so systems with input and output ports) and see how they compose.

↑ comment by Adam Shai (adam-shai) · 2022-10-29T16:30:48.949Z

Thanks! Sounds like I need to have a better understanding of lambda calculus, and as always, category theory :)