On urgency, priority and collective reaction to AI-Risks: Part I

post by Denreik (denreik) · 2023-04-16T19:14:19.318Z · LW · GW · 15 comments


If you became convinced right now that the survival and future of everyone you know, yourself included, depends on what you do immediately after reading this, you would not hesitate to put your daily tasks on hold to study and meditate on this text as if it were a live message broadcast from an alien civilization. Afterwards you would not hesitate to drastically alter your life and begin doing whatever is in your power to steer away from the disaster. That is, IF you knew of such a circumstance with any significant degree of certainty. Simply being told of it is not enough, because (simply put) the world is full of uncertainties, people are mistaken all the time, and we are not wired to make radical life-altering decisions unless we perceive a certain and undeniable danger to our survival or well-being. The decisions of an individual are limited by their degree of certainty about the circumstances. Human priorities depend on a sense of urgency formed by our understanding of the situation, and some situations are both difficult to understand and take time to even explain. If you do not understand the criticality of this situation, then it becomes acceptable to you to postpone all this “super important sounding stuff” until later, perhaps to see if it still seems urgent some other time. Surely it can’t be that important if it’s not headline news discussed on every channel, or directed at you in a live conversation requiring an immediate response? Maybe if it’s important enough it will find some other channel through which to hold your attention? Just like that, you would stop paying attention here and get everyone killed by misjudging the criticality of an urgent threat.

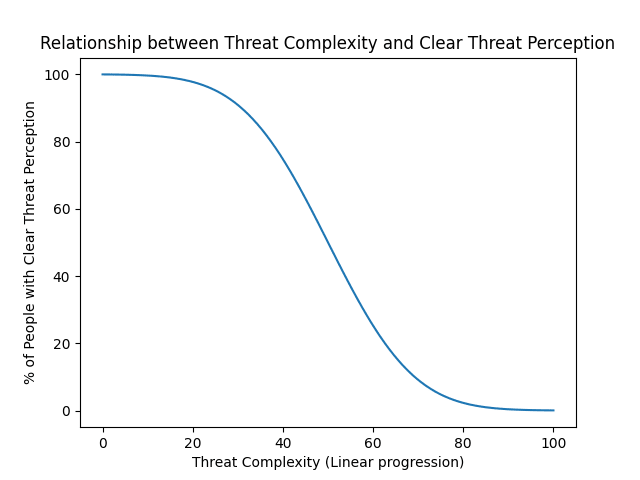

Before I begin with the whats and whys, it needs to be stated that when a threat is complex, perceiving it and talking about it becomes difficult, which lowers certainty about the threat’s criticality. The more certain an individual is of some circumstance, the more ready they are to take sizable and appropriate action regarding it. The more complex and abstract the danger, the less likely we are to perceive it clearly without putting a significant amount of time and effort into grasping the situation. The more complex the danger, the fewer people are going to perceive it. The easier a threat is to ignore and the harder it is to understand, the fewer people will end up reacting to it in any way at all. To make this easier to understand I’ve made a simplified graph (Fig.1)[1], with some assumptions described in the footnotes.

Example Fig.1[1]: a person is likely to understand the immediate threat to their life if they come across a roaring bear on the road, but they may not understand the extreme peril if a child shows them a strange, slightly glowing metallic capsule found on the side of the road: a radioactive capsule is a more complex threat to perceive than a bear. More complex threats require more foreknowledge to be perceived. The more elaborate and indirect a threat, the more likely it is to go unnoticed. In some situations the threat is complex enough that only a few individuals can clearly perceive its danger. In such a situation conformity bias and normalcy bias are also in full effect, and many will ignore or even ridicule all warnings and discussion. In such a situation it is important to first reliably grasp the situation for yourself.

What Fig.1 means is that any existential threat beyond a certain level of complexity inevitably becomes a matter of debate, doubt and politics, which is what we have been seeing with, for example, the climate crisis, biodiversity loss, the pandemic and nuclear weapons. The current situation with AI safety is that the threat is too complex for most people to understand: for most people it’s an out-there threat on par with an alien invasion rather than something comparable to the emerging danger of an international conflict escalating into a nuclear war. At this stage, regardless of the degree of actual threat that artificial intelligence poses, the vast majority of people will ignore it due to the complexity factor alone. Think about that! REGARDLESS OF THE DEGREE OF ACTUAL THREAT THAT ARTIFICIAL INTELLIGENCE POSES, THE VAST MAJORITY OF PEOPLE WILL IGNORE IT DUE TO THE COMPLEXITY FACTOR ALONE!

The first issue is that we need to reliably determine the actual degree of AI threat that we’re facing and gain certainty for ourselves. The second issue is how to convey that certainty to others. I propose an approach to tackle both issues at once. Assume that you’re an individual who knows nothing about deep learning, machine learning, data science or artificial intelligence, and then some group of experts comes forward and proclaims that we are facing a very real existential threat that needs immediate international action from governments worldwide. If you were a scientist not far removed from the experts you could read the details and study to catch up on the issue personally, but in our example you’re not an expert and it could take years to catch up: that’s time you do not have and effort you can’t make. Furthermore, some other clever people disagree with these experts. What is your optimal course of action?

Luckily for us all there’s another way, and the same methodology should be used by everyone, regardless of whether they’re already experts in the matter in question. The short, simplified answer is “applied diversified epistemic reasoning”: find highly reliable experts in the field with good track records who are not too closely related to one another; get their views and opinions directly from them, or as closely and recently as possible, and only via reliable sources; then cross-check what all of these experts are saying, trust their expertise, and form or correct your own view based on what they think at the moment. Even better if you can engage with experts directly and take into account the pressures they might be facing when speaking publicly. This way you should be able to get a reasonably good estimate of the situation without needing to be an expert yourself. Also, reasonable people would not argue with something that most experts clearly agree upon, which makes it doubly important to reliably document what the recognized experts really think at the moment. However, such documentation is a lot of work, so it is important that we make a collective effort here and publish our findings in a way that is simple and easy for everyone to understand. I have been working on one such effort, which I intend to release in part II of this post (sometime this month, I assume). I hope that you will consider joining or contributing to my effort.

My methodology is qualitative and quite straightforward: I asked ChatGPT (GPT-4) for a list of 30 AI experts active in the field (as of September 2021) and went to find on the Internet what they are saying and doing in 2023. I cross out experts who have not said anything on the matter or are no longer active, and add a new expert to the list in their stead. Essentially I go through the list of experts and try to find out what they think and agree upon, assess their reliability, write notes and save the sources for later reference. Results, findings and outcome will be released in part II of this post. Meanwhile I've included other similarly focused and significant existing publications below. I will also add more as a coordinated effort of this post.

AI Impacts survey of AI experts from 2022, according to which:

- ~~Median 10% believe that~~ 48% of those who answered assigned ~~a median~~ at least a 10% chance[2] ~~that~~ to human inability to control future advanced AI-systems ~~would cause~~ leading to an extinction or similarly permanent and severe disempowerment of the human species.
- 69% of respondents think society should prioritize AI safety research more or much more.

List of people who signed the petition to pause development of “AI systems more powerful than GPT-4”. I’ve also checked each person on my list of experts and marked whether or not they signed.

This is all I have for now. Any suggestions, critique and help will be appreciated! It took me a significant amount of time to write and edit this post, partially because I discarded some 250% of what I wrote: I started over at least three times after writing thousands of words and ended up summarizing many paragraphs into just a few sentences. That is to say, there are many important things I want to and will write about in the near future, but this one post in its current form took priority over everything else. Thank you for taking the time to read through!

[1] Relationship between Threat Complexity and Clear Threat Perception graph: I assume that the human ability to perceive threats generally follows a bell curve while a threat's complexity increases linearly. In reality complexity likely follows an exponential curve and there are other considerable factors, but for the sake of clarity I’ve decided to leave the graph as a simplified demonstration.

[2] Added: As Vladimir_Nesov pointed out: "A survey was conducted in the summer of 2022 of approximately 4271 researchers who published at the conferences NeurIPS or ICML in 2021, and received 738 responses, some partial, for a 17% response rate. When asked about impact of high-level machine intelligence in the long run, 48% of respondents gave at least 10% chance of an extremely bad outcome (e.g. human extinction)."

15 comments

Comments sorted by top scores.

comment by laserfiche · 2023-04-19T13:28:41.725Z · LW(p) · GW(p)

Denreik, I think this is a quality post and I know you spent a lot of time on it. I found your paragraphs on threat complexity enlightening - it is in hindsight an obvious point that a sufficiently complex or subtle threat will be ignored by most people regardless of its certainty, and that is an important feature of the current situation.

↑ comment by Denreik (denreik) · 2023-04-19T16:51:38.413Z · LW(p) · GW(p)

Thank you. I set out to write something clear and easy to read that could serve as a good cornerstone for decisive actions later on, and I still think I accomplished that fairly well.

comment by Vladimir_Nesov · 2023-04-16T19:36:34.770Z · LW(p) · GW(p)

Median 10% believe that

Please be kind to the details of the AI Impacts survey result. It might be the most legible short argument to start paying attention we have.

↑ comment by Denreik (denreik) · 2023-04-16T19:51:22.748Z · LW(p) · GW(p)

Thank you for pointing that out. I've added the clarification and your comment in the footnotes.

↑ comment by Vladimir_Nesov · 2023-04-16T20:09:41.933Z · LW(p) · GW(p)

(You've clipped my comment a bit, making the quoted part ungrammatical.) Anyway, in your post, "at least 10% chance of an extremely bad outcome" became "Median 10% believe that [...] would cause an extinction".[1] The survey doesn't show that many people "believe that", only that many people assign at-least-10% to that, which is not normally described by saying that they "believe that".

Some of the people who assign the at-least-10% to that outcome are in the "median 10%", if we attempt to interpret that as stated to be the 10% of people around the median on the probability assigned to an extremely bad outcome. But it's unclear why we are talking about those 10% of respondents, so my guess is that it's a mangled version of the at-least-10% mentioned in another summary. At 48% of respondents assigning at-least-10% to the outcome, the 10% of "an extremely bad outcome (e.g. human extinction)" is the almost-median response. The actual median response is 5%.

[1] Edit: It's since been changed to say "48% of those who answered evaluated a median 10% chance that [...] would cause an extinction", which is different but still not even wrong. They estimate at-least-10% chance, and I don't know what "estimating a median 10% chance" is supposed to mean.

↑ comment by Denreik (denreik) · 2023-04-16T20:22:59.111Z · LW(p) · GW(p)

Yes, I am noobing and fumbling around a bit. I made the first edit hastily and immediately corrected it before I had seen your reply. You are of course correct. I added a strike-through to show where my error lay.

↑ comment by Vladimir_Nesov · 2023-04-16T20:32:55.733Z · LW(p) · GW(p)

There's still the grammar-breaking clipping of my comment ("in the summer of 2022 of approximately ...") and the nonsensical use of "median" in "48% [...] assigned a median 10% chance". I've ninja-edited some things above that should make this clearer.

↑ comment by Denreik (denreik) · 2023-04-16T20:48:07.084Z · LW(p) · GW(p)

I've added your comment in full and another strike-through to change "a median 10% chance" into "at least a 10% chance". As you pointed out aiimpacts.org says "Median 10%", which seems like a mistaken notion.

↑ comment by Vladimir_Nesov · 2023-04-16T21:32:45.968Z · LW(p) · GW(p)

I've added your comment in full

Thank you. Not adding it at all would also have been fine, my objection was purely to the corrupted quotation.

As you pointed out aiimpacts.org says "Median 10%", which seems like a mistaken notion.

I didn't point out that aiimpacts.org says "Median 10%", though it does. The sequence of letters "Median 10%" is not a "mistaken notion" when it occurs in a context where it's meaningful, and in that particular context it means "The median respondent gave 10% as the answer".

Note that the question where the median response is 10% is not the question I described in my summary; it's instead the trick question with the "human inability to control" part in it. As a result, the point in your post that became meaningful after all the edits is now weirdly inaccurate. One claim that's more accurate (and talks about the same question I summarized) doesn't have the "human inability to control" part in the question. Another possible claim that's more accurate can say "50%" instead of "48%" (or better yet "median", using an appropriate phrasing), but in fact it's likely more than 50% and requires looking at the data to determine how much.

↑ comment by Denreik (denreik) · 2023-04-16T22:34:32.944Z · LW(p) · GW(p)

Where did you get the "more than 10%" part from?

Right, it says in the results summary that "48% of respondents gave at least 10% chance of an extremely bad outcome".

The survey had two questions. I took a deeper dive into it. The questions were as follows:

1) "What probability do you put on future AI advances causing human extinction or similarly permanent and severe disempowerment of the human species?"

Of those who answered, the median probability was 5%. The summary says:

The median respondent believes the probability that the long-run effect of advanced AI on humanity will be “extremely bad (e.g., human extinction)” is 5%. This is the same as it was in 2016 (though Zhang et al 2022 found 2% in a similar but non-identical question). Many respondents were substantially more concerned: 48% of respondents gave at least 10% chance of an extremely bad outcome. But some much less concerned: 25% put it at 0%.

2) "What probability do you put on human inability to control future advanced AI systems causing human extinction or similarly permanent and severe disempowerment of the human species?"

Of those who answered, the median estimated probability was 10%. The way I interpret this question is that it asks how likely it is that A) humans won't be able to control future advanced AI systems and B) this will cause human extinction or similarly permanent and severe disempowerment of the human species. Obviously it does not make sense for event B to be less likely than events A and B occurring together. The note suggests the representativeness heuristic as an explanation, which could be interpreted as respondents estimating that event A has a higher chance of occurring (than event B on its own) and that it is very likely to lead to event B, or an "extremely bad outcome" as it says in the summary you quoted in your message. Though "similarly permanent and severe disempowerment of the human species" seems somewhat ambiguous.
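Stated as a worked inequality (a sketch using the A and B labels from the paragraph above, not notation taken from the survey itself), for any two events:

$$P(A \cap B) = P(B)\,P(A \mid B) \le P(B), \qquad \text{since } P(A \mid B) \le 1.$$

So a single consistent respondent should give the second question (A and B together) a probability no higher than the one they give the first (B by any route). Note that the two medians come from partly different sets of respondents, so the comparison between 5% and 10% is only suggestive of this inconsistency, not proof of it for any individual.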

↑ comment by Vladimir_Nesov · 2023-04-16T22:50:25.326Z · LW(p) · GW(p)

Where did you get the "more than 10%" -part from?

What "more than 10%" part? This string doesn't occur elsewhere in the comments to this post. Maybe you meant "likely more than 50%"? I wrote:

Another possible claim that's more accurate can say "50%" instead of "48%" (or better yet "median", using an appropriate phrasing), but in fact it's likely more than 50% and requires looking at the data to determine how much.

That's just what the meaning of "median" implies, in this context where people are writing down rounded numbers, so that a nontrivial portion would all say "10%" exactly.
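A minimal sketch of this point, using made-up answers rather than the actual survey data: when many people write down the same rounded number, the share of answers at or above the median is more than half, because everyone tied at the median counts as "at least" the median. The list `answers` and the variable names are hypothetical and exist only for illustration.

```python
from statistics import median

# Hypothetical answers in percent. These are invented for illustration
# and are NOT the AI Impacts survey responses.
answers = [0, 0, 1, 5, 10, 10, 10, 20, 50]

med = median(answers)

# Share of respondents whose answer is at least the median value.
share_at_least_median = sum(a >= med for a in answers) / len(answers)

# Share of respondents whose answer is strictly above the median value.
share_above_median = sum(a > med for a in answers) / len(answers)

print(f"median answer: {med}%")
print(f"share answering at least the median: {share_at_least_median:.0%}")
print(f"share answering strictly above the median: {share_above_median:.0%}")
```

With ties at the median, the "at least" share exceeds 50% while the "strictly above" share falls well below it, which is why the exact figure has to be read off the data.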

↑ comment by Denreik (denreik) · 2023-04-16T23:38:36.010Z · LW(p) · GW(p)

I got your notes confused with the actual publisher's notes and it made sense when I figured that you took and combined the quotes from their site. I also analyzed the data. "At least 10%" should actually be "10%". The questionnaire was a free form. I think it's not fair to pair "738 responses, some partial, for a 17% response rate" with these concrete questions. 149 gave an estimate to the first question and 162 to the second question about the extinction. 62 people out of 162 assigned the second question 20% or higher probability.

↑ comment by Vladimir_Nesov · 2023-04-16T23:59:19.295Z · LW(p) · GW(p)

"At least 10%" should actually be "10%".

Which "at least 10%" should actually be "10%"? In "48% of respondents gave at least 10% chance of an extremely bad outcome", "at least" is clearly correct.

The questionnaire was a free form. I think it's not fair to pair "738 responses, some partial, for a 17% response rate" with these concrete questions. 149 gave an estimate to the first question and 162 to the second question about the extinction.

Good point. It's under "some partial", but in the noncentral sense of "80% didn't give an answer to this question".
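The proportions discussed in this exchange can be recomputed from the figures quoted above. The short Python check below is only an illustration: the variable names and the `pct` helper are made up here, and 4271 is the approximate number of researchers contacted, as quoted from the survey summary.

```python
# Figures as quoted in the comments above.
contacted = 4271        # approximate number of researchers contacted
responses = 738         # responses received, some partial
answered_q1 = 149       # gave an estimate for the first extinction question
answered_q2 = 162       # gave an estimate for the second extinction question
q2_at_least_20 = 62     # assigned 20% or higher on the second question


def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


overall_response_rate = pct(responses, contacted)
q1_answer_rate = pct(answered_q1, responses)
q2_answer_rate = pct(answered_q2, responses)
q2_high_share = pct(q2_at_least_20, answered_q2)

print(f"overall response rate: {overall_response_rate}%")
print(f"respondents answering question 1: {q1_answer_rate}%")
print(f"respondents answering question 2: {q2_answer_rate}%")
print(f"question 2 answers of 20% or higher: {q2_high_share}%")
```

Roughly a fifth of the 738 respondents answered each of the two questions, which matches the remark that about 80% did not give an answer.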

comment by Richard_Kennaway · 2023-04-16T19:56:36.868Z · LW(p) · GW(p)

It took me a significant amount of time to write and edit this post, partially because I discarded some 250% of what I wrote: I started over at least three times after writing thousands of words and ended up summarizing many paragraphs into just a few sentences

Then why do the last four sentences of your first paragraph (to take just one example) all say the same thing?

↑ comment by Denreik (denreik) · 2023-04-16T20:01:51.453Z · LW(p) · GW(p)

Some aesthetic choices were made.