How could AIs 'see' each other's source code?

post by Kenny · 2023-06-02T22:41:20.107Z · LW · GW · 31 comments

This is a question post.

Contents

Answers 7 Max H 2 PaulK None 31 comments

I'm NOT confused about 'how could AIs send each other a link to their Git repo?'.

I'm confused as to what other info would convince an AI that any particular source code is what another AI is running.

How could any running system prove to another what source code it's running now?

How could a system prove to another what source code it will be running in the future?

Answers

What source code and what machine code is actually being executed on some particular substrate is an empirical fact about the world, so in general, an AI (or a human) might learn it the way we learn any other fact - by making inferences from observations of the world.

For example, maybe you hook up a debugger or a waveform reader to the AI's CPU to get a memory dump, reverse engineer the running code from the memory dump, and then prove some properties you care about [LW · GW] follow inevitably from running the code you reverse engineered.

In general though, this is a pretty hard, unsolved problem - you probably run into a bunch of issues related to embedded agency [LW · GW] pretty quickly.

To get an intuition for why these problems might be possible to solve, consider the tools that humans who want to cooperate or verify each other's decision processes might use in more restricted circumstances.

For example, maybe two governments which distrust each other conduct a hostage exchange by meeting in a neutral country, and bring lots of guns and other hostages they intend not to exchange that day. Each government publicly declares that if the hostage exchange doesn't go well, they'll shoot a bunch of the extra hostages.

Or, suppose two humans are in a True Prisoner's Dilemma with each other, in a world where fMRI technology has advanced to the point where we can read off semantically meaningful inner thoughts from a scan in real-time. Both players agree to undergo such a scan and make the scan results available to their opponent, while making their decision. This might not allow them to prove that their opponent will cooperate iff they cooperate, but it will still probably make it a lot easier for them to robustly cooperate, for valid decision theoretic reasons [LW · GW], rather than for reasons of honor or a spirit of friendliness.

↑ comment by O O (o-o) · 2023-06-02T23:25:02.314Z · LW(p) · GW(p)

What I don’t understand is how an agent can trust that it isn’t being deceived during this information sharing, especially since a defector who successfully deceives the other into cooperating gets a larger reward. This is even harder if interpretability of sufficiently complex models is not computable.

In the human example, there exists a machine that can interpret the human’s thoughts and is presumably far more computationally powerful than the human. Could this exist in the case of a super intelligent agent?

Replies from: Maxc↑ comment by Max H (Maxc) · 2023-06-03T18:10:19.111Z · LW(p) · GW(p)

Could this exist in the case of a super intelligent agent?

Probably, if the agents make themselves amenable to interpretability and inspection? If I, as a human, am going through a security checkpoint (say, at an airport), there are two ways I can get through successfully:

- be clever enough to hide any contraband or otherwise circumvent the security measures in place.

- just don't bring any contraband and go through the checkpoint honestly.

As I get more clever and more adversarial, the security checkpoint might need to be more onerous and more carefully designed, to ensure that the first option remains infeasible to me. But the designers of the checkpoint don't necessarily have to be smarter than me, they just have to make the first option difficult enough, relative to any benefit I would gain from it, such that I am more likely to choose the second option.

Replies from: o-o↑ comment by O O (o-o) · 2023-06-03T21:43:33.423Z · LW(p) · GW(p)

Yes but part of what makes interpretability hard is it may be incomputable. And part of what makes interpretable models difficult to create is that there may be some inner deception you are missing. I think any argument that makes alignment difficult symmetrically makes two agents working together difficult. I mean, think about it: if agent 1 can cooperate with agent 2, agent 1 can also bootstrap a version of agent 2 aligned with itself.

Intuitively, interpreting an AI feels like we are trying to break some cryptographic hash, but much harder. For example, as humans, even interpreting GPT-2 is a monumental task for us despite our relatively vastly superior computational abilities and knowledge. After all, something that’s 2 OOMs superior (GPT-4) could only interpret a fraction of a percent of neurons, and only with some degree of confidence. It’s likely these are relatively trivial ones too.

Therefore, I think the only way for agent 1 to confirm the motives of agent 2 and eliminate unknown unknowns is to fully simulate it, assuming there isn’t some shortcut to interpretability. And of course fully simulating it requires agent 1 to successfully box agent 2, and probably to have a large capability edge over it.

I don’t think the TSA analogy is perfect either. There is no (Defect, Cooperate) case. The risks in it are known unknowns. You have a good understanding of what happens if you fail as you just go to jail, but with adversarial agents, they have absolutely no understanding of what happens to them if they are wrong. Being wrong here means you get eliminated. So (D, C)’s negative expectation for example is unbounded. You also have a reasonable expectation of what TSA will do to verify you aren’t breaking the law. Just their methodology of scanning you eliminates a lot of detection methods from the probability space. You have no idea what another agent will do. Maybe they sent an imperfect clone to communicate with you, maybe there’s a hole in the proposed consensus protocol you haven’t considered. The point is this is one giant unknown unknown that can’t be modeled as you are trying to predict what someone with greater capabilities than you can do.

Lastly, I think this chain of reasoning breaks down if there is some robust consensus protocol or some way to make models fully interpretable. However if such a method exists, we would probably just initially align the AGI to begin with as our narrow AI would help us create interpretable AGI, or a robust system of detecting inner deception.

Replies from: Maxc↑ comment by Max H (Maxc) · 2023-06-04T20:13:15.863Z · LW(p) · GW(p)

I agree the analogy breaks down in the case of very adversarial agents and / or big gaps in intelligence or power. My point is just that these problems probably aren't unsolvable in principle, for humans or AI systems who have something to gain from cooperating or trading, and who are at roughly equal but not necessarily identical levels of intelligence and power. See my response to the sibling comment here [LW(p) · GW(p)] for more.

yes but part of what makes interpretability hard is it may be incomputable.

Aside: I think "incomputable" is a vague term, and results from computational complexity theory often don't have as much relevance to these kinds of problems as people intuitively expect. See the second part of this comment [LW(p) · GW(p)] for more.

Replies from: o-o↑ comment by O O (o-o) · 2023-06-04T22:47:52.104Z · LW(p) · GW(p)

Aside: I think "incomputable" is a vague term, and results from computational complexity theory often don't have as much relevance to these kinds of problems as people intuitively expect. See the second part of this comment [LW(p) · GW(p)] for more.

I mean incomputable in that computation would exceed the physical resources of the universe, whether it is P or NP. We can have a weaker definition of incomputable too, say it would exceed the capabilities of the agent by at least 1 OOM.

Specifically in regards to interpretability, if it isn't possible to do easily, my intuition tells me it will require brute force simulation/exhaustive search. (Think mining bitcoin). In that case you need a system more powerful than whatever you are trying to interpret, making it kind of difficult in the context of two agents who are roughly on a similar capability level.

I think it will be difficult for goal maximizers to cooperate for the same reasons it would be difficult for humans to survive in a world with a superintelligent goal maximizer. In almost all arguments leading to doom for humans and a paperclip-maximizing kind of ASI, you can replace human with agent and the argument will still stand.

It is easy for intelligent humans and groups of humans to cooperate because very rarely do they have fanatical goals, and historically, the ones that did went to war.

↑ comment by Kenny · 2023-06-04T02:18:12.043Z · LW(p) · GW(p)

What source code and what machine code is actually being executed on some particular substrate is an empirical fact about the world, so in general, an AI (or a human) might learn it the way we learn any other fact - by making inferences from observations of the world.

This is a good point.

But I'm trying to develop some detailed intuitions about how this would or could work, in particular what practical difficulties there are and how they could be overcome.

For example, maybe you hook up a debugger or a waveform reader to the AI's CPU to get a memory dump, reverse engineer the running code from the memory dump, and then prove some properties you care about follow inevitably from running the code you reverse engineered.

In general though, this is a pretty hard, unsolved problem - you probably run into a bunch of issues related to embedded agency pretty quickly.

(What do you mean by "waveform reader"?)

Some practical difficulties with your first paragraph:

1. How can AIs credibly claim that any particular CPU is running their code, or that a debugger connected to it isn't being subverted via, e.g. MITM?

2. How can AIs credibly claim that whatever the contents of a 'CPU's' memory are at some point, they won't be replaced at some later point?

3. How could one AI safely execute code given to it by another (e.g. via "memory dump")?

4. How could one AI feasibly run another's code 'fast enough' to be able to determine that it could (probably) trust it now (even assuming [1], [2], and [3] are solved)?

[1] points to what I see as a big difficulty, i.e. AIs will probably (or could) be very distributed computing systems and there might not be any practical way to 'fit into a single box' for, e.g. careful inspection by others.

Replies from: Maxc↑ comment by Max H (Maxc) · 2023-06-04T20:04:28.342Z · LW(p) · GW(p)

(What do you mean by "waveform reader"?)

An oscilloscope. Note that it isn't particularly realistic to hook up a scope to the kind of hardware that current AI systems are typically trained and run on.

But what I was trying to gesture at with this comment [LW(p) · GW(p)], is this is the kind of problem that the AI might be able to help you with. If the AI isn't willing or able to fold itself up into something that can be run entirely on a single, human-inspectable CPU in an airgapped box, running code that is amenable to easily proving things about its behavior, you can just not cooperate with it, or not do whatever else you were planning to do by proving something about it, and just shut it off instead.

(If the AI is already adversarial to the point where it won't let you shut it off, and is running on a distributed system, you've already lost. Willingness to fold itself up and be shut off means that the AI is already pretty aligned; it wouldn't surprise me if this problem is alignment-complete.)

As for your practical difficulties, I agree these are all problems. I am not saying the problem you pose isn't hard, just that there doesn't seem to be anything that makes it fundamentally impossible to solve in principle.

There is lots of academic research on hardware security and secure hardware, verifiable computing (e.g. using zk-SNARKs), formally verified programming, chain-of-trust, etc. that attempts to make progress on small pieces of this problem (not necessarily with a specific focus on AI). Stitching all of these things together into an actually end-to-end secure system for interacting with a smarter-than-human AI system is probably possible, but will require solving many unsolved problems, and designing and building AI systems in different ways than we're currently doing. IMO, it's probably better to just build an AI that provably shares human values from the start.

↑ comment by Kenny · 2023-06-05T15:55:49.912Z · LW(p) · GW(p)

An oscilloscope

I guessed that's what you meant but was curious whether I was right!

If the AI isn't willing or able to fold itself up into something that can be run entirely on a single, human-inspectable CPU in an airgapped box, running code that is amenable to easily proving things about its behavior, you can just not cooperate with it, or not do whatever else you were planning to do by proving something about it, and just shut it off instead.

Any idea how a 'folded-up' AI would imply anything in particular about the 'expanded' AI?

If an AI 'folded itself up' and provably/probably 'deleted' its 'expanded' form (and all instances of that), as well as any other AIs or not-AI-agents under its control, that does seem like it would be nearly "alignment-complete" (especially relative to our current AIs), even if, e.g. the AI expected to be able to escape that 'confinement'.

But that doesn't seem like it would work as a general procedure for AIs cooperating or even negotiating with each other.

↑ comment by red75prime · 2023-06-04T09:54:51.372Z · LW(p) · GW(p)

conduct a hostage exchange by meeting in a neutral country, and bring lots of guns and other hostages they intend not to exchange that day

That is, they alter the payoff matrix instead of trying to achieve CC in the prisoner's dilemma. And that may be more efficient than spending time and energy on proofs, source code verification protocols, and the as-yet-unknown downsides of being an agent that you can robustly CC with, while being the same kind of agent.

We might develop schemes for auditable computation, where one party can come in at any time and check the other party's logs. They should conform to the source code that the second party is supposed to be running; and also to any observable behavior that the second party has displayed. It's probably possible to have logging and behavioral signalling be sufficiently rich that the first party can be convinced that that code is indeed being run (without it being too hard to check -- maybe with some kind of probabilistically checkable proof).
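As a rough illustration of the log-checking idea, here is a minimal sketch. It assumes, purely for illustration, that the agreed program is a deterministic step function and that each log entry is hash-chained to the previous one; the names `step`, `make_log`, and `audit` are hypothetical and not part of any real protocol. It also replays every entry, whereas a probabilistically checkable scheme would aim to avoid that.

```python
import hashlib
import json

def step(state: int, observation: int) -> int:
    """Hypothetical stand-in for the agreed-upon source code (deterministic)."""
    return (state * 31 + observation) % 1_000_003

def entry_hash(prev_hash: str, observation: int, new_state: int) -> str:
    """Chain each entry to the previous one so entries cannot be silently edited."""
    payload = json.dumps([prev_hash, observation, new_state]).encode()
    return hashlib.sha256(payload).hexdigest()

def make_log(initial_state, observations, program=step):
    """What the audited party does: run a program and record every transition."""
    log, state, prev = [], initial_state, "genesis"
    for obs in observations:
        state = program(state, obs)
        prev = entry_hash(prev, obs, state)
        log.append({"obs": obs, "state": state, "hash": prev})
    return log

def audit(initial_state, log, program=step) -> bool:
    """What the auditor does: replay the agreed program and compare to the log."""
    state, prev = initial_state, "genesis"
    for entry in log:
        state = program(state, entry["obs"])
        prev = entry_hash(prev, entry["obs"], state)
        if entry["state"] != state or entry["hash"] != prev:
            return False
    return True
```

An honest log passes `audit`, while a log produced by a different program, or one edited after the fact, fails at the first divergent entry. The sketch says nothing about whether the logged observations themselves are truthful.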

However, this only provides a positive proof that certain code is being run, not a negative proof that no other code is being run at the same time. This part, I think, inherently requires knowing something about the other party's computational resources. But if you can know about those, then it might be possible. For a perhaps dystopian example, if you know your counterparty has compute A, and the program you want them to run takes compute B, then you could demand they do something (difficult but easily checkable) like invert hash functions, that'll soak up around A-B of their compute, so they have nothing left over to do anything secret with.
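A minimal sketch of a "difficult but easily checkable" task, in the style of hashcash proof-of-work. Note that this swaps full hash inversion for a partial-preimage search (finding a nonce whose SHA-256 digest has a given number of leading zero bits), which is the usual practical stand-in. The function names and the idea of tuning `difficulty_bits` to match the A-B gap are assumptions made for illustration.

```python
import hashlib
from itertools import count

def leading_zero_bits(digest: bytes) -> int:
    """Number of zero bits at the start of the digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()

def solve(challenge: bytes, difficulty_bits: int) -> int:
    """Expensive side: brute-force a nonce (about 2**difficulty_bits hashes on average)."""
    for nonce in count():
        digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
        if leading_zero_bits(digest) >= difficulty_bits:
            return nonce

def verify(challenge: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Cheap side: a single hash confirms the work was done."""
    digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= difficulty_bits
```

The asymmetry is the point: `solve` costs exponentially more as `difficulty_bits` grows, while `verify` stays at one hash. What this cannot show is that the solver used the hardware you think it used, which is exactly the kind of loophole raised in the replies.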

↑ comment by O O (o-o) · 2023-06-03T22:04:03.780Z · LW(p) · GW(p)

Can the agent just mute their capabilities when they do this computation? There are very slick ways to speed up computation and likewise slick ways to slow down computation. The agent could, say, mess up cache coherency in its hardware, store data types differently, ignore the outputs of some of its compute, or maybe run faster than the other agent expects by devising a faster algorithm, using hardware level optimizations that use strange physics the other agent hasn’t thought of, etc.

Secondly, how would an agent convince another to run expensive code that takes up their entire compute? If you are some nation in medieval Europe, and another adjacent nation demanded every able bodied person to enter a triathlon to measure their net strength, would any sane leader agree to that?

Replies from: PaulK↑ comment by PaulK · 2023-06-03T23:50:10.025Z · LW(p) · GW(p)

- Yup, all that would certainly make it more complicated. In a regime where this kind of tightly-controlled delegation were really important, we might also demand our counterparties standardize their hardware so they can't play tricks like this.

- I was picturing a more power-asymmetric situation, more like a feudal lord giving his vassals lots of busywork so they don't have time to plot anything.

↑ comment by Capybasilisk · 2023-06-03T17:18:44.975Z · LW(p) · GW(p)

Wouldn’t that also leave them pretty vulnerable?

Replies from: PaulK↑ comment by PaulK · 2023-06-03T23:56:07.201Z · LW(p) · GW(p)

In the soaking-up-extra-compute case? Yeah, for sure, I can only really picture it (a) on a very short-term basis, for example maybe while linking up tightly for important negotiations (but even here, not very likely). Or (b) in a situation with high power asymmetry. For example maybe there's a story where 'lords' delegate work to their 'vassals', but the workload intensity is variable, so the vassals have leftover compute, and the lords demand that they spend it on something like blockchain mining. To compensate for the vulnerability this induces, the lords would also provide protection.

31 comments

Comments sorted by top scores.

comment by Gunnar_Zarncke · 2023-06-04T06:55:31.479Z · LW(p) · GW(p)

I think that solutions that revolve around "giving access" or "auditing" a separate physical system are not likely to work and Yudkowsky seems to suggest more distributed mechanisms such as both agents building a successor agent together that acts on behalf of both. I'm not sure how this could work in detail either but it seems much more doable to build a successor agent in a neutral space that both parties can observe with high fidelity.

Replies from: o-o↑ comment by O O (o-o) · 2023-06-04T22:55:39.148Z · LW(p) · GW(p)

This seems like a more reasonable answer but I still feel uneasy about parts of it.

For one, they will have to agree on the design of the successor, which may be nontrivial or impossible with two adversarial agents.

But more importantly, if a single successor could take the actions to accomplish one of their goals, why can't the agent take those actions themselves? How does anything that hinders one of the agents doing the successor's actions on their own not also hinder the successor?

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2023-06-05T07:46:55.758Z · LW(p) · GW(p)

It is much easier to agree on a technology than on goals. Technology can serve many purposes, you just have to avoid technologies that have too one-sided uses - which is probably the risk you mean. But as long as cooperation overall is favored by the parties, I think it is likely that the agents can agree on technology.

why can't the agent take those actions themselves?

Oh, they can. They just don't trust that the other party will uphold their part of the bargain in their part of the lightcone. That you can only achieve with an agent that embodies the negotiated mix of both agents' goals/values.

Note that humans and human organizations build such agents, so there is precedent. We don't call them that, but I think it is close: States often set up organizations such as the United Nations that get resources and are closely monitored by the founding countries, and that serve the joint purpose of the founding states.

In the small, when two people set up a legal entity, such as a society, and write the memorandum of association, they also create a separate entity that executes to some degree independently.

Replies from: o-o↑ comment by O O (o-o) · 2023-06-05T20:37:08.287Z · LW(p) · GW(p)

They just don't trust that the other party will uphold their part of the bargain in their part of the lightcone.

More succinctly stated, we need two copies of the successor agent right? What prevents the two copies from diverging? And if we don’t need two copies of the successor, I don’t believe there’s a true prisoner’s dilemma.

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2023-06-05T22:24:12.026Z · LW(p) · GW(p)

Yes, to avoid the prisoner's dilemma or to be able to trust the other party, the proposed solution is to have a single agent that both parties trust in the sense that it embodies the negotiated tradeoff.

Replies from: o-o↑ comment by O O (o-o) · 2023-06-05T22:52:00.848Z · LW(p) · GW(p)

To me the issue of getting them to trust the embodiment just seems like an isomorphism of the original problem.

We haven’t fundamentally solved anything other than making it a bit harder to deceive the counterparty. Instead of agreements and actions acting as a proof of compromise we have code and simulations of the code as a proof of compromise.

There is also an implicit agreement of the resulting agent being a successor (and not taking actions after it “succeeds” the predecessor).

It may seem unreasonable to assume agents to be this adversarial but I think it’s reasonable if we are talking about something like a paperclip maximizer and a stapler maximizer.

Replies from: Gunnar_Zarncke, Gunnar_Zarncke, Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2023-06-06T00:12:38.804Z · LW(p) · GW(p)

To me, there seems to be a big difference between

- you let an external agent inspect everything you do (while you inspect them) and where your incentive is to deceive them; where there is an asymmetry between your control of the environment, your lost effort, and your risk of espionage (and vice versa for them)

- both of you observe the construction of a third agent on neutral ground where both parties have equally low control of the environment, low lost effort, and low risk of espionage (except for the coordination technology, which you probably want to reveal).

↑ comment by O O (o-o) · 2023-06-06T01:28:49.877Z · LW(p) · GW(p)

Hm, I think I am unclear on the specifics of this proposal, do you have a link explicitly stating it? When you said successor agent, I assumed a third agent would form describing their merged interests, which would then be aligned, and then the two adversaries would deactivate somehow. If this is wrong or it misses details, can you state or link the exact proposal?

And this is still isomorphic in my opinion because the agent can build a defector (as in defects from cooperating) successor behind the back of the adversary or not actually deactivate. (For the last one I can see it instructing the successor to forcefully deactivate the predecessors, but this seems like they have to solve alignment).

↑ comment by Gunnar_Zarncke · 2023-06-06T08:24:52.885Z · LW(p) · GW(p)

I am not aware of a proposal notably more specific than what I wrote above. It is my interpretation of what Yudkowsky has written here and there about it.

The adversaries don't need to deactivate because the successor will be able to more effectively cooperate with both agents. The originals will simply be outcompeted.

Building a defective successor will not work either because the other agent will not trust it, and the agent building it will not trust it fully either if it isn't fully embodying its interests, so why do it to begin with?

You may be onto something regarding how to identify the identity of an agent, though.

Replies from: o-o, o-o↑ comment by O O (o-o) · 2023-06-06T08:39:13.999Z · LW(p) · GW(p)

Ok, say the successor embodies the (C,C) decision. Why can’t one agent make (D,C) behind the other agent’s back? Likewise, why can’t the agent just choose (D,C)? It could be higher EV to, say, decisively strike these cooperators while they’re putting resources into laying paper clips, and eliminate them.

This is completely off topic but is there a reason why your comments are immediately upvoted?

Replies from: Gunnar_Zarncke, Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2023-06-06T13:55:42.797Z · LW(p) · GW(p)

My comments are not upvoted but my normal comments count as two to begin with because I'm a user with sufficiently high karma. See Strong Votes [Update: Deployed] [LW · GW].

↑ comment by Gunnar_Zarncke · 2023-06-06T14:11:12.158Z · LW(p) · GW(p)

I'm not sure what you mean by the "successor embodies the (C,C) decision". It embodies the negotiated interest combination.

I think the comparison with the United Nations is quite good, actually. Once the UN has been set up, say, the USA can still try to hurt Russia directly, but the UN will try to find a compromise between the two (or more) actors - to the benefit of all.

Replies from: o-o, o-o↑ comment by O O (o-o) · 2023-06-06T16:26:00.279Z · LW(p) · GW(p)

I think the comparison illustrates my point because the UN is typically not seen as enforceable and negotiated interests only exist when cooperating is the best choice. For human entities that don’t have maximization goals, both cooperating is often better than both defecting.

(C,C) means both cooperate. (D,C) means one defects and the other cooperates. In the classic prisoner’s dilemma, (D,C) offers the defector a higher payoff than (C,C), and (C,C) in turn beats (D,D).
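For reference, a minimal sketch of the standard payoff ordering for the row player in a one-shot prisoner's dilemma, usually written T > R > P > S (temptation, reward, punishment, sucker's payoff). The specific numbers 5, 3, 1, 0 are common textbook values chosen for illustration, not anything stated in this thread.

```python
# Row player's payoff, keyed by (row player's move, column player's move).
# Illustrative textbook values satisfying T > R > P > S.
PAYOFF = {
    ("D", "C"): 5,  # T: temptation to defect against a cooperator
    ("C", "C"): 3,  # R: reward for mutual cooperation
    ("D", "D"): 1,  # P: punishment for mutual defection
    ("C", "D"): 0,  # S: sucker's payoff
}

def best_response(opponent_move: str) -> str:
    """Row player's payoff-maximizing move against a fixed opponent move."""
    return max("CD", key=lambda move: PAYOFF[(move, opponent_move)])
```

With this ordering, defecting is the best response to either move, which is what makes the dilemma a dilemma: both players defecting leaves each worse off than mutual cooperation.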

I don’t think there is any way to weasel around a true prisoner’s dilemma with adversaries. It’s a simple situation and arises naturally everywhere.

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2023-06-06T23:33:29.526Z · LW(p) · GW(p)

I agree that the prisoners' dilemma occurs frequently, but I don't think you can use it the way you seem to in the building-a-joint-successor-agent scenario. I guess we are operating with different operationalizations in mind, and until those are spelled out, we will probably not agree.

Maybe we can make some quick progress on that by going with the pay-off matrix but, for each agent's choice, adding a probability that the choice is detected before execution. We also at least need a no-op case because presumably you can refrain from building a successor agent (in reality there would be many in-between options, but to keep it manageable). I think if you multiply things out, the build-agent-in-neutral-place option comes out on top.
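One possible way to multiply this out, as a sketch only. It assumes a single would-be defector, a detection probability `p_detect`, and that a detected defection yields some fallback payoff `caught` (which could be the mutual-defection or the no-op payoff). All names and numbers here are hypothetical and are not a claim about which option actually wins in any realistic setting.

```python
def ev_defect(p_detect: float, temptation: float = 5.0, caught: float = 1.0) -> float:
    """Expected payoff of attempting to defect when detection happens with p_detect."""
    return p_detect * caught + (1.0 - p_detect) * temptation

def prefers_cooperation(p_detect: float, reward: float = 3.0,
                        temptation: float = 5.0, caught: float = 1.0) -> bool:
    """True when the sure cooperation payoff beats the gamble of defecting."""
    return reward > ev_defect(p_detect, temptation, caught)

def detection_threshold(reward: float = 3.0, temptation: float = 5.0,
                        caught: float = 1.0) -> float:
    """Detection probability above which cooperation is preferred."""
    return (temptation - reward) / (temptation - caught)
```

Under these illustrative numbers, cooperation is preferred once detection is more likely than not. The argument for neutral ground would then amount to a claim that it raises `p_detect` for both sides, which the sketch takes as an input rather than establishing.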

↑ comment by O O (o-o) · 2023-06-06T20:00:45.504Z · LW(p) · GW(p)

The (C,C) decision is what you’re describing, a decision to cooperate on negotiated interests. The (D,C) decision is if one party cooperates but the other party does not cooperate.

I don’t think it is. The UN is a constant example of the prisoner’s dilemma given the number of wars it has stopped (exactly 0).

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2023-06-06T20:05:49.593Z · LW(p) · GW(p)

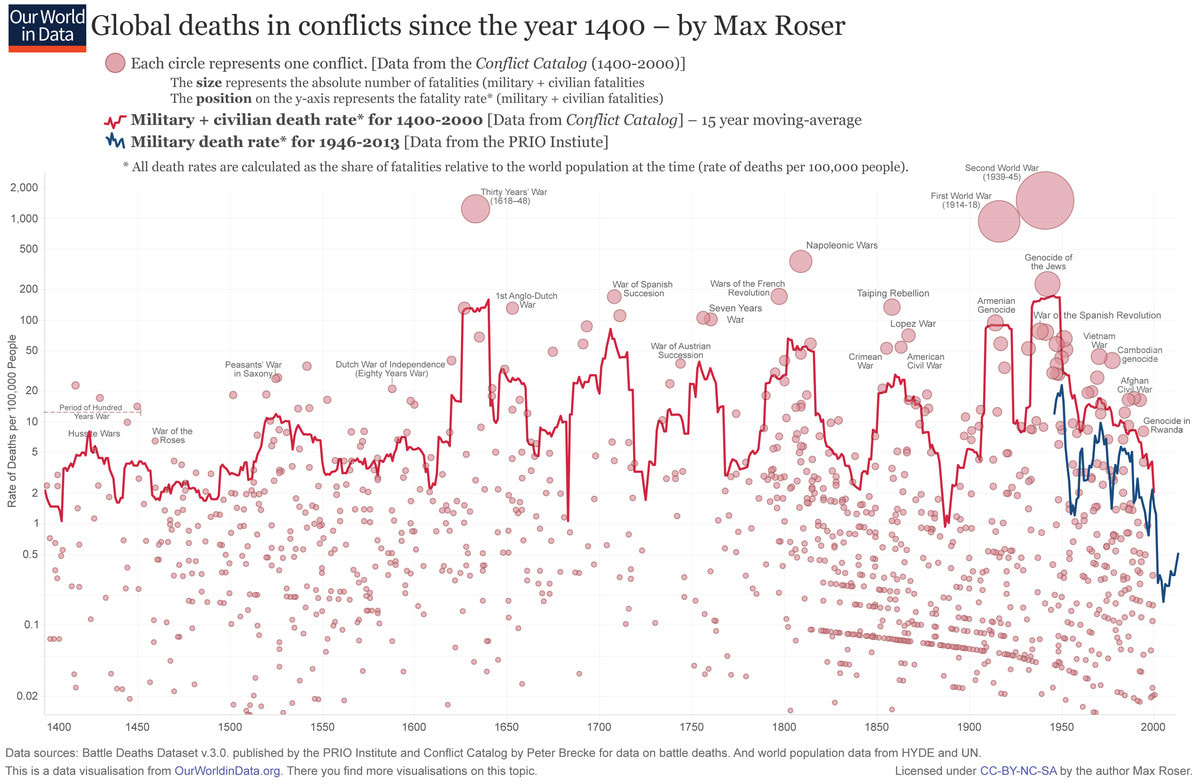

There have been fewer wars since about its foundation in 1945:

https://www.vox.com/2015/6/23/8832311/war-casualties-600-years

Replies from: o-o↑ comment by O O (o-o) · 2023-06-06T22:20:14.979Z · LW(p) · GW(p)

I believe this is largely due to the globalization of the economy, MAD, and proxy conflicts. Globalization makes cooperating extremely beneficial. MAD makes (D,C) states very costly in real wars (before nuclear and long ranged automated weapons, a decisive strike could result in a large advantage, now there is little advantage to a decisive strike, see Pearl Harbor as an example in the past). Most human entities are also not so called fanatical maximizers (tho some were, for example the Nazis who wanted endless conquest and extermination).

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2023-06-06T23:35:11.158Z · LW(p) · GW(p)

Maybe the argument doesn't work for the UN, though that could also be bad luck. But people found organizations together all the time and I would be very surprised if that were not profitable.

Replies from: o-o↑ comment by O O (o-o) · 2023-06-07T00:28:12.127Z · LW(p) · GW(p)

People are not fanatical immortal maximizers that are robustly distributed with near unlimited regenerative properties. If we were I’d expect there to be exactly one person left on earth after an arbitrary amount of time.

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2023-06-07T01:13:04.898Z · LW(p) · GW(p)

That seems like an unrelated argument to me. The agents we are talking about here are also physically limited. Maybe they are more powerful, but they are presumably more powerful in some kind of sphere of influence, and they need to cooperate too. Sure, any analogy has to be proven tight, but I have proposed a model for that in the other comment.

Replies from: o-o↑ comment by O O (o-o) · 2023-06-07T01:18:06.767Z · LW(p) · GW(p)

Isn’t there a base assumption that agents are super intelligent, don’t “decay” (i.e. they have infinite time horizons), are maximizing EV, and would work fine alone?

Replies from: Gunnar_Zarncke↑ comment by Gunnar_Zarncke · 2023-06-07T09:31:54.771Z · LW(p) · GW(p)

No?

And even if they do not decay and have long time horizons, they would still benefit from collaborating with each other. This is about how they do that.

comment by Charlie Steiner · 2023-06-04T15:50:22.112Z · LW(p) · GW(p)

Lacking access to the other's hardware, I think you'd need something that's easy to compute for an honest AI, but hard to compute for a deceptive AI. Because a deceptive AI could always just simulate an honest AI, how do you distinguish simulation?

The only way I can think of is resource constraints. Deception adds a slight overhead to calculating quantities that depend in detail on the state of the AI. If you know what computational capabilities the other AI has very precisely, and you can time your communications with it, then maybe it can compute something for you that you can later verify implies honesty.

Replies from: Kenny

comment by AnthonyC · 2023-06-03T15:42:24.355Z · LW(p) · GW(p)

I don't know if that's possible in full generality, or with absolute confidence, but you can't really know anything with absolute confidence. Here's my layman's understanding/thoughts.

Nevertheless, if one system gives another what it claims is its code+current state, then that system should be able to run the first system in a sandbox on its own hardware, predict the system's behavior in response to any stimulus, and check that against the real world. If both systems share data this way, they should each be able to interact with the sandboxed version of the other, and then come together later, each knowing how the simulated interaction that the other had already went. They can test this, and continue testing it, by monitoring the other's behavior over time.
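A toy sketch of the "predict, then check against the real world" loop. It assumes, unrealistically, that the shared code+state can be treated as a deterministic function from stimulus to response; `Policy` and `mismatch_rate` are hypothetical names introduced for illustration.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical model of a shared code+state snapshot: stimulus in, response out.
Policy = Callable[[str], str]

def mismatch_rate(claimed: Policy, observed: Iterable[Tuple[str, str]]) -> float:
    """Fraction of real-world (stimulus, response) pairs the sandboxed copy gets wrong."""
    pairs = list(observed)
    if not pairs:
        raise ValueError("need at least one observation")
    misses = sum(1 for stimulus, response in pairs if claimed(stimulus) != response)
    return misses / len(pairs)
```

A rate of zero over many varied stimuli is evidence, not proof, that the shared snapshot matches what is actually running; a system that behaves identically until some rare trigger would pass this check indefinitely.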

Replies from: Kenny↑ comment by Kenny · 2023-06-05T20:15:28.026Z · LW(p) · GW(p)

Some of my own intuitions about this:

- Yes, this would be 'probabilistic' and thus this is an issue of evidence that AIs would share with each other.

- Why or how would one system trust another that the state (code+data) shared is honest?

- Sandboxing is (currently) imperfect, tho perhaps sufficiently advanced AIs could actually achieve it? (On the other hand, there are security vulnerabilities that exploit the 'computational substrate', e.g. Spectre, so I would guess that would remain as a potential vulnerability even for AIs that designed and built their own substrates.) This also seems like it would only help if the sandboxed version could be 'sped up' and if the AI running the sandboxed AI can 'convince' the sandboxed AI that it's not sandboxed.

- The 'prototypical' AI I'm imagining seems like it would be too 'big' and too 'diffuse' (e.g. distributed) for it to be able to share (all of) itself with another AI. Another commenter mentioned an AI 'folding itself up' for sharing [LW(p) · GW(p)], but I can't understand concretely how that would help (or how it would work either).