A Simplified Version of Perspective Solution to the Sleeping Beauty Problem

post by dadadarren · 2020-12-31T18:27:14.349Z · 39 comments

This is a link post for https://www.sleepingbeautyproblem.com


This post attempts to give a more intuitive explanation of my perspective-based solution to anthropic paradoxes. By using examples, I want to show how perspectives are axiomatic in reasoning.

For each of us, our perceptions and experience originate from a particular perspective. Yet when reasoning we often remove ourselves as the first-person and present our logic in an objective manner. There are many names for this way of thinking, e.g. “take the third-person view”, “use an outsider’s perspective”, “think as an impartial observer”, “the god’s eye view”, “the view from nowhere”, etc.

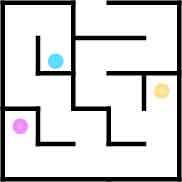

I think the best way to highlight the differences between god’s-eye-view reasoning and first-person reasoning is to use examples. Imagine during Alex’s sleep some mad scientist placed him in a maze. After waking up, he sees the scientist left a map of the maze next to him. Nonetheless, he is still lost. Here we can say, given the map, the maze is known. Alex is lost because he doesn’t know his location.

Figure 1. From a god’s eye view, the maze’s location is known. But Alex’s location is unknown. The colored dots show some possibilities given Alex’s immediate surroundings.

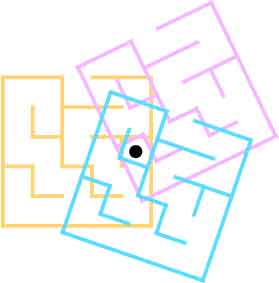

In contrast, an alternative interpretation is to think in Alex’s shoes. I can say of course I know where I am. Right here. I can look down and see the place I am standing on. No location in the world is more clearly presented to me than here. I’m lost because I don’t know how the maze is located relative to me, even with the map.

Figure 2. Here is known. The maze’s location is unknown. The colored parts show some possibilities.

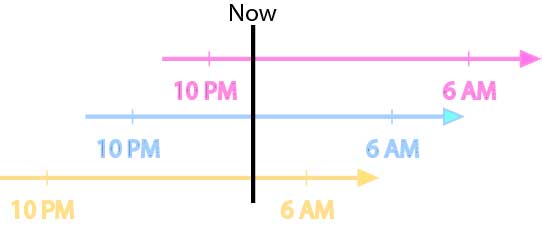

This difference also exists in examples involving time. Imagine a thunderclap wakes Alex up at night. Here we can say he doesn’t know what time it is. Maybe he went to bed at 10 pm and his alarm clock rings at 6 am. There is no information to place his awakening on the time axis.

Figure 3. From a god’s eye view, it is known when Alex went to bed and when the alarm clock is going to ring. But the time when the thunder wakes him up is not known. Colored lines show some possibilities.

Alternatively, we can think from Alex’s perspective as he wakes up. I can say of course I know what time it is. It is now. It is the time most immediate to my perception and experience. No other moment is more clearly felt. What I don’t know is how long ago 10 pm was, when I went to bed, and how far in the future my alarm clock will ring at 6 am, i.e. how other moments are located relative to now.

Figure 4. Now is known. But how other moments are located relative to now is unknown. The colored part shows some possibilities.

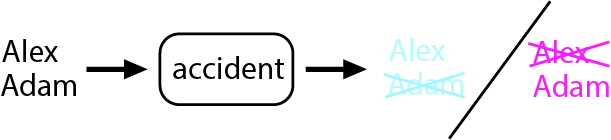

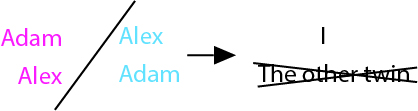

The same difference also exists when identities are involved. Imagine Alex and Adam are identical twins. They got into a fatal accident while traveling in the same vehicle. One of them died while the other suffers complete memory loss. If they look the same and have the same belongings, it can be said there is no way to tell who survived the tragedy.

Figure 5. The past is known. The survivor is unknown. The colored parts show two possible outcomes.

There are other ways to describe the situation. For example, if we take the perspective of the survivor, it is obvious who survived the accident. I did. This self-identification is based on immediacy to the only subjective experience available: all senses felt are due to this body. No other persons or things are more closely perceived. Whether I was Alex or Adam, however, is unknown. In a sense, I don’t know how the world was located relative to me.

Figure 6. Obviously, I survived the accident while the other twin didn’t. However, the past is unknown. Whether I was Adam or Alex is lost. Colored parts show the two possibilities.

These examples show how different perspectives analyze problems differently. Without getting into all the details I want to stress the following points:

- First-person thinking is self-centered. Special consideration is given to here, now, and I. The perspective center is regarded as primitively understood due to its closeness to perception and subjective experience. It is the reasoning starting point. Other locations, moments, and identities are defined by their relative relations to the perspective center.

- First-person and god’s-eye-view are two distinct ways of reasoning. They should not be used together. For example, in the maze problem, we cannot say both the maze’s and Alex’s locations are known. While the former is true from a god’s-eye-view and the latter is true from Alex’s first-person perspective, mixing the two would make the whole “lost in the maze” situation unexplainable.

Anthropic problems are unique because they are formulated from specific first-person perspectives (or a set of perspectives). There is no straightforward god’s-eye-view alternative.

Take the sleeping beauty problem as an example. It asks for Beauty’s probability that the coin landed Heads when she wakes up in the experiment. However, there may be two awakenings. From a god’s eye view, the problem is not fully specified. Which awakening is being referred to? Why update the probability based on that particular awakening? But things are clear from Beauty’s first-person perspective. I should obviously update my belief based on my experience: based on this awakening right now, the one I have experience of. From Beauty’s point of view, today is primitively understood; no clarification is needed to differentiate it from the other day. And we intuitively recognize the problem is asking about a specific awakening/day.

Paradoxes ensue when we try to answer the problem from a god’s eye view while keeping that intuition. As pointed out above, the awakening is not specified from a god’s eye view. We mistakenly try to fill in the blanks with additional assumptions. For example, regard this awakening as randomly selected from all awakenings (Self-Sampling Assumption), or regard today as randomly selected from all days (Self-Indication Assumption). By redefining the first-person center from the god’s eye view, these assumptions provide the seemingly missing part of the question: which specific awakening is being referred to, and why update the probability based on that particular awakening.

Different anthropic assumptions give different answers, which also leads to different paradoxes. However, these assumptions are redundant because the question should have been answered the same way it is asked: from Beauty’s first-person perspective. There is no reference class for I, here, and now. They are primitively identified right from the start, not selected from some similar group. Even the superficially innocent notion of “I am a typical observer” or “now is a typical moment” is false. From a first-person perspective, I, now, and here are inherently special as shown by the self-centeredness.

The correct answer is simple. From Beauty’s viewpoint, there is no new information as I wake up in the experiment, because finding myself awake here and now is logically true from the first-person perspective. The probability of Heads stays at 1/2. From a god’s eye view, all that can be said here is there’s (at least) one awakening in the experiment.
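To make the two ways of counting concrete, here is a minimal simulation sketch. It assumes the standard setup (one awakening on Heads, two on Tails), which the post does not spell out, and the function name is made up for illustration. It does not settle the dispute: it only shows that the per-experiment frequency of Heads is about 1/2 while the per-awakening frequency is about 1/3. Which of the two counts answers Beauty's question is exactly what is being argued here.

```python
import random

def simulate_sleeping_beauty(trials, seed=0):
    """Return (Heads share of experiments, Heads share of awakenings)."""
    rng = random.Random(seed)
    heads_experiments = 0
    heads_awakenings = 0
    total_awakenings = 0
    for _ in range(trials):
        heads = rng.random() < 0.5
        # Assumed protocol: Heads -> Monday only; Tails -> Monday and Tuesday.
        awakenings = 1 if heads else 2
        total_awakenings += awakenings
        if heads:
            heads_experiments += 1
            heads_awakenings += awakenings
    return heads_experiments / trials, heads_awakenings / total_awakenings

per_experiment, per_awakening = simulate_sleeping_beauty(100_000)
print(f"Heads per experiment: {per_experiment:.3f}")
print(f"Heads per awakening:  {per_awakening:.3f}")
```

The per-awakening count pools awakenings from a god's-eye view; following one participant through repeated experiments corresponds to the per-experiment count.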

Some argue there is new information: that being awake on a specific day is evidence suggesting there are more awakenings. Halfers typically disagree by saying it is unknown which day it is. Thirders counter that by saying Beauty knows it is today. Halfers often argue today is not an “objective” identification. Thirders respond by saying all the details Beauty sees after waking up can be used to identify the particular day. For example, the experimenter can randomly choose one day to paint the room red and paint the room blue on the other day. If Beauty sees red after waking up, then we know Beauty is awake on the Red day, which was unknown before. Some argue this new information favors more awakenings.

To that, I would ask: out of the two days, why update the probability based on what happens on the Red day? Why not focus on what happens on the Blue day and update the probability instead? Thirders may find this question painfully trivial: because what happens on the Blue day is not available. Beauty is experiencing the Red day. That’s reasonable. However, that means it doesn’t matter if we use the word today or use details observed after waking up: that particular day is still specified from Beauty’s first-person perspective. Yet from a first-person perspective finding myself awake here and now is expected. The argument for new information switches to a god’s eye view, as if an outsider randomly selects the Red day and then finds Beauty awake. This argument depends on first-person reasoning to specify the day, and on god’s-eye-view reasoning to calculate the prior probability of finding Beauty awake on said day. It mixes parts from two perspectives. (For a more detailed analysis see my argument against Full Non-Indexical Conditioning and perspective disagreements.)

As Beauty wakes up in the experiment, some may ask “what is the probability that today is Monday?”. The answer is interesting: there is no such probability. Remember as the first-person, the perspective center is the reasoning starting point. This includes I, here, now, and by extension today. Like axioms, they cannot be explained by logic or underlying mechanics. They are identified by intuition, by their apparent closeness to subjective experience. So there is no logical way to formulate a probability about what the perspective center is.

One has to mix first-person and god’s-eye-view to validate this probability. For example, we can do so by accepting any one of the anthropic assumptions. They redefine today/this awakening as a randomly selected sample from some proposed reference class. Doing so means we can reason from a god’s-eye-view and justify applying the principle of indifference to all days/awakenings. However, as discussed before, this mix is false. Now is a first-person concept that is inherently unique from all other moments. A principle of indifference categorically contradicts the self-centeredness of the first-person view. (For a more detailed discussion against self-locating probability, and why they are invalid from the frequentist or decision-making approach, see my argument here.)

39 comments

Comments sorted by top scores.

comment by Lotaria · 2021-03-10T11:43:49.040Z

I find this idea very interesting, especially since it seems to me that it gives different probabilities than most other versions of halfing. I wonder if you agree with me about how it would answer this scenario (due to Conitzer):

Two coins are tossed on Sunday. The possibilities are

HH: wake wake

HT: wake sleep

TH: sleep wake

TT: sleep sleep

When you wake up (which is not guaranteed now), what probability should you give that the coins come up differently?

According to most versions of halfing, it would be 2/3. You could say that when you wake up you learn that you wake up at least once, eliminating TT. Alternatively, you could say that when you wake up the day is selected randomly from all the days you wake up in reality. Either way you get 2/3.
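A small enumeration sketch of the two readings discussed in this comment. It assumes two fair, independent coins, with coin 1 deciding day 1 and coin 2 deciding day 2; the helper name `prob_different` is hypothetical.

```python
from fractions import Fraction
from itertools import product

# Assumption: two fair, independent coins; coin i decides day i (H = wake, T = sleep).
prior = {"".join(o): Fraction(1, 4) for o in product("HT", repeat=2)}

def prob_different(weights):
    """Probability that the two coins differ under the given (unnormalised) weights."""
    total = sum(weights.values())
    return sum(w for o, w in weights.items() if o[0] != o[1]) / total

# Reading 1: waking only tells me "I wake at least once", which removes TT.
at_least_one_wake = {o: p for o, p in prior.items() if "H" in o}
print(prob_different(at_least_one_wake))   # 2/3

# Reading 2: "today" is primitive, so waking only tells me today's coin is H.
# The other day's coin is independent and stays 50/50.
other_coin = {"H": Fraction(1, 2), "T": Fraction(1, 2)}
print(other_coin["T"])                     # 1/2: the coins differ iff the other coin is T
```

The arithmetic is not in dispute; the disagreement is over which reading describes what waking up tells you.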

However, what if we say that "today" is not selected at all from our perspective? If "today" wasn't selected at all, it can't possibly tell us anything about the other day. So it would be 1/2 probability that the coins are different.

The weird thing about this is that if we change the situation into:

HH: wake wake

HT: wake sleep

TH: sleep wake

TT: wake wake

Now it seems like we are back to the original sleeping beauty problem, where again we would say 1/2 for the probability that the coins are different. How can the probability not change despite TT: sleep sleep turning into TT: wake wake?

And yet, from my own perspective, I could still say that "today" was not selected. So it still gives me no information about whether the other coin is different, and the probability has to stay at 1/2.

comment by dadadarren · 2021-04-25T20:04:35.530Z

For the first question, perspective-based reasoning would still give the probability of 2/3 simply because there is no guaranteed awakening in the experiment. So finding myself awake during the experiment is new information even from the first-person perspective, eliminating the possibility of TT.

For the second question, the probability remains at 1/2, since there is no new information.

For either question "the probability of today being the first day" is not meaningful and has no answer.

comment by Lotaria · 2021-04-27T06:06:02.521Z

There is new information in the first scenario, but how does it allow you to update the probability that the coins are different without thinking of today as randomly selected?

Imagine you are woken up every day, but the color of the room may be different. You are asked the probability that the coins are different.

HH: blue blue

HT: blue red

TH: red blue

TT: red red

Now you wake up and see "blue." That is new information. You now know that there is at least one "blue", and you can eliminate TT.

However, I think everyone would agree that the probability is still 1/2. It was 1/2 to begin with, and seeing "blue" as opposed to "red", while it is new information, is not relevant to deciding the coins are different.
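The gap between "new information" and "relevant information" can be checked by enumeration. This is only a sketch: the second weighting counts every blue day in an outcome equally, which is itself an assumption about how "today" relates to the days in that outcome.

```python
from fractions import Fraction

# Room colour on (day 1, day 2) for each equally likely pair of tosses.
rooms = {"HH": ("blue", "blue"), "HT": ("blue", "red"),
         "TH": ("red", "blue"), "TT": ("red", "red")}

def prob_different(weights):
    """Probability that the two coins differ under the given (unnormalised) weights."""
    total = sum(weights.values())
    return sum(w for o, w in weights.items() if o[0] != o[1]) / total

# Merely eliminating TT ("there is at least one blue day") would give 2/3.
eliminate_only = {o: Fraction(1, 4) for o, days in rooms.items() if "blue" in days}
print(prob_different(eliminate_only))   # 2/3

# Weighting each outcome by its number of blue days gives 1/2.
blue_day_count = {o: Fraction(days.count("blue"), 4) for o, days in rooms.items()}
print(prob_different(blue_day_count))   # 1/2
```

So the 1/2 answer needs more than the elimination of TT: something has to say how seeing blue today bears on each of HH, HT and TH.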

Back to scenario 1:

HH: wake wake

HT: wake sleep

TH: sleep wake

TT: sleep sleep

Now you wake up. That is new information, and you can eliminate TT. But the question is, how is that relevant to the coins being different? If you are treating "today" as randomly selected from all days that exist in reality, then that would allow you to update. But if you are not treating "today" as randomly selected at all, then by what mechanism can you update?

Just going by intuition, I personally don't think you should update. In this scenario the coin doesn't need to be tossed until the morning. Heads they wake you up, tails they don't. So when you wake up, you do get new information just like in the blue/red example. But since the coins are independent of each other, how can learning about that morning's coin tell you something about the other coin you don't see? Unless you are using a random selection process in which "today" is not primitive.

comment by dadadarren · 2021-06-01T19:36:46.868Z

Sorry for the late reply, didn't check lesswrong for a month. Hope you are still around.

After your red/blue example I realized I was answering too rashly and made a mistake. Somehow I was under the impression the experiment just has multiple awakenings without memory wipes. That was silly of me, because then it won't even be an anthropic problem.

Yes, you are right. With memory wipes there should be no update. The probability of different toss results should remain at 1/2.

comment by Simon · 2021-07-15T00:00:36.317Z

Because you’re a double halfer, I see a contradiction in your conclusion about Lotaria’s colour room example. You’ve previously made a distinction between self-locating events, which are guaranteed to happen, and random outcomes that have genuine probability. Your position has been that rules of conditionalisation apply only to random events, not to self location.

In the colour room example, the coin flips are random events. The subsequently experienced colour ‘blue’ is not a random event; it is a confirmation of one of the fixed self-locations within each possible outcome.

By double halfer reasoning, when she sees a blue room, no conditionalisation will be applied apart from the elimination of TT. Since self-locations can’t be conditioned on in the same way, she would say that HH, HT and TH have an equal credence of 1/3, even though red room awakenings in two of the outcomes have been eliminated. If the coins landed HH, she doesn’t know whether she’s in the first or second awakening. If the coins landed HT, she knows she’s in the first. If the coins landed TH, she knows she’s in the second. But for a double halfer, this information about her location or lack of it can’t be conditioned on to increase or decrease the probability of how the coins landed.

So because you’re a double halfer who treats self-location differently, you would have to say the probability is 2/3 that the coin tosses were different in the coloured room example, just as you do in the wake/sleep version. Do you see the consistency of that?

Incidentally I don't share your view about self-location. I would argue that in the coloured room example, information about her self-location should be conditioned no differently than random events. If so, the answer is indeed 1/2 that the coin tosses are different. However, in the first scenario where she’s awake or asleep, I would agree it’s 2/3 the coin tosses are different. This makes me a pure halfer rather than a double halfer for the original SBP. I've touched on some of the inconsistencies I see with double-halfing.

comment by dadadarren · 2021-07-25T20:47:56.654Z

The double-halfer logic you just described: not conditionalizing on self-locating information unless it rejects a possible-world (like seeing blue rejects TT in Lotaria's example), is called the "halfer rule" by Rachael Briggs. It has obvious shortcomings very well countered by Michael Titelbaum in "An Embarrassment for Double Halfers" and by Vincent Conitzer in "A Devastating Example for the Halfer Rule".

My position is different from any (double) halfer argument that I know of. I suggest perspectives cannot be reasoned or explained, they are defined by the subjective. So if we want to use "today" as a specific day in the logic, then we have to imagine being the subject waking up in the experiment. Here "today" is a primitively defined moment. Because it is primitive, there is no way to assign any probability to "today is the first day" or "today is the second day". I'm arguing self-locating probabilities like these simply cannot exist. This differs from other double-halfer camps, which think self-locating probability exists yet try to come up with special updating rules for self-locating information.

So there are a few points not consistent with my position. You said experiencing "blue" is not a random event, but I think it is. Imagine waking up during the experiment as the first-person: before checking the color, I understand the time is "today", a moment primitively defined. I do not know the color for today because it depends on today's coin toss: a random event. After seeing Blue I know today's toss is H, but know nothing about the toss of "the other day". So the probability of both coins having the same result remains at 1/2. If you are interested in my precise position of self-locating probabilities check out my page here.

In this analysis whether "today" is the first or the second day was not part of the consideration. However if you really wish to dig into it then here is the analysis: If today is the first then the two possibilities are HT and HH, if today is the second then the two possibilities are HH and TH. In each case, the two are equally probable. But once again, there is no probability for "today is the first day" or "today is the second day". It is a primitive reasoning starting point that cannot be analyzed.

comment by Simon · 2022-03-02T03:33:50.700Z

Hi. It's been a while. I still find the implications of your reasoning fascinating. I am seeking to explore whether I agree with it. I recognise there are several double halfer positions. I’ll attempt to work with your version.

For all double halfers, in the original Sleeping Beauty Problem, the probability of Heads is 1/2 before she’s told what day it is and 1/2 after she learns it’s Monday. Whereas for a pure halfer and thirder, the elimination of Tuesday within Tails is subject to Bayesian updating and treated just like the elimination of a random event that had probability. Thus a thirder starts with 1/3 and updates to 1/2 for Heads, while a halfer starts with 1/2 and updates to 2/3.

Your particular reasoning, as I understand it, is that eliminating Tuesday within Tails only ruled out a self-location inside an outcome without removing any evidence for that outcome. A Tuesday awakening was not a random event and had no probability. It was a pre-existing self-location inside the Tails world. Likewise, Monday was a self-location in both worlds and can't be assigned a probability. Tuesday’s elimination therefore did not reduce the probability of Tails having occurred.
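The thirder and pure-halfer updates quoted here are ordinary applications of Bayes' rule. A minimal sketch follows, assuming (as both of those camps do) that P(Monday | Heads) = 1 and P(Monday | Tails) = 1/2; the function name is illustrative only.

```python
from fractions import Fraction

def posterior_heads(prior_heads, p_monday_given_heads, p_monday_given_tails):
    """Bayes' rule for P(Heads | Monday)."""
    prior_tails = 1 - prior_heads
    joint_heads = prior_heads * p_monday_given_heads
    joint_tails = prior_tails * p_monday_given_tails
    return joint_heads / (joint_heads + joint_tails)

half = Fraction(1, 2)
# Assumed likelihoods: P(Monday | Heads) = 1, P(Monday | Tails) = 1/2.
thirder = posterior_heads(Fraction(1, 3), 1, half)
halfer = posterior_heads(Fraction(1, 2), 1, half)
print(thirder)  # 1/2
print(halfer)   # 2/3
```

The double-halfer answer (1/2 before and after) is not produced by this formula with those inputs, which is why it needs either a special updating rule or a rejection of P(Monday | Tails) altogether.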

Before she was told the day, Heads and Tails were equally likely. The only difference was that if the coin landed Heads her self-location was known, while if the coin landed Tails her self-location was unknown. In either case, only one self-location was relevant to her and this had no probability value. Regardless of the coin, she knew she was going to self-locate somewhere and her last memory would be Sunday. This was equally likely to happen with Heads and Tails. Being told it was Monday only confirmed and removed ignorance of when she currently existed within the coin outcome. It gave her no evidence about that outcome. If this is not your reasoning, you can correct me.

Now let’s do a familiar tweak. Suppose that, regardless of the coin, she is woken on both days with amnesia between. On Tuesday, if the coin landed Tails, her room is painted red. Otherwise her room is painted blue. What is her credence for the coin before she sees the room colour and after she sees that it’s blue? For pure halfers (and also thirders in this case), it’s straightforward. The probability of Heads is 1/2 before she sees the room colour, and 2/3 after she sees it’s blue.

How would you apply your self-location reasoning? From what I can tell it must logically be this. Seeing that the room is blue eliminates that her self-location is Tuesday within Tails. However, as with the original version, Tuesday within Tails was not a random event and had no probability. Its elimination did not rule out, or reduce the probability of, Tails. Nor has she learnt anything new about the probability of Heads. Therefore the coin outcome is 1/2 both before and after she knows the room colour is blue. The only difference afterwards is that, if the coin landed Heads, her self-location remains unknown, while if the coin landed Tails she knows it's Monday. Again, you can correct me if this is not your reasoning.

Finally let’s do a version that I think may confound you. As before, regardless of the coin, she is woken on both Monday and Tuesday with amnesia between. This time, regardless of the coin outcome, on one of the days the room will be painted blue while on the other it will be painted red. If the coin lands Heads, it will be randomly decided which day the room is painted blue and on which it is painted red. If the coin lands Tails, the room will be painted blue on Monday and red on Tuesday.

The above version exposes the self-location issue. We can agree there is only one coin flip (not two as we explored in our previous exchange). Everyone can also agree that before she sees the room colour, there is complete parity. Heads and Tails are equally likely.

Now suppose she observes that the colour of the room is blue. Seeing this colour would appear to give you different information if the coin landed Heads than if it landed Tails. If the coin landed Heads, the room being painted blue is identified as an independent random event from the coin flip that had equal probability; either blue or red could have been selected for this day. Therefore the event sequence of the coin landing Heads and the room being painted red must be eliminated for Bayesian updating. However if the coin landed Tails, the room being painted blue was linked to her self-location. Monday was not a random event and has no probability. Assuming the coin landed Tails, today is definitely Monday and blue was the only colour that could have been selected for this self-location. Tuesday within Tails is of course ruled out as being the alternative self-location inside that outcome but this can’t be used for Bayesian updating and it doesn’t reduce the probability of Tails.

Therefore, upon seeing blue, it is now 1/3 the coin landed Heads and 2/3 it landed Tails. The same would be true if she’d seen the colour red. In this version, using your self-location reasoning, whichever colour she sees, Beauty must become a thirder. Therefore she should logically be a thirder before she even sees the colour.
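The arithmetic behind the 1/3 figure, next to the pure-halfer calculation, can be sketched as follows. The dictionary names are hypothetical labels for the two updating rules as described in this comment, not anyone's official formalism.

```python
from fractions import Fraction

quarter, half = Fraction(1, 4), Fraction(1, 2)

# Pure halfer: all four (coin, colour seen today) cells start at 1/4,
# and seeing blue removes both red cells.
pure = {("H", "blue"): quarter, ("T", "blue"): quarter}

# The rule attributed to the double halfer in this comment: Heads/red is
# removed as a random event, but removing Tails/red (a self-location)
# leaves Tails at its full 1/2.
compartment = {("H", "blue"): quarter, ("T", "blue"): half}

def p_heads(weights):
    """Normalise the surviving weights and return the share belonging to Heads."""
    return weights[("H", "blue")] / sum(weights.values())

print(p_heads(pure))         # 1/2
print(p_heads(compartment))  # 1/3
```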

I think we can both agree that this is wrong. If I’m missing something that supports your particular double halfer position and reasoning about self-location, I'd be curious to know it.

comment by dadadarren · 2022-03-02T17:44:33.314Z

Hi Simon. The double-halving argument you are using is different from my approach. It is actually similar to "compartmentalized conditionalization" by Meacham. That argument basically says, for whatever reason, eliminating the case of Tails and Tuesday should not change the probability of Tails as a whole, it only makes Tails and Monday the entire possibility for Tails. i.e. the conditionalization only applies within the "compartment" of Tails. In this case, you used "no self-locating probability" as that reason.

If I'm not mistaken this is the earliest form of the double-halving argument to have appeared. And it has some problems. Your example is a good counter.

My position is that the first-person perspective, such as which person I am, which moment is now, etc, are basic axiomatic facts. Each of us inherently knows which one I am: all experience is due to this physical agent. It is so basic that there is no cause as to why "I am this person", nor is there any explanation to it. It just is. So there is no valid way to think about self-locating probability.

No self-locating probability does not mean the probability of Tails remains unchanged at 1/2 after updating (as compartmentalized conditionalization suggests). It means there is no valid way to update using the self-locating information "it is Monday" at all, because Beauty cannot comprehend "the probability that today is Monday". All that can be said is either it is Monday or not and she doesn't know. Yet Bayesian update would have to use probabilities like P(Monday), P(Monday and Heads), P(Monday and Tails), P(Monday|Heads), and P(Monday|Tails) to work.

So for your latter example: after seeing Red/Blue, I will not say Heads' probability is halved while Tails' remains the same. I will say there is no way to update.

What I find useful while thinking about the paradoxes is to use a repeatable anthropic experiment then use a frequentist approach.

Imagine during tonight's sleep, an advanced alien would split you into 2 halves right through the middle. He will then complete each part by accurately cloning the missing half onto it. By the end, there will be two copies of you with memories preserved, indiscernible to human cognition. After waking up from this experiment, and not knowing which physical copy you are, how should you reason about the probability that "my left side is the same old part from yesterday?"

This question is formulated using the first-person perspective: it questions my body. And it can be repeated. After waking up on the second day you (the first person) can participate in the same process again. When waking up on the third day, you have gone through the split a second time. You can ask the same question "Is my left side the same as yesterday (as in the second day overall)?" Notice the subject in question is always identified by the post-fission first-person perspective. Imagine doing this many times: there is no reason for the relative frequency of "my left side being the same old part from yesterday" to approach any particular value, because there is nothing to determine which copy "I" am in each experiment. I just am. But if an independent coin is tossed every night, then as the iterations go on, I will see about half Heads and half Tails.

We can make a coloring scheme like your example. If the coin is Heads, then two copies are randomly put into a blue and a red room. If the coin is Tails then the left copy would be in the blue room and the right copy in the red. The relative frequency of Red vs Blue in all Tails cases would not approach any particular value. In Heads cases it will of course approach 1/2. Therefore there is no particular overall long-run frequency. So before opening my eyes there is no "probability that I will see Red". And after seeing red there is no way to update the probability of the coin toss.
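One way to see what is being claimed: to simulate the colour frequency at all, a program has to be handed an extra number that the setup never supplies. In the sketch below that number is `q_right_copy`, a made-up parameter for the chance that "I" am the right-hand copy in a Tails iteration. The coin frequency comes out near 1/2 whatever it is, while the Red frequency simply tracks whatever value is plugged in.

```python
import random

def red_frequency(iterations, q_right_copy, seed=0):
    """Return (share of Heads, share of iterations in which "I" wake in the red room).

    q_right_copy is a hypothetical input: the chance that "I" am the
    right-hand copy in a Tails iteration. Nothing in the setup fixes it.
    """
    rng = random.Random(seed)
    heads_count = red_count = 0
    for _ in range(iterations):
        heads = rng.random() < 0.5
        if heads:
            heads_count += 1
            red = rng.random() < 0.5           # copies are assigned rooms at random
        else:
            red = rng.random() < q_right_copy  # left copy -> blue, right copy -> red
        red_count += red
    return heads_count / iterations, red_count / iterations

for q in (0.0, 0.5, 1.0):
    heads_freq, red_freq = red_frequency(100_000, q)
    print(f"q={q}: Heads {heads_freq:.3f}, Red {red_freq:.3f}")
```

Someone who accepts self-locating probabilities would set `q_right_copy` to 0.5 by indifference; the position in this comment is that no value is justified.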

comment by Simon · 2022-03-03T19:51:50.862Z

Interesting, dadadarren.

I sense that you’re close to being converted to become a ‘pure halfer’, willing to assign probability to self-locations. Let me address what you said.

“Your latter example: after seeing Red/Blue, I will not say Heads' probability is halved while Tails' remains the same. I will say there is no way to update.”

I assume you mean that the probability of 1/2 for Heads or Tails – before and after she sees either colour – remains correct. We can agree on that. What matters is why it is true. You’re arguing that no updating is possible after she sees a colour. I invite you to examine this again.

The moment she sees – for example – blue, we can agree that Tails/Red and Heads/Red are definitely ruled out. The difference is that Tails/Red reflects a self-location whereas Heads/Red does not. You’re claiming that because Tails/Red reflects a self-location - i.e. the room colour isn’t a random event - no updating is allowed. But you can’t make the same claim about Heads/Red. With Heads, the room being painted red is a random event. For Beauty, the Heads/Red outcome had an unequivocal 1/4 chance of being encountered and it has just been eliminated by her seeing the colour blue. So how can Heads not be halved?

Suppose Beauty had her eyes closed with the same set up. Suppose, before she opens her eyes, she must be told straight away whether she’s in a Heads/Red awakening. It’s confirmed that she’s not. You’d agree that the probability of Heads is now halved while for Tails it isn’t. No problem updating. It’s 1/3 Heads, 2/3 Tails. Next, suppose she must be told whether she’s in a Tails/Tuesday awakening. It’s confirmed that she’s not. By your reasoning, this would not reduce the probability of Tails. Moreover, Heads has already been halved and there is no reason to change it back. Therefore, if self-locations are un-updatable, her probability must still be 1/3 for Heads and 2/3 for Tails. What’s more, the information she just received is the same as she would have got by opening her eyes and seeing blue.

The only logical reason the coin outcome is still 1/2, after she sees either colour, is that Heads and Tails must both get halved. This means ignorance or information about self-location status, such as Monday/Tuesday or Original/Clone, is subject to the normal rules of probability and conditionalisation.

comment by dadadarren · 2022-03-04T18:36:16.836Z

Hi Simon, before anything let me say I like this discussion with you, using concrete examples. I find it helps to pinpoint the contention, making thinking easier.

I think our difference is due to this here: "I assume you mean that the probability of 1/2 for Heads or Tails – before and after she sees either colour – remains correct." Yes and No, but most importantly not in the way you have in mind.

Just to quickly reiterate: I argue the first-person perspective is a primitive axiomatic fact. No way to explain or reason. It just is. Therefore no probability. Everything follows from here.

It means everything using self-locating probability is invalid. And that includes things like P(Heads|Red). So there is no "probability of Heads given I see a red room". Red cannot be conditioned on because it involves the probability "Now is Tuesday" vs "Now is Monday".

Let me follow your steps. If it is Heads then seeing the color is a random event, there is no problem at all, halving the chance is OK. In the case of Tails, traditional updating would eliminate half the chance too because it gives equal probability to Now is Monday vs Now is Tuesday. But because self-locating probability does not exist, there is no basis to split it evenly, or in any other way for that matter. There is no valid way to split it at all, and that includes a 0-100 split.

So what I meant by "there is no way to update" is not saying the correct value of Tails remains unchanged at 1/2. I meant there is no correct value period. And you can't renormalize it with the Heads' chance of 1/4 to get anything.

This is why I suggested using the repeatable example with long-run frequencies. It makes the problem clearer. If you follow the first-person perspective of a subject then the long-run frequency of Heads is 1/2. However, the long-run frequency for Red or Blue would not converge to any particular value. And if you are always told whether it is Heads and Red, and count only the iterations where you are told it is not, then the relative frequency of Heads would indeed approach 1/3, as you suggested. Yet if you are always told whether it is Tails and Red, and count only the iterations where you are told it is not, then there is still no particular long-run frequency for Tails. Overall there is no long-run frequency for Tails or Heads when you only count iterations of a particular color.

Back to your example, what is the probability of Heads after waking up? It is 1/2. That is the best you can give. But it is not the probability of Heads given Red. Using the color of the room in probability analysis won't yield any valid result. Because as the experiment is set up, the color of the room involves self-locating probability: it involves explaining why the first-person perspective is this particular observer/moment.

Replies from: Simon↑ comment by Simon · 2022-03-05T03:41:38.153Z · LW(p) · GW(p)

Ok let’s see if we can pin this down! Either Beauty learns something relevant to the probability of the coin flip, or she doesn't. We can agree on this, even if you think updating can't happen with self-location.

Let’s go back to a straightforward version of the original problem. It's similar to one you came up with. If Heads there is one awakening, if Tails there are two. If Heads, it will be decided randomly whether she wakes on Monday or Tuesday. If Tails, she will be woken on both days with amnesia in between. She is told in advance that, regardless of the coin flip, Bob and Peter will be in the room. Bob will be awake on Monday, while Peter is asleep. Peter will be awake on Tuesday, while Bob is asleep. Whichever of the men wakes up, it will be two minutes before Beauty (if she’s woken). All this is known in advance. Neither man knows the coin result, nor will they undergo amnesia. Beauty has not met either of them, so although she knows the protocol, she won’t know the name of who's awake with her or the day unless he reveals it.

Bob and Peter’s perspective when they wake up is not controversial. Each is guaranteed to find the other guy asleep. In the first two minutes, each will find Beauty asleep. During that time, both men’s probability is 1/2 for Heads and Tails. If Beauty is still asleep after two minutes, it’s definitely Heads. If she wakes up, it’s 1/3 Heads and 2/3 Tails.
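The men's numbers can be checked by listing the equally likely outcomes. The following is only a sketch, assuming a fair coin and a fair Monday/Tuesday draw under Heads; the function and variable names are illustrative and not part of the original setup.

```python
from fractions import Fraction
from itertools import product

# Equally likely worlds: (coin, day Beauty is woken if Heads).
# Under Tails the day draw is irrelevant, since she is woken on both days.
worlds = list(product(["Heads", "Tails"], ["Monday", "Tuesday"]))

def beauty_awake(coin, heads_day, day):
    """True if Beauty is woken on `day` in this world."""
    return coin == "Tails" or heads_day == day

def p_heads_for_observer(day, sees_beauty_awake):
    """Credence in Heads for the man awake on `day`, given what he sees."""
    consistent = [(c, d) for c, d in worlds
                  if beauty_awake(c, d, day) == sees_beauty_awake]
    heads = [w for w in consistent if w[0] == "Heads"]
    return Fraction(len(heads), len(consistent))

bob_sees_awake = p_heads_for_observer("Monday", True)
bob_sees_asleep = p_heads_for_observer("Monday", False)
print(bob_sees_awake, bob_sees_asleep)  # 1/3 1
```

Calling `p_heads_for_observer("Tuesday", True)` gives the same 1/3 for Peter, since the setup is symmetric between the two men.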

A Thirder believes that Beauty shares the same credence as Bob or Peter when she wakes. As a Halfer, I endorse perspective disagreement. Unlike the guys, Beauty was guaranteed to encounter someone awake. Her credence therefore remains 1/2 for Heads and Tails, regardless of who she encounters.

What happens when the man awake reveals his name - say Bob? This reveals to Beauty that today is Monday. I would say that the probability of the coin remains unchanged from whatever it was before she got this information. For a Thirder this is 1/3 Heads, 2/3 Tails. For a Halfer it is still 1/2. I submit that the reason the probability remains unchanged is because something in both Heads and Tails was eliminated with parity. But suppose she got the information in stages.

She first asks the experimenter: is it true that the coin landed Tails and I'm talking to Peter? She’s told that this is not true. I regard this as a legitimate update that halves the probability of Tails, whereas you don't. Such an update would make her credence 2/3 Heads and 1/3 Tails. You would claim that no update is possible because what’s been ruled out is the self-location Tuesday/Tails. For you, ruling out Tuesday/Tails says nothing new about the coin. You argue that, whether the coin landed Heads or Tails, there is only one 'me' for Beauty and a guaranteed awakening applied to her. So the probability of Heads or Tails must be 1/2 before and after Tuesday/Tails is ruled out.

We might disagree whether ruling out the self-location Tuesday/Tails permitted an update or whether her credence must remain 1/2. But we can agree that, if she gets further information about a random event that had prior probability, she must update in the normal way. Even if ruling out a self-location told her nothing about the coin probability, it can't prevent her from updating if she does get this information.

So now she asks the experimenter: is it true that the coin landed Heads and I’m talking to Peter? She’s told that this is not true. This tells her that Bob is the one she's interacting with, plus she knows it's Monday. What's more, this is not a self-location that's been ruled out like before. The prior possibility of the coin landing Heads plus the prior possibility of her encountering Peter was a random sequence that has just been eliminated. It definitely requires an update. If her credence immediately before was 1/2 for Heads, it must be 1/3 now. If her credence was 2/3 for Heads – which I think was correct - then it is 1/2 now. Which is it?

That brings us back to Beauty’s position before the man says his name. Her credence for the coin is 1/2, before she learns who she’s with. Learning his identity rules out a possibility in both coin outcomes, as described above. The order in which she got the information makes no difference to what she now believes. The fact remains that a random event with Heads, and a self-location with Tails, were both ruled out. It's the parity in updating that makes her credence still 1/2, whoever turns out to be awake with her.
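The staged update described above can be written out explicitly. This is a sketch of the Halfer assignment only: it assumes 1/2 per coin outcome, with each half split evenly between the Bob and Peter awakenings. That even split of the Tails half is precisely the step under dispute in this thread, and the helper names are illustrative.

```python
from fractions import Fraction

# Assumed Halfer prior: 1/2 per coin outcome, each split evenly by who is awake.
prior = {
    ("Heads", "Bob"): Fraction(1, 4),
    ("Heads", "Peter"): Fraction(1, 4),
    ("Tails", "Bob"): Fraction(1, 4),
    ("Tails", "Peter"): Fraction(1, 4),
}

def rule_out(dist, cell):
    """Remove one cell and renormalise the rest (ordinary conditionalisation)."""
    kept = {k: v for k, v in dist.items() if k != cell}
    total = sum(kept.values())
    return {k: v / total for k, v in kept.items()}

def p_heads(dist):
    """Total probability mass on Heads."""
    return sum(v for (coin, _), v in dist.items() if coin == "Heads")

after_first = rule_out(prior, ("Tails", "Peter"))
after_both = rule_out(after_first, ("Heads", "Peter"))
other_order = rule_out(rule_out(prior, ("Heads", "Peter")), ("Tails", "Peter"))

print(p_heads(after_first), p_heads(after_both), p_heads(other_order))  # 2/3 1/2 1/2
```

Under this assignment the final credence is 1/2 in either order, which is the parity claim; the calculation says nothing about whether the even split of Tails is legitimate in the first place.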

Replies from: dadadarren↑ comment by dadadarren · 2022-03-05T18:45:49.011Z · LW(p) · GW(p)

Let me lay out the difference between my argument and yours, following your example.

After learning the name/day of the week, the halfer's probability is still 1/2. You said this is because something in both Heads and Tails was eliminated with parity. My argument is different: I would say there is no way to update the probability based on the name because it would involve using self-locating probability.

Let's break it down in steps as you suggested.

Suppose I ask: "is it true that the coin landed Tails and I'm talking to Peter?" and get a negative answer. You say this would eliminate half the probability for Tails. I say there is no way to say how much probability of Tails is eliminated (because it involves self-locating probability). So we cannot update the probability for this information. You say considering the answer, Tails is reduced to 1/4. I say considering the answer is wrong: if you consider it you get nothing meaningful, no value.

Suppose I ask: "is it true that the coin landed Heads and I’m talking to Peter?" and get a negative answer. You say this would eliminate half the probability for Heads making it 1/4. I agree with this.

Seeing Bob would effectively be the same as getting two negative answers altogether. How does it combine? You say Heads and Tails both eliminate half the probability (both 1/4 now), so after renormalizing the probability of Heads remains unchanged at 1/2. I say since one of the steps is invalid the combined calculation would be invalid too. There is no probability conditional on seeing Bob (again because it involves self-locating probability).

I suppose your next question would be: if the first question is invalid for updating, wouldn't I just update based on the second question alone, which would give a probability of Heads of 1/3?

That is correct as long as I indeed asked the question and got the answer. Like I said before: the long-run frequency for these cases would converge on 1/3. But that is not how the example is set up, it only gives information about which person is awake. If I actually asked this question then I would get a positive or negative answer. But no matter which person I see I could never get a positive result: even if I see Peter, there is still the possible case of a Tails-first awakening (which does not have a valid probability), so no positive answer. Conversely seeing Bob would mean the answer is negative but it also eliminates the case of Peter-Tails (again no valid probability). So the combined probability of Tails after seeing Bob and the probability of Tails after getting a negative answer are not the same thing.

That is also the case for non-anthropic probability questions. For example, a couple has two children and you want to give the probability both of them are boys. Suppose you have a question in mind: "is there a boy born on Sunday?". However, the couple is only willing to answer whether a child is born on a weekend. Meaning the question you have in mind would never have a positive answer. Anyway, the couple says "there is no boy born on a weekend". So your question got a negative answer. But that does not mean the probability of two boys given no boy born on the weekend is the same as the probability given no boy born on Sunday. You have to combine the case of no boy born on Saturday as well. This is straightforward. The only difference is in the anthropic example, the other part that needs to be combined together has no valid value. I hope this helps to pin down the difference. :)
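The two-children comparison can be worked out by enumeration. This is a minimal sketch, assuming each child's sex and birth day are independent and uniformly distributed (an idealisation not stated above); the function name is illustrative.

```python
from fractions import Fraction
from itertools import product

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]
# Assumed model: sex and birth day are independent and uniform for each child.
children = list(product(["boy", "girl"], DAYS))
families = list(product(children, repeat=2))  # 196 equally likely families

def p_two_boys_given_no_boy_on(excluded_days):
    """P(both boys | no boy was born on any of `excluded_days`)."""
    consistent = [f for f in families
                  if not any(sex == "boy" and day in excluded_days for sex, day in f)]
    two_boys = [f for f in consistent if all(sex == "boy" for sex, _ in f)]
    return Fraction(len(two_boys), len(consistent))

no_sunday_boy = p_two_boys_given_no_boy_on({"Sun"})
no_weekend_boy = p_two_boys_given_no_boy_on({"Sat", "Sun"})
print(no_sunday_boy, no_weekend_boy)  # 36/169 25/144
```

The two conditional probabilities differ, so a negative answer to the Sunday question is not interchangeable with the weekend information that was actually given.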

Replies from: Simon↑ comment by Simon · 2022-03-06T14:26:53.809Z · LW(p) · GW(p)

I doubt we’ll persuade each other :) As I understand it, in my example you’re saying that the moment a self-location is ruled out, any present and future updating is impossible – but the last known probability of the coin stands. So if Beauty rules out Heads/Peter and nothing else, she must update Heads from 1/2 to 1/3. Then if she subsequently rules out Tails/Peter, you say she can’t update, so she will stay with the last known valid probability of 1/3. On the other hand, if she rules out Tails/Peter first, you say she can’t update so it’s 1/2 for Heads. However, you also say no further updating is possible even if she then rules out Heads/Peter, so her credence will remain 1/2, even though she ends up with identical information. That is strange, to say the least.

I’ll make the following argument. When it comes to probability or credence from a first person perspective, what matters is knowledge or lack of it. People can use that knowledge to judge what is likely to be true for them at that moment. Their ignorance doesn’t discriminate between unknown external events and unknown current realities, including self-locations. Likewise, their knowledge is not invalidated just because a first person perspective might happen to conflict with a third person perspective or because the same credence may not be objectively verifiable in the frequentist sense. In either case, it’s their personal evidence for what’s true that gives them their credence, not what kind of truth it is. That credence, based on ignorance and knowledge, might or might not correspond to an actual random event. It might reflect a pre-existing reality - such as there is a 1/10 chance that the 9th digit of Pi is 6. Or it might reflect an unknown self-location – such as “today is Monday or Tuesday”, or “I’m the original or clone”. Whatever they’re unsure about doesn’t change the validity of what they consider likely.

You could have exactly the same original Sleeping Beauty problem, but translated entirely to self-location without the need for a coin flip. Consider this version. Beauty enters the experiment on a Saturday and is put to sleep. She will be woken in both Week 1 and in Week 2. A memory wipe will prevent her from knowing which week it is, but whenever she wakes, she will definitely be in one or the other. She also knows the precise protocol for each week. In Week 1, she will be woken on Sunday, questioned, put back to sleep without a memory wipe, woken on Monday and questioned. This completes Week 1. She will return the following Saturday and be put to sleep as before. She is now given a memory wipe of both her awakenings from Week 1. She is then woken on Sunday and questioned but her last memory is of the previous Saturday when she entered the experiment. She doesn’t know whether this is the Sunday from Week 1 or Week 2. Next she is put to sleep without a memory wipe, woken on Monday and questioned. Her last memory is the Sunday just gone, but she still doesn’t know if it’s Week 1 or Week 2. Next she is put back to sleep and given a memory wipe, but only of today’s awakening. Finally she is woken on Tuesday and questioned. Her last memory is the most recent Sunday. She still won’t know which week she’s in.

The questions asked of her are as follows. When she awakens for what seems to be the first time – always a Sunday – what is her credence for being in Week 1 or Week 2? When she awakens for what seems to be the second time – which might be a Monday or Tuesday – what is her credence for being in Week 1 or Week 2?

Essentially this is the same Sleeping Beauty problem. The fact that she has uncertainty of which week she’s in rather than a coin flip, doesn’t prevent her from assigning a credence/probability based on the evidence she has. On her Sunday awakening, she has equal evidence to favour Week 1 and Week 2, so it is valid for her to assign 1/2 to both. On her weekday awakenings, Halfers and Thirders will disagree whether it’s 1/2 or 1/3 that she’s in Week 1. If she's told that today is Monday, they will disagree whether it's 2/3 or 1/2 that she's in Week 1.

We could add Bob to the experiment. Like Beauty, Bob enters the experiment on Saturday. His protocol is the same, except that he is kept asleep on the Sundays in both weeks. He is only woken Monday of Week 1, then Monday and Tuesday of Week 2. Each time he’s woken, his last memory is the Saturday he entered the experiment. He therefore disagrees with Beauty. From his point of view, it’s 1/3 that they’re in Week 1, whereas she says it’s 1/2. If told it's Monday, for him it's 1/2 that they're in Week 1, and for her it's 2/3.

This recreates perspective disagreement – but exclusively using self-location. You might be tempted to argue that neither Beauty nor Bob can ever assign any probability or likelihood as to which week they’re in. I say it’s legitimate for them to do so, and to disagree.

Replies from: dadadarren↑ comment by dadadarren · 2022-03-06T19:27:25.301Z · LW(p) · GW(p)

Let's not dive into another example right away. Something is amiss here. I never said the order of getting the answers to "Is it Tails and Peter?" and "Is it Heads and Peter?" would change the probability. I said we cannot update based on the negative answer to "Is it Tails and Peter?" because it involves using self-locating probability. Whichever the order is, we can nevertheless update the probability of Heads to 1/3 when we get the negative answer to "Is it Heads and Peter?", because there is no self-locating probability involved here. But 1/3 is the correct probability only if Beauty did actually ask the question and get the negative response, i.e. there has to be a real question to update based on its answer. That does not mean Beauty would inevitably update P(Heads) to 1/3 no matter what.

Before Beauty opens her eyes, she could ask: "Is it Heads and Peter?". If she gets a positive answer then the probability of Heads would be 1. If she gets a negative answer the probability of Heads would update to 1/3. She could also ask "Is it Heads and Bob?". And the result would be the same. Positive answer: P(Heads)=1, negative answer: P(Heads)=1/3. So no matter which of the two symmetrical questions she is asking, she can only update her probability after getting the answer to it. I think we can agree on this.

The argument saying my approach would always update P(Heads) to 1/3 no matter which person I see is as follows: first of all, no real question is asked; just look at whether it is Peter or Bob. If I see Bob, then retroactively pose the question as "Is it Heads and Peter?" and get a negative answer. If I see Peter, then retroactively pose the question as "Is it Heads and Bob?" and get a negative answer. Playing the game like this would guarantee a negative answer no matter what. But clearly, you get the negative answer because you are actively changing the question to look for it. We cannot update the probability this way.
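The contrast between a question fixed in advance and a question chosen after looking can be sketched by counting runs of the experiment. This assumes a fair coin and a fair Bob/Peter draw under Heads, and it counts whole runs rather than individual awakenings; that counting choice and all names below are assumptions made for illustration.

```python
from fractions import Fraction
from itertools import product

# Equally likely worlds: (coin, man awake with Beauty if Heads).
worlds = list(product(["Heads", "Tails"], ["Bob", "Peter"]))

def men_seen(coin, heads_man):
    """Men Beauty meets over the whole experiment in this world."""
    return ["Bob", "Peter"] if coin == "Tails" else [heads_man]

def fixed_answer(coin, heads_man, asked_man):
    """Answer to a question fixed in advance: 'Is it Heads and <asked_man>?'"""
    return coin == "Heads" and heads_man == asked_man

def retroactive_answers(coin, heads_man):
    """Answers when the question names whichever man Beauty does NOT see."""
    other = {"Bob": "Peter", "Peter": "Bob"}
    return [fixed_answer(coin, heads_man, other[seen])
            for seen in men_seen(coin, heads_man)]

def p_heads(selected):
    """Fraction of the selected worlds in which the coin landed Heads."""
    return Fraction(sum(1 for coin, _ in selected if coin == "Heads"), len(selected))

fixed_negative = [w for w in worlds if not fixed_answer(*w, "Peter")]
retro_negative = [w for w in worlds if not any(retroactive_answers(*w))]
print(p_heads(fixed_negative), p_heads(retro_negative))  # 1/3 1/2
```

The retroactive question is negative in every world, so selecting on it filters nothing out and the fraction of Heads stays at 1/2, whereas the pre-committed question removes one Heads world and leaves 1/3.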

My entire solution is suggesting there is a primitive axiom in reasoning: first-person perspective. Recognizing it resolves the paradoxes in anthropics and more. I cannot argue why perspective is axiomatic except it intuitively appears to be right, i.e. "I naturally know I am this person, and there seems to be no explanation or reason behind it." Accepting it would overturn the Doomsday Argument (SSA) and the Presumptuous Philosopher (SIA); it means there is no way to think about self-locating probability, which explains the no-update after learning it's Monday; it explains why perspective disagreement in anthropics is correct; and it results in agreement between the Bayesian and frequentist interpretations in anthropics.

Because I regard it as primitive, if you disagree with it and argue there are good ways to reason and explain the first-person perspective and furthermore assign probabilities to it, then I don't really have a counter-argument. Except you would have to resolve the paradoxes your own way. For example, why do Bob and Beauty answer differently to the same question? My reason for perspective disagreement in anthropics is that the first-person perspective is unexplainable so a counterparty cannot comprehend it. What is your reason? (Just to be clear, I do not think there is a probability for it is Week 1 / Week 2, for either Beauty or Bob.) Do you think Doomsday Argument is right? What about Nick Bostrom's simulation argument, what is the correct reference class for oneself, etc.

Conversely, I don't think regarding the first-person perspective as not primitive, and subsequently, stating there are valid ways to think and assign self-locating probabilities is a counter-argument against my approach either. The merit of different camps should be judged on how well they resolve the paradoxes. So I like discussions involving concrete examples, to check if my approach would result in paradoxes of its own. I do not see any problem when applied to your thought experiments. The probability of Heads won't change to 1/3 no matter which person/color I see. I actually think that is very straightforward in my head. But I'm sensing I am not doing a good job explaining it despite my best effort to convince you :)

Replies from: Simon↑ comment by Simon · 2022-03-12T10:37:22.160Z · LW(p) · GW(p)

I’ve allowed some time to digest on this occasion. Let's go with this example.

A clone of you is created when you’re asleep. Both of you are woken with identical memories. Under your pillow are two envelopes, call them A and B. You are told that inside Envelope A is the title ‘original’ or ‘copy’, reflecting your body’s status. Inside Envelope B is also one of those titles, but the selection was random and regardless of status. You are asked the likelihood that each envelope contains ‘original’ as the title.

I’m guessing you'd say you can't assign any valid probability to the contents of Envelope A. However, you’d say it’s legitimate to assign a 1/2 probability that Envelope B contains ‘original’.

Is there a fundamental difference here, from your point of view? Admittedly if Envelope A contains 'original', this reflects a pre-existing self-location that was previously known but became unknown while you were asleep. Whereas if Envelope B contains 'original', this reflects an independent random selection that occurred while you were asleep. However, your available evidence is identical for what could be inside each envelope. You therefore have identical grounds to assign likelihood about what is true in both.

Suppose it's revealed that both envelopes contain the same word. You are asked again the likelihood that the envelopes contain ‘original’. What rules do you follow? Would you apply the non-existence of Envelope A’s probability to Envelope B? Or would you extend the legitimacy of Envelope B’s probability to Envelope A?

I'm guessing you would continue to distinguish the two, stating that 1/2 was still a valid probability for Envelope B containing 'original' but no such likelihood existed for Envelope A - even knowing that whatever is true for Envelope B is true for Envelope A. If so, then it appears to be a semantic difference. Indeed, from a first person perspective, it seems like a difference that makes no difference. :)

Replies from: dadadarren↑ comment by dadadarren · 2022-03-12T19:48:20.909Z · LW(p) · GW(p)

Here is the conclusion based on my positions: the probability of Original for Envelope A does not exist; probability of Original for Envelope B is 1/2; probability of Original for Envelope B given contents are the same does not exist. Just like previous thought experiments, it is invalid to update based on that information.

Remember my position says first-person perspective is a primitive axiomatic fact? e.g. "I naturally know I am this particular person. But there is no reason or explanation for it. I just am." This means arguments that need to explain the first-person perspective, such as treating it as a random sample, are invalid.

And the difference between Envelope A and B is that probability regarding B does not need to explain the first-person perspective, it can just use "I am this person" as given. My envelope's content is decided by a coin toss. Whereas probability regarding A needs to explain the first-person perspective (like treating it as a random sample).

Again this is easier seen with a frequentist approach.

If you repeat the same clone experiment a lot of times and keep recording whether you are the Original or the Clone in each iteration, then even as time goes on there is no reason for the relative fraction of "I am the Original" to converge to any particular value. Of course, we can count everyone from these experiments and the combined relative fraction would be 1/2. But without additional assumptions such as "I am a random sample from all copies", that is not the same as the long-run frequency for the first person.

In contrast, I can repeat the cloning experiment many times, and the long-run frequency for Envelope B is going to converge to 1/2 for me, as it is the result of a fair coin toss. There is no need to explain why "I am this person" here. So from a first-person perspective, the probability for B describes my experience and is verifiable, whereas the probability about A is not, unless we consider every copy together, which is not about the first person anyway.

Even though you didn't ask this (and I am not 100% set on this myself) I would say the "probability that the Envelope contents are the same" is also 1/2. I am either the Original or the Clone, there is no way to reason which one I am. But luckily it doesn't matter which is the case, the probability that Envelope B got it right is still an even toss. And long-run frequency would validate that too.

But what Envelope B says given it got it right depends on which physical copy I am. There is no valid value for P(Envelope B says Original|the contents are the same). For example, say I repeat the experiment 1000 times. And it turns out I was the Clone in 300 of those experiments and the Original in 700 experiments. Due to fair coin tosses, I would have seen about an equal number of Original vs Clone (500 each) in Envelope B, which corresponds to the probability of 1/2. And the contents would be the same about 150 times in experiments where I was the Clone and 350 times when I was the Original (500 total, which corresponds to the probability of "same content" being 1/2). Yet among all iterations where the contents are the same, I am the Clone in 30%.

But if I am the Original in all 1000 experiments, then for all iterations where the contents are the same, I am still 100% the Original. (The other two probabilities of 1/2 above still hold.) I.e. the relative fraction of Original in Envelope B given contents are the same depends on which physical copy I am. And there is no way to reason about it unless some additional assumptions are made (e.g. treating "I" as a random sample would cause it to be 1/2).

In other words, to assign a probability to Envelope B given contents are the same we must first find a way to assign self-locating probabilities. And that cannot be done.
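The 1000-iteration bookkeeping above can be reproduced with expected counts. This is a sketch only, assuming Envelope B is a fair coin toss independent of which copy I am; the split between Original and Clone iterations is an input, since no value is assigned to it here, and the function name is illustrative.

```python
from fractions import Fraction

def envelope_b_summary(n_original, n_clone):
    """Expected long-run fractions for Envelope B over my own iterations.

    n_original / n_clone: how many iterations I was the Original / the Clone.
    Envelope B is assumed to be a fair coin toss, independent of my status.
    """
    total = n_original + n_clone
    half = Fraction(1, 2)
    b_says_original = half * total         # expected count of 'original' in B
    same_and_original = half * n_original  # B says Original and I am the Original
    same_and_clone = half * n_clone        # B says Clone and I am the Clone
    same = same_and_original + same_and_clone
    return {
        "P(B says Original)": b_says_original / total,
        "P(same)": same / total,
        "P(B says Original | same)": same_and_original / same,
    }

mixed = envelope_b_summary(700, 300)
always_original = envelope_b_summary(1000, 0)
print(mixed["P(B says Original | same)"],
      always_original["P(B says Original | same)"])  # 7/10 1
```

The first two entries come out as 1/2 for any split, while the conditional value simply reproduces the fraction of iterations in which I was the Original, which is the quantity that has no first-person long-run frequency in this account.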

Replies from: Simon↑ comment by Simon · 2022-03-12T23:43:10.951Z · LW(p) · GW(p)

I’ll come back with a deeper debate in good time. Meanwhile I’ll point out one immediate anomaly.

I was genuinely unsure which position you’d take when you learnt the two envelopes were the same. I expected you to maintain there was no probability assigned to Envelope A. I didn’t expect you to invalidate probability for the contents of Envelope B. You argued this because any statement about the contents of Envelope B was now linked to your self-location – even though Envelope B’s selection was unmistakably random and your status remained unknown.

It becomes even stranger when you consider your position if told that the two envelopes were different. In that event, any statement about the contents of Envelope B refers just as much to self-location. If the two envelopes are different, a hypothesis that Envelope B contains ‘copy’ is the same hypothesis that you’re the original - and vice versa. Your reasoning would equally compel you to abandon probability for Envelope B.

Therein lies the contradiction. The two envelopes are known in advance to be either the same or different. Whichever turns out to be true will neither reveal nor suggest the contents of Envelope B. Before you discover whether the envelopes are the same or different, Envelope B definitely had a random 1/2 chance of containing ‘original’ or ‘copy’. Once you find out whether they’re the same or different, regardless of which turns out to be true, you're saying that Envelope B can no longer be assigned probability.

Something is wrong... :)

Replies from: dadadarren↑ comment by dadadarren · 2022-03-13T01:50:55.750Z · LW(p) · GW(p)

Haha, that's OK. I admit I am not the best communicator, nor is anthropics an easy topic to explain. So I understand the frustration.

But I really think my position is not complicated at all. I genuinely believe that. It just says take whatever the first-person perspective is as given, and don't come up with any assumptions to attempt explaining it.

Also want to point out I didn't say P(B says Original) is invalid; I said P(B says Original|contents are the same) is invalid. Some of your phrasing seems to suggest otherwise. Just want to clear that up.

And I'm not playing any tricks. Remember Peter/Bob. I said the probability of Heads is 1/2, but you cannot update on the information that you have seen Peter? The reason being it involves using self-locating probability? It's the same argument here. There was a valid P(Heads) while no valid P(Heads|Peter). There is a valid P(B says Original) but no valid P(B says Original|Same) for the exact same reason.

And you can't update the probability given you saw Bob either. But just because you are either going to see Peter or Bob, that does not mean P(Heads) is invalidated; you just can't update on Peter/Bob, that's all. Similarly, just because envelopes are either "same" or "different", doesn't mean P(B says Original) is invalid. Just cannot update on either.

And the coin toss and Envelope B are both random/unknown processes. So I am not trying to trick you. It's the same old argument.

And by suggesting think of repeating experiments and counting the long-run frequencies, I didn't leave much to interpretation. If you imagine repeating the experiments as a first-person and can get a long-run frequency, then the probability is valid. If there is no long-run frequency unless you come up with some way to explain the first-person perspective, then there is no valid probability. You can deduce what my position says quite easily like that. There aren't any surprises.

Anyway, I would still say arguing using concrete examples with you is an enjoyment. It pushes me to articulate my thoughts. Though I am hesitant to guess if you would say that's enjoyable :) I will wait for your rebuttal in good time.

Replies from: Simon↑ comment by Simon · 2022-03-13T16:46:26.247Z · LW(p) · GW(p)

Ok here's some rebuttal. :) I don’t think it’s your communication that’s wrong. I believe it’s the actual concept. You once said that yours is a view that no-one else shares. This does not in itself make it wrong. I genuinely have an open mind to understand a new insight if I’m missing it. However I’ve examined this from many angles. I believe I understand what you’ve put forward.

In anthropic problems, issues of self-location and first person perspective lie at the heart. In anthropic problems, a statement about a person’s self-location, such as “today is Monday” or “I am the original”, is indeed a first person perspective. Such a statement, if found to be true, is a fact that could not have been otherwise. It was not a random event that had a chance of not happening. From this, you’ve extrapolated – wrongly in my view - that normal rules of credence and Bayesian updating based on information you have or don’t yet have, are invalid when applied to self-location.

I’m reminded of the many worlds quantum interpretation. If we exist in a multiverse, all outcomes take place in different realities and are objectively certain. In a multiverse, credences would be deciding which world your first-person-self is in, not whether events happened. The multiverse is the ultimate self-location model. It denies objective probability. What you have instead are observers with knowledge or uncertainty about their place in the multiverse.

Whether theories of the multiverse prove to be correct or not, there are many who endorse them. In such a model – where probability doesn’t exist – it is still considered both legitimate and necessary for observers to assign likelihood and credence about what is true for them, and apply rules of updating based on information available.

I have a realistic example that tests your position. Imagine you’re an adopted child. The only information you and your adopted family were given is that your natural parents had three children and that all three were adopted by different families. What is the likelihood that you were the first born natural child? For your adopted parents, it’s straightforward. They assign a 1/3 probability that they adopted the oldest. According to you, as it’s a first-person perspective question about self-location, no likelihood can be assigned.

It won't surprise you to learn that here I find no grounds for you to disagree with your adopted parents, much less to invalidate a credence. Everyone agrees that you are one of three children. Everyone shares the same uncertainty of whether you’re the oldest. Therefore the credence 1/3 that this is the case must also be shared.

I could tweak this situation to allow perspective disagreement with your adopted family, making it closer to Sleeping Beauty - and introducing a coin flip. I may do that later.

Replies from: dadadarren↑ comment by dadadarren · 2022-03-14T18:34:17.521Z · LW(p) · GW(p)

While there isn't anything wrong with your summarization of my position, I wouldn't call it an extrapolation. Instead, I think it is other camps like SSA and SIA that are doing the extrapolation. "I know I am this person but have no explanation or reason for it" seems right, and I stick to it in reasoning. In my opinion, it is SSA and SIA that try to use random sampling in an unrelated domain to give answers that do not exist, which leads to paradoxes.

I have recognized my solution (PBR) as incompatible with MWI since I first began forming it. I even wrote about this explicitly, right after the solution to anthropics on my website. The deeper reason is that PBR has a different account of what scientific objectivity means. Self-locating probability is only the most obvious manifestation of this difference. I wrote about it in a previous post [LW · GW].

Nonetheless, the source of probability is the most criticized point about MWI: "Why does a bifurcating world with equal coefficients guarantee I would experience roughly the same frequencies? What forces the mapping between the "worlds" and my first-person experience?" Even avid MWI supporters like Sean Carroll regard this as the most telling criticism of MWI, and one that is especially hard to answer.

I would suggest not rejecting PBR just because one likes MWI, since nobody can be certain that MWI is the correct interpretation. Furthermore, there is no reason to be alarmed just because a solution of anthropics is connected with quantum interpretations. The two topics are connected, no matter which solution one prefers. For example, there is a series of debates between Darren Bradley and Alastair Wilson about whether or not SIA would naively confirm MWI.

Regarding the disagreement between the adopted son and parents about being firstborn, I agree there is no probability to "I am a firstborn". There simply is no way to reason about it. The question is set up so that it is easy to substitute it with "what is the probability that the adopted child is firstborn?", and the answer to that would be 1/3. But then it is not a question defined by your first-person perspective. It is about a particular unknown process (the adoption process), which can be analyzed without additional assumptions explaining the perspective.

To the parents, not the firstborn means they got a different child in the adoption process. But for you, what does "not being the firstborn" even mean? Does my soul get incarnated into one of the other two children? Do I become someone/something else? Or maybe never came into existence completely? None of these makes any sense unless you come up with some assumptions explaining the first-person perspective.

I'm certain this answer won't convince you. It would be better if there were a thought experiment with numbers. I will eagerly wait for your example. And maybe this time you can predict what my response would be by using the frequentist approach. :)

edit: Also please don't take it as criticizing you. The reason I think I am not doing a good job communicating is that I feel I have been giving the same explanation again and again. Yet my answer to the thought experiments seems to always surprise you. When I thought you would have already guessed what my response would be. And sometimes (like the order of the questions in Peter/Bob), your understanding is simply not what I thought I wrote.

I have always thought repeating the experiment and counting long-run frequencies is the surest way to communicate. It takes all theoretical metaphysical aspects out of the question and just presents solid numbers. But that didn't work very well here. Do you have any insights about how I should present my argument going forward? I need some advice.

Replies from: Simon↑ comment by Simon · 2022-03-16T10:43:33.477Z · LW(p) · GW(p)

Ok that’s fine. I agree MWI is not proven. My point was only that it is the absolute self-location model. Those endorsing it propose the non-existence of probability, but still assign the mathematics of likelihood based on uncertainty from an observer. Forgive me for stumbling onto the implications of arguments you made elsewhere. I have read much of what you’ve written over time.

I especially agree that perspective disagreement can happen. That's what makes me a Halfer. Self-location is at the heart of this, but I would say it is not because credences are denied. I would say disagreement arises when sampling spaces are different and lead to conflicting first-person information that can’t be shared. I would also say that, whenever you don't know which pre-existing time or identity applies to you, assigning subjective likelihood has as much meaning and legitimacy as it does to an unknown random event. I submit that it’s precisely because you do have credences based on uncertainty about self-location that perspective disagreement can happen.

You can also have a situation where a reality might be interpreted subjectively both as random and self-location. Consider a version of Sleeping Beauty where we combine uncertainty of being the original and clone with uncertainty about what day it is.

Beauty is put to sleep and informed of the following. A coin will be (or has already been) flipped. If the coin lands Heads, her original self will be woken on Monday and that is all. If the coin lands Tails, she will be cloned; if that happens, it will be randomly decided whether the original wakes on Monday and the clone on Tuesday, or the other way round.

Is it valid here for Beauty to assign probability to the proposition “Today is Monday” or “Today is Tuesday”? I’m guessing you will agree that, in this case, it is. If the coin landed Heads, being woken on Monday was certain and so was being the original. If it landed Tails, being woken on Monday or Tuesday was a separate random event from whichever version of herself she happens to be. Therefore she should assign 3/4 that today is Monday and 1/4 that today is Tuesday. We can also agree as halfers that the coin flip is 1/2 but, once she learns it’s Monday, she would update to 2/3 for Heads and 1/3 for Tails. However, if instead of being told it's Monday, she's told that she's the original, then double halfing kicks in for you.
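The arithmetic in the paragraph above can be checked with a short sketch. It assumes, as an illustration only, that the "randomly decided" day assignment under Tails is a fair 50/50 event for the awakening in question; the names `joint`, `marginal_day` and `posterior_coin_given_day` are hypothetical helpers, not anything from the discussion. It only reproduces the halfer bookkeeping described here and does not settle whether such credences are valid.

```python
from fractions import Fraction

half = Fraction(1, 2)

# Joint credences over (coin, day of this awakening), following the comment:
# Heads -> the original wakes on Monday only.
# Tails -> a separate random event (assumed fair) decides Monday or Tuesday.
joint = {
    ("Heads", "Monday"): half,
    ("Tails", "Monday"): half * half,
    ("Tails", "Tuesday"): half * half,
}

def marginal_day(day):
    """Total credence that today is the given day."""
    return sum(p for (_, d), p in joint.items() if d == day)

def posterior_coin_given_day(coin, day):
    """Bayesian conditioning: credence in the coin result after learning the day."""
    return joint.get((coin, day), Fraction(0)) / marginal_day(day)

p_monday = marginal_day("Monday")
p_tuesday = marginal_day("Tuesday")
p_heads_given_monday = posterior_coin_given_day("Heads", "Monday")
p_tails_given_monday = posterior_coin_given_day("Tails", "Monday")

print(p_monday, p_tuesday, p_heads_given_monday, p_tails_given_monday)
```

Exact fractions are used instead of floats so the results can be compared directly with the 3/4, 1/4, 2/3 and 1/3 quoted in the text.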

From your PBR position, the day she’s woken does have independent probability in this example, but her status as original or clone is a matter of self-location. Whereas I question whether there's any significant difference between the two kinds of determination. Also, in this example, the day she’s woken can be regarded as both externally random and a case of self-location. Whichever order the original and clone wake up if the coin landed Tails, there are still two versions of Beauty with identical memories of Sunday; one of these wakes on Monday, the other on Tuesday. If told that, in the event of Tails, the two awakenings were prearranged to correspond to her original and clone status instead of being randomly assigned, the number of awakenings is the same and nothing is altered for Beauty's knowledge of what day it is. I submit that in both scenarios, validity for credence about the day should be the same.

Replies from: dadadarren↑ comment by dadadarren · 2022-03-16T18:29:47.531Z · LW(p) · GW(p)

But my explanation for perspective disagreement is based on the primitive nature of the first-person perspective, i.e. it cannot be explained and is therefore incommunicable. If we say there is A GOOD WAY to understand and explain it, and we must assign self-locating probabilities this way, then why don't we explain our perspectives to each other as such, so we can have the exact same information and eliminate the disagreement?

If we say the question has different sample spaces for different people, as shown by the different relative frequencies obtained when the experiment is repeated from their respective perspectives, then why say there is still a valid probability when a perspective yields no relative frequency? This is not self-consistent.

To my knowledge, halfers have not provided a satisfactory explanation of perspective disagreement, even though Katja Grace and John Pittard pointed it out quite some time ago. And if halfers want to use my explanation for the disagreement, and at the same time reject my premise of primitive perspectives, then they are just putting assumptions over assumptions to preserve self-locating probability. To me, that's just our natural dislike of saying "I don't know", even when there is no way to think about it.

And what do halfers get by preserving self-locating probability? Nothing but paradoxes. Either we say there is a special rule of updating which keeps the double-halving instinct, or we update to 1/3. The former has been quite conclusively countered by Michael Titelbaum: as long as you assign a non-zero value to the probability of "today is Tuesday", it will result in paradoxes. The latter has to deal with Adam Elga's question from the paper which jump-started the whole debate: After knowing it is Monday, I will put a coin into your hand. You will toss it. The result determines whether you will be woken again tomorrow with a memory wipe. Are you really comfortable saying "I believe this is a fair coin and the probability it will land Heads is 1/3?".

I personally think if one wants to reject PBR and embrace self-locating probability, the better choice would be SIA, thus becoming a thirder. It still has serious problems, but they are not so glaring.

What you said about my position in the thought experiment is correct. And I still think the difference between self-locating and random/unknown processes is significant (which can be shown by relative frequencies' existence). Regarding this part: "the two awakenings were prearranged to correspond to her original and clone status instead of being randomly assigned", if that means it was decided by some rule that I do not know, then the probability is still valid. If the rule is disclosed to me, e.g. original=Monday and clone=Tuesday, then there is no longer a valid probability.

Replies from: Simon↑ comment by Simon · 2022-04-14T18:11:18.377Z · LW(p) · GW(p)

Hi Dadarren. I haven’t forgotten our discussion and wanted to offer further food for thought. It might be helpful to explore definitions. As I see it, there are three kinds of reality about which someone can have knowledge or ignorance.

Contingent – an event in the world that is true or false based on whether it did or did not happen.

Analytic – a mathematical statement or expression that is true or false a priori.

Self-location – an identity or experience in space-time that is true or false at a given moment for an observer.

I’d like to ask two questions that may be relevant.

a) When it comes to mathematical propositions, are credences valid? For example, if I ask whether the tenth digit of Pi is either between 1-5 or 6-0, and you don’t know, is it valid for you to use a principle of indifference and assign a personal credence of 1/2?

b) Suppose you’re told that a clone of you will be created when you’re asleep. A coin will be flipped. If it lands Heads, the clone will be destroyed and the original version of you will be woken. If it lands Tails, the original will be destroyed and the clone will be woken. Finding yourself awake in this scenario, is it valid to assign a 1/2 probability that you’re either the original or clone?

I would say that both these are valid and normal Bayesian conditioning applies. The answer to b) reflects both identity and a contingent event, the coin flip. For a), it would be easy to construct a probability puzzle with updatable credences about outcomes determined by mathematical propositions.

However I’m curious what your view is, before I dive further in.

Replies from: dadadarren↑ comment by dadadarren · 2022-04-15T18:38:49.213Z · LW(p) · GW(p)

For a, my opinion is while objectively there is no probability for the value of a specific digit of Pi, we can rightly say there is an attached probability in a specific context.

For example, it is reasonable to ask why I am focusing on the tenth digit of Pi specifically. Maybe I just happen to have memorized up to the ninth digit, and I am thinking about the immediate next one. Or maybe I just arbitrarily chose the number 10. Anyway, there is a process leading to the focus on that particular digit. If that process does not contain any information about what that value is, then a principle of indifference is warranted. From a frequentist approach, we can think of repeating such processes and checking the selected digit, which can give a long-run relative frequency.
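One way to picture "repeating such processes" is the rough sketch below. It assumes, purely for illustration, that the selection process picks a position uniformly at random among the first 50 decimal digits of Pi, without using any knowledge of the digit's value. The names `PI_DECIMALS`, `in_low_group` and `sampled_frequency` are hypothetical, and the real process that leads someone to focus on a digit may look quite different.

```python
import random
from fractions import Fraction

# First 50 decimal digits of Pi (after the leading 3), hardcoded for the sketch.
PI_DECIMALS = "14159265358979323846264338327950288419716939937510"

def in_low_group(digit):
    """True if the digit falls in the 1-5 group rather than the 6-0 group."""
    return digit in "12345"

# Exact share of the 50 digits that fall in the 1-5 group.
exact_share = Fraction(sum(in_low_group(d) for d in PI_DECIMALS), len(PI_DECIMALS))

def sampled_frequency(n_trials, seed=0):
    """Repeat an uninformed selection of a digit position and count 1-5 outcomes."""
    rng = random.Random(seed)
    hits = sum(in_low_group(rng.choice(PI_DECIMALS)) for _ in range(n_trials))
    return hits / n_trials

frequency = sampled_frequency(100_000)
print(f"exact share over 50 digits: {exact_share}, sampled frequency: {frequency:.3f}")
```

With only 50 digits the share is near but not exactly 1/2; the point of the sketch is just that a selection process which carries no information about the value yields a well-defined long-run frequency.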

For b, the probability is 1/2. It is valid because it is not a self-locating probability. Self-locating probability is about what is the first-person perspective in a given world, or the centered position of oneself in a possible world. But problem b is about different possible worlds. Another hint is the problem can be easily described without employing the subject's first-person perspective: what is the probability that the awakened person is the clone? Compare that to the self-locating probabilities we have been discussing: there are multiple agents and the one in question is specified by the first-person "I", which must be comprehended from the agent's perspective.

From a frequentist approach, that experiment can be repeated many times and the long-run frequency would be 1/2. Both from the first-person perspective of the experiment subject, and from the perspective of any non-participating observers.
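A minimal simulation of question b, assuming a fair coin and treating each repetition as independent, might look like this. The function name `fraction_clone_awake` is made up for the sketch. It counts how often the single awakened person is the clone, which is the third-person restatement of the question given above.

```python
import random

def fraction_clone_awake(n_trials, seed=0):
    """Repeat experiment b and return the fraction of runs where the clone wakes."""
    rng = random.Random(seed)
    clone_awake = 0
    for _ in range(n_trials):
        # Heads: clone destroyed, original wakes.
        # Tails: original destroyed, clone wakes.
        coin = rng.choice(["Heads", "Tails"])
        if coin == "Tails":
            clone_awake += 1
    return clone_awake / n_trials

frequency = fraction_clone_awake(100_000)
print(f"fraction of runs where the awakened person is the clone: {frequency:.3f}")
```

Because exactly one person wakes in every run, the count is the same whether it is tallied by the subject or by an outside observer, which matches the claim that both perspectives see the same long-run frequency here.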

Replies from: Simon↑ comment by Simon · 2022-04-16T22:32:31.057Z · LW(p) · GW(p)

Well in that case, it narrows down what we agree about. Mathematical propositions aren’t events that happen. However, someone who doesn’t know a specific digit of Pi would assign likelihood to its value with the same rules of probability as they would to an event they don’t know about. I define credence merely as someone’s rational estimate of what’s likely to be true, based on knowledge or ignorance. Credence has no reason to discriminate between the three types of reality I talked about, much less get invalidated.