The Assumed Intent Bias

post by silentbob · 2023-11-05T16:28:03.282Z · LW · GW · 13 comments

Summary: when thinking about the behavior of others, people seem to have a tendency to assume clear purpose and intent behind it. In this post I argue that this assumption of intent quite often is incorrect, and that a lot of behavior exists in a gray area where it’s easily influenced by subconscious factors.

This consideration is not new at all and relates to many widely known effects such as the typical mind fallacy [? · GW], the false consensus effect, black and white thinking and the concept of trivial inconveniences [LW · GW]. It still seems valuable to me to clarify this particular bias with some graphs, and have it available as a post one can link to.

Note that “assumed intent bias” is not a commonly used name, as I believe there is no commonly used name for the bias I’m referring to.

The Assumed Intent Bias

Consider three scenarios:

- When I quit my previous job, I was allowed to buy my work laptop from the company for a low price and did so. Hypothetically the company’s admins should have made sure to wipe my laptop beforehand, but they left that to me, apparently reasoning that had I had any intent whatsoever to do anything shady with the company’s data, I could have easily made a copy prior to that anyway. So they further assumed that anyone without a clear intention of stealing the company’s data would surely do the right thing then, and wipe the device themselves.

- At a different job, we continuously A/B-tested changes to our software. One development team decided to change a popular feature, so that using it required a double click instead of a single mouse click. They reasoned that this shouldn’t affect how much our users used the feature, because anyone who wants to use the feature can still easily do it, and nobody in their right mind would say “I will use this feature if I have to click once, but two clicks are too much for me!”. (The A/B test data later showed that usage of that feature had dropped quite significantly due to that change.)

- In debates about gun control, gun enthusiasts sometimes make an argument roughly like this: gun control doesn’t increase safety, because potential murderers who want to shoot somebody will find a way to get their hands on a gun anyway, whether they are easily and legally available or not.[1]

These three scenarios all have a similar shape: some person or group (the admins; the development team; gun enthusiasts) makes a judgment about the potential behavior (stealing sensitive company data; using a feature; shooting someone) of somebody else (leaving employees; users; potential murderers), and assumes that the behavior in question happens or doesn’t happen with full intentionality.

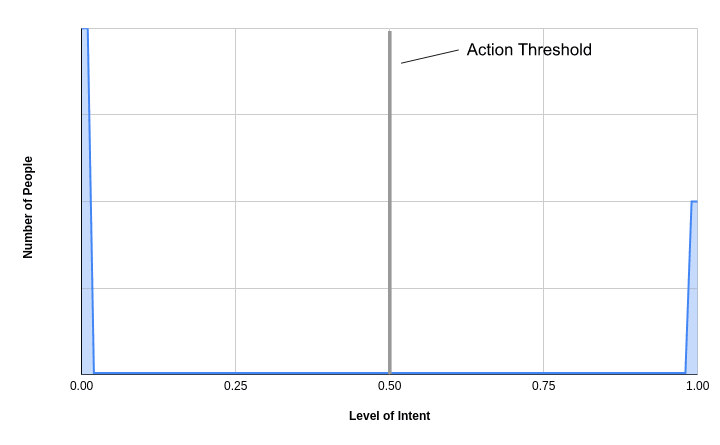

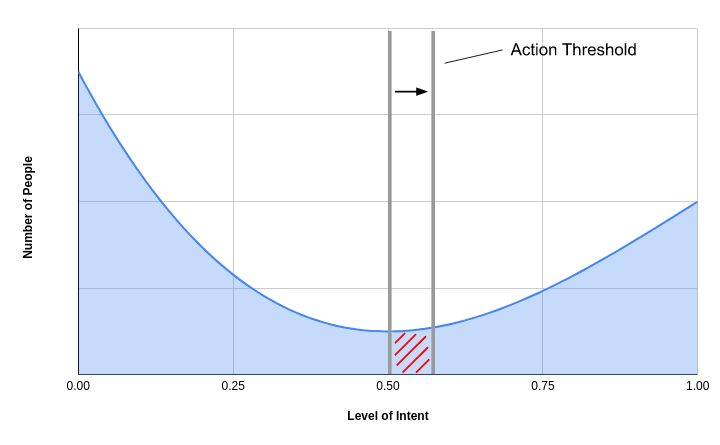

According to this view, if you plotted the number of people that have a particular level of intent with regards to some particular action, it may look somewhat like this:

This graph would represent a situation where practically every person either has a strong intention to act in a particular way (the peak on the right), or to not act in that way (peak on the left).

And indeed, in such a world, relatively weak interventions such as “triggering a feature on double click instead of single click”, or “making it more difficult to buy a gun” may not end up being effective: while such interventions would move the action threshold slightly to the right or left, this wouldn’t actually change people’s behavior, as everyone stays on the same side of the threshold. So everybody would still act in the same way they would otherwise.

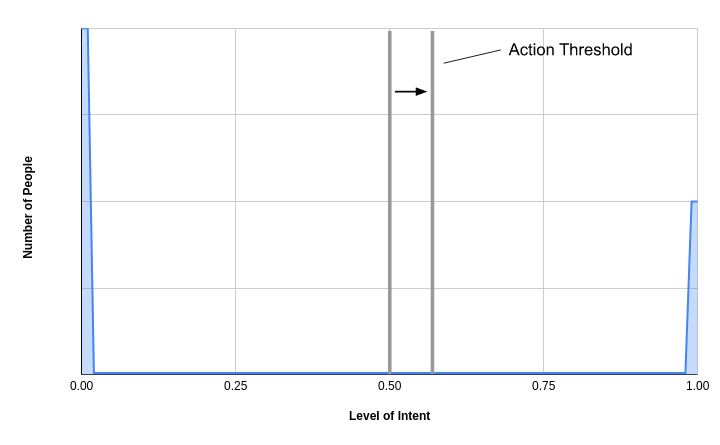

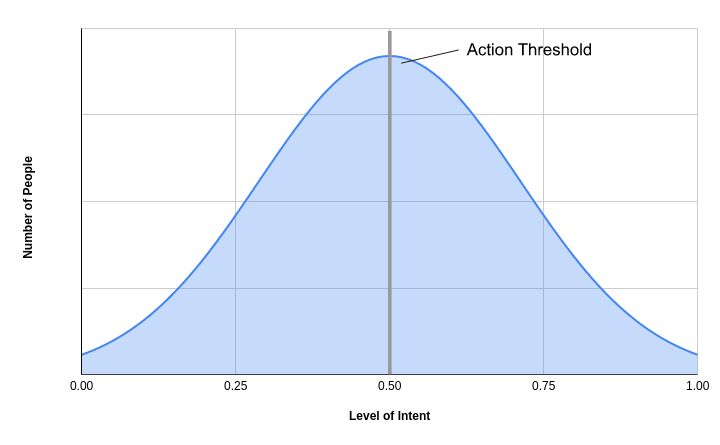

However, I think that in many, if not most, real life scenarios, the graph actually looks more like this:

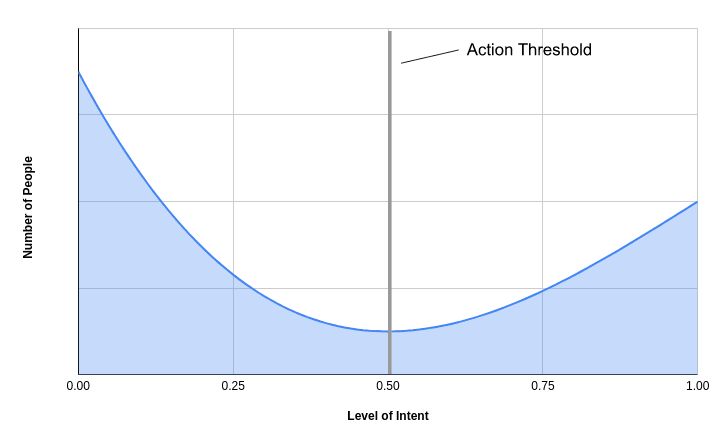

Or even this:

In these cases, only a relatively small number of people have a clear and strong intention with regards to the behavior, and a lot of people are somewhere in between. Here it becomes apparent that even subtle interventions have an impact: moving the action threshold even slightly in either direction means that some people end up on a different side of the threshold than before – their intention was just weak enough that a tiny additional inconvenience now deters them from that behavior, or vice versa, their intention was just strong enough that making the action a tiny bit easier now causes them to follow that behavior.

The impact of such interventions then depends on the distribution of people’s intent levels: the more people are undecided and/or don’t care about the given behavior, the greater the number of individuals that will react to interventions that move the threshold.
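To make the threshold argument concrete, here is a minimal simulation sketch. Everything in it is an assumption chosen for illustration, not data from the A/B test mentioned earlier: intent is modeled as a number between 0 and 1, the "polarized" population has two narrow peaks near 0.1 and 0.9, the "spread" population has one broad hump around 0.5, and the intervention moves the action threshold from 0.50 to 0.55. The function names are hypothetical.

```python
import random


def fraction_acting(intents, threshold):
    """Share of people whose intent level is at or above the action threshold."""
    return sum(1 for x in intents if x >= threshold) / len(intents)


def drop_in_usage(intents, old_threshold, new_threshold):
    """How much the acting share falls when the threshold moves."""
    return fraction_acting(intents, old_threshold) - fraction_acting(intents, new_threshold)


def clipped_gauss(rng, mean, sd):
    """Draw a normally distributed intent level, clipped to the range [0, 1]."""
    return min(1.0, max(0.0, rng.gauss(mean, sd)))


rng = random.Random(0)  # fixed seed so the sketch is reproducible
n = 100_000

# Assumed "polarized" world: half the people cluster near 0.1, half near 0.9.
polarized = [clipped_gauss(rng, 0.1 if i % 2 == 0 else 0.9, 0.05) for i in range(n)]

# Assumed "spread" world: most people sit somewhere around the middle.
spread = [clipped_gauss(rng, 0.5, 0.2) for _ in range(n)]

# A small intervention: the action becomes slightly less convenient.
old_threshold, new_threshold = 0.50, 0.55

for name, population in [("polarized", polarized), ("spread", spread)]:
    drop = drop_in_usage(population, old_threshold, new_threshold)
    print(f"{name}: share acting falls by {drop:.3f}")
```

Under these assumed parameters, the polarized population does not react to the shift at all, while in the spread population on the order of a tenth of all people stop acting. The exact numbers are not meaningful; the point is only that the effect of the same small intervention depends on how many people sit near the threshold.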

Of course, and I think this is partially where this fallacy is coming from, such cases are not as salient, and more difficult to imagine. It’s easy to think of a person who clearly wants to use a given feature of my software, or one who doesn't need that feature. But what does a user look like who uses the feature when it requires a single click, but doesn’t use the feature if it requires a double click? Clearly the data shows that these people exist, but I couldn’t easily point to a single person for whom that intervention is decisive in determining their behavior. What’s more, these people themselves are probably not even aware that this minor change affected them.

How to Avoid the Assumed Intent Bias

As with many biases, knowing about the assumed intent bias is half the battle. In my experience, the most common way people fall victim to this bias is by arguing that certain environmental changes, e.g. ones affecting how convenient or easy or obvious it is to follow some particular behavior, will not have any impact on how people actually behave. This argument makes sense only under the naive view that every person has clear intent with regards to that action. But it falls apart once you take into account that in most scenarios people are actually distributed over the whole spectrum of intention levels.

Software development and policy are domains in which this bias occurs rather frequently. But it basically affects any area that deals with influencing (or understanding) the behavior of others.

If you’re aware of the importance of trivial inconveniences [LW · GW] and are used to nuanced thinking, then you’re probably on the safe side. But it still doesn’t hurt to stay on the lookout for people (including yourself) arguing about the intentions of unidentified others. And if this happens, be aware that it’s easy to assume intent where there is little.

A lack of intent – in this case meaning being close to 0.5 on the graphs above – can mean at least two things: that people are simply undecided about something, or that they are unaware and/or don’t care. Most people don’t care about the things you care about to the same degree that you do. If I’ve been working for Generic Napkin Company for years and love the product, then it’s easy for me to assume that most of our customers also care deeply about our product. It might not occur to me that the vast majority of customers who buy the product don’t even know my company’s name, and would barely notice if their store decided to replace the product with that of a competing brand. They don’t waste a second thought on these napkins – they just buy them because it is the convenient and simple thing to do, and then they forget about it. And while this is not necessarily representative of most other decisions humans make, it probably still doesn’t hurt to keep in mind, whenever we think about how to change other people’s behavior, that most people care much less than we do about the decisions we care about.

(Update 2023-11-06: fixed a detail in the second graph)

[1] Of course this is not the only or even the main argument; I don’t mean to make any argument for or against gun control here, but just to point out that this one particular argument is flawed.

13 comments

Comments sorted by top scores.

comment by qbolec · 2023-11-05T20:51:08.246Z · LW(p) · GW(p)

How to combine this with the fact that "the nudge" apparently doesn't work https://phys.org/news/2022-08-nudge-theory-doesnt-evidence-future.html ?

Replies from: silentbob↑ comment by silentbob · 2023-11-06T06:38:04.622Z · LW(p) · GW(p)

Interesting, hadn't heard of this! Haven't fully grasped the "No evidence for nudging after adjusting for publication bias" study yet, but at first glance it looks to me as if it is rather evidence for small effect sizes than for no effect at all? Generally, when people say "nudging doesn't work", this can mean a lot of things, from "there's no effect at all" to "there often is an effect, but it's not very large, and it's not worth it to focus on this in policy debates", to "it has a significant effect, but it will never solve a problem fully because it only affects the behavior of a minority of subjects".

There's also this article making some similar points, overall defending the effectiveness of nudging while also pushing for more nuance in the debate. They cite one very large study in particular that showed significant effects while avoiding publication bias (emphasis mine):

The study was unique because these organizations had provided access to the full universe of their trials—not just ones selected for publication. Across 165 trials testing 349 interventions, reaching more than 24 million people, the analysis shows a clear, positive effect from the interventions. On average, the projects produced an average improvement of 8.1 percent on a range of policy outcomes. The authors call this “sizable and highly statistically significant,” and point out that the studies had better statistical power than comparable academic studies. So real-world interventions do have an effect, independent of publication bias.

(...)

We can start to see the bigger problem here. We have a simplistic and binary “works” versus “does not work” debate. But this is based on lumping together a massive range of different things under the “nudge” label, and then attaching a single effect size to that label.

Personally I have a very strong prior that nudging must have an effect > 0 - it would just be extremely surprising to me if the effect of an intervention that clearly points in one direction would be exactly 0. This may however still be compatible with the effects in many cases being too small to be worth to put the spotlight on, and I suspect it just strongly depends on the individual case and intervention.

Replies from: silentbob↑ comment by silentbob · 2024-02-13T16:02:42.043Z · LW(p) · GW(p)

Just to note I wrote a separate post [LW · GW] focusing on pretty much that last point:

Personally I have a very strong prior that nudging must have an effect > 0 - it would just be extremely surprising to me if the effect of an intervention that clearly points in one direction would be exactly 0. This may however still be compatible with the effects in many cases being too small to be worth to put the spotlight on, and I suspect it just strongly depends on the individual case and intervention.

comment by romeostevensit · 2023-11-05T17:19:37.457Z · LW(p) · GW(p)

Two more biases it is related to are fundamental attribution error and is-ought fallacy. That is to say, intentions are often interpreted as outputs of essential qualities of the person rather than contingent on the situation, and intentions are often wrapped up with implicit oughts that multiple parties will interpret differently.

comment by NickH · 2023-11-06T08:06:42.230Z · LW(p) · GW(p)

This is a great article that I would like to see go further with respect to both people and AGI.

With respect to people, it seems to me that, once we assume intent, we build on that error by then assuming the stability of that intent (because people's intents tend to be fairly stable), which then causes us to feel shock when that intent suddenly changes. We might then see this as intentional deceit and wander ever further from the truth - that it was only an unconscious whim in the first place.

Regarding AGI, this is linked to unwarranted anthropomorphism, again leading to unwarranted assumptions of stability. In this case the problem appears to be that we really cannot think like a machine. For an AGI, at least based on current understandings, there are, objectively, more or less stable goals, but our judgement of that stability is not well founded. For current AI, it does not even make sense to talk about the strength of a "preference" or an "intent" except as an observed statistical phenomenon. From a software point of view, the future values of two possible actions are calculated and one number is bigger than the other. There is no difference, in the decision making process, between a difference of 1,000,000 and 0.000001; in either case the action with the larger value will be pursued. Unlike a human, an AI will never perform an action halfheartedly.

comment by CrimsonChin · 2023-11-06T01:10:08.143Z · LW(p) · GW(p)

With this in mind, it seems odd that a lot of agile developers build software around "user stories". Seems to lead us right into the trap of imagining a user's intentions.

Luckily I think the industry is moving away from user stories.

Replies from: NickH, silentbob↑ comment by NickH · 2023-11-06T07:43:37.923Z · LW(p) · GW(p)

I don't think this is relevant. It only seems odd if you believe that the job of developers is to please everyone rather than to make money. User Stories are reasonable for the goal of creating software that will make a large proportion of the target market want to buy that software. Numerous studies and real world evidence show that the top few percent of products capture the vast majority of the market, and therefore software companies would be unhappy if their developers did not show a clear bias. There would only be a downside if the market showed the U-shaped distribution and the developers were also split on this distribution, potentially leading to an incoherent product, but this is normally prevented by having a design authority.

Replies from: CrimsonChin↑ comment by CrimsonChin · 2023-11-06T14:12:07.461Z · LW(p) · GW(p)

I think when you say, "I don't think this is relevant" you mean... I agree with your premise (that user stories are related to the assumed intent bias) but I don't think that we should upend user stories yet because they do what they are supposed to.

To which I agree.

Development is complex and realistically even with user stories, developers are considering other users (not in the narrative). If you were to take away user stories and focus on tasks, developers would still imagine user intent. By using user stories we are just shifting focus on intent. Which I think is usually a net positive. This post helped me illuminate in my head where it might not be a net positive.

↑ comment by silentbob · 2023-11-06T06:02:37.453Z · LW(p) · GW(p)

Unless I misunderstand your comment, isn't it rather the opposite of odd that user stories are so popular, given that this is what the bias would predict? That being said, maybe I've argued a bit too strongly in one direction with this post - I wouldn't even say that user stories are detrimental or useless. Depending on your product, it may well be that some significant ratio of users have strong intent. My main claim is that in most situations, the number of people who are closer to the middle of the spectrum is >0. But it's not necessary for that group to dominate the distribution.

So in my view, it can still make sense to focus on a subgroup of your users who know what they're doing, as long as you remain aware that this will not apply to all users. E.g. when A/B testing, you should expect by default that making any feature even mildly less convenient to use will have negative effects. So you should not be surprised to see that result - but it may still be the right choice to make such a change nonetheless, depending on what benefits you hope to get from it.

Replies from: CrimsonChin↑ comment by CrimsonChin · 2023-11-06T13:53:14.200Z · LW(p) · GW(p)

I may have not been clear. I am agreeing with the entire post. I agree with your comment too that "user stories" arose most likely for the same reason as this bias.

I also agree with you that figuring out intention is an important part of development. A majority of users will use my software with the same intent.

I just meant to say that I immediately thought of "user stories" while reading this post. My initial thought was that user stories focus too much on intent. For example, if you are hyper focused on the user trying to reset their password, you may neglect the user who accidentally clicked the reset password button and just wants to navigate back to the log-in page. Would there be benefit to removing the user story as a goal and just making the goal "create a reset password page"? I agree with you though, user stories serve their purpose and might be more of a net-good. This post just helped me recognize a potential pitfall of them.

comment by dirk (abandon) · 2024-02-13T16:44:27.566Z · LW(p) · GW(p)

But what does a user look like who uses the feature when it requires a single click, but doesn’t use the feature if it requires a double click? Clearly the data shows that these people exist, but I couldn’t easily point to a single person for whom that intervention is decisive in determining their behavior.

I use Discord, which recently switched the file-upload feature from requiring one click to requiring two (the first of which, if you single-click as you used to rather than double-clicking to skip, opens a context menu that I never use and did not want). I do still share images most of the time, but every single time I upload an image it's noticeably higher-friction and more frustrating than it previously was, and if I valued the feature less I can easily imagine ceasing to use it.

Prior to the update, the path from wanting to share a file to actually doing so was sufficiently short to feel effortless. The extra click, even though it's minor, adds a stumbling block in the middle of what was previously an effectively-atomic action (and moreover, adds latency; there's not very much more, if I remember to make my click a double, but there's still some, and as a user I consider latency one of the worst crimes a developer can commit).

Replies from: silentbob↑ comment by silentbob · 2024-02-17T13:26:30.165Z · LW(p) · GW(p)

Thanks for the example! It reminds me of how I once was a very active Duolingo user, but then they published some update that changed the color scheme. Suddenly the Duolingo interface was brighter and lower contrast, which just gave me a headache. At that point I basically instantly stopped using the app, as I found no setting to change it back to higher contrast. It's not quite the same of course, but probably also something that would be surprising to some product designers -- "if people want to learn a language, surely something so banal as brightening up the font color a bit would not make them stop using our app".

comment by denyeverywhere (daniel-radetsky) · 2023-11-06T09:06:18.418Z · LW(p) · GW(p)

In your first example, it's clear that expected loss is as important as intent. It's not just that you probably don't have a strong intent to misuse their data. It's that the cost of you actually having this intent is pretty small when you only have access to whatever data is left on your laptop, compared to when you had access to a live production database or whatever. In other words, it's not that they necessarily have some sort of binary model of intent to screw them. Even if they have some sort of distribution of the likelihood that either now or soon you will want to misuse the data, it doesn't matter because the expected loss is so small in any model.

To impute a binary model of intent to screw them, they'd have to do something like this: Previously we had lots of people who just had root access to our production environment. We now want to tighten up and give everyone permission sets tailored to their actual job requirements. However, silentbob has been with us for a while and would have screwed us by now if that was their intent, so let's just let them keep their old root account since that's slightly less work and might slightly improve productivity.

In your second example, I'm involuntarily screaming NO NO NO DO NOT MAKE THAT CHANGE WHAT THE FUCK IS WRONG WITH YOU before I even get to their reasoning. By the time I've read their reasoning I already want them fired. When you report the results of the A/B test data, I'm thinking: well of course the data showed that. How could you possibly think anything else could happen?

I'm starting to think I've been programming for too long. Like, I didn't even have to think to know what would actually happen. I just felt it immediately.

In your third example, I don't think that's how gun enthusiasts usually reason about this point, but I respect that this isn't really what you're getting at.