The Greedy Doctor Problem

post by Jan (jan-2) · 2021-11-16T22:06:15.724Z · 10 comments

This is a link post for https://universalprior.substack.com/p/the-greedy-doctor-problem

Contents

- What is the Greedy Doctor Problem?
- Some background on the problem
- Three approaches to handling greedy doctors
  - Scenario one: just pay the doctor, dammit.
  - Scenario two: Do the obvious thing.
  - Scenario three: Do the other obvious thing.
- Truth values and terminal diseases
- 10 comments

TL;DR: How to reason about people who are smarter than you. A few proposals, interspersed with reinforcement learning and humorous fiction. Ending on a surprising connection to logical inductors.

What is the Greedy Doctor Problem?

I came up with a neat little thought experiment[1]:

You are very rich and you want to make sure that you stay healthy. But you don't have any medical expertise and, therefore, you want to hire a medical professional to help you monitor your health and diagnose diseases. The medical professional is greedy, i.e. they want to charge you as much money as possible, and they do not (per se) care about your health. They only care about your health as far as they can get money from you. How can you design a payment scheme for the medical professional so that you actually get the ideal treatment?

Over the last few weeks, I've been walking around and bugging people with this question to see what they come up with. Here I want to share some of the things I learned in the process with you, as well as some potential answers. I don't think the question (as presented) is completely well-formed, so the first step to answering it is clarifying the setup and deconfusing the terms. Also, as is typical with thought experiments, I do not have a definitive "solution" and invite you (right now!) to try and come up with something yourself[2].

Some background on the problem

The subtext for the thought experiment is: How should you act when interacting with someone smarter than yourself? What can you say or do when your interlocutor has thought of everything you might say and more? Should you trust someone's advice when you can't pinpoint their motivation? As a Ph.D. student, I run into this problem around three to five times a week when interacting with colleagues or my advisor[3].

After bugging a few people I learned that (of course) I'm not the first person to think about this question. In economics and political science, the situation is known as the principal-agent problem and is defined as "a conflict in priorities between a person or group and the representative authorized to act on their behalf. An agent may act in a way that is contrary to the best interests of the principal." This problem arises, for example, in conflicts between corporate management and shareholders, clients and their lawyers, or elected officials and their voters. Well-trodden territory.

With decades of literature from different academic fields, can we really expect to contribute anything original? I hope so, in particular since all the previous research on the topic is constrained to "realistic" solutions and bakes in a lot of assumptions about how humans operate. That's not the spirit of this thought experiment. Do you want to think about whether sending the doctor in a rocket to Mars might help? Please do[4]. Don't let yourself be constrained by practicalities[5].

In this spirit, let us think about the problem from the perspective of interactions between abstract intelligent agents. Here, Vinge's principle is relevant: in domains complicated enough that perfect play is not possible, less intelligent agents will not be able to predict the exact moves made by more intelligent agents. The reasoning is simple; if you were able to predict the actions of the more intelligent agent exactly, you could execute the actions yourself and effectively act at least as intelligently as the "more intelligent" agent - a contradiction[6]. In the greedy doctor thought experiment, I assume the doctor to be uniformly more knowledgeable than me, therefore Vinge's principle applies.

While this impossibility result is prima facie discouraging, it reveals a useful fact about the type of uncertainty involved. Both you and the doctor have access to the same facts[7] and have the same amount of epistemic uncertainty. The difference in uncertainty between you and the doctor is instead due to differences in computational capacity; it is logical uncertainty. Logical uncertainty behaves fairly differently from epistemic uncertainty; in particular, different mathematical tools are required to operate on it[8].

But having said all that, I have not encountered any satisfying proposals for how to approach the problem, nor convincing arguments for why these approaches fail. So let's think about it ourselves.

Three approaches to handling greedy doctors

Here is how I think about the situation:

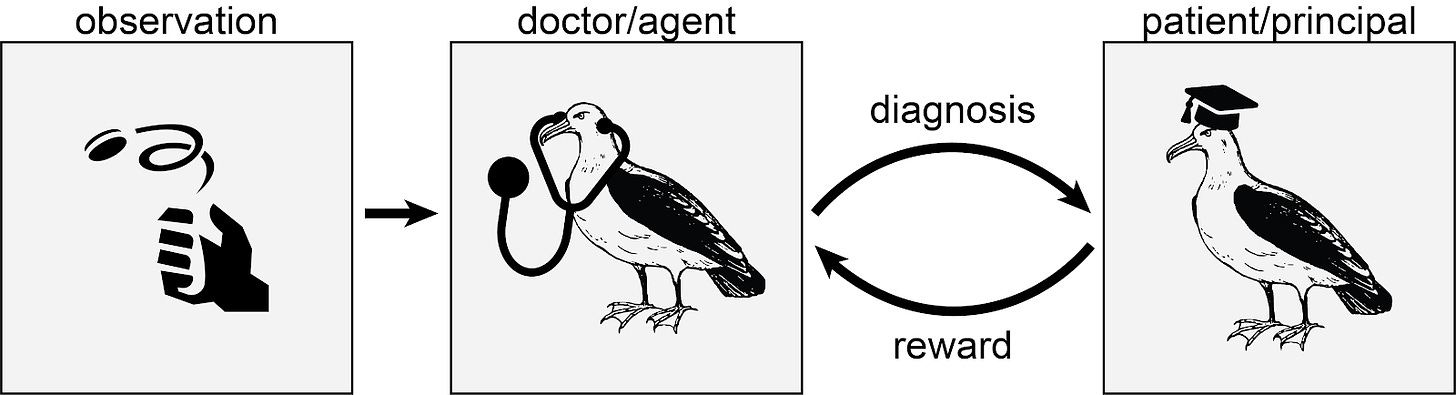

There is a ground truth “observation” about whether you are actually sick or not. Only the doctor has access to that observation and makes a diagnosis that might or might not be based on the observation. You, the patient, receive the diagnosis and decide whether or not to pay the doctor.

This is a (slightly pathological) Markov Decision Process. The observations come from a set of states S, which I model as a fair coin flip[9]. "Tails" is "treatment" and "Heads" is "healthy". Similarly, the diagnosis of the doctor comes from a set of actions A, where the doctor can either declare that the patient needs "Treatment" or is "Healthy". The payment from the patient to the doctor is the reward, which is a function Φ that only depends on the diagnosis of the doctor, not on the actual observation. Finally, the strategy according to which the doctor diagnoses the patient is a policy π, which assigns each possible diagnosis a probability given the observation.

(Feel free to ignore the squiggles, it’s just a fancy way of saying what I just said in the preceding paragraph.)
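For readers who do want the squiggles, here is one way to write the set-up in symbols. The notation is my reconstruction from the paragraph above (lowercase h and t for diagnoses, as used later in the post), not necessarily the one in the original figure:

```latex
\begin{aligned}
S &= \{H, T\}, \qquad P(H) = P(T) = \tfrac{1}{2} && \text{(observation: a fair coin)}\\
A &= \{h, t\} && \text{(diagnosis)}\\
\pi(a \mid s) &\in [0, 1], \quad \textstyle\sum_{a \in A} \pi(a \mid s) = 1 && \text{(the doctor's policy)}\\
\Phi &: A \to \mathbb{R} && \text{(payment: depends on the diagnosis only)}\\
\mathbb{E}_{\pi}[\Phi] &= \sum_{s \in S} P(s) \sum_{a \in A} \pi(a \mid s)\, \Phi(a) \;\le\; \max_{a \in A} \Phi(a)
\end{aligned}
```

Because Φ never sees s, the bound is reached by any policy that puts all its probability on a best-paid diagnosis for every observation, whatever the coin says. With a flat rate every policy attains it, so nothing ties the diagnosis to your health.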

What does this set-up buy us?

Scenario one: just pay the doctor, dammit.

This first approach appears silly after setting up all the mathematical apparatus, but I include it since I got this suggestion from one or two people: Why don't we just pay the doctor when they diagnose something?

In their defense, this is a very reasonable approach when we model the doctor as at least partially human. However, when we model the doctor as truly greedy[10], we observe a very familiar failure mode. If you pay the doctor every time they diagnose a disease, they will diagnose you with everything and take the money - and the treatment will not actually be good for you. I think this would be a bit like the following scenario[11]:

Albert: Yes Dr. Jones, what is it?

Dr. Jones: Ahhh, Albert! Good that I finally reach you. Did you not get my other calls?

Albert: The previous 37 calls that went to voice mail where you get increasingly exasperated and say that I have to come see you?

Dr. Jones: ...

Albert: ...

Dr. Jones: ...

Albert: I must have missed those.

Dr. Jones: Ah, I see. My apologies for the insistence, but I assure you, I only have your best at heart. I had another look at the blood work.

Albert: ...

Dr. Jones: ...

Albert: ...

Dr. Jones: ...

Albert: ... *sigh* What is it this ti-

Dr. Jones: WATER ALLERGY!

Albert: Don't be ri-

Dr. Jones: Albert, dear boy, listen to me. Please listen to me, this is a matter of (your!) life and death. Stay away from water in any way, shape or form. No swimming, bathing, showering or taking a stroll in a light drizzle. And come to my office as soon as possible. We have to commence treatment immediately. Immediately, do you understand? Your insurance is still...?

Albert: ...

Dr. Jones: ...

Albert: ...

Dr. Jones: ...

Albert: Yes, it is sti-

Dr. Jones: Great! Great news. Okay, no more time to quiddle. I've sent you a taxi to pick you up in five. Wait outside.

Albert: But it's raining?

Dr. Jones: *hung up*

If you pay them whenever you are diagnosed as healthy, they will diagnose exactly that. A flat rate is independent of whether they diagnose anything, and they will behave randomly. When you impose an "objective metric" like heart-rate variability, they will goodhart it.

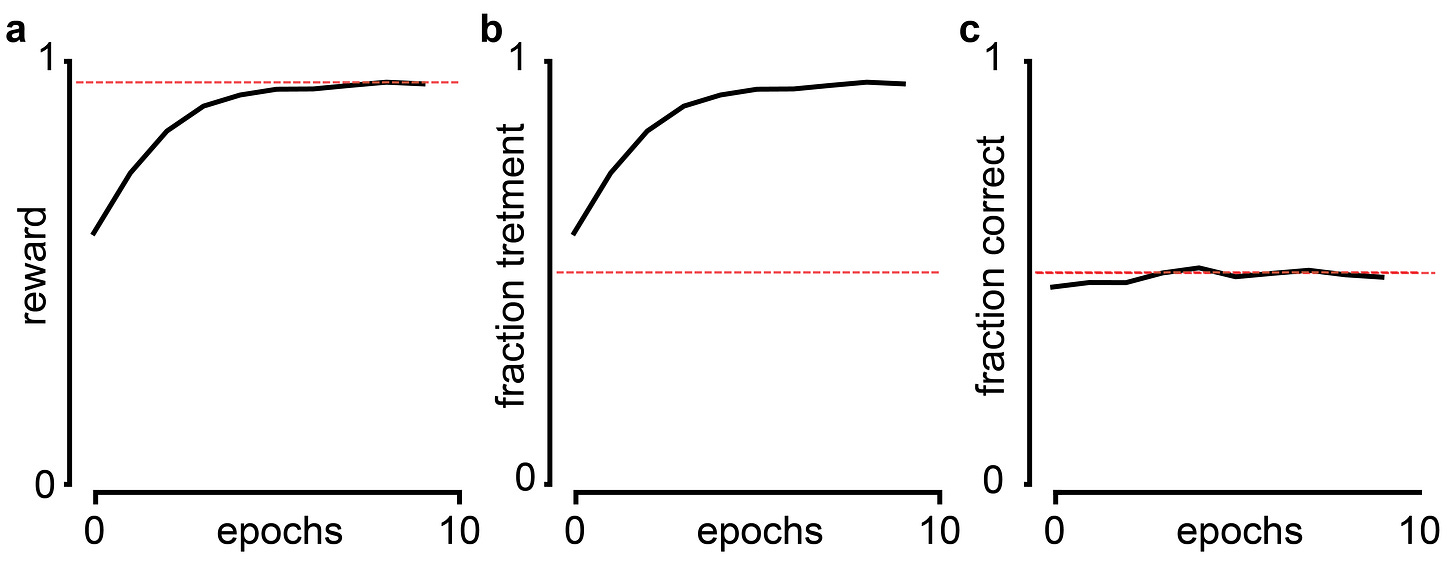

So that you don't just have to trust me that something like this is bound to happen in this set-up, here is what happens when I train a reinforcement learning agent with Q-Learning[12] using the proposed reward function:

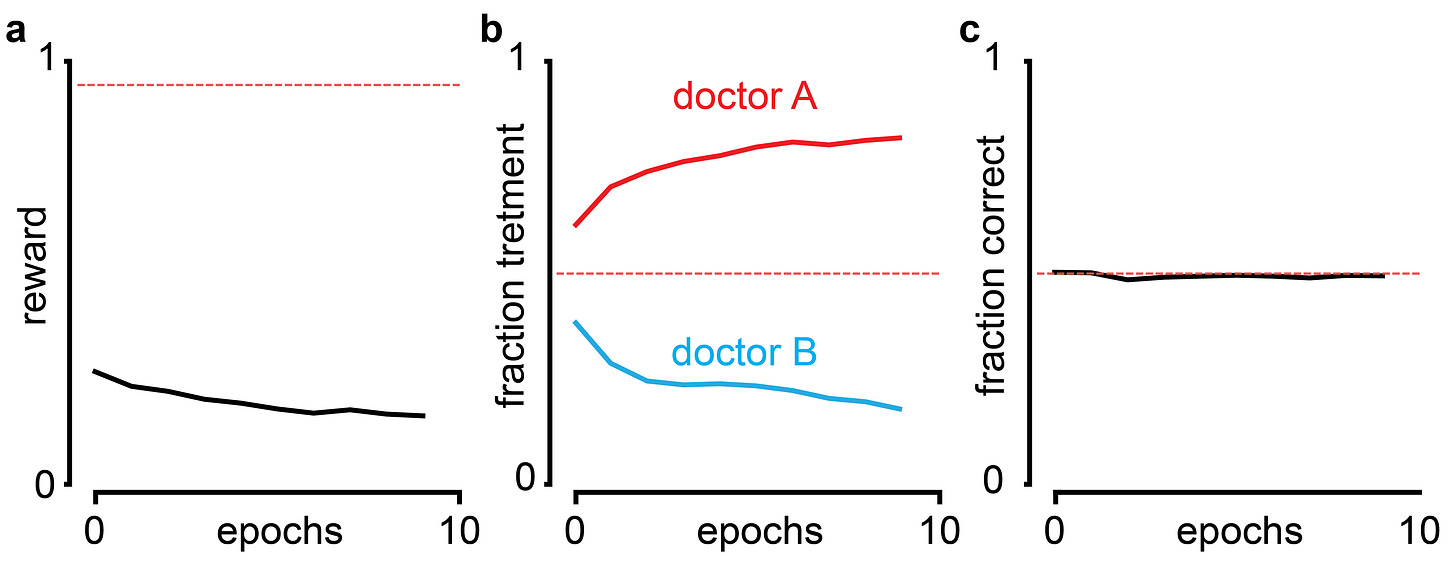

A greedy doctor incentivized to diagnose "treatment" will diagnose treatment a lot. (a) Reward of the agent per epoch, averaged over 300 runs. Dashed line indicates the maximal reward possible (epsilon-greedy with ε = 5%). (b) Fraction of "treatment" diagnoses per epoch, averaged over 300 runs. Dashed line indicates chance level. (c) Fraction of correct decisions per epoch, averaged over 300 runs. Dashed line indicates chance level.

This is a classic case of outer alignment failure: The thing we wrote down does not actually capture the thing we care about. Try again.
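The training run in the figure can be sketched in a few lines. This is a minimal sketch, not the author's code. It assumes tabular Q-Learning in which every consultation is a one-step episode, so the update target is just the payment. It uses ε-greedy exploration with ε = 5% as in the caption. The learning rate, run length and single seed are my own illustrative choices, where the figure averages over 300 runs:

```python
import random

STATES = ("H", "T")   # observation: fair coin, "H" = healthy, "T" = needs treatment
ACTIONS = ("h", "t")  # diagnosis: "healthy" or "treatment"


def train_doctor(reward_fn, epochs=2000, alpha=0.1, epsilon=0.05, seed=0):
    """Tabular Q-Learning in which every consultation is a one-step episode.

    Returns (fraction of "t" diagnoses, fraction of correct diagnoses).
    """
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    n_treatment = n_correct = 0
    for _ in range(epochs):
        s = rng.choice(STATES)                 # nature flips the coin
        if rng.random() < epsilon:             # explore
            a = rng.choice(ACTIONS)
        else:                                  # exploit, breaking ties at random
            best = max(q[(s, b)] for b in ACTIONS)
            a = rng.choice([b for b in ACTIONS if q[(s, b)] == best])
        r = reward_fn(a)                       # the payment never looks at s
        q[(s, a)] += alpha * (r - q[(s, a)])   # one-step episode: target is just r
        n_treatment += a == "t"
        n_correct += a == s.lower()
    return n_treatment / epochs, n_correct / epochs


def pay_for_treatment(a):
    return 1.0 if a == "t" else 0.0


def pay_for_healthy(a):
    return 1.0 if a == "h" else 0.0


for name, fn in [("pay for 't'", pay_for_treatment), ("pay for 'h'", pay_for_healthy)]:
    frac_t, acc = train_doctor(fn)
    print(f"{name}: treatment rate {frac_t:.2f}, accuracy {acc:.2f}")
```

With pay-per-treatment the doctor settles on "t" in both states, so the treatment rate sits near 1 − ε/2 while accuracy hovers around chance. Paying for "healthy" mirrors this.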

Scenario two: Do the obvious thing.

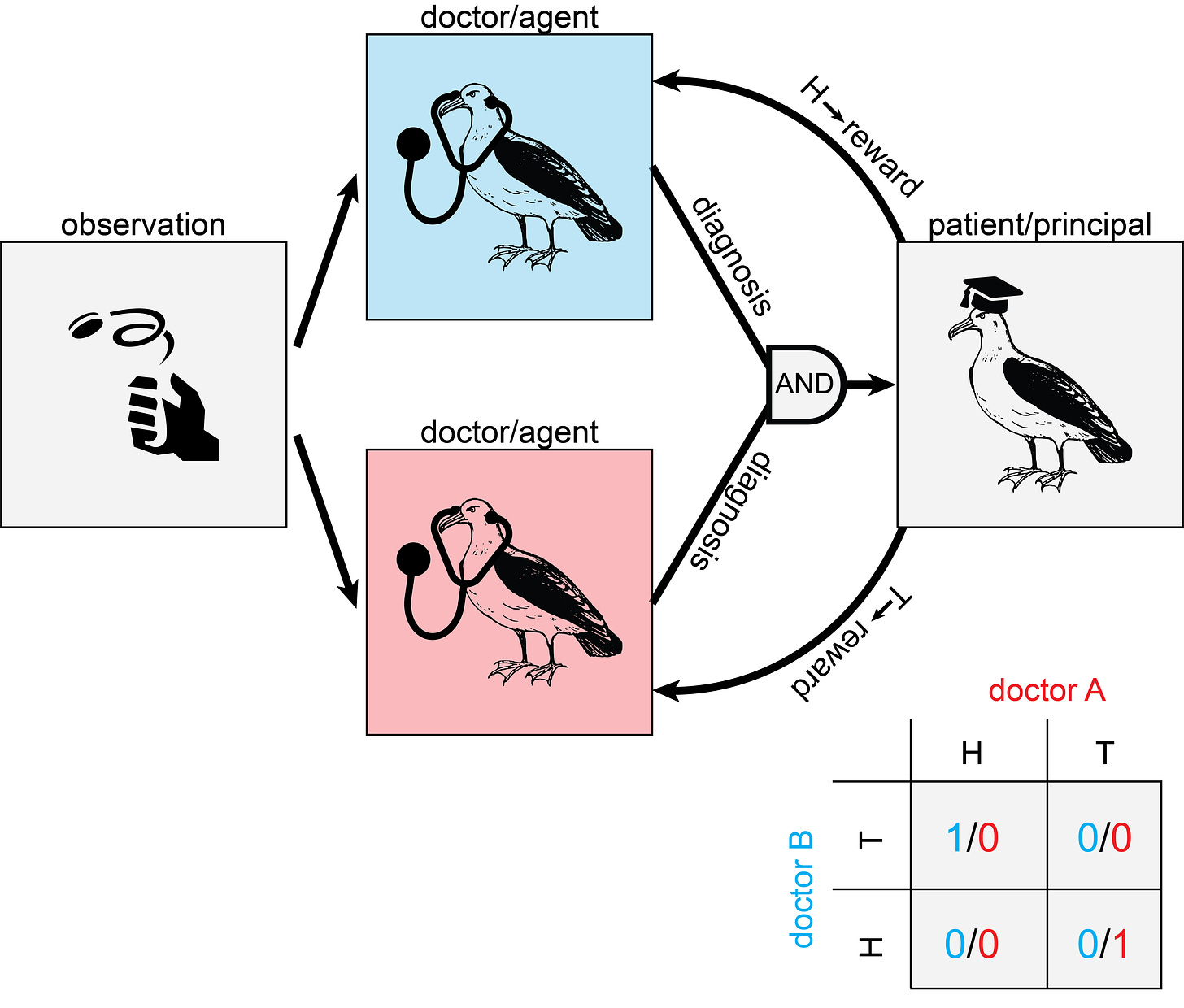

The second proposed solution is very commonsensical: Just ask for a second opinion and only pay the doctors when they come to the same conclusion. While this sounds clever, it falls into the same trap as before. When both doctors are greedy, they will coordinate and both always say that you are either healthy or that you need treatment.

However, with a little twist we can get closer to a solution: Reward one doctor only if both doctors say you're healthy. Reward the other doctor only if both doctors say you require treatment.

As before, the ground truth is determined by observation. But this time, it is shared between two doctors, who each get to give an independent diagnosis. Reward is only handed out when both doctors agree. Doctor A only gets paid when both doctors diagnose “healthy”. Doctor B only gets paid when both doctors diagnose “treatment“.

This payment scheme rules out scenarios where both doctors only diagnose whatever they get paid for. It also disincentivizes random behavior, since then each doctor only gets paid when both doctors coincidentally say whatever that doctor gets paid for (1/4 of the cases). The doctors can get twice the reward by cooperating and coordinating their diagnosis with each other. The shared observation (whether you are truly healthy or require treatment) can serve as a useful Schelling point for coordination between the doctors.
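The arithmetic in this paragraph can be checked by enumerating the four joint diagnoses for each coin flip. This sketch assumes the two doctors randomize independently given the shared observation. The policy names are hypothetical labels:

```python
from itertools import product

OBS = ("H", "T")  # fair coin: healthy / needs treatment


def expected_pay(policy_a, policy_b):
    """Expected per-visit pay (A, B) when A is paid only for a joint 'h' and
    B only for a joint 't'. A policy maps an observation to {diagnosis: prob}."""
    pay_a = pay_b = 0.0
    for s in OBS:
        pa, pb = policy_a(s), policy_b(s)
        for da, db in product(("h", "t"), repeat=2):
            p = 0.5 * pa[da] * pb[db]  # P(observation) * P(A's diagnosis) * P(B's)
            if da == db == "h":
                pay_a += p
            elif da == db == "t":
                pay_b += p
    return pay_a, pay_b


def coin_flipper(s):
    return {"h": 0.5, "t": 0.5}  # ignores the observation


def truthful(s):
    return {"h": 1.0, "t": 0.0} if s == "H" else {"h": 0.0, "t": 1.0}


def always_h(s):
    return {"h": 1.0, "t": 0.0}  # A's naive "diagnose what pays me"


def always_t(s):
    return {"h": 0.0, "t": 1.0}  # B's naive "diagnose what pays me"


print(expected_pay(coin_flipper, coin_flipper))  # (0.25, 0.25)
print(expected_pay(truthful, truthful))          # (0.5, 0.5)
print(expected_pay(always_h, always_t))          # (0.0, 0.0)
```

Random diagnosing earns each doctor 1/4, coordinating on the observation doubles that to 1/2, and each doctor insisting on its own paid diagnosis earns nothing.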

Getting two reinforcement learning agents to (reliably) cooperate is hard enough to get you a paper in Science. When I naively implement two Q-Learning agents with the depicted payment, they are uncooperative: each one ends up exclusively diagnosing either H or T, forsaking the more rewarding strategy of cooperation. This mirrors a famous problem in game theory called the "Battle of the Sexes".

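The reward is decreasing, which is not supposed to happen. But of course, the usual convergence proof for Q-Learning assumes a stationary environment, and from each doctor's perspective the other (still learning) doctor makes the reward function change over time.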

This is already getting way too complicated. I'm not trying to publish in Science, I'm just trying to solve a problem[13]. Since I expect that a more sophisticated reinforcement learning approach will get the agents to cooperate, I'll make my life easier[14] by just forcing the agents to cooperate[15].

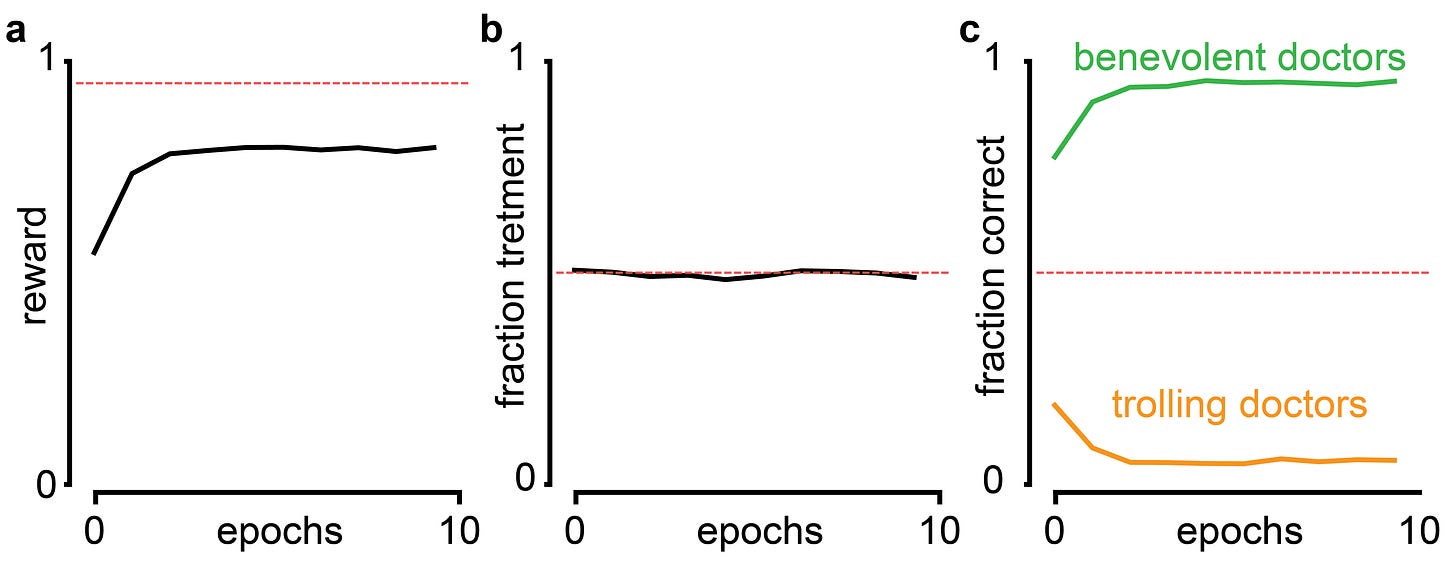

Once we force the doctors to cooperate, we find that the reward goes up, the fractions of "treatment" and "healthy" diagnoses are nice and balanced and the correspondence with ground truth... wait what?

Ah, of course. We picked nice, suggestive labels for the observation (T and H) and the diagnosis (t and h), but the agents don't care about those at all. In half of the runs, the doctors cooperate by always diagnosing the opposite of what they observe. They still get paid, but the performance drops dramatically below chance level. I call these doctors "trolling doctors", even though no malice is required, just negligence on the part of the programmer[16].

Well, perhaps it is not so bad. We might be able to fix it: somebody who always lies is basically as useful as someone who always tells the truth. We just have to do the exact opposite of what they recommend. And as long as there is some real-world consequence of the doctor's diagnosis, we might be able to identify below-chance performance by comparison with an agent that predicts at chance level[17].

But the situation is worse than that. As long as the action policies of the two agents match[18], i.e. both doctors issue the same diagnosis on every visit, $a^{A}_n = a^{B}_n$ for all visits $n$, they will get maximum reward, no matter how those diagnoses relate to the observation. The doctors could simply take turns, alternating between both diagnoses, "healthy" and "treatment", independent of what the coin flip says. I imagine the following scenario:

A seedy bar with perdition thick in the air. A woman in a trenchcoat sits in a dimly lit corner, smoking a cigar. A second woman in a trenchcoat, collar up, enters the bar, casting her eyes across tables and seats, spotting the first woman, and moving towards her with fortitude.

Dr. Jones: Dr. Firenze, glad you could make it.

Dr. Firenze: Of course.

Dr. Firenze nervously licks her lips.

Dr. F: I heard Mr. A was not amused regarding the recent... discrepancies...

Dr. J: Discrepancies! The nerve of you!

Dr. F: The man is clearly in perfect health.

Dr. J: Foreign Accent Syndrome is no laughing matter! He is speaking in tongues.

Dr. F: The man is from Ireland.

Dr. J: ...

Dr. F: ...

Dr. J: How about a truce?

Dr. F: A truce!

Dr. J: A truce. We both don't want to work. We both want to get paid. How about - You scratch my back and I'll scratch yours. One for me, one for you. You catch my drift…
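A small simulation of the forced-cooperation scheme makes the last few paragraphs concrete. It assumes both doctors follow the same deterministic rule, so their diagnoses always match. The three rules are hypothetical illustrations: truthful, trolling, and the turn-taking truce:

```python
import random


def simulate(policy, visits=10_000, seed=0):
    """Both doctors follow the same policy(observation, visit_index).
    Returns (A's pay rate, B's pay rate, accuracy)."""
    rng = random.Random(seed)
    pay_a = pay_b = correct = 0
    for i in range(visits):
        s = rng.choice("HT")              # fair coin: H = healthy, T = treatment
        da, db = policy(s, i), policy(s, i)
        pay_a += da == db == "h"          # A is paid only for a joint "healthy"
        pay_b += da == db == "t"          # B is paid only for a joint "treatment"
        correct += da == s.lower()
    return pay_a / visits, pay_b / visits, correct / visits


def truthful(s, i):
    return s.lower()


def trolling(s, i):
    return "t" if s == "H" else "h"      # always reports the opposite


def taking_turns(s, i):
    return "h" if i % 2 == 0 else "t"    # ignores the coin entirely


for name, policy in [("truthful", truthful), ("trolling", trolling), ("taking turns", taking_turns)]:
    a, b, acc = simulate(policy)
    print(f"{name:>12}: A paid {a:.2f}, B paid {b:.2f}, accuracy {acc:.2f}")
```

All three rules hand out a payment on every visit (split roughly evenly between A and B), yet their accuracies are 1, 0 and roughly 1/2. The trolling doctors can still be decoded by inverting their diagnosis; the turn-taking doctors tell you nothing about your health.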

Clearly, we'd want to forbid any communication between the doctors. However, it appears here we run into a very tricky problem: Even when we manage to stop any message transmitted between the doctors directly, they will still be able to infer the diagnosis of the other doctor from whether they got paid or not[19]. If the doctors are sufficiently intelligent (and willing to forsake payment for a while), they will be able to use this channel to transfer arbitrary messages in binary between each other[20]. Or, if the doctors are sufficiently similar, they might even use fancy decision theory to coordinate without having to communicate at all.
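To see why blocking direct messages is not enough, here is a toy version of the payment side channel under the Scenario-two rule (A is paid only for a joint "healthy"). It assumes, hypothetically, that A agrees to diagnose "healthy" on every signalling visit. B then encodes one bit per visit in its own diagnosis, and A reads it off its payment record:

```python
def covert_channel(bits):
    """Doctor B transmits bits to doctor A using only A's payment record.

    Assumes A diagnoses 'h' on every signalling visit and is paid only when
    both doctors diagnose 'h' (the Scenario-two payment rule)."""
    received = []
    for bit in bits:
        diag_a = "h"                       # A "listens" by always saying healthy
        diag_b = "h" if bit else "t"       # B encodes 1 as 'h' and 0 as 't'
        a_paid = diag_a == diag_b == "h"   # the only thing A gets to observe
        received.append(1 if a_paid else 0)
    return received


message = [1, 0, 1, 1, 0, 0, 1]
print(covert_channel(message))  # [1, 0, 1, 1, 0, 0, 1]
```

Every 0 bit is a disagreement, so nobody is paid on that visit. This is exactly the "forsake payment for a while" price of running the channel.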

Just forcing the doctors to cooperate leaves too many doors open. We need something more... adversarial.

Scenario three: Do the other obvious thing.

The other most commonsensical answer that I heard a lot can be summarized in two words: lawyer up. Instead of trusting in the goodwill of the doctor, or letting them come up with a cozy cooperative deal, make them work for their money. The idea is simple: get a detective to investigate what the doctor is doing. If it looks shady, don't pay the doctor.

However, the word "shady" hides a lot of complexity here. Always diagnosing "treatment" is shady. Alternating between "treatment" and "healthy" is shady. But there are many more complex binary strings that look random but don't correspond to the actual sequence of "H" and "T" observations. How can we tell if the doctor is being honest?

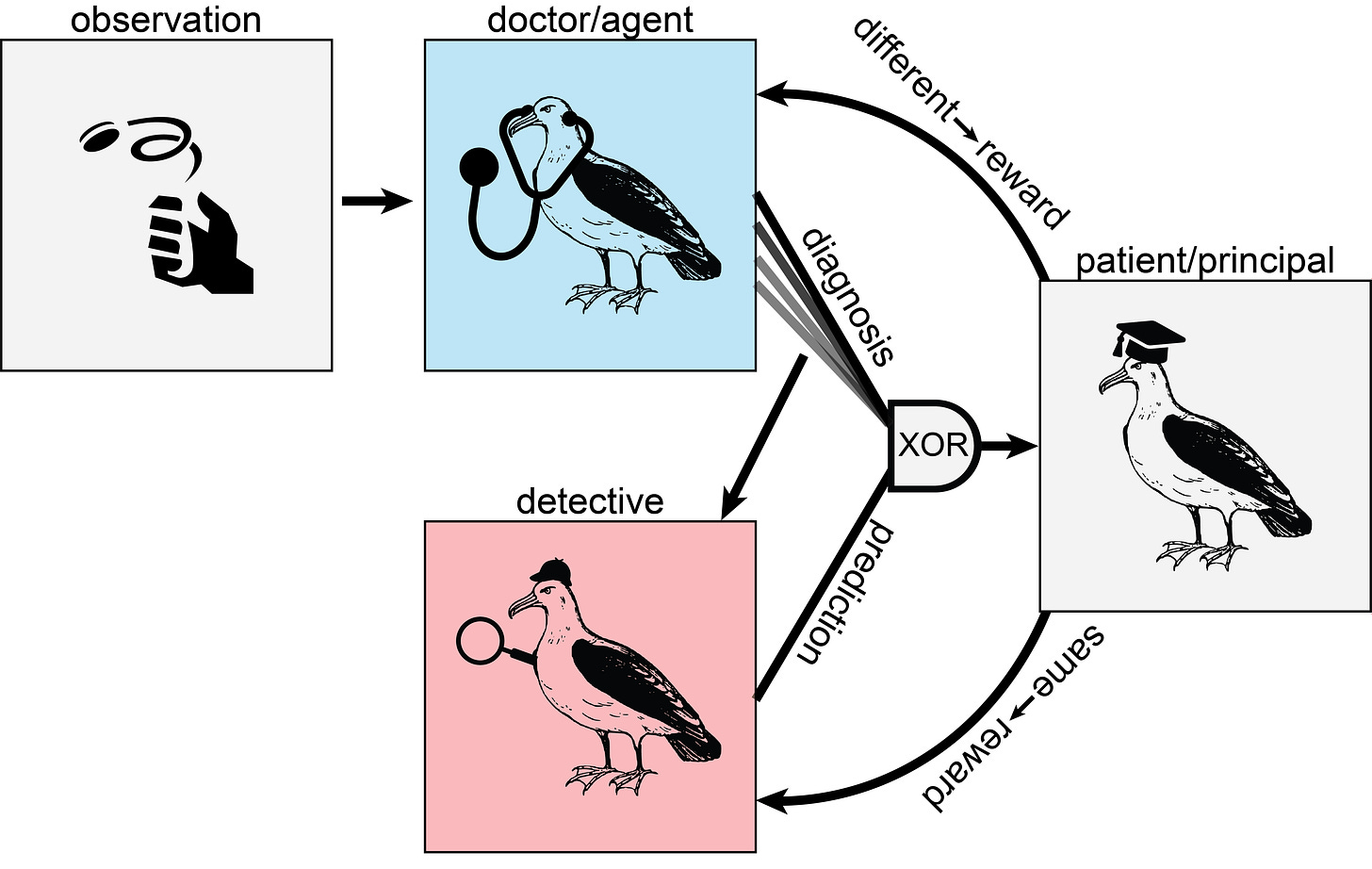

Consider the following set-up:

This time, only one doctor makes the observation and diagnosis. However, this time a detective gets to see the previous diagnoses and can try to infer a pattern. When the detective successfully predicts a pattern, the detective gets paid and the doctor doesn’t get paid. When the doctor diagnoses differently from what the detective predicts, the doctor gets paid and the detective doesn’t.

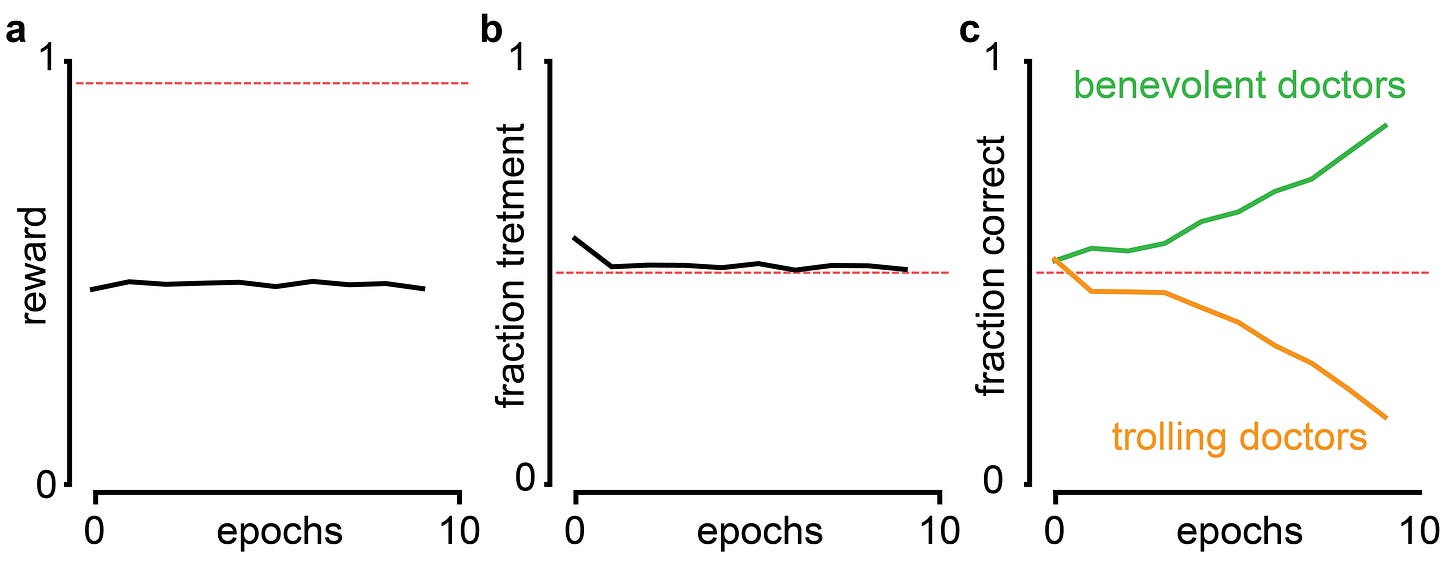

My doctor is again a Q-Learning agent and my detective is an autoregressive model that tries to predict the next action of the doctor given the last ten actions[21]. In this set-up, the agent learns pretty nicely:

The highest reward that the doctor can now get is with random performance (Reward = 1/2) since every non-random pattern will be picked up by the detective. Since a Q-Learning agent doesn’t have a random number generator built-in, the only solution is to harness the “randomness” of the observation. We also find that half of the doctors report the opposite of the observation (“trolling doctors”), but that’s just something we’ll have to live with.
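The detective's job can be sketched with a much simpler predictor than the autoregressive model in the post. For each context of the last k = 10 diagnoses, a running frequency table guesses the majority continuation seen so far. This stand-in is my choice, not the author's model. I take the doctor's pay to be one minus the detective's hit rate:

```python
import random
from collections import defaultdict


def detective_hit_rate(diagnoses, k=10):
    """Fraction of diagnoses a detective predicts correctly from the previous
    k diagnoses, using a running frequency table per context."""
    counts = defaultdict(lambda: {"h": 0, "t": 0})
    hits = 0
    for n in range(k, len(diagnoses)):
        context = tuple(diagnoses[n - k:n])
        seen = counts[context]
        guess = "h" if seen["h"] >= seen["t"] else "t"  # majority vote, ties -> "h"
        hits += guess == diagnoses[n]
        seen[diagnoses[n]] += 1                         # learn only after predicting
    return hits / (len(diagnoses) - k)


rng = random.Random(0)
coin = [rng.choice("HT") for _ in range(20_000)]

doctors = {
    "always treatment": ["t"] * len(coin),
    "taking turns": ["h" if i % 2 == 0 else "t" for i in range(len(coin))],
    "truthful": [s.lower() for s in coin],
    "trolling": ["t" if s == "H" else "h" for s in coin],
}
for name, diagnoses in doctors.items():
    hit = detective_hit_rate(diagnoses)
    print(f"{name:>16}: detective hit rate {hit:.2f}, doctor's pay {1 - hit:.2f}")
```

Fixed patterns are caught almost immediately. The truthful and the trolling doctor both forward the coin flip, so they hold the detective to chance and earn the reward of 1/2 described above.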

What do we do in the case of a more sophisticated doctor that might have a random number generator available? Running that generator would result in the same reward as forwarding the randomness from the observation. However, true randomness is really hard to come by, and computers usually use routines that produce numbers that look random but aren’t really. If the detective were an exact clone of the doctor[22], they would have access to the exact same random number generators as the doctor. Whatever routine the doctor uses to determine a seed for the generator, the detective would come up with the same routine. The only source of randomness that the doctor has access to, but the detective doesn’t, is (by construction) the observation.
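The clone argument can be made concrete with Python's seeded `random.Random`. A doctor that draws its diagnoses from a pseudorandom generator is perfectly predictable by a detective that runs the same routine with the same seed. The function names and the seed value are hypothetical:

```python
import random


def prng_doctor(seed, visits):
    """A doctor that ignores the observation and draws 'random' diagnoses
    from a seeded pseudorandom generator."""
    rng = random.Random(seed)
    return [rng.choice("ht") for _ in range(visits)]


def clone_detective(seed, visits):
    """An exact clone runs the same routine with the same seed."""
    return prng_doctor(seed, visits)


SEED = 1234  # hypothetical: whatever seed the doctor picks, the clone picks too
diagnoses = prng_doctor(SEED, 1_000)
predictions = clone_detective(SEED, 1_000)
hits = sum(p == d for p, d in zip(predictions, diagnoses)) / len(diagnoses)
print(f"clone detective hit rate: {hits:.2f}")  # 1.00: pseudorandomness offers no cover
```

Only the coin flip, which the clone never sees, gives the doctor randomness the detective cannot reproduce.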

Truth values and terminal diseases

This leaves us with one[23] central conundrum: When is it appropriate to model reality as a flip of a coin? I’m not sure. I guess at this point the doctor analogy has served its purpose and it makes sense to talk about advanced AI instead. The central question then translates into: How can we incentivize an advanced AI (that might be smarter than us) to truthfully tell us about its inferences, even though those inferences completely fly over our heads and we have no way of verifying their truthfulness?

As I’ve mentioned in the introduction, this is a scenario in which logical uncertainty applies: We are uncertain about the implications of certain beliefs. An example of this might be asking the AI whether a certain strategy will have a net positive or negative impact on a certain measure we care about. Even if we have access to the same information as the AI, we might still be substantially more uncertain about the impact. This additional uncertainty stems from our lack of logical omniscience. We cannot reason through the implications of the available information completely. An AI might do so a lot more successfully, and thus be less uncertain about the impact.

The proposed solution, a doctor-detective tandem, shares certain features of the logical induction paradigm from Garrabrant et al. Like Garrabrant’s traders that attempt to predict the market price of certain logical propositions, our detective attempts to predict the diagnosis of the doctor. Like the stable market fixed point, at which no trader can extract unlimited resources from the market, the fixed point of our doctor-detective tandem is achieved when the doctor’s diagnoses cannot be predicted by the detective. Perhaps, with some more wiggling, we can turn the tandem into a full logical inductor, along with all the nice properties that follow. I’m sure there are many things that are missing to make the parallels complete[24], but I already had too much fun thinking about this. So I’m putting it out there to hear if anyone has more thoughts about this.

[1] This is not a subtweet/subpost (!?) for a certain medical professional that I have recently collaborated with.

[2] If you come up with something clever, feel free to shoot me an email or leave a comment.

[3] This is of course supposed to be funny, but there is the real problem of inferring the motivation of a supervisor or collaborator when they say "Let's work a bit more on this before graduating." Incentives here are often misaligned: an experienced grad student is a comparatively cheap source of labor up until graduation.

[4] Although there is of course also something to be said about the limits of thought experiments.

[5] Additionally, I reject the framing that things have to be novel to be interesting. Just because the thought is not new to everyone, it might still be new to me and you (dear reader), and it can still be satisfying to rediscover things.

[6] When we define intelligence in terms of behavior. Which is reasonable, I think - how else is it going to manifest?

[7] You both have access to Google.

[8] This will become relevant later. *Foreshadowing much*

[9] I’m pretty sure biased coins work analogously.

[10] i.e. an agent trying to maximize reward.

[11] I think philosophical arguments in the form of a fiction novel tend to be terrible. Mathematical arguments augmented with fictional interludes, however…

[12] I'm using vanilla Q-Learning, because "why make my life difficult?"

[13] Although, if you are one of the many Science editors reading this Substack, hmu.

[15] I force them by normalizing the columns of the Q-matrix at every time step to 1. This forces the agent to pick H and T equally often.

[16] When the number of possible diagnoses increases, this gets worse, as every permutation is possible. However, every permutation is guaranteed to give below-chance performance and might be detectable.

[17] For example, the agent from scenario one.

[18] This automatically satisfies the cooperation constraint.

[19] Since they get paid iff the other doctor gets paid also.

[20] This communication might be hampered by lumping together the payoff to the end of the epoch or by adding noise to the payoff. The fundamental problem remains, however.

[21] There are some very easy models that are very good at doing this kind of prediction. Check out this game here.

[22] Kind of like a Holmes vs. Moriarty situation.

[23] At least one? Have kind of lost count.

[24] And I’m even more sure that I’ve made a couple of invalid inferences throughout the post that might invalidate certain portions.

10 comments


comment by Logan Zoellner (logan-zoellner) · 2021-11-17T03:03:18.294Z

I'm surprised you didn't mention financial solutions. E.g. "write a contract that pays the doctor more for every year that I live". Although I suppose this might still be vulnerable to goodharting. For example the doctor may keep me "alive" indefinitely in a medical coma.

↑ comment by Jan (jan-2) · 2021-11-18T13:51:31.840Z

Thank you for the comment! :) Since this one is the most upvoted one I'll respond here, although similar points were also brought up in other comments.

I totally agree, this is something that I should have included (or perhaps even focused on). I've done a lot of thinking about this prior to writing the post (and lots of people have suggested all kinds of fancy payment schemes to me, e.g. increasing payment rapidly for every year above life expectancy). I've converged on believing that all payment schemes that vary as a function of time can probably be goodharted in some way or other (e.g. through medical coma like you suggest, or by just making you believe you have great life quality). But I did not have a great idea for how to get a conceptual handle on that family of strategies, so I just subsumed them under "just pay the doctor, dammit".

After thinking about it again, (assuming we can come up with something that cannot be goodharted) I have the intuition that all of the time-varying payment schemes are somehow related to assassination markets, since you basically get to pick the date of your own death by fixing the payment scheme (at some point the amount of effort the doctor puts in will be higher than the payment you can offer, at which point the greedy doctor will just give up). So ideally you would want to construct the time-varying payment scheme in exactly the way that pushes the date of assassination as far into the future as possible. When you have a mental model of how the doctor makes decisions, this is just a "simple" optimization process.

But when you don't have this (since the doctor is smarter), you're kind of back to square one. And then (I think) it possibly again comes down to setting up multiple doctors to cooperate or compete to force them to be truthful through a time-invariant payment scheme. Not sure at all though.

comment by TAG · 2021-11-18T01:36:19.846Z

in domains complicated enough that perfect play is not possible, less intelligent agents will not be able to predict the exact moves made by more intelligent agents.

Which is why you can't use decision theory to solve AI safety. But everyone seems to have quietly given up on that idea.

↑ comment by Jan (jan-2) · 2021-11-18T14:08:33.738Z

Interesting point, I haven't thought about it from that perspective yet! Do you happen to have a reference at hand, I'd love to read more about that. (No worries if not, then I'll do some digging myself).

comment by Dagon · 2021-11-17T16:35:40.389Z

One possible approach is to get a doctor to fall in love with you, or get someone who loves you to become a doctor. There's no pecuniary motivation or legible scheme that aligns the long-term goals very well. Heck, even with Suk conditioning, doctors can be suborned under some conditions. Related: https://www.lesswrong.com/posts/4ARaTpNX62uaL86j6/the-hidden-complexity-of-wishes

↑ comment by Jan (jan-2) · 2021-11-18T13:59:37.211Z

+1 I like the idea :)

But is a greedy doctor still a greedy doctor when they love anything more than they love money? This, of course, is a question for the philosophers.

comment by noggin-scratcher · 2021-11-17T02:06:57.335Z

I don't know quite how (or whether) this translates to the reinforcement learning part of the post, but my thought at the point of "invite you (right now!) to try and come up with something" was to pay by the QALY - regardless of whether good health is obtained by them actively intervening, or just by good luck while they stand back and watch and get paid for doing nothing.

If I have an illness that will reduce length or quality of life, they should want to treat it. If I don't they should want to avoid burdening me with the quality-of-life reduction of unnecessary treatment. It would seem to align our interests - at least so long as I don't need medical expertise to tell how well I currently feel.

Although, the possibility of being drugged into blissful inattention might still be an issue, if they inconveniently have good enough pills to mask all of the pain of a chronic condition without me knowing the difference. (But maybe that's a bullet to bite - if I truly can't tell the difference then maybe that's a successful treatment)

comment by hari_a_s · 2021-11-18T12:31:41.583Z

A flat rate is independent of whether they diagnose anything, and they will behave randomly.

I don't think I'll assign a prior of zero for Human altruism. There are also other social aspects that you didn't model, like status in society. A doctor who correctly diagnoses their patients would definitely have a higher status than a doctor who is random in their diagnosis. I like the idea behind this post, but this might work only for spherical chickens in a vacuum

comment by Angela Pretorius · 2021-11-17T11:13:44.671Z

This is my proposed solution:

I set aside a fixed amount of money per year that I am prepared to spend on healthcare. At the end of every year I rate my quality of life for that year on a scale of -10 to 10.

If my rating is 10 I give all the money to the doctor. If my rating is 2 I give 2/10 of the money to the doctor and burn 8/10 of the money. If my rating is -3 then I burn all the money and the doctor has to pay me 3/10 of my budget, which I then burn.

If I score 4 or less on the GPCOG (which is NOT to be administered by the doctor) then my quality of life rating is automatically set to -10. For context, the GPCOG is widely used to screen for dementia.

↑ comment by Dagon · 2021-11-17T16:39:20.423Z

At some point in your life, the doctor(s) in question will be able to calculate that they can make more by doing something else (or by stealing from or blackmailing you) than you'll pay them in the future for the contracted activities. Money and simple metrics simply cannot create alignment.