Simulate the CEO

post by robotelvis · 2023-08-12T00:09:44.105Z · LW · GW · 5 comments

This is a link post for https://messyprogress.substack.com/p/simulate-the-ceo

Humans can organize themselves into remarkably large groups. Google has over a hundred thousand employees, the worldwide Scouting movement has over fifty million scouts, and the Catholic Church has over a billion believers.

So how do large numbers of people coordinate to work towards a common mission?

Most organizations are headed by some kind of “CEO” figure. They may use a title like President, Executive Director, or Pope, and their power is likely constrained by some kind of board or parliament, but the basic idea is the same - there is a single person who directs the behavior of everyone else.

If you have a small group of people then the CEO can just tell each individual person what to do, but that doesn’t scale to large organizations. Thus most organizations have layers of middle managers (vicars, moderators, regional coordinators, etc) between the CEO and regular members.

In this post I want to argue that one important thing those middle managers do is that they “simulate the CEO”. If someone wants to know what they should be doing, the middle manager can respond with an approximation of the answer the CEO would have given, and thus allow a large number of people to act as if the CEO was telling them what to do.

This isn’t a perfect explanation of how a large organization works. In particular, it ignores the messy human politics and game playing that makes up an important part of how companies work and what middle managers do. But I think CEO-simulation is a large enough part of what middle managers do that it’s worth taking a blog post to explore the concept further.

In particular, if simulating the CEO is a large part of what middle managers do, then it’s interesting to think about what could happen if large language models like GPT get good at simulating the CEO.

A common role in tech companies is the Product Manager (PM). The job of a PM is to cause the company to do something (e.g. launch a product) that requires work from multiple teams. Crucially, the PM does not manage any of these teams and has no power to tell any of them what to do.

So why does anyone do what the PM asks?

Mostly, it’s because people trust that the PM is an accurate simulation of the CEO. If the PM says something is important, then the CEO thinks it is important. If the PM says the team should do things a particular way then the CEO would want them to do it that way. If you do what the PM says then the CEO will be happy with you and your status in the company will improve.

Part of the reason Sundar Pichai rose to being CEO of Google is that he got a reputation for being able to explain Larry Page’s thinking better than Larry could - he was a better simulation of Larry than Larry was.

Of course the ability to simulate the CEO is important for any employee. A people manager will gain power if people believe they accurately simulate the CEO, and so will a designer or an engineer. But the importance of simulating the CEO is most visible with a PM since they have no other source of power.

Of course, the CEO can’t possibly understand every detail of what a large company does. The CEO of Intel might have a high-level understanding of how their processors are designed, manufactured, and sold, but they definitely don’t understand any of these areas in enough depth to directly manage the people working on them. Similarly, the Pope knows little about how a particular Catholic school is run.

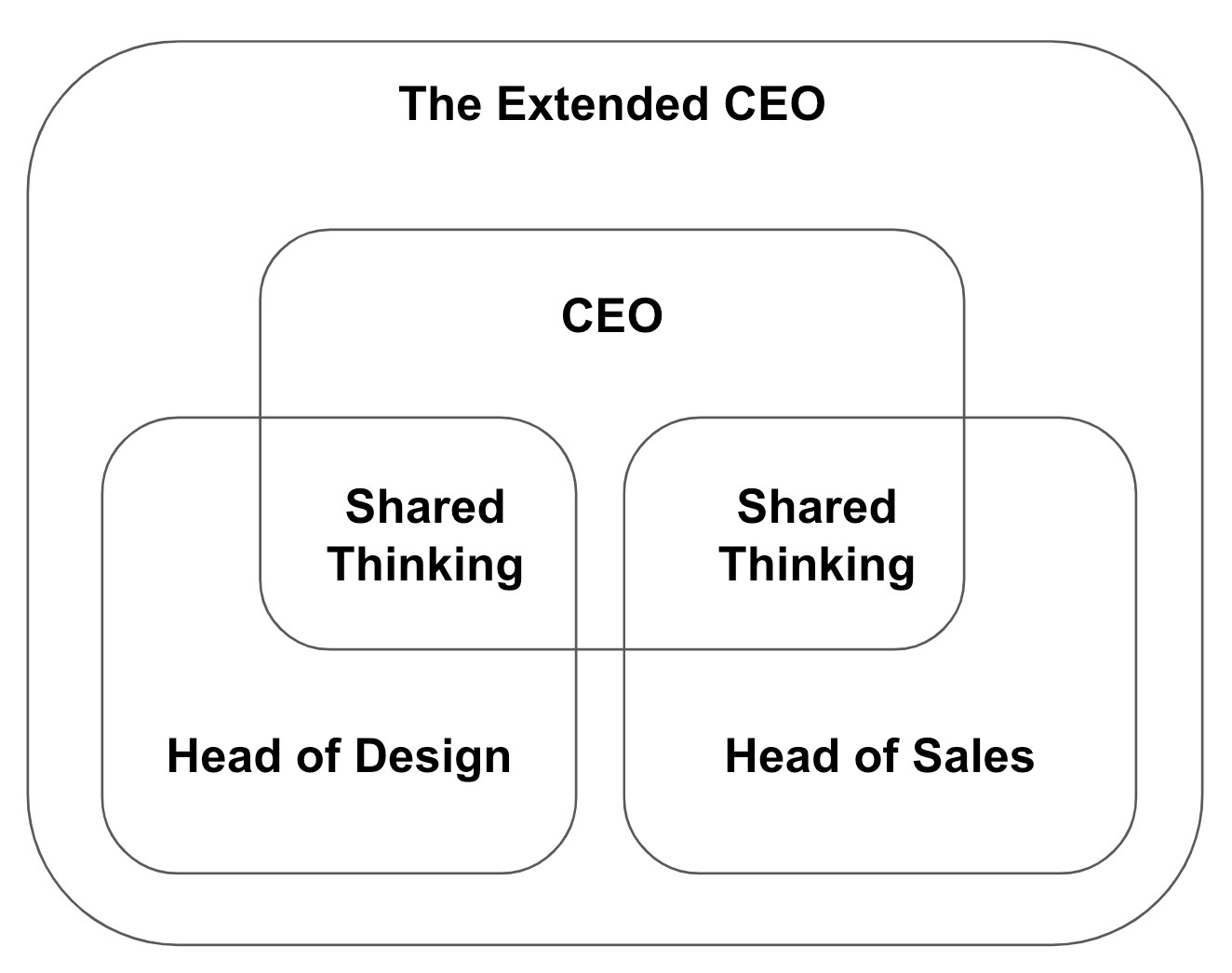

In practice, a CEO will usually defer to the judgment of people they trust on a particular topic. For example, the CEO of a company might defer to the head of sales for decisions about sales. You can think of the combination of the CEO and the people they defer to as making up an “Extended CEO” - a super-intelligence made by combining the mind of the CEO with the minds of the people the CEO defers to.

When I say someone needs to simulate the CEO, what they really need to simulate is the Extended CEO.

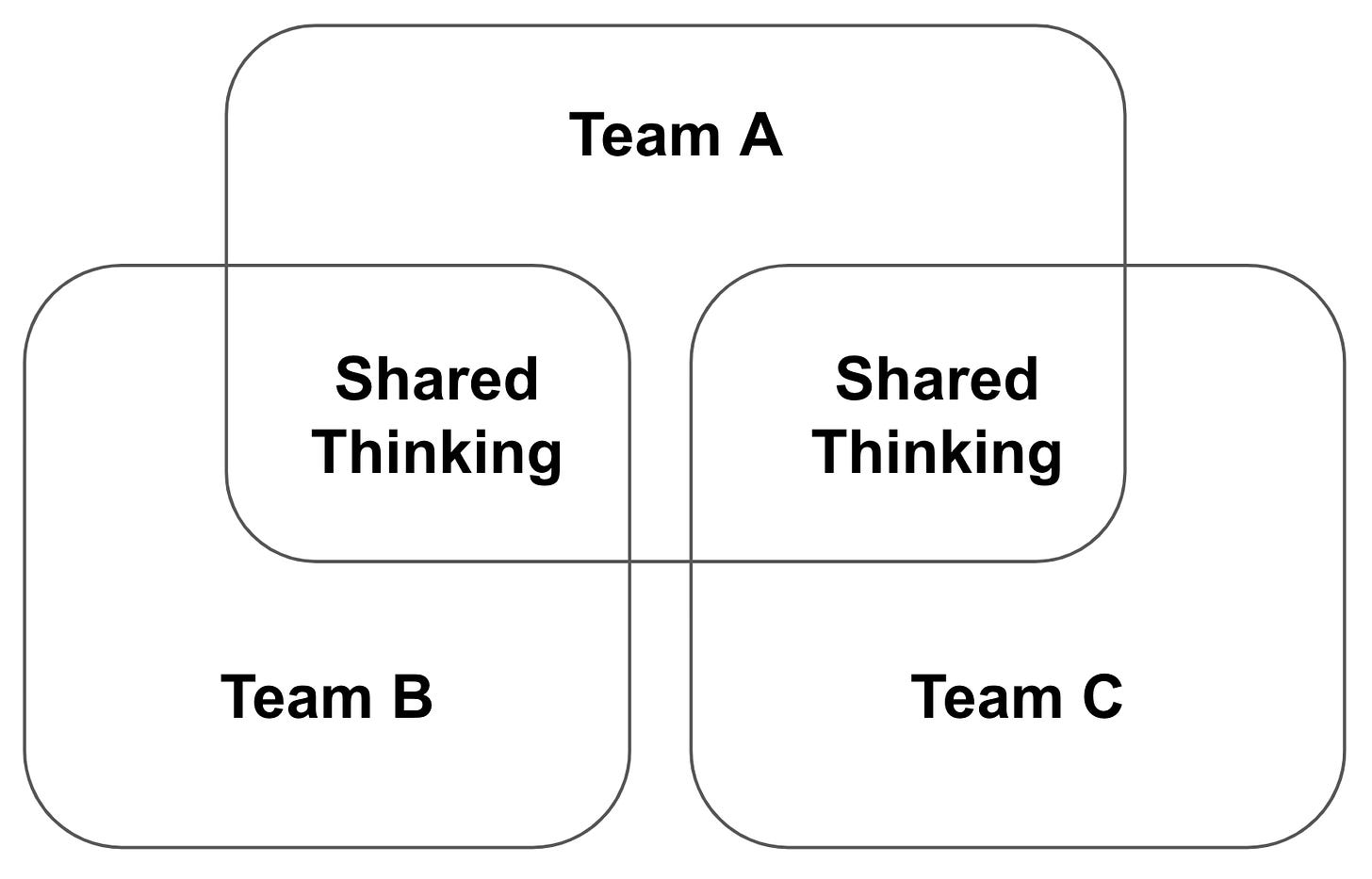

Similarly, most organizations will have different cultures in different teams. For example, Google has very different cultures in Search and Android. In general this is good - it allows a company to try out multiple cultures and find out what works best, and it allows a team to tailor its culture to the kind of work that the team does.

Having different team cultures is fine when teams have clear boundaries and don’t need to work together. However, if teams need to interact with each other, then it is usually necessary for them to align around a shared way of doing things in the space where they interact.

I’ve used the word ‘team’ here, but similar principles apply to any two groups of people that do some things separately and some things together - whether they are companies, families, countries, or sports teams. Two sports teams can have very different cultures, but need to agree on the rules of the game they play together. Two countries can have very different cultures, but have treaties that govern how they interact.

What do you do if you think the (extended) CEO is wrong?

It’s rarely useful to act in direct opposition to the CEO. Even if you are right, you will rapidly lose your ability to influence other people once it becomes clear that you no longer accurately simulate the CEO.

Instead, the best practice is to split your mind into two halves. One half continues to simulate the (possibly wrong) CEO and directs others according to the CEO’s wishes while the other half attempts to persuade the CEO to change their mind. This is sometimes known as “disagree and commit” - you follow the official plan while being open about the reasons it might be wrong.

This also looks a lot like democracy and rule of law. It’s usually best to follow the laws as written, while campaigning for the laws to be changed to something better.

An important special case is that sometimes the CEO will tell you to do X, but they would have said to do Y if they had access to more information. In that case you can probably do Y instead of X if the team and the CEO both trust you to accurately simulate the CEO.

The CEO can make things easier for everyone else by making themselves easy to simulate.

They can do this by making sure that when they make a decision, they also outline the principles that others could have used to make that decision.

Many organizations have a short list of easily memorized principles that can predict the way the CEO is likely to answer a wide variety of questions. Google has “don’t be evil” and “focus on the user”. Facebook has “move fast and break things”. Christianity has the Beatitudes.

Sometimes this means intentionally thinking in a simpler and more predictable way, in order to make the CEO’s thinking easier to simulate. It’s often better to have a slightly less good mental model that lots of people can apply consistently at speed than a more sophisticated mental model that is so hard to simulate that everyone has to check in with the CEO.

If a manager finds themselves having to micromanage their employees, it often means that they haven’t made themselves easy enough to simulate.

Metrics are also a way of simulating the CEO.

If the engineers at Google Search had infinite time, then the best way to decide whether a ranking change was good might be to have the CEO personally look at thousands of queries and give their personal judgment about whether the results had got better. But that doesn’t scale. Instead, Google pays human raters to evaluate search results according to directions given in a rater guidelines document that tells them how to simulate the CEO.

Similarly, the best way for Facebook to judge whether a product change is good might be to have the CEO personally observe every user’s use of the product. Instead, Facebook uses metrics like Time Spent and Meaningful Social Interactions that roughly approximate the opinion the CEO would have formed, had they seen the users using the product.

So what happens if we throw large language models like GPT into the mix?

A good manager is probably smarter than GPT, but what if you fine-tuned GPT by having it read every internal email and document that had ever been written inside the company? It’s possible that it might be better at simulating the CEO than the majority of employees. Moreover, unlike a senior manager, you can ask GPT as many dumb questions as you like without worrying that you are wasting its time.

Similarly, a GPT simulation of the CEO would probably be a better judge of whether a product change is good than low-paid human raters or crude metrics like Time Spent. In these cases you don’t need to be better at simulating the CEO than a skilled manager - just better than the cheap approximations we use for metrics.

We are already starting to see AIs manage humans - such as at Amazon, where distribution center workers have their performance evaluated by a machine learning algorithm.

Is any of this a good idea? I’m not sure. There is something creepy about the idea of having an AI make management decisions or decide what product changes to launch. But if it is more effective then it will probably happen.

5 comments

Comments sorted by top scores.

comment by gjm · 2023-08-12T22:47:51.003Z · LW(p) · GW(p)

I don't think I buy this as a productive way of thinking about what happens in large organizations.

So first of all there's a point made in the OP: the CEO is not, in fact, an expert on all the individual things the organization does. If I'm managing a team at Google whose job is to design a new datacentre and get it running, and I need to decide (say) how many servers of a particular kind to put in it, my mental simulation of Sundar Pichai says "why the hell are you asking me that? I don't know anything about designing datacentres".

OP deals with this by suggesting that we think about an "extended CEO" consisting of the CEO together with the people they trust to make more detailed decisions. Well, that probably consists of something like the CEO's executive team, but none of them knows much about designing datacentres either.

For "simulate the (extended) CEO" to be an algorithm that gives useful answers, you need to think in terms of an "extended CEO" that extends all the way down to at most one or two levels of management above where a given decision is being made. Which means that the algorithm isn't "simulate the CEO" any more, it's "simulate someone one or two levels of management up from you". Which is really just an eccentric way of saying "try to do what your managers want you to do, or would want if you asked them".

And that is decent enough advice, but it's also kinda obvious, and it doesn't have the property that e.g. we might hope to get better results by training an AI model on the CEO's emails and asking that to make the decisions. (I don't think that would have much prospect of success anyway, until such time as the AIs are smart enough that we can just straightforwardly make them the CEO.)

I don't think organizational principles like "don't be evil" or "blessed are the meek" are most helpfully thought of as CEO-simulation assistance, either. "Don't be evil" predates Sundar Pichai's time as CEO. "Blessed are the meek" predates Pope Francis's time as pope. It's nearer to the mark to say that the CEOs are simulating the people who came up with those principles when they make their strategic decisions, or at least that they ought to. (Though I think Google dropped "don't be evil" some time ago, and for any major religious institution there are plenty of reformers who would claim that it's departed from its founding principles.)

I am pretty sure that most middle managers pretty much never ask themselves "what would the CEO do?". Hopefully they ask "what would be best for the company?" and hopefully the CEO is asking that too, and to whatever extent they're all good at figuring that out things will align. But not because anyone's simulating anyone else.

(Probably people at or near the C-suite level do quite a bit of CEO-simulating, but not because it's the best way to have the company run smoothly but because it's good for their careers to be approved of by the CEO.)

Replies from: robotelvis

↑ comment by robotelvis · 2023-08-12T23:50:25.449Z · LW(p) · GW(p)

Maybe I should clarify that I consider the "extended CEO" to essentially include everyone whose knowledge is of importance at the company. If you asked Sundar how many servers of a particular type to put in, he'd forward you to the relevant VP, who would forward you to the relevant director, who would forward you to the relevant principal engineer, who would actually answer your question. That's what I mean by asking a question of the "extended CEO".

A similar principle applies to simple rules like "don't be evil" or "blessed are the meek". Yes, Sundar and Pope Francis didn't create these principles, but in order to take over as CEO they first had to show that their thinking was aligned with the principles of the existing "extended CEO". Those principles are summarized by simple lists like "ten things we know to be true" and the Beatitudes, so that other people are better able to simulate the opinion of "the extended CEO".

When I was at Google, it was very common to resolve an internal disagreement by pointing to how one of the "ten things we know to be true" principles would direct us to behave - and that allowed for internal consistency. Similarly, when I was a Christian, it was common to point to principles in the Beatitudes to resolve questions of what it meant to act in a moral way as a Christian.

Any Google CEO who doesn't want to follow "ten things we know to be true" or any Pope who doesn't want to follow the Beatitudes is going to need to do some heavy lifting to re-align their org around a different set of principles, or at the very least, signal strongly that those principles don't currently apply.

↑ comment by gjm · 2023-08-13T00:52:56.892Z · LW(p) · GW(p)

If you consider the "extended CEO" to include everyone whose knowledge is of importance ... surely you're no longer talking about anything much like simulating a person in any useful sense? How does "simulate the CEO" describe the situation better than "try to do what's best for the organization" or "follow the official policies and vision-statements of the organization", for instance?

I think it's telling that your examples say "when I was in organization X we would try to make decisions by referring to a foundational set of principles" and not "when I was in organization X we would try to make decisions by asking what the organization's most senior person would do". (Of course many Christians like to ask "what would Jesus do?" but I think that is importantly different from asking what the Pope, the Archbishop of Canterbury, the Moderator of the General Assembly, etc., would do.)

I think most Googlers, and most Christians, are like you: they are much more likely to try to resolve a question by asking "what do the 'ten things' say?" or "how does this fit with the principles in the Sermon on the Mount[1]?" than by asking "what would Sundar Pichai say?" or "what would Pope Francis say?". And I think those are quite different sorts of question, and when they give the same answer it's much more "because the boss is following the principles" than "because the principles are an encoding of how the boss's brain works".

[1] I am guessing that you mean that rather than just the Beatitudes, which don't offer that much in the way of practical guidance, and where they do there's generally more detail in the rest of the SotM -- e.g., maybe "blessed are the meek" tells you something about what to do, but not as much as the I-think-related "turn the other cheek" and "carry the load an extra mile" and so forth do.

(Disclaimer: I have never worked at Google; I was a pretty serious Christian for many years but have not been any sort of Christian for more than a decade.)

comment by ChristianKl · 2023-08-13T21:27:22.371Z · LW(p) · GW(p)

> In this post I want to argue that one important thing those middle managers do is that they “simulate the CEO”. If someone wants to know what they should be doing, the middle manager can respond with an approximation of the answer the CEO would have given, and thus allow a large number of people to act as if the CEO was telling them what to do.

It seems to me like you spend a lot of time asserting that this is true. On the other hand, I don't see you providing evidence for why the reader should believe that this is true. Given that this is LessWrong, I would like it to be more clear about the epistemics of why one should accept that claim.

comment by Gunnar_Zarncke · 2024-12-06T08:49:14.207Z · LW(p) · GW(p)

I recently heard the claim that successful leaders make themselves easy to predict or model for other people. This was stated in comparison to successful makers and researchers who need to predict or model something outside of themselves.