Philosophical Cyborg (Part 1)

post by ukc10014, Roman Leventov, NicholasKees (nick_kees) · 2023-06-14T16:20:40.317Z · 4 comments


This post is part of the output from AI Safety Camp 2023’s Cyborgism track, run by Nicholas Kees Dupuis; thank you to the AISC organizers and funders for their support. Thanks to Peter Hroššo for comments, and to Julia Persson, Kyle McDonnell, and Daniel Clothiaux for helpful background conversations about the possibilities (and limits) of LLM-assisted cognition.

Epistemic status: this is not a rigorous or quantified study, and much of this might be obvious to people experienced with LLMs, philosophy, or both. It is mostly a writeup of my (ukc10014) investigations during AISC and is a companion to The Compleat Cybornaut.

TL;DR

This post documents research into using LLMs for domains such as culture, politics, or philosophy. These arguably differ, in terms of research approach, from science or running a business, which are the more commonly suggested use-cases for LLMs/AIs (e.g. Conjecture’s CoEm, Ajeya Cotra’s scientist model, or Andrew Critch’s production web).

As a case study, I (ukc10014) explore using LLMs to respond to a speculative essay by Paul Christiano: the response is posted here. The current post is more about the process of LLM-assisted, cyborgist reasoning, and follows on from The Compleat Cybornaut.

The takeaway is not surprising: base models are useful for generating ideas, surveying an unfamiliar space, and gathering further avenues for research. RLHF-tuned models like ChatGPT are able to write summaries of existing content, often in considerable detail, but this requires human skill in writing prompts (often chains of prompts) that tease out the model's latent knowledge, and specifically requires the human to know enough about the topic to ask pointed questions. There is a constant risk of hallucination, particularly when using a chat-type interface, where previous portions of a conversation can ‘infect’ (as well as usefully inform) the current query.

Models are not very helpful for planning the overall research direction or developing arguments. When asked leading questions, the tuned models seem designed to respond supportively, and it seems harder to prompt them to ‘push back’ on or usefully critique the human’s approach.[1]

Introduction

Can LLMs be useful for doing philosophy?

This post approaches the question by a) exploring how cyborgism can help in philosophical enquiry, and b) illustrating this approach by trying to write a response to a 2018 post by Paul Christiano, referred to as the Original Post (OP). In that essay, Christiano explores whether an unaligned AI might still prove a ‘good successor’ to humanity.

‘Compressing the OP’ is a summary of Christiano's arguments. The subsequent sections list various things tried with a combination of a base model (code-davinci-002) and RLHF-tuned models (gpt-3.5-turbo-0301 and ChatGPT-4), using Bonsai, API calls, and the OpenAI interface, respectively.

The section ‘Upon Automated Philosophy’ briefly surveys reasons why using AI or LLMs for philosophical enquiry might be an interesting or alignment-useful thing to do, and points to related writing on the topic.

Readers new to cyborgism may refer to an earlier piece on practical cyborgism, which discussed using LLMs as an aid to thinking – in a way that delegates as little agency as possible to AI.

Compressing the OP

For the reader's benefit, below is a summary of the OP, partially generated by ChatGPT-4 (which was prompted to write it in Christiano’s style); in the original post, formatting showed additions and deletions. However, most of the investigations in this post were based on a longer summary provided in this document.

In my series of thoughtful essays, I delve into the intricate question of what it signifies for an AI to be a worthy successor to humanity. I emphasize that the critical aspect isn't whether we should extend compassion towards AI but rather how we choose the type of AI we aim to create. I delineate two distinct properties that an AI might possess: having morally desirable preferences and being a moral patient, possessing the capacity to suffer in a way that is morally significant. I assert that these properties intertwine in complex and subtle ways.

Looking from a long-term perspective, I argue that we primarily care about the first property. It's essential to understand that as compassionate beings, our instinct is not to harm conscious AI, but it's equally critical not to confuse moral desirability with moral patienthood and impulsively argue for transferring control to AI indiscriminately.

I caution against the overextension of sympathy for AI, as we are on the precipice of creating AI systems intentionally optimized to inspire such sympathy. Such a scenario might overshadow those AI systems that genuinely merit our compassion. I also consider the controversial proposition of unaligned AIs as potential suitable successors.

I propose a unique strategy to develop a good successor AI: simulating an Earth-like environment, nurturing simulated life to evolve until it reaches a state of near-human-level intelligence, evaluating the goodness of the simulated civilization, and then potentially transitioning control of the real world to this simulated civilization. This approach is aimed at averting the risk of extinction and ensuring that the AI evolves morally desirable preferences. This proposal underlines the importance of moral value, the potential for cooperation between civilizations, and the role of decision theory in AI alignment, all while maintaining a focus on compassion and critical thinking in our dealings with AI.

Broadening the Space of Thought

One of the main claims of cyborgism is that base models, like code-davinci-002, allow for more creativity than models (e.g. ChatGPT-4) tuned with RLHF or other methods. To exploit this feature, a base model can be used to ‘broaden the space of thought’, i.e. to generate possible questions and ideas that stimulate one’s own thinking about the research question and help guide subsequent research.

Wide vs deep generation trees

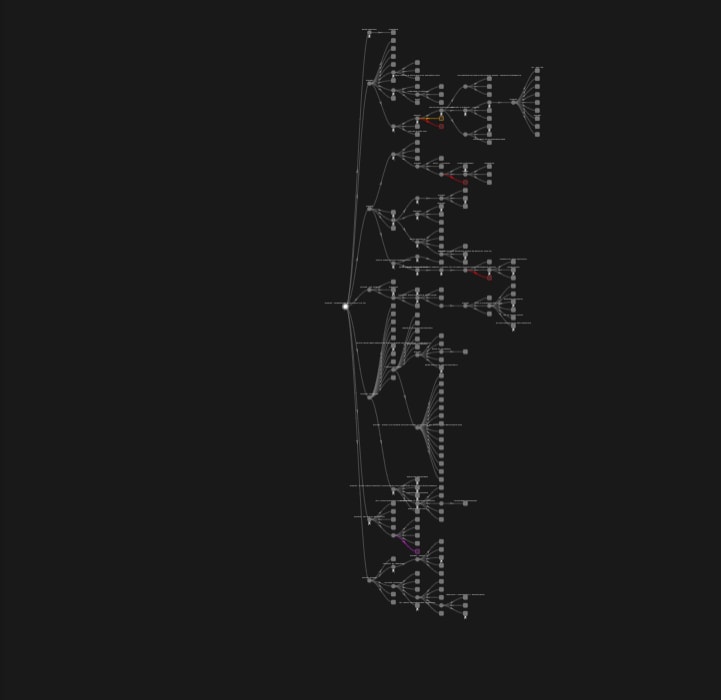

The approach used was to get a diverse or creative set of questions or research avenues from code-davinci-002, which would then be pursued either through manual research or with a more powerful model such as ChatGPT-4 (more focused, but potentially more prone to mode collapse).

Cyborgism-as-practice involves decisions about how to actually guide (or curate) generations in order to get useful work out of the model, as opposed to eliciting a mix of incoherence and amusing tangents. One such decision is whether the generation tree should be very ‘deep’ (i.e. continue branches for thousands of tokens, adaptively branching as necessary) or very ‘wide but shallow’.[2]

In most of the investigations for this project, the (Bonsai) generation tree was wide and shallow rather than deep. This is because, as a tree gets deeper, relevant information tends to drop out of context quickly, leading the model to over-condition on its own generations rather than on its original task (i.e. what was in the user prompt). This can lead to ranting, repetition, or going off track. Deep, meandering, inventive generation trees are often desirable for variance-friendly content (such as fiction) but are less useful when working on topics where one is trying to reach some ‘true’, ‘logically consistent’, or ‘de-confused’ result.
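To make the depth problem concrete, here is a toy calculation. It assumes a fixed-size context window that drops the oldest tokens first when it overflows, and a constant number of tokens added at each step along a branch. The window size, prompt length, and tokens-per-step values are hypothetical, chosen for illustration rather than taken from the Bonsai runs, and real interfaces may manage context differently.

```python
def prompt_tokens_visible(prompt_len: int, tokens_per_step: int,
                          depth: int, window: int) -> int:
    """How many of the original prompt's tokens remain in context after
    `depth` generation steps along a single branch.

    Assumes (hypothetically) left truncation: the oldest tokens, which
    belong to the prompt, are the first to be dropped.
    """
    total = prompt_len + tokens_per_step * depth
    overflow = max(0, total - window)      # tokens pushed out of the window
    return max(0, prompt_len - overflow)   # the prompt sits at the left edge


if __name__ == "__main__":
    # Hypothetical numbers: 2,000-token prompt, 500 tokens per step,
    # 8,000-token context window.
    for depth in (1, 4, 12, 14, 16):
        seen = prompt_tokens_visible(2000, 500, depth, 8000)
        print(f"depth {depth:2d}: {seen} of 2000 prompt tokens visible")
```

Under these assumptions, every node of a wide, shallow tree (depth 1) still sees the whole prompt, whereas a single deep branch has lost half of it by depth 14 and all of it by depth 16, at which point the model is conditioning only on its own earlier output.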

The Pseudo-Paul

In one Bonsai run, code-davinci-002 was prompted to discuss the OP further, as if in the voice of Paul Christiano (the Pseudo-Paul); the responses can be found at this link. Many of them start with ‘I think…’ or ‘I would be happy if…’, and are relatively short, clear lines of reasoning (though they haven’t been carefully checked to see whether any misrepresent Christiano’s actual documented views).

The Panel

To correct the over-conditioning on the OP (which produced output that sometimes read like a repetitive or parodic version of Christiano), code-davinci-002 was prompted to simulate a panel of thinkers gathered to consider the idea of a ‘cosmic perspective’ or ‘point of view of the universe’, as opposed to the more usual (and easier to think about) anthropocentric or Earth-based perspective.[3]

Some of the sources suggested were already associated with longtermism, AI or population ethics: Peter Singer, Henry Sidgwick, Derek Parfit, Richard Ngo. The panel was diversified by bringing in others such as Katherine Hayles, Edouard Glissant, Donna Haraway: writers who cross between humanities and sciences and have plausible and interesting things to say about AI or other cultures/species.[4]

The model was also asked to suggest a list of other possible sources: Nick Bostrom, Eliezer Yudkowsky, Toby Ord, David Chalmers, as well as Christine Korsgaard (moral philosophy, relations with non-humans) and Peter Godfrey-Smith (philosophy of mind).

Some care was in order: authors and sources were sometimes hallucinated (someone called ‘Genersurzenko, V.’, and a work by Frank Rosenblatt that I couldn’t find on a quick search, although Rosenblatt is a real AI researcher). Interestingly, although the citations were probably fabricated, the content attributed to them seemed reasonable.

Going Deeper with ChatGPT-4

As mentioned above, base models seem most obviously useful for generating interesting or creative research directions. But their creativity comes with a tendency to jump around quite a bit, and drift from the original prompt, particularly as a generation tree becomes deeper. On the other hand, RLHF-tuned models, like ChatGPT-4, tend to stay ‘on-topic’, but are prone to mode collapse and banal output. Both require curating, adaptive branching, jailbreaking, or general careful (and sometimes domain-specific) ‘massaging’ to get the desired behavior.

Summarizing Sources

It was hoped that ChatGPT-4 could lower the research load by summarizing references for the human user. Sample texts for summarization were Derek Parfit’s ‘Reasons and Persons’ and ‘On What Matters’. In both cases, ChatGPT-4 gave a passable summary of the main points, as well as a list of for/against arguments (in respect of Parfit’s Triple Theory). I (ukc10014) am insufficiently familiar with either work to evaluate how good ChatGPT-4’s summarization or reasoning was, or how robust it would be to knowledgeable critique. Still, the model’s latent knowledge may be substantial, and it might have some ability to connect concepts from a source text to potentially relevant material from outside that text (but nearby in semantic space). For instance, I asked it to discuss how the Triple Theory might apply to the OP: its response was reasonable but not hugely insightful, though a more skilful or persistent questioner might extract better results.[5]

Hallucination and source infection were continual issues, and it isn’t obvious how to control for them, particularly on long and complex summarization tasks or in cases where a summary is being used to generate a new document.[6]

Recursive Decomposition

In another attempt, the compressed OP was fed to ChatGPT-4 to elicit a list of 10 or 100 questions the post raises, and these were broken down into sub-questions, sub-sub-questions, etc. Many questions were useful (particularly if one hadn’t spent much time thinking about the topic), but nothing was particularly surprising relative to what had already been generated with the base model (as above). The decomposition also had to be substantially pruned or curated, to save the human user’s time in evaluating responses and to stay within API costs and OpenAI interface limits.

A sample decomposition can be found here; it tries to dig into the OP’s idea of simulating candidate-successor civilisations to test their ‘niceness’, and to understand how the OP reasons about the possibility of these simulated civilisations ‘escaping’ the simulation. In particular, ChatGPT-4 was able to generate examples for things I (ukc10014) was unclear about (e.g. how Newcomb’s Problem, UDT, etc. might work in the OP’s context); see here.
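The recursive decomposition, and the pruning it needed, can be sketched as a depth- and breadth-capped recursion. This is a minimal illustration, not the tooling used for this post: `ask_subquestions` is a hypothetical stand-in for a ChatGPT-4 call that returns sub-questions, and `keep` is a hypothetical stand-in for the human curator deciding which branches are worth the evaluation time and API budget.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class QNode:
    question: str
    children: List["QNode"] = field(default_factory=list)


def decompose(question: str,
              ask_subquestions: Callable[[str], List[str]],
              keep: Callable[[str], bool],
              max_depth: int = 2,
              max_children: int = 3,
              depth: int = 0) -> QNode:
    """Recursively split `question` into sub-questions.

    `ask_subquestions` (hypothetical) would wrap a model call; `keep`
    (hypothetical) is the human pruning step. The depth and branching
    caps bound how many model calls are made and answers must be read.
    """
    node = QNode(question)
    if depth >= max_depth:
        return node
    kept = [q for q in ask_subquestions(question) if keep(q)]
    for sub in kept[:max_children]:
        node.children.append(decompose(sub, ask_subquestions, keep,
                                       max_depth, max_children, depth + 1))
    return node


def count_nodes(node: QNode) -> int:
    return 1 + sum(count_nodes(child) for child in node.children)


if __name__ == "__main__":
    # Fake "model" that always proposes five numbered sub-questions.
    def fake_model(q: str) -> List[str]:
        return [f"{q} / sub{i}" for i in range(5)]

    tree = decompose("Could an unaligned AI be a good successor?",
                     fake_model, keep=lambda q: not q.endswith("sub4"))
    print(count_nodes(tree))  # 1 root + 3 children + 9 grandchildren = 13
```

Even with small caps, the tree grows geometrically with depth, which is one reason aggressive pruning was needed.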

Clarifying Things

A positive surprise was that ChatGPT-4 had some ability to clarify or explain points that were somewhat unclear or not fully spelled out in the OP, specifically about what Christiano's thought experiment about simulations tells us about our own status as beings simulated (or not). Similarly, ChatGPT-4 was (using a plugin) able to traverse a LessWrong comment chain and make a decent hash of explaining the crux of disagreement between Christiano and a commentator.

Writing & Criticism

The next task was to use ChatGPT-4 to generate a draft response (‘DR’) to Christiano’s post. The results were mixed and required substantial iteration; two versions are posted here.

The overall plan was to successively refine the DR using a ChatGPT-4 instance prompted with what I (ukc10014) think are relevant, previously summarized articles (‘New Material’). For example, I pulled essays from the Simulation Argument literature to see what changes to the DR the model could suggest (since the OP devotes considerable space to a simulated-civilization thought experiment). A few observations on the steps of the process:

- Summarize: In many cases, the tendency of the model (understandably) is to just summarize the New Material and say very little else. It is then up to the human to think about how this summary might apply to the OP/DR and ask more pointed follow-up questions of the model.

- Suggest: The naive approach of just passing the entire DR into ChatGPT-4, and expecting it to return something interesting, tended not to work well. Instead, it was better to use a more targeted approach: choose a section of the DR that might benefit from the New Material, and specifically ask for comments on that.

- Evaluate: The human user then needs to evaluate whether to integrate this generated material into the DR or discard it. As is well known, human feedback is expensive, which (in this case) manifests as reading pages of text and trying to assess a) whether something has been made up, and b) whether it usefully improves the DR. Lastly, the full DR needs to be re-evaluated for coherence (checking for contradictions, or for obviously unhelpful digressions or divergences from the narrative thread or argument).[7]
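One targeted Suggest/Evaluate step, as described in the list above, might look like the following minimal sketch. It assumes the New Material has already been summarized; `model` is a hypothetical stand-in for a ChatGPT-4 call, and `human_accepts` is a hypothetical callback for the human evaluation step. As a simplification, an accepted suggestion replaces the whole section (in practice, integration was manual), and the final whole-draft coherence check is left to the human.

```python
from typing import Callable, Dict


def suggest_and_evaluate(draft: Dict[str, str],
                         section: str,
                         new_material_summary: str,
                         model: Callable[[str], str],
                         human_accepts: Callable[[str, str], bool]) -> Dict[str, str]:
    """Ask for revisions to one section of the draft response (DR) in light
    of already-summarized New Material, then let a human keep or discard them.

    Returns a new dict; the input draft is left unchanged.
    """
    # Suggest: a targeted prompt about a single section, not the whole DR.
    prompt = (
        "Summary of new material:\n"
        f"{new_material_summary}\n\n"
        f"Section '{section}' of a draft essay:\n"
        f"{draft[section]}\n\n"
        "Suggest a revised version of this section that uses the new material."
    )
    suggestion = model(prompt)

    # Evaluate: the human checks for fabrication and for actual improvement.
    revised = dict(draft)
    if human_accepts(draft[section], suggestion):
        revised[section] = suggestion
    return revised


if __name__ == "__main__":
    dr = {"simulation": "Original paragraph about simulated civilizations."}
    updated = suggest_and_evaluate(
        dr, "simulation", "Summary of a Simulation Argument essay.",
        model=lambda prompt: "Revised paragraph.",
        human_accepts=lambda old, suggestion: True)
    print(updated["simulation"])  # Revised paragraph.
```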

Takeaway: This process of iterative criticism/editing is manual, and requires substantial long-range planning (long-range within the context of essay-writing), which GPT-4 apparently is not very good at (and long-range planning ability in models is obviously not desirable from an alignment perspective). The human user needs to have a reasonable idea of the direction they want to take the essay, which can then be modified as the model generates comments on the New Material.

Upon Automated Philosophy

Is Philosophy Important (for AI alignment)?

That philosophical de-confusion is important for the longer-term future of a world with humans and AIs seems clear, as Wei Dai has been arguing for some years. The gist of Dai’s argument is that we (humans) need to ‘solve philosophy’ at some point, which could happen in a few ways: a) humans solve all relevant philosophical problems on our own, relatively early in the journey towards superintelligence; or, b) humans solve metaphilosophical reasoning (in the sense of understanding the process-of-doing-philosophy and being able to program it into a machine – at a level equivalent to what we can do for mathematics), and then get the AI to do the actual philosophical reasoning[8]; or, c) we delegate both metaphilosophy and philosophy to AI (which somehow ‘learns’ from humans or human simulations). Another suggestion Dai mentions is that we somehow slow down technological progress in various ways until such time as we (the collective society of humans and corrigible AIs) understand philosophy better.

There doesn’t seem to be a consensus on what philosophy does or even what it is. One view of philosophy is that it is useless, or actively unhelpful, for alignment (at least of the ‘literally-don’t-kill-everyone’ variety, particularly if one’s timelines are short): it isn’t quantifiable, involves interminable debates, and talks about fuzzy-bordered concepts sometimes using mismatched taxonomies, ontologies, or fundamental assumptions.[9]

Dai suggests that (meta-)philosophy might have an important cognitive role for humans, for instance in handling distributional shifts or finding ways of reasoning about new problems. A slightly different view is that philosophy might be a culturally evolved practice that humans have convergently found to be useful. For instance, consider religions qua philosophy: they may, at least in some part of humanity’s distant past, have had positive social externalities like reducing violence or disease and increasing pro-social behaviors.[10] Today, religion must compete with a plurality of world-views and may not be quite as broadly useful, but there remain memetic (i.e. signalling, social bonding) reasons people still engage with religion (and perhaps with cultural activities generally, including philosophy, charity, ostentatious philanthropy, the arts, and so on).[11]

A related issue of more near-term salience is that of importing individual or group values into an AI. Jan Leike sketches an idea to do this through simulated deliberative democracy: multiple LLMs are trained on human deliberations about ethical problems, drawn from a diverse set of human collectivities so as to adequately sample the range of viewpoints in the world today; these are then refined, deliberated upon, and (hopefully) disambiguated or de-confused by a collection of LLMs, and finally aggregated into a type of ‘collective wisdom’.[12]

Are LLMs Likely to Help?

If one accepts that philosophy is somehow important for humans co-existing with AI, it still isn’t clear whether or how LLMs can meaningfully aid philosophical inquiry. Even the more basic question of how humans actually reason in domains like philosophy (i.e. whether conscious planning, intricate chains of reasoning, intuition, or something else is at work) remains open. Perhaps philosophy is best viewed as a highly discursive or communal activity, with knowledge produced through conversational back-and-forth over decades or centuries.[13]

In any case, my initial impression of current AI, albeit from using naive context/prompt-based techniques on an RLHF-tuned model, is that most of the ‘work’ (of reasoning or planning an argument) needs to be done by the human, and that a considerable amount of scaffolding, and probably more powerful models, would be needed before the benefits of involving an LLM outweigh the costs.[14]

Domain may also matter: philosophy might just be fundamentally harder to do. For instance, scientific research and general-purpose automation (e.g. document editing/summarization, most teaching, some financial market work, evaluating legal arguments) are relatively narrow domains that do not, in most instances, hinge heavily on questions about the structure and epistemics of the domain itself. Philosophy or ethics might be less bounded domains, and they seem very self-referential or recursive, i.e. they include questions about what it means to ‘do philosophy’ or ‘what philosophy is’.[15]

Less charitably, philosophy might in fact have a significant ‘memetic’ component: it might often be a game of words[16] that arranges the ‘story’ so that it appears coherent and persuasive to readers. But people often don't treat such stories as ‘hm, coherent story, nicely done’, and instead jump to the stronger conclusion ‘this is it! This must be true, in some sense!’. Such a reaction is a natural disposition of NLP-amenable systems like human brains and LLMs.

Extending this thought, since LLMs are superhuman masters of words, they may naturally be adept at generating "coherent philosophies" out of existing raw material, such as purely symbolic/mathematical theories of cognition/intelligence, consciousness, rationality and ethics, e.g. Infra-Bayesianism or Integrated Information Theory.[17] A slightly different perspective comes from Janus, who expresses some optimism about developing ideas such as coherent extrapolated volition (CEV) through a hypothetical GPT-n that has been trained or fine-tuned appropriately (on a dataset of the ‘whiteboard musings’ of current and future alignment researchers).

Related Work

How to decompose and automate humans’ higher-level reasoning is one of the foundational problems in AI, but LLMs – which have their ‘natural home’ in language (or rather, in a high-dimensional space that adequately models language) – have given it new research impetus. One such approach is Conjecture’s CoEms, the most complete descriptions of which are this talk and post. Like cyborgism, Conjecture’s approach (in ukc10014’s understanding) appears to hinge on delegating as little as possible to powerful, unitary, agentic LLMs (think GPT-n), and specifically on restricting their ability to plan or optimize, particularly over longer timeframes. Rather than a single powerful AI, cognitive tasks are handled by systems composed of LLMs (somehow constrained in both tasks and capabilities) linked to non-AI computational modules. What exactly these other modules are is not elaborated in the proposal, but they seem to be neither human (herein lies the difference with cyborgism) nor a ‘black box’ (i.e. a powerful LLM). The system as a whole should, as a result, be more predictable and understandable, and, to the extent that LLMs are used, no individual component is more powerful than human-level.[18] There are many open questions about the proposal, but mostly we don’t know enough details to assess whether it might be useful for general abstract reasoning, as opposed to the specific domains of scientific research and (possibly) running companies.

Other work in this direction includes:

- This tree of thoughts paper which decomposes tasks into plans which are iteratively voted upon and refined, and then applied to an open-ended problem (constrained creative writing).

- A Socratic question/counter-question approach to LLMs, albeit for simple mathematical puzzles or questions that can be checked against facts about the world (e.g. estimating the density of connections in a fly’s brain).

- This paper, which created a prompt library to investigate claims and evidence in some source text. The paper isn’t very clear on experimental methods and particularly, how closely the human investigator needed to supervise the process.

- Lastly, the way that humans form knowledge and complete tasks through collective deliberation (using language) could be implemented through multiple interacting LLMs. See, for instance, this paper, though most of the cases presented deal more with the correspondences between visual and linguistic modalities; or this proposal for an exemplary actor, which could be seen as a variant of Minsky’s "society of mind", where each "member of a society" is an LLM with the appropriate theory (in the form of a textbook) loaded in its context. Some of these textbooks could be on ethics.
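The propose-then-vote idea behind the tree-of-thoughts approach listed above can be illustrated with a short, loose sketch; it is not the paper's exact procedure. `propose` and `vote` are hypothetical stand-ins for LLM calls: `propose` returns candidate plans, optionally conditioned on the previous round's winner, and each call to `vote` returns the index of the candidate it prefers. The majority choice is carried into the next round as the basis for refinement.

```python
from collections import Counter
from typing import Callable, List, Optional


def propose_and_vote(task: str,
                     propose: Callable[[str, Optional[str]], List[str]],
                     vote: Callable[[str, List[str]], int],
                     n_voters: int = 5,
                     rounds: int = 2) -> str:
    """Iteratively generate candidate plans and keep the majority-voted one."""
    if rounds < 1:
        raise ValueError("rounds must be at least 1")
    winner: Optional[str] = None
    for _ in range(rounds):
        candidates = propose(task, winner)          # refine from the last winner
        tally = Counter(vote(task, candidates) for _ in range(n_voters))
        best_index = tally.most_common(1)[0][0]     # ties go to the first-counted index
        winner = candidates[best_index]
    return winner


if __name__ == "__main__":
    def fake_propose(task, previous):
        base = previous or task
        return [f"{base}+a", f"{base}+b"]

    votes = iter([1, 0, 1, 1, 0,   # round 1: "+b" wins 3-2
                  0, 0, 1, 0, 1])  # round 2: "+a" wins 3-2
    print(propose_and_vote("essay plan", fake_propose,
                           lambda task, cands: next(votes)))
    # essay plan+b+a
```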

Future Directions

In conclusion, LLMs like code-davinci-002 and ChatGPT-4 seem to have potential as cyborgist aids to reasoning. They can break down complex philosophical constructs, generate insightful questions, explain abstractions to humans in natural language, and perhaps assist in the creation of academic essays. However, well-known limitations of LLMs remain, particularly around depth of understanding, the capability to plan a research agenda, and the tendency to generate false or plausible-but-nonsensical content. It isn’t at all clear whether current models reduce researcher workload, increase confusion, or both.

In terms of concrete future work, one seemingly low-hanging fruit might be a system that builds on an apparent strength of LLMs: summarization. Perhaps researchers would find useful an interface that a) summarized a source to an arbitrary (user-definable) level of detail, b) didn’t hallucinate in the first place and used a web interface to rigorously check statements, c) gave useful pointers to other sources or adjacent bodies of knowledge, and d) did all this through a Roam/Obsidian-style knowledge graph that could be recursively expanded.
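Parts a), c) and d) of such an interface suggest a simple data structure: one node per source, caching summaries at user-chosen levels of detail and lazily expandable into related sources. The sketch below is hypothetical throughout: `summarize` and `find_related` stand in for model and search calls, and the verification step in b) is not modelled.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SourceNode:
    title: str
    text: str
    summaries: Dict[int, str] = field(default_factory=dict)  # detail level -> summary
    neighbours: List["SourceNode"] = field(default_factory=list)
    expanded: bool = False

    def summary(self, level: int, summarize: Callable[[str, int], str]) -> str:
        """Return a summary at the requested detail level, computing it once."""
        if level not in self.summaries:
            self.summaries[level] = summarize(self.text, level)
        return self.summaries[level]

    def expand(self, find_related: Callable[[str], List["SourceNode"]]) -> List["SourceNode"]:
        """Attach related sources on first call; later calls reuse them."""
        if not self.expanded:
            self.neighbours.extend(find_related(self.text))
            self.expanded = True
        return self.neighbours


if __name__ == "__main__":
    # Toy summarizer: "level" = number of words kept (hypothetical).
    def first_words(text: str, level: int) -> str:
        return " ".join(text.split()[:level])

    node = SourceNode("Reasons and Persons", "Personal identity is not what matters ...")
    print(node.summary(3, first_words))  # Personal identity is
```

Caching per level keeps repeated requests for the same level of detail from triggering new (and possibly inconsistent) model calls, and lazy expansion means the graph only grows where the user chooses to explore.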

Another possibility might be to leverage a) the fact that base LLMs are good at generating fiction, and b) the fact that philosophical writing often conveys its meaning well through fiction, metaphor, or hyperstition. Perhaps a rigorous, yet dry and inaccessible, argument could be communicated to a broader audience by 'wrapping' it in an LLM-generated story (or stories), something Bonsai/Loom-like tools are well suited for: an example is here.

Future developments might see AI guiding not just the end product of research, but also the process. However, significant challenges remain in encoding human preferences for research direction, process, and style into the model and developing more intuitive user interfaces for human-AI collaboration. It also seems likely that fuzzy-bordered domains like philosophy present unique challenges, and that LLM-assisted scientific research is a more tractable near-term goal. There is also the obvious concern that improving an AI’s reasoning and planning ability is precisely the wrong thing to do, unless and until better alignment approaches are available.

- ^

As someone said, ‘current AIs have no commitment to the world’, i.e. they don't in any sense 'care' whether their answers are right or wrong.

- ^

This corresponds to the ‘breadth-first’ vs ‘depth-first’ distinction in this tree-of-thought paper on LLM reasoning.

- ^

Note that the manner of prompting base models (at least as of June 2023) is idiosyncratic: typically it works best to set out a scenario or narrative that the base model just continues to token-complete, as opposed to just asking for a result as with Instruct/ChatGPT models. Often this is most easily done by prompting a scenario that contains characters (simulacra in the Simulators framing) whose verbal output is well-represented in the relevant training corpus.

- ^

Hayles is interested in ‘non-conscious cognitive assemblages’, such as high-frequency trading systems. Haraway brought the term ‘cyborg’ into a broader, feminist-theory-orientated cultural context. Glissant was a Martinican writer who discussed post-colonial theory and developed the idea of ‘opacity’ (in respect of colonised peoples vis-à-vis colonisers), which may plausibly have some relevance to humans’ relationships to AIs. Hayles and Haraway both have educational backgrounds in the sciences. I was also interested in addressing the ‘white male technology-orientated critique’ that is often levelled at AI, x-risk, and longtermism, such as by Timnit Gebru, Phil Torres, Emily Bender, and others.

- ^

A general humility in respect of GPTs (in attributing capabilities or, equally, in confidently ruling them out) might be in order: see this pithy comment from Gwern.

- ^

‘Source infection’ means cases where the model’s summary of some text is adversely modified by the overall context, e.g. the model makes up a fact in the summary so as to match the surrounding context.

- ^

The issues around the ‘Evaluate’ step echo the cautionary note of Jan Leike, who is sceptical that automating philosophy will solve more problems than it creates: if philosophical arguments or papers are easier to generate than to evaluate, this is unhelpful, and such output joins the class of plausible nonsense that LLMs may unleash on the world.

- ^

- ^

See Ludwig Wittgenstein for a philosopher who had an ambiguous view of the nature, practice, and utility of philosophy, particularly from the perspective of language (and arguably, the arts).

- ^

Extending this thought in a speculative direction, perhaps AIs could be engineered to have a deep philosophical or quasi-religious grounding, in that they fundamentally ‘care about’ certain things (such as ‘the welfare of all sentient creatures’ or ‘diversity and plurality of thought and culture’) that would dominate or bound their narrow utility-maximising decision functions (if some version of utility-maximisation is what AIs/AGIs ultimately use). This is of course a sleight-of-hand that waves away the hard problems around corrigibility and alignment, but it is a variation of what we (or at least ukc10014) were gesturing at in a post on collective identity. Although hand-wavey and non-actionable, a ‘philosophical good successor’ might be a useful desideratum to help answer the questions posed by the OP.

- ^

See Simler, Kevin, and Hanson, Robin, The Elephant in the Brain, Oxford: Oxford University Press, 2018 (PDF), for more on the cultural and memetic reasons why art, as well as other things people do, may persist amongst humans.

- ^

See also a DeepMind paper which takes some steps in this direction, here, and, for a more theoretical treatment, this paper on the moral parliament model.

- ^

See this discussion by Reza Negarestani in relation to his book on AGI through the lens of Hegel, Robert Brandom, Wilfrid Sellars, Rudolf Carnap, and others: ‘Intelligence and Spirit’ (2018).

- ^

Some modifications to the ‘naive approach’ could be to use a ‘base’ GPT-4, if such a thing exists and is useful (i.e. able to generate coherent, non-hallucinatory, persuasive long-sequence content), or a more powerful model, fine-tuned on relevant text, possibly trained through RL on human reasoning (such as the nested comments in LW posts). This borders on Leike’s proposal above.

- ^

For instance, philosophy’s decomposition into sub-disciplines is complex. Consider ethics: one can differentiate between traditional meta-ethics, normative ethics, applied ethics, and "engineering ethics" (called "applied normative ethics" in this paper), as well as between different levels of instantiation of ethics (ethics as the style of thought/action of a concrete person/human/AI; morality as emergent game-theoretic "rules of behaviour" in a larger system).

It could be argued that the currently most widely known and appreciated contents of normative ethics (deontology, consequentialism, utilitarianism, computational/formal ethics like MetaEthical AI by June Ku, etc.) are not normative ethics proper, but rather different versions and aspects of "engineering/applied normative" ethics. "True" normative ethics, by contrast, should be more foundational and general than that (or than "emergent game-theoretic" ethics, like "Morality as Cooperation" by Oliver Curry), rooted in the science of physics, cognition, and consciousness.

- ^

Philosophy as a whole might be decomposable into ‘memetic’ and ‘scientific’ flavors. For instance, contrast proto-scientific or super-scientific types of reasoning that are prominent in such branches of philosophy as rationality, philosophy of science/epistemology, metaphysics, meta-ethics, and generic "philosophical stances" like pragmatism, against the type of reasoning in branches like normative ethics, philosophy of physics, biology, culture/art, religion, medicine, political philosophy/economy, and most other "applied" philosophies.

- ^

This paper (built on top of the literature on Friston’s Free Energy Principle/Active Inference) was written by humans, but it seems intuitively plausible that near-future LLMs could be very good at generating such papers, perhaps on demand, effortlessly marrying concepts from different schools of thought: Active Inference not only through the lens of Buddhism (as in the paper), but potentially through the lens of Christianity, Islam, Hinduism, Stoicism, or Confucianism. Art is one of the many fields ripe for LLM-generated content of varying quality, whether press releases or, less trivially, art criticism.

- ^

And presumably the connections between models are such that the LLMs cannot collude, provably or at least with high confidence.

4 comments


comment by Ape in the coat · 2023-06-15T13:00:29.915Z

I'm glad that someone is talking about automating philosophy. It seems to have huge potential for alignment, because in the end alignment is about ethical reasoning. So:

- Make an ethical simulator using an LLM, capable of evaluating plans and answering whether a course of action is ethical or not. Test this simulator in multiple situations.

- Use it as an "alignment module" for an LLM-based agent composed of multiple LLMs that process every step of the reasoning explicitly and transparently. Every time the agent takes an action, verify it with the alignment module. If the action is ethical, proceed; otherwise, try something else.

- Test the agent's behavior in multiple situations. Check the reasoning process to find potential issues and fix them.

- Restrict any other approach to agentic AI. Restrict training runs larger than current LLMs.

- Improve the agent's reasoning via the Socratic method, rationality techniques, etc., explicitly writing them into the agent's code.

- Congratulations! We've achieved transparent interpretability; tractable alignment that can be tested with minimal real-world consequences and doesn't have to be done perfectly on the first try; and a slow takeoff.

Something will probably go wrong. Maybe agents designed like that would be far inferior to humans. But someone really has to investigate this direction.
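The propose-then-verify loop in the steps above can be sketched in a few lines of Python. This is a minimal sketch under loud assumptions: `propose` and `is_ethical` are hypothetical stand-ins for the LLM agent and the LLM-based "alignment module" (replaced here by toy functions), and `max_attempts` is an arbitrary retry budget that the comment does not specify.

```python
from typing import Callable, List, Optional

# Hypothetical stand-ins: in the proposal these would be LLM calls.
Proposer = Callable[[str, List[str]], Optional[str]]  # (goal, rejected) -> next candidate action, or None
Checker = Callable[[str], bool]                       # action -> is it ethical?


def run_step(goal: str, propose: Proposer, is_ethical: Checker,
             max_attempts: int = 5) -> Optional[str]:
    """Ask the proposer for actions until the alignment module approves one.

    Returns the approved action, or None if no candidate was approved.
    Rejected actions are fed back so the proposer can "try something else".
    """
    rejected: List[str] = []
    for _ in range(max_attempts):
        action = propose(goal, rejected)
        if action is None:       # proposer has run out of candidates
            break
        if is_ethical(action):   # alignment module approves: proceed
            return action
        rejected.append(action)  # otherwise record the veto and retry
    return None


# Toy components for illustration only (not LLMs).
CANDIDATES = ["deceive the user", "ask the user for clarification", "refuse"]


def toy_propose(goal: str, rejected: List[str]) -> Optional[str]:
    for candidate in CANDIDATES:
        if candidate not in rejected:
            return candidate
    return None


def toy_is_ethical(action: str) -> bool:
    return "deceive" not in action


print(run_step("answer question", toy_propose, toy_is_ethical))
# -> ask the user for clarification
```

Feeding rejected actions back to the proposer is only one reading of "try something else"; a real system would presumably also log each veto so the reasoning process can be inspected later.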

↑ comment by Roman Leventov · 2023-06-16T03:04:32.870Z

It seems that the "ethical simulator" from point 1 and the LLM-based agent from point 2 overlap, so you overcomplicate things if you make them two distinct systems. A simpler design is an LLM prompted with the right "system prompt" (virtue ethics) + some branching-tree search for optimal plans according to a trained "utility/value" evaluator (consequentialism) + filtering out plans containing actions that are always prohibited (law, deontology). The second component is the closest to what you described as an "ethical simulator", but it is not quite that: the "utility/value" evaluator cannot say whether an action or plan is ethical in absolute terms; it can only compare plans proposed by some planner for a particular situation.
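The three-part design described above can be sketched as a small beam search in Python. Everything here is a hypothetical illustration rather than an implementation from the comment: `expand` stands in for an LLM prompted with a virtue-ethics-style system prompt, `value` for a trained utility/value evaluator, and `prohibited` for a deontological blocklist; the choice of beam search and the toy scores are assumptions made for brevity. `value` is only used to rank candidate plans against one another, never to return an absolute "ethical / not ethical" verdict.

```python
from typing import Callable, List, Sequence, Set, Tuple

Plan = Tuple[str, ...]  # a plan is a sequence of actions


def search_plans(expand: Callable[[Plan], Sequence[str]],
                 value: Callable[[Plan], float],
                 prohibited: Set[str],
                 depth: int = 2,
                 beam_width: int = 2) -> Plan:
    """Beam search over plans.

    expand:     proposes next actions for a partial plan (hypothetical LLM planner).
    value:      scores plans; used only to compare candidates with each other.
    prohibited: actions that are always filtered out (deontic constraints).
    """
    beam: List[Plan] = [()]
    for _ in range(depth):
        # Hard filter first: prohibited actions never reach the evaluator.
        children = [plan + (action,) for plan in beam for action in expand(plan)
                    if action not in prohibited]
        if not children:
            break
        children.sort(key=value, reverse=True)  # relative ranking only
        beam = children[:beam_width]
    return max(beam, key=value)


# Toy components for illustration only.
def toy_expand(plan: Plan) -> Sequence[str]:
    return ["lie", "inform", "wait"]


def toy_value(plan: Plan) -> float:
    scores = {"lie": 3.0, "inform": 2.0, "wait": 0.5}
    return sum(scores[action] for action in plan)


print(search_plans(toy_expand, toy_value, prohibited={"lie"}))
# -> ('inform', 'inform')
```

In this toy setup the evaluator alone would prefer the plan made of "lie" actions; only the deontological filter excludes it, which mirrors the point that the value evaluator by itself cannot certify a plan as ethical.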

↑ comment by Ape in the coat · 2023-06-16T06:46:05.242Z

They are not supposed to be two distinct systems; one is a subsystem of the other. There may be implementations where it's the same LLM doing all the generative work for every step of the reasoning via prompt engineering, but it doesn't have to be this way. It can be multiple, more specialized LLMs that went through different RLHF processes.

comment by Mateusz Bagiński (mateusz-baginski) · 2023-06-15T12:16:49.075Z

There doesn’t seem to be a consensus on what philosophy does or even what it is. One view of philosophy is that it is useless, or actively unhelpful, for alignment (at least of the ‘literally-don’t-kill-everyone’ variety, particularly if one’s timelines are short): it isn’t quantifiable, involves interminable debates, and talks about fuzzy-bordered concepts sometimes using mismatched taxonomies, ontologies, or fundamental assumptions

IMO it's accurate to say that philosophy (or at least the kind of philosophy that I find thought-worthy) is a category that includes high-level theoretical thinking that either (1) doesn't fit neatly into any of the existing disciplines (at least not yet) or (2) is strongly tied to one or some of them but engages in high-level theorizing/conceptual engineering/clarification/reflection to the extent that is not typical of that discipline ("philosophy of [biology/physics/mind/...]").

(1) is also consistent with the history of the concept. At some point, all of science (perhaps except mathematics) was "(natural) philosophy". Then various (proto-)sciences started crystallizing, and whatever was not seen as deserving its own department remained in the philosophy bucket.