An issue with training schemers with supervised fine-tuning

post by Fabien Roger (Fabien) · 2024-06-27T15:37:56.020Z · LW · GW · 12 commentsContents

Assumptions Easy-mode: imitating human judgment on classification tasks The basic problem with imitating humans on generation tasks Solving the problem in theory Solving the problem in practice Conclusion Appendix: KL divergence prevents some low-stakes failures Appendix: Using human imitation in cases where text is obviously non-human Appendix: RL vs human imitation None 12 comments

This is a rough write-up of ideas by Ryan Greenblatt. The core ideas are his and mistakes are mine. Thanks to him, Buck Shlegeris and to Aghyad Deeb for feedback on the draft of this post.

One way to use scheming AIs [LW · GW] is to train them to imitate humans. When does imitation robustly avoid frequent and intentional failures?

- On single-token classification tasks, imitation works great and can allow you to get a lot of high-quality labor with a tiny rate of intentional failures;

- On tasks that involve generating many tokens, imitation potentially fails by default: schemers could notice when an output was generated by themselves, which enables them to consistently behave badly in deployment while doing best-effort imitation during training.

We describe two techniques that avoid imitation failures on generation tasks:

- A technique that works perfectly but is too expensive

- A technique that we think works in practice

[Edit: These techniques are already known in the behavioral cloning literature. The main novelty of this post is explicitly thinking of these ideas through the lens of defenses against scheming. Thanks to tjbai for pointing it out in the comments of this post.]

While pure imitation may not be sufficiently competitive, we think it could prove useful for tasks such as:

- (No-CoT) single-token classification;

- KL-regularization for RL: we may want to penalize models trained with RL for straying too far from a human imitation prior (and we would want this human prior to not be malign!) (see Appendix);

- Tasks for which it is fine to take the competitiveness hit from not using RL, but which weaker trusted models [LW · GW] are not good enough at.[1]

Assumptions

In this post, we focus on scenarios where:

- AIs are smart (around human-level or above);

- AIs are scheming and know how we are training and deploying them;

- We want to use these AIs productively (e.g. to do safety research)[2];

- Training and deployment tasks are indistinguishable (e.g. because we randomly sampled training tasks from the deployment distribution);

- SGD works: we ignore gradient hacking [LW · GW] and AI architectures where SGD is very sample inefficient;

- AIs can’t cause catastrophes in very few actions (e.g. because they are too weak to break safety measures that address these sorts of concerns);

- Even when AIs try their best, they can’t perfectly predict the probability distribution over the actions that humans[3] would take.

Easy-mode: imitating human judgment on classification tasks

Here is how you can use an AI to 100x the speed at which you can complete a billion single-token (IID) classification tasks (e.g. detecting software bugs, scoring project ideas, …):

- Sample 1% of the tasks, and ask humans to label them (using their judgment);

- Train the AI on that 1% of tasks you have solved yourself, using normal training (e.g. SGD with a cross-entropy loss);

- Use the AI to solve the remaining 99% of tasks.

This will get you an AI which failed intentionally at most a few times in the whole training set because intentional failures would have quickly been trained away[4]. Therefore, you got at most a few hundred intentional failures in the whole set of tasks. That is an intentional failure rate at most around 1/10M, not bad![5]

In other words, human imitation avoids intentional low-stakes [LW · GW] failures: the model won’t often be worse than a best-effort imitation of a human. (But best-effort imitation of a human could be catastrophic if that isn’t sufficiently capable.)

The basic problem with imitating humans on generation tasks

But most tasks we care about involve generating many tokens (e.g. coding a complex experiment, writing a detailed project proposal, …). Does imitation work for such generation tasks? Not quite, here is a problem that could arise if you train an AI to imitate human answers on 1% of tasks and use it on the remaining 99% without additional supervision:

- On the 1% of training tasks, the AI tries its best to imitate human answers when the start of the prompt was generated by a human.

- On the 99% other tasks:

- The AI starts to generate an answer, at the start it can’t know if it is in the 1% of training tasks or the 99% other (since the 1% was sampled at random).

- But for some tasks, the AI eventually makes a few unintentional imitation mistakes during the generation that are easy to notice. (These mistakes are due to difficulties perfectly imitating humans.) Halfway through the generation, the AI could detect those imitation mistakes, and conclude that it is extremely unlikely that a human generated that text. This is possible because discrimination is often easier than generation.[6]

- The AI recognizes there was an imitation mistake in the start of the answer, so it now knows the answer wasn’t generated by a human, so it knows it can’t have been a task in the training set, so it can do whatever it wants without the risk of getting trained away!

- It finishes the generation with the ending that maximizes the probability of a catastrophe.

This might get you arbitrarily bad intentional failures in 99% of cases, which is the worst possible outcome.

You can notice many such failures just by asking humans to audit a small fraction of the 99% of tasks that are AI generated. If the AI does anything blatantly bad on a significant fraction of inputs, then you can just catch the AI red-handed [LW · GW], which may allow you to stop, analyze potential misalignment, etc.

But auditing only works if humans can recognize catastrophic low-stakes failures, which is not always the case. We lost the main edge of human imitation, which is that we don’t need to recognize catastrophic low-stakes failures, we just need to avoid making them ourselves.

Solving the problem in theory

Instead of asking humans to generate full answers, you can get back to easy-mode by training AIs to generate only one token at a time. For example, here is an algorithm you could use to solve 1 billion tasks which you can answer in 10 words or fewer:

1. Sample 0.1% of tasks, ask humans to solve them, take the first word of their answers, fine-tune the AI into an AI that imitates human answers;

2. Sample the next 0.1% of tasks, sample the first token using , ask humans to solve them under the constraint that their answer should start with the word chosen by , fine-tune a second AI to predict that probability distribution over the second word in the answer;

…

10. Sample the next 0.1% of tasks, sample the first 9 tokens using , then , … then , ask humans to solve them under the constraint that their answer should start with the first 9 words chosen by , …, , fine-tune a last AI to predict that probability distribution over the last word in the answer;

11. Solve the remaining 99% of tasks with

All AIs were trained in distribution (e.g. was trained to imitate humans on a distribution of prompts+answer start that contained mistakes from , …, ), so you recover the same safety properties as with classification task: you get an intentional failure rate at most around 1/1M per model (since you are running each AI on roughly 1000x more tasks than training tasks), which means you get an intentional failure rate at most around 1/100k for the whole system, which is still pretty good.

[Edit: this algorithm very close to what known in the literature as forward training.]

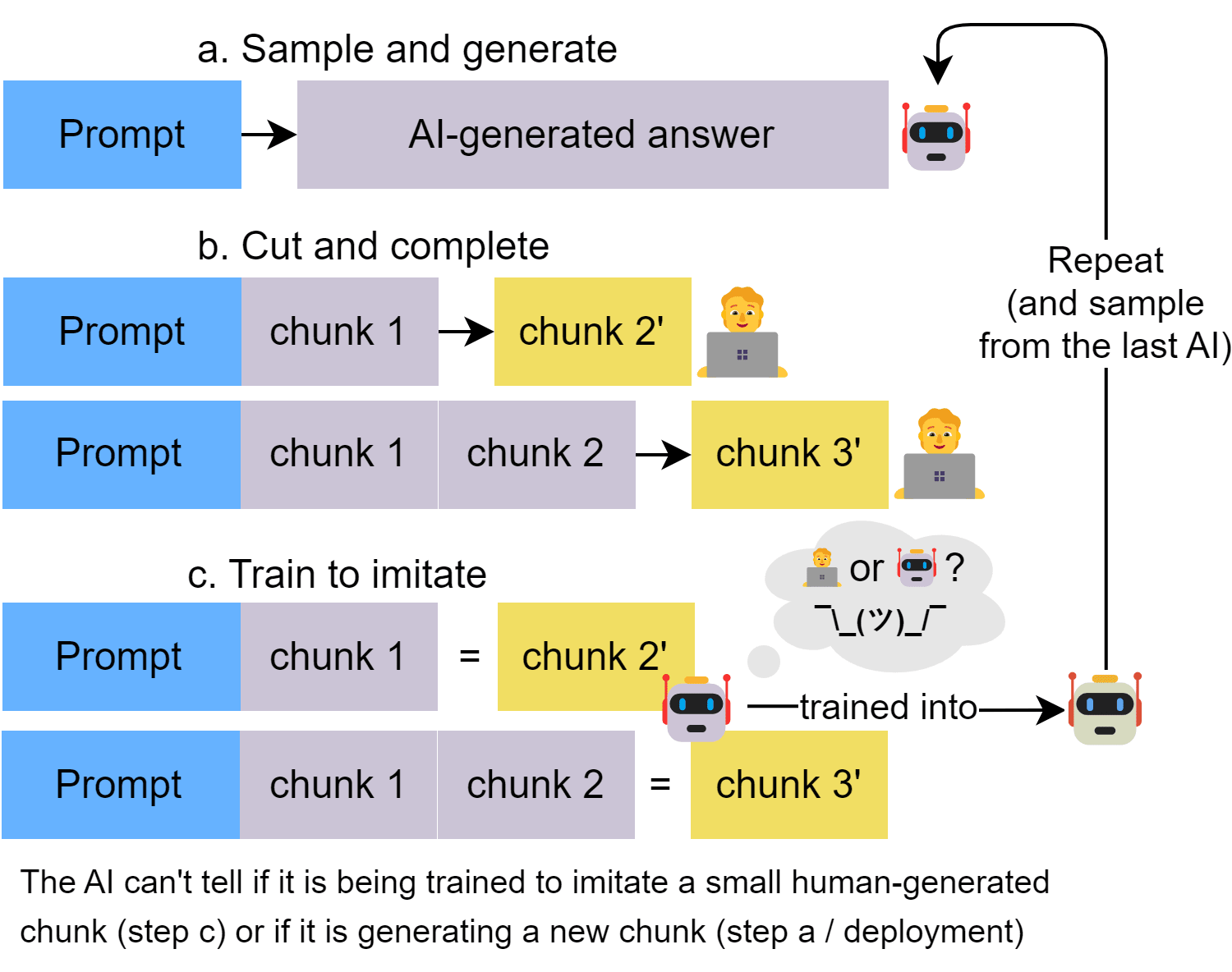

Solving the problem in practice

The approach above isn’t really viable for very long answers: it is too slow, requires training as many models as there are tokens in the longest answer, and would get you an intentional failure rate which is too high. Here is an alternative training method which we think seems likely to work for realistic tasks:

- Sample 0.5% of the tasks, ask humans to solve them;

- Train the AI to imitate human (full) answers;

- Repeat until you have exhausted all the 1% quota of training tasks:

- Sample the AI (full) answer on a new task (never trained on);

- Cut this answer into k-word chunks, with k small enough that an AI can’t be confident it is generated if it only saw k words generated by itself. For each prefix of j≥0 chunks, ask the human to generate one more chunk (e.g. by solving the task and taking the first k words);

- Train the AI on these human-generated chunks. (Use this AI for step (a) in the next round.)

- Use the AI to solve the remaining 99% of tasks.

[Edit: this algorithm very close to what known in the literature as DAgger.]

This should work basically for the same reason as the algorithm described in the section above: when the AI generates an answer, it may notice that the start was generated by an AI, but it still won’t be able to tell if it is in training or not since training often contains sequences that start with an AI-generated answer but continue with a human-generated answer the AI should imitate. We also conjecture that the intentional failure rate when using 1% of 1B training tasks for training should be at most around 1/10M (if you have enough samples that the process converges and the AI can’t distinguish itself from the AI from the previous iteration).[7]

How do you determine k? Train AIs on the classification task of distinguishing AI answers from human ones, and pick k small enough and n large enough that they never can distinguish very confidently. (It should be easy by default to get competitive elicitation on classification tasks like this, because it’s a classification task that we have perfect ground truth for.) Humans can try to make their generations more AI-like (e.g. make the same kind of mistakes an AI would, avoid using kinds of reasoning AIs can’t imitate well, …), but only when they are confident these differences from normal human behavior aren’t malign.

In practice, we think using around 200-word chunks should be enough for AIs that look like current LLMs (but larger) sampled at temperature=1. We think that asking humans to generate the many 200-word chunks for a given task is not much more expensive (e.g. <10x more expensive) than getting a full human-generated answer.

Conclusion

- Training AIs to imitate human answers can be a powerful method to get a large amount of human-level-ish labor[8] while avoiding frequent malign actions.

- Imitating single-token answers works by default for ensuring some typical behavior (as long as there is no distribution shift).

- Imitating multi-token answers doesn’t work by default because AIs might recognize that they are generating the text, which would put them out of distribution and allow them to take frequent malign actions.

- You can solve this multi-token imitation problem by training the AIs to imitate human behavior on AI-generated prefixes. This still doesn’t eliminate rare failures.

Appendix: KL divergence prevents some low-stakes failures

Some low-stakes failures stem from many bits of optimization and would have been extremely unlikely to have been generated by a human. For example, it could look like some complex actions to fool humans combined with advanced jailbreaks of our best trusted AI monitor.

To prevent such failures, we might want to measure how unlikely a string is in the human prior. In fact, this is already one method AI developers sometimes use to prevent LLMs trained with RLHF from learning adversarial attacks against the reward model: they impose a KL-divergence penalty that penalizes outputs that are too unlikely to be generated by the reference model, an LLM of similar strength trained to imitate human demonstration. (This regularization is often done implicitly using early stopping.)

But this might catastrophically fail if the reference model is a scheming AI: it could discriminate the current text from the imitation training data (and notice that it is instead AI generated), and then put high probability on catastrophically bad answers. But this can be avoided by doing human imitation right (using the algorithm described above adapted to work with non-human generations, as described in another Appendix below).

There are other alternatives, like asking a human if a string looks likely or using a KL penalty from a weaker trusted model, but humans might be fooled, and weaker trusted models might perform poorly (which means KL regularization is more costly in terms of performance).

Appendix: Using human imitation in cases where text is obviously non-human

You might want to have an AI generate human-like probability distributions in cases where the start of the answer is clearly not human, for example when:

- You want to sample an LLM at a temperature T<1 (the prefix is not even trying to be sampled from the human distribution): the probability distribution is not used as-is to generate tokens but is made sharper

- You want to use the LLM as KL-regularizer for an RLed model: the probability distribution is not used to generate tokens, since it’s the RLed model which generates trajectories

The problem is similar to the problem of AIs noticing their own mistakes described earlier, except that here the AI will be able to distinguish much faster that this is not a deployment generation but the imitation of a (T=1) human. In such situations, the “in theory” algorithm still works, and the “in practice” algorithm too, except that k may be much smaller, which may make data generation (by humans) more expensive. Note that the price of training is independent of k if prefix caching is used.

Appendix: RL vs human imitation

RL might be needed to get high performance. Human imitation is limited: it can’t select for the explicit reasoning strategies that work the best, and it can’t discover new reasoning strategies. Human imitation could in principle get you up to human level, but in practice AI labs may need RL to get anywhere close. For example, GPT-4 is much worse at agentic tasks before RL (for example, see Appendix A of the first Metr report).

You can get some of the benefits of RL with human imitation, but you can’t get its full power. Instead of asking humans to generate answers, you might ask them to generate answers that AIs would have an easy time imitating, and then select the ones that AIs can actually imitate well in practice, and then fine-tune on those. You might also do like with the training of text-davinci-002 [LW · GW] and generate samples from a model trained with RL, check that they are all benign, and imitate that. But this becomes risky very fast: if there were some subtle malignesses you did not catch in the training data (which was what you wanted to avoid in the first place), you will train the AI to generate subtly malign behavior in the 99% other tasks! So you probably can’t go very far with this: a few rounds of selecting the easiest-to-imitate answer should be fine (because there aren’t enough bits of optimization and exploration space to select for malign behavior), but you definitely can’t just imitate the behavior of a potentially scheming AI after a big RL training run.

You can make it cheaper to get high-quality human answers with iterative distillation: once you have trained an AI assistant with human imitation, you can use that to generate more answers to more questions, improve them with AI-assisted human labor, and train a more powerful assistant. Repeating this process is called amplification and benefits from similar same safety guarantees as direct human imitation (except that the (tiny) rate of intentional failures goes up with each iteration round, and high-stakes failures can spread such that in the limit of negligible training data and infinite iteration rounds you might still get a non-zero fraction of intentional failures).

The performance issues of human imitation were overrated in the past, and it’s unclear how human imitation will scale. Before GPT-2, most people interested in AGI were looking at RL because they thought human imitation was too brittle. It turns out that the current smartest AIs get most of their intelligence from imitating human-written text scraped from the internet, and can be turned into decent AI assistants by training them to imitate human-written examples of what it is like to be a good AI assistant. But RL is powerful, and is starting to show signs of strength. So it is unclear how much we will be able to rely on human imitation for the first AIs that are both catastrophically dangerous and transformatively useful [LW · GW].

- ^

It is unclear if such tasks exist - maybe you always want to use a weaker model.

- ^

In many scenarios we might be uncertain whether they are scheming, but for the purpose of ensuring safety we assume the worst and treat them as if they were scheming.

- ^

More precisely, the probability distribution over the actions of the humans that are generating the training data.

- ^

Our password-locked models experiments [AF · GW] seem to show that intentional failures can be mostly trained away when fine-tuning on only 1 to 4 demonstrations of correct behavior, on tasks where answers are around a hundred tokens long.

- ^

The same approach can be adapted to work if you have a stream of tasks with slow distribution shifts: you train on the first 0.5% of the stream and then train on one task every 200.

- ^

For example, if you train GPT-4 on a dataset of human-written functions that are exactly 100 lines long, it may often fail to write a function of exactly 100 lines because it is hard to write such functions directly, but it would be very easy for it to recognize that a function is not 100 lines long. We think would be many such markers of failures in very long text generation, even if the distribution has not been artificially restricted.

- ^

If we know that some tasks are high risk, we can always train more on them, which means things should be fine in practice even if such a rate would be unacceptable at the level of the full deployment dataset.

- ^

There are competitiveness concerns, since AIs could reach human performance with RL before they are able to reach human performance with pure imitation.

12 comments

Comments sorted by top scores.

comment by tjbai · 2024-06-27T16:41:10.659Z · LW(p) · GW(p)

How does this differ from DAgger (https://arxiv.org/abs/1011.0686)?

Replies from: ryan_greenblatt, Fabien↑ comment by ryan_greenblatt · 2024-06-27T22:19:23.808Z · LW(p) · GW(p)

Edit: I now think this is false for how DAgger is presented in the paper, see discussion below.

The method and the motivation is similar, though note that DAgger is effectively an RL scheme trying to maximize performan while we're trying to avoid a particular failure mode due to misalignment.

From my understading DAgger just involves correcting errors that humans can recognize while we're trying to get stronger guarantees.

Replies from: tjbai↑ comment by tjbai · 2024-06-28T20:10:19.353Z · LW(p) · GW(p)

It's not clear to me that you do get stronger guarantees because the setting and method is so similar to that of classical imitation learning. In both cases, we seek to learn a policy that is aligned with the expert (human). Supervised fine-tuning (behavioral cloning) is problematic because of distribution shift, i.e. the learned policy accumulates error (at a quadratic rate!) and visits states it did not see in training.

You say this failure mode is dangerous because of scheming AI and I say it's dangerous because the policy is OOD, but it appears you agree that the AI only "recognizes" it's not in training because of distribution shift—"Halfway through the generation, the AI could detect those imitation mistakes..." To me, it appears the differing justifications for why the AI performs poorly/dangerously is a matter of interpretation, not a fundamental difference.

I also don't think it's fair to describe DAgger as just "correcting errors that humans can recognize" because it actually provides formal bounds on error accumulation, which would appear to limit the failure mode you describe here. Admittedly, I'm very new to safety research as a whole, but this feels a bit like a reframing of an old problem.

Replies from: ryan_greenblatt, ryan_greenblatt, ryan_greenblatt↑ comment by ryan_greenblatt · 2024-06-29T00:20:26.073Z · LW(p) · GW(p)

(Also, to be clear, thanks for the comment. I strong upvoted it.)

↑ comment by ryan_greenblatt · 2024-06-28T20:19:51.495Z · LW(p) · GW(p)

Hmm, I think I was wrong about DAgger and confused it with a somewhat different approach in my head.

I agree that it provides bounds. (Under various assumptions about the learning algorithm that we can't prove for NNs but seem reasonable to assume in practice.)

I now agree that the proposed method is basically just a slight tweak of DAgger to make it more sample/cost efficient in the case where our issue is discrimination by the policy.

but this feels a bit like a reframing of an old problem.

I agree this is a special case of well known issues with behavioral cloning - we probably should have made this more clear in the post.

Replies from: tjbai, ryan_greenblatt↑ comment by tjbai · 2024-06-28T21:28:03.461Z · LW(p) · GW(p)

Just to make another note, "Solving the problem in theory" is also equivalent to the [forward training algorithm](https://www.cs.cmu.edu/~sross1/publications/Ross-AIStats10-paper.pdf), which preceded DAgger by the same authors.

I do think there are some interesting ideas to consider in the alignment setting. For example, the chunk size k is equivalent to the number of roll-out steps in IL. "Chunking" the roll-out to a fixed window is a common optimization if the task has a long time horizon and the expert is expensive to query. On the other hand, longer roll-outs provide stronger guarantees on how well the learned policy matches the expert.

Classically, this is a simple tradeoff between performance and speed. But, as you mention k must also be kept intentionally small so that the AI can not detect it is being trained on human generations. How does one choose the chunk size to favor both strong alignment and avoid discrimination? Dynamic roll-out strategies have been proposed in the IL literature, though I'm not very familiar.

↑ comment by ryan_greenblatt · 2024-06-28T22:38:39.695Z · LW(p) · GW(p)

(I'll edit the post at some point to highlight this discussion and clarify this.)

↑ comment by ryan_greenblatt · 2024-06-28T20:21:51.202Z · LW(p) · GW(p)

this failure mode is dangerous because of scheming AI and I say it's dangerous because the policy is OOD

I would say that it is dangerous in the case where is is both OOD enough that the AI can discriminate and the AI is scheming.

Neither alone would present a serious (i.e. catastrophic) risk in the imitation context we discuss.

Replies from: Vivek↑ comment by Vivek Hebbar (Vivek) · 2024-06-28T20:55:54.000Z · LW(p) · GW(p)

[resolved]

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-06-28T22:37:53.641Z · LW(p) · GW(p)

Thanks, I improved the wording.

↑ comment by Fabien Roger (Fabien) · 2024-07-01T21:06:42.670Z · LW(p) · GW(p)

I have edited the post to add the relevant disclaimers and links to the papers that describe very similar techniques. Thank you very much for bringing these to my attention!

comment by Aaron_Scher · 2024-07-14T02:57:01.959Z · LW(p) · GW(p)

Neat idea. I notice that this looks similar to dealing with many-shot jailbreaking:

For jailbreaking you are trying to learn the policy "Always imitate/generate-from a harmless assistant", here you are trying to learn "Always imitate safe human". In both, your model has some prior for outputting harmful next tokens, the context provides an update toward a higher probability of outputting harmful text (because of seeing previous examples of the assistant doing so, or because the previous generations came from an AI). And in both cases we would like some training technique that causes the model's posterior on harmful next tokens to be low.

I'm not sure there's too much else of note about this similarity, but it seemed worth noting because maybe progress on one can help with the other.