Open Thread - July 2023

post by Ruby · 2023-07-06T04:50:06.735Z · LW · GW · 35 comments

If it’s worth saying, but not worth its own post, here's a place to put it.

If you are new to LessWrong, here's the place to introduce yourself. Personal stories, anecdotes, or just general comments on how you found us and what you hope to get from the site and community are invited. This is also the place to discuss feature requests and other ideas you have for the site, if you don't want to write a full top-level post.

If you're new to the community, you can start reading the Highlights from the Sequences, a collection of posts about the core ideas of LessWrong.

If you want to explore the community more, I recommend reading the Library [? · GW], checking recent Curated posts [? · GW], seeing if there are any meetups in your area [? · GW], and checking out the Getting Started [? · GW] section of the LessWrong FAQ [? · GW]. If you want to orient to the content on the site, you can also check out the Concepts section [? · GW].

The Open Thread tag is here. The Open Thread sequence is here.

35 comments

Comments sorted by top scores.

comment by neurorithm · 2023-07-14T06:06:59.241Z · LW(p) · GW(p)

Hey Less Wrong! My name is Josh, and I'm an engineer interested in Less Wrong as a community and a social experiment :)

I read the Sequences back in 2011 when I was in college at UC Berkeley studying engineering – they were a little smaller and more readable back then, and Harry Potter and the Methods of Rationality was not yet at its full length. Over the next two years, I read HPMOR and the Sequences, along with books on cognitive science like Thinking, Fast and Slow and Blink by Malcolm Gladwell. I also took a workshop with Valentine at CFAR in its early iteration - all I really remember is CFAR's summary of mindfulness as "wiggle your toes."

Since then I've been involved in computer science and AI research and discovery for ten years, more or less, mostly as a passionate reader and experimenter in the coding and concept space – looking at earlier models of ML pre-deep learning in its current form, probabilistic reasoning in robotics and control systems, and cybersecurity models in AI and social communication models. During my college years, I published a paper on measuring creativity with probabilistic models in ML using scikit-learn that was a little before its time - it was back in 2013! Now it seems LLMs and generative AI have really nailed how to do that.

I've hung out with a lot of Rats in my home base in Los Angeles, through the LW meetup, and also with folks in the Bay Area who live in Rat houses and organize meetups there. Over the last five years, I've been interested in Effective Altruism and attended several EA groups and events, including EA Global in 2019. I also organized EA events with guest speakers like Haseeb Qureshi, as well as an EA/Rationality club in San Francisco which had about 10 members and sponsorship from the Center for Effective Altruism. Lately I have not been as involved in EA after watching the FTX and Sam Bankman-Fried problems break up the funding landscape and dilute core motivations for folks in problem areas of interest.

I hope to contribute to Less Wrong my unique model of thinking and decisionmaking which borrows from chaos theory, quantum entanglement, and family systems in psychology to describe how subtle perceptions of identity and influence form patterns in collective human decisionmaking. While I am by no means an expert in economics or game theory, I believe that the subtle clues of collective patterns in culture and social systems are everywhere if we know where to look. Rationality is a feature of a class of innovation culture communities which are seen throughout California and across the Western world in the US and Europe. I don't know about other countries because I haven't seen it there.

I am most interested in exploring the neural correlates of consciousness, meaning specifically software systems which influence and predict human decisionmaking, regardless of how we define consciousness, thoughts and feelings, and rationality as a philosophy, a brand, and a lifestyle.

comment by duck_master · 2023-08-01T01:16:01.235Z · LW(p) · GW(p)

Hello LessWrong! I'm duck_master. I've lurked around this website since roughly the start of the SARS-CoV-2/COVID-19 pandemic but I have never really been super active as of yet (in fact I wrote my first ever post last month). I've been around on the AstralCodexTen comment section and on Discord, though, among a half-dozen other websites and platforms. Here's my personal website (note: rarely updated) for your perusal.

I am a lifelong mathematics enthusiast and a current MIT student. (I'm majoring in mathematics and computer science; I added the latter part out of peer pressure since computer science is really taking off these days.) I am particularly interested in axiomatic mathematics, formal theorem provers, and the P vs NP problem, though I typically won't complain about anything mathematical as long as the relevant abstraction tower isn't too high (and I could potentially pivot to applied math in the future).

During the height of the pandemic in mid-2020, I initially "converted" to rationalism (previously I had been a Christian), but never really followed through and I actually became more irrational over the course of 2021 and 2022 (and not even in a metarational way, but purely in a my-life-is-getting-worse way). This year, I am hoping that I can connect with the rationalist and postrat communities more and be more systematic about my rationality practice.

comment by Wei Dai (Wei_Dai) · 2023-07-06T17:09:54.571Z · LW(p) · GW(p)

Epistemic status: very uncertain, could be very wrong, have been privately thinking in this direction for a while (triggered by FTX and DeepMind/OpenAI/Anthropic), writing down my thoughts for feedback

How much sense does encouraging individual agency [LW · GW] actually make, given that humans aren't safe and x-risk is almost entirely of anthropogenic origin?

Maybe certain kinds of lack of agency are good from a human/AI safety perspective: mild optimization, limited impact, corrigibility, non-power-seeking. Maybe we should encourage adoption of these ideas for humans as well as AIs?

With less individual agency generally, we'd be in a more "long reflection" like situation by default. Humanity as a whole would still need agency to protect itself against x-risk, eventually reach the stars, etc., but from this perspective we need to think about and spread things like interest in reflection/philosophy/discourse, good epistemic/deliberation norms/institutions, ways to discourage or otherwise protect civilization against individual "rogue agents", with the aim of collectively eventually figuring out what we want to do and how to do it.

It could be that that ship has already sailed (we already have too much individual agency and can't get to a "long reflection" like world quickly enough by discouraging it), and we just have to "fight fire with fire" now. But 1) that's not totally clear (what is the actual mechanistic theory behind "fight fire with fire"? how do we make sure we're not just setting more fires and causing the world to burn down faster or with higher probability?) and 2) we should at least acknowledge the unfortunate situation instead of treating individual agency as an unmitigated good.

Replies from: daniel-kokotajlo, Nisan

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-03T21:21:51.308Z · LW(p) · GW(p)

My opinion is that the ship has already sailed; AI timelines are so short & the path to AGI so 'no agency required' that even a significant decrease in agency worldwide would not really buy us much time at all, if any.

The mechanistic theory behind "fight fire with fire" is all the usual stories for how we can avoid AGI doom by e.g. doing alignment research, governance/coordination, etc.

↑ comment by Nisan · 2023-07-06T19:29:27.679Z · LW(p) · GW(p)

A long reflection requires new institutions, and creating new institutions requires individual agency. Right? I have trouble imagining a long reflection actually happening in a world with the individual agency level dialed down.

A separate point that's perhaps in line with your thinking: I feel better about cultivating agency in people who are intelligent and wise rather than people who are not. When I was working on agency-cultivating projects, we targeted those kinds of people.

Replies from: Wei_Dai

↑ comment by Wei Dai (Wei_Dai) · 2023-07-07T02:29:00.229Z · LW(p) · GW(p)

> A long reflection requires new institutions, and creating new institutions requires individual agency. Right?

Seems like there's already enough people in the world with naturally high individual agency that (if time wasn't an issue) you could build the necessary institutions by convincing them to work in that direction.

> I have trouble imagining a long reflection actually happening in a world with the individual agency level dialed down.

Yeah if the dial down happened before good institutions were built for the long reflection, that could be bad, but also seems relatively easy to avoid (don't start trying to dial down individual agency before building the institutions).

> I feel better about cultivating agency in people who are intelligent and wise rather than people who are not.

Yeah that seems better to me too. But this made me think of another consideration: if individual agency (in general, or the specific kind you're trying to cultivate) is in fact dangerous, there may also be a selection effect in the opposite direction, where the wise intuitively shy away from being cultivated (without necessarily being able to articulate why).

comment by Raemon · 2023-08-03T19:54:36.858Z · LW(p) · GW(p)

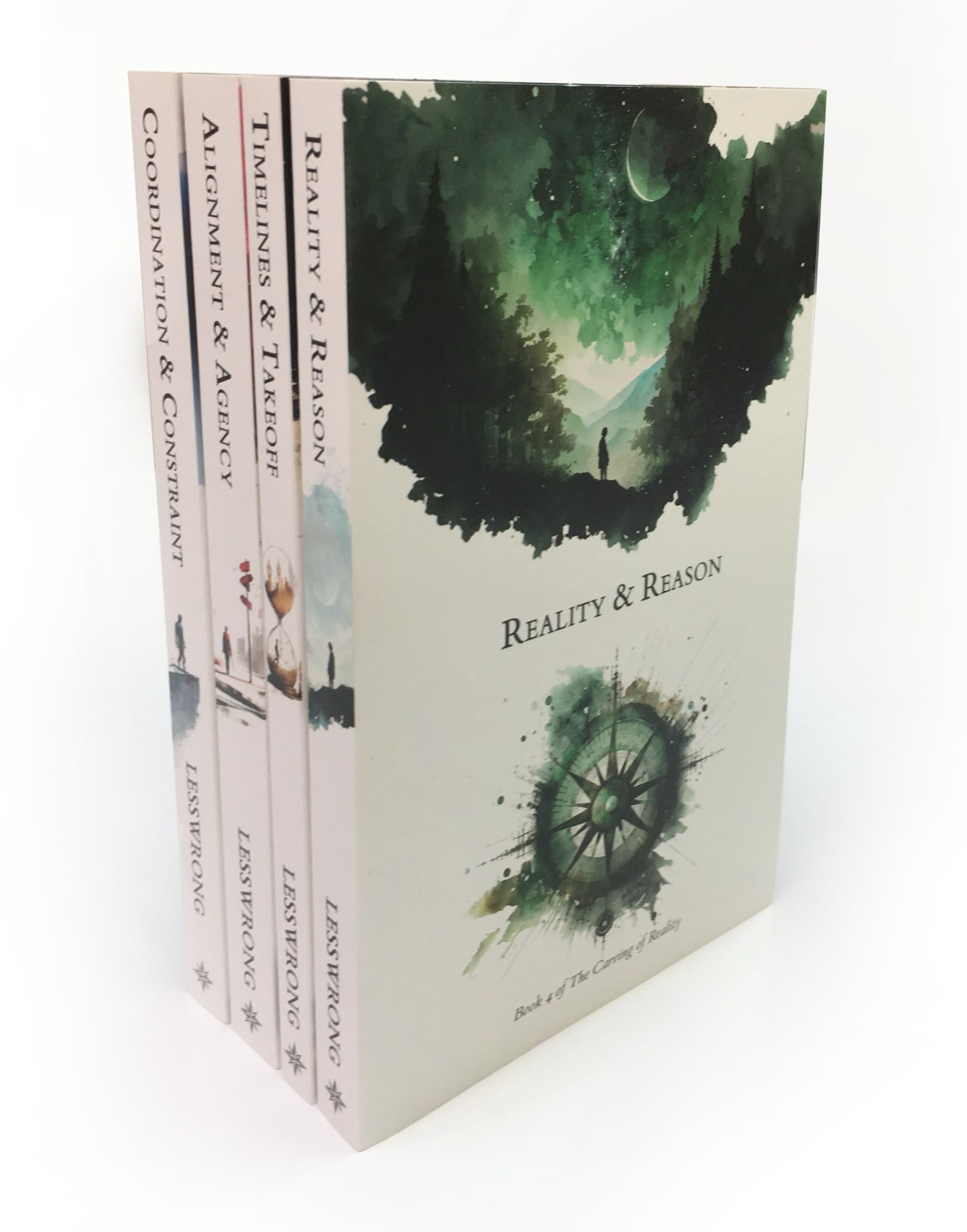

There'll be a formal announcement a bit later, but: the third series of the Best of LessWrong books is now available on Amazon. Apologies for the long wait. We've also restocked the previous two editions.

https://www.amazon.com/dp/B0C95MJJBK

comment by SherlockHolmes · 2023-07-22T21:29:09.349Z · LW(p) · GW(p)

Hello. I am new. I work in software and have a background in neuroscience. I recently wrote a short paper outlining my theory of consciousness, but am not sure where to put it. Found this place and am intrigued. Figure I'll lurk around a bit and see if anyone has written something similar. Thanks for having me.

Replies from: sil-ver

↑ comment by Rafael Harth (sil-ver) · 2023-07-24T08:46:09.911Z · LW(p) · GW(p)

Did you publish it? If so, can you link it?

If not, you can just publish it in a post.

Replies from: SherlockHolmes

↑ comment by SherlockHolmes · 2023-07-24T20:14:02.346Z · LW(p) · GW(p)

Yes I just put it online yesterday:

https://peterholmes.medium.com/the-conscious-computer-af5037439175

It's on medium but I'd rather move it here if you think it might get more readers. Thanks!

↑ comment by Rafael Harth (sil-ver) · 2023-07-25T12:11:40.601Z · LW(p) · GW(p)

I think it'd make most sense to post it as a link post here.

Replies from: SherlockHolmes

↑ comment by SherlockHolmes · 2023-07-25T16:37:30.255Z · LW(p) · GW(p)

Ok cool, will do. Thanks!

comment by Joe Rogero · 2023-07-18T14:46:17.490Z · LW(p) · GW(p)

Greetings from The Kingdom of Lurkers Below. Longtime reader here with an intro and an offer. I'm a former Reliability Engineer with expertise in data analysis, facilitation, incident investigation, technical writing, and more. I'm currently studying deep learning and cataloguing EA projects and AI safety efforts, as well as facilitating both formal and informal study groups for AI Safety Fundamentals.

I have, and am willing to offer to EA or AI Safety focused individuals and organizations, the following generalist skills:

- Facilitation. Organize and run a meeting, take notes, email follow-ups and reminders, whatever you need. I don't need to be an expert in the topic, I don't need to personally know the participants. I do need a clear picture of the meeting's purpose and what contributions you're hoping to elicit from the participants.

- Technical writing. More specifically, editing and proofreading, which don't require I fully understand the subject matter. I am a human Hemingway Editor. I have been known to cut a third of the text out of a corporate document while retaining all relevant information to the owner's satisfaction. I viciously stamp out typos. I helped edit the last EA Newsletter.

- Presentation review and speech coaching. I used to be terrified of public speaking. I still am, but now I'm pretty good at it anyway. I have given prepared and impromptu talks to audiences of dozens-to-hundreds and I have coached speakers giving company TED talks to thousands. A friend who reached out to me for input said my feedback was "exceedingly helpful". If you plan to give a talk and want feedback on your content, slides, or technique, I would be delighted to advise.

I am willing to take one-off or recurring requests. I reserve the right to start charging if this starts taking up more than a couple hours a week, but for now I'm volunteering my time and the first consult will always be free (so you can gauge my awesomeness for yourself). Contact me via DM or at optimiser.joe@gmail.com if you're interested.

comment by Rafael Harth (sil-ver) · 2023-07-25T12:10:43.605Z · LW(p) · GW(p)

In your experience, if you just copy-paste a post you've written to GPT-4 and ask it to critique it, does it tell you anything useful?

Replies from: steve2152, duck_master

↑ comment by Steven Byrnes (steve2152) · 2023-08-05T19:23:35.583Z · LW(p) · GW(p)

I have a list of prompts that are more specific than “critique it”, like:

- “[The following is a blog post draft. Please create a bullet point list with any typos or grammar errors.]”
- “Was there any unexplained jargon in that essay?”
- “Was any aspect of that essay confusing?”
- “Are there particular parts of this essay that would be difficult for non-native English speakers to follow?”
- “Please create a bullet-point list of obscure words that I use in the essay, which a non-native English speaker might have difficulty understanding.”
- (If it’s an FAQ:) “What other FAQ questions might I add?”
- “What else should I add to this essay?”
- Add a summary/tldr at the top, then: “Is the summary at the top adequate?”

Does it tell me anything useful? Meh. Sometimes it finds a few things that I’m happy to know about. I very often don’t even bother using it at all. (This is with GPT-4, I haven’t tried anything else.)

I suspect the above prompts could be improved. I haven’t experimented much. I’m very interested to hear what other people have been doing.

↑ comment by duck_master · 2023-08-01T01:21:14.379Z · LW(p) · GW(p)

I haven't used GPT-4 (I'm no accelerationist, and don't want to bother with subscribing), but I have tried ChatGPT for this use. In my experience it's useful for finding small cosmetic changes to make and fixing typos/small grammar mistakes, but I tend to avoid copy-pasting the result wholesale. Also I tend to work with texts much shorter than posts, since ChatGPT's shortish context window starts becoming an issue for decently long posts.

Replies from: ChristianKl

↑ comment by ChristianKl · 2023-08-01T10:28:12.733Z · LW(p) · GW(p)

ChatGPT doesn't have a fixed context window size. GPT-4's context window is much bigger.

comment by David Mears (david-mears) · 2023-07-25T10:55:41.639Z · LW(p) · GW(p)

Feature suggestion: Up/downvoting a post shouldn’t be possible within 30 seconds of opening a (not very short) post (to prevent upvoting based on title only), or should be weighted less.

comment by Atc239 · 2023-07-24T19:44:47.261Z · LW(p) · GW(p)

Hi, I heard about LW from Tim Ferriss' newsletter. Received the intro email from LW and currently reading through the Sequences. I work in tech and just interested in learning more from reading people's posts. Overall interested in topics related to rationality, probability, and a variety of topics in between.

comment by Veedrac · 2023-08-06T04:38:59.537Z · LW(p) · GW(p)

Has there been any serious study of whether mirror life—life with opposite chemical chirality—poses an existential risk?

comment by Joseph Van Name (joseph-van-name) · 2023-07-26T20:04:03.686Z · LW(p) · GW(p)

Hello. I am Joseph Van Name. I have been on this site substantially this year, but I never introduced myself. I have a Ph.D. in Mathematics, and I am a cryptocurrency creator. I am currently interested in using LSRDRs and related constructions to interpret ML models and to produce more interpretable ML models.

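Let $A_1,\dots,A_r$ be $n\times n$-matrices. Define a fitness function $L_{A_1,\dots,A_r;d}:M_d(\mathbb{R})^r\to\mathbb{R}$ by letting $L_{A_1,\dots,A_r;d}(X_1,\dots,X_r)=\frac{\rho(A_1\otimes X_1+\dots+A_r\otimes X_r)}{\rho(X_1\otimes X_1+\dots+X_r\otimes X_r)^{1/2}}$ ($\rho(A)$ denotes the spectral radius of $A$ while $A\otimes B$ is the tensor product of $A$ and $B$). If $(X_1,\dots,X_r)$ locally maximizes $L_{A_1,\dots,A_r;d}$, then we say that $(X_1,\dots,X_r)$ is an $L_{2,d}$-spectral radius dimensionality reduction (LSRDR) of $(A_1,\dots,A_r)$ (and we can generalize the notion of an LSRDR to a complex and quaternionic setting).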
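For readers who want to poke at this numerically, here is a minimal sketch of evaluating such a fitness function with NumPy. It assumes the fitness is the ratio of the spectral radius of the sum of the A_i ⊗ X_i to the square root of the spectral radius of the sum of the X_i ⊗ X_i, over real matrices. The names `spectral_radius` and `lsrdr_fitness` are made up for illustration, and the sketch only evaluates the function; it does not search for a local maximum.

```python
import numpy as np

def spectral_radius(m):
    """Largest absolute value among the eigenvalues of a square matrix."""
    return float(np.max(np.abs(np.linalg.eigvals(m))))

def lsrdr_fitness(As, Xs):
    """Assumed fitness: rho(sum A_i (x) X_i) / rho(sum X_i (x) X_i) ** 0.5.

    As: list of r real n-by-n arrays; Xs: list of r real d-by-d arrays.
    Undefined (division by zero) when sum X_i (x) X_i has spectral radius 0.
    """
    numerator = spectral_radius(sum(np.kron(a, x) for a, x in zip(As, Xs)))
    denominator = spectral_radius(sum(np.kron(x, x) for x in Xs)) ** 0.5
    return numerator / denominator

# With a single matrix (r = 1) the ratio collapses to rho(A) for any X with
# rho(X) > 0, because rho(A (x) X) = rho(A) * rho(X).
A = np.array([[2.0, 1.0], [0.0, 3.0]])
X = np.array([[0.5]])
print(lsrdr_fitness([A], [X]))
```

Under this reading the fitness is unchanged when every X_i is multiplied by the same positive constant, so a maximizer is only determined up to scale.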

I originally developed the notion of an LSRDR in order to evaluate the cryptographic security of block ciphers such as the AES block cipher or small block ciphers, but it seems like LSRDRs have much more potential in machine learning and interpretability. I hope that LSRDRs and similar constructions can make our AI safer (LSRDRs do not seem directly dangerous at the moment since they currently cannot replace neural networks).

Replies from: quinn-dougherty

↑ comment by Quinn (quinn-dougherty) · 2023-07-26T20:18:30.976Z · LW(p) · GW(p)

I'm curious and I've been thinking about some opportunities for cryptanalysis to contribute to QA for ML products, particularly in the interp area. But I've never looked at spectral methods or thought about them at all before! At a glance it seems promising. I'd love to see more from you on this.

Replies from: joseph-van-name

↑ comment by Joseph Van Name (joseph-van-name) · 2023-07-28T15:40:31.033Z · LW(p) · GW(p)

I will certainly make future posts about spectral methods in AI since spectral methods are already really important in ML, and it appears that new and innovative spectral methods will help improve AI and especially AI interpretability (though I do not see them replacing deep neural networks). I am sure that LSRDRs can be used for QA for ML products and for interpretability (but it is too early to say much about the best practices for LSRDRs for interpreting ML). I don't think I have too much to say at the moment about other cryptanalytic tools being applied to ML though (except for general statistical tests, but other mathematicians have just as much to say about these other tests).

Added 8/4/2023: On second thought, people on this site really seem to dislike mathematics (they seem to love discrediting themselves). I should probably go elsewhere where I can have higher quality discussions with better people.

comment by Richard_Kennaway · 2023-07-22T12:20:10.055Z · LW(p) · GW(p)

Why are neural nets organised in layers, rather than as a DAG?

I tried Google, but when I asked "Why are neural nets organised in layers?", the answers were all to the question "Why are neural nets organised in multiple layers instead of one layer?" which is not my question. When I added the stipulation "rather than as a DAG", it turns out that some people have indeed worked on NNs organized as DAGs, but I was very unimpressed with all of the references I looked at.

GPT 3.5, needless to say, failed to give a useful answer.

I can come up with one reason, which is that the connectivity of an NN can be specified with just a handful of numbers: the number of neurons in each layer. But in the vastly larger space of all DAGs, how does one locate a useful one? I can come up with another reason: layered NNs allow the required calculations to be organised as operations on large arrays, which can be efficiently performed in hardware. Perhaps the calculations required by less structured DAGs are not so amenable to fast hardware execution.

But I'm just guessing.

Can anyone give me a real answer to the question, why are neural nets organised in layers, rather than as a DAG? Has any real work been done on the latter?

Replies from: thoth-hermes, Razied

↑ comment by Thoth Hermes (thoth-hermes) · 2023-07-22T13:38:28.798Z · LW(p) · GW(p)

It's to make the computational load easier.

All neural nets can be represented as a DAG, in principle (including RNNs, by unrolling). This makes automatic differentiation nearly trivial to implement.

It's very slow, though, if every node is a single arithmetic operation. So typically each node is made into a larger number of operations simultaneously, like matrix multiplication or convolution. This is what is normally called a "layer." Chunking the computations this way makes it easier to load them into a GPU.

However, even these operations can still be differentiated as one formula, e.g. in the case of matrix mult. So it is still ostensibly a DAG even when it is organized into layers. (This is how IIRC libraries like PyTorch work.)
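To make the "every node is a single arithmetic operation" picture concrete, here is a minimal sketch of reverse-mode automatic differentiation over a DAG of scalar nodes. The `Node` class and `backward` function are hypothetical names for illustration only. This is not how PyTorch is implemented, just the same idea at toy scale.

```python
class Node:
    """One scalar value in the computation DAG."""

    def __init__(self, value, parents=(), local_grads=()):
        self.value = value
        self.parents = parents          # nodes this one was computed from
        self.local_grads = local_grads  # d(self)/d(parent), one per parent
        self.grad = 0.0                 # filled in by backward()

    def __add__(self, other):
        return Node(self.value + other.value, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        return Node(self.value * other.value, (self, other),
                    (other.value, self.value))


def backward(output):
    """Accumulate d(output)/d(node) into node.grad for every ancestor."""
    order, seen = [], set()

    def visit(node):
        # Depth-first search that appends a node after all of its parents.
        if id(node) not in seen:
            seen.add(id(node))
            for parent in node.parents:
                visit(parent)
            order.append(node)

    visit(output)
    output.grad = 1.0
    # Walk from the output back to the inputs, applying the chain rule.
    for node in reversed(order):
        for parent, local in zip(node.parents, node.local_grads):
            parent.grad += local * node.grad


# A small DAG in which a feeds both b and c, and b also feeds c.
a = Node(2.0)
b = a * a   # b = a^2
c = a * b   # c = a^3
backward(c)
print(c.value, a.grad)  # a^3 and 3 * a^2 at a = 2
```

Nothing in `backward` cares whether the graph splits into layers; it only needs a topological order. The layer structure matters for speed, not for differentiability.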

↑ comment by Razied · 2023-07-22T14:02:11.580Z · LW(p) · GW(p)

I think you might want to look at the literature on "sparse neural networks", which is the right search term for what you mean here.

Replies from: Richard_Kennaway

↑ comment by Richard_Kennaway · 2023-07-26T19:14:18.703Z · LW(p) · GW(p)

I don't think "sparse neural networks" fit the bill. All the references I've turned up for the phrase talk about the usual sort of what I've been calling layered NNs, but where most of the parameters are zero. This leaves intact the layer structure.

To express more precisely the sort of connectivity I'm talking about, for any NN, construct the following directed graph. There is one node for every neuron, and an arc from each neuron A to each neuron B whose output depends directly on an output value of A.

For the NNs as described in e.g. Andrej Karpathy's lectures (which I'm currently going through), this graph is a DAG. Furthermore, it is a DAG having the property of layeredness, which I define thus:

A DAG is layered if every node A can be assigned an integer label L(A), such that for every edge from A to B, L(B) = L(A)+1. A layer is the set of all the nodes having a given label.

The sparse NNs I've found in the literature are all layered. A "full" (i.e. not sparse) NN would also satisfy the converse of the above definition, i.e. L(B) = L(A)+1 would imply an edge from A to B.

The simplest example of a non-layered DAG is one with three nodes A, B, and C, with edges from A to B, A to C, and B to C. If you tried to structure this into layers, you would either find an edge between two nodes in the same layer, or an edge that skips a layer.
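The definition above can be checked mechanically. Here is a minimal sketch, assuming the graph is given as a list of (A, B) edge pairs. The function name `layer_labels` is made up for illustration. It propagates labels outward from an arbitrary start node in each connected component and reports failure as soon as two constraints disagree.

```python
from collections import defaultdict, deque

def layer_labels(edges):
    """Return {node: L(node)} with L(b) == L(a) + 1 for every edge (a, b),
    or None if no such labelling exists."""
    # neighbours[n] holds (m, delta), meaning L(m) must equal L(n) + delta.
    neighbours = defaultdict(list)
    for a, b in edges:
        neighbours[a].append((b, +1))
        neighbours[b].append((a, -1))

    labels = {}
    for start in list(neighbours):
        if start in labels:
            continue
        labels[start] = 0  # arbitrary origin; labels may come out negative
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for other, delta in neighbours[node]:
                wanted = labels[node] + delta
                if other not in labels:
                    labels[other] = wanted
                    queue.append(other)
                elif labels[other] != wanted:
                    return None  # two paths demand different labels
    return labels

# The three-node example: A -> B, A -> C, B -> C.
print(layer_labels([("A", "B"), ("A", "C"), ("B", "C")]))  # None
# A plain chain is layered.
print(layer_labels([("A", "B"), ("B", "C")]))
```

Because labels are only fixed up to an additive constant within each connected component, the returned numbers are one valid choice rather than a canonical one.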

To cover non-DAG NNs also, I'd call one layered if in the above definition, "L(B) = L(A)+1" is replaced by "L(B) = L(A) ± 1". (ETA: This is equivalent to the graph being bipartite: the nodes can be divided into two sets such that every edge goes from a node in one set to a node in the other.)

It could be called approximately layered if most edges satisfy the condition.

Are there any not-even-approximately-layered NNs in the literature?

Replies from: Razied

↑ comment by Razied · 2023-07-26T23:41:59.097Z · LW(p) · GW(p)

I'm fairly sure that there's architectures where each layer is a linear function of the concatenated activations of all previous layers, though I can't seem to find it right now. If you add possible sparsity to that, then I think you get a fully general DAG.
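A rough sketch of that idea, taken to the extreme of one unit per "layer": each unit reads a weighted sum of all earlier activations, and a missing weight means no edge. The `forward` function, the dict-based weight layout, and the ReLU nonlinearity are all assumptions made for illustration, not taken from any particular paper.

```python
def forward(inputs, weights):
    """Evaluate units in topological order.

    inputs:  activations of the input units (indices 0 .. len(inputs) - 1).
    weights: one dict per non-input unit, mapping the index of an earlier
             unit to the weight on that edge. Absent index = no edge.
    """
    activations = list(inputs)
    for incoming in weights:
        pre = sum(w * activations[j] for j, w in incoming.items())
        activations.append(max(0.0, pre))  # ReLU, chosen only for illustration
    return activations

# The three-node graph from earlier in the thread: A -> B, A -> C, B -> C.
# Unit 0 is A, unit 1 is B, unit 2 is C.
weights = [
    {0: 2.0},          # B = relu(2 * A)
    {0: 1.0, 1: 0.5},  # C = relu(1 * A + 0.5 * B)
]
print(forward([1.0], weights))
```

Any DAG on the units can be written this way by ordering the units topologically and leaving out the weights for edges that do not exist.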

comment by gilch · 2023-07-06T18:11:43.908Z · LW(p) · GW(p)

Published just yesterday: https://openai.com/blog/introducing-superalignment

> Superintelligence will be the most impactful technology humanity has ever invented, and could help us solve many of the world’s most important problems. But the vast power of superintelligence could also be very dangerous, and could lead to the disempowerment of humanity or even human extinction.

So they came out and said it. Overton Window is open.

> We are dedicating 20% of the compute we’ve secured to date over the next four years to solving the problem of superintelligence alignment.

Significant resources then.

> Our goal is to build a roughly human-level automated alignment researcher.

Hmm. The fox is guarding the henhouse. I just don't know if anything else has a chance of working in time.

[EDIT: just saw the linkpost [LW · GW]. Not sure why it didn't load in my feed before.]

comment by Rafael Harth (sil-ver) · 2023-07-19T08:21:43.432Z · LW(p) · GW(p)

Why is the reaction feature non-anonymous? It makes me significantly less likely to use them, especially negative ones.

Replies from: ChristianKl

↑ comment by ChristianKl · 2023-07-19T10:36:28.811Z · LW(p) · GW(p)

Because it's about communication. If someone uses a reaction to answer your comment with "this is a crux" or "not a crux", it matters a great deal whether the person you are replying to is the OP or someone else.

comment by Amin A. (sab-s) · 2023-07-18T21:42:27.300Z · LW(p) · GW(p)

22 year old software student. Graduating in Apr 2023. Uni has been pretty useless so far. Lack of practical knowledge and good explanations. Bad professors + strict class schedules of only 24 lecture hours per class = bad learning experience.

Donated some money to effective altruism before.

Signed up here because I wanted to comment on someone's blog and this was one possible way to. This was the site: https://www.jefftk.com/p/3d-printed-talkbox-cap. The post I wanted to comment on didn't support lesswrong comments though so I got duped. Nice to meet yall.

comment by niplav · 2023-07-17T11:07:33.675Z · LW(p) · GW(p)

In case you were wondering: In my personal data I find no relation between recent masturbation and meditation quality, contrary to the claims of many meditative traditions.

Replies from: ChristianKl

↑ comment by ChristianKl · 2023-07-18T11:15:33.080Z · LW(p) · GW(p)

I'm quite unclear about the statistics. The standard approach would likely be to use statistics that output some p-value.

Did you follow some explicit other statistical approach that's standardized somewhere where you would argue that it's better for this problem?

Generally, if 17% of the variation in concentration is explained by masturbation, that doesn't seem to me like it would support saying that masturbation doesn't matter.
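For concreteness, here is a minimal sketch of one way to get a p-value for this kind of question: a permutation test on the Pearson correlation, using only the standard library. The numbers below are made up purely for illustration and are NOT niplav's data, and the function names are hypothetical. For scale, 17% of variance explained corresponds to a correlation of about |r| = sqrt(0.17) ≈ 0.41.

```python
import math
import random

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def permutation_p_value(xs, ys, n_perm=2000, seed=0):
    """Two-sided p-value: the share of shuffles of ys whose |r| is at
    least as large as the observed |r|."""
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(pearson_r(xs, shuffled)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)

# Made-up illustrative numbers, NOT real data.
masturbated = [0, 1, 0, 0, 1, 1, 0, 1, 0, 1, 0, 0]      # 1 = yes that day
concentration = [6, 5, 7, 6, 6, 4, 5, 5, 7, 6, 6, 5]    # self-rated score
r = pearson_r(masturbated, concentration)
p = permutation_p_value(masturbated, concentration)
print(f"r = {r:.2f}, r^2 = {r * r:.2f}, p = {p:.3f}")
```

A permutation test is only one option. It is used here because it needs no distributional assumptions and no third-party packages.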