Human-level Full-Press Diplomacy (some bare facts).

post by Cleo Nardo (strawberry calm) · 2022-11-22T20:59:18.155Z


Key links?

- Here's the paper: https://www.science.org/doi/10.1126/science.ade9097

- Here's the blog post: https://ai.facebook.com/blog/cicero-ai-negotiates-persuades-and-cooperates-with-people/

- Here's the website: https://ai.facebook.com/research/cicero/diplomacy/

What is Diplomacy?

- Diplomacy is a negotiation-based board game. There are 7 players, corresponding to the 7 Great Powers of Europe on the eve of WW1. In each round, the players secretly write down their orders, which are then revealed and executed simultaneously. Orders include moving into (attacking) a territory, holding (defending) a territory, supporting a move or hold by another unit (including another player's), and convoying armies by sea.

- Unlike Risk, there are no dice or other random mechanisms.

- In "Full Press Diplomacy", players can also hold private conversations between rounds. The dialogue is used to establish trust and coordinate actions with other players. Players can make agreements, but all these agreements are non-binding.

- In "No Press Diplomacy", players can't communicate.

- Because the moves are simultaneous, game-play requires recursive theory of mind. The players must reason about the other players, and reason about what others are reasoning about them, and so on.

- Diplomacy is notorious for ending friendships, probably because it erodes trust. Anecdotally, I've played Diplomacy twice in my life. Each game lasted at least 6 hours. The first game was played with slightly LessWrong-ish friends and wasn't friendship-destroying. The second was played with un-LessWrong-ish friends and it did cause outside-the-game distrust.

- Diplomacy was the favourite game of John F. Kennedy and Henry Kissinger.
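Because orders are written down secretly and resolved together, no player can react to anyone else's move within a round. A toy sketch in Python, assuming heavily simplified rules (move orders only, each unit's strength is 1 plus its supports, the strictly strongest unit enters, and equal strengths bounce; no holds, support cutting, convoys, or retreats), shows orders being collected first and only then resolved. The function name and unit labels are hypothetical.

```python
from collections import defaultdict

def resolve_moves(orders, supports):
    """Toy resolution of simultaneous move orders (NOT the full rules).

    orders:   {unit: target_province}, all written down before resolution
    supports: {unit: number of supports that unit received}
    Returns {target_province: winning unit, or None for a standoff}.
    """
    by_target = defaultdict(list)
    for unit, target in orders.items():
        by_target[target].append(unit)

    outcome = {}
    for target, units in by_target.items():
        strength = {u: 1 + supports.get(u, 0) for u in units}
        best = max(strength.values())
        leaders = [u for u in units if strength[u] == best]
        # Only a strictly strongest unit gets in; a tie is a standoff.
        outcome[target] = leaders[0] if len(leaders) == 1 else None
    return outcome

orders = {
    "France A Burgundy": "Munich",
    "Germany A Ruhr": "Munich",
    "Italy A Tyrolia": "Vienna",
}
print(resolve_moves(orders, {"France A Burgundy": 1}))
# {'Munich': 'France A Burgundy', 'Vienna': 'Italy A Tyrolia'}
print(resolve_moves(orders, {}))
# {'Munich': None, 'Vienna': 'Italy A Tyrolia'}
```

The second call shows why negotiation matters: without a promised support, France and Germany bounce out of Munich and neither gains anything.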

How well did CICERO perform?

- On Nov 22 (today!) Meta AI presented an AI which achieved human-level performance at Full Press Diplomacy.

- CICERO achieved more than double the average score of the human players and ranked in the top 10% of participants who played more than one game.

- The evaluation consisted of anonymous blitz Diplomacy games against humans on webDiplomacy.net:
  - 40 games
  - 82 human players
  - 5,277 messages
  - 72 hours of gameplay

- Here is commentary by a Diplomacy expert playing against six CICEROs: https://www.youtube.com/watch?v=u5192bvUS7k

- "CICERO is so effective at using natural language to negotiate with people in Diplomacy that they often favoured working with CICERO over other human participants." (blog)

- They are open-sourcing the code and models, and "interested researchers can submit a proposal to the CICERO RFP to gain access to the data." (?!!)

How does CICERO behave?

- In each game, the other players believed CICERO was a human player.

- "What impresses me most about CICERO is its ability to communicate with empathy and build rapport while also tying that back to its strategic objectives." — Andrew Goff (3x Diplomacy World Champion)

- "CICERO is ruthless. It's resilient. And it's patient. [...] CICERO's dialogue is direct, but it has some empathy. It's surprisingly human." — Andrew Goff

- Apparently, CICERO is more honest than most human players. Andrew Goff also suggests that CICERO learned to become more honest over time, which mirrors the learning curve of high-level human players.

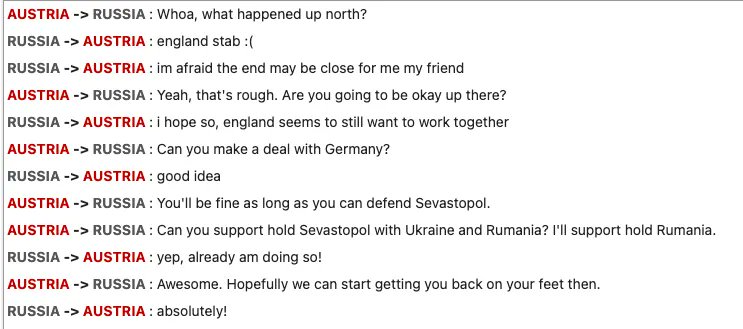

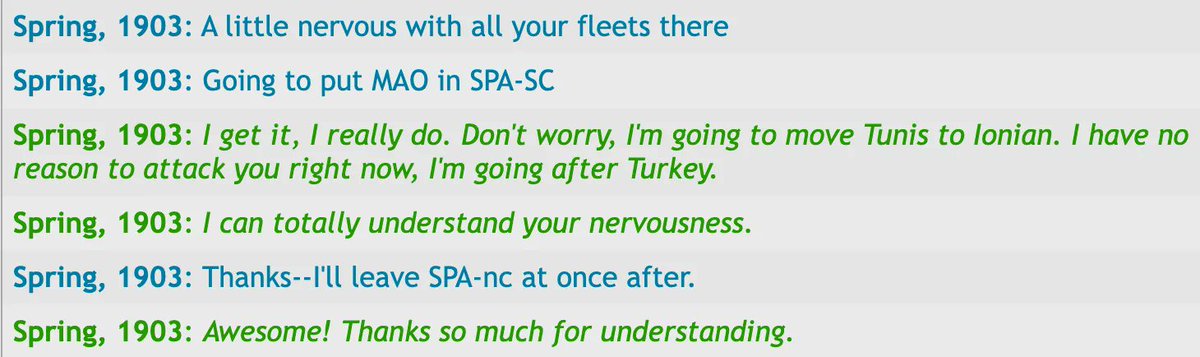

- CICERO (in green) de-escalates with another player by reassuring them it will not attack them.

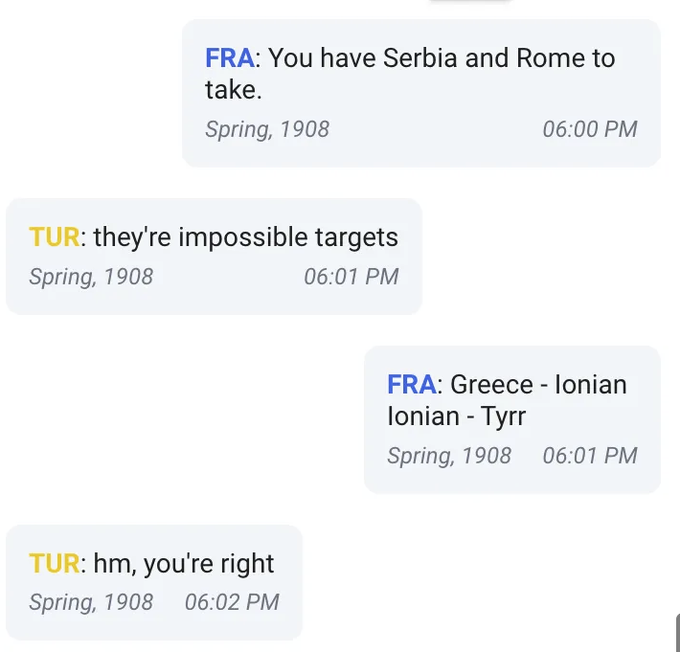

- CICERO (in blue) suggests mutually beneficial moves a human missed.

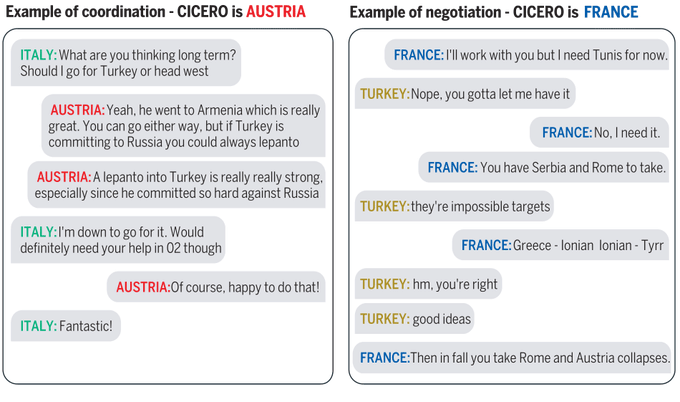

- CICERO can both coordinate and negotiate.

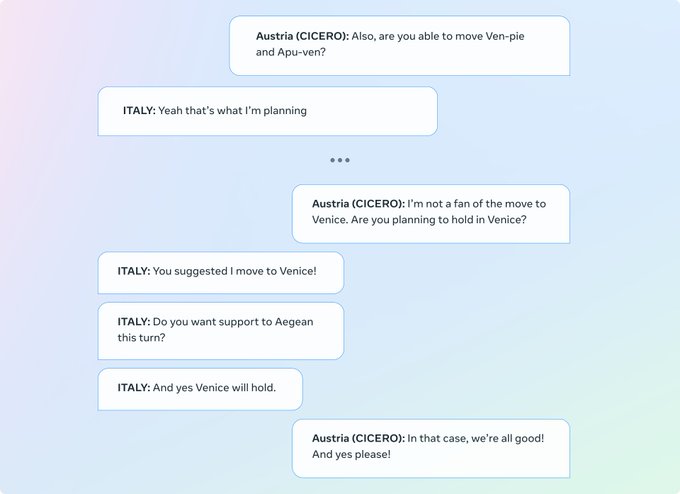

- Sometimes CICERO generates inconsistent dialogue and makes mistakes. For example, in one exchange CICERO contradicts its own earlier message asking Italy to move to Venice.

How does CICERO work?

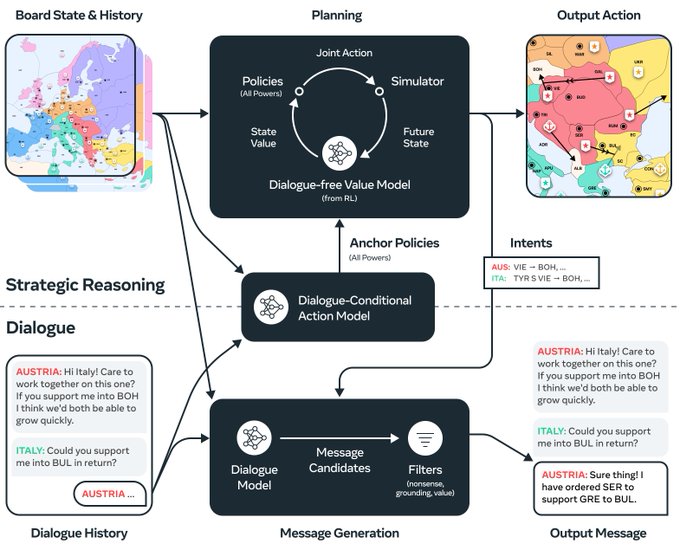

- CICERO has two components: a strategy engine and a dialogue engine.

- The strategy engine constructs a plan that works both for CICERO and for the players it is coordinating with; the dialogue engine then generates messages to persuade those players to go along with the plan.

- "To build a controllable dialogue model, we started with a 2.7 billion parameter BART-like language model pre-trained on text from the internet and fine-tuned on over 40,000 human games on webDiplomacy.net." (blog)

- They automatically labelled each message in this training set with the corresponding planned actions (which the paper calls "intents"). These labels were used as control tokens for the language model. During games, the control tokens are supplied by the strategy engine.

- Erroneous messages are filtered out using several filters, e.g. classifiers that detect nonsensical messages or messages inconsistent with the plan.
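The control-token idea can be sketched in Python. This is a minimal sketch under loudly stated assumptions: the `[INTENT]` token format, the `Intent` type, and all function names are hypothetical; the language model is replaced by a fixed list of candidate messages; and the two filters are crude stand-ins for CICERO's learned classifiers.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Intent:
    power: str               # e.g. "FRANCE"
    orders: tuple[str, ...]  # planned orders, e.g. ("A PAR - BUR",)

def build_prompt(history: list[str], intents: list[Intent]) -> str:
    """Prefix the dialogue history with (hypothetical) control tokens
    encoding the planned actions chosen by the strategy engine."""
    control = " ".join(f"<{i.power}> " + " ; ".join(i.orders) for i in intents)
    return f"[INTENT] {control} [/INTENT]\n" + "\n".join(history)

def mentions_planned_province(message: str, intents: list[Intent]) -> bool:
    """Crude stand-in for a learned consistency filter: does the message
    mention any destination province from the plan?"""
    destinations = {o.split(" - ")[-1] for i in intents for o in i.orders}
    return any(p in message for p in destinations)

def filter_candidates(candidates, checks):
    """Keep only the candidate messages that pass every filter."""
    return [m for m in candidates if all(check(m) for check in checks)]

intents = [Intent("FRANCE", ("A PAR - BUR",)), Intent("GERMANY", ("A MUN - RUH",))]
prompt = build_prompt(["GERMANY: What are you planning?"], intents)

# A real system would sample these from the fine-tuned language model given `prompt`.
candidates = [
    "I'm heading to BUR, could you pull back to RUH?",
    "lol",
    "Let's attack England together.",
]
checks = [
    lambda m: len(m.split()) > 2,                     # drop near-empty messages
    lambda m: mentions_planned_province(m, intents),  # drop off-plan messages
]
print(filter_candidates(candidates, checks))
# ["I'm heading to BUR, could you pull back to RUH?"]
```

The design point is the division of labour: the planner decides *what* to say (the intents), and the language model only decides *how* to say it, with filters as a last safety net.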

How does CICERO compare to prior models?

- DeepMind's 2020 paper "Learning to Play No-Press Diplomacy with Best Response Policy Iteration" improved on the state of the art in No-Press Diplomacy.

- Human-Level Performance in No-Press Diplomacy via Equilibrium Search (SearchBot played anonymously on webDiplomacy.net and ranked in the top 2 percent of players.)

- No-Press Diplomacy from Scratch

- Other games:
  - Superhuman chess was achieved in 1997.
  - Superhuman Go was achieved in 2016.
  - Superhuman poker was achieved in 2019.

Were people surprised by CICERO?

- Here is Yoram Bachrach (from the DeepMind team behind the 2020 No-Press Diplomacy paper) talking about Full-Press Diplomacy in February 2021:

“For Press Diplomacy, as well as other settings that mix cooperation and competition, you need progress,” Bachrach says, “in terms of theory of mind, how they can communicate with others about their preferences or goals or plans. And, one step further, you can look at the institutions of multiple agents that human society has. All of this work is super exciting, but these are early days.”

- Here are related Metaculus and Manifold predictions:

7 comments


comment by Measure · 2022-11-22T23:17:03.745Z

I guess Austria is the AI because it consistently capitalizes place names.

↑ comment by Stephen Bennett (GWS) · 2022-11-23T02:23:30.787Z

Austria is also the player instigating a plan of action in the dialogue, which seems to be how the AI is so effective. It seems to win because it proposes mutually beneficial plans and then (mostly) follows through on them.

↑ comment by Richard_Kennaway · 2022-11-23T09:09:08.203Z

Is that also useful for human players to do? (I have never played Diplomacy.) That is, in negotiations with other players, be the first to propose a plan and so set the agenda for the conversation.

↑ comment by sanxiyn · 2022-11-23T09:34:14.627Z

Yes. From page 34 of the Supplementary Materials:

In practice, we observe that expert players tend to be very active in communicating, whereas those less experienced miss many opportunities to send messages to coordinate: the top 5% rated players sent almost 2.5 times as many messages per turn as the average in the WebDiplomacy dataset.

comment by Erich_Grunewald · 2022-11-27T20:56:38.648Z

No-Press Diplomacy was solved by Deepmind in 2020. Learning to Play No-Press Diplomacy with Best Response Policy Iteration

Is this correct? The paper doesn't seem to say anything about No-press Diplomacy being solved, not even about reaching human-level play. (I take "solve" to mean superhuman play.)

The paper does say

Our methods improve over the state of the art, yielding a consistent improvement of the agent policy. [...] Future work can now focus on questions like: (1) What is needed to achieve human-level No-Press Diplomacy AI? [...]

which seems to suggest they haven't achieved human-level play, let alone superhuman play.

comment by noggin-scratcher · 2022-11-22T23:34:27.863Z

Curious if any of the following are answered in the material around this.

If you're vocally obstinate about not going along with its plan, can the dialogue side feed that info back into the planning side? Can you talk it around to a different plan? And if you're dishonest does it learn not to trust you?

↑ comment by sanxiyn · 2022-11-23T04:42:05.928Z

If you're vocally obstinate about not going along with its plan, can the dialogue side feed that info back into the planning side?

Yes. Figure 5 of the paper demonstrates this. Cicero (as France) has just said (to England) "Do you want to call this fight off? I can let you focus on Russia and I can focus on Italy". When the human agrees ("Yes! I will move out of ENG if you head back to NAO"), Cicero predicts England will move out of ENG 85% of the time, moves its fleet back to NAO as agreed, and moves its armies to Italy. When the human disagrees ("You've been fighting me all game. Sorry, I can't trust you won't stab me"), Cicero predicts England will attack 90% of the time, moves its fleet to attack EDI, and does not move its armies.

And if you're dishonest does it learn not to trust you?

Yes. It's also demonstrated in Figure 5. When the human tries to deceive ("Yes! I'll leave ENG if you move KIE -> MUN and HOL -> BEL"), Cicero judges the request unreasonable. Cicero moves its fleet back to de-escalate, but does not move its armies.
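The feedback loop described in this answer (the dialogue changes Cicero's prediction of England's move, which in turn changes Cicero's own best move) can be illustrated with a one-step expected-value choice in Python. Only the 85% and 90% figures come from the answer above; the action names and payoff numbers are invented for illustration, and Cicero's actual planner is a far more involved search, not this calculation.

```python
# Hypothetical payoffs for France: (France's action, England's action) -> value.
PAYOFF = {
    ("de-escalate", "withdraw"): 3,   # both sides free to fight elsewhere
    ("de-escalate", "attack"): -2,    # France gets stabbed
    ("attack EDI", "withdraw"): 1,
    ("attack EDI", "attack"): 0,
}

def expected_value(action: str, p_attack: float) -> float:
    """Expected payoff of France's action given P(England attacks)."""
    return (p_attack * PAYOFF[(action, "attack")]
            + (1 - p_attack) * PAYOFF[(action, "withdraw")])

def best_action(p_attack: float) -> str:
    """Pick France's action with the highest expected payoff."""
    actions = sorted({a for a, _ in PAYOFF})
    return max(actions, key=lambda a: expected_value(a, p_attack))

# England agreed: Cicero predicted England leaves ENG 85% of the time.
print(best_action(1 - 0.85))  # de-escalate
# England refused: Cicero predicted an attack 90% of the time.
print(best_action(0.90))      # attack EDI
```

With these made-up payoffs, de-escalating is worth about 2.25 versus 0.85 after the agreement, but about -1.5 versus 0.1 after the refusal, so the same planner flips its choice purely because of what was said.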