Three ways interpretability could be impactful

post by Arthur Conmy (arthur-conmy) · 2023-09-18T01:02:30.529Z · LW · GW · 8 comments

Contents

Three ways interpretability could be impactful
1. Test set interpretability: Finding alignment failures
2. Validation set interpretability: A better science of alignment.
3. Training set interpretability: Training models to be interpretable
Feasibility of interpretability
1. We have perfect access to models’ inputs, internals and outputs.
2. Crisp internal representations may be both natural and the most important objects of study.
3. Most useful techniques in the field can be systematized and automated.
Appendix
Figure 1’s chronology seems plausible through training.
Figure 1’s chronology seems plausible through scaling.
Figure 1 suggests that interpretability is only somewhat important.

Epistemic status: somewhat confident that overall these arguments show interpretability could be impactful, but not confident on any individual point.

Background: I wrote a draft of this post here after reading Charbel’s work [LW · GW]. Thanks to Charbel and several others’ feedback, the post was improved a lot: I would definitely recommend adversarial collaboration so thanks Charbel! I’m hoping to discuss interpretability’s impact further so posting now.

Three ways interpretability could be impactful

Just read the test and validation set parts if you have little time.

1. Test set interpretability: Finding alignment failures

What this is. We want to find alignment failures with interpretability that other forms of auditing do not find. Before deploying a model, we find inputs that seem to elicit new capabilities of the model. Either A) the model has correctly generalized to novel capabilities or B) the model is deceiving our evaluation process to produce convincing but false outputs. We then use our future interpretability tools to try to understand some of the computation the AI is doing to reach these new capabilities. We either become more confident that the AI has correctly generalized, or we’ve uncovered deceptive computation and we can retrain and/or provide robust evidence of misalignment to relevant actors.

Why this isn’t achievable with other methods. If a model can deceive our evaluation process, it could be very difficult to observe this deception with mere behavioral evals. See here [AF · GW].

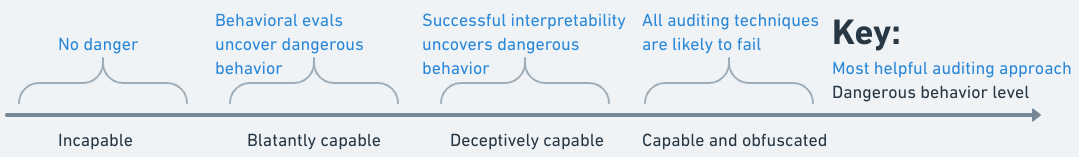

In my mind test set interpretability primarily targets a specific set of alignment failures, illustrated here:

In the appendix I outline my reasoning behind (interpretability’s role in) Figure 1.

2. Validation set interpretability: A better science of alignment.

What this is (thanks to Neel). We have little ground truth on whether our models are misaligned now or how far methods such as RLHF will scale. More generally, we understand little about how machine learning works, which limits our ability to reason about future systems. Interpretability could first and foremost provide evidence for what our alignment techniques are actually doing (e.g. interpreting RLHF reward models) and secondly give us a better evidence base for reasoning about deep learning. I think that Progress Measures for Grokking Via Mechanistic Interpretability has already somewhat changed people’s perspectives on how ML models select different algorithms (e.g. here, here [LW · GW]).

This differs from test set interpretability as it is broader and can be applied before testing potentially misaligned models, to steer the field towards better practices for alignment (Russell and Norvig’s validation/test distinction here may be helpful for analogy).

Why this isn’t achievable with other methods. If we want to understand how models work for safety-relevant end goals, it seems likely to me that interpretability is the best research direction to pursue. Most methods are merely behavioral and so provide limited ground truth, especially when we are uncertain about deception. For example, I think the existing work trying to make chain-of-thought faithful shows that naive prompting is likely insufficient to understand models’ reasoning. Non-behavioral methods such as science of deep learning approaches (e.g. singular learning theory, scaling laws) by default give high-level descriptions of neural network statistics such as loss or RLCT [LW · GW]. I don’t think these approaches are as likely as successful interpretability to answer questions about the internal computations of AIs. I think some other directions are worthwhile bets due to uncertainty and neglectedness, however.

3. Training set interpretability: Training models to be interpretable

(In my opinion this is less impactful than test and validation)

What this is. While test and validation set interpretability offer ways to check whether training procedures have generated misaligned models, they don’t work on actually aligning future models. We could use interpretability to train models by e.g. i) rewarding cases where the model’s internal and external reasoning processes are identical, or ii) adding terms into the loss function that incentivize simple and/or fast internal computation [AF · GW] (example paper that trains for interpretability).
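As a rough illustration of option ii), here is a minimal sketch of a loss with an extra term added. It assumes an L1 penalty on hidden activations as a stand-in for "simple internal computation"; that choice, the function names and the `penalty_weight` parameter are all hypothetical and are not taken from the linked post or paper.

```python
def mse(pred, target):
    """Ordinary task loss: mean squared error."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def l1_activation_penalty(activations):
    """Mean absolute activation. Assumed proxy: sparser activations are
    treated as 'simpler' internal computation."""
    return sum(abs(a) for a in activations) / len(activations)


def total_loss(pred, target, activations, penalty_weight=0.1):
    """Task loss plus a weighted interpretability-motivated term."""
    return mse(pred, target) + penalty_weight * l1_activation_penalty(activations)
```

Setting `penalty_weight` to zero recovers plain task training. Larger values trade task performance for whatever property the extra term rewards, and whether that property actually tracks interpretability is an empirical question.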

Why this **IS** achievable with other methods. I think training set interpretability is not as promising as test or validation set interpretability. This is because I think that there are lots of other ways to try to align models through training such as (AI-assisted) human feedback, process-based training or adversarial training, and so marginal benefits of interpretability are lower. However, ML is an empirical field so it’s hard to tell which techniques will be effective and which won’t be. Additionally, I’m not that strict on what counts as safety or what counts as interpretability. Few people are working on training for interpretability, so I’d be curious to see projects!

Feasibility of interpretability

The above use cases of interpretability don’t spell out why these are feasible goals. This section has three arguments for feasibility.

1. We have perfect access to models’ inputs, internals and outputs.

This has been stated many times before (Chris Olah’s 80k episode, Chris Olah's blog) but is worth reiterating. Consider a scientific field such as biology, where molecular biology and the discovery of DNA, Darwin’s discovery of natural selection, and gene editing were all developed by researchers working with extremely messy input, internal and output channels. In neural networks, we can always observe all input, internal and output channels, with no noise.
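To make the "perfect access" point concrete, here is a minimal sketch using a toy two-layer ReLU network in plain Python. The weights and the `forward_with_cache` helper are hypothetical and chosen only for illustration. The forward pass records every intermediate value, and repeating it reproduces them exactly.

```python
# Hypothetical toy weights: 2 inputs -> 3 ReLU hidden units -> 1 output.
W1 = [[0.5, -1.0], [1.5, 0.25], [-0.75, 1.0]]
B1 = [0.0, -0.5, 0.1]
W2 = [1.0, -2.0, 0.5]
B2 = 0.2


def forward_with_cache(x):
    """Run the toy network and record the input, every internal value and the output."""
    cache = {"input": list(x)}
    # Pre-activation of each hidden unit.
    pre = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, B1)]
    cache["hidden_pre"] = pre
    # ReLU non-linearity.
    hidden = [max(0.0, p) for p in pre]
    cache["hidden_post"] = hidden
    out = sum(w * h for w, h in zip(W2, hidden)) + B2
    cache["output"] = out
    return out, cache


out_a, cache_a = forward_with_cache([1.0, 2.0])
out_b, cache_b = forward_with_cache([1.0, 2.0])
# Same input, same internals: there is no measurement noise to average away.
assert cache_a == cache_b
```

Real models are vastly larger, but the same property holds: every activation is a number we can read out and reproduce, which is not true of a biological experiment.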

2. Crisp internal representations may be both natural and the most important objects of study.

LLMs are trained to model human text and to be useful to humans. In my opinion, both of these pressures push them to have representations close to human concepts (relevant papers include this and this, thanks to Charbel) when gradient descent is successful. Also, within reasonable limits of human ability, when model behaviors are general these are likely to be the behaviors we are most concerned by: these will be the capabilities that can reliably be missed by brute forcing prompt space. In my opinion, general model behaviors are more likely to correspond to crisp internal representations since it is more difficult for models to learn a large number of heuristics that collectively are general.

3. Most useful techniques in the field can be systematized and automated.

The path decomposition insight in Transformer Circuits, several forms of patching and gradient-based attribution methods are all examples of techniques that I would consider systematizable (rather than, for example, requiring some researcher ingenuity to figure out what new algorithm a model is implementing in every interpretability project).
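As an illustration of why patching is systematizable, here is a minimal sketch of one form of it (activation patching) on a toy network. The weights, function names and normalization are assumptions made for the example, not the procedure from any particular paper. The point is that the loop over components needs no per-project ingenuity.

```python
# Hypothetical toy weights: 2 inputs -> 3 ReLU hidden units -> 1 linear output.
W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W2 = [2.0, -1.0, 0.5]


def hidden_acts(x):
    """ReLU activations of the hidden layer."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W1]


def readout(hidden):
    """Linear map from hidden activations to the scalar output."""
    return sum(w * h for w, h in zip(W2, hidden))


def patch_effects(clean_x, corrupted_x):
    """For each hidden unit, copy its clean activation into the corrupted run
    and return the fraction of the clean-vs-corrupted output gap it restores."""
    clean_h, corrupt_h = hidden_acts(clean_x), hidden_acts(corrupted_x)
    clean_out, corrupt_out = readout(clean_h), readout(corrupt_h)
    gap = clean_out - corrupt_out
    if gap == 0:
        raise ValueError("clean and corrupted runs give the same output")
    effects = []
    for i in range(len(clean_h)):
        patched = list(corrupt_h)
        patched[i] = clean_h[i]  # swap in a single clean activation
        effects.append((readout(patched) - corrupt_out) / gap)
    return effects
```

For example, `patch_effects([1.0, 0.0], [0.0, 0.0])` attributes most of the output gap to the first hidden unit and none to the second. In a real transformer the "units" would be attention heads, MLP layers or residual stream positions, and the effects would not generally sum to one because downstream computation is non-linear.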

I think systematizing and automating interpretability techniques are some of the most promising directions for research. Note that this is contra Chris Olah (here). To me, automation is crucial for defense against obsoletion by the bitter lesson. I am wary of the “new scientific field” analogy for mechanistic interpretability, as sciences typically do not mature in less than a couple of decades. Automating interpretability could allow the field's speed to match capability progress (Carl Shulman discusses this race dynamic here).

Appendix

(Remarks on Figure 1)

Figure 1 from the main text

Inspired by this post [AF · GW] that extends Anthropic’s thoughts here, in Figure 1 consider the “dangerous behavior level” of an ML model. The dangerous behavior level measures a specific dangerous behavior that an AI could have, such as producing a novel virus, or designing software with hidden backdoors. Of course dangerous behavior level isn’t just one axis, and I haven’t added units (just like the post [AF · GW]).

Figure 1’s chronology seems plausible through training.

Why might all the stages in Figure 1 occur?

I think this is because gradient descent likely gradually implements and removes heuristics of differing sophistication through training. Therefore when capabilities first appear they are likely to be blatantly capable (not very robust and observable by humans from I/O channels only). Blatant capabilities, if they’re not trained away anyway, are likely to be spotted by comprehensive evals because of I/O observability. To me, the next level of dangerous and deceptive capability is likely when there are two possible generalizations to perform a novel task: i) performing novel tasks in a new environment and ii) producing an output which appears to be correct to humans. If the novel task is sufficiently difficult then NN inductive biases could prefer ii), due to a simplicity bias (i.e. a shortcut solution). A training process like those performed today wouldn’t distinguish between i) and ii). Finally, speculatively, significant situational awareness is required for the capable and obfuscated solution to be selected, and therefore the deceptively capable part of Figure 1 could be a sizable fraction of the dangerous capability axis. If capable and obfuscated dangerous capabilities emerge, I’m pessimistic about how helpful currently explored prosaic techniques will be.

Figure 1’s chronology seems plausible through scaling.

Similarly to the above point, I think that larger models likely have more capacity for higher dangerous behavior levels. I think that since there are many large models trained, more gradual dangerous capability level jumps are more likely than sudden leaps. I also personally think that punctuated equilibrium is currently the most useful model for progress from one frontier model to the next. This doesn't suggest that there's a single extreme discontinuity. However, it does suggest there are multiple, smaller discontinuities.

Figure 1 suggests that interpretability is only somewhat important.

None of this post claims that interpretability is more impactful than other alignment approaches (e.g. I think scalable oversight to improve upon RLHF, as well as dangerous capability evals, sound more neglected, and I’m personally working on interpretability since I think I have a fairly strong comparative advantage). I am just arguing that to me there is a compelling case for some interpretability research, contra Charbel’s post [LW · GW]. In fact, since dangerous capability evals are such a new research area, and it has only really become clear in 2023 that coordination between labs and policymaker interventions could make real impact, I would guess that this is a stronger bet for new alignment researchers compared to interpretability, absent other information.

Thanks to @Charbel-Raphaël [LW · GW] for prompting this, @Neel Nanda [LW · GW] for most of the ideas behind the validation set, as well as Lucius Bushnaq, Cody Rushing and Joseph Bloom for feedback. EDIT: after reading this, I found this [LW · GW] LW post which has a similar name and covers some of the same ideas, but differs in that there are more ideas explored.

8 comments


comment by Rohin Shah (rohinmshah) · 2023-09-18T15:37:54.163Z · LW(p) · GW(p)

This has been stated many times before (I believe I heard it in Chris Olah’s 80k episode first) but worth reiterating.

The reference I like best is https://colah.github.io/notes/interp-v-neuro/

comment by Miko Planas (miko-planas) · 2023-09-28T07:20:06.042Z · LW(p) · GW(p)

How ambitious would it be to primarily focus on interpretability as an independent researcher (or as an employee/research engineer)?

If I've inferred correctly, one of this article's goals is to increase the number of contributors in the space. I generally agree with how impactful interpretability can be, but I am a little more risk averse when it comes to it being my career path.

For context, I have just graduated and I have a decent amount of experience in Python and other technologies. With those skills, I was hoping to tackle multiple low-hanging fruits in interpretability immediately, yet I am not 100% convinced in giving my all to these since I worry about my job security and chances of success.

1.) The space is both niche and research-oriented, which might not help me land future technical roles or higher education.

2.) I've anecdotally observed that most entry-level roles and fellowships in the space look for people with prior engineering work experience that is not often seen from fresh graduates. It might be hard to contribute and sustain myself from the get-go.

Does this mean I should be doing heavy engineering work before entering the interpretability space? Would it be possible for me to do both without sacrificing quality? If I do give 100% of my time to interpretability, would I still have other engineering job options if the space does not progress?

I am highly interested in knowing your thoughts on how younger devs/researchers can contribute without having to worry about job security, getting paid, or lacking future engineering skills (e.g. deployment, dev work outside notebook environments, etc.).

Replies from: whitehatStoic

↑ comment by MiguelDev (whitehatStoic) · 2023-09-28T08:01:01.055Z · LW(p) · GW(p)

Did I understand your question correctly? Are you viewing interpretability work as a means to improve AI systems and their capabilities [? · GW]?

Replies from: miko-planas

↑ comment by Miko Planas (miko-planas) · 2023-10-02T08:11:22.444Z · LW(p) · GW(p)

I primarily see mechanistic interpretability as a potential path towards understanding how models develop capabilities and processes -- especially those that may represent misalignment. Hence, I view it as a means to monitor and align, not so much as to directly improve systems (unless of course we are able to include interpretability in the training loop).

comment by Charlie Steiner · 2023-09-18T18:19:02.169Z · LW(p) · GW(p)

(1) might work, but seems like a bad reason to exert a lot of effort. If we're in a game state where people are building dangerous AIs, stopping one such person so that they can re-try to build a non-dangerous AI (and hoping nobody else builds a dangerous AI in the meantime) is not really a strategy that works. Unless by sheer luck we're right at the middle of the logistic success curve, and we have a near-50/50 shot of getting a good AI on the next try.

(2) veers dangerously close to what I call "understanding-based safety," which is the idea that it's practical for humans to understand all the important safety properties of an AI, and once they do they'll be able to modify that AI to make it safe. I think this intuition is wrong. Understanding all the relevant properties is very unlikely even with lots more resources poured into interpretability, and there's too much handwaving about turning this understanding into safety.

This is also the sort of interpretability that's most useful for capabilities (in ways independent of how useful it is for alignment), though different people will have different cost-benefits here.

(3) is definitely interesting, but it's not a way that interpretability actually helps with alignment.

I actually do think interpretability can be a prerequisite for useful alignment technologies! I just think this post represents one part of the "standard view" on interpretability that I on-balance disagree with.

Replies from: arthur-conmy

↑ comment by Arthur Conmy (arthur-conmy) · 2023-09-19T12:32:11.935Z · LW(p) · GW(p)

I think that your comment on (1) is too pessimistic about the possibility of stopping deployment of a misaligned model. That would be a pretty massive result! I think this would have cultural or policy benefits that are pretty diverse, so I don't think I agree that this is always a losing strategy -- after revealing misalignment to relevant actors and preventing deployment, the space of options grows a lot.

I'm not sure anything I wrote in (2) is close to understanding all the important safety properties of an AI. For example, the grokking work doesn't explain all the inductive biases of transformers/Adam, but it has helped better reasoning about transformers/Adam. Is there something I'm missing?

On (3) I think that rewarding the correct mechanisms in models is basically an extension of process-based feedback. This may be infeasible or only be possible while applying lots of optimization pressure on a model's cognition (which would be worrying for the various list of lethalities reasons). Are these the reasons you're pessimistic about this, or something else?

I like your writing, but AFAIK you haven't written your thoughts on interp prerequisites for useful alignment - do you have any writing on this?

comment by Ed Li (ed-li) · 2023-09-18T19:37:14.558Z · LW(p) · GW(p)

Some questions about the feasibility section:

About (2): what would 'crisp internal representations' look like? I think this is really useful to know since we haven't figured this out for the brain or LLMs (e.g. Interpreting Neural Networks through the Polytope Lens [LW · GW]).

Moreover, the current methods for comparing the human brain's representations to those of ML models, such as RSA, are quite high-level, or at the very least do not reveal any useful insights for interpretability for either side (pls feel free to correct me). This question is not a point against mechanistic interpretability, however--granted the premise that LLMs are pressured to learn similar representations, interpretability research can both borrow from and shed light on how representations in the brain work (a question on which there is no agreement even after decades).

About (3): when we talk about automating interpretability, do we have a human or another model examining the research outputs? The fear is that due to our inherent limitation in how we reason about the world (e.g., we tend to make sense of something by positing some 'objects' and considering what 'relations' they have to each other), we may not be able to interpret what ML models do at a meaningfully high level. To be concrete, so far a lot of interpretability results are in the form of 'circuits' performing some peculiar task like modular addition, but how can we safely generalize from automation to some theory that has a wider scope? (I have in mind Predictive Processing for the brain but am not sure how good an example it is)

Replies from: arthur-conmy

↑ comment by Arthur Conmy (arthur-conmy) · 2023-09-19T12:42:02.409Z · LW(p) · GW(p)

By "crisp internal representations" I mean that

- The latent variables the model uses to perform tasks are latent variables that humans use. This is contra ideas that language models reason in completely alien ways. I agree that the two cited works are not particularly strong evidence : (

- The model uses these latent variables with a bias towards shorter algorithms (e.g. shallower paths in transformers). This is important as it's possible that even when performing really narrow tasks, models could use a very large number of (individually understandable) latent variables in long algorithms such that no human could feasibly understand what's going on.

I'm not sure what the end product of automated interpretability is. I think it would be pure speculation to make claims here.