Evolution and the Low Road to Nash

post by Aydin Mohseni (aydin-mohseni), ben_levinstein (benlev), Daniel Herrmann (Whispermute) · 2025-01-22T07:06:32.305Z · LW · GW · 2 comments


Solution concepts in game theory, like the Nash equilibrium and its refinements, are used in two key ways. Normatively, they prescribe how rational agents ought to behave. Descriptively, they propose how agents actually behave when interactions settle into equilibrium. The Nash equilibrium[1] underpins much of modern game theory and its applications in economics, political science, and evolutionary biology.

Here, we focus on the descriptive use of the concept in game theory. To do so, our first question must be: when should we expect players to play Nash equilibrium strategies in practice?

It turns out that trying to understand the theoretical conditions under which agents might play the Nash equilibrium of a game has led theorists down two very different paths:

- High Rationality Road (Epistemic Game Theory): Model the (sometimes infinite) hierarchy of beliefs of hyper-rational agents. Each agent reasons not just about the game, but about the others’ beliefs about the game, and so on, potentially ad infinitum.[2]

- Low Rationality Road (Evolutionary Game Theory): Model how boundedly rational agents (or populations) learn and adapt using dynamical systems. The focus is on how strategies evolve over time rather than on perfect reasoning.

Both roads yield conditions under which Nash equilibrium can be seen as a plausible outcome of strategic interactions. However, they start from very different assumptions about how real agents reason and learn.

In this post, we’ll briefly touch on the high-rationality approach of epistemic game theory, then focus on the low-rationality approach of evolutionary game theory, showing how adaptive processes provide a rich picture of the conditions under which agents might converge to Nash-equilibrium play.

What is a Nash equilibrium?

Let's briefly review the concept of a Nash equilibrium so we can understand these two approaches.[3] For simplicity of explanation, we consider the definition for the restricted setting of two-player, symmetric, normal form games.

A Nash equilibrium (NE) in a two-player game is a pair of strategies $(s_1^*, s_2^*)$ such that neither player can strictly improve their payoff by unilaterally changing their own strategy. Formally, if $u_i$ is player $i$’s payoff function, then:

$(s_1^*, s_2^*)$ is a Nash equilibrium if $u_1(s_1^*, s_2^*) \ge u_1(s_1, s_2^*)$ and $u_2(s_1^*, s_2^*) \ge u_2(s_1^*, s_2)$ for all feasible alternative strategies $s_1, s_2$.

In other words, at a Nash equilibrium, each player is best-responding to the other’s strategy.

If all these inequalities are strict whenever $s_i \neq s_i^*$, we call it a strict Nash equilibrium. If some payoffs are equal (i.e., players are indifferent), we call it a weak Nash equilibrium.
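The definition above can be checked mechanically for pure strategies. The following is a minimal sketch, not a general solver: it only inspects pure-strategy profiles, and the function name `classify_pure_profiles` and both example payoff matrices are hypothetical illustrations rather than anything taken from the post.

```python
from itertools import product

def classify_pure_profiles(U1, U2):
    """Return {(i, j): 'strict' | 'weak'} for every pure-strategy Nash equilibrium.

    U1[i][j] and U2[i][j] are the payoffs to players 1 and 2 when
    player 1 plays row i and player 2 plays column j.
    """
    n_rows, n_cols = len(U1), len(U1[0])
    result = {}
    for i, j in product(range(n_rows), range(n_cols)):
        # Payoff change from every possible unilateral deviation.
        gains_1 = [U1[k][j] - U1[i][j] for k in range(n_rows) if k != i]
        gains_2 = [U2[i][k] - U2[i][j] for k in range(n_cols) if k != j]
        gains = gains_1 + gains_2
        if all(g <= 0 for g in gains):  # nobody can strictly improve
            result[(i, j)] = "strict" if all(g < 0 for g in gains) else "weak"
    return result

# Hypothetical driving game: 0 = left, 1 = right; matching sides pays 1.
driving = [[1, 0], [0, 1]]
print(classify_pure_profiles(driving, driving))

# Hypothetical two-player public goods game: 0 = contribute, 1 = defect.
pg_row = [[2, 0], [3, 1]]  # row player's payoffs
pg_col = [[2, 3], [0, 1]]  # column player's payoffs
print(classify_pure_profiles(pg_row, pg_col))
```

Under these assumed payoffs, both coordinated driving profiles come out as strict equilibria, while mutual contribution in the public goods game is not an equilibrium at all, because a defector gains by deviating.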

The conceptual beauty of the NE is that it is a fixed point of the game under unilateral best response, and—as Nash proved in his 1950 thesis—at least one such fixed point is guaranteed to exist for any finite game.[4]

Examples: everyone driving on the right side of the road is a strict NE—no one gains by deviating, and any unilateral switch would be strictly worse. In Rock–Paper–Scissors, playing each move with 1/3 probability is a weak NE; you can’t do better by unilaterally changing your strategy, but you won’t do worse. By contrast, universal cooperation in a public goods game—as in the cases of pollution control, nuclear non-proliferation, or a pause on the AI arms race—is not an NE: even if everyone else contributes, one defector can free-ride and strictly improve their payoff.

So, what are the conditions under which we should expect real agents to actually end up at an NE?

The High Rationality Road (Epistemic Game Theory)

One context in which we would expect Nash equilibrium to arise is when players are highly rational and possess perfect reasoning along with full knowledge of each other’s rationality. This is the realm of epistemic game theory, where we can use epistemic logics to model hierarchies of beliefs: I know that you know that I know... and so on.

Under specific conditions—e.g., everyone is Bayesian rational, they have common priors, and it’s common knowledge that everyone meets these conditions—epistemic game theory demonstrates that players can deduce and play a Nash equilibrium.[5]

In reality, however, most agents—whether arms-race decision-makers, corporations, or individuals—do not meet these stringent requirements. They often face cognitive limits, hold incomplete or conflicting beliefs, or lack a common prior altogether. In such settings, the “high-rationality” framework struggles to explain key phenomena: for instance, social norms often emerge without deliberate reasoning from first principles; blog comment threads (even on LessWrong) rarely assume a shared understanding of rationality among participants; and individuals signaling on social media operate without identical models of their social network’s structure or goals.

These real-world cases point to the need for a different approach.

The Low Rationality Road (Evolutionary Game Theory)

In their foundational work, von Neumann and Morgenstern envisioned a path to simplifying strategic behavior by examining systems with large populations:

Only after the theory for moderate numbers of participants has been satisfactorily developed will it be possible to decide whether extremely great numbers of participants simplify the situation… We share the hope… that such simplifications will indeed occur…

—von Neumann and Morgenstern (1944, p. 14), Theory of Games and Economic Behavior

Nash himself later proposed a population-level, low rationality interpretation of his solution concept:

We shall now take up the “mass-action” interpretation of equilibrium points… It is unnecessary to assume that the participants have full knowledge of the total structure of the game, or the ability and inclination to go through any complex reasoning processes. But the participants are supposed to accumulate empirical information on the relative advantages of the various pure strategies at their disposal.

To be more detailed, we assume that there is a population (in the sense of statistics) of participants for each position of the game. Let us also assume that the “average playing” of the game involves n participants selected at random from the n populations, and that there is a stable average frequency with which each pure strategy is employed by the “average member” of the appropriate population.

—Nash (1950, p. 21), Non-cooperative Games

In this view, equilibrium strategies emerge not from rationality and common knowledge, but through population-level learning and adaptation.

The low rationality approach, as captured by evolutionary game theory, realizes von Neumann and Morgenstern's hope, and develops Nash's idea. It applies when agents adapt by trial & error, imitation, reinforcement, and various other boundedly rational processes of hill climbing. Over time, strategies that perform better tend to get amplified; those that do worse diminish. This perspective is often formalized via dynamical systems.[6]

While this evolutionary approach provides a powerful lens for understanding strategic behavior, it also reveals the limitations of the Nash equilibrium as a descriptive tool.

The Replicator Dynamics

"The replicator equation is the first and most important game dynamics studied in connection with evolutionary game theory."

—Cressman and Tao (2014, p. 1081) The replicator equation and other game dynamics

To explore how Nash equilibrium fares under evolutionary dynamics, we need a concrete model of population adaptation. We can use the replicator dynamics.[7] Its defining principle is simple yet powerful: strategies that earn above-average payoffs grow in proportion, while those that earn below-average payoffs shrink in proportion. Mathematically, for strategy $i$ with average payoff $f_i$, its growth rate is proportional to $f_i - \bar{f}$, where $\bar{f}$ is the average payoff in the whole population. Writing $x_i$ for the share of the population playing strategy $i$, this gives

$$\dot{x}_i = x_i \, (f_i - \bar{f}).$$

It’s a simple but remarkably universal model. The replicator dynamics is provably equivalent to a wide range of evolutionary dynamics[8] and provides a description of the average behavior (technically, the mean field dynamics) of a wide range of distinct stochastic processes. For example:

- Imitation of success: Agents copy strategies that perform better than theirs (Hofbauer & Sigmund, 1998, ch. 7).

- Pairwise proportional imitation: Agents switch strategies based on comparative payoffs (Weibull, 1995, ch. 5).

- Reinforcement learning: Agents upweight the probability of actions that yielded higher payoffs in the past (Borgers & Sarin, 1997).

- Bayesian inference: The discrete-time version of the replicator equation even has a very nice interpretation in Bayesian terms (Harper, 2009).[9]
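The replicator principle described above (above-average strategies grow, below-average strategies shrink) can be sketched numerically. This is a rough illustration using forward-Euler integration, which is one of several possible discretizations; the function names and the example matrix are assumptions made here for illustration.

```python
def payoffs(A, x):
    """Expected payoff of each pure strategy against population state x."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]

def replicator_step(A, x, dt=0.01):
    """One forward-Euler step of dx_i/dt = x_i * (f_i - average payoff)."""
    f = payoffs(A, x)
    f_bar = sum(x_i * f_i for x_i, f_i in zip(x, f))
    return [x_i + dt * x_i * (f_i - f_bar) for x_i, f_i in zip(x, f)]

def simulate(A, x0, steps=5000, dt=0.01):
    """Iterate the Euler step and return the final population state."""
    x = list(x0)
    for _ in range(steps):
        x = replicator_step(A, x, dt)
    return x

# Hypothetical game in which strategy 0 always earns 2 and strategy 1 earns 1.
dominance_game = [[2, 2], [1, 1]]
final_state = simulate(dominance_game, [0.1, 0.9])
print(final_state)
```

In this assumed game the dominant strategy starts at a 10% share and ends up taking over almost the entire population, while the shares continue to sum to one.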

If Nash equilibrium is indeed the “right” solution in many contexts, we might hope these adaptive processes converge to it—at least sometimes. Let’s see how that plays out.

Example of an Error of Commission for Weak Nash

Consider this 2×2 symmetric game:

Nash Equilibria

There are two NE: the states “all-A” and “all-B.”

If both players are playing A, then neither can improve their payoffs by changing their strategy, but neither will be worse off—this is a weak Nash equilibrium.

If both players are playing B, neither can improve their payoffs by switching, and they will be worse off if they do so—this is a strict Nash equilibrium.

Stability Under Replicator Dynamics

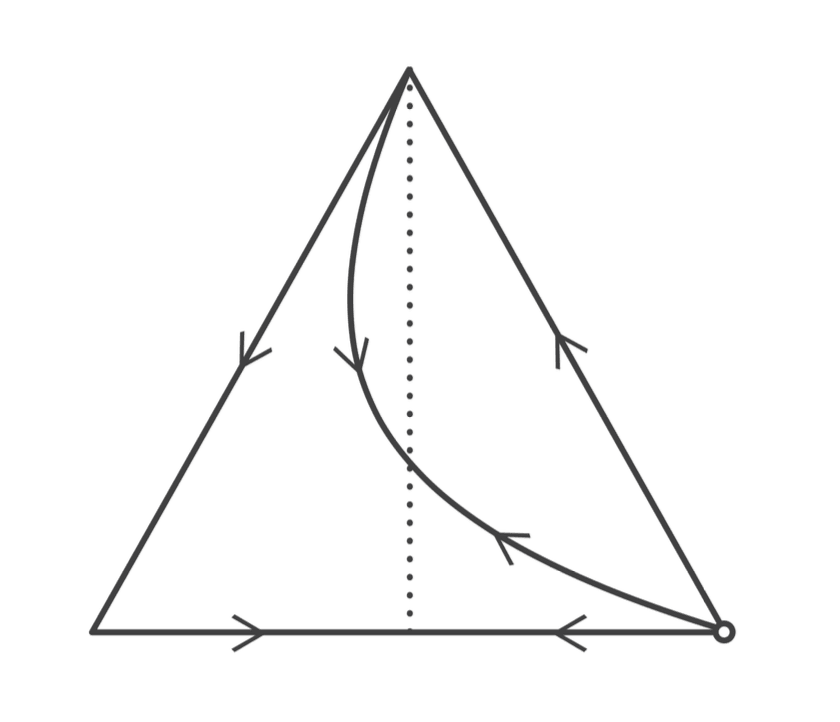

Suppose everyone currently plays A. A small mutant group playing B does strictly better (they earn 1 while A-players earn 0). That deviation invades and spreads, showing all-A is unstable under the replicator dynamics while all-B is stable.[10]

A phase diagram for replicator dynamics would show:

where A is unstable and B is stable and attracting. Under the replicator dynamics, the population converges to the all-B NE from any interior initial conditions.

Lesson: Weak NE can make errors of commission—picking out states that are unstable against even a small invasion of alternative strategies.
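The payoff matrix for this game is not reproduced in the text above, so the sketch below uses an assumed matrix chosen only to match the description: all-A is a weak NE, all-B is a strict NE, and B earns 1 where A earns 0. With that caveat, a one-dimensional Euler simulation of the share of B-players illustrates the instability of all-A.

```python
# ASSUMED payoffs, picked to be consistent with the description in the text.
# Rows are the focal player's strategy, columns the opponent's.
A = [[0.0, 0.0],   # A vs A, A vs B
     [0.0, 1.0]]   # B vs A, B vs B

def share_of_B_after(y0, steps=20000, dt=0.01):
    """Evolve the share y of B-players under two-strategy replicator dynamics."""
    y = y0
    for _ in range(steps):
        f_A = A[0][0] * (1 - y) + A[0][1] * y  # payoff to A in the current mix
        f_B = A[1][0] * (1 - y) + A[1][1] * y  # payoff to B in the current mix
        y += dt * y * (1 - y) * (f_B - f_A)
    return y

print(share_of_B_after(0.0))   # all-A with no mutants stays put
print(share_of_B_after(0.01))  # a 1% invasion of B takes over
```

The first call shows that all-A is a rest point of the dynamics, and the second shows that it is not a stable one: under these assumed payoffs, even a small initial share of B grows until B has essentially displaced A.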

The strict Nash equilibrium, however, provides the correct prediction here. Is this always so?

Example of an Error of Omission for Strict Nash

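Now consider the Hawk-Dove game below (entries are the row player's payoffs against the column player):

| | Hawk | Dove |
|---|---|---|
| Hawk | −1 | 2 |
| Dove | 0 | 1 |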

Nash Equilibria

There are three NE: two pure strategy NE where one agent plays Hawk and the other Dove; and one mixed strategy NE where both players randomize.

Since we are considering single-population dynamics (where all individuals are drawn from the same population and interact symmetrically), the asymmetric equilibria are not relevant. This leaves only the mixed strategy NE with a population comprising ½ Hawks and ½ Doves.

Importantly, mixed strategy Nash equilibria are weak Nash equilibria, because players are indifferent between strategies at equilibrium.[11] Hence, the mixed-strategy NE cannot be a strict NE.

Stability Under Replicator Dynamics

If the entire population plays Hawk, a small mutant group of Doves will perform better. This is because Doves score 0 against Hawks, while Hawks score –1 against each other. Consequently, Doves will invade, and their proportion will grow. If the entire population plays Dove, a small mutant group of Hawks will outperform the population. Hawks score 2 against Doves, while Doves score 1 against each other. Hawks will invade, and their proportion will grow.

A phase diagram for replicator dynamics would show:

where all-Hawk and all-Dove are unstable, and the mixed state—½ Hawk, ½ Dove—is stable and attracting. Under replicator dynamics, the population converges to that mixed NE from any interior initial conditions.

Moral: Strict NE can make errors of omission, excluding even the unique outcome to which the underlying dynamics of learning, imitation, or selection converge.
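The convergence claim for Hawk-Dove can be checked by hand from the payoffs quoted above (Hawk earns −1 against Hawk and 2 against Dove; Dove earns 0 against Hawk and 1 against Dove). The symbols $x$ (the share of Hawks), $f_H$, $f_D$, and $\bar{f}$ are notation introduced here for the derivation:

$$
\begin{aligned}
f_H(x) &= -x + 2(1-x) = 2 - 3x, \\
f_D(x) &= 0 \cdot x + 1 \cdot (1-x) = 1 - x, \\
\bar{f}(x) &= x\, f_H(x) + (1-x)\, f_D(x), \\
\dot{x} &= x\,\bigl(f_H(x) - \bar{f}(x)\bigr) = x(1-x)\bigl(f_H(x) - f_D(x)\bigr) = x(1-x)(1-2x).
\end{aligned}
$$

The rest points are $x = 0$, $x = \tfrac{1}{2}$, and $x = 1$. Since $\dot{x} > 0$ for $0 < x < \tfrac{1}{2}$ and $\dot{x} < 0$ for $\tfrac{1}{2} < x < 1$, every interior starting point moves toward $x = \tfrac{1}{2}$, which matches the ½ Hawk, ½ Dove state described in the text.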

The Evolutionarily Stable Strategy (ESS)

Seeing how weak and strict NE each err in certain contexts, theorists introduced the Evolutionarily Stable Strategy (ESS).[12] In a symmetric two-player game, where $u(s, s')$ denotes the payoff to playing $s$ against $s'$, a strategy $s^*$ is an ESS if it satisfies two conditions for any alternative strategy $s \neq s^*$:

Nash condition: $u(s^*, s^*) \ge u(s, s^*)$;

This ensures that the incumbent strategy is a Nash equilibrium; it must already be a best response to itself.

Local stability condition: if $u(s^*, s^*) = u(s, s^*)$, then $u(s^*, s) > u(s, s)$.

If a mutant strategy $s$ performs equally well against the incumbent $s^*$, the incumbent must outperform the mutant when both are playing against the mutant. This ensures the equilibrium is not invaded by a small population of mutants.

The ESS concept strengthens Nash equilibrium by requiring stability against small perturbations. The strategy $s^*$ must be optimal against itself and resist invasion by any mutant strategy $s$.

Revisiting the Examples

- The First Game with Two Nash Equilibria:

In the game where “all-A” and “all-B” are Nash equilibria, only “all-B” satisfies the ESS conditions. The “all-A” equilibrium fails the local stability condition: B-mutants do exactly as well as A-players against the incumbent A, but earn strictly more than A-players when matched against other B-mutants.

- Hawk-Dove Game:

In the Hawk-Dove game, the mixed-strategy Nash equilibrium[13] is the unique ESS. It satisfies the Nash condition, and any small mutant deviation (a slight imbalance in the Hawk-Dove ratio) does not lead to an invasion because the mixed population corrects itself dynamically. (Can you see why?)[14]

Moral: ESS refines the Nash equilibrium by incorporating local stability under invasion by small populations of mutants. It eliminates weak NE that fail to withstand small perturbations while retaining other weak NE that are in fact stable under such perturbations.
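The two ESS conditions can be tested numerically for two-strategy games. The sketch below is a sanity check rather than a proof: it only tries mutants on a finite grid, and the function names, the tolerance, and the matrix for the first game are assumptions introduced here (the Hawk-Dove payoffs are the ones quoted in the text).

```python
def u(A, p, q):
    """Expected payoff to mixed strategy p when playing against mixed strategy q."""
    return sum(p[i] * A[i][j] * q[j] for i in range(len(p)) for j in range(len(q)))

def is_ess_2x2(A, p, grid=200, tol=1e-9):
    """Check the Nash and local stability conditions for p against a grid of mutants."""
    for k in range(grid + 1):
        q = [k / grid, 1 - k / grid]
        if abs(q[0] - p[0]) < tol:
            continue  # the mutant must differ from the incumbent
        diff = u(A, p, p) - u(A, q, p)
        if diff < -tol:
            return False  # Nash condition fails: the mutant does better against p
        if abs(diff) <= tol and u(A, p, q) - u(A, q, q) <= tol:
            return False  # stability condition fails: p does not beat q against q
    return True

hawk_dove = [[-1, 2], [0, 1]]   # payoffs quoted in the text
first_game = [[0, 0], [0, 1]]   # ASSUMED matrix consistent with the description

print(is_ess_2x2(hawk_dove, [0.5, 0.5]))   # mixed state
print(is_ess_2x2(hawk_dove, [1.0, 0.0]))   # all-Hawk
print(is_ess_2x2(first_game, [1.0, 0.0]))  # all-A
print(is_ess_2x2(first_game, [0.0, 1.0]))  # all-B
```

On these inputs the check agrees with the discussion above: the ½ Hawk, ½ Dove mix and all-B pass, while all-Hawk and all-A do not.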

Even ESS Falls Short: Cycles, Spirals, and Chaos

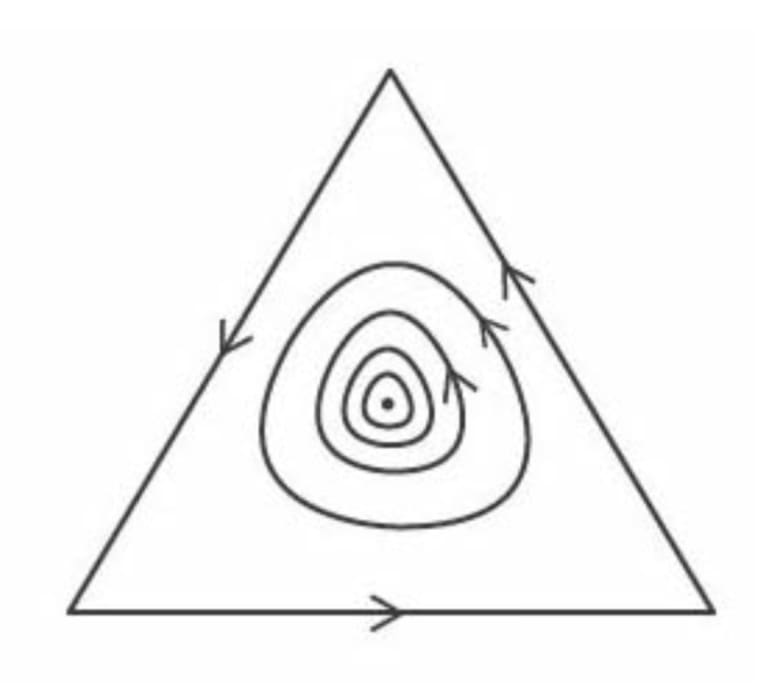

You might hope ESS is the final fix, but real dynamical processes can be more subtle and complicated. Some games generate collectively (but not individually) stable sets of states, limit cycles, spirals, or even chaotic trajectories.

For instance, above we have a set of states that are collectively stable (technically, Lyapunov stable) but are not individually evolutionarily stable.[15]

Example: this is generically the case for genetical evolution when there are many neutral variants of alleles at various loci.

We see certain “rock-paper-scissors” variants produce cyclic dynamics, where the population never settles. Each strategy “preys” on the next, and the population composition keeps rotating.[16] No static equilibrium—Nash or ESS—can capture a continually cycling population.

Example: side-blotched lizards exhibit three male morphs in a rock-paper-scissors dynamic: orange males dominate blue males, blue males outcompete yellow males through cooperation, and yellow males exploit orange males by sneaking. Their population proportions cycle as predicted.
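The cycling behaviour can be illustrated with the standard zero-sum Rock-Paper-Scissors game (win = +1, loss = −1, tie = 0), which is an assumption made here and not a model of the lizard system. In that game the product of the three population shares is a conserved quantity of the replicator dynamics, so an interior trajectory orbits the center without ever reaching it. The sketch uses a hand-written fourth-order Runge-Kutta step; all names are illustrative.

```python
def rps_velocity(x):
    """Replicator vector field for zero-sum Rock-Paper-Scissors."""
    r, p, s = x
    f = [s - p, r - s, p - r]  # rock beats scissors, paper beats rock, scissors beats paper
    f_bar = sum(xi * fi for xi, fi in zip(x, f))  # zero in exact arithmetic
    return [xi * (fi - f_bar) for xi, fi in zip(x, f)]

def rk4_step(x, dt):
    """One classical Runge-Kutta step for the vector field above."""
    def shifted(v, k, h):
        return [vi + h * ki for vi, ki in zip(v, k)]
    k1 = rps_velocity(x)
    k2 = rps_velocity(shifted(x, k1, dt / 2))
    k3 = rps_velocity(shifted(x, k2, dt / 2))
    k4 = rps_velocity(shifted(x, k3, dt))
    return [xi + dt / 6 * (a + 2 * b + 2 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]

def distance_to_center(x):
    return sum((xi - 1 / 3) ** 2 for xi in x) ** 0.5

x = [0.6, 0.3, 0.1]
product_start = x[0] * x[1] * x[2]
min_distance = distance_to_center(x)
for _ in range(10000):
    x = rk4_step(x, 0.01)
    min_distance = min(min_distance, distance_to_center(x))
product_end = x[0] * x[1] * x[2]

print(product_start, product_end, min_distance)
```

The product of the shares stays essentially unchanged over the run, and the distance to the uniform mix never shrinks toward zero, which is the numerical signature of a population that keeps rotating instead of settling.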

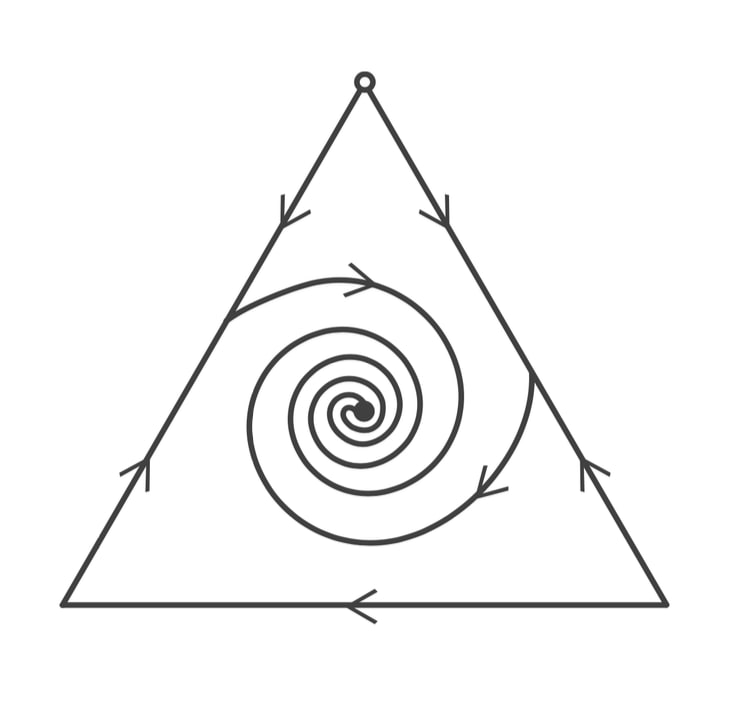

In other cases, there can be a stable interior point in the replicator dynamics that isn’t an ESS or strict Nash: trajectories reliably spiral inward and settle, but the usual equilibrium definitions don’t classify that point as stable or invulnerable to mutants.

Example: such a dynamic can occur in certain cases where heterozygotes outperform homozygotes (e.g., sickle cell anemia dynamics in malaria-endemic regions)—the homozygotes for either allele are less fit, but the population spirals inward to a heterozygote-dominant equilibrium.

Moral: These examples illustrate how concepts like NE or ESS fail to capture the richness of real-world dynamics. Instead, dynamical tools like Lyapunov stability, orbital stability, and many others are needed to understand adaptation and stability in complex systems.

An obvious weakness of the game-theoretic approach to evolution is that it places great emphasis on equilibrium states, whereas evolution is a process of continuous, or at least periodic, change. It is, of course, mathematically easier to analyse equilibria than trajectories of change. There are, however, two situations in which game theory models force us to think about change as well as constancy. The first is that a game may not have an ESS, and hence the population cycles indefinitely. . .

The second situation. . . is when, as is often the case, a game has more than one ESS. Then, in order to account for the present state of the population, one has to allow for initial conditions—that is, for the state of the ancestral population.

—Maynard Smith (1982, p. 8) Evolution and the Theory of Games

Deeper Reading

For more on the limitations of equilibrium concepts in evolutionary game theory, see: Mohseni (2019) "The Limitations of Equilibrium Concepts in Evolutionary Game Theory," Huttegger & Zollman (2010) "The Limits of ESS Methodology," and Bomze's 1983 and 1995 papers from which much of the content of this post was drawn. For a poster of the classification of 3x3 symmetric normal form games under the replicator dynamics, see here. And for work on chaos in evolutionary games, see Skyrms (1992), Wagner (2012), and Sanders et al. (2018).

Conclusion: Beyond Static Equilibria

The quest to understand Nash equilibrium has followed two paths:

- High Rationality Road (Epistemic Game Theory): Starting with hyper-rational agents who act rationally and share substantive knowledge.

- Low Rationality Road (Evolutionary Game Theory): Starting with boundedly rational agents who learn and adapt through various dynamic processes.

The low-rationality approach shows that Nash equilibrium and its refinements, like the ESS, can arise naturally—but not always:

- Weak Nash includes unstable outcomes.

- Strict Nash excludes stable mixed states.

- ESS misses spirals, cycles, and chaos.

The key insight from the low-rationality road is clear: static equilibrium concepts fail to capture the richness of complex dynamics. Populations may stabilize, oscillate, spiral inward, or follow intricate trajectories. In fields like AI or ecology, such non-equilibrium behaviors are often the norm. To understand strategy, learning, and adaptation, we have to focus on the dynamic processes driving them—the process, not just the endpoint, is what matters.

- ^

For other posts on LessWrong explaining the concept of Nash equilibrium, see here [LW · GW]; for calculating Nash equilibrium in some simple games, see here [LW · GW] and here [LW · GW]; for the relationship between Nash equilibria and Schelling points, see here [LW · GW]; for the existence of arbitrarily bad Nash equilibria, see here [LW · GW]; and for experimental extensions of game theory, see here [LW · GW] and here [LW · GW].

- ^

For key texts exploring the conditions under which rational agents might play the Nash equilibrium of a game, see Aumann & Brandenburger (1995) "The Epistemic Conditions for Nash Equilibrium," Stalnaker (1996) "Knowledge, Belief and Counterfactual Reasoning in Games," and Lewis (1969) Convention.

- ^

For treatments of classical game theory, we recommend A Course in Game Theory (1994) by Osborne and Rubinstein for advanced undergraduates and graduate students, and Game Theory (1991) by Fudenberg and Tirole for graduate students and researchers.

- ^

Nash’s original proof assumed finite players and strategies, but subsequent work has extended his result. The existence of a Nash equilibrium can be guaranteed as long as the mixed strategy space is compact and convex, and the best response function is continuous, enabling the application of fixed-point theorems such as Brouwer’s or Kakutani’s.

- ^

It remains unclear, however, which NE rational agents will choose when there are several. This is known as the problem of equilibrium selection. In such cases, we have to look to theories invoking further considerations (risk and payoff dominance, Schelling points, signaling and pre-play coordination, mechanism design, evolutionary approaches, etc.) for suggestions on how this might occur.

- ^

For treatments of evolutionary game theory, we recommend: Sandholm’s Population Games and Evolutionary Dynamics (2010) for a great unifying survey; Hofbauer and Sigmund’s Evolutionary Games and Population Dynamics (1998) for mathematical foundations; Cressman’s Evolutionary Dynamics and Extensive Form Games (2003) for the dynamics of extensive-form games; Weibull’s Evolutionary Game Theory (1995) for an emphasis on stability concepts; and Nowak’s Evolutionary Dynamics: Exploring the Equations of Life (2006) for a biological focus.

- ^

Formulated by Taylor and Jonker (1978) in “Evolutionarily stable strategies and game dynamics,” Mathematical Biosciences, 40(1–2), 145–156.

- ^

These include the Lotka-Volterra equations, game dynamical equations, and a version of the Price equation, with the quasispecies equation and adaptive dynamics emerging as special cases. See Page & Nowak's 2002 "Unifying evolutionary dynamics" for the derivations of these relationships.

- ^

This connection was originally derived by Shalizi in the appendix of his “Dynamics of Bayesian Updating with Dependent Data and Misspecified Models” (2009).

- ^

- ^

Can you see why?

- ^

Introduced by Maynard Smith & Price in “The logic of animal conflict” (1973).

- ^

In an evolutionary context, mixed strategy equilibria can be interpreted in two distinct ways: in terms of a population of heterogeneous agents playing pure strategies (with probabilities representing the population proportion of each type) or in terms of a population of homogeneous agents playing mixed strategies (with probabilities representing dispositions or beliefs about others' strategies). For a discussion of the interpretations of mixed strategies and mixed strategy equilibria, see "On the normative status of mixed strategies" (2019) by Zollman, and §3.2 of A Course in Game Theory (1994) by Osborne and Rubinstein.

- ^

This is qualitatively the same dynamic used to explain the existence of 1:1 and extraordinary sex ratios. See Fisher's The Genetical Theory of Natural Selection (1930, pp. 137–141) and Hamilton's "Extraordinary sex ratios" (1967).

- ^

See Thomas' "On evolutionarily stable sets" (1985) for a set-valued generalization of the ESS that has some (imperfect) success in such cases.

- ^

See this neat video on the mating strategies of male side-blotched lizards.

2 comments


comment by Kenny2 (kenny-2) · 2025-01-22T23:38:59.436Z · LW(p) · GW(p)

I think this shows clearly that dynamics don't always lead to the same things as equilibrium rationality concepts. If someone is already convinced that the dynamics matter, this leads naturally to the thought that the equilibrium concepts are missing something important. But I think that at least some discussions of rationality (including some on this site) seem like they might be committed to some sort of "high road" idea under which it really is the equilibrium concept that is core to rationality, and that dynamics were at best a suggestive motivation. (I think I see this in some of the discussions of something like functional decision theory as "that decision theory that a perfectly rational agent would opt to self-program", but with the idea that you don't actually need to go through some process of self-re-programming to get there.)

Is there an argument to convince those people that the dynamics really are relevant to rationality itself, and not just to predictions of how certain naturalistic groups of limited agents will come to behave in their various local optima?

reply by Aydin Mohseni (aydin-mohseni) · 2025-01-24T04:45:56.163Z · LW(p) · GW(p)

That’s exactly right. Results showing that low-rationality agents don’t always converge to a Nash equilibrium (NE) do not provide a compelling argument against the thesis that high-rationality agents do or should converge to NE. As you suggest, to address this question, one should directly model high-rationality agents and analyze their behavior.

We’d love to write another post on the high-rationality road at some point and would greatly appreciate your input!

Aumann & Brandenburger (1995), “The Epistemic Conditions for Nash Equilibrium,” and Stalnaker (1996), “Knowledge, Belief, and Counterfactual Reasoning in Games,” provide good analyses of the conditions for NE play in strategic games of complete and perfect information.

For games of incomplete information, Kalai and Lehrer (1993), “Rational Learning Leads to Nash Equilibrium,” demonstrate that when rational agents are uncertain about one another’s types, but their priors are mutually absolutely continuous, Bayesian learning guarantees in-the-limit convergence to Nash play in repeated games. These results establish a generous range of conditions—mutual knowledge of rationality and mutual absolute continuity of priors—that ensure convergence to a Nash equilibrium.

However, there are subtle limitations to this result. Foster & Young (2001), “On the Impossibility of Predicting the Behavior of Rational Agents,” show that in near-zero-sum games with imperfect information, agents cannot learn to predict one another’s actions and, as a result, do not converge to Nash play. In such games, mutual absolute continuity of priors cannot be satisfied.