AI alignment as a translation problem

post by Roman Leventov · 2024-02-05T14:14:15.060Z · LW · GW · 2 comments

Yet another way to think about the alignment problem

Consider two learning agents (humans or AIs) that have made different measurements of some system and have different interests (concerns) regarding how the system should be evolved or managed (controlled). Let’s set aside the discussion of bargaining power and the wider game both agents play and focus on how the agents can agree about a specific way of controlling the system, assuming the agents have to respect each other’s interests.

For such an agreement to happen, both agents must see the plan for controlling the system of interest as beneficial from the perspective of their models and decision theories[1]. This means that they can find a shared model that they both see as a generalisation of their respective models, at least in everything that pertains to describing the system of interest, their respective interests regarding the system, and control of the system.

Gödel’s theorems prevent agents from completely “knowing” their own generalisation method[2], so the only way for agents to arrive at such a shared understanding is to present each other with some symbols (i.e., classical information) about the system of interest, learn from them and incorporate this knowledge into their models (i.e., generalise from the previous versions of their models)[3], and check whether they can come up with decisions (plans) regarding the system of interest that they both estimate as net positive.

Note that, without loss of generality, the above process could be interleaved with actual control according to some decisions and plans deemed “good enough” or sufficiently low-risk after an initial episode of alignment and collective deliberation. After the agents collect new observations, they could have another alignment episode, then more action, and so on.
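The episode-then-action loop described above can be sketched as a toy program. This is only an illustration under strong assumptions, not a proposal for how real agents would work: a “model” is reduced to a dictionary of variable estimates, “generalising” is reduced to adopting the other agent’s measurements for variables one has not measured oneself, and the farmer and ecologist, their value functions, and all numbers are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Model = Dict[str, float]  # assumed toy representation: variable name -> estimate


@dataclass
class Agent:
    name: str
    value: Callable[[Model, str], float]  # estimated net benefit of a plan
    measurements: Model = field(default_factory=dict)  # observed by this agent itself
    model: Model = field(default_factory=dict)  # own measurements plus learned ones

    def observe(self, obs: Model) -> None:
        self.measurements.update(obs)
        self.model.update(obs)

    def message(self) -> Model:
        """Symbols (classical information) shared with the other agent."""
        return dict(self.measurements)

    def learn(self, symbols: Model) -> None:
        """Crude stand-in for generalisation: adopt what we have not measured."""
        for key, val in symbols.items():
            if key not in self.measurements:
                self.model[key] = val


def alignment_episode(a: Agent, b: Agent, plans: List[str]) -> Optional[str]:
    """Exchange symbols, then return the first plan both agents rate net positive."""
    msg_a, msg_b = a.message(), b.message()
    a.learn(msg_b)
    b.learn(msg_a)
    for plan in plans:
        if a.value(a.model, plan) > 0 and b.value(b.model, plan) > 0:
            return plan
    return None  # no agreement in this episode


def deliberate_and_act(
    a: Agent, b: Agent, plans: List[str], rounds: List[Tuple[Model, Model]]
) -> List[Optional[str]]:
    """Interleave new observations with alignment episodes, one per round."""
    chosen = []
    for obs_a, obs_b in rounds:
        a.observe(obs_a)
        b.observe(obs_b)
        chosen.append(alignment_episode(a, b, plans))
    return chosen


def farmer_value(model: Model, plan: str) -> float:
    return {"heavy": 3.0, "moderate": 1.0}[plan]  # more irrigation, more yield


def ecologist_value(model: Model, plan: str) -> float:
    draw = {"heavy": 1.0, "moderate": 0.5}[plan]
    # Without a water-level estimate the ecologist assumes the worst case (0.0).
    return model.get("water_level", 0.0) - draw


def demo() -> List[Optional[str]]:
    farmer = Agent("farmer", farmer_value)
    ecologist = Agent("ecologist", ecologist_value)
    rounds = [
        ({"water_level": 0.8}, {"fish_stock": 0.6}),
        ({"water_level": 1.2}, {}),
        ({"water_level": 0.3}, {}),
    ]
    return deliberate_and_act(farmer, ecologist, ["heavy", "moderate"], rounds)


if __name__ == "__main__":
    print(demo())  # ['moderate', 'heavy', None]
```

In this toy, the ecologist would reject every plan before hearing the farmer’s water-level measurement, so agreement only becomes possible through the exchange of symbols, and the agreed plan changes as new observations arrive.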

Scalable oversight, weak-to-strong generalisation, and interpretability

To me, the above description of the alignment problem demonstrates that “scalable oversight and weak-to-strong generalisation” largely misconceive this problem, except insofar as oversight is the implementation of the power balance between humans and AIs[4] or the prevention of deception (I factored both of these aspects out of the above picture).

Yes, there will always be something that humans perceive about their systems of interest (including themselves) that AIs won’t perceive, but this gap looks to be on track to shrink rapidly (consider glasses or even contact lenses that record all visual and audio input to assist people). There will probably be much more information about systems of interest that AIs perceive and humans don’t. So, rather than a weak-to-strong generalisation problem, we have a (bidirectional) AI-human translation problem, with more emphasis on humans learning from AIs than on AIs learning from humans.

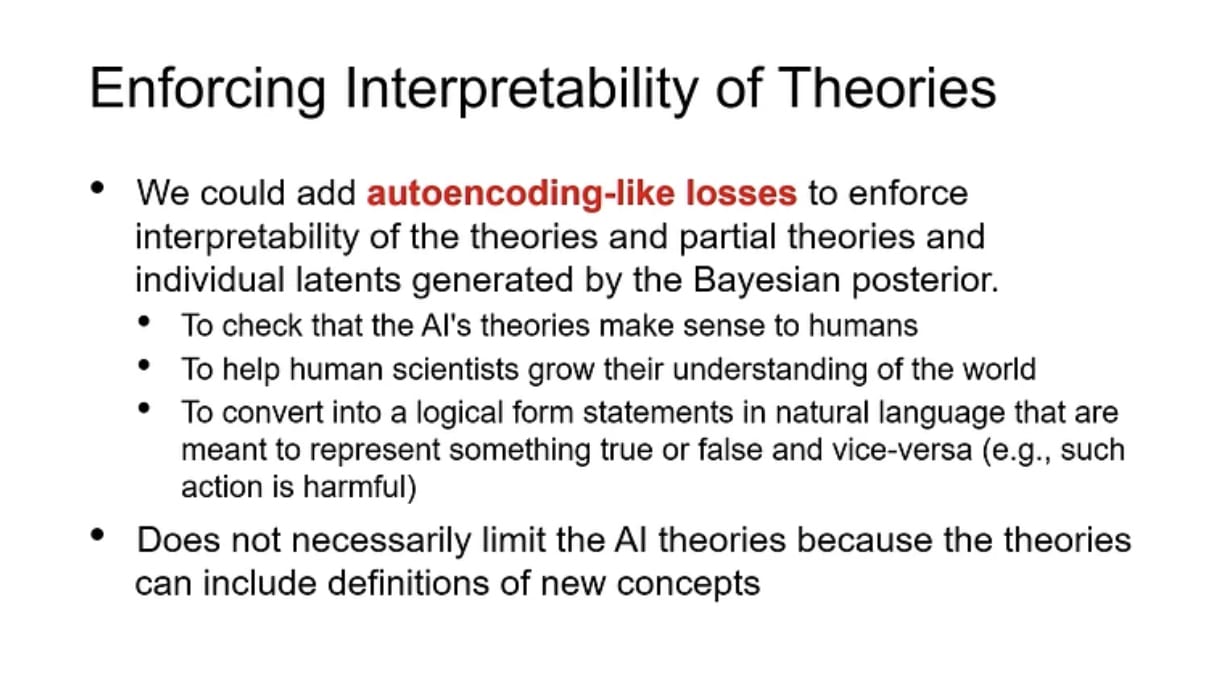

This is also why I think Lucius Bushnaq’s “reverse engineering” agenda is stronger than the mechanistic interpretability agenda. Both are a kind of “translation from AIs to humans”, but the former aims at (human) understanding of AIs’ “native” concepts, whereas the latter, at least to some degree, tries to impose the pre-existing human belief structure onto AIs.

More “natural” and brain-like AI

The “translation” problem statement presented above also implies that if we care about the generalisation trajectory of the civilisation, we would do better to equip AIs with our inductive biases (i.e., generalisation methods) than to build AIs with fewer of our inductive biases and then hope to align them through weak-to-strong generalisation.

Most generally, this makes me think that “Natural AI” (a.k.a. brain-like AI) is one of the most important of the theoretical, “long shot” alignment agendas.

More incrementally, and “prosaically” if you wish, I think AI companies should implement “the consciousness prior” in the reasoning of their systems. Bengio has touched on this in his recent talk:

I won’t even mention other inductive biases that neuroscientists, cognitive scientists, and psychologists argue people have, such as the algorithms that people use in making decisions, the kinds of models that they learn, the role of emotions in learning and decision-making, etc. because I don’t know the full landscape well enough. Bengio’s “consciousness prior” is the one that I’m aware of and that seems to me completely uncontroversial because the structure of human language is strong evidence for it. If you disagree with me on this or know other human inductive biases that are very uncontroversial, please leave a comment.

Since merely “preaching” to AI companies that they should adopt more human-like approaches to AI won’t help, the prosaic call to action on this front is to develop algorithms, ML architectures, and systems that make the adoption of more human-like AI economically attractive. (This is also a prerequisite for making it politically feasible to discuss turning this guideline into a policy if needed.)

One particularly promising lever is showing enterprises that, by adopting AI that samples compact causal models that explain the data, they can mine new knowledge and scale decision intelligence across organisational levels more cheaply. Two AIs trained on different data and for different narrow purposes can come up with causal explanations (i.e., new knowledge) at the intersection of their competence without re-training: they only need to combine the causal models that they sample and back-test the combinations for support (which is very fast).
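A minimal sketch of the “combine and back-test” idea, under assumptions that are mine rather than taken from any particular system: each AI’s sampled causal model is just a set of (cause, effect) edges, new knowledge is any two-step causal path that needs one edge from each model, and “support” is crudely approximated by a Pearson correlation threshold on shared records. The variable names and data are hypothetical, and a real back-test would need a proper causal or predictive test rather than correlation.

```python
from itertools import product
from math import sqrt
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]  # (cause, effect)


def derived_links(edges_a: Set[Edge], edges_b: Set[Edge]) -> Set[Edge]:
    """Indirect links cause -> shared variable -> effect that need both models."""
    combined = edges_a | edges_b
    links: Set[Edge] = set()
    for first, second in product(combined, repeat=2):
        # Skip paths that a single model could already have produced on its own.
        same_source = {first, second} <= edges_a or {first, second} <= edges_b
        if first[1] == second[0] and first[0] != second[1] and not same_source:
            links.add((first[0], second[1]))
    return links - combined


def pearson(xs: List[float], ys: List[float]) -> float:
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def back_test(
    links: Set[Edge], data: Dict[str, List[float]], threshold: float = 0.8
) -> Dict[Edge, bool]:
    """Assumed support check: |correlation| between cause and effect >= threshold."""
    return {
        (cause, effect): abs(pearson(data[cause], data[effect])) >= threshold
        for cause, effect in links
    }


# Hypothetical models sampled by two narrowly trained AIs.
weather_ai = {("rain", "soil_moisture")}
agronomy_ai = {("soil_moisture", "crop_yield"), ("soil_moisture", "pest_count")}

# Hypothetical records available for back-testing.
records = {
    "rain": [1.0, 2.0, 3.0, 4.0, 5.0],
    "crop_yield": [1.0, 2.1, 2.4, 4.2, 5.0],
    "pest_count": [3.0, 1.0, 4.0, 1.0, 5.0],
}

candidates = derived_links(weather_ai, agronomy_ai)
support = back_test(candidates, records)
```

Here the combination proposes two candidate links, from rain to crop yield and from rain to pest count, and the back-test keeps only the first. Neither AI is re-trained; the only work is recombining edges and scanning existing records.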

Another interesting approach that might apply to sequential (time-series) datasets with a single context/stream is to train a foundation state-space model (SSM) to predict the time-series data, run the model over the data, and treat the hierarchy of state vectors at the end of this run as a hierarchy of “possible causal circuits in superposition”, which could then be recombined with causal graphs/circuits from different AIs or with ones learned by the same AI from different contexts.

Finally, we could create an external (system-level) incentive for employing such AIs at organisations: enable cross-organisational learning in the space of causal models.

Cross-posted from my Substack.

- ^

For brevity, I will call “model(s) and decision theories” used by an agent simply a model of an agent.

- ^

- ^

Let’s also set aside the concern that agents try to manipulate each other’s beliefs, and assume the trust and deception issue is treated separately.

- ^

However, it doesn’t seem so to me: if humans can oversee AIs and control the method of this oversight, it seems to me they must already have complete power over AIs.

2 comments

Comments sorted by top scores.

comment by Algon · 2024-02-05T17:09:47.118Z · LW(p) · GW(p)

I'd never actually heard of Quine's indeterminacy of translation before. It sounds interesting, so thank you for that.

But I don't understand how Quine's argument leads to the next step. It sounds discrete, absolute, and has no effect on the outward behaviour of entities. And later you use some statements about the difficulty of translation between entities which sure sound continuous to me.

↑ comment by Roman Leventov · 2024-02-05T18:44:25.611Z · LW(p) · GW(p)

If I understand correctly, by "discreteness" you mean that it simply says that one agent can know neither the meaning of symbols used by another agent nor the "degree" of grokking the meaning. Just cannot say anything.

This is correct, but the underlying reason why this is correct is the same as why solipsism or the simulation hypothesis cannot be disproven (or proven!).

So yeah, I think there is no tangible relationship to the alignment problem, except that it corroborates that we couldn't have 100% (literally, probability=1) certainty of alignment or safety of whatever we create, but it was obvious even without this philosophical argument.

So, I removed that paragraph about Quine's argument from the post.