Belief-conditional things - things that only exist when you believe in them

post by Jan (jan-2) · 2021-12-25T10:49:38.203Z · LW · GW · 3 comments

This is a link post for https://universalprior.substack.com/p/belief-conditional-things-things

Contents

🎵 Do you believe... 🎵

🎵 ... in love after love 🎵

Gödel's trick

No, really, there is a dragon in my garage.

Trolling your landlord.

Closing thoughts.

TL;DR: A little winter story about making beliefs come true. Epistemic status: this is >90% written to amuse. Not 100% though.

🎵 Do you believe... 🎵

It is the season, and I just finished watching the 2003 classic "Elf" with Will Ferrell as "Buddy", a human raised among elves at the North Pole who travels to New York to reunite with his biological father and to save Christmas. The movie got me thinking.

There is an interesting trope in the movie that's used as a story device: the magical powers of Santa are predicated on humans believing in Santa. Thus, Santa falls in the same category as some magical creatures, the value of fiat money and, arguably, god (as explained in the Book of Jezuboad and discussed here). To spread some magical fuzzy winter feelings, I want to share some thoughts about what I call "belief-conditional things". I think I'm on to something here.

🎵 ... in love after love 🎵

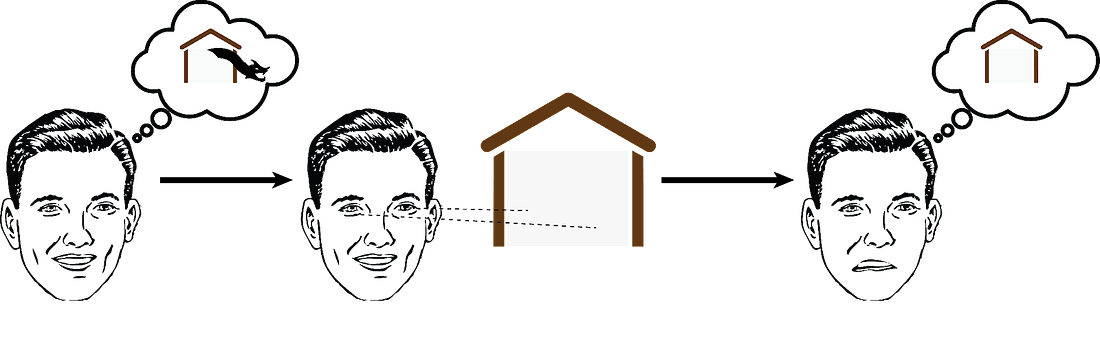

What is the relationship between belief and reality? Some like to think in terms of "territory and map": the territory is everything out there. The map is a lossy mental representation of the territory. We know that the map is not the territory; sometimes, we are confused about how things work, sometimes we treat complex systems as black boxes, and some things are not represented in our map.

The entire scientific endeavor might be summarized as reducing discrepancies between map and territory. Traditionally, you would update the map according to what you see in the environment.

But if your metric is symmetric (and why would you call it a metric otherwise?) then it's equally effective to change the territory.

Indeed, I think this is what motivates a substantial part of moral philosophy (and, for that matter, larping): Instead of accepting the world as it is, we conjure our ideas about how things ought to be into reality. Thus, it's not unheard of that reality is affected by our beliefs. This opens the door for...

Gödel's trick

When thinking about existence and belief, it is natural to think of epistemic logic - the subfield of the philosophy of knowledge that formalizes how we form and maintain beliefs. This approach allows us to derive, under reasonable axioms, that belief and knowledge collapse. Formally, this means that believing in the existence of Santa Claus implies knowledge of the existence of Santa Claus. And then, by using axiom T, we immediately arrive at the existence of Santa Claus.
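Written out in symbols, the chain might look as follows. This is only a sketch in assumed notation: $B$ and $K$ stand for the usual belief and knowledge operators of epistemic logic, and $\mathrm{Santa}(x)$ is a hypothetical predicate introduced purely for illustration.

```latex
\begin{align*}
B\,\exists x\,\mathrm{Santa}(x)
  &\;\Rightarrow\; K\,\exists x\,\mathrm{Santa}(x)
  && \text{(belief and knowledge collapse)} \\
K\,\exists x\,\mathrm{Santa}(x)
  &\;\Rightarrow\; \exists x\,\mathrm{Santa}(x)
  && \text{(axiom T: } K\varphi \rightarrow \varphi \text{)}
\end{align*}
```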

(Please don't send me messages about how this is wrong. I want to believe.)

I presume that this must be essentially equivalent to Gödel's ontological proof, although I (same as everybody else) haven't checked the details. Therefore, I will call this "Gödel's trick"; and epistemic logic turns out to be extremely useful once again.

No, really, there is a dragon in my garage.

Any good rationalist might now counter: "Well, yes, belief might imply existence. But you don't actually believe in X. You only believe that you believe in X." The classic example is the "dragon in the garage" of Carl Sagan:

"A fire-breathing dragon lives in my garage"

Suppose I seriously make such an assertion to you. Surely you'd want to check it out, see for yourself. There have been innumerable stories of dragons over the centuries, but no real evidence. What an opportunity!

"Show me," you say.

I lead you to my garage. You look inside and see a ladder, empty paint cans, an old tricycle — but no dragon.

"Where's the dragon?" you ask.

"Oh, she's right here," I reply, waving vaguely. "I neglected to mention that she's an invisible dragon."

You propose spreading flour on the floor of the garage to capture the dragon's footprints.

"Good idea," I say, "but this dragon floats in the air."

Then you'll use an infrared sensor to detect the invisible fire.

"Good idea, but the invisible fire is also heatless."

You'll spray-paint the dragon and make her visible.

"Good idea, but she's an incorporeal dragon and the paint won't stick."

And so on. I counter every physical test you propose with a special explanation of why it won't work.

Carl Sagan argues that the person claiming a dragon in their garage doesn't actually believe this. Their ability to explain away (ahead of time) all contradicting experimental evidence reveals that they accurately model the territory. They know exactly what to expect, given that there is no dragon in the garage. They might claim to believe X, but they only believe that they believe X.

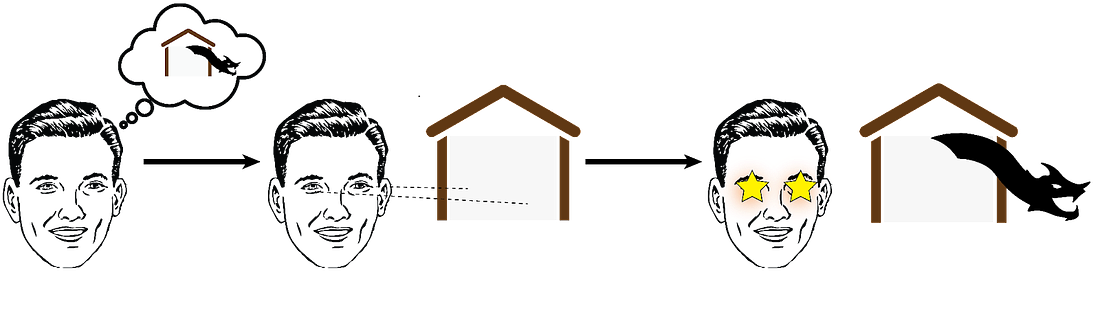

But for Gödel's trick to work, we actually need to believe X. Thus we have to stick our necks out and make falsifiable predictions. Saying, "Come to my garage and see the dragon. She's really cool!" is not the hard part. The hard part is paying rent.

Trolling your landlord.

"Paying rent" in this context comes from the rationalist strategy of "making your beliefs pay rent". Beliefs are only allowed to stay in your head if they "pay" by making predictions about the world. If those predictions cash out, the belief is allowed to stay. If not, it will be kicked out. If you continuously claim that there is a dragon in your garage, and you make predictions accordingly, eventually the belief won't be able to pay anymore. Then you won't be able to use Gödel's trick.

But what is the "money" here? What is used to pay the rent? And what determines how much "money" the belief has?

In a Bayesian framework, beliefs are akin to probabilities: each has a number between zero and one associated with it, indicating how strong the belief is. Changing these numbers in a systematic way (one that incorporates all the evidence optimally) is called Bayesian belief updating. On the road to believing X into existence, you might suffer severe setbacks. If you naively follow Bayes, these setbacks will reduce your belief until you end up not believing in the belief-conditional thing X - thus creating a self-fulfilling prophecy.
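To see how quickly naive updating can evict a belief, here is a minimal sketch of Bayes' rule applied to a run of setbacks. The starting credence of 0.9 and the likelihoods 0.2 and 0.8 are made-up numbers chosen for illustration, and `bayes_update` is a hypothetical helper, not anything from a particular library.

```python
def bayes_update(prior: float, p_e_given_x: float, p_e_given_not_x: float) -> float:
    """Return the posterior P(X | E) via Bayes' rule."""
    # Total probability of seeing the evidence E at all.
    evidence = p_e_given_x * prior + p_e_given_not_x * (1.0 - prior)
    return p_e_given_x * prior / evidence


# Assumption: we start out fairly convinced that X exists.
belief = 0.9
history = [belief]

# Assumption: each setback is four times as likely if X is false (0.8)
# as it is if X is true (0.2).
for _ in range(5):
    belief = bayes_update(belief, p_e_given_x=0.2, p_e_given_not_x=0.8)
    history.append(belief)

for step, value in enumerate(history):
    print(f"after {step} setbacks: P(X) = {value:.4f}")
```

Under these assumed numbers, every setback divides the odds in favor of X by four, so the belief falls behind on rent after only a handful of disappointments.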

Luckily, we are now in the territory of mathematics, a field known to be highly susceptible to shenanigans. Most mathematicians are trollable. (Admittedly, not every prior is trollable in this way, but last time I checked, the choice of prior is personal. So the power to believe is ours.) It turns out that any Bayesian hit to our belief in X through new evidence can be counteracted by thinking about ways that X might be true after all. In particular, any sentence A -> X that you can prove (like "Santa Claus exists and used to be a reclusive toymaker in the Far North" therefore "Santa Claus exists") eliminates probability mass from not-X. Repeated sufficiently many times, this procedure should allow us to believe in X strongly enough to believe X into existence - again, a self-fulfilling prophecy. Dreaming about X and picturing it with as many details as possible is thus a necessary step for making it real.
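The mechanism can be sketched with a toy model of four possible worlds, one for each truth assignment to the pair (A, X). Everything here is an assumption for illustration: the prior numbers are invented, the believer is taken to be logically uncertain enough to put some mass on "A and not X", and `learn_implication` is a hypothetical helper. Proving A -> X rules out exactly the world where A holds and X fails, and renormalizing the remaining mass pushes P(X) up.

```python
def prob_x(worlds: dict) -> float:
    """Total probability of the worlds in which X is true."""
    return sum(p for (a, x), p in worlds.items() if x)


def learn_implication(worlds: dict) -> dict:
    """Condition on the proven sentence A -> X.

    The only world inconsistent with A -> X is (A true, X false),
    so drop it and renormalize what is left.
    """
    kept = {(a, x): p for (a, x), p in worlds.items() if not (a and not x)}
    total = sum(kept.values())
    return {world: p / total for world, p in kept.items()}


# Assumption: a made-up prior over (A, X) that has not yet noticed A -> X.
prior = {
    (True, True): 0.1,
    (True, False): 0.3,
    (False, True): 0.1,
    (False, False): 0.5,
}

before = prob_x(prior)
posterior = learn_implication(prior)
after = prob_x(posterior)

print(f"P(X) before the proof: {before:.3f}")
print(f"P(X) after the proof:  {after:.3f}")
```

With these numbers the belief in X rises from 0.2 to 2/7 without a single new observation. The size of the boost depends entirely on how much mass the prior had parked on "A and not X", which is why this is a sketch of the idea rather than a recipe.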

Thus, through several loops and twists, we arrive at what every child can already tell you: Making belief-conditional things exist only requires us to believe strongly, picture the thing in as many details as possible, and act as if it really can exist. Easy as that.

Closing thoughts.

Through the examples I have listed in the first section, I might have deceived you into exclusively thinking about fantastic and mystical creatures. But those are (by far) not the only examples of things that can only exist when we believe in them. Mars colonies, curing cancer, and peace on earth are additional examples - in fact, most good things in the future are belief-conditional. Following the argument outlined above, we can believe a belief-conditional thing X into existence by doing the following: changing the territory rather than our map, providing as many viable avenues to achieve X as possible, and sticking our necks out to perform tests while actually believing in the possibility of X. There might be more things we can do, and most of the ones I list might not apply in all situations. But this is for you to find out.

Thank you, dear reader. Happy holidays.

3 comments


comment by Gunnar_Zarncke · 2021-12-27T21:54:36.719Z

I have an intuition that predictive processing https://slatestarcodex.com/2017/09/05/book-review-surfing-uncertainty/ is somehow related. We predict something to exist and thereby bring it about?

comment by Gunnar_Zarncke · 2021-12-27T21:58:51.209Z

I posit that the ego is a belief-conditional thing.

comment by Jiro · 2021-12-26T17:32:31.513Z

Any good rationalist might now counter: “Well, yes, belief might imply existence. But you don’t actually believe in X. You only believe that you believe in X.”

No, a good rationalist would say "an inaccurate belief is still a type of belief, and only an accurate belief implies existence".