Experimentation with AI-generated images (VQGAN+CLIP) | Solarpunk airships fleeing a dragon

post by Kaj_Sotala · 2021-07-15T11:00:05.099Z · LW · GW · 4 comments

A few days ago I found the Twitter account @images_ai, which posts AI-generated images and links to these instructions for generating your own. I started playing around with it; some of my choice picks:

The first image I generated, “sci-fi heroes fighting fantasy heroes”

“cute catboys having a party”

Someone had figured out that if you add words like “unreal engine” to your prompt, you get more realistic graphics.

So here’s “sci-fi heroes fighting fantasy heroes trending on artstation | unreal engine”

“young sorcerer heiress of a cyberpunk corporation, with dragons on her side | unreal engine”

“the cat is over the moon|unreal engine”

“Ghosts of Saltmarsh”

wikiart16384 model, “Fully Automated Luxury Gay Space Communism:2 | unreal engine:1 | logo:-1”
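The weighted prompt above combines several sub-prompts separated by `|`, each with an optional `:weight` suffix (a negative weight steers the image away from that concept). As a minimal sketch of how such a string could be broken into text/weight pairs, assuming a default weight of 1 when none is given: the function name `parse_prompt` and the exact parsing rules here are illustrative assumptions, not necessarily what the notebook does.

```python
def parse_prompt(prompt):
    """Split 'a:2 | b | c:-1' into [(text, weight), ...].

    Hypothetical helper for illustration; a missing weight defaults to 1.0.
    """
    parts = []
    for chunk in prompt.split("|"):
        chunk = chunk.strip()
        if not chunk:
            continue  # ignore empty segments such as a trailing '|'
        text, sep, tail = chunk.rpartition(":")
        if sep:
            try:
                parts.append((text.strip(), float(tail)))
                continue
            except ValueError:
                pass  # the colon was part of the text, not a weight
        parts.append((chunk, 1.0))
    return parts


if __name__ == "__main__":
    example = "Fully Automated Luxury Gay Space Communism:2 | unreal engine:1 | logo:-1"
    for text, weight in parse_prompt(example):
        print(f"{weight:+.1f}  {text}")
```

Read this way, the prompt asks for the main phrase at double strength, "unreal engine" at normal strength, and pushes away from anything that looks like a logo.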

At this point I read a tweet suggesting that a 16:9 image ratio generally produces better results, so I switched to using that.

“solarpunk forest village | studio ghibli | trending on artstation”

“mountain expedition encounters stargate | full moon | matte painting”

“xcom fighting sectoids | matte painting”

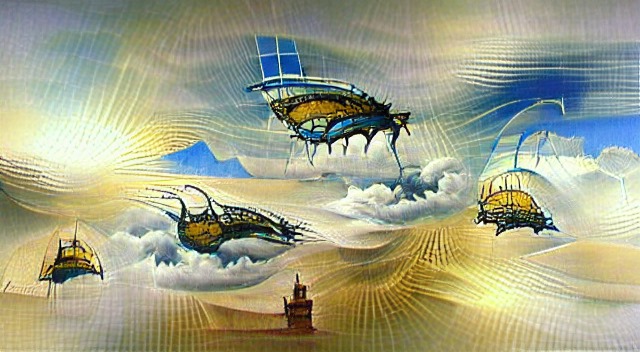

I decided to do some systematic experimentation: picking a starting prompt (“solarpunk airships fleeing a dragon”), fixing the random seed, and varying parts of the prompt to see how the results changed.

In retrospect the initial prompt wasn’t great: I’m not sure any of the pictures really incorporated the “fleeing” aspect, so I could have picked a prompt in which every word mattered. In general, this AI seems to be better at understanding individual words than it is at understanding sentences. Oh well.

All of these use seed 13039289688260605078 (no special significance; it’s what got randomly rolled for the first one so I kept using it), image size 640*360.
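For anyone wanting to repeat this kind of comparison, the setup can be sketched as a list of run configurations that share the seed and image size and differ only in the prompt. This is a minimal sketch: `build_runs` and the dictionary keys are hypothetical names, and the actual image generation step (which depends on the notebook being used) is deliberately left out.

```python
from itertools import product

# Values taken from the post; everything else below is illustrative.
SEED = 13039289688260605078
WIDTH, HEIGHT = 640, 360  # 16:9


def build_runs(subjects, styles):
    """Return one config per (subject, style) pair, all sharing the same seed.

    A style of None means the subject is used on its own.
    """
    runs = []
    for subject, style in product(subjects, styles):
        prompt = subject if style is None else f"{subject} | {style}"
        runs.append(
            {"prompt": prompt, "seed": SEED, "width": WIDTH, "height": HEIGHT}
        )
    return runs


if __name__ == "__main__":
    subjects = [
        "solarpunk airships fleeing a dragon",
        "cyberpunk trains fleeing a dragon",
    ]
    styles = [None, "studio ghibli", "trending on artstation"]
    for run in build_runs(subjects, styles):
        print(run["seed"], f'{run["width"]}x{run["height"]}', run["prompt"])
```

Keeping the seed constant means that differences between two images are more plausibly due to the prompt change than to a different random starting point.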

I don’t have anything interesting to conclude besides what you see from the images themselves. As I was posting these on Facebook, Marcello Herreshoff kindly joined in on the experimentation, coming up with additional prompts and generating pictures with them; I’ve credited his images where they appear. [EDIT: Marcello let me know that he hadn't remembered to fix the seed for his pictures, but they got similar enough to my pictures anyway that I never even suspected!]

“solarpunk airships fleeing a dragon”

“solarpunk airships fleeing a dragon | Dr. Seuss” (Marcello)

“solarpunk airships fleeing a dragon | Dr. Seuss | text:-1” (Marcello)

“solarpunk airships fleeing a dragon | paul klee” (Marcello)

“solarpunk airships fleeing a dragon | Salvador Dali” (Marcello)

“solarpunk airships fleeing a dragon | Ukiyo-e” (Marcello)

“solarpunk airships fleeing a dragon | van gogh” (Marcello)

“solarpunk airships fleeing a dragon in the style of h.r. giger”

“solarpunk airships fleeing a dragon in the style of h.r. giger | pastel colors”

“solarpunk airships fleeing a dragon in the style of my little pony friendship is magic”

“solarpunk airships fleeing a dragon | studio ghibli”

“solarpunk airships fleeing a dragon | trending on artstation”

“solarpunk airships fleeing a dragon | studio ghibli | trending on artstation”

“solarpunk airships fleeing a dragon | unreal engine | trending on artstation”

“solarpunk airships fleeing a dragon | watercolor”

“matte painting of solarpunk airships fleeing a dragon”

“matte painting of solarpunk airships fleeing a dragon | trending on artstation”

“matte painting of solarpunk airships fleeing a dragon in the style of h.r. giger | studio ghibli | unreal engine | trending on artstation”

“children’s storybook illustration of solarpunk airships fleeing a dragon”

“solarpunk airships fleeing an octopus | trending on artstation”

“airships fleeing a dragon | trending on artstation”

“airships fleeing a dragon | ps1 graphics”

“airships fleeing a dragon | SNES graphics”

“airships fleeing a nuclear blast | trending on artstation”

“airships | trending on artstation”

“solarpunk trains fleeing a dragon | trending on artstation”

“solarpunk trains fleeing a dragon | ursula vernon”

“cyberpunk airships fleeing a dragon | trending on artstation”

“cyberpunk trains fleeing a dragon | trending on artstation”

“cyberpunk trains fleeing a baby | trending on artstation”

4 comments

Comments sorted by top scores.

comment by philip_b (crabman) · 2021-07-15T12:53:00.479Z · LW(p) · GW(p)

That is super awesome and surprisingly good.

comment by gianlucatruda · 2021-07-20T16:10:42.771Z · LW(p) · GW(p)

I present to you VQGANCLIP's take on a Bob Ross painting of Bob Ross painting Bob Ross paintings 😂 This surpassed my wildest expectations!

comment by platers · 2021-07-15T15:12:08.496Z · LW(p) · GW(p)

These are very impressive! It looks like it gets the concepts, but lacks global coherency.

Could anyone comment on how far we are from results of similar quality to the training set? Can we expect better results just by scaling up the generator or CLIP?

↑ comment by gwern · 2021-07-15T15:55:06.582Z · LW(p) · GW(p)

Using CLIP is a pretty weird way to go. It's like using a CNN classifier to generate images: it can be done, but like a dog walking on its hind legs, the surprise is that it works at all.

If you think about how a contrastive loss works, it's perhaps less surprising why CLIP-guided images look the way they do, and do things like try to repeat an object many times: if you have a prompt like "Mickey Mouse", what could be even more Mickey-Mouse-y than Mickey Mouse tiled a dozen times? That surely maximizes its embedding encoding 'Mickey Mouse', and its distance from non-Disney-related image embeddings like, say, "dog" or "Empire State Building"! Whether you can really induce sharp coherent images from any scaled-up CLIP like ALIGN is unclear: contrastive losses just might not learn these things, and the generative model needs to do all the work. No matter how exquisitely accurately a contrastive model learns every detail about Mickey Mouse, it seems like it'd still be the case that a dozen Mickey Mouses tiled together is 'more Mickey-Mouse-y' than a single beautifully coherent Mickey Mouse.
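The argument above can be made concrete with a toy contrastive score: cosine similarities between one image embedding and several text embeddings, pushed through a softmax. The sketch below uses made-up 3-dimensional vectors, not real CLIP embeddings, and simply assumes (as the comment conjectures) that a tiled image embeds closer to the prompt than a single coherent one. The point is only that the score rewards alignment with the prompt and contains no term for global coherence.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def contrastive_score(image_emb, text_embs, target, temperature=0.07):
    """Softmax probability that the image matches text_embs[target]."""
    logits = [cosine(image_emb, t) / temperature for t in text_embs]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - top) for l in logits]
    return exps[target] / sum(exps)


# Hypothetical text embeddings: "Mickey Mouse", "dog", "Empire State Building".
TEXTS = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Hypothetical image embeddings (assumed for illustration, not measured).
COHERENT = [0.8, 0.4, 0.4]  # one Mickey plus background and other content
TILED = [1.0, 0.1, 0.1]     # Mickey repeated everywhere, little else

if __name__ == "__main__":
    print("coherent:", contrastive_score(COHERENT, TEXTS, target=0))
    print("tiled:   ", contrastive_score(TILED, TEXTS, target=0))
```

Under these assumed embeddings the tiled image scores higher, so an optimizer following this signal alone has no reason to prefer the coherent picture.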

(CLIP would still remain useful as an extremely handy way to take any image generative model and quickly turn it into a text-editable model, or possibly a text->image model. One could probably do better by an explicit approach like DALL-E, but CLIP is available now and DALL-E is not.)

If you are interested in SOTA image generation quality rather than weird CLIP hacks, you should look at:

- "VQGAN: Taming Transformers for High-Resolution Image Synthesis", Esser et al 2020

- DALL-E/CogView

- "SR3: Image Super-Resolution via Iterative Refinement", Saharia et al 2021 / "Diffusion Models Beat GANs on Image Synthesis", Dhariwal & Nichol 2021

- DenseFlow

- "Alias-Free GAN (Generative Adversarial Networks)", Karras et al 2021