My version of Simulacra Levels

post by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-26T15:50:38.782Z · LW · GW · 15 comments

People act as if there are four truth-values: True, False, Cringe, and Based.

--David Udell (paraphrase) [LW(p) · GW(p)]

This post lays out my own version of the Simulacra Levels idea [LW · GW]. Be warned, apparently it is importantly different [LW(p) · GW(p)] from the original.

| | TRUTH | TEAMS |
|---|---|---|
| Deontological | Level 1: "Is it true?" | Level 3: "Do I support the associated team?" |
| Consequentialist | Level 2: "How does it influence others' beliefs?" | Level 4: "How does it influence others' support for various teams?" |

Statements you make are categorized as Level 1, 2, 3, or 4 depending on which of the above questions were most responsible for your choice to make the statement.
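One way to make the grid concrete is as a lookup table. This is only a rough sketch: the names `Stance`, `Subject`, `simulacra_level`, and `dominant_level` are made up for illustration, and the idea of attaching numeric weights to "how responsible each question was" is an assumption added here, not something the framework specifies.

```python
from enum import Enum
from typing import Mapping


class Stance(Enum):
    """Row of the grid: how the statement was chosen (hypothetical names)."""
    DEONTOLOGICAL = "deontological"
    CONSEQUENTIALIST = "consequentialist"


class Subject(Enum):
    """Column of the grid: what the choice was about (hypothetical names)."""
    TRUTH = "truth"
    TEAMS = "teams"


# The 2x2 grid from the table, as a lookup.
LEVELS = {
    (Stance.DEONTOLOGICAL, Subject.TRUTH): 1,     # "Is it true?"
    (Stance.CONSEQUENTIALIST, Subject.TRUTH): 2,  # "How does it influence others' beliefs?"
    (Stance.DEONTOLOGICAL, Subject.TEAMS): 3,     # "Do I support the associated team?"
    (Stance.CONSEQUENTIALIST, Subject.TEAMS): 4,  # "How does it influence others' support for teams?"
}


def simulacra_level(stance: Stance, subject: Subject) -> int:
    """Return the level for one cell of the grid."""
    return LEVELS[(stance, subject)]


def dominant_level(responsibility: Mapping[int, float]) -> int:
    """Pick the level whose question was most responsible for the statement.

    `responsibility` maps level -> an illustrative weight (an assumption of
    this sketch). Ties go to whichever level appears first in the mapping.
    """
    return max(responsibility, key=lambda level: responsibility[level])
```

For example, `dominant_level({1: 0.2, 3: 0.7, 4: 0.1})` picks Level 3, matching the rule that a statement is categorized by whichever question did most of the work.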

When you say that P, pay attention to the thought processes that caused you to make that statement instead of saying nothing or not-P:

- Were you alternating between imagining that P, and imagining that not-P, and noticing lots more implausibilities and inconsistencies-with-your-evidence when you imagined that not-P? Seems like you were at Level 1.

- Were you imagining the effects of your utterance on your audience, e.g. imagining that they'd increase their credence that P and act accordingly? Seems like you were at Level 2.

- Were you imagining other people saying that P, and/or imagining other people saying that not-P, and noticing that the first group of people seem cool and funny and virtuous and likeable and forces-for-good-in-the-world, and that the second group of people seems annoying, obnoxious, evil, or harmful? (The imagined people could be real, or amorphous archetypes.) Seems like you were at Level 3.

- Were you imagining the effects of your utterance on your audience, e.g. imagining that they'd associate you more with some groups/archetypes and less with other groups/archetypes? Seems like you were at Level 4.

Paradigmatic examples of lies (including white lies such as "mmm your homemade hummus tastes great") are Level 2. A lot of social media activity seems to be level 3. Politicians on campaign spend most of their waking moments on level 4.

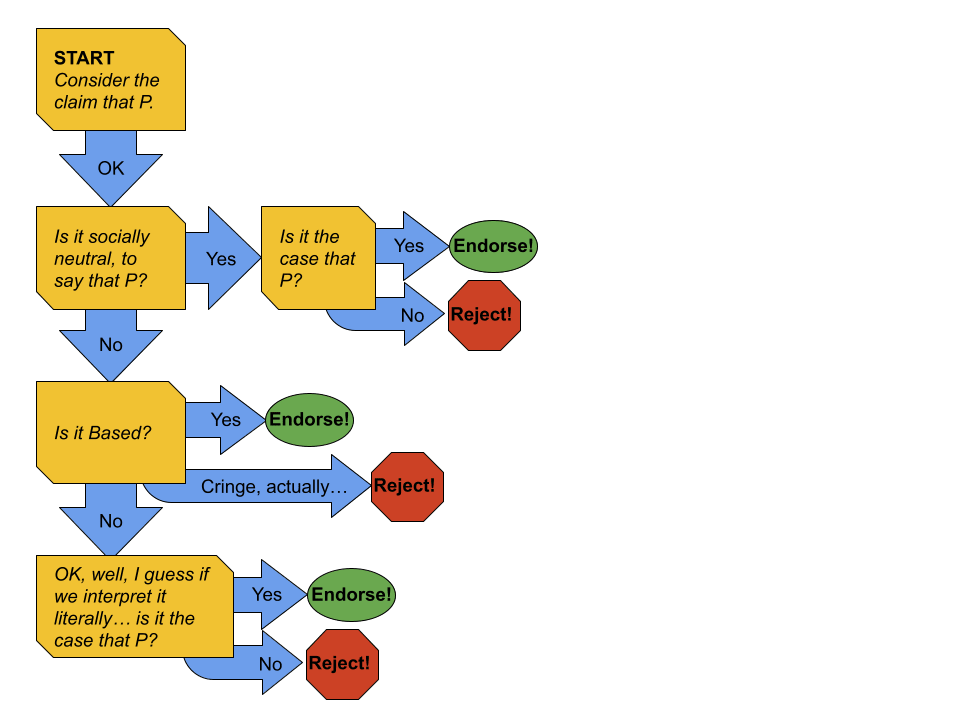

Of course in real life things are often messy and the cognition responsible for a statement might involve a mix of different levels. Here's a simple example: Suppose, in some context and for statements within some domain, your brain executes the following flowchart:

If for a particular claim you exit the flowchart in Row 2 or Row 4, you are at Simulacra Level 1. If you exit the flowchart in Row 3, you are in either Level 3 or Level 4 depending on how we define "cringe" and "based." (I'm tempted to say they are both Level 3, except there seems to be something inherently level 4-ish about "cringe" in particular.) Note that this flowchart leaves no possibility for Simulacra Level 2; congratulations on being so reliably honest!

Generalizing from statements to sentences

Some sentences are conceived, uttered, debated, tweeted, emblazoned on banners, etc. without ever passing through anyone's brain at level 1 or 2. To the extent that a sentence is like this, we can say it's a "Level 3/4 sentence," or a "Teams-level sentence." The pronouncements of governments and large corporations are full of these kinds of sentences. To decide whether a sentence is level 3/4 or level 1/2, it helps to ask "Interpreted literally, is it true or false?" If the answer is one of the following...

- "Well that depends on how you interpret it; people who like it are going to interpret it in way X (and so it'll be true) and people who don't are going to interpret it in way Y (and so it'll be false), and to be honest neither of these interpretations is significantly more straightforward/literal than the other."

- "Well, interpreted strictly literally it is uncontroversially true (/false). But..."

... that's a sign that the sentence might be teams-level. Other signs include how controversial it is and the kind of discourse that surrounds it--are people mostly making character attacks, for example?

Discussion

It seems that there is a tendency for discourses primarily operating at Level 1 to devolve into Level 2, and from Level 2 to Level 3, and from Level 3 to Level 4. If this is true, perhaps it is because there are general instrumental-convergence incentives to choose statements on the basis of their consequences (especially when the possibility that the statement was generated deontologically means you can predict the direction people will update upon hearing your statement). That would explain a tendency for level 2 to grow out of level 1 and for level 4 to grow out of level 3. As for the move from 2 to 3, that could be because once lots of people are scheming about consequences, you get conflicts and initiatives and factions, and it becomes important to support (and signal support for) various Teams.

So far we've discussed statements and sentences. We can easily extend this analysis to beliefs and even thoughts as well. People are often under immense social and psychological pressure to believe certain things, to avoid believing certain other things, etc. and often this results in e.g. following the example flowchart to determine what you think about a topic, even when you aren't intending to say what you think to anyone.

Understanding this, and noticing when it's happening within you, is an important rationality skill--someone who can reflect on why they think something, and accurately judge how much Level 1 vs. Level 2 vs. Level 3 vs. Level 4 cognition was responsible, has a big advantage in converging to the truth.

I wonder if there are exercises / techniques for training this skill. For now my best answer is the first four bullet points above.

Edit 6/27/2023: This classic SlateStarCodex post is pretty relevant, with its discussion of karma mirrors: Ethnic Tension And Meaningless Arguments | Slate Star Codex

15 comments


comment by Richard_Ngo (ricraz) · 2023-04-26T21:12:37.190Z · LW(p) · GW(p)

I like this post! I notice the diagram doesn't really map onto a cognitive process that I consider realistic, though. So here's my attempted replacement for what 'most people' do:

- Does P feel tribally-loaded to you?

  - If yes or "could be" and you're politically savvy, say whatever's most useful (level 4).

  - If yes and you're not politically savvy, answer according to your tribal affiliation (level 3).

- Is P relevant to your strategic interests in non-tribally-loaded ways?

  - If yes and you're in consequentialist mode, say whatever's most useful (level 2).

- If no to either, answer according to your object-level beliefs (level 1).
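The procedure in this comment can be sketched as a small function. This is a rough reading, not Richard's own formulation: the function name, the parameters, and the three-valued `tribally_loaded` input are all made up for illustration. It also assumes that any case the bullets leave open (for example, "could be" tribally loaded but not politically savvy) falls through to the next question and, failing that, to level 1.

```python
def level_for_response(
    tribally_loaded: str,
    politically_savvy: bool,
    strategically_relevant: bool,
    consequentialist_mode: bool,
) -> int:
    """Return the level at which someone answers a question about P.

    `tribally_loaded` is one of "yes", "could be", or "no" (a hypothetical
    encoding of the first question in the list above).
    """
    if tribally_loaded not in ("yes", "could be", "no"):
        raise ValueError(f"unexpected value: {tribally_loaded!r}")

    # First question: does P feel tribally loaded?
    if tribally_loaded in ("yes", "could be") and politically_savvy:
        return 4  # say whatever's most useful
    if tribally_loaded == "yes" and not politically_savvy:
        return 3  # answer according to tribal affiliation

    # Second question: is P strategically relevant in non-tribal ways?
    if strategically_relevant and consequentialist_mode:
        return 2  # say whatever's most useful

    # Assumed fallthrough: answer according to object-level beliefs.
    return 1
```

For instance, `level_for_response("no", False, True, True)` gives 2, while `level_for_response("could be", False, False, False)` gives 1 under the fallthrough assumption.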

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-27T04:02:59.242Z · LW(p) · GW(p)

Yeah, good point, I agree. Should have optimized more for realism instead of satisficing once I had a good example.

comment by Zach Stein-Perlman · 2023-04-26T16:15:43.067Z · LW(p) · GW(p)

I think there's something weird about your levels applying to statements or things you say rather than something more like internal attitudes. When you say something as opposed to just thinking it, you kind of by definition do so in order to affect others' beliefs about what you believe.

But internal-attitudes aren't a great fit either.

Replies from: daniel-kokotajlo, Dagon

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-26T16:50:42.697Z · LW(p) · GW(p)

I disagree that you by definition do so in order to affect others' beliefs about what you believe. For example, when I'm reasoning aloud in front of a whiteboard and using someone as a rubber duck, I'm really not doing this to affect them at all. And for a lot of people, a lot of the time, when someone asks them a question, their internal cognition is best described as "thinking about whether P is true" rather than "thinking about the consequences of saying P," because they are following a deontological policy of saying what they think in response to questions (at least in that domain).

That said, I agree that maybe it would be better to define everything in terms of internal attitudes. I'll think about this more & encourage others to do so as well.

↑ comment by Dagon · 2023-04-26T16:50:15.354Z · LW(p) · GW(p)

That's been a problem with other writing on the simulacra-levels model as well. It's unclear whether "operating" at a level means you don't see and understand the lower levels, or just aren't focusing your communication on them (because you don't model your followers/targets as understanding the model).

comment by Raemon · 2023-04-26T19:16:01.722Z · LW(p) · GW(p)

fyi as long as you're refactoring it this way, I think it could use another term than 'simulacrum levels' (which imo only really makes sense in the original context)

Replies from: habryka4, daniel-kokotajlo

↑ comment by habryka (habryka4) · 2023-04-26T20:34:31.065Z · LW(p) · GW(p)

Huh, why? This is basically my summary of "Simulacrum levels" and matches how I've seen other people use those terms.

Replies from: Raemon

↑ comment by Raemon · 2023-04-26T22:14:59.000Z · LW(p) · GW(p)

I think it's an unnecessarily confusing term in general. I think it makes sense when you're specifically thinking of each level as a simulacrum of the one before, but the 2x2 grid version doesn't feel that way to me – level 3 doesn't (necessarily) feel like a distorted or reinforced version of level 2.

I think it's useful to avoid unnecessary dependencies in jargon. The underlying concept here is something I'd want to be able to easily bring up in academic-twitter or whatever, and I don't think there's anything that complicated about it that requires explaining a whole background model, but I think framing it in terms of simulacrum levels is opaque in a way that makes you open the box [LW · GW] to understand how things connect.

I think mainstream intellectual circles basically understand some variation of "communication-as-politics/affiliation" (even if they mostly accuse other people of it most of the time), and the only really new thing the simulacrum frame adds in most conversations is that sometimes people are lying about affiliation vs doing so "honestly."

I do still think the concept of simulacrum levels is useful, independent of the 2x2 grid, when you're considering a domain where you specifically expect there to be a multiple-level-of-simulacrum mechanism for what's going on, and it's relevant to the discussion. Like, I ended up kinda re-deriving them in Recursive Middle Manager Hell [LW · GW], in a way that gave me more appreciation for The Four Children of the Seder as the Simulacra Levels [LW · GW].

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-12-16T23:41:27.431Z · LW(p) · GW(p)

I didn't elaborate, but I did say:

It seems that there is a tendency for discourses primarily operating at Level 1 to devolve into Level 2, and from Level 2 to Level 3, and from Level 3 to Level 4.

It seems maybe you don't see the Level 2 to Level 3 connection. Well, here's what I was thinking:

In discourses that are at level 1, people aren't really forming into stable teams. Think: A bunch of students trying to solve a math problem sheet together, proposing and rejecting various lemmas or intuition pumps. But once the discourse is heavily at level 2, with lots of people thinking hard about how to convince other people of things -- with lots of people arguing about some local thing like whether this particular intuition pump is reasonable by thinking about less-local things like whether it would support or undermine Lemma X and thereby support or undermine the strategy so-and-so has been undertaking -- well, now it seems like conditions are ripe for teams to start to form. For people to be Team So-And-So's Strategy. And with the formation of teams comes reporting which team you are on (level 3) and then eventually strategically signalling or shaping perceptions of which team you are on (level 4).

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-26T19:57:44.066Z · LW(p) · GW(p)

OK, happy to do so -- got any suggestions?

Brainstorming...

--Truth-values and Teams-values

--Four Levels of Discourse

--Just keep it as is (My Version of Simulacra Levels) as a way of noting the similarity between the ideas

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-26T20:00:45.127Z · LW(p) · GW(p)

We need something short and snappy (because the purpose is to have a handle/name which people can use when they want to talk about the four levels Daniel Kokotajlo sketched instead of the original Simulacra Levels)

...maybe I should ask GPT-4 for suggestions...

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-26T16:48:41.880Z · LW(p) · GW(p)

Had a great conversation with Richard Ngo about this. Some notes:

He thinks the "Consequentialist" level is defined too narrowly, and should not be just about consequences that flow from the mechanism of other people thinking you think that P / other people thinking you support team X or support team not-X. You might have zero expectation that anyone will update towards thinking you support team X, for example (perhaps because it's already blatantly obvious that you do) and instead are uttering certain words in a certain order because you predict it'll make it hard for your rivals to respond and if they don't respond they'll lose face. (E.g. maybe some jokes are examples of this).

I agree and have already edited the post to reflect this. Previously the post defined level 2 and level 4 as:

Level 4: "Do I want others to think I support the associated team?"

Level 2: “Do I want others to think I consider it true?”

I now think these are just important special cases of the more general consequentialist phenomenon.

comment by philh · 2023-05-01T08:35:16.235Z · LW(p) · GW(p)

Paradigmatic examples of lies (including white lies such as “mmm your homemade hummus tastes great”) are Level 2.

I read the hummus one as being most likely level 4. It's not (by my read) being said to convince someone that their hummus tastes great, but to make them feel good about themselves, the speaker, and the relationship between the two of them.

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-05-01T22:32:09.067Z · LW(p) · GW(p)

Hmmm, idk. I think at least some of the time it's level 2, in my experience. Yes the goal is to make them feel good, but the mechanism is by making them think the hummus tastes good. (Maybe in some cases it's common knowledge that you'd say it tastes good even if it was horrible & that all you really mean therefore is "go team us" but I think that's atypical.)