Thoughts to niplav on lie-detection, truthfwl mechanisms, and wealth-inequality

post by Emrik (Emrik North), niplav · 2024-07-11T18:55:46.687Z · LW · GW · 8 comments

Contents

Postscript

The magic shop in the middle of everywhere: cheap spells against egregores, ungods, akrasia, and you-know-whos!

Mechanisms for progressive redistribution is good because money-inequality is bad

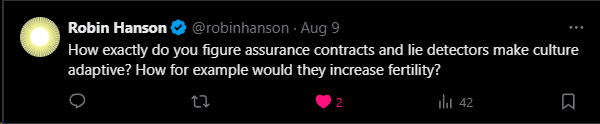

Aborted draft response to Robin re combining assurance contracts with lie-detection

Anecdote about what makes me honest while nobody's looking

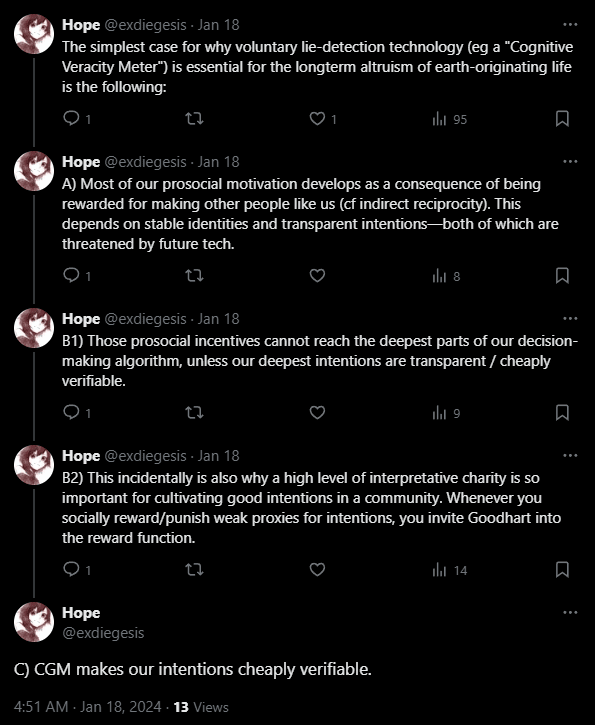

Premises

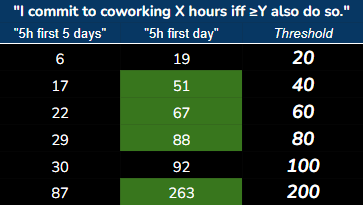

Assurance contract fictional example

Assurance contracts against pluralistic ignorance (Keynesian culture-bubbles)

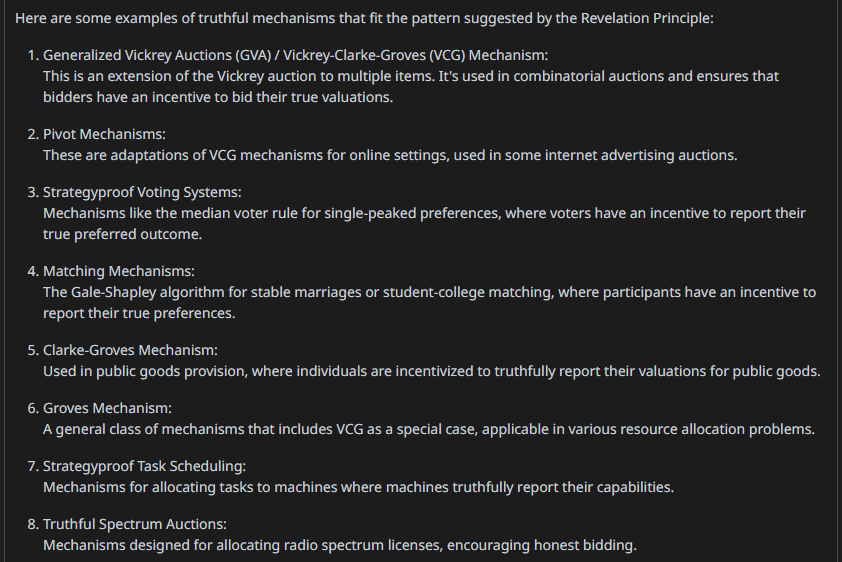

Assurance democracy obviates the need for VCG, because the "truthfwl mechanism" is just a device you can strap on

Re fertility & assurance democracy

8 comments

Comments sorted by top scores.

comment by habryka (habryka4) · 2024-07-12T00:56:09.487Z · LW(p) · GW(p)

Hmm, this sure is a kind of weird edge-case of the coauthor system for dialogues.

I do think there really should be some indication that niplav hasn't actually responded here, but also, I don't want to just remove them and via that remove their ability to respond in the dialogue. I'll leave it as is for now and hope this comment is enough to clear things up, but if people are confused I might temporarily remove niplav.

Replies from: Emrik North

↑ comment by Emrik (Emrik North) · 2024-07-12T20:42:21.626Z · LW(p) · GW(p)

I have now sent the following message to niplav, asking them if they wanted me to take the dialogue down and republish it as a shortform. I am slightly embarrassed about not having considered that it's somewhat inconvenient to receive one of these dialogue-things without warning.

I just didn't think through that dialogue-post thing at all. Obviously it will show up on your profile-wall (I didn't think about that), and that has lots of reputational repercussions and such (which matter!). I wasn't simulating your perspective at all in the decision to publish it in the way I did. I just operated on heuristics like:

- "it's good to have personal convo in public"

    - so our younglings don't grow into an environment of pluralistic ignorance, thinking they are the only ones with personality

- "it's epistemically healthy to address one's writing to someone-in-particular"

    - eg bc I'm less likely to slip into professionalism mode

    - and bc that someone-in-particular (𖨆) is less likely to be impressed by fake proxies for good reasoning like how much work I seem to have put in, how mathy I sound, how confident I seem, how few errors I make, how aware-of-existing-research I seem, ...

    - and bc 𖨆 already knows me, it's difficult to pretend I know more than I do

        - eg if I write abt Singular Learning Theory to the faceless crowd, I could easily convince some of them that I like totally knew what I was talking about; but when I talk to you, you already know something abt my skill-level, so you'd be able to smell my attempted fakery a mile away

- "other readers benefit more (on some dimensions) from reading something which was addressed to 𖨆, because

- "It is as if there existed, for what seems like millennia, tracing back to the very origins of mathematics and of other arts and sciences, a sort of “conspiracy of silence” surrounding [the] “unspeakable labors” which precede the birth of each new idea, both big and small…"

— Alexander Grothendieck

---

If you prefer, I'll move the post into a shortform preceded by:

[This started as something I wanted to send to niplav, but then I realized I wanted to share these ideas with more people. So I wrote it with the intention of publishing it, while keeping the style and content mostly as if I had purely addressed it to them alone.]

I feel somewhat embarrassed about having posted it as a dialogue without thinking it through, and this embarrassment exactly cancels out my disinclination against unpublishing it, so I'm neutral wrt moving it to shortform. Let me know! ^^

P.S. No hurry.

Replies from: niplav

↑ comment by niplav · 2024-07-12T23:47:03.174Z · LW(p) · GW(p)

In this particular instance, I'm completely fine with this happening—because I trust & like you :-)

In general, this move is probably too much for the other party, unless they give consent. But as I said, I'm fine/happy with being addressed in a dialogue—and LessWrong is better for this than schelling.pt, especially for the longer convos we tend to have. Who knows, maybe I'll even find time to respond in a non-vacuous manner & we can have a long-term back & forth in this dialogue.

comment by Raemon · 2024-07-12T00:54:28.605Z · LW(p) · GW(p)

Quick mod note – this post seems like a pretty earnest, well-intentioned version of "address a dialogue to someone who hasn't opted into it". But, it's the sort of thing I'd expect to often be kind of annoying. I haven't chatted with other mods yet about whether we want to allow this sort of thing long-term, but, flagging that we're tracking it as an edge case to think about.

comment by Emrik (Emrik North) · 2024-07-11T19:11:26.547Z · LW(p) · GW(p)

niplav

Just to ward off misunderstanding and/or possible feelings of todo-list-overflow: I don't expect you to engage or write a serious reply or anything; I mostly just prefer writing in public to people-in-particular, rather than writing to the faceless crowd. Treat it as if I wrote a Schelling.pt outgabbling in response to a comment; it just happens to be on LW. If I'm breaking etiquette or causing miffedness for Complex Social Reasons (which are often very valid reasons to have, just to be clear) then lmk! : )

comment by [deleted] · 2024-07-11T19:17:34.476Z · LW(p) · GW(p)

Wait, so is this a dialogue or not? It's certainly styled as one, and has two LW users as authors, but... only one of them has written anything (yet), and seemingly doesn't expect the other one to engage? Wouldn't this have been better off as a post or a shortform or something like that?

Replies from: Emrik North

↑ comment by Emrik (Emrik North) · 2024-07-11T19:35:05.337Z · LW(p) · GW(p)

I wanted to leave Niplav the option of replying at correspondence-pace at some point if they felt like it. I also wanted to say these things in public, to expose more people to the ideas, but without optimizing my phrasing/formatting for general-audience consumption.

I usually think people think better if they generally aim their thoughts at one person at a time. People lose their brains and get eaten by language games if their intellectual output is consistently too impersonal.

Also, I think if I were somebody else, I would appreciate me for sharing a message which I₁ mainly intended for Niplav, as long as I₂ managed to learn something interesting from it. So if I₁ think it's positive for me₂ to write the post, I₁ think I₁ should go ahead. But I'll readjust if anybody says they dislike it. : )

Replies from: None