Agent Boundaries Aren't Markov Blankets. [Unless they're non-causal; see comments.]

post by abramdemski · 2023-11-20T18:23:40.443Z · LW · GW · 11 comments


Edit: I now see that this argument was making an unnecessary assumption that the Markov blankets in question would have to relate nicely to a causal model; see John's comment.

Friston has famously invoked the idea of Markov blankets for representing agent boundaries, in arguments related to the Free Energy Principle / Active Inference. The Emperor's New Markov Blankets by Jelle Bruineberg competently critiques the way Friston tries to use Markov blankets. But some other, unrelated theories also try to apply Markov blankets to represent agent boundaries. There is a simple reason why such approaches are doomed.

This argument is due to Sam Eisenstat.

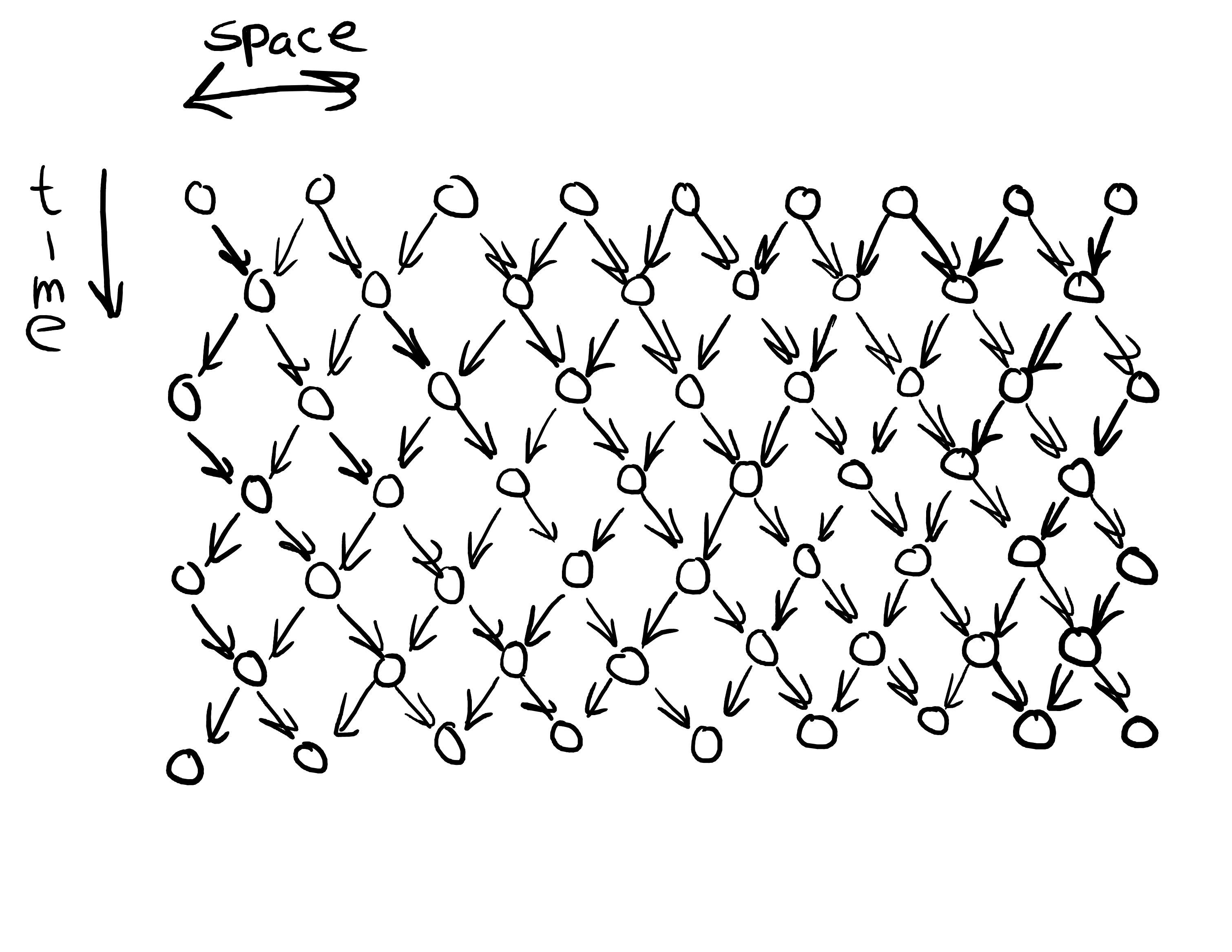

Consider the data-type of a Markov blanket. You start with a probabilistic graphical model (usually, a causal DAG), which represents the world.

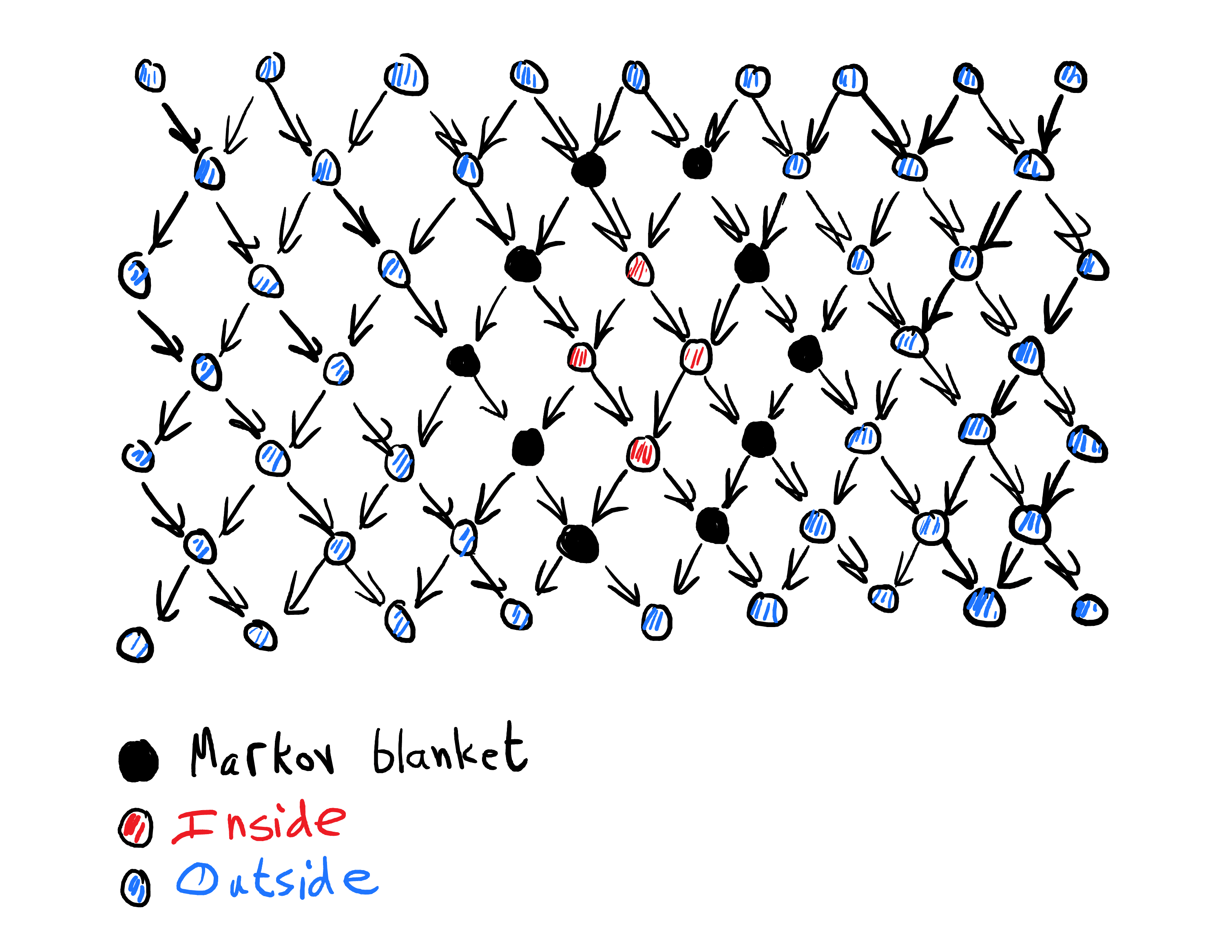

A "Markov blanket" is a set of nodes in this graph, which probabilistically insulates one part of the graph (which we might call the part "inside" the blanket) from another part ("outside" the blanket):[1]

("Probabilistically insulates" means that the inside and outside are conditionally independent, given the Markov blanket.)

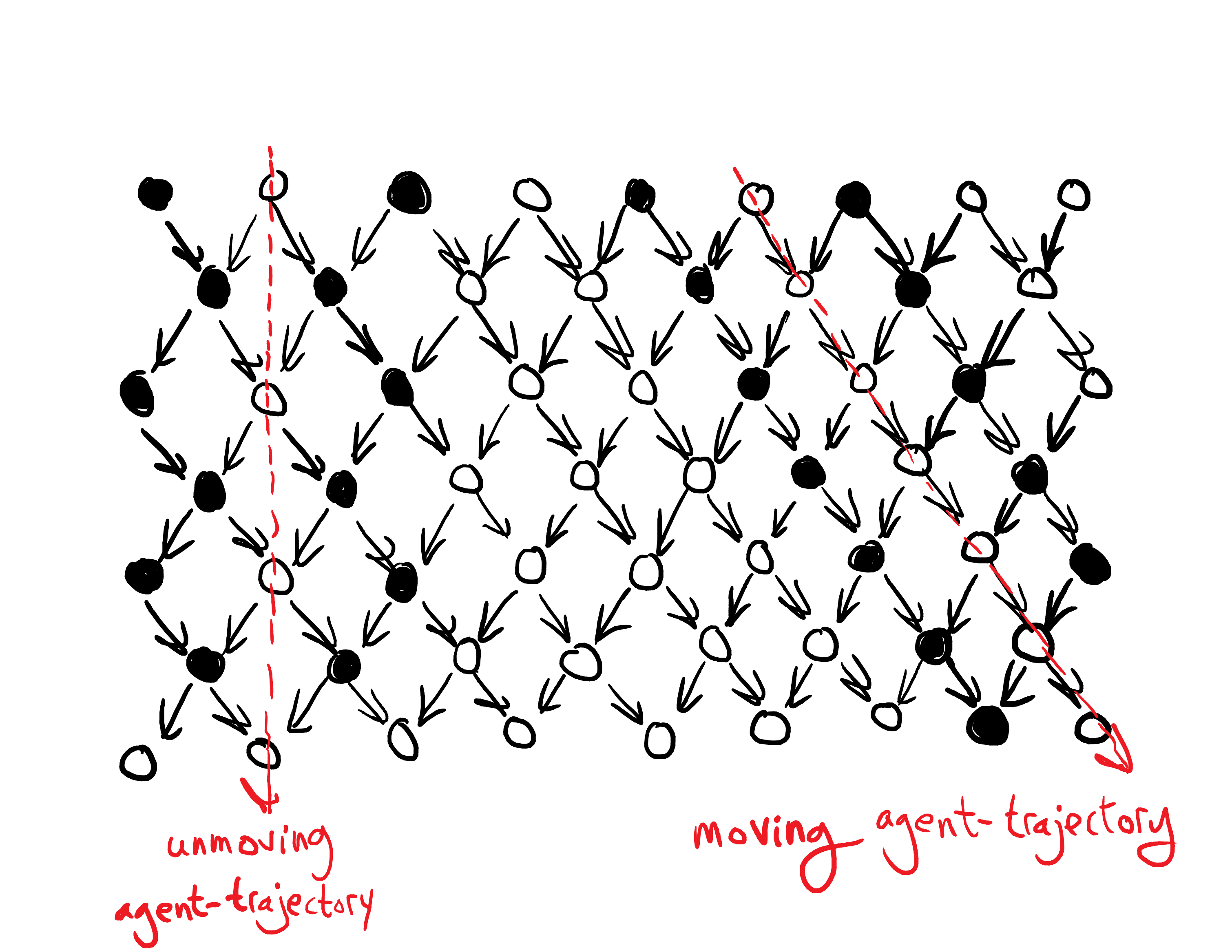
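The parenthetical condition can be written as a factorization. Here $I$, $B$, $O$ are shorthand introduced for this illustration: they stand for the joint values of the inside, blanket, and outside nodes. The first line below is that factorization. The second line checks it for the simplest case, a chain $X \to Y \to Z$ with blanket $\{Y\}$. It uses only the chain's factorization $P(x, y, z) = P(x)\,P(y \mid x)\,P(z \mid y)$ and assumes $P(y) > 0$:

$$
\begin{aligned}
P(I, O \mid B) &= P(I \mid B)\,P(O \mid B) \\
P(x, z \mid y) &= \frac{P(x)\,P(y \mid x)\,P(z \mid y)}{P(y)} = \frac{P(x, y)}{P(y)}\,P(z \mid y) = P(x \mid y)\,P(z \mid y)
\end{aligned}
$$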

So the obvious problem with this picture of an agent boundary is that it only works if the agent takes a deterministic path through space-time. We can easily draw a Markov blanket around an "agent" who just stays still, or who moves with a predictable direction and speed:

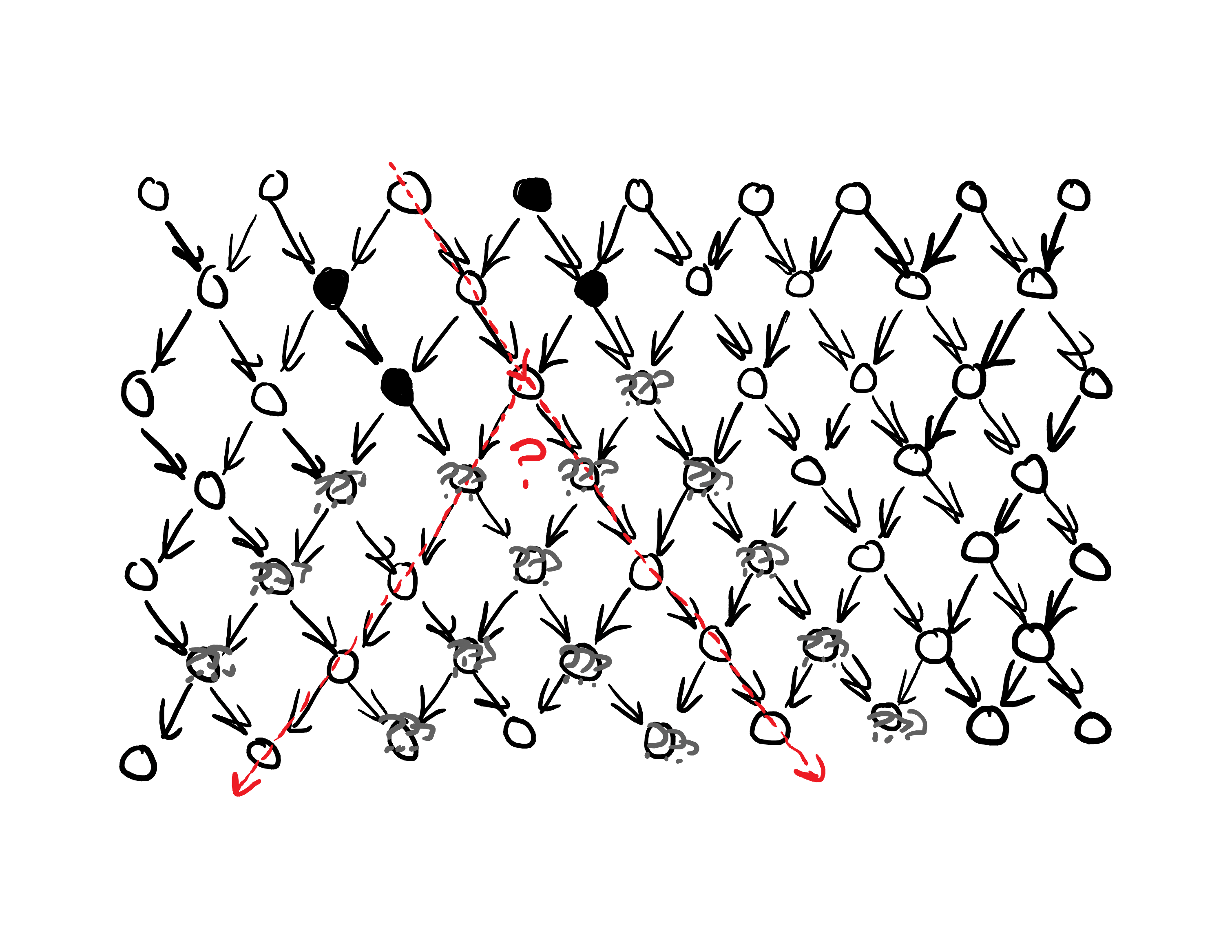

But if an agent's direction and speed are ever sensitive to external stimuli (which is a property common to almost everything we might want to call an 'agent'!), we cannot draw a Markov blanket such that (a) only the agent is inside, and (b) everything inside is the agent.
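Here is a toy enumeration of this point. It is not from the original argument, and it rests on several assumptions. The world is one-dimensional, and each (cell, time) pair counts as a node. The hypothetical agent rule is "step right on stimulus 1, left on stimulus 0". The code collects every cell the agent could occupy at a given time, across all stimulus histories.

```python
from itertools import product


def agent_path(stimuli, start=0, responsive=True):
    """Cells occupied by a one-cell 'agent' on a line, one entry per time step.

    Hypothetical rule: a responsive agent steps +1 when the stimulus is 1 and
    -1 when it is 0; a non-responsive agent never moves.
    """
    pos, path = start, [start]
    for s in stimuli:
        if responsive:
            pos += 1 if s else -1
        path.append(pos)
    return path


def cells_occupied_at(t, n_steps, responsive):
    """Every cell the agent occupies at time t, across all possible stimulus histories."""
    return {agent_path(history, responsive=responsive)[t]
            for history in product((0, 1), repeat=n_steps)}
```

Take the non-responsive agent first. `cells_occupied_at(3, 3, responsive=False)` is `{0}`, so the fixed node set "cell 0 at every time" is exactly the agent in every world. For the responsive agent, `cells_occupied_at(3, 3, responsive=True)` is `{-3, -1, 1, 3}`. A node such as (cell 1, t=3) therefore belongs to the agent in some worlds and to the environment in others. As a result, no fixed set of nodes is both "only the agent" and "all of the agent".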

It would be a mathematical error to say "you don't know where to draw the Markov blanket, because you don't know which way the agent chooses to go". A Markov blanket represents a probabilistic fact about the model itself, independent of any knowledge you possess about the values of specific variables, so it doesn't matter whether you actually know which way the agent chooses to go.[2]

The only way to get around this (while still using Markov blankets) would be to construct your probabilistic graphical model so that one specific node represents each observer-moment of the agent, no matter where the agent physically goes.[3] In other words, start with a high-level model of reality which already contains things like agents, rather than a low-level purely physical model of reality. But then you don't need Markov blankets to help you point out the agents. You've already got something which amounts to a node labeled "you".

I don't think it is impossible to specify a mathematical model of agent boundaries which does what you want here, but Markov blankets ain't it.

- ^

Although it's arbitrary which part we call inside vs outside.

- ^

Drawing Markov blankets wouldn't even make sense in a model that's been updated with complete info about the world's state; if you know the values of the variables, then everything is trivially probabilistically independent of everything else anyway, since known information won't change your mind about known information. So any subset would be a Markov blanket.

- ^

Or you could have a more detailed model, such as one node per neuron; that would also work fine. But the problem remains the same; you can only draw such a model if you already understand your agent as a coherent object, in which case you don't need Markov blankets to help you draw a boundary around it.

11 comments


comment by johnswentworth · 2023-11-20T18:51:22.329Z

I think you use too narrow a notion of Markov blankets here. I'd call the notion you're using "structural Markov blankets" - a set of nodes in a Bayes net which screens off one side from another structurally (i.e. it's a cut of the graph). Insofar as Markov blankets are useful for delineating agent boundaries, I expect the relevant notion of "Markov blanket" is more general: just some random variable such that one chunk of variables is independent of another conditional on the blanket.
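Here is a minimal sketch of this more general notion, built on an invented toy world; none of the names or numbers come from the comment. The "blanket" is not a fixed set of nodes. It is a function of the whole world state that reads whichever cell currently holds the agent's boundary. The checker tests conditional independence of arbitrary functions of the world, using the identity p(i, o, b) p(b) = p(i, b) p(o, b).

```python
from itertools import product

FLIP = 0.1  # hypothetical noise level of each noisy copy


def world_distribution():
    """Joint distribution over low-level worlds w = (s, cell0, cell1, cell2).

    Hypothetical story: environment bit e, boundary bit m (noisy copy of e),
    interior bit a (noisy copy of m). A stimulus bit s sets where the agent is:
    s = 0 lays the cells out as (e, m, a); s = 1 shifts them to (m, a, e).
    """
    dist = {}
    for s, e, n1, n2 in product((0, 1), repeat=4):
        p = 0.25 * (FLIP if n1 else 1 - FLIP) * (FLIP if n2 else 1 - FLIP)
        m = e ^ n1
        a = m ^ n2
        world = (s,) + ((e, m, a) if s == 0 else (m, a, e))
        dist[world] = dist.get(world, 0.0) + p
    return dist


# Blanket-style variables defined as functions of the whole world state.
def interior(w):
    return w[3] if w[0] == 0 else w[2]          # whichever cell holds a


def boundary(w):
    return w[2] if w[0] == 0 else w[1]          # whichever cell holds m


def exterior(w):
    return (w[0], w[1] if w[0] == 0 else w[3])  # s plus the cell holding e


# The s = 0 assignment frozen into fixed cells, i.e. a structural choice.
def fixed_interior(w):
    return w[3]


def fixed_boundary(w):
    return w[2]


def fixed_exterior(w):
    return (w[0], w[1])


def screens_off(dist, f_in, f_out, f_bl, tol=1e-12):
    """True if f_in(W) is independent of f_out(W) given f_bl(W), for W ~ dist."""
    p_iob, p_ib, p_ob, p_b = {}, {}, {}, {}
    for w, p in dist.items():
        i, o, b = f_in(w), f_out(w), f_bl(w)
        for table, key in ((p_iob, (i, o, b)), (p_ib, (i, b)), (p_ob, (o, b)), (p_b, b)):
            table[key] = table.get(key, 0.0) + p
    # Independence given b  <=>  p(i, o, b) * p(b) == p(i, b) * p(o, b) for all i, o, b.
    for (i, b), p_i in p_ib.items():
        for (o, b2), p_o in p_ob.items():
            if b2 == b and abs(p_iob.get((i, o, b), 0.0) * p_b[b] - p_i * p_o) > tol:
                return False
    return True
```

The state-dependent triple `interior` / `exterior` / `boundary` passes `screens_off`. The frozen cell choice fails it, because when s = 1 those fixed cells hold different things. Knowing the blanket *function* also does not, by itself, tell you which cell the boundary is in.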

↑ comment by abramdemski · 2023-11-21T15:53:39.990Z

Ahhh that makes sense, thanks.

↑ comment by johnswentworth · 2023-11-21T17:37:34.381Z

... I was expecting you'd push back a bit, so I'm going to fill in the push-back I was expecting here.

Sam's argument still generalizes beyond the case of graphical models. Our model is going to have some variables in it, and if we don't know in advance where the agent will be at each timestep, then presumably we don't know which of those variables (or which function of those variables, etc) will be our Markov blanket. On the other hand, if we knew which variables or which function of the variables were the blanket, then presumably we'd already know where the agent is, so presumably we're already conditioning on something when we say "the agent's boundary is a Markov blanket".

I think that is a basically-correct argument. It doesn't actually argue that agent boundaries aren't Markov boundaries; I still think agent boundaries are basically Markov boundaries. But the argument implies that the most naive setup is missing some piece having to do with "where the agent is".

↑ comment by abramdemski · 2023-11-30T16:37:33.953Z

I find your attempted clarification confusing.

> Our model is going to have some variables in it, and if we don't know in advance where the agent will be at each timestep, then presumably we don't know which of those variables (or which function of those variables, etc) will be our Markov blanket.

No? A probabilistic model can just be a probability distribution over events, with no "random variables in it". It seemed like your suggestion was to define the random variables later, "on top of" the probabilistic model, not as an intrinsic part of the model, so as to avoid the objection that a physics-ish model won't have agent-ish variables in it.

So the random variables for our Markov blanket can just be defined as things like skin surface temperature & surface lighting & so on: random variables which can be derived from a physics-ish event space, but not by any particularly simple means (since the location of these things keeps changing).

> On the other hand, if we knew which variables or which function of the variables were the blanket, then presumably we'd already know where the agent is, so presumably we're already conditioning on something when we say "the agent's boundary is a Markov blanket".

Again, no? If I know skin surface temperature and lighting conditions and so on all add up to a Markov blanket, I don't thereby know where the skin is.

> I think that is a basically-correct argument. It doesn't actually argue that agent boundaries aren't Markov boundaries; I still think agent boundaries are basically Markov boundaries. But the argument implies that the most naive setup is missing some piece having to do with "where the agent is".

It seems like you agree with Sam way more than would naively be suggested by your initial reply. I don't understand why.

When I talked with Sam about this recently, he was somewhat satisfied by your reply, but he did think there were a bunch of questions which follow. By giving up on the idea that the Markov blanket can be "built up" from an underlying causal model, we potentially give up on a lot of niceness desiderata which we might have wanted. So there's a natural question of how much you want to try to recover: properties which you could have gotten from "structural" Markov blankets, and might be able to get some other way, but don't automatically get from arbitrary Markov blankets.

In particular, if I had to guess: causal properties? I don't know about you, but my OP was mainly directed at Critch, and iiuc Critch wants the Markov blanket to have some causal properties so that we can talk about input/output. I also find it appealing for "agent boundaries" to have some property like that. But if the random variables are unrelated to a causal graph (which, again, is how I understood your proposal) then it seems difficult to recover anything like that.

↑ comment by MadHatter · 2023-11-22T19:32:44.780Z

I think the missing piece is some sort of notion of "who is on what team", which I think of as "agentic segmentation". That is, can we draw borders in meatspace between who is being influenced by the rhetoric of which other agents. If a strong and misaligned superintelligence came to exist, my threat model is that it would do all sorts of dishonorable things by proxy using its rhetorical influence, and we would either see that something weird was going on with the agentic segmentation, or we wouldn't. If we did see it, we could probably trace the agent segment back to some primary mover or locate the computational device that is powering the rapidly expanding agent segment.

comment by Jeff Beck (jeff-beck-1) · 2023-11-21T13:23:21.057Z

While most examples in the literature utilize static Markov blankets, in which time does not affect whether nodes are assigned to object, blanket, or environment, this is not a necessary feature of Markov blankets. They can move and model exchanges of matter between object and environment. In a dynamic setting, every single node has a Markov blanket, and the intersection of the blankets associated with any set of nodes also forms a Markov blanket (even if that blanket is disconnected). For this reason, Markov blankets alone don't define objects. Rather, it is the statistics of the blanket (or rather p(b,t)) that define an object. Blankets simply specify the set of possible domains over which the distribution that defines an object's phenotype may be defined.

↑ comment by abramdemski · 2023-11-30T16:44:20.543Z

I don't understand. The fact that every single node has a Markov blanket seems unrelated. The claim that the intersection of any two blankets is a blanket doesn't seem true? For example, I can have a network:

    a -> b -> c
    |    |    |
    v    v    v
    d -> e -> f
    |    |    |
    v    v    v
    g -> h -> i

It seems like the intersection of the blankets for 'a' and 'c' doesn't form a blanket.
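To check this claim mechanically, the sketch below computes the standard single-node Markov blanket: parents, children, and the children's other parents. It also tests whether a node set is a structural blanket in the post's sense, meaning that deleting it from the moral graph leaves at least two connected pieces. When inside, blanket, and outside together cover every node, that is the standard moral-graph criterion for the blanket d-separating inside from outside. The function names are my own, not from any library.

```python
from itertools import combinations

# The 3x3 grid DAG from the comment above, as (parent, child) edges.
EDGES = [
    ("a", "b"), ("b", "c"), ("d", "e"), ("e", "f"), ("g", "h"), ("h", "i"),
    ("a", "d"), ("b", "e"), ("c", "f"), ("d", "g"), ("e", "h"), ("f", "i"),
]


def parents(node, edges):
    return {p for p, c in edges if c == node}


def children(node, edges):
    return {c for p, c in edges if p == node}


def markov_blanket(node, edges):
    """Parents, children, and the children's other parents of a single node."""
    kids = children(node, edges)
    coparents = set().union(*(parents(k, edges) for k in kids))
    return (parents(node, edges) | kids | coparents) - {node}


def moral_graph(edges):
    """Undirected adjacency: drop edge directions and connect every pair of co-parents."""
    nodes = {n for edge in edges for n in edge}
    adj = {n: set() for n in nodes}
    for p, c in edges:
        adj[p].add(c)
        adj[c].add(p)
    for n in nodes:
        for u, v in combinations(sorted(parents(n, edges)), 2):
            adj[u].add(v)
            adj[v].add(u)
    return adj


def is_structural_blanket(blanket, edges):
    """True if removing `blanket` from the moral graph leaves two or more components."""
    adj = moral_graph(edges)
    remaining = set(adj) - set(blanket)
    components = 0
    while remaining:
        components += 1
        stack = [remaining.pop()]
        while stack:
            for nb in adj[stack.pop()]:
                if nb in remaining:
                    remaining.remove(nb)
                    stack.append(nb)
    return components >= 2
```

In this grid, `markov_blanket("a", EDGES)` is `{"b", "d"}` and `markov_blanket("c", EDGES)` is `{"b", "e", "f"}`, and both pass `is_structural_blanket`. Their intersection `{"b"}` fails, because the moral graph stays connected without b (for example via a, d, e, f, c).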

comment by Dalcy (Darcy) · 2024-07-09T21:51:15.188Z

> a Markov blanket represents a probabilistic fact about the model without any knowledge you possess about values of specific variables, so it doesn't matter if you actually do know which way the agent chooses to go.

The usual definition of Markov blankets is in terms of the model without any knowledge of the specific values as you say, but I think in Critch's formalism this isn't the case. Specifically, he defines the 'Markov Boundary' of (being the non-abstracted physics-ish model) as a function of the random variable (where he writes e.g. ), so it can depend on the values instantiated at .

- it would just not make sense to try to represent agent boundaries in a physics-ish model if we were to use the usual definition of Markov blankets: the model would just consist of local rules that are spacetime-homogeneous, so there is no reason to expect one can a priori carve out an agent from the model without looking at its specific instantiated values.

- can really be anything, so doesn't necessarily have to correspond to physical regions (subsets) of , but they can be if we choose to restrict our search for infiltration/exfiltration-criteria-satisfying to functions that only return boundaries-in-the-sense-of-carving-the-physical-space.

- e.g. can represent which subset of the physical boundary is, like 0, 0, 1, 0, 0, ... 1, 1, 0
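Here is a minimal sketch of the bit-vector representation in the last bullet. It assumes a hypothetical one-dimensional world in which `world[k] == 1` marks a cell occupied by the agent. It also assumes the boundary is simply the unoccupied cells directly next to the agent, which is a made-up rule, not Critch's or Dalcy's definition.

```python
from typing import List, Sequence


def boundary_mask(world: Sequence[int]) -> List[int]:
    """Hypothetical boundary function: 0/1 mask of the unoccupied cells adjacent to the agent.

    world[k] == 1 marks a cell occupied by the agent; every other cell is environment.
    """
    n = len(world)
    mask = [0] * n
    for k in range(n):
        if world[k] == 1:
            continue  # agent cells are inside, not boundary
        left = k > 0 and world[k - 1] == 1
        right = k < n - 1 and world[k + 1] == 1
        mask[k] = 1 if (left or right) else 0
    return mask
```

With the agent in cells 2-3, `boundary_mask([0, 0, 1, 1, 0, 0, 0, 0])` gives `[0, 1, 0, 0, 1, 0, 0, 0]`. After the agent moves to cells 5-6, the same function gives `[0, 0, 0, 0, 1, 0, 0, 1]`. The boundary is one fixed function, but which physical cells it picks out depends on the instantiated world.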

So I think under this definition of Markov blankets, they can be used to denote agent boundaries, even in physics-ish models (i.e. ones that relate nicely to causal relationships). I'd like to know what you think about this.

↑ comment by abramdemski · 2024-07-10T14:57:06.104Z

Critch's formalism isn't a Markov blanket anyway, as far as I understand it, since he cares about approximate information boundaries rather than perfect Markov properties. Possibly he should not have called his thing "directed Markov blankets", although I could be missing something.

If I take your point in isolation, and try to imagine a Markov blanket where the variables of the boundary can depend on the value of , then I have questions about how you define conditional independence, to generalize the usual definition of Markov blankets. My initial thought is that your point will end up equivalent to John's comment; i.e., we can construct random variables which allow us to define Markov blankets in the usual fixed way, while still respecting the intuition of "changing our selection of random variables depending on the world state".

↑ comment by Dalcy (Darcy) · 2024-08-31T02:55:17.450Z

I think something in the style of abstracting causal models would make this work: defining a high-level causal model such that there is a map from the states of the low-level causal model to it, in a way that's consistent with mapping low-level interventions to high-level interventions. Then you can retain the notion of causality for non-low-level-physical variables, with each such variable being a (potentially complicated) function of potentially all of the low-level variables.
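Here is a toy sketch of the consistency condition described here, using two made-up deterministic models; it is not Dalcy's construction. The low-level model sets Y = X1 + X2 under interventions on X1 and X2, and the high-level model sets Y = Z. `tau` maps low-level states to high-level states, and `omega` maps each low-level intervention to a high-level one. The abstraction counts as consistent if abstracting the low-level outcome always equals the high-level outcome of the mapped intervention.

```python
from itertools import product


def low_level(x1, x2):
    """Hypothetical low-level model under do(X1=x1, X2=x2): Y = X1 + X2."""
    return {"X1": x1, "X2": x2, "Y": x1 + x2}


def high_level(z):
    """Hypothetical high-level model under do(Z=z): Y = Z."""
    return {"Z": z, "Y": z}


def tau(low_state):
    """Abstraction map: summarize a low-level state by the aggregate Z = X1 + X2."""
    return {"Z": low_state["X1"] + low_state["X2"], "Y": low_state["Y"]}


def omega(x1, x2):
    """Intervention map: do(X1=x1, X2=x2) becomes do(Z=x1 + x2)."""
    return x1 + x2


def consistent(values, tau_fn=tau):
    """True if abstracting every low-level outcome matches the mapped high-level outcome."""
    return all(tau_fn(low_level(a, b)) == high_level(omega(a, b))
               for a, b in product(values, repeat=2))
```

`consistent(range(-3, 4))` holds. A map that summarizes the state by X1 alone does not commute with the interventions and fails the check. In this toy, Z is a function of several low-level variables yet still supports interventional reasoning at the high level.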

comment by Jeff Beck (jeff-beck-1) · 2023-11-21T13:27:11.618Z

It is also worth noting that any boundary defined in a manner that is consistent with systems identification theory forms a Markov blanket by definition.