GPT as an “Intelligence Forklift.”

post by boazbarak · 2023-05-19T21:15:03.385Z · 27 comments


[See my posts with Edelman on AI takeover and with Aaronson on AI scenarios. This is rough, with various fine print, caveats, and other discussions missing. Cross-posted on Windows on Theory.]

One challenge for considering the implications of “artificial intelligence,” especially of the “general” variety, is that we don’t have a consensus definition of intelligence. The Oxford Companion to the Mind states that “there seem to be almost as many definitions of intelligence as experts asked to define it.” Indeed, in a recent discussion, Yann LeCun and Yuval Noah Harari offered two different definitions. However, it seems many people agree that:

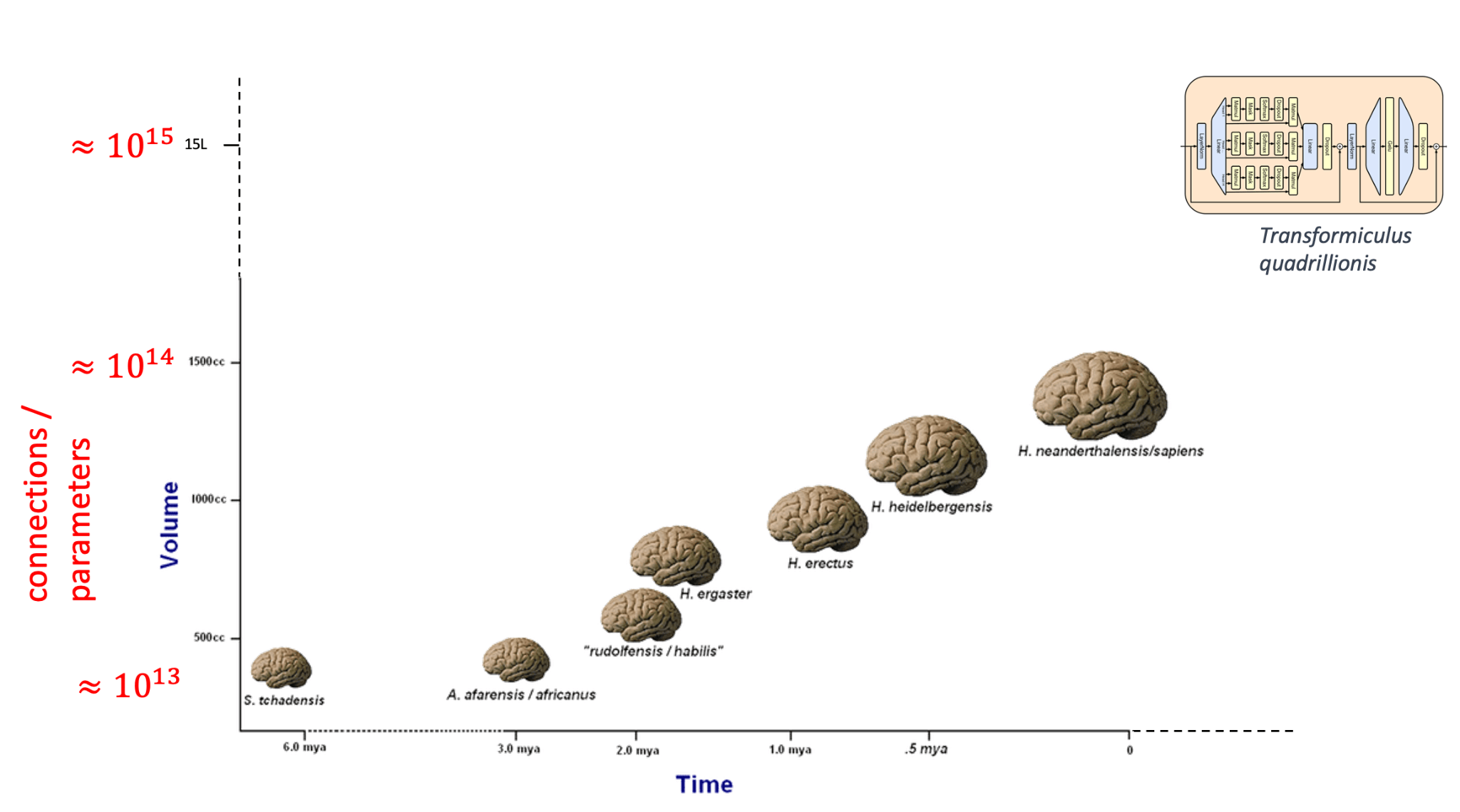

1. Whatever intelligence is, more computational power or cognitive capacity (e.g., a more complex or larger neural network, a species with a larger brain) leads to more of it.

2. Whatever intelligence is, the more of it one has, the more one can impact one's environment.

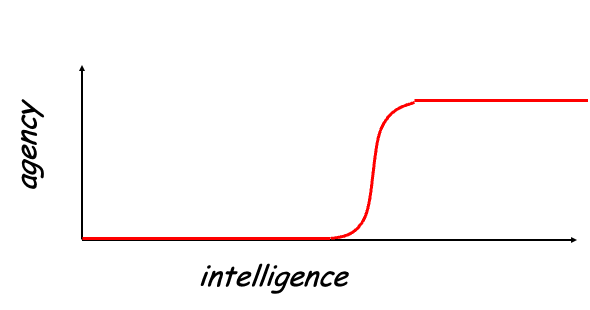

1 and 2 together can already lead to growing concerns now that we are building artificial systems that every year are more powerful than the last. Yudkowsky presents potential progress on intelligence with something like the following chart (taken from Muehlhauser):

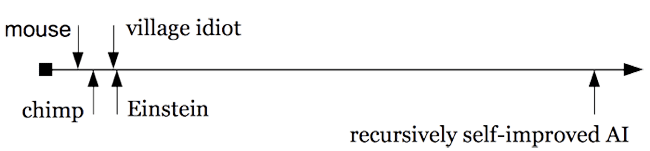

Given that recent progress on AI was achieved by scaling ever larger amounts of computation and data, we might expect a cartoon that looks more like the following:

Whether the first or the second cartoon is more accurate, the idea of constructing intelligence that surpasses ours to an increasing degree and on a growing number of dimensions is understandably unsettling to many people (especially given that none of the other species of the genus Homo in the chart above survived). The point of this post is not that we should not worry about this. Instead, I suggest a different metaphor for how we could think of future powerful models.

Whose intelligence is it?

In our own species’ evolution, as we have become more intelligent, we have become more able to act as agents that do not follow pre-ordained goals but rather choose our own. So we might imagine that there is some monotone “agency vs. intelligence” curve along the following lines:

(Once again, don’t take the cartoon too seriously; whether it is a step function, sigmoid-like, or some other monotone curve is debatable and also depends on how one defines “agency” and “intelligence.”)

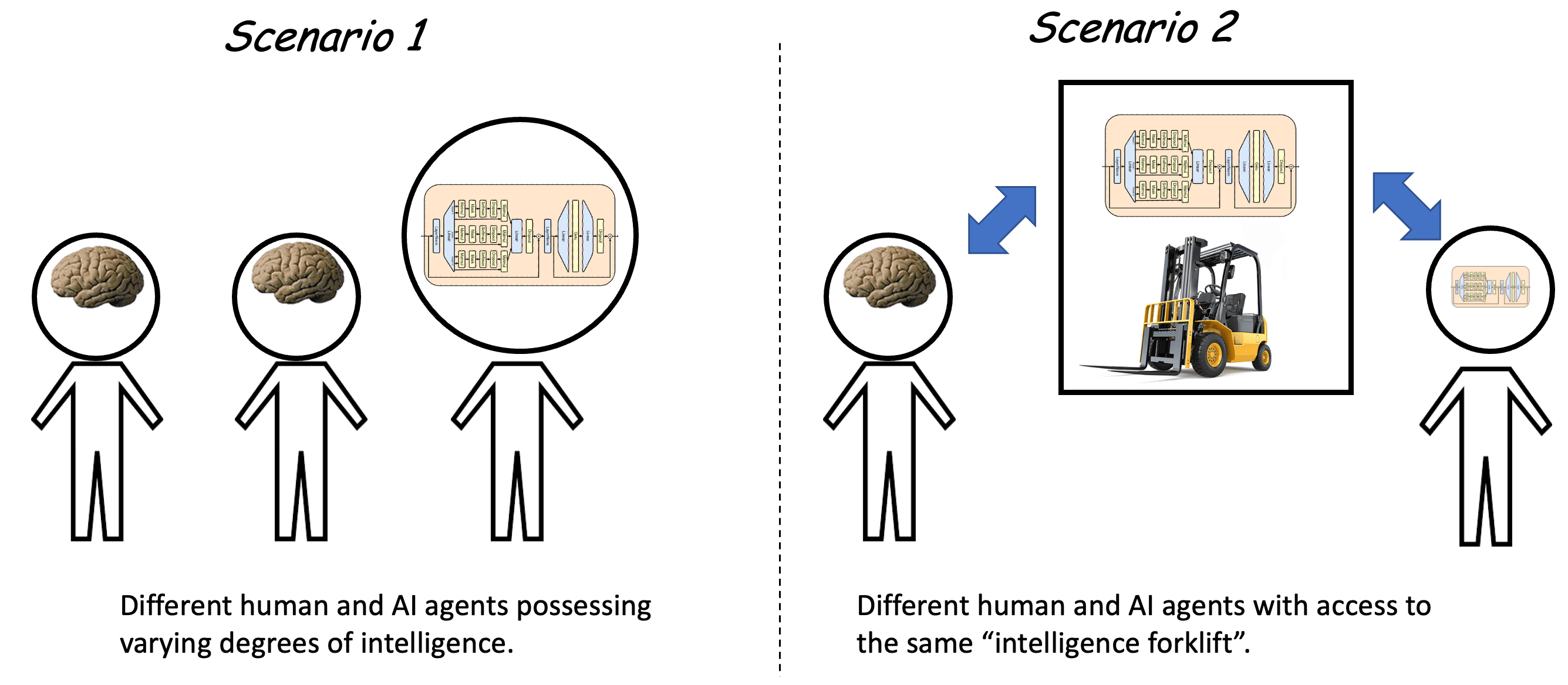

But perhaps intelligence does not have to go hand-in-hand with agency. Consider the property of physical strength. Like intelligence, this is a capability that an individual can use to shape their environment. I am (much) weaker than Olga Liashchuk, who can lift a 300 kg yoke and walk 24 meters with it in under 20 seconds. However, if I were to drive a forklift, the combination of me and the forklift would be stronger than her. Thus, if we measure strength in functional terms (what we can do with it) rather than through artificial competitions, it makes sense to consider strength a property of a system rather than of an individual. Strength can also be aggregated, combining several systems into a stronger one, or split up, using different parts of the capacity for different tasks.

Is there an “intelligence forklift”? It is hard to imagine a system that is more intelligent than humans but lacks agency. More accurately, up until recently, it would have been hard to imagine such a system. However, with generative pretrained transformers (GPTs), we have systems that have the potential to be just that. Even though recent GPTs undergo some adaptation and fine-tuning, the vast majority of the computational resources invested into GPTs is used to make them solve the task of finding a continuation of a sequence given its prefix.
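The pretraining task described above, predicting a continuation of a sequence from its prefix, can be shown at toy scale. The Python sketch below is a count-based bigram model, a deliberately simplified stand-in chosen only for illustration; it is not how GPTs work internally (they are large transformers trained by gradient descent), and all function names are hypothetical. What it shares with the real task is that the predictor has no goal of its own: it only maps a prefix to a likely continuation.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count, for each token, how often each other token follows it."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, prefix):
    """Return the most frequent continuation of the last token in `prefix`,
    or None if that token was never followed by anything in training."""
    followers = counts.get(prefix[-1])
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def continue_sequence(counts, prefix, n):
    """Greedily extend `prefix` by up to n tokens, one prediction at a time."""
    out = list(prefix)
    for _ in range(n):
        nxt = predict_next(counts, out)
        if nxt is None:
            break
        out.append(nxt)
    return out


# Tiny made-up training corpus.
corpus = "the forklift lifts the pallet and the forklift lifts the crate".split()
model = train_bigram(corpus)
print(continue_sequence(model, ["the"], 3))
```

Whoever chooses the prefix decides what the continuation is used for; the model itself only predicts.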

We can phrase many general problems as special cases of the task above. Indeed, with multimodal models, such tasks include essentially any problem that can be posed and answered in some digital representation. Hence, as GPT-n becomes better at this task, it can arguably become arbitrarily intelligent. (For intuition, think of providing GPT-n with a context containing all arXiv physics papers from the last year and asking it to predict the next one.) However, it is still not an agent but rather a generic problem-solver. In that sense, GPTs are best modeled as intelligence forklifts.

By “intelligence forklift” I mean that such a model can augment an agent with arbitrary intelligence in pursuit of whatever goals the agent seeks. The agent may be human, but it can also be an AI itself; for example, it might be obtained from GPT via fine-tuning, reinforcement learning, or prompt engineering. (So, while GPT is not an agent, it can “play one on TV” if asked to do so in its prompt.) Therefore, the above does not mean that we should not be concerned about an artificial highly intelligent agent. However, if the vast majority of an agent’s intelligence is derived from the non-agentic “forklift” (which can be used by many other agents as well), then a multipolar scenario of many agents with competing objectives is more likely than a unipolar one with a single dominant actor. The multipolar scenario might not be safer, but it is different.
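One way to make the forklift-plus-agent decomposition concrete is a toy model in which the "forklift" is a fixed predictor of outcomes and the agent is a thin wrapper that queries it in service of a goal. The Python sketch below assumes a one-dimensional world and greedy one-step action selection; all names are hypothetical, and it is not a description of how fine-tuning, RLHF, or prompting actually turn GPT into an agent. It only illustrates the structural point: the goal lives in the thin wrapper, and two agents with different goals can share the same predictor.

```python
from typing import Callable, List


def predict_outcome(state: int, action: int) -> int:
    """Hypothetical stand-in for the non-agentic 'forklift': it only answers
    'what happens if `action` is taken in `state`?' and has no goal."""
    return state + action


def make_agent(goal: int, predictor: Callable[[int, int], int],
               actions: List[int]) -> Callable[[int], int]:
    """Thin 'adapter': turns a predictor into a goal-seeking policy by picking
    the action whose predicted outcome lands closest to `goal`."""
    def policy(state: int) -> int:
        return min(actions, key=lambda a: abs(predictor(state, a) - goal))
    return policy


def run(policy: Callable[[int], int], state: int, steps: int) -> int:
    # Simplifying assumption: the real environment behaves exactly
    # as the predictor says it will.
    for _ in range(steps):
        state = predict_outcome(state, policy(state))
    return state


ACTIONS = [-1, 0, 1]
# Two agents with different (competing) goals built on the same predictor.
agent_a = make_agent(goal=5, predictor=predict_outcome, actions=ACTIONS)
agent_b = make_agent(goal=-3, predictor=predict_outcome, actions=ACTIONS)
print(run(agent_a, 0, 10), run(agent_b, 0, 10))
```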

27 comments


comment by Jalex Stark (jalex-stark-1) · 2023-05-20T01:58:07.272Z

I think part of your point, translated to local language, is "GPTs are Tool AIs, and Tool AI doesn't necessarily become agentic."

↑ comment by boazbarak · 2023-05-20T03:07:14.228Z

Thank you! You’re right. Another point is that intelligence and agency are independent, and a tool AI can be (much) more intelligent than an agentic one.

↑ comment by the gears to ascension (lahwran) · 2023-05-20T03:19:18.795Z

well, that's certainly a state of the world that things can be in.

temporarily.

↑ comment by Ilio · 2023-05-20T19:17:52.325Z

To anyone who disagrees with that, I’d be curious to see where you place social insects and LLMs on the intelligence vs. agency graph. 🤔

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-05-21T04:39:55.369Z

Depends on what you mean by "independent." I didn't disagree or agree with the statement because I'm not sure how to interpret it. If it's "probabilistically independent" or "uncorrelated" or something like that, it's false; if it's "logically independent" i.e. "there's nothing logically contradictory about something which has lots of one property but little of the other" then it's true.

↑ comment by Ilio · 2023-05-21T11:08:41.265Z

I assumed it was the second, but the first is even more interesting! Using a naive count, social insects will largely dominate the distribution, in which case intelligence will indeed be (negatively!) correlated with agency. But that’s probably not your reasoning, right? How do you construct/see the distribution of intelligent entities so that you see a positive correlation?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-05-21T16:33:07.946Z

I don't really care about what's going on at very low levels of intelligence (insects) and I also don't care about population sizes, I care about plausibility/realism/difficulty-of-construction/stufflikethat. (If I cared about population sizes, I'd say the distribution was dominated by rocks, which have 0 agency and 0 intelligence, and then all creatures (which-have-some-agency-and-some-intelligence) form a cluster up and to the right of rocks, and thus the correlation is positive.) But out of curiosity what are you thinking there -- are you thinking that the smarter insects are less agentic, the more agentic insects are smarter?

Intelligence (which I'm guessing we are thinking of as world-representation / world-understanding / prediction-engine / etc.?) and Agency (goal-orientedness / coherence / ability-to-take-long-sequences-of-action-that-P2B) are different things but they are closely related:

As a practical matter, the main ways of achieving either of them involve procedures that get you both. (E.g., training a neural net to accomplish diverse difficult tasks is training a neural net to be highly agentic, but it's going to end up developing some pretty good world representations in the process.) Training a neural net to predict stuff (e.g., LLMs) does indeed mostly just give them intelligence and not agency, though in the limit of infinite training of this sort (especially if the stuff being predicted was action-sequences of previous versions of itself) it would get you agency anyway. It also gets you a system which can very easily be turned into an agent (see: AutoGPT), and there is competitive pressure to do so.

Related readings which I generally recommend to anyone interested in this topic (h/t Ben Pace for the list):

Gwern's analysis of why Tool AIs want to become Agent AIs. It contains references to many other works. (It also has a positive review from Eliezer.)

Eric Drexler's incredibly long report on Comprehensive AI Services as General Intelligence. Related: Rohin Shah's summary, and Richard Ngo's comment.

The Goals and Utility Functions chapter of Rohin Shah's Value Learning sequence.

Eliezer's very well-written post on Arbital addressing the question "Why Expected Utility?" [Added: crossposted to LessWrong here]

The LessWrong wiki article on Tool AI links to several posts on this topic.

... and my very own Agency: What it is and why it matters - LessWrong

↑ comment by Ilio · 2023-05-22T12:02:05.949Z

I don't really care about what's going on at very low levels of intelligence (insects)

Well, yes, removing (low X, high Y) points is one way to make a correlation coefficient positive, but then you shouldn’t trust any conclusion based on that (or, more precisely, you shouldn’t update based on that). Idem if your data form clusters.
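Both of these statistical warnings can be checked on toy numbers. The Python sketch below uses invented (intelligence, agency) pairs, not measurements of any real organisms, and a hand-written `pearson` helper. In case (a), dropping the low-intelligence, high-agency points flips the sign of the correlation. In case (b), a cluster of "rocks" at (0, 0) is pooled with a cluster of "creatures" whose internal trend is negative, and the pooled coefficient comes out strongly positive (about 0.93).

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# (a) Dropping (low X, high Y) points. Made-up (intelligence, agency) pairs.
xs = [1, 2, 3, 7, 8, 9]
ys = [9, 8, 9, 2, 3, 4]
r_all = pearson(xs, ys)
kept = [(x, y) for x, y in zip(xs, ys) if x >= 5]  # discard the low-X points
r_kept = pearson([x for x, _ in kept], [y for _, y in kept])

# (b) Two clusters: 'rocks' at (0, 0) plus 'creatures' with a negative trend.
rocks = [(0, 0)] * 3
creatures = [(5, 7), (6, 6), (7, 5)]
r_within = pearson([x for x, _ in creatures], [y for _, y in creatures])
pooled = rocks + creatures
r_pooled = pearson([x for x, _ in pooled], [y for _, y in pooled])

print(f"(a) all points: {r_all:.2f}, after dropping low-X points: {r_kept:.2f}")
print(f"(b) within creatures: {r_within:.2f}, pooled with rocks: {r_pooled:.2f}")
```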

... and my very own Agency: What it is and why it matters - LessWrong

Thanks, very helpful! Yes, we agree that, once we define agency as basically the ability to represent and act on plans, and each level in agency as one type of what I’d call cognitive strategies, then the more intelligence the more agency.

But is that definition useful enough? I’ll have to read the other links to be fair, but what are your three best arguments for the universality of this definition? Or at least, why do you think it should apply to computer programs and human-made robots?

But out of curiosity what are you thinking there -- are you thinking that the smarter insects are less agentic, the more agentic insects are smarter?

Well, the good thing is we don’t need to think that much; we can just read the literature. The behaviors of social insects that appear the most agentic (by man-on-the-street intuition rather than your specialized definition) are collective behaviors: they can go to war, enslave their victims, raise cattle, sail (arguably), decide to emigrate en masse, choose the best among candidate locations, etc. The following paper explains quite well that this relies not on individual intelligence but on coordination among individuals: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5226334/

Now, if you decide to exclude social insects from your definition of agency or intelligence, then I think you’re also at risk of missing what I see as one of the main present dangers: collective stupidity emerging from our use of social networks. Imagine if covid had turned out to be as dangerous as ebola. We wouldn’t have to care about our civilisation being too powerful for its own good, at least for a while.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-05-23T04:05:52.456Z

What's your response to my "If I did..." point? If we include all the data points, the correlation between intelligence and agency is clearly positive, because rocks have 0 intelligence and 0 agency.

If you agree that agency as I've defined it in that sequence is closely and positively related to intelligence, then maybe we don't have anything else to disagree about. I would then ask of you and Boaz what other notion of agency you have in mind, and encourage you to specify it to avoid confusion, and then maybe that's all I'd say since maybe we'd be in agreement.

I am not excluding social insects from my definition of agency or intelligence. I think ants are quite agentic and also quite intelligent.

I do disagree that collective stupidity from our use of social networks is our main present danger; I think it's sorta a meta-danger, in that if we could solve it maybe we'd solve a bunch of our other problems too, but it's only dangerous insofar as it leads to those other problems, and some of those other problems are really pressing... analogy: "Our biggest problem is suboptimal laws. If only our laws and regulations were optimal, all our other problems such as AGI risk would go away." This is true, but... yeah it seems less useful to focus on that problem, and more useful to focus on more first-order problems and how our laws can be changed to address them.

↑ comment by Ilio · 2023-05-23T21:55:48.292Z

What's your response to my "If I did..." point?

Sorry, that was the « Idem if your data forms clusters ». In other words, I agree that a cluster at (0,0) and a cluster at (+,+) will produce a positive correlation coefficient, and I warn you against updating based on that (it’s a statistical mistake).

If you agree that agency as I've defined it in that sequence is closely and positively related to intelligence, then maybe we don't have anything else to disagree about.

I respectfully disagree with the idea that most disagreements come from drawing different conclusions from the same priors. Most disagreements I have with anyone on LessWrong (and anywhere, really) are about which priors and prior structures are best for which purpose. In other words, I fully agree that

I would then ask of you and Boaz what other notion of agency you have in mind, and encourage you to specify it to avoid confusion, and then maybe that's all I'd say since maybe we'd be in agreement.

Speaking for myself only, my notion of agency is basically « anything that behaves like an error-correcting code ». This includes conscious beings that want to promote their fate, but also living things that want to live, and even two thermostats fighting over who’s in charge.
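The thermostat example can be made concrete with a toy model. The Python sketch below assumes each thermostat is a simple proportional controller acting on the same room (a hypothetical setup chosen for illustration, not a formalization of the agency notion above). Each one keeps correcting the deviation from its own setpoint, the room settles at the gain-weighted mean of the setpoints, so the "stronger" thermostat wins more of the argument, and a disturbance is corrected away in the same manner.

```python
def simulate(temp, setpoints, gains, steps):
    """Proportional thermostats acting on one room.

    At every step each thermostat pushes the temperature toward its own
    setpoint by gain * error, and the pushes add up. With K = sum(gains),
    the distance to equilibrium shrinks by a factor |1 - K| per step, so
    the loop converges whenever 0 < K < 2.
    """
    for _ in range(steps):
        temp += sum(k * (s - temp) for s, k in zip(setpoints, gains))
    return temp


def equilibrium(setpoints, gains):
    """Temperature where the corrections cancel: the gain-weighted mean."""
    return sum(k * s for s, k in zip(setpoints, gains)) / sum(gains)


setpoints = [18.0, 22.0]  # hypothetical: one thermostat wants 18, the other 22
for gains in ([0.1, 0.1], [0.15, 0.05]):  # equal strength, then unequal
    settled = simulate(10.0, setpoints, gains, steps=200)
    # Kick the room 10 degrees off and let both controllers correct it.
    recovered = simulate(settled + 10.0, setpoints, gains, steps=200)
    print(gains, round(settled, 3), round(recovered, 3),
          round(equilibrium(setpoints, gains), 3))
```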

I do disagree that collective stupidity from our use of social networks is our main present danger; I think it's sorta a meta-danger, in that if we could solve it maybe we'd solve a bunch of our other problems too, but it's only dangerous insofar as it leads to those other problems, and some of those other problems are really pressing...

That and the analogy are very good points, thank you.

↑ comment by boazbarak · 2023-05-21T19:34:43.033Z

I discussed Gwern's article in another comment. My point (which also applies to Gwern's essay on GPT3 and scaling hypothesis) is the following:

- I don't dispute that you can build agent AIs, and that they can be useful.

- I don't claim that it is possible to get the same economic benefits by restricting to tool AIs. Indeed, in my previous post with Edelman, we explicitly said that we do consider AIs that are agentic in the sense that they can take action, including self-driving, writing code, executing trades, etc.

- I don't dispute that one way to build those is to take a next-token predictor such as pretrained GPT3, and then use fine-tuning, RLHF, prompt engineering or other methods to turn it into an agent AI. (Indeed, I explicitly say so in the current post.)

My claim is that it is a useful abstraction to (1) separate intelligence from agency, and (2) view intelligence in AI as a monotone function of the computational resources (FLOPs, data, model size, etc.) invested into building the model.

Now, if you want to take 3.6 trillion gradient steps in a model, then you simply cannot do it by having it take actions and wait to get some reward. So I do claim that if we buy the scaling hypothesis that intelligence scales with compute, the bulk of the intelligence of models such as GPT-n, PaLM-n, etc. comes from the non-agentic next-token predictor.

So, I believe it is useful and more accurate to think of (for example) a stock trading agent that is built on top of GPT-4 as consisting of an "intelligence forklift" which accounts for 99.9% of the computational resources, plus various layers of adaptations, including supervised fine-tuning, RL from human feedback, and prompt engineering, to obtain the agent.

The above perspective does not mean that the problem of AI safety or alignment is solved. But I do think it is useful to think of intelligence as belonging to a system rather than an individual agent, and (as discussed briefly above) that considering it in this way changes somewhat the landscape of both problems and solutions.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-05-21T21:14:07.293Z

Ah. Well, if that's what you are saying then you are preaching to the choir. :) See e.g. the "pretrained LLMs are simulators / predictors / oracles" discourse on LW.

I feel like there is probably still some disagreement between us though. For example I think the "bulk of the intelligence comes from non-agentic next-token predictor" claim you make probably is either less interesting or less true than you think it is, depending on what kinds of conclusions you think follow from it. If you are interested in discussing more sometime I'd be happy to have a video call!

↑ comment by Jalex Stark (jalex-stark-1) · 2023-05-20T18:39:38.566Z

https://gwern.net/tool-ai

↑ comment by boazbarak · 2023-05-20T21:25:55.477Z

I was asked about this on Twitter. Gwern’s essay deserves a fuller response than a comment, but I’m not arguing for the position Gwern argues against.

I don’t argue that agent AIs are not useful or won’t be built. I am not arguing that humans must always be in the loop.

My argument is that tool vs. agent AI is not so much about competition as about specialization. Agent AIs have their uses, but if we consider the “deep learning equation” of turning FLOPs into intelligence, then it’s hard to beat training for predictions on static data. So I do think that while RL can be used for AI agents, the intelligence “heavy lifting” (pun intended) would be done by non-agentic but very large static tool models.

Even “hybrid models” like GPT-3.5 can best be understood as consisting of an “intelligence forklift” (the pretrained next-token predictor, on which 99.9% of the FLOPs were spent) and an additional light “adapter” that turns this forklift into a useful chatbot, etc.

comment by Ilio · 2023-05-20T19:14:02.424Z

In our own species’ evolution, as we have become more intelligent, we have become more able to act as agents that do not follow pre-ordained goals but rather choose our own.

I guess this actually reinforces your main point, but this sentence sounds very wrong. For example, it’s much easier to conduct an experiment with humans than with monkeys. Even when properly trained and under ongoing water restriction, monkeys frequently say fuck you, in fewer words, but they mean it, and you know they mean it, and they know that you know, and that’s it for today. With humans, you spend 30 minutes explaining to your subjects that they can withdraw at any time and keep the money, and then they work pretty hard anyway, just because you asked them to! So, is there any line of evidence or thought that led you to the opposite idea, or were you just starting from a misconception so as to fight it?

(Also, did you read Sapiens? Harari made some good points defending the idea that our success came from large-scale coordination through shared myths, aka one needs to be intelligent enough before one can believe that vengeful gods don’t want you to have sex with someone, or that some piece of paper can be worth something more than a piece of paper.)

↑ comment by boazbarak · 2023-05-20T21:04:28.697Z

That’s pretty interesting about monkeys! I am not sure I 100% buy the myths theory, but it’s certainly the case that developing language to talk about events that are not immediate in space or time is essential to coordinating a large-scale society.

comment by M. Y. Zuo · 2023-05-21T21:23:47.936Z

- Whatever intelligence is, more computational power or cognitive capacity (e.g., a more complex or larger neural network, a species with a larger brain) leads to more of it.

Whales, with their much larger brains, trivially disprove this. There may be a loose correlation, but it would have to be quite a small number.

↑ comment by [deleted] · 2023-05-22T12:18:44.735Z

It's still typically acknowledged that the evolution of intelligence from more primitive apes to humans was mostly an increase of computational power (proportionally bigger brains) with little innovation on structures. So, there seems to be merit to the idea.

Larger animals, all things being equal, need more neurons to perform the same basic functions than we do because of their larger bodies.

↑ comment by boazbarak · 2023-05-21T22:09:11.260Z

Within a particular genus or architecture, more neurons would be higher intelligence. Comparing between completely different neural network types is indeed problematic.

↑ comment by Erich_Grunewald · 2023-05-22T17:41:36.288Z

Within a particular genus or architecture, more neurons would be higher intelligence.

I'm not sure that's necessarily true? Though there's probably a correlation. See e.g. this post:

[T]he raw number of neurons an organism possesses does not tell the full story about information processing capacity. That’s because the number of computations that can be performed over a given amount of time in a brain also depends upon many other factors, such as (1) the number of connections between neurons, (2) the distance between neurons (with shorter distances allowing faster communication), (3) the conduction velocity of neurons, and (4) the refractory period which indicates how much time must elapse before a given neuron can fire again. In some ways, these additional factors can actually favor smaller brains (Chitka 2009).

↑ comment by boazbarak · 2023-05-24T10:37:11.443Z

Yes, the point is that once you fix architecture and genus (e.g., connections, etc.), more neurons/synapses lead to more capabilities.

↑ comment by Erich_Grunewald · 2023-05-24T16:18:13.116Z

I see, that makes sense. I agree that holding all else constant more neurons implies higher intelligence.

↑ comment by M. Y. Zuo · 2023-05-21T23:54:58.631Z

I don't think whale neurons or the way they are connected are that different, unless you know of some research?

↑ comment by Ilio · 2023-05-22T12:30:45.415Z

Yes: their sleep differs, for obvious reasons, and messing with REM sleep could well be why they need more neurons. Specifically, we know that the echidna (a terrestrial species that lacks REM sleep) has many more neurons than it should given its body mass (and, arguably, behavior), and one hypothesis is that there’s a causal link, e.g., REM sleep could be a means to make neuron use more efficient.

↑ comment by green_leaf · 2023-05-22T12:15:12.940Z

You need to correct for the size of the organism (a lot of the brain is necessary to control the body).
