Forget Everything (Statistical Mechanics Part 1)

post by J Bostock (Jemist) · 2024-04-22T13:33:35.446Z · LW · GW · 7 comments

Contents

Forget as much as possible, then find a way to forget some more.

Particle(s) in a Box

The Piston

Maths of the Piston

Conclusions

You can only forget, never remember.

7 comments

EDIT: I somehow missed that John Wentworth and David Lorell have written one post on this same topic here [LW · GW]. I will see where this goes from here! This sequence will continue!

Introduction to a sequence on the statistical thermodynamics of some things and maybe eventually everything. This will make more sense if you have a basic grasp on quantum mechanics, but if you're willing to accept "energy comes in discrete units" as a premise then you should be mostly fine.

The title of this post has a double meaning:

- Forget the thermodynamics you've learnt before, because statistical mechanics starts from information theory.

- The main principle of doing things with statistical mechanics can be summed up as follows:

Forget as much as possible, then find a way to forget some more.

Particle(s) in a Box

All of practical thermodynamics (chemistry, engines, etc.) relies on the same procedure, although you will rarely see it written like this:

- Take systems which we know something about

- Allow them to interact in a controlled way

- Forget as much as possible

- If we have set up our systems correctly, the information that is lost will allow us to learn some information somewhere else.

For example, consider a particle in a box.

What does it mean to "forget everything"? One way is forgetting where the particle is, so our knowledge of the particle's position could be represented by a uniform distribution over the interior of the box.

Now imagine we connect this box to another box:

If we forget everything about the particle now, we should also forget which box it is in!

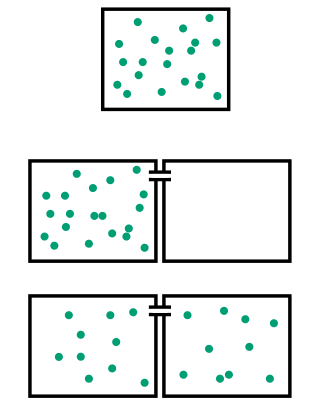

If we instead have a lot of particles in our first box, we might describe it as a box full of gas. If we connect this to another box and forget where the particles are, we would expect to find half in the first box and half in the second box. This means we can explain why gases expand to fill space without reference to anything except information theory.
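The "half in each box" expectation can be checked with a quick simulation. This is only a sketch: it assumes the two boxes have equal volume, so that a fully forgotten particle is equally likely to be in either one, and the function name `fraction_in_first_box` is made up for illustration.

```python
import random


def fraction_in_first_box(n_particles, seed=0):
    """Drop each particle into box 1 or box 2 with equal probability.

    Assumes two boxes of equal volume (an assumption of this sketch).
    Returns the fraction of particles that landed in box 1.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    in_first = sum(1 for _ in range(n_particles) if rng.random() < 0.5)
    return in_first / n_particles


# The fraction settles towards one half as the particle count grows.
for n in (10, 1_000, 100_000):
    print(n, fraction_in_first_box(n))
```

With only a handful of particles the split can be lopsided; with many particles the fluctuations around one half shrink roughly like one over the square root of the particle count.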

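A new question might be: how much have we forgotten? Our knowledge of each gas particle has gone from the following distribution over boxes 1 and 2:

$$P(\text{box 1}) = 1, \quad P(\text{box 2}) = 0$$

to the distribution

$$P(\text{box 1}) = \tfrac{1}{2}, \quad P(\text{box 2}) = \tfrac{1}{2}$$

which is the loss of 1 bit of information per particle. Now let's put that information to work.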
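The 1 bit figure can be reproduced by computing the Shannon entropy of the two distributions. A minimal sketch, assuming the "before" state is certainty that the particle is in box 1 and the "after" state is an even split between two equal boxes; `entropy_bits` is a helper name invented here.

```python
from math import log2


def entropy_bits(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    # Outcomes with zero probability contribute nothing to the sum.
    return -sum(p * log2(p) for p in probs if p > 0)


before = [1.0, 0.0]  # assumed: we know the particle is in box 1
after = [0.5, 0.5]   # assumed: either box is equally likely

bits_forgotten = entropy_bits(after) - entropy_bits(before)
print(bits_forgotten)
```

Because each particle is forgotten independently, the total for a gas is just this per-particle figure multiplied by the number of particles.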

The Piston

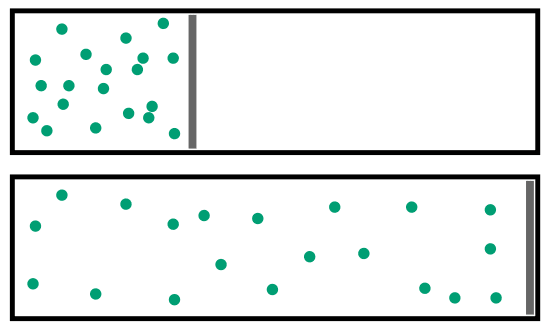

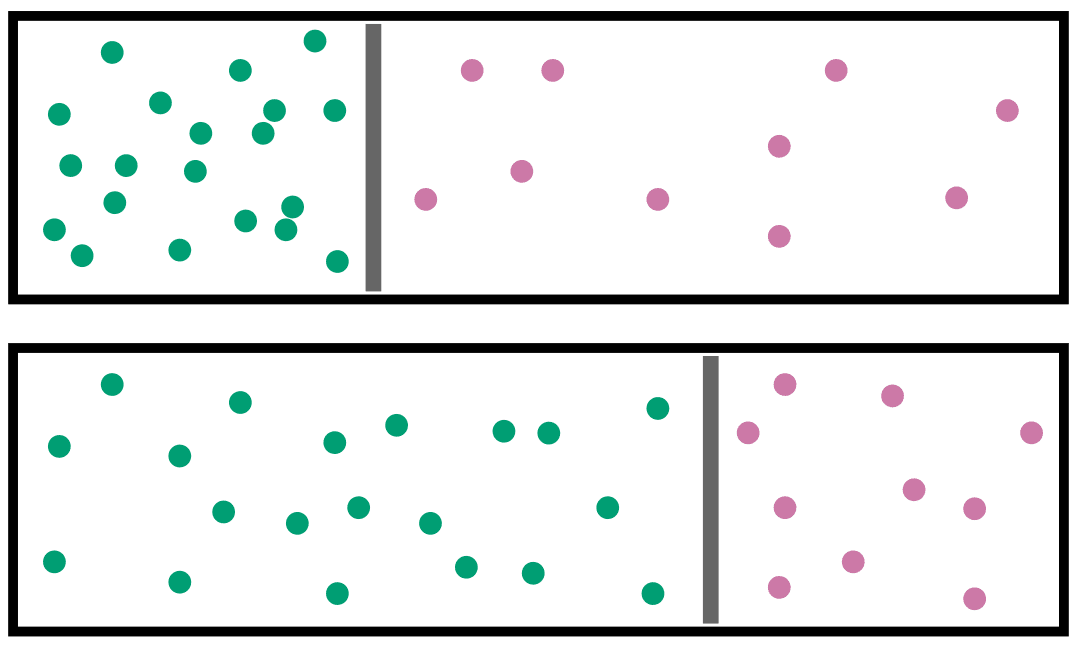

Imagine a box with a movable partition. The partition restricts particles to one side of the box. If the partition moves to the right, then the particles can access a larger portion of the box:

In this case, to forget as much as possible about the particles means to assume they are in the largest possible space, which involves the partition being all the way over to the right. Of course there is the matter of forgetting where the partition is, but we can safely ignore this as long as the number of particles is large enough.

What if we have a small number of particles on the right side of the partition?

We might expect the partition to move some, but not all, of the way over, when we forget as much as possible. Since the region in which the pink particles can live has decreased, we have gained knowledge about their position. By coupling forgetting and learning, anything is possible. The question is, how much knowledge have we gained?

Maths of the Piston

Let the walls of the box be at coordinates 0 and 1, and let $x$ be the horizontal coordinate of the piston. The position of each green particle can be expressed as a uniform distribution over $(0, x)$, which has entropy $\log_2 x$, and likewise each pink particle's position is uniform over $(x, 1)$, giving entropy $\log_2(1 - x)$.

If we have $n_g$ green particles and $n_p$ pink particles, the total entropy becomes $n_g \log_2 x + n_p \log_2(1 - x)$, which has a maximum at $x = \frac{n_g}{n_g + n_p}$. This means that the total volume occupied by each population of particles is proportional to the number of particles.
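The location of the extremum can be checked by differentiating. A short derivation, writing $x$ for the piston position and $n_g$, $n_p$ for the numbers of green and pink particles (symbol names chosen here for illustration), with entropy measured in bits:

$$
\begin{aligned}
S(x) &= n_g \log_2 x + n_p \log_2(1 - x) \\
\frac{dS}{dx} &= \frac{1}{\ln 2}\left(\frac{n_g}{x} - \frac{n_p}{1 - x}\right) = 0
\;\Longrightarrow\; x^* = \frac{n_g}{n_g + n_p} \\
\frac{d^2 S}{dx^2} &= -\frac{1}{\ln 2}\left(\frac{n_g}{x^2} + \frac{n_p}{(1 - x)^2}\right) < 0
\end{aligned}
$$

The second derivative is negative everywhere on $(0, 1)$, so the stationary point is where the total entropy is largest, and there $x^* : (1 - x^*) = n_g : n_p$.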

If we wanted to ditch this information-based way of thinking about things, we could invent some construct which is proportional to the particle density, $n_g / x$ for the green particles and $n_p / (1 - x)$ for the pink particles, and demand they be equal. Since the region with the higher value of this construct presses harder on the partition, and pushes it away, we might call this construct "pressure".

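If we start with the partition at some position $x_0$ to the left of equilibrium and let it move freely, we will end up with $x^* = \frac{n_g}{n_g + n_p}$, where $n_g$ and $n_p$ are the numbers of green and pink particles. We will have "forgotten" $n_g \log_2 \frac{x^*}{x_0}$ bits of information about the green particles and learned $n_p \log_2 \frac{1 - x_0}{1 - x^*}$ bits of information about the pink particles. In total this is a net loss of $n_g \log_2 \frac{x^*}{x_0} - n_p \log_2 \frac{1 - x_0}{1 - x^*}$ bits of information, which are lost to the void.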
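To make the bookkeeping concrete, here is a sketch with made-up numbers: 3 green particles, 1 pink particle, and a partition that starts at the midpoint of the box. None of these values come from the post, and `piston_bits` is a hypothetical helper; it simply applies the uniform-distribution entropies from this section.

```python
from math import log2


def piston_bits(n_green, n_pink, x_start):
    """Information accounting when the partition relaxes from x_start.

    The box spans [0, 1]; green particles live on (0, x), pink on (x, 1).
    Returns (x_eq, bits forgotten about green, bits learned about pink,
    net bits lost).
    """
    # Equilibrium: each population's volume is proportional to its count.
    x_eq = n_green / (n_green + n_pink)
    # Green region grows from x_start to x_eq, so we know less about green.
    forgotten = n_green * log2(x_eq / x_start)
    # Pink region shrinks from 1 - x_start to 1 - x_eq, so we know more.
    learned = n_pink * log2((1 - x_start) / (1 - x_eq))
    return x_eq, forgotten, learned, forgotten - learned


# Hypothetical example values, chosen only for illustration.
x_eq, forgotten, learned, net = piston_bits(n_green=3, n_pink=1, x_start=0.5)
print(f"equilibrium x = {x_eq}")          # 0.75
print(f"forgotten = {forgotten:.3f} bits")  # about 1.755
print(f"learned   = {learned:.3f} bits")    # 1.000
print(f"net loss  = {net:.3f} bits")        # about 0.755
```

If the partition instead starts to the right of equilibrium, the roles swap (the two middle numbers come out negative), but the net loss stays non-negative because the equilibrium position is where the total entropy is largest.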

The task of building a good engine is the task of minimizing the amount of information we lose.

Conclusions

We can, rather naturally and intuitively, reframe the behaviour of gases in a piston in terms of information first and pressure later. This will be a major theme of this sequence. Quantities like pressure and temperature naturally arise as a consequence of the ultimate rule of statistical mechanics:

You can only forget, never remember.

7 comments

comment by johnswentworth · 2024-04-22T16:37:20.752Z · LW(p) · GW(p)

> I somehow missed that John Wentworth and David Lorell are also in the middle of a sequence on this same topic here.

Yeah, uh... hopefully nobody's holding their breath waiting for the rest of that sequence. That was the original motivator, but we only wrote the one post and don't have any more in development yet.

Point is: please do write a good stat mech sequence, David and I are not really "on that ball" at the moment.

comment by Alex_Altair · 2024-04-25T03:42:15.042Z · LW(p) · GW(p)

> This will make more sense if you have a basic grasp on quantum mechanics, but if you're willing to accept "energy comes in discrete units" as a premise then you should be mostly fine.

My current understanding is that QM is not-at-all needed to make sense of stat mech. Instead, the thing where energy is equally likely to be in any of the degrees of freedom just comes from using a measure over your phase space such that the dynamical law of your system preserves that measure!

↑ comment by AnthonyC · 2025-03-10T06:23:52.783Z · LW(p) · GW(p)

For learning the foundational principles of stat mech, yes, you can start that way. For example, you can certainly come up with definitions of pressure and temperature for an ideal gas or calculate the heat capacity of an Einstein solid without any quantum considerations. But once you start asking questions about real systems, the quantization is hugely important for e.g. understanding the form of the partition function.

comment by Gunnar_Zarncke · 2024-04-22T14:33:05.777Z · LW(p) · GW(p)

Interesting to see this just weeks after Generalized Stat Mech: The Boltzmann Approach [LW · GW]

↑ comment by J Bostock (Jemist) · 2024-04-22T15:11:45.212Z · LW(p) · GW(p)

I uhh, didn't see that. Odd coincidence! I've added a link and will consider what added value I can bring from my perspective.

↑ comment by Gunnar_Zarncke · 2024-04-22T17:27:55.450Z · LW(p) · GW(p)

I think it is often worthwhile for multiple presentations of the same subject to exist. One may be more accessible for some of the audience.

comment by Gurkenglas · 2024-05-13T15:34:39.960Z · LW(p) · GW(p)

Recall that every vector space is the finitely supported functions from some set to ℝ, and every Hilbert space is the square-integrable functions from some measure space to ℝ.

I'm guessing that similarly, the physical theory that you're putting in terms of maximizing entropy lies in a large class of "Bostock" theories such that we could put each of them in terms of maximizing entropy, by warping the space with respect to which we're computing entropy. Do you have an idea of the operators and properties that define a Bostock theory?