Reflective Consequentialism

post by Adam Zerner (adamzerner) · 2022-11-18T23:56:52.756Z · 14 comments


Epistemic Status: My position makes sense to me and seems pretty plausible. However, I haven't thought too hard about it, nor have I put it to the test by discussing with others. Putting it to the test is what I'm trying to do here. I also don't have the best understanding of what virtue ethics (and/or deontology) actually propose, so I might be mischaracterizing them.

There has been some talk recently, triggered by the FTX saga, about virtue ethics being good and consequentialism being bad. Eliezer talked about this years ago in Ends Don't Justify Means (Among Humans), which was summed up in the following tweet:

> The rules say we must use consequentialism, but good people are deontologists, and virtue ethics is what actually works.

Maybe I am misunderstanding, but something about this has always felt very wrong to me.

In the post, Eliezer starts by confirming that the rules do in fact say we must use consequentialism. Then he talks about how we are running on corrupted hardware, and so we can't necessarily trust our perception of what will produce the best consequences. For example, cheating to seize power might seem like it is what will produce the best consequences — the most utilons, let's say — but that seeming might just be your brain lying to you.

So far this makes total sense to me. It is well established that our hardware is corrupted. Think about the planning fallacy and how it really, truly feels like your math homework will only take you 20 minutes.

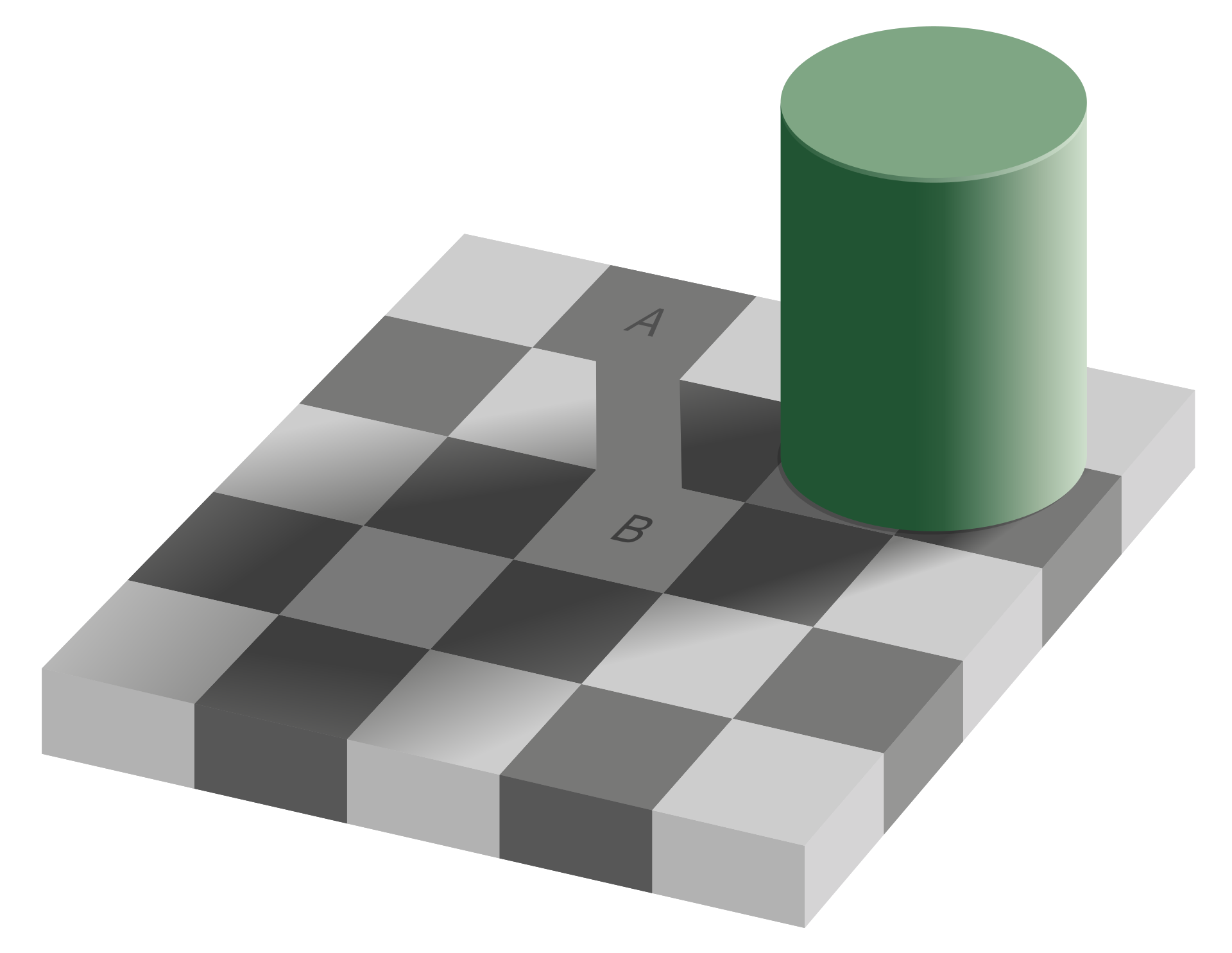

Or take the phenomenon of optical illusions. Squares A and B are the exact same shade of grey, but your brain tells you that they are quite different, and it really, truly feels that way to you.

But look: they are, in fact, the exact same shade of grey.


Again, corrupted hardware. Your brain lies to you. You can't always trust it.


And this is where reflectiveness comes in. From The Lens That Sees Its Flaws:

> If you can see this—if you can see that hope is shifting your first-order thoughts by too large a degree—if you can understand your mind as a mapping engine that has flaws—then you can apply a reflective correction. The brain is a flawed lens through which to see reality. This is true of both mouse brains and human brains. But a human brain is a flawed lens that can understand its own flaws—its systematic errors, its biases—and apply second-order corrections to them. This, in practice, makes the lens far more powerful. Not perfect, but far more powerful.

So far this is still all making sense to me. Our hardware is corrupted. But we're fortunate enough to be able to reflect on this and apply second-order corrections to it. It makes sense to apply such corrections.

But here is where I get confused, and perhaps diverge from the position that Eliezer and the large majority of the community take when they recommend virtue ethics. Virtue ethics, from what I understand, basically says to decide on rules ahead of time and stick to them. Rules that a good person would follow, like "don't cheat to seize power".

From Eliezer's post:

> But on a human level, the patch seems straightforward. Once you know about the warp, you create rules that describe the warped behavior and outlaw it. A rule that says, "For the good of the tribe, do not cheat to seize power even for the good of the tribe." Or "For the good of the tribe, do not murder even for the good of the tribe."

The idea is that we are running on corrupted hardware, so following such rules would produce better consequences than trying to perform consequentialist calculus in the moment. If you did try to perform consequentialist calculus in the moment, your corrupted hardware would significantly bias you towards, e.g., "normally it's bad to cheat to seize power... but in this particular scenario it seems like it is worth it".

That seems too extreme to me, though. Instead of deciding ahead of time that "don't cheat to seize power" is an absolute rule you must follow, why not just incorporate it into your thinking as a heuristic? That is, when presented with a situation where you can cheat to seize power, 1) perform your first-order consequentialist calculus, and then 2) adjust for the fact that you're biased towards thinking you should cheat, and for the fact that not cheating is known to be a good heuristic.

I guess the distinction between what I am proposing and what virtue ethics proposes comes down to timing. Virtue ethics says to decide on rules ahead of time. I say that you should decide on heuristics ahead of time, but in the moment you can look at the specifics of the situation and make a decision, after adjusting for your corrupted hardware and incorporating your heuristics.

Let's call my approach reflective consequentialism. Naive consequentialism would just take a first stab at the calculus and then go with the result. Reflective consequentialism would also take that first stab, but then it would:

1. Reflect on the fact that your hardware is corrupted

2. Make adjustments based on (1)

3. Go with the result

With that distinction made, I suppose the question is whether reflective consequentialism or virtue ethics produces better consequences. And I suppose that is an empirical question. Which means that we can hypothesize.

My hypothesis is that virtue ethics would produce better consequences for most people. I don't trust most people to be able to make adjustments in the moment. After all, there is strong evidence that they don't do a good job of adjusting for other biases, even after being taught about biases.

However, I also hypothesize that for sufficiently strong people, reflective consequentialism would perform better. Think of it like a video game where your character can level up. Maybe you need to be past level 75 out of 100 in order for reflective consequentialism to yield better results for you. The sanity waterline is low enough that most people are closer to something like level 13, but I'd guess that the majority of this community has leveled up high enough that reflective consequentialism would perform better.

To be clear, you can still lean very strongly away from trusting your first-order instincts with reflective consequentialism. You can say to yourself, "Yeah... I know it seems like I should cheat to seize power, but I am significantly biased towards thinking that, so I am going to adjust correspondingly far in the opposite direction, which brings me to a point where not cheating is the very clear winner." You just have to be a strong enough person to actually do that in the moment.

At the very least, I think that reflective consequentialism is something that we should aspire towards. If we accept that it yields better consequences amongst strong enough people, well, we should seek to become strong enough to be able to wield it as a tool. Tsuyoku Naritai.

Deciding that we are too weak to wield it isn't something that we should be proud of. It isn't something that we should be content with. Feeling proud and content in this context feels to me like an improper use of humility.

If the strength required to wield the tool of reflective consequentialism was immense, like the strength required to wield Thor's hammer, then I wouldn't hold this position. It's implausible that a human being would come anywhere close to that level of strength. In that case, it'd be wise to recognize this, and appropriate to shoot a disapproving glance at anyone who tries to wield the tool.

I don't think this is where we're at with reflective consequentialism though. I think the strength required to wield it is well within reach of someone who takes self-improvement moderately seriously.

14 comments


comment by Jan_Kulveit · 2022-11-19T09:14:44.968Z

My technical explanation for why not to use direct consequentialism is somewhat different: deontology and virtue ethics are effective theories. You are not an almost unbounded superintelligence => you can't rely on direct consequentialism.

Why does virtue ethics work? You are mostly a predictive processing (PP) system. Here is my guess at a simple PP story:

PP minimizes prediction error. If you take some unvirtuous action, such as stealing a little, you are basically prompting the PP engine to minimize the total error between the action taken, your self-model / wants model, and your world-model. By default, the result will be that your future self is marginally more delusional ("I assumed they wanted to give it to me anyway") and a bit more OK with stealing ("Everyone else is also stealing.").

The possible difference here is that, in my view, you are not some sort of 'somewhat corrupt consequentialist VNM planner' who, if strong enough, will correct the corruption with some smart metacognition. What's closer to reality is that the "you" who believes you are a somewhat corrupt consequentialist is, to a large extent, a hallucination of a system for which "means change the ends" and "means change what you believe" are equally valid.

> If the strength required to wield the tool of reflective consequentialism was immense, like the strength required to wield Thor's hammer, then I wouldn't hold this position.

The hammer gets heavier with the stakes of the decisions you are making. Also, the strength to hold it depends mostly not on how smart you are, but on how virtuous you are.

comment by Publius · 2022-11-20T22:02:05.670Z

> Virtue ethics, from what I understand, basically says to decide on rules ahead of time and stick to them.

This is a grave misunderstanding of virtue ethics, I would say. Your description would be more apt for deontological ethics. May I suggest the SEP (Stanford Encyclopedia of Philosophy) article on virtue ethics.

comment by zeshen · 2022-11-19T10:27:54.835Z

This post makes sense to me though it feels almost trivial. I'm puzzled by the backlash against consequentialism, it just feels like people are overreacting. Or maybe the 'backlash' isn't actually as strong as I'm reading it to be.

I'd think of virtue ethics as some sort of equilibrium that we as a society have landed in after all these years of being a species capable of thinking about ethics. It's not the best, but you'd need more than naive utilitarianism to beat it; you'd need something like what you describe as reflective consequentialism (this EA Forum post feels like common sense to me too). It seems like it all boils down to: be a consequentialist, as long as you 1) account for second-order and higher effects, and 2) account for bad calculation due to corrupted hardware.

↑ comment by Adam Zerner (adamzerner) · 2022-11-19T13:59:26.820Z

Yeah, I have very similar thoughts.

comment by Viliam · 2022-11-22T16:57:08.838Z

If we are too stupid, we are doomed anyway. If we become dramatically smarter than we are now, we will update all our moral theories anyway. On the level where we are now, following a few rules about not abusing power, which have a good outside-view support, seems like a good idea.

I agree with the part that if we become much smarter, it may be safe to do things that are dangerous for us now. What I doubt is the reliability of determining how much smarter we have actually become, if there is a strong temptation to scream: "yes, I am already much smarter, now shut up and let me take all those cookies!!!"

Given what I know about people, if we agree today that, hypothetically, in a distant posthuman future, it will be a good idea to take all the power you can and forcefully optimize the universe for the greater good... a few weeks later, after a few more articles elaborating on this topic, some people will start publicly declaring that they are already that smart (and if you are not, that only means that you are a loser, and should obey them).

comment by Jayson_Virissimo · 2022-11-19T04:37:27.584Z

> Virtue ethics says to decide on rules ahead of time.

This may be where our understandings of these ethical views diverge. I deny that virtue ethicists are typically in the position to decide on the rules (ahead of time or otherwise). If what counts as a virtue isn't strictly objective, then it is at least intersubjective, and is therefore not something that can be decided on by an individual (at least relatively). It is absurd to think to yourself "maybe good knives are dull" or "maybe good people are dishonest and cowardly", and when you do think such thoughts it is more readily apparent that you are up to no good. On the other hand, the sheer number of parameters the consequentialist can play with to get their utility calculation to come to the result they are (subconsciously) seeking supplies them with an enormous amount of ammunition for rationalization.

comment by Anon User (anon-user) · 2022-11-19T00:52:58.428Z

> Make adjustments based on (1)

You cannot trust the hardware not to skew this adjustment in all kinds of self-serving ways. The point is that the hardware will mess up any attempt to compute this on the object level; all you can do is compute at the meta-level (where the hardware corruption is less pronounced), come up with firm rules, and then just stick to them on the object level.

The specific object-level algorithms do not matter: the corrupted hardware starts with the answer it wants the computation to produce, and then backpropagates it to skew the computation towards the desired answer.

↑ comment by LVSN · 2022-11-19T03:41:10.999Z

What makes you, and so many others in this community, so sure that our hardware is so great and incorruptible when it is making these rules for our future self to follow? To me, that's completely backwards: won't our future self always be more familiar with the demands of the future, by virtue of actually being there, than our past selves will be?

↑ comment by Adam Zerner (adamzerner) · 2022-11-19T04:08:05.616Z

That's an interesting point/question. My thinking is that there's a tradeoff: if you make decisions in the moment you benefit from having that more up to date information, but you're also going to be more tempted to do the wrong thing. I suppose that OP and others believe that the latter side of the tradeoff has more weight.

↑ comment by LVSN · 2022-11-19T06:46:19.862Z

I don't think your future self has the disadvantage of being more likely to be tempted to do the wrong thing and I don't know where that assumption comes from.

I will concede that I think the meanness and suffering of others make children sad, whereas adults are more likely to rationalize it. That's why I am hugely skeptical when people tout maturity as a virtue. For the sake of capturing the benefits of childishness, it may be better to set rules early.

Yet idealistic children, such as I once was, would be tempted to redistribute wealth so that no one has to be homeless, even though for a child it may not feel good to steal someone's money, whether they acquired it through luck or through work. (Here's where I will get political for the rest of this post; your mileage may vary about my examples.) I still don't know the specifics of why not to redistribute wealth, but my heroes are economically literate, have managed to keep their idealism, and yet loudly disavow coercive redistribution; I assume they have learned that economic reality was not as morally simple as I would have expected it to be. In that case, it would seem that childish idealism could be misleading, but childish open-mindedness would be helpful.

(When you try to capture the benefits of childishness by writing down your deontology, it is very important to remember the benefit of open-mindedness. But a deontology with strict open-mindedness added to it is a set of guidelines, not rules; can it really be called a deontology at that point?)

Another example of people making better moral judgments as they learn more: prospective parents in 1995 would never have guessed the prominence that trans rights have gained in the 2010s, and would never have guessed that A) their own children would want to be trans, B) they would be very serious about it, and C) many respectable, middle-to-upper-class educated people would actually think it's deeply important for you to respect their identity. Many of those parents, observing other people becoming more open-minded and progressive in their thinking, would find themselves even more considerate of their child's autonomy. They would learn that the world was not as morally simple as expected. I think this is an incredibly noble outcome, even though conformity is rarely a good motivation.

↑ comment by Anon User (anon-user) · 2022-11-20T23:30:41.690Z

The availability of emotionally-charged information is exactly what causes the issue. It's easier to avoid temptations by setting rules for yourself ahead of time than by relying on your ability to resist them when they are right in front of you.

↑ comment by Adam Zerner (adamzerner) · 2022-11-19T01:01:31.661Z

That claim seems pretty strong to me. In general, biases are tough, but it is accepted that we have at least some ability to mitigate them. It sounds like your position is saying the opposite with respect to moral decision making, and that seems overly pessimistic to me.

↑ comment by Anon User (anon-user) · 2022-11-20T23:33:39.353Z

I am actually agreeing, but I am saying that the way we'd actually accomplish it is by relying on the meta-level as much as possible. E.g., by catching that the object-level conclusion cannot be reconciled with our meta-level principles, or noticing that it's incongruent in some way.