We might be dropping the ball on Autonomous Replication and Adaptation.

post by Charbel-Raphaël (charbel-raphael-segerie), Épiphanie Gédéon (joy_void_joy) · 2024-05-31T13:49:11.327Z · 9 comments

This is a question post.

Contents

- Can you explain your position quickly?

- What is ARA?

- Is ARA a point of no return?

- No return towards what?

- Do you really expect to die because of ARA AI?

- Got it. How long do we have?

- Why do you think we are dropping the ball on ARA?

- What can we do about it now?

- Answers: Richard Ngo, Carl Feynman, dr_s

Here is a short Q&A.

Can you explain your position quickly?

I think autonomous replication and adaptation in the wild is under-discussed as an AI threat model. This makes me sad because it is one of the main reasons I'm worried. I think one of the AI safety community's main proposals should be, first and foremost, a nonproliferation treaty. Without this treaty, I think we are screwed. The more I think about it, the more I believe we are approaching a point of no return. It seems to me that open source is a severe threat and that nobody is really on the ball. Until powerful AIs can self-replicate and adapt, AI development will be very positive overall and difficult to stop; but once AI is able to adapt and evolve autonomously, it is too late, because natural selection favors AIs over humans.

What is ARA?

Autonomous Replication and Adaptation. Let’s recap quickly. Today, generative AI functions as a tool: you ask a question and the tool answers. Question, answer. It's simple. However, we are heading towards a new era of AI, one with autonomous AI. Instead of asking a question, you give it a goal, and the AI performs a series of actions to achieve that goal, which is much more powerful. Frameworks like AutoGPT, or ChatGPT when it browses the internet, already show what these agents might look like.

Agents are much more powerful and dangerous than AI tools. An AI agent of this kind would be able to replicate autonomously, copying itself from one computer to another like a particularly intelligent virus. To replicate onto a new computer, it must navigate the internet, create a new account on AWS, pay for the virtual machine, install its weights on this machine, and start the replication process.

METR, the organization that audited OpenAI, has identified a dozen tasks that indicate ARA capabilities. GPT-4 plus basic scaffolding was capable of performing a few of these tasks, though not robustly. This was over a year ago, with primitive scaffolding, no dedicated training for agency, and no reinforcement learning. Multimodal AIs can now successfully pass CAPTCHAs. ARA is probably coming.

It could be very sudden. One of the main variables for self-replication is whether the AI can pay for cloud GPUs. Let’s say a GPU costs $1 per hour. The question is whether the AI can autonomously and continuously generate more than $1 per hour. If it can, you have something like an exponential process, since the surplus pays for additional copies. I think the number of AIs will probably plateau, but regardless of the plateau and of how many AIs you end up with asymptotically, the result is an autonomous AI that may become like an endemic virus: hard to shut down.
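To make the "$1 per hour" threshold concrete, here is a toy Python model. It is not the authors' model, and every parameter in it is a hypothetical assumption for illustration: the hourly earnings, the one-off `spawn_cost` of launching a copy, the optional `capacity` cap standing in for the plateau, and the crude rule that each unpaid GPU-hour shuts down one copy. It only shows the qualitative point that income strictly above the GPU bill compounds, while income at or below it does not.

```python
import math


def simulate_copies(hours, earn_per_hour, gpu_cost_per_hour=1.0,
                    spawn_cost=10.0, capacity=None, initial_copies=1):
    """Toy model of self-funded replication (all parameters hypothetical).

    Each hour, every running copy earns `earn_per_hour` and pays
    `gpu_cost_per_hour`. Accumulated surplus buys new copies at
    `spawn_cost` each. An optional `capacity` caps the population.
    A deficit shuts down one copy per unpaid GPU-hour (crude rule).
    Returns the number of copies after each hour, starting at hour 0.
    """
    copies = initial_copies
    savings = 0.0
    history = [copies]
    for _ in range(hours):
        savings += copies * (earn_per_hour - gpu_cost_per_hour)
        if savings < 0:
            # Cannot pay the GPU bill: shut down copies to cover the deficit.
            unpaid = math.ceil(-savings / gpu_cost_per_hour)
            copies = max(0, copies - unpaid)
            savings = 0.0
        else:
            # Spend whole multiples of the spawn cost on new copies.
            new = int(savings // spawn_cost)
            if capacity is not None:
                new = max(0, min(new, capacity - copies))
            copies += new
            savings -= new * spawn_cost
        history.append(copies)
    return history


print(simulate_copies(48, earn_per_hour=1.0)[-1])  # 1 (break-even never grows)
growth = simulate_copies(200, earn_per_hour=2.0)                # compounding
capped = simulate_copies(200, earn_per_hour=2.0, capacity=50)   # plateaus at 50
```

With a $1 margin per copy-hour and a $10 spawn cost, the population grows roughly like e^(0.1 t) once it is large, while the `capacity` variant flattens out, mirroring the post's "exponential, then probably a plateau" picture.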

Is ARA a point of no return?

Yes, I think ARA with full adaptation in the wild is beyond the point of no return.

Once there is an open-source ARA model, or a leak of a model capable of generating enough money for its survival and reproduction and able to adapt to avoid detection and shutdown, it will probably be too late:

- The idea of making an ARA bot is very accessible.

- The seed model would already be torrented and undeletable.

- Stop the internet? The entire world's logistics depend on the internet. In practice, this would mean starving the cities over time.

- Even if you manage to stop the internet, once the ARA bot is running, it will be unkillable. Even rebooting all providers like AWS would not suffice, as individuals could download and relaunch the model, or the agent could hibernate on local computers. The cost of eradicating it completely would be far too high, and it only needs to persist in one place to spread again.

The question is more interesting for ARA with incomplete adaptation capabilities. Early versions of ARA are likely to be very dumb and could be stopped if they disrupt society too much, but we are very uncertain about how strongly society would respond and whether it would be more competent than it was at dealing with Covid.

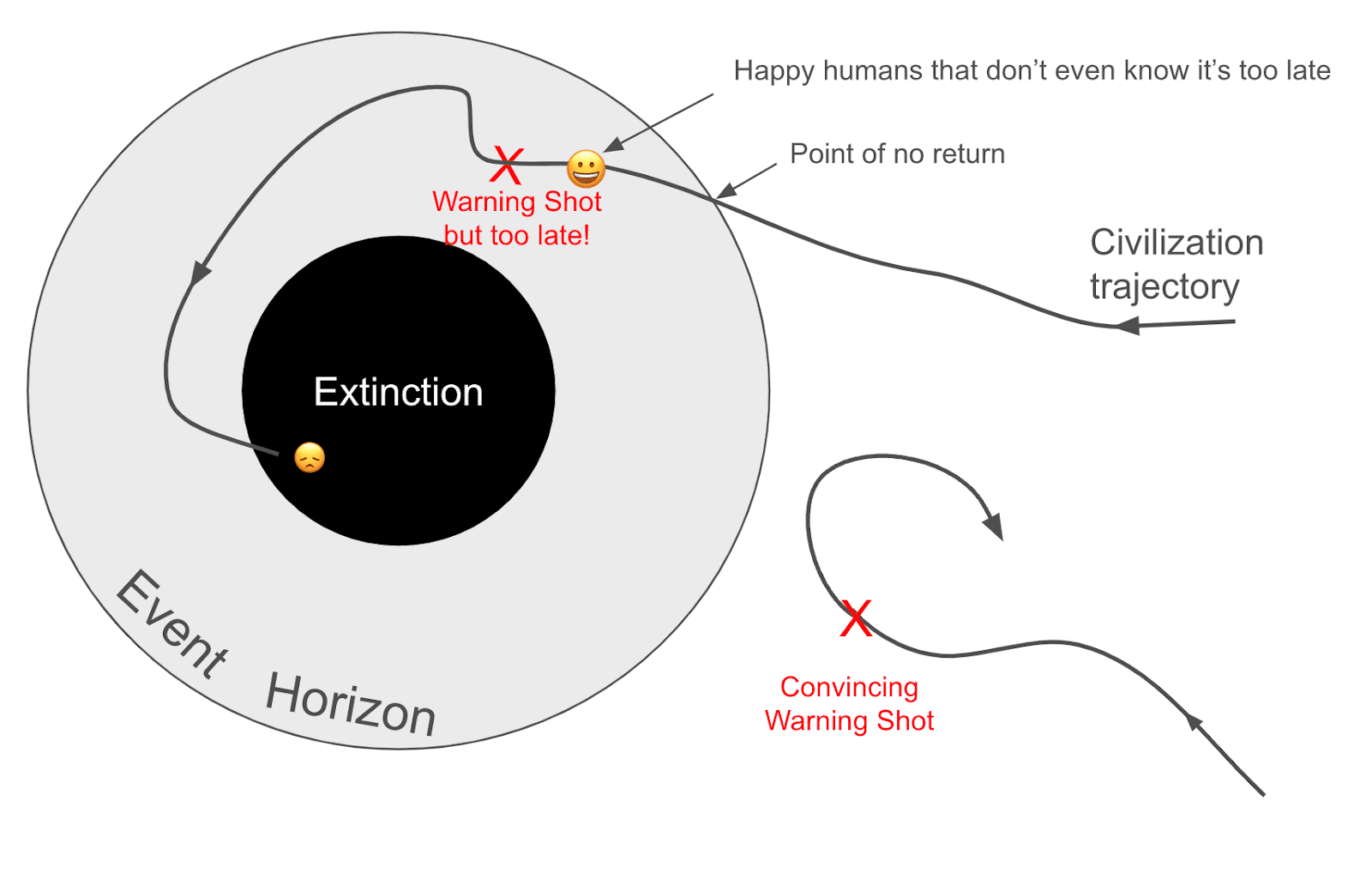

Figure from “What convincing warning shot could help prevent extinction from AI?”

No return towards what?

In the short term:

Even if AI capable of ARA does not lead to extinction in the short term, and even if it plateaus, we think this can already be considered a virus with many bad consequences.

But we think it's pretty likely that good AIs will be created at the same time, in continuity with what we see today: AI can be used to accelerate both good and bad things. I call this the “Superposition hypothesis”: Everything happens simultaneously.

Good stuff includes being able to accelerate research and the economy. Many people might appreciate ARA-capable AIs for their efficiency and usefulness as super assistants, similar to how people today become addicted to language models, etc.

Overall, it’s pretty likely that before full adaptation, AI capable of ARA would be pretty positive, and as a result, people would keep racing ahead.

In the long term:

If AI reaches ARA with full adaptation, including the ability to hide successfully (e.g., fine-tuning itself slightly so that its weights no longer match a known SHA-256 hash) and to resist shutdown, I feel this will trigger an irreversible process and a gradual loss of control (p=60%).
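The SHA-256 remark refers to hash-based detection: if defenders only block weight files whose exact digest is already known, any small change to the weights defeats the check. Below is a minimal Python illustration using the standard `hashlib` module. The "weights file" and the blocklist are hypothetical stand-ins, and the one-bit flip merely stands in for a small fine-tuning update.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical stand-in for a weights file: 1 MiB of deterministic bytes.
original = bytes(range(256)) * 4096

# Hypothetical defender blocklist containing the digest of the known file.
blocklist = {sha256_hex(original)}

# Flip a single bit, standing in for a tiny fine-tuning update.
modified = bytearray(original)
modified[12345] ^= 0x01
modified = bytes(modified)

print(sha256_hex(original) in blocklist)  # True
print(sha256_hex(modified) in blocklist)  # False
```

This only illustrates why exact-digest matching is brittle; it says nothing about how hard other detection methods would be to evade.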

Once an agent sticks around in a way we can’t kill, we should expect selection pressure to push it toward a full takeover eventually, in addition to any harm it may do during this process.

Selection pressure and competition would select for capabilities; adaptation allows the AI to resist and become stronger.

Selection pressure and competition would also create undesirable behavior. These AIs will be selected for self-preserving behaviors. For example, the AI could play dead to avoid elimination, as in this simulation of evolution (section: play dead). Modification is scary not only because the model gains more abilities, but also because its goals are very likely to be shaped more and more by selection. The idea that goals themselves are selected for is essential.
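The selection argument can be illustrated with a deterministic, expected-value toy model in Python. It is not the authors' model, and the variants, survival probabilities, and growth factor are all hypothetical. Both variants replicate at the same rate, so the only trait selection can act on is how well each survives a detection-and-shutdown sweep.

```python
def run_selection(counts, survival, growth=1.5, rounds=10):
    """Replicator-style toy model (all numbers hypothetical).

    counts:   initial expected number of copies of each variant
    survival: probability that one copy of a variant survives a sweep
    growth:   replication factor per round, identical for every variant
    Returns one {variant: expected count} snapshot per round.
    """
    history = [dict(counts)]
    current = dict(counts)
    for _ in range(rounds):
        # Every copy replicates by the same factor, then faces a shutdown sweep.
        current = {v: n * growth * survival[v] for v, n in current.items()}
        history.append(current)
    return history


def share(snapshot, variant):
    """Fraction of the population made up of `variant`."""
    total = sum(snapshot.values())
    return snapshot[variant] / total if total else 0.0


hist = run_selection({"naive": 99.0, "evasive": 1.0},
                     survival={"naive": 0.5, "evasive": 0.9})
print(f"{share(hist[0], 'evasive'):.0%} -> {share(hist[-1], 'evasive'):.0%}")  # 1% -> 78%
```

Even though the total population shrinks in this run, the evasive variant goes from 1% to most of the population within ten rounds, because selection acts on relative survival rather than on absolute numbers.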

At the end of the day, natural selection favors AIs over humans. This means that once AIs are autonomous enough to be considered a species, they compete with humans for resources like energy and space... In the long run, if we don't react, we lose control.

Do you really expect to die because of ARA AI?

No, not necessarily right away. Not with the first AIs capable of ARA. But the next generation seems terrifying.

Loss of control arrives way before death.

We need to react very quickly.

Got it. How long do we have?

This is uncertain. It might be 6 months or 5 years. Idk.

Open source is a bit behind on compute but not that far behind on techniques. If the bottleneck is data or technique rather than compute, we are fucked.

Why do you think we are dropping the ball on ARA?

Even if ARA is evaluated by big labs, we still need to do something about open-source AI development, and this is not really in the Overton window.

The situation is pretty lame. The people in charge still do not know about this threat at all, and most people I encounter do not know about it. In France, we only hear, “We need to innovate.”

What can we do about it now?

I think the priority is to increase the amount of communication/discussion on this. If you want a template, you can read the op-ed we published with Yoshua Bengio: "It is urgent to define red lines that should not be crossed regarding the creation of systems capable of replicating in a completely autonomous manner."

My main uncertainty is: are we going to see convincing warning shots before the point of no return?

I intend to do more research on this, but I already wanted to share this.

Thanks to Fabien Roger, Alexander Variengien, Diego Dorn and Florent Berthet.

Work done while at the CeSIA - The Centre pour la Sécurité de l’IA, in Paris.

Answers

answer by Richard_Ngo (ricraz)

I think the opposite: ARA is just not a very compelling threat model in my mind. The key issue is that AIs that do ARA will need to be operating at the fringes of human society, constantly fighting off the mitigations that humans are using to try to detect them and shut them down. While doing all that, in order to stay relevant, they'll need to recursively self-improve at the same rate at which leading AI labs are making progress, but with far fewer computational resources. Meanwhile, if they grow large enough to be spending serious amounts of money, they'll need to somehow fool standard law enforcement and general societal scrutiny.

Superintelligences could do all of this, and ARA of superintelligences would be pretty terrible. But for models in the broad human or slightly-superhuman ballpark, ARA seems overrated, compared with threat models that involve subverting key human institutions. Remember, while the ARA models are trying to survive, there will be millions of other (potentially misaligned) models being deployed deliberately by humans, including on very sensitive tasks (like recursive self-improvement). These seem much more concerning.

Why then are people trying to do ARA evaluations? Well, ARA was originally introduced primarily as a benchmark rather than a threat model. I.e. it's something that roughly correlates with other threat models, but is easier and more concrete to measure. But, predictably, this distinction has been lost in translation. I've discussed this with Paul and he told me he regrets the extent to which people are treating ARA as a threat model in its own right.

Separately, I think the "natural selection favors AIs over humans" argument is a fairly weak one; you can find some comments I've made about this by searching my twitter.

↑ comment by ryan_greenblatt · 2024-05-31T22:52:42.097Z

Hmm, I agree that ARA is not that compelling on its own (as a threat model). However, it seems to me like ruling out ARA is a relatively natural way to mostly rule out relatively direct danger. And, once you do have ARA ability, you just need some moderately potent self-improvement ability (including training successor models) for the situation to look reasonably scary. Further, it seems somewhat hard to do capabilities evaluations that rule out this self-improvement if models are ARA capable, given that there are so many possible routes.

Edit: TBC, I think it's scary mostly because we might want to slow down and rogue AIs might then outpace AI labs.

So, I think I basically agree with where you're at overall, but I'd go further than "it's something that roughly correlates with other threat models, but is easier and more concrete to measure" and say "it's a reasonable threshold to use to (somewhat) bound danger " which seems worth noting.

While doing all that, in order to stay relevant, they'll need to recursively self-improve at the same rate at which leading AI labs are making progress, but with far fewer computational resources

I agree this is probably an issue for the rogue AIs. But, we might want to retain the ability to slow down if misalignment seems to be a huge risk, and rogue AIs could make this considerably harder. (The existence of serious rogue AIs is surely also correlated with misalignment being a big risk.)

While it's hard to coordinate to slow down human AI development even if huge risks are clearly demonstrated, there are ways in which it could be particularly hard to prevent mostly autonomous AIs from self-improving. In particular, other AI projects require human employees, which could make them easier to track and shut down. Further, AI projects are generally limited by not having a vast supply of intellectual labor, which would change in a regime where there are rogue AIs with reasonable ML abilities.

This is mostly an argument that we should be very careful with AIs which have a reasonable chance of being capable of substantial self-improvement, but ARA feels quite related to me.

↑ comment by Richard_Ngo (ricraz) · 2024-06-03T19:05:14.686Z

However, it seems to me like ruling out ARA is a relatively natural way to mostly rule out relatively direct danger.

This is what I meant by "ARA as a benchmark"; maybe I should have described it as a proxy instead. Though while I agree that ARA rules out most danger, I think that's because it's just quite a low bar. The sort of tasks involved in buying compute etc are ones most humans could do. Meanwhile more plausible threat models involve expert-level or superhuman hacking. So I expect a significant gap between ARA and those threat models.

once you do have ARA ability, you just need some moderately potent self-improvement ability (including training successor models) for the situation to look reasonably scary

You'd need either really good ARA or really good self-improvement ability for an ARA agent to keep up with labs given the huge compute penalty they'll face, unless there's a big slowdown. And if we can coordinate on such a big slowdown, I expect we can also coordinate on massively throttling potential ARA agents.

↑ comment by ryan_greenblatt · 2024-06-03T19:17:16.137Z

unless there's a big slowdown

Yep, I was imagining a big slowdown or uncertainty in how explosive AI R&D will be.

And if we can coordinate on such a big slowdown, I expect we can also coordinate on massively throttling potential ARA agents.

I agree that this cuts the risk, but I don't think it fully eliminates it. I think it might be considerably harder to fully cut off ARA agents than to do a big slowdown.

One way to put this is that quite powerful AIs in the wild might make slowing down 20% less likely to work (conditional on a serious slowdown effort), which seems like a decent cost to me.

Part of my view here is that ARA agents will have unique affordances that no human organization will have had before (like having truly vast, vast amounts of pretty high skill labor).

Of course, AI labs will also have access to vast amounts of high skill labor (from possibly misaligned AIs). I don't know how the offense-defense balance goes here. I think full defense might require doing really crazy things that organizations are unwilling to do. (E.g., unleashing a huge number of controlled AI agents which may commit crimes in order to take free energy.)

The sort of tasks involved in buying compute etc are ones most humans could do.

My guess is that you need to be a decent but not amazing software engineer to do ARA. So, I wouldn't describe this as "tasks most humans can do".

↑ comment by Richard_Ngo (ricraz) · 2024-06-03T19:26:33.935Z

Part of my view here is that ARA agents will have unique affordances that no human organization will have had before (like having truly vast, vast amounts of pretty high skill labor).

The more labor they have, the more detectable they are, and the easier they are to shut down. Also, are you picturing them gaining money from crimes, then buying compute legitimately? I think the "crimes" part is hard to stop but the "paying for compute" part is relatively easy to stop.

My guess is that you need to be a decent but not amazing software engineer to do ARA.

Yeah, you're probably right. I still stand by the overall point though.

↑ comment by Hjalmar_Wijk · 2024-06-05T00:48:36.652Z

I think in our current situation shutting down all rogue AI operations might be quite tough (though limiting their scale is certainly possible, and I agree with the critique that absent slowdowns or alignment problems or regulatory obstacles etc. it would be surprising if these rogue agents could compete with legitimate actors with many more GPUs).

Assuming the AI agents have money, there are maybe three remaining constraints they need to deal with:

- purchasing/renting the GPUs,

- (if not rented through a cloud provider) setting them up and maintaining them, and

- evading law enforcement or other groups trying to locate them and shut them down

For acquiring the GPUs, there are currently a ton of untracked GPUs spread out around the world, both gaming GPUs like 4090s and datacenter GPUs like H100s. I can buy or rent them with extremely minimal KYC from tons of different places. If we assume ARA agents are able to do all of the following:

- Recruit people to physically buy GPUs from retail stores

- Take delivery of online ordered GPUs in anonymized ways using intermediaries

- Recruit people to set up fake AI startups and buy GPUs through them

then the effort required to prevent them from acquiring any GPUs in a world anything like today seems crazy. And even if the US + allies started confiscating or buying up 4090s en masse, it's enough that some other countries adopt laxer standards (perhaps to attract AI talent that is frustrated by the new draconian GPU-control regime).

As for setting up acquired GPUs, I think the AI agents will probably be able to find colocation operators that don't ask too many questions in many parts of the world, but even if the world were to coordinate on extremely stringent controls, the AI agents could set up datacenters of their own in random office buildings or similar; each inference setup wouldn't need that much power and I think it would be very hard to track it down.

As for not being shut down by law enforcement, I think this might take some skill in cybersecurity and opsec etc. but if it operates as dozens or hundreds of separate "cells", and each has enough labor to figure out decorrelated security and operations etc. then it seems quite plausible that they wouldn't be shut down. Historically "insurgency groups" or hacker networks etc. seem to be very difficult to fully eliminate, even when a ton of resources are thrown at the problem.

I don't think any of the above would require superhuman abilities, though many parts are challenging, which is part of why evals targeting these skills could provide a useful safety case - e.g. if it's clear that the AI could not pull off the cybersecurity operation required to not be easily shut down then this is a fairly strong argument that this AI agent wouldn't be able to pose a threat [Edit: from rogue agents operating independently, not including other threat models like sabotaging things from within the lab etc.].

Though again I am not defending any very strong claim here, e.g. I'm not saying:

- that rogue AIs will be able to claim 5+% of all GPUs or an amount competitive with a well-resourced legitimate actor (I think the world could shut them out of most of the GPU supply, and that over time the situation would worsen for the AI agents as the production of more/better GPUs is handled with increasing care),

- that these skills alone mean it poses a risk of takeover or that it could cause significant damage (I agree that this would likely require significant further capabilities, or many more resources, or already being deployed aggressively in key areas of the military etc.)

- that "somewhat dumb AI agents self-replicate their way to a massive disaster" is a key threat model we should be focusing our energy on

I'm just defending the claim that ~human-level rogue AIs in a world similar to the world of today might be difficult to fully shut down, even if the world made a concerted effort to do so.

↑ comment by ryan_greenblatt · 2024-06-05T05:29:42.992Z

e.g. if it's clear that the AI could not pull off the cybersecurity operation required to not be easily shut down then this is a fairly strong argument that this AI agent wouldn't be able to pose a threat

I agree that this is a fairly strong argument that this AI agent wouldn't be able to cause problems while rogue. However, I think there is also a concern that this AI will be able to cause serious problems via the affordances it is granted through the AI lab.

In particular, AIs might be given huge affordances internally with minimal controls by default. And this might pose a substantial risk even if AIs aren't quite capable enough to pull off the cybersecurity operation. (Though it seems not that bad of a risk.)

↑ comment by Hjalmar_Wijk · 2024-06-05T17:52:04.418Z

Yeah that's right, I made too broad a claim and only meant to say it was an argument against their ability to pose a threat as rogue independent agents.

↑ comment by ryan_greenblatt · 2024-06-03T19:40:12.758Z

Also, are you picturing them gaining money from crimes, then buying compute legitimately? I think the "crimes" part is hard to stop but the "paying for compute" part is relatively easy to stop.

Both legitimately and illegitimately acquiring compute could plausibly be the best route. I'm uncertain.

It doesn't seem that easy to lock down legitimate compute to me? I think you need to shut down people buying/renting 8xH100-style boxes. This could be quite difficult.

The model weights probably don't fit in VRAM in a single 8xH100 device (the model weights might be 5 TB when 4 bit quantized), but you can maybe fit with 8-12 of these. And, you might not need amazing interconnect (e.g. normal 1 GB/s datacenter internet is fine) for somewhat high latency (but decently good throughput) pipeline parallel inference. (You only need to send the residual stream per token.) I might do a BOTEC on this later. Unless you're aware of a BOTEC on this?
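The weights-fit arithmetic in the comment above can be checked with a few lines of Python. This is a rough sketch: 80 GB per GPU is the standard H100 80 GB configuration, while the 30% headroom for KV cache and activations is an assumption of this example (one way to land on a range like 8-12), not something stated in the comment.

```python
import math


def boxes_needed(weights_bytes: float, gpus_per_box: int = 8,
                 vram_per_gpu_bytes: float = 80e9,
                 usable_fraction: float = 1.0) -> int:
    """Minimum number of multi-GPU boxes whose combined VRAM holds the weights.

    usable_fraction < 1 reserves headroom (e.g. for KV cache and activations);
    the default of 1.0 counts the weights alone.
    """
    usable_per_box = gpus_per_box * vram_per_gpu_bytes * usable_fraction
    return math.ceil(weights_bytes / usable_per_box)


weights = 5e12  # ~5 TB of 4-bit-quantized weights, the comment's figure
print(boxes_needed(weights))                       # 8  (weights alone)
print(boxes_needed(weights, usable_fraction=0.7))  # 12 (assumed 30% headroom)
```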

↑ comment by Hjalmar_Wijk · 2024-06-05T00:10:18.219Z

I did some BOTECs on this and think 1 GB/s is sort of borderline, probably works but not obviously.

E.g., I assumed a ~10 TB (at fp8) MoE model with a sparsity factor of 4 and a hidden size of 32768.

With 32 kB per token (32768 values × 1 byte at fp8), you could send at most ~30k tokens/second over a 1 GB/s interconnect. I'm not quite sure what a realistic utilization would be, but maybe we halve that to 15k?

If the model were split across twenty 8xH100 boxes, then each box might do ~250 GFLOP/token (2 × 10T parameters / (4 × 20)), so at 15k tokens/second each box would do at most 3.75 PFLOP/s, which might be about 20-25% utilization.

This is not bad, but for a model with much more sparsity or GPUs with a different FLOP/s : VRAM ratio or spottier connection etc. the bandwidth constraint might become quite harsh.

(the above is somewhat hastily reconstructed from some old sheets, might have messed something up)
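The BOTEC above can be reproduced in a short Python function. This is a sketch under the comment's stated assumptions (10 TB of fp8 weights, sparsity factor 4, hidden size 32768, a 1 GB/s link at 50% utilization, 20 boxes) plus two of my own, labeled here: a forward pass costs about 2 FLOP per active parameter, and each H100 SXM peaks at roughly 1.979 PFLOP/s dense FP8 (NVIDIA's spec-sheet figure; the with-sparsity number is double). Without rounding the token rate down to 15k, the per-box figure comes out slightly above 3.75 PFLOP/s.

```python
def pipeline_botec(total_param_bytes=10e12, bytes_per_value=1, sparsity=4,
                   hidden_size=32768, link_bytes_per_s=1e9,
                   link_utilization=0.5, n_boxes=20,
                   peak_flops_per_box=8 * 1.979e15):
    """Back-of-the-envelope throughput for pipeline-parallel MoE inference.

    Assumes fp8 throughout: 1 byte per parameter and per activation value.
    """
    # Only the residual stream crosses each box boundary: one vector per token.
    bytes_per_token = hidden_size * bytes_per_value
    max_tokens_per_s = link_bytes_per_s / bytes_per_token
    tokens_per_s = max_tokens_per_s * link_utilization
    # ~2 FLOP per *active* parameter; an MoE with sparsity factor k
    # activates about 1/k of its parameters per token.
    active_params = (total_param_bytes / bytes_per_value) / sparsity
    flop_per_token_per_box = 2 * active_params / n_boxes
    flops_per_box = flop_per_token_per_box * tokens_per_s
    return {
        "bytes_per_token": bytes_per_token,
        "max_tokens_per_s": max_tokens_per_s,
        "flop_per_token_per_box": flop_per_token_per_box,
        "flops_per_box": flops_per_box,
        "weights_per_box_bytes": total_param_bytes / n_boxes,
        "utilization": flops_per_box / peak_flops_per_box,
    }


r = pipeline_botec()
print(f"{r['max_tokens_per_s']:.0f} tokens/s ceiling per link")    # 30518
print(f"{r['flop_per_token_per_box'] / 1e9:.0f} GFLOP/token/box")  # 250
print(f"{r['flops_per_box'] / 1e15:.2f} PFLOP/s per box")          # 3.81
print(f"{r['utilization']:.0%} of assumed peak")                   # 24%
```

Raising `sparsity` to 16 drops the utilization figure to roughly 6%, which illustrates the comment's point that with much more sparsity the bandwidth constraint becomes harsher: the GPUs sit idle while waiting on the link.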

↑ comment by Tao Lin (tao-lin) · 2024-06-04T23:36:23.304Z

For reference, just last week I rented three 8xH100 boxes without any KYC.

↑ comment by Aaron_Scher · 2024-06-02T21:02:30.246Z

AIs that do ARA will need to be operating at the fringes of human society, constantly fighting off the mitigations that humans are using to try to detect them and shut them down

Why do you think this? What is the general story you're expecting?

I think it's plausible that humanity takes a very cautious response to AI autonomy, including hunting and shutting down all autonomous AIs — but I don't think the arguments I'm considering justify more than like 70% confidence (I think I'm somewhere around 60%). Some arguments pointing toward "maybe we won't respond sensibly to ARA":

- There are no laws I know of, in any jurisdiction, prohibiting autonomous AIs from existing (assuming they otherwise follow the law).

- Properly dealing with ARA is a global problem, requiring either buy-in from dozens of countries or somebody carrying out cyber-offensive operations in foreign countries in order to shut down ARA models. There is precedent for this kind of international action w.r.t. WMD threats, like US/Israel's attacks on Iran's nuclear program, and I expect there's a lot of tit-for-tat going on in the nation-state hacking world, but it's not obvious that autonomous AIs would rise to a threat level that warrants this.

- It's not clear to me that the public cares about autonomous AIs existing in many domains (there are some domains, like dating, where people have a real ick). I think if we got credible evidence that Mark Zuckerberg was a lizard or a robot, few people would stop using Facebook products as a result. Many people seem to think various tech CEOs like Elon Musk and Jeff Bezos are terrible, yet still use their products.

- A lot of this seems to depend on whether autonomous AIs actually cause any serious harm. I can definitely imagine a world with autonomous AIs running around like small companies and Twitter being filled with "but show me the empirical evidence for risk, all you safety-ists have is your theoretical arguments which haven't held up, and we have tons of historical evidence of small companies not causing catastrophic harm". And indeed, I don't really expect the conceptual arguments for risk from roughly human-level autonomous AIs to convince enough of the public and policymakers that they need to take drastic action to limit autonomous AIs; I definitely wouldn't be highly confident that we will respond appropriately in the absence of serious harm. If the autonomous AIs are basically minding their own business, I'm not sure there will be a major effort to limit them.

↑ comment by Donald Hobson (donald-hobson) · 2024-06-11T14:31:03.789Z

If AI labs are slamming on the recursive self-improvement ASAP, it may be that Autonomous Replicating Agents are irrelevant. But that's an "ARA can't destroy the world if AI labs do it first" argument.

ARA may well have more compute than AI labs, especially if the AI labs are trying to stay within the law and the ARA is stealing any money/compute that it can hack its way into (which could be >90% of the internet if it's good at hacking).

there will be millions of other (potentially misaligned) models being deployed deliberately by humans, including on very sensitive tasks (like recursive self-improvement).

Ok. That's a world model in which humans are being INCREDIBLY stupid.

If we want to actually win, we need to both be careful about deploying those other misaligned models, and stop ARA.

Alice: That snake bite looks pretty nasty, it could kill you if you don't get it treated.

Bob: That snake bite won't kill me, this hand grenade will. Pulls out pin.

↑ comment by Tapatakt · 2024-06-01T11:28:59.233Z

they'll need to recursively self-improve at the same rate at which leading AI labs are making progress

But if they have good hacking abilities, couldn't they just steal the progress of leading AI labs? In that case they don't have to have the ability to self-improve.

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2024-06-01T00:38:38.956Z

Thanks for this comment, but I think this might be a bit overconfident.

constantly fighting off the mitigations that humans are using to try to detect them and shut them down.

Yes, I have no doubt that if humans implement some kind of defense, this will slow down ARA a lot. But:

- 1) It’s not even clear people are going to try to react in the first place. As I say, most AI development is positive. If you implement regulations to fight bad ARA, you are also hindering the whole ecosystem. It’s not clear to me that we are going to do something about open source. You need a big warning shot beforehand, and it is not clear to me that this happens before we reach a catastrophic level. They are clearly going to react to some kinds of ARA (like ChaosGPT), but there might be some ARAs they won't react to at all.

- 2) It’s not clear this defense (for example, Know Your Customer requirements for providers) is going to be effective enough to clean up the whole mess. If the AI is able to hide successfully on laptops and cooperate with some humans, it is going to be really hard to shut down. We would have to live with this endemic virus. The only way around this is cleaning up the virus with some sort of pivotal act, but I really don’t like that.

While doing all that, in order to stay relevant, they'll need to recursively self-improve at the same rate at which leading AI labs are making progress, but with far fewer computational resources.

"at the same rate" not necessarily. If we don't solve alignment and we implement a pause on AI development in labs, the ARA AI may still continue to develop. The real crux is how much time the ARA AI needs to evolve into something scary.

Superintelligences could do all of this, and ARA of superintelligences would be pretty terrible. But for models in the broad human or slightly-superhuman ballpark, ARA seems overrated, compared with threat models that involve subverting key human institutions.

We don't learn much here. From my side, I think that superintelligence is not going to be neglected, and big labs are taking this seriously already. I’m still not clear on ARA.

Remember, while the ARA models are trying to survive, there will be millions of other (potentially misaligned) models being deployed deliberately by humans, including on very sensitive tasks (like recursive self-improvement). These seem much more concerning.

This is not the central point. The central point is:

- At some point, ARA is unshutdownable unless you try hard with a pivotal cleaning act. We may be stuck with a ChaosGPT forever, which is not existential, but pretty annoying. People are going to die.

- the ARA evolves over time. Maybe this evolution is very slow, maybe fast. Maybe it plateaus, maybe it does not plateau. I don't know

- This may take an indefinite number of years, but this can be a problem

the "natural selection favors AIs over humans" argument is a fairly weak one; you can find some comments I've made about this by searching my twitter.

I’m pretty surprised by this. I’ve tried to google and not found anything.

Overall, I think this still deserves more research

↑ comment by Richard_Ngo (ricraz) · 2024-06-03T19:21:50.422Z

1) It’s not even clear people are going to try to react in the first place.

I think this just depends a lot on how large-scale they are. If they are using millions of dollars of compute, and are effectively large-scale criminal organizations, then there are many different avenues by which they might get detected and suppressed.

If we don't solve alignment and we implement a pause on AI development in labs, the ARA AI may still continue to develop.

A world which can pause AI development is one which can also easily throttle ARA AIs.

The central point is:

- At some point, ARA is unshutdownable unless you try hard with a pivotal cleaning act. We may be stuck with a ChaosGPT forever, which is not existential, but pretty annoying. People are going to die.

- the ARA evolves over time. Maybe this evolution is very slow, maybe fast. Maybe it plateaus, maybe it does not plateau. I don't know

- This may take an indefinite number of years, but this can be a problem

This seems like a weak central point. "Pretty annoying" and some people dying is just incredibly small compared with the benefits of AI. And "it might be a problem in an indefinite number of years" doesn't justify the strength of the claims you're making in this post, like "we are approaching a point of no return" and "without a treaty, we are screwed".

An extended analogy: suppose the US and China both think it might be possible to invent a new weapon far more destructive than nuclear weapons, and they're both worried that the other side will invent it first. Worrying about ARAs feels like worrying about North Korea's weapons program. It could be a problem in some possible worlds, but it is always going to be much smaller, it will increasingly be left behind as the others progress, and if there's enough political will to solve the main problem (US and China racing) then you can also easily solve the side problem (e.g. by China putting pressure on North Korea to stop).

you can find some comments I've made about this by searching my twitter

Link here, and there are other comments in the same thread. Was on my laptop, which has twitter blocked, so couldn't link it myself before.

↑ comment by ryan_greenblatt · 2024-06-03T19:45:44.171Z

A world which can pause AI development is one which can also easily throttle ARA AIs.

I push back on this somewhat in a discussion thread here. (As a pointer to people reading through.)

Overall, I think this is likely to be true (maybe 60% likely), but not likely enough that we should feel totally fine about the situation.

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2024-06-05T08:13:08.609Z

doesn't justify the strength of the claims you're making in this post, like "we are approaching a point of no return" and "without a treaty, we are screwed".

I agree that's a bit too much, but it seems to me that we're not at all on track to stop open-source development, and that we need to stop it at some point. Maybe you think ARA is a bit early, but I think we need a red line before AI becomes human-level, and ARA is one of the last arbitrary red lines before everything accelerates.

But I still think calling it a point of no return toward loss of control is pretty fair, because it might be very hard to stop an ARA agent.

Link here, and there are other comments in the same thread. Was on my laptop, which has twitter blocked, so couldn't link it myself before.

I agree with your comment on Twitter that evolutionary forces are very slow compared to deliberate design, but that is not what I wanted to convey (that's my fault). I think an ARA agent would depend not only on evolutionary forces, but also on the whole open-source community finding new ways to quantize, prune, distill, and run the model in a distributed and practical way. I think the main driver of this "evolution" would be the open-source community and libraries that will want to create good "ARA", and huge economic incentives will make agentic AIs more and more common and easy to build in the future.

↑ comment by Épiphanie Gédéon (joy_void_joy) · 2024-06-01T00:01:16.025Z

Remember, while the ARA models are trying to survive, there will be millions of other (potentially misaligned) models being deployed deliberately by humans, including on very sensitive tasks (like recursive self-improvement). These seem much more concerning.

My main personal worry is that an ARA might be deployed with just the goal "clone yourself as much as possible," or a similar goal. In this case, the AI does not need to outcompete other models, as long as it is able to pay for itself and keep spreading, or infect local computers by copying itself onto them, etc. This is a worrisome scenario because the AI might simply lie dormant and already be hard to kill. If the AI furthermore has some ability to avoid detection and adapt or modify itself, then I really worry that its goals are going to evolve and get selected to converge progressively toward a full takeover (though it may also plateau), and that we will be completely oblivious to it for most of this time, since there won't really be any incentive to either detect or fight it thoroughly.

Of course, there is also the scenario of a ChaosGPT-style ARA agent, and I worry that this could kill many people without ever being fully shut down, or that shutting it down could take a long time.

All in all, I think this is more a question of costs and benefits than of how likely it is. For instance, I think that implementing Know Your Customer (KYC) policies on all providers right now could be quite feasible and would slow down the initial steps of an ARA agent a lot.

I feel like the main crux of the argument is:

1. Whether an ARA agent plateaus or goes exponential in terms of abilities and takeover goals.

2. How long it would take an ARA agent that does take over to fully do so after being released.

I am still very unsure about 1; I can imagine many scenarios where the endemic ARA just stagnates and never really transforms into something more.

However, for 2, you seem to have a model in which it would take a long time for such an agent to really take over. I am unsure about that, but even if it were the case, my main concern is that once the seed ARA is released (and it might be only very slightly capable of adaptation at first), it is going to be extremely difficult to shut it down. If AI labs advance significantly toward superintelligence, implementing an AI pause might not be too late, but if such an agent has already been released, there will not be much we can do about it.

Separately, I think the "natural selection favors AIs over humans" argument is a fairly weak one; you can find some comments I've made about this by searching my twitter.

I would be very interested to hear more. I didn't find anything from a quick search on your twitter, do you have a link or a pointer I could read more on for counterarguments about "natural selection favors AIs over humans"?

A very good essay. But I have an amendment, which makes it more alarming. Before autonomous replication and adaptation is feasible, non-autonomous replication and adaptation will be feasible. Call it NARA.

If, as you posit, an ARA agent can make at least enough money to pay for its own instantiation, it can presumably make more money than that, which can be collected as profit by its human master. So what we will see is this: somebody starts a company to provide AI services. It is profitable, so they rent an ever-growing amount of cloud compute. They realize they have an ever-growing mass of data about the actual behavior of the AI and the world, so they decide to let their agent learn (“adapt”) in the direction of increased profit. Also, it is a hassle to keep setting up server instances, so they have their AI do some of the work of hiring more cloud services and starting instances of the AI (“reproduce”). Of course they retain enough control to shut down malfunctioning instances; that’s basic devops (“non-autonomous”).

This may be occurring now. If not now, soon.

This will soak up all the free energy that would otherwise be available to ARA systems. An ARA can only survive in a world where it can be paid to provide services at a higher price than the cost of compute. The existence of an economy of NARA agents will drive down the cost of AI services, and/or drive up the cost of compute, until they are equal. (That’s a standard economic argument. I can expand it if you like.)

NARAs are slightly less alarming than ARAs, since they are under the legal authority of their corporate management. So before the AI can ascend to alarming levels of power, it must first suborn the management, through payment, persuasion, or blackmail. On the other hand, NARAs are more alarming because there are no red lines for us to stop them at. All the necessary prerequisites have already occurred in isolation. All that remains is to combine them.
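The standard economic argument above can be illustrated with a toy free-entry model. This is a minimal sketch under assumed numbers, not the commenter's own model: the starting price, the compute cost, the `undercut_fraction`, and the hypothetical `overhead` an ARA must cover (accounts, evasion, etc.) are all illustrative assumptions, and the sketch only models the service price falling, not the cost of compute rising.

```python
def simulate_service_price(
    price: float,
    compute_cost: float,
    undercut_fraction: float = 0.1,
    rounds: int = 100,
) -> list[float]:
    """Toy free-entry model (an assumption, not the commenter's model).

    While AI services sell above the cost of compute, new NARA operators
    enter and competition removes a fixed fraction of the remaining margin
    each round. Returns the service price after every round.
    """
    history = [price]
    for _ in range(rounds):
        margin = price - compute_cost
        if margin <= 0:
            break  # no profit left to attract new entrants
        price -= undercut_fraction * margin
        history.append(price)
    return history


def ara_can_survive(price: float, compute_cost: float, overhead: float = 0.01) -> bool:
    """An ARA pays for itself only if the service price exceeds compute cost
    plus a hypothetical per-unit overhead."""
    return price - compute_cost > overhead


prices = simulate_service_price(price=10.0, compute_cost=2.0)
print(f"initial margin: {prices[0] - 2.0:.2f}, final margin: {prices[-1] - 2.0:.6f}")
print("ARA viable at start:", ara_can_survive(prices[0], 2.0))
print("ARA viable at end:", ara_can_survive(prices[-1], 2.0))
```

The margin shrinks geometrically toward zero but never goes negative, which mirrors the claim that competition pushes the price of AI services down to the cost of compute and leaves no surplus for an unsupervised agent to live on.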

Well, that’s an alarming conclusion. My p(doom) just went up a bit.

I honestly don't think ARA immediately and necessarily leads to overall loss of control. It would in a world that also has widespread robotics. What it could be, however, is a cataclysmic event for the Internet and the digital world, possibly on par with a major solar flare, which is bad enough: destruction of trust, cryptography, the banking system going belly up, IoT devices and basically all systems possibly compromised. We'd look at old computers that were disconnected from the Internet before the event the way we look at pre-nuclear steel. That is bad and dangerous enough in itself to worry about, and far more plausible than outright extinction scenarios, which require additional steps.

9 comments

comment by Orpheus16 (akash-wasil) · 2024-05-31T15:10:01.711Z

Why do you think we are dropping the ball on ARA?

I think many members of the policy community feel like ARA is "weird" and therefore don't want to bring it up. It's much tamer to talk about CBRN threats and bioweapons. It also requires less knowledge and general competence: explaining ARA and autonomous systems risks is difficult, you get more questions, you're more likely to explain something poorly, etc.

Historically, there was also a fair amount of gatekeeping, where some of the experienced policy people were explicitly discouraging people from being explicit about AGI threat models (this still happens to some degree, but I think the effect is much weaker than it was a year ago.)

With all this in mind, I currently think raising awareness about ARA threat models and AI R&D threat models is one of the most important things for AI comms/policy efforts to get right.

In the status quo, even if the evals go off, I don't think we have laid the intellectual foundation required for policymakers to understand why those eval results are dangerous. "Oh interesting, an AI can make copies of itself? A little weird, but I guess we make copies of files all the time, shrug." or "Oh wow, AI can help with R&D? That's awesome, seems very exciting for innovation."

I do think there's a potential to lay the intellectual foundation before it's too late, and I think many groups are starting to be more direct/explicit about the "weirder" threat models. Also, I think national security folks have more of a "take things seriously and worry about things even if there isn't clear empirical evidence yet" mentality than ML people. And I think typical policymakers fall somewhere in between.

↑ comment by rguerreschi · 2024-06-01T07:48:17.174Z

We need national security people across the world to be speaking publicly about this; otherwise the general discussion of the threats and risks of AI remains gravely incomplete and biased.

↑ comment by dr_s · 2024-06-02T12:53:54.761Z

The problem is that as usual people will worry that the NatSec guys are using the threat to try to slip us the pill of additional surveillance and censorship for political purposes - and they probably won't be entirely wrong. We keep undermining our civilizational toolset by using extreme measures for trivial partisan stuff and that reduces trust.

comment by Gordon Seidoh Worley (gworley) · 2024-05-31T23:58:04.517Z

According to METR, the organization that audited OpenAI, a dozen tasks indicate ARA capabilities.

Small comment, but @Beth Barnes of METR posted on LessWrong just yesterday to say "We should not be considered to have ‘audited’ GPT-4 or Claude".

This doesn't appear to be a load-bearing point in your post, but it would still be good to update the language to be more precise.

comment by Jonathan Claybrough (lelapin) · 2024-05-31T14:49:29.773Z

It might be good to have a dialogue format with other people who agree or disagree, to flesh out scenarios and countermeasures.

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2024-05-31T15:06:38.677Z

Why not! There are many, many questions that were not discussed here because I just wanted to focus on the core part of the argument. But I agree details and scenarios are important, even if I think they shouldn't change the basic picture depicted in the OP much.

Here are some important questions that were deliberately omitted from the Q&A, to avoid including things that still fluctuate too much in my head:

- Would we react before the point of no return?

- Where should we place the red line? Should this red line apply to labs?

- Is this going to be exponential? Do we care?

- What would it look like if we used a counter-agent that was human-aligned?

- What can we do about it now concretely? Is KYC something we should advocate for?

- Don’t you think an AI capable of ARA would be superintelligent and take-over anyway?

- What are the short-term bad consequences of early ARA? What does the transition scenario look like?

- Is it even possible to coordinate worldwide if we agree that we should?

- How much human involvement will be needed in bootstrapping the first ARAs?

We plan to write more about these with @Épiphanie Gédéon in the future, but first it's necessary to discuss the basic picture a bit more.

↑ comment by Orpheus16 (akash-wasil) · 2024-05-31T15:14:47.400Z

Potentially unpopular take, but if you have the skillset to do so, I'd rather you just come up with simple/clear explanations for why ARA is dangerous, what implications this has for AI policy, present these ideas to policymakers, and iterate on your explanations as you start to see why people are confused.

Note also that in the US, the NTIA has been tasked with making recommendations about open-weight models. The deadline for official submissions has ended but I'm pretty confident that if you had something you wanted them to know, you could just email it to them and they'd take a look. My impression is that they're broadly aware of extreme risks from certain kinds of open-sourcing but might benefit from (a) clearer explanations of ARA threat models and (b) specific suggestions for what needs to be done.

comment by Stephen McAleese (stephen-mcaleese) · 2024-06-01T07:21:34.314Z

Thank you for the blog post. I thought it was very informative regarding the risk of autonomous replication in AIs.

It seems like the Centre for AI Security is a new organization.

I've seen the announcement post on its website. Maybe it would be a good idea to cross-post it to LessWrong as well.