Outline of Galef's "Scout Mindset"

post by Rob Bensinger (RobbBB) · 2021-08-10T00:16:59.050Z · 17 comments

Julia Galef's The Scout Mindset is superb.

For effective altruists, I think (based on the topic and execution) it's straightforwardly the #1 book you should use when you want to recruit new people to EA. It doesn't actually talk much about EA, but I think starting people on this book will result in an EA that's thriving more and doing more good five years from now, compared to the future EA that would exist if the top go-to resource were more obvious choices like The Precipice, Doing Good Better, the EA Handbook, etc.

For rationalists, I think the best intro resource is still HPMoR or R:AZ, but I think Scout Mindset is a great supplement to those, and probably a better starting point for people who prefer Julia's writing style over Eliezer's.

I've made an outline of the book below, for my own reference and for others who have read it. If you don't mind spoilers, you can also use this to help decide whether the book's worth reading for you, though my summary skips a lot and doesn't do justice to Julia's arguments.

Introduction

- Scout mindset is "the motivation to see things as they are, not as you wish they were".

- We aren't perfect scouts, but we can improve. "My approach has three prongs":

- Realize that truth isn't in conflict with your other goals. People tend to overestimate how useful self-deception is for things like personal happiness and motivation, starting a company, being an activist, etc.

- Learn tools that make it easier to see clearly. Use various kinds of thought experiments and probabilistic reasoning, and rethink how you go about listening to the "other side" of an issue.

- Appreciate the emotional rewards of scout mindset. "It's empowering to be able to resist the temptation to self-deceive, and to know that you can face reality even when it's unpleasant. There's an equanimity that results from understanding risk and coming to terms with the odds you're facing. And there's a refreshing lightness in the feeling of being free to explore ideas and follow the evidence wherever it leads". Looking at lots of real-world examples of people who have exemplified scout mindset can make these positives more salient.

PART I: The Case for Scout Mindset

Chapter 1. Two Types of Thinking

- "Can I believe it?" vs. "must I believe it?" In directionally motivated reasoning, often shortened to "motivated reasoning", we disproportionately put our effort into finding evidence/reasons that support what we wish were true.

- Reasoning as defensive combat. Motivated reasoning, a.k.a. soldier mindset, "doesn't feel like motivated reasoning from the inside". But it's extremely common, as shown by how often we describe our reasoning in militaristic terms.

- "Is it true?" An alternative to (directionally) motivated reasoning is accuracy-motivated reasoning, i.e., scout mindset.

- Your mindset can make or break your judgment. This stuff matters in real life, in almost every domain. Nobody is purely a scout or purely a soldier, but it's possible to become more scout-like.

Chapter 2. What the Soldier is Protecting

- "[I]f scout mindset is so great, why isn't everyone already using it all the time?" Three emotional reasons:

- Comfort: avoiding unpleasant emotions. This even includes comforting pessimism: "there's no hope, so you might as well not worry about it."

- Self-esteem: feeling good about ourselves. Again, this can include ego-protecting negativity and avoiding "'getting my hopes up'".

- Morale: motivating ourselves to do hard things.

- And three social reasons:

- Persuasion: convincing ourselves so we can convince others.

- Image: choosing beliefs that make us look good. "Psychologists call it impression management, and evolutionary psychologists call it signaling: When considering a claim, we implicitly ask ourselves, 'What kind of person would believe a claim like this, and is that how I want others to see me?'"

- Belonging: fitting into your social groups.

- "We use motivated reasoning not because we don't know any better, but because we're trying to protect things that are vitally important to us". So it's no surprise that interventions like 'training people in critical thinking' don't do much to change people's thinking. But while "soldier mindset is often our default strategy for getting what we want", it's not generally the best strategy available.

Chapter 3. Why Truth is More Valuable Than We Realize

- We make unconscious trade-offs. "[T]he whole point of self-deception is that it's occurring beneath our conscious awareness. [...] So it's left up to our unconscious minds to choose, on a case-by-case basis, which goals to prioritize." Sometimes it chooses to be more soldier-like, sometimes more scout-like.

- Are we rationally irrational? I.e., are we good at "unconsciously choosing just enough epistemic irrationality to achieve our [instrumental] social and emotional goals, without impairing our judgment too much"? No. "There are several major biases in our decision-making [... that] cause us to overvalue soldier mindset":

- We overvalue the immediate rewards of soldier mindset. Present bias: we prefer small rewards now over large rewards later.

- We underestimate the value of building scout habits. Cognitive skills are abstract (and, again, have most of their benefits in the future), so they're harder to notice and care about.

- We underestimate the ripple effects of self-deception. These ripple effects are "delayed and unpredictable", which is "exactly the kind of cost we tend to neglect".

- We overestimate social costs.

- An accurate map is more useful now. Humans have more options now than we did tens of thousands of years ago, and more ability to improve our circumstances. "So if our instincts undervalue truth, that's not surprising—our instincts evolved in a different world, one better suited to the soldier."

PART II: Developing Self-Awareness

Chapter 4. Signs of a Scout

- "A key factor preventing us from being in scout mindset more frequently is our conviction that we're already in it." Examples of "things that make us feel like scouts even when we're not":

- Feeling objective doesn't make you a scout.

- Being smart and knowledgeable doesn't make you a scout. On ideologically charged questions, learning more tends to make people more polarized; and even scientists studying cognitive biases have a track record of exhibiting soldier mindset.

- Actually practicing scout mindset makes you a scout. "The test of scout mindset isn't whether you see yourself as the kind of person who [changes your mind in response to evidence, is fair-minded, etc. ...] It's whether you can point to concrete cases in which you did, in fact, do those things. [...] The only real sign of a scout is whether you act like one." Behavioral cues to look for:

- Do you tell other people when you realize they were right?

- How do you react to personal criticism? "Are there examples of criticism you've acted upon? Have you rewarded a critic (for example, by promoting him)? Do you go out of your way to make it easier for other people to criticize you?"

- Do you ever prove yourself wrong?

- Do you take precautions to avoid fooling yourself? E.g., "Do you avoid biasing the information you get?" and "[D]o you decide ahead of time what will count as a success and what will count as a failure, so you're not tempted to move the goalposts later?"

- Do you have any good critics? "Can you name people who are critical of your beliefs, profession, or life choices who you consider thoughtful, even if you believe they're wrong? Or can you at least name reasons why someone might disagree with you that you would consider reasonable[...]?"

- "But the biggest sign of scout mindset may be this: Can you point to occasions in which you were in soldier mindset? [... M]otivated reasoning is our natural state," so if you never notice yourself doing it, the likeliest explanation is that you're not self-aware about it.

Chapter 5. Noticing Bias

- "One of the essential tools in a magician's tool kit is a form of manipulation called forcing." The magician asks you to choose between two cards. "If you point to the card on the left, he says, 'Okay, that one's yours.' If you point to the card on the right, he says, 'Okay, we'll remove that one.' [...] If you could see both of those possible scenarios at once, the trick would be obvious. But because you end up in only one of those worlds, you never realize."

- "Forcing is what your brain is doing to get away with motivated reasoning while still making you feel like you're being objective." The Democratic voter doesn't notice that they're going easier on a Democratic politician than they would on a Republican, because the question "How would I act if this politician were a Republican?" isn't salient to them, or they're tricking themselves into thinking they'd apply the same standard.

- A thought experiment is a peek into the counterfactual world. "You can't detect motivated reasoning in yourself just by scrutinizing your reasoning and concluding that it makes sense. You have to compare your reasoning to the way you would have reasoned in a counterfactual world, a world in which your motivations were different—would you judge that politician's actions differently if he was in the opposite party? [...] Would you consider that study's methodology sound if its conclusion supported your side? [...] Try to actually imagine the counterfactual scenario. [... D]on't simply formulate a verbal question for yourself. Conjure up the counterfactual world, place yourself in it, and observe your reaction." Five types of thought experiment:

- The double standard test. Am I judging one person/group by a standard I wouldn't apply to another person/group?

- The outsider test. "Imagine someone else stepped into your shoes—what do you expect they would do in your situation?" Or imagine that you're an outsider who just magically teleported into your body.

- The conformity test. "If other people no longer held this view, would you still hold it?"

- The selective skeptic test. "Imagine this evidence supported the other side. How credible would you find it then?"

- The status quo bias test. "Imagine your current situation was no longer the status quo. Would you then actively choose it?"

- Thought experiments on their own "can't tell you what's true or fair or what decision you should make." But they allow you to catch your brain "in the act of motivated reasoning," and take that into account as you work to figure out what's true.

- Beyond the specific thought experiments, the core skill of this chapter is "a kind of self-awareness, a sense that your judgments are contingent—that what seems true or reasonable or fair or desirable can change when you mentally vary some features of the question that should have been irrelevant."

Chapter 6. How Sure Are You?

- We like feeling certain. "Your strength as a scout is in your ability [...] to think in shades of gray instead of black and white. To distinguish the feeling of '95% sure' from '75% sure' from '55% sure'."

- Quantifying your uncertainty. For scouts, probabilities are predictions of how likely they are to be right. The goal is to be calibrated in the probabilities you assign.

- A bet can reveal how sure you really are. "Evolutionary psychologist Robert Kurzban has an analogy[...] In a company, there's a board of directors whose role is to make the crucial decisions for the company—how to spend its budget, which risks to take, when to change strategies, and so on. Then there's a press secretary whose role it is to give statements[...] The press secretary makes claims; the board makes bets. [...] A bet is any decision in which you stand to gain or lose something of value, based on the outcome."

- The equivalent bet test. By comparing different bets and seeing when you prefer taking one vs. the other, or when they feel about the same, you can translate your feeling "does X sound like a good bet?" into probabilities.

- The core skill of this chapter is "being able to tell the difference between the feeling of making a claim and the feeling of actually trying to guess what's true."
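To make the two quantitative ideas in this chapter concrete, here is a minimal sketch under my own assumptions rather than the book's exact procedure. The equivalent bet test is modeled as a bisection against a hypothetical `prefers_claim_bet(p)` callback, which stands in for introspection and returns True if you'd rather bet on the claim than on a lottery that pays out with probability `p`. Calibration is checked by grouping predictions by stated confidence and comparing each group's hit rate to that confidence. The inputs below are toy values, not real data.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Tuple


def equivalent_bet_credence(
    prefers_claim_bet: Callable[[float], bool], steps: int = 20
) -> float:
    """Estimate a credence by bisection over equivalent lottery bets.

    prefers_claim_bet(p) is a hypothetical stand-in for introspection:
    True if you'd rather bet on the claim than on a lottery that pays
    out with probability p.
    """
    lo, hi = 0.0, 1.0
    for _ in range(steps):
        mid = (lo + hi) / 2
        if prefers_claim_bet(mid):
            lo = mid  # the claim feels likelier than a mid-probability lottery
        else:
            hi = mid  # the lottery feels at least as good as the claim
    return (lo + hi) / 2


def calibration_table(
    predictions: Iterable[Tuple[float, bool]],
) -> Dict[float, Tuple[int, int, float]]:
    """Group (stated_confidence, was_correct) pairs by confidence level.

    Returns {confidence: (num_correct, num_total, hit_rate)}. For a
    well-calibrated predictor, hit_rate should be close to confidence.
    """
    counts = defaultdict(lambda: [0, 0])
    for confidence, correct in predictions:
        counts[confidence][0] += int(correct)
        counts[confidence][1] += 1
    return {
        conf: (right, total, right / total)
        for conf, (right, total) in sorted(counts.items())
    }


# Toy example: a person whose hidden credence in some claim is 70%.
print(round(equivalent_bet_credence(lambda p: 0.7 > p), 3))  # prints 0.7

# Toy (made-up) predictions, not real results.
toy = [(0.9, True), (0.9, True), (0.9, False), (0.6, True), (0.6, False)]
print(calibration_table(toy))
```

Bisection is just one convenient way to narrow down the point where the two bets "feel about the same"; in practice a handful of comparisons is usually enough, since feelings of preference aren't precise to many decimal places.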

PART III: Thriving Without Illusions

Chapter 7. Coping with Reality

- Keeping despair at bay. Motivated reasoning is especially tempting in emergencies; but it's also especially dangerous in emergencies. In dire situations, it's essential to be able to keep despair at bay without distorting your map of reality. E.g., you can count your blessings, come to terms with your situation, or remind yourself that you're doing the best you can.

- Honest vs. self-deceptive ways of coping. Honest ways of coping with painful or difficult circumstances include:

- Make a plan. "It's striking how much the urge to conclude 'That's not true' diminishes once you feel like you have a concrete plan for what you would do if the thing were true."

- Notice silver linings. "You're recognizing a silver lining to the cloud, not trying to convince yourself the whole cloud is silver. But in many cases, that's all you need".

- Focus on a different goal.

- Things could be worse.

- Does research show that self-deceived people are happier? Not convincingly; the research quality is terrible.

Chapter 8. Motivation Without Self-Deception

- Using self-deception to motivate yourself is bad, because:

- An accurate picture of your odds helps you choose between goals.

- An accurate picture of the odds helps you adapt your plan over time.

- An accurate picture of the odds helps you decide how much to stake on success.

- Bets worth taking. "[S]couts aren't motivated by the thought, 'This is going to succeed.' They're motivated by the thought, 'This is a bet worth taking.'" Which bets are worth taking is a matter of their expected value.

- Accepting variance gives you equanimity. Expecting to always succeed is unrealistic, and will lead to unnecessary disappointments. "Instead of being elated when your bets pay off, and crushed when they don't," try to get a realistic picture of the variance in bets and focus on ensuring your bets have high expected value.

- Coming to terms with the risk.
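To illustrate "a bet worth taking" and why accepting variance matters, here is a minimal sketch that computes the expected value, standard deviation, and probability of a loss for a bet described as (probability, payoff) outcomes. The payoffs are hypothetical numbers chosen for illustration, not figures from the book. The point is that a bet can have positive expected value even though it fails most of the time.

```python
import math
from typing import List, Tuple

Outcome = Tuple[float, float]  # (probability, payoff)


def bet_stats(outcomes: List[Outcome]) -> Tuple[float, float, float]:
    """Return (expected value, standard deviation, probability of a loss)."""
    total_p = sum(p for p, _ in outcomes)
    if not math.isclose(total_p, 1.0):
        raise ValueError(f"probabilities sum to {total_p}, not 1")
    ev = sum(p * x for p, x in outcomes)
    variance = sum(p * (x - ev) ** 2 for p, x in outcomes)
    p_loss = sum(p for p, x in outcomes if x < 0)
    return ev, math.sqrt(variance), p_loss


# Hypothetical venture: 30% chance to gain 10 units, 70% chance to lose 2.
ev, sd, p_loss = bet_stats([(0.3, 10.0), (0.7, -2.0)])
print(f"EV={ev:.2f}, SD={sd:.2f}, P(loss)={p_loss:.0%}")
# prints EV=1.60, SD=5.50, P(loss)=70%
```

The expected value says the bet is worth taking; the large standard deviation and 70% chance of losing say that any single outcome tells you little, which is why being "crushed" by one failed bet is the wrong reaction.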

Chapter 9. Influence Without Overconfidence

- Two types of confidence. Epistemic confidence is "how sure you are about what's true," while social confidence is self-assurance: "Are you at ease in social situations? Do you act like you deserve to be there, like you're secure in yourself and your role in the group? Do you speak as if you're worth listening to?" Influencing people requires social confidence, which people conflate with epistemic confidence.

- People judge you on social confidence, not epistemic confidence. Various studies show that judgments of competence are mediated by perceived social (rather than epistemic) confidence.

- Two kinds of uncertainty. People trust you less if you seem uncertain due to ignorance or inexperience, but not if you seem uncertain due to reality being messy and unpredictable. Three ways to communicate uncertainty without looking inexperienced or incompetent:

- Show that uncertainty is justified.

- Give informed estimates. "Even if reality is messy and it's impossible to know the right answer with confidence, you can at least be confident in your analysis."

- Have a plan.

- You don't need to promise success to be inspiring. "You can paint a picture of the world you're trying to create, or why your mission is important, or how your product has helped people, without claiming you're guaranteed to succeed. There are lots of ways to get people excited that don't require you to lie to others or to yourself."

- "That's the overarching theme of these last three chapters: whatever your goal, there's probably a way to get it that doesn't require you to believe false things."

PART IV: Changing Your Mind

Chapter 10. How to Be Wrong

- Change your mind a little at a time. Superforecasters constantly revise their views in small ways.

- Recognizing you were wrong makes you better at being right. Most people, when they learn they were wrong, give excuses like "I was almost right". Superforecasters instead "reevaluate their process, asking, 'What does this teach me about how to make better forecasts?'"

- Learning domain-general lessons. Even if your error is in a domain that seems unimportant to you, noticing your errors can teach domain-general lessons "about how the world works, or how your own brain works, and about the kinds of biases that tend to influence your judgment." Or they can help you fully internalize a lesson you previously only believed in the abstract.

- "Admitting a mistake" vs. "updating". Being factually wrong about something doesn't necessarily mean you screwed up. Learning new information should usually be thought of in matter-of-fact terms, as an opportunity to update your beliefs—not as something humbling or embarrassing.

- If you're not changing your mind, you're doing something wrong. By default, you should be learning more over time, and changing your strategy accordingly.

Chapter 11. Lean in to Confusion

- Usually, "we react to observations that conflict with our worldview by explaining them away. [...] We couldn't function in the world if we were constantly questioning our perception of reality. But especially when motivated reasoning is in play, we take it too far, shoehorning conflicting evidence into a narrative" well past the point where it makes sense. "This chapter is about how to resist the urge to dismiss details that don't fit your theories, and instead, allow yourself to be confused and intrigued by them, to see them as puzzles to be solved".

- "You don't know in advance" what surprising and confusing observations will teach you. "All too often, we assume the only two possibilities are 'I'm right' or 'The other guy is right'[...] But in many cases, there's an unknown unknown, a hidden 'option C,' that enriches our picture of the world in a way we wouldn't have been able to anticipate."

- Anomalies pile up and cause a paradigm shift. "Acknowledge anomalies, even if you don't yet know how to explain them, and even if the old paradigm still seems correct overall. Maybe they'll add up to nothing in particular. Maybe they just mean that reality is messy. But maybe they're laying the groundwork for a big change of view."

- Be willing to stay confused.

Chapter 12. Escape Your Echo Chamber

- How not to learn from disagreement. Listening to the "other side" usually makes people more polarized. "By default, we end up listening to people who initiate disagreements with us, as well as the public figures and media outlets who are the most popular representatives of the other side." But people who initiate disagreements tend to be unusually disagreeable, and popular representatives of an ideology are often "ones who do things like cheering for their side and mocking or caricaturing the other side—i.e., you". To learn from disagreement:

- Listen to people you find reasonable.

- Listen to people you share intellectual common ground with.

- Listen to people who share your goals.

- The problem with a "team of rivals." "Dissent isn't all that useful from people you don't respect or from people who don't even share enough common ground with you to agree that you're supposed to be on the same team."

- It's harder than you think. "We assume that if both people are basically reasonable and arguing in good faith, then getting to the bottom of a disagreement should be straightforward[...] When things don't play out that way [...] everyone gets frustrated and concludes the others must be irrational." But even under ideal conditions, learning from disagreements is still hard, e.g., because:

- We misunderstand each other's views.

- Bad arguments inoculate us against good arguments.

- Our beliefs are interdependent—changing one requires changing others.

PART V: Rethinking Identity

Chapter 13. How Beliefs Become Identities

- What it means for something to be part of your identity. Criticizing part of someone's identity tends to spark passionate, combative, and defensive reactions. Two things that tend to turn a belief into an identity:

- Feeling embattled.

- Feeling proud.

- Signs a belief might be an identity.

- Using the phrase "I believe".

- Getting annoyed when an ideology is criticized.

- Defiant language.

- A righteous tone.

- Gatekeeping.

- Schadenfreude.

- Epithets.

- Having to defend your view.

- "Identifying with a belief makes you feel like you have to be ready to defend it, which motivates you to focus your attention on collecting evidence in its favor. Identity makes you reflexively reject arguments that feel like attacks on you or on the status of your group. [...] And when a belief is part of your identity it becomes far harder to change your mind[.]"

Chapter 14. Hold Your Identity Lightly

- What it means to hold your identity lightly. Rather than trying to have no identities, you should try to "keep those identities from colonizing your thoughts and values. [...] Holding your identity lightly means thinking of it in a matter-of-fact way, rather than as a central source of pride and meaning in your life. It's a description, not a flag to be waved proudly."

- Could you pass an ideological Turing test? Passing means explaining an ideology "as a believer would, convincingly enough that other people couldn't tell the difference between you and a genuine believer". The ideological Turing test tests your knowledge of the other side's beliefs, but "it also serves as an emotional test: Do you hold your identity lightly enough to be able to avoid caricaturing your ideological opponents?"

- A strongly held identity prevents you from persuading others.

- Understanding the other side makes it possible to change minds.

- Is holding your identity lightly compatible with activism? Activists usually "face trade-offs between identity and impact," and holding your identity lightly can make it easier to focus on the highest-impact options.

Chapter 15. A Scout Identity

- Flipping the script on identity. Identifying as a truth-seeker can make you a better scout.

- Identity makes hard things rewarding. When you act like a scout, you can take pride and satisfaction in living up to your values. This short-term reward helps patch our bias for short-term rewards, which normally favors soldier mindset.

- Your communities shape your identity. "[I]n the medium-to-long term, one of the biggest things you can do to change your thinking is to change the people you surround yourself with."

- You can choose what kind of people you attract. You can't please everyone, so "you might as well aim to please the kind of people you'd most like to have around you, people who you respect and who motivate you to be a better version of yourself".

- You can choose your communities online.

- You can choose your role models.

17 comments

comment by [deleted] · 2021-08-10T04:07:38.182Z

Okay, I'm sold. I'll read the whole book.

comment by habryka (habryka4) · 2021-08-20T19:48:29.949Z

Promoted to curated: I found this outline really helpful, and it convinced me to read the book in full (I had previously skimmed it and put it on my to-do list, but hadn't yet started reading and wasn't sure when/whether I would get around to it).

↑ comment by Kaj_Sotala · 2021-08-20T20:27:13.659Z

I take it reading the book was worth it, then? :)

↑ comment by habryka (habryka4) · 2021-08-20T20:30:25.366Z

Still in the middle of it, but seems pretty good so far (not much new for me, but I do think it's a pretty good book to hand someone new).

comment by gianlucatruda · 2021-08-11T16:30:56.328Z

This is a superb summary! I'll definitely be returning to this as a cheatsheet for the core ideas from the book in future. I've also linked to it in my review on Goodreads.

it's straightforwardly the #1 book you should use when you want to recruit new people to EA. [...] For rationalists, I think the best intro resource is still HPMoR or R:AZ, but I think Scout Mindset is a great supplement to those, and probably a better starting point for people who prefer Julia's writing style over Eliezer's.

Hmm... I've had great success with the HPMOR / R:AZ route for certain people. Perhaps Scout Mindset has been the missing tool for the others. It also struck me as a nice complement to Eliezer's writing, in terms of both substance and style (see below). I'll have to experiment with recommending it as a first intro to EA/rationality.

As for my own experience, I was delightfully surprised by Scout Mindset! Here's an excerpt from my review:

I'm a big fan of Julia and her podcast, but I wasn't expecting too much from Scout Mindset because it's clearly written for a more general audience and was largely based on ideas that Julia had already discussed online. I updated from that prior pretty fast. Scout Mindset is a valuable addition to an aspiring rationalist's bookshelf, both for its content and for Julia's impeccable writing style, which I aspire to.

Those familiar with the OSI model of internet infrastructure will know that there are different layers of protocols. The IP protocol that dictates how packets are directed sits at a much lower layer than the HTTP protocol which dictates how applications interact. Similarly, Yudkowsky's Sequences can be thought of as the lower layers of rationality, whilst Julia's work in Scout Mindset provides the protocols for higher layers. The Sequences are largely concerned with what rationality is, whilst Scout Mindset presents tools for practically approximating it in the real world. It builds on the "kernel" of cognitive biases and Bayesian updating by considering what mental "software" we can run on a daily basis.

The core thesis of the book is that humans default towards a "soldier mindset," where reasoning is like defensive combat. We "attack" arguments or "concede" points. But there is another option: "scout mindset," where reasoning is like mapmaking.

The Scout Mindset is "the motivation to see things as they are, not as you wish they were. [...] Scout mindset is what allows you to recognize when you are wrong, to seek out your blind spots, to test your assumptions and change course."

I recommend listening to the audiobook version, which Julia narrates herself. The book is precisely as long as it needs to be, with no fluff. The anecdotes are entertaining and relevant and were entirely new to me. Overall, I think this book is a 4.5/5, especially if you actively try to implement Julia's recommendations. Try out her calibration exercise, for instance.

↑ comment by Dweomite · 2021-08-24T18:27:16.954Z

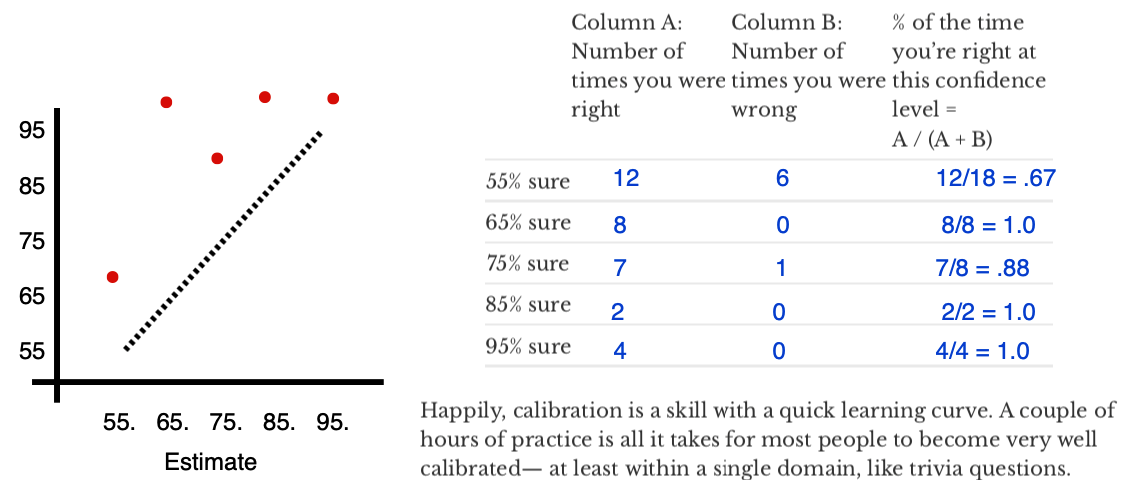

I tried the calibration exercise you linked. Skipped one question where I felt I just had no basis at all for answering, but answered all the rest, even when I felt very unsure.

When I said 95% confident, my accuracy was 100% (9/9)

When I said 85% confident, my accuracy was 83% (5/6)

When I said 75% confident, my accuracy was 71% (5/7)

When I said 65% confident, my accuracy was 60% (3/5)

At a glance, that looks like it's within rounding error of perfect. So I was feeling pretty good about my calibration, until...

When I said 55% confident, my accuracy was 92% (11/12)

I, er, uh...what? How can I be well-calibrated at every other confidence level and then get over 90% right when I think I'm basically guessing?

Null Hypothesis: Random fluke? Quick mental calculation says winning at least 11 out of 12 coin-flips would be p < .01. Plus, this is a larger sample than any other confidence level, so if I'm not going to believe this, I probably shouldn't believe any of the other results, either.
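A quick check of that mental arithmetic, as a sketch: the exact binomial probability of getting at least 11 of 12 right under two null models assumed here, pure coin-flips (p = 0.5) and perfectly calibrated 55% answers (p = 0.55). Both come out below .01, at roughly 0.003 and 0.008 respectively.

```python
from math import comb


def tail_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


for p in (0.5, 0.55):
    print(f"p={p}: P(at least 11 of 12) = {tail_at_least(11, 12, p):.4f}")
```

The p = 0.55 model is arguably the fairer null here, since it asks how surprising the result would be if those answers really were 55% likely to be right; it is still under 1%.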

(Of course, from your perspective, I'm the one person out of who-knows-how-many test takers that got a weird result and self-selected to write a post about it. But from my perspective it seems pretty surprising.)

Hypothesis #1: There are certain subject areas where I feel like I know stuff, and other subject areas where I feel like I don't know stuff, and I'm slightly over-confident in the former but drastically under-confident in the latter.

This seems likely true to some extent--I gave much less confidence overall in the "country populations" test section, but my actual accuracy there was about the same as other categories. But I also said 55% twice in each of the other 3 test sections (and got all 6 of those correct), so it seems hard to draw a natural subject-area boundary that would fully explain the results.

Hypothesis #2: When I believe I don't have any "real" knowledge, I switch mental gears to using a set of heuristics that turns out to be weirdly effective, at least on this particular test. (Maybe the test is constructed with some subtle form of bias that I'm subconsciously exploiting, but only in this mental mode?)

For example, on one question where the test asked if country X or Y had a higher population in 2019, I gave a correct, 55% confident answer on the basis of "I vaguely feel like I hear about country X a little more often than country Y, and high population seems like it would make me more likely to hear about a country, so I suppose that's a tiny shred of Bayesian evidence for X."

I have a hard time believing heuristics like that are 90% accurate, though.

Other hypotheses?

Possibly relevant: I also once tried playing CFAR's calibration game, and after 30-something binary questions in that game, I had around 40% overall accuracy (i.e. worse than random chance). I think that was probably bad luck rather than actual anti-knowledge, but I concluded that I can't use that game due to lack of relevant knowledge.

↑ comment by gianlucatruda · 2022-01-12T21:01:33.060Z

I somehow missed all notifications of your reply and just stumbled upon it by chance when sharing this post with someone.

I had something very similar with my calibration results, only it was for 65% estimates.

I think your hypotheses 1 and 2 match with my intuitions about why this pattern emerges on a test like this. Personally, I feel like a combination of 1 and 2 is responsible for my "blip" at 65%.

I'm also systematically under-confident here — that's because I cut my prediction teeth getting black swanned during 2020, so I tend to leave considerable room for tail events (which aren't captured in this test). I'm not upset about that, as I think it makes for better calibration "in the wild."

↑ comment by Rob Bensinger (RobbBB) · 2021-08-11T18:18:11.458Z

Yeah, I listened to the audiobook and thought it was great.

comment by Kaj_Sotala · 2021-08-11T13:18:49.803Z

For effective altruists, I think (based on the topic and execution) it's straightforwardly the #1 book you should use when you want to recruit new people to EA. It doesn't actually talk much about EA, but I think starting people on this book will result in an EA that's thriving more and doing more good five years from now, compared to the future EA that would exist if the top go-to resource were more obvious choices like The Precipice, Doing Good Better, the EA Handbook, etc.

I passed this review to people in a local EA group and some of them felt unclear on why you think this way, since (as you say) it doesn't seem to talk about EA much. Could you elaborate on that part?

↑ comment by lincolnquirk · 2021-08-11T14:47:05.582Z

I didn't read Scout Mindset yet, but I've listened to Julia's interviews on podcasts about it, and I have read the other books that Rob mentions in that paragraph.

The reason I nodded when Rob wrote that was that Julia's memetics are better. Her ideas are written in a way which stick in one's mind, and thus spread more easily. I don't think any of those other sources are bad -- in fact I get more from them than I expect to from Scout Mindset -- but Scout Mindset is more practically oriented (and optimized for today's political climate) in a way which those other books are not.

It also operates at a different, earlier level in the "EA Funnel": the level at which you can make people realize that more is possible. Those other books already require someone to be asking "how can I Do Good Better?" before they'll pick one up.

comment by mukashi (adrian-arellano-davin) · 2021-08-10T23:33:08.243Z

Thank you for the summary, I am comparing it to my notes and I think yours are better and more detailed.

I read it in one go the same day it was released. I think that The Scout Mindset does a great job as an introduction to rationality and most people outside the Less Wrong community will benefit more from reading this than The Sequences.

comment by Maxwell Peterson (maxwell-peterson) · 2021-08-29T23:36:12.271Z

I wasn’t going to buy it, but this post and the comments here convinced me to. Just finished the audiobook and I really liked it! In particular, packaging all the ideas, many of which are in The Sequences, into one thing called “scout mindset”, feels really useful in how I frame my own goals for how I think and act. Previously I had had injunctions like “value truth above all” kicking around in my head, but that particular injunction always felt a bit uncomfortable - “truth above all” brought to mind someone who nitpicks passing comments in chill social situations, and so on. The goal “Embody scout mindset” feels much more right as a guiding principle for me to live by.

comment by supposedlyfun · 2021-08-18T21:49:40.076Z

This review tipped me over into buying the book.

comment by hamiltonianurst · 2021-08-24T14:05:47.585Z

My friends and I liked this book enough to make a digital art project based on it!

https://mindsetscouts.com/

comment by SonnieBailey · 2021-08-21T23:40:16.366Z

Jumping in to add: I loved this book. It's well written and entertaining. I'm sympathetic to people on this forum who think they won't learn much new. It didn't change my views in a fundamental sense, since I've been reading and living this sort of stuff for a long time. But there were more fresh insights than I expected, and in any case it's always great to reinforce the central messages. It also felt extremely validating. IMO it's an ideal book for sharing many of the key tenets of "rationality" with people who would otherwise be repulsed by the term "rationality". Thanks for sharing the outline!

comment by RichardJActon · 2021-08-16T08:07:26.954Z

I thought that the sections on identity and self-deception stuck out as being done better in this book than in other rationalist literature.