Calculating Kelly

post by abramdemski · 2021-02-22T17:32:38.601Z · LW · GW · 18 comments


Jacob Falkovich tells us that we should Kelly bet on everything [LW · GW]. I discuss [LW · GW] whether we should Kelly bet when we'd normally make small-money bets on disagreements. Lsusr reminds us [LW · GW] that we aren't very good at intuitively grasping what the Kelly formula actually will say.

Due to Lsusr's post, I took another look at how I actually calculate Kelly. I'll describe the improved formulation I came up with. I'm curious to hear everyone else's thoughts on the quickest ways, or what formula you prefer, or how you intuitively estimate. If you already have opinions, take a moment to think what they are before reading further.

In the comments to my post on Kelly, Daniel Filan mentioned [LW(p) · GW(p)]:

FWIW the version that I think I'll manage to remember is that the optimal fraction of your bankroll to bet is the expected net winnings divided by the net winnings if you win.

I've found that I remember this formulation, but the difference between "net winnings" and "gross winnings" is enough to make me want to double-check things, and in the few months since writing the original post, I haven't actually used this to calculate Kelly.

"expected net winnings divided by net winnings if you win" is easy enough to remember, but is it easy enough to calculate? When I try to calculate it, I think of it this way:

[probability of success] × [payoff of success] + [probability of failure] × [payoff of failure], all divided by [payoff of success].

This is a combination of five numbers (one being a repeat). We have to calculate the probability of failure from the probability of success (i.e., $1-p$). Then we perform two multiplications, one addition, and one division -- five steps of mental arithmetic.
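The five-step calculation above can be sketched roughly as follows. The function name and the unit-stake convention (a loss has a payoff of -1 per dollar bet) are assumptions made for illustration, not something the post specifies.

```python
def kelly_from_net_winnings(p_win, net_win):
    """Kelly fraction as expected net winnings / net winnings if you win.

    Assumes a unit stake: winning pays `net_win` per dollar bet,
    losing costs the full dollar (payoff of failure = -1).
    """
    p_lose = 1 - p_win               # step 1: probability of failure
    gain = p_win * net_win           # step 2: first multiplication
    loss = p_lose * -1.0             # step 3: second multiplication
    expected_net = gain + loss       # step 4: addition
    return expected_net / net_win    # step 5: division


# Even-money bet (net winnings of 1 per dollar) with an 80% chance of winning.
print(round(kelly_from_net_winnings(0.8, 1.0), 4))  # 0.6
```

Every intermediate value here has to be held in your head when doing this mentally, which is the cost the rest of the post tries to reduce.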

But the formula is really a function of two numbers (see Lsusr's graph [LW · GW] for a vivid illustration). Can we formulate the calculation in a way that feels like just a function of two numbers?

Normally the formula is stated in terms of $b$, the net winnings if you win. I prefer to state it in terms of $r = b + 1$. If there's an opportunity to "double" your money, $r = 2$ while $b = 1$; so I think $r$ is more how people intuitively think about things.

In these terms, the break-even point is just $1/r$. This is easy to reconstruct with mental checks: if you stand to double your money, you'd better believe your chances of winning are at least 50%; if you stand to quadruple your money, chances had better be at least 25%; and so on. If you're like me, calculating the break-even point as $1/r$ is much easier than "the point where net expected winnings equals zero" -- the expectation requires that I combine four numbers, while $1/r$ requires just one.
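For anyone who wants to check that the two descriptions of the break-even point agree, here is a short derivation. It assumes the usual setup: $p$ is your probability of winning, $b$ is the net winnings per unit staked, $r = b + 1$ is the gross multiple, and a loss forfeits the whole stake.

$$
p\,b - (1 - p) = 0 \iff p\,(b + 1) = 1 \iff p = \frac{1}{b + 1} = \frac{1}{r}
$$

So "net expected winnings equals zero" and "$p = 1/r$" are the same condition under those assumptions.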

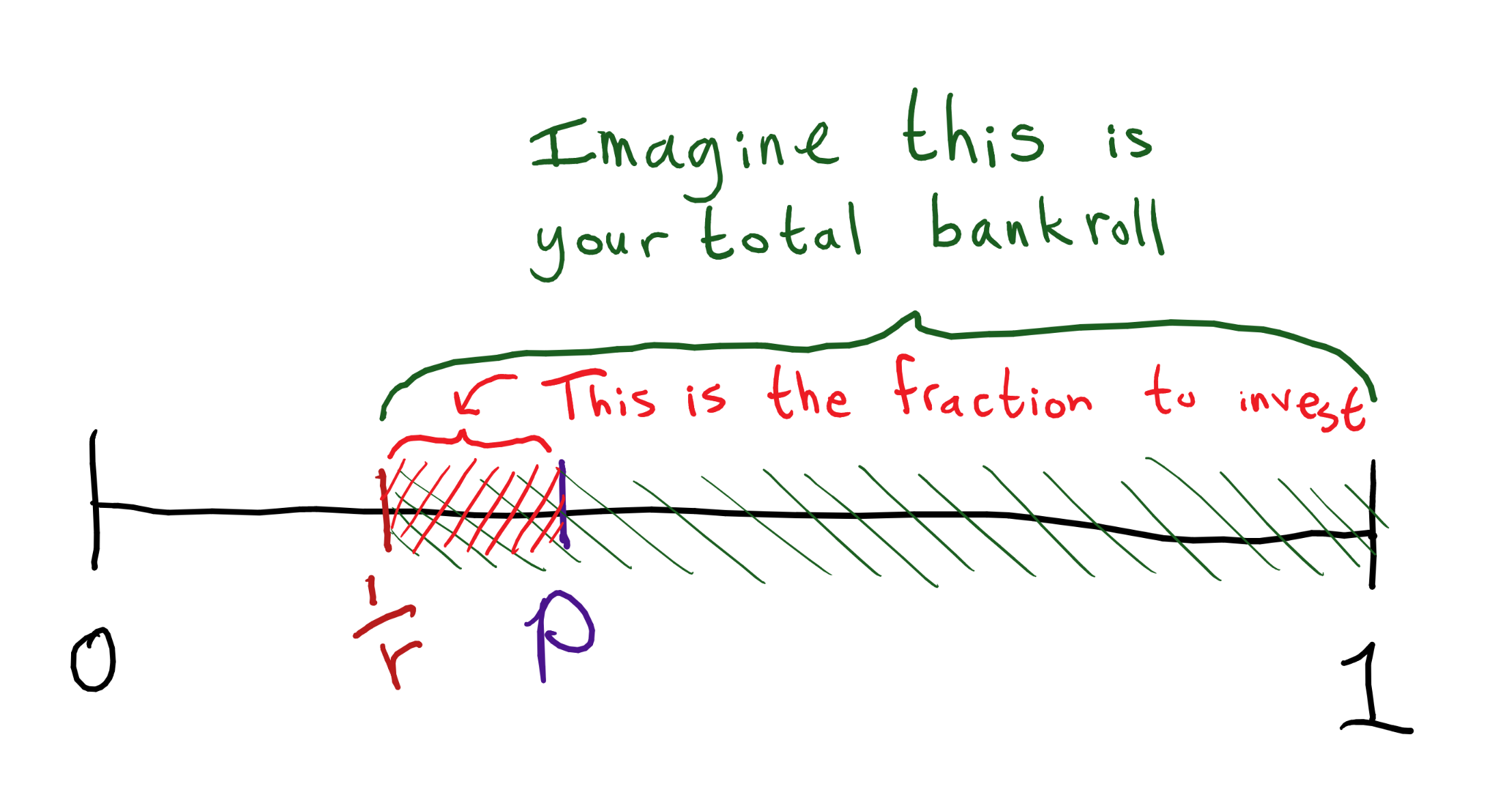

The Kelly formula is just a linear interpolation from 0% at the break-even point to 100% on sure things. (Again, see the graph [LW · GW].)

So one way to calculate Kelly is to ask how much along the way between $1/r$ and $1$ we are.

For example, if $r = 2$, then things start to be profitable after $p = 50\%$. If $p = 80\%$, we're 3/5ths of the way, so we would invest that part of our bankroll.

If $r = 3$, then things start to be profitable after $p = 1/3$. If $p = 2/3$, then it's half way to $1$, so we'd spend half of our bankroll.

If you're like me, this is much easier to calculate than "expected net winnings divided by the net winnings if you win". First I calculate $1/r$. Then I just have to compare this to $p$, to find where $p$ stands along the way from $1/r$ to 1. Technically, the formula for this is:

$$
\frac{p - 1/r}{1 - 1/r}
$$

That's a combination of three numbers (counting the repeat), with four steps of mental arithmetic: finding $1/r$, two subtractions, and one division. So that's a bit better. But, personally, I find that I don't really have to think in terms of the explicit formula for simple cases like the examples above. Instead, I imagine the number line from 0 to 1, and ask myself how far along $p$ is from $1/r$ to 1, and come up with answers.
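Here is a minimal sketch of the interpolation view in code. The function name is made up for illustration, and clamping to zero below break-even is an added assumption (the post only implies that you don't bet without an edge).

```python
def kelly_interpolation(p, r):
    """Fraction of the way p lies from the break-even point 1/r to 1.

    p: your probability of winning (0 to 1).
    r: gross payoff multiple (r = 2 means "double your money").
    Returns 0 when p is at or below break-even (assumed: no bet).
    """
    if r <= 1:
        raise ValueError("r must exceed 1 for the bet to have any upside")
    break_even = 1 / r
    fraction = (p - break_even) / (1 - break_even)
    return max(fraction, 0.0)  # clamp: never bet when the edge is negative


# Worked examples from the post.
print(round(kelly_interpolation(0.80, 2), 4))   # 0.6 (3/5ths of the way)
print(round(kelly_interpolation(2 / 3, 3), 4))  # 0.5 (halfway)
print(0.1 < kelly_interpolation(0.15, 30) < 0.5)  # True
```

The only inputs are `p` and `r`, which matches the observation that Kelly is really a function of two numbers.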

This also seems like a better way to intuit approximate answers. If I think an event has a 15% chance, and the potential payoff of a bet is to multiply my investment by 30, then I can't immediately tell you what the Kelly bet is. However, I can immediately tell you that 15% is less than halfway along the distance from $1/30$ to 100%, and that it's more than a tenth of the way. So I know the Kelly bet isn't so much as half the bankroll, but it also isn't so little as 10%. (Erm, I got this example wrong on the first go and had to revise it when a commenter pointed out the mistake, so maybe actually check the math rather than just intuiting.)

Don't mix up $p$ and $1/r$ -- it's critical to remember which of these has to be higher, and not get them reversed. $1/r$ can be thought of as the house odds. You have an edge over the house if your estimate is higher; the house has an edge over you if its estimate is higher.

Keep in mind that the most you ever invest is $p$. For very small probabilities of very large payoffs, the percentage of your bankroll to invest remains small. You still want to check whether $p$ is more than $1/r$. For example, a 1% chance of a 1000x payoff is good, because 1% is $1/100$, which is more than $1/1000$. The amount you actually invest is a little less than $p$: in our example, $1/100$ is almost $1/100$th of the way from $1/1000$th to 1, so we know we'd invest about 1%. (The actual number is $0.009/0.999$, so, a little more than .9%.)
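The claim that you never invest more than $p$ can be checked directly from the interpolation formula. This is a sketch assuming $r > 1$, so that $1 - 1/r$ is positive and multiplying through by it preserves the inequality:

$$
\frac{p - 1/r}{1 - 1/r} \le p \iff p - \frac{1}{r} \le p - \frac{p}{r} \iff \frac{p}{r} \le \frac{1}{r} \iff p \le 1
$$

Since $p \le 1$ always holds, the bound always holds, and the gap between the Kelly fraction and $p$ shrinks as $r$ grows.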

So, what's your favorite version of the Kelly formula? Do you think you can beat mine in terms of ease of calculation?

18 comments

Comments sorted by top scores.

comment by SarahNibs (GuySrinivasan) · 2021-02-22T23:30:02.537Z · LW(p) · GW(p)

This kind of synthesis, boiling down an expression to a very few, very human components, is incredibly valuable to me. Thank you.

Other examples I learned from this community:

lnO(A|B) = lnO(A) + lnL(A|B)

(U(X)-U(Y)) / (U(Z)-U(Y)) = (V(X)-V(Y)) / (V(Z)-V(Y)) when U and V are isomorphic utility functions

comment by johnswentworth · 2021-02-22T22:48:34.241Z · LW(p) · GW(p)

Personally, I use Kelly more often for pencil-and-paper calculations and financial models than for making yes-or-no bets in the wild. For this purpose, far and away the most important form of Kelly to remember is "maximize expected log wealth". In particular, this is the form which generalizes beyond 2-outcome bets - e.g. it can handle allocating investments across a whole portfolio. It's also the form which most directly suggests how to derive the Kelly criterion, and therefore the situations in which it will/won't apply.
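As a rough numerical illustration of the "maximize expected log wealth" form for a two-outcome bet, the sketch below brute-forces the best fraction and prints the closed form next to it. The function names and the grid-search approach are assumptions chosen for simplicity, not a recommended method for real portfolios.

```python
import math


def expected_log_wealth(f, p, r):
    """Expected log of wealth after betting fraction f of a unit bankroll."""
    win = 1 - f + f * r   # the stake comes back multiplied by r
    lose = 1 - f          # the stake is lost
    return p * math.log(win) + (1 - p) * math.log(lose)


def kelly_by_grid_search(p, r, steps=10_000):
    """Brute-force the fraction f in [0, 1) that maximizes expected log wealth."""
    candidates = [i / steps for i in range(steps)]  # excludes f = 1 (log of 0)
    return max(candidates, key=lambda f: expected_log_wealth(f, p, r))


p, r = 0.8, 2
print(kelly_by_grid_search(p, r))   # numerical maximizer
print((p - 1 / r) / (1 - 1 / r))    # interpolation formula, for comparison
```

The same objective extends to more than two outcomes by summing over every outcome's probability times the log of the resulting wealth, which is why this form generalizes where the two-number shortcut does not.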

comment by lsusr · 2021-02-22T18:58:50.670Z · LW(p) · GW(p)

I agree that it is better to state this in terms of $r$ instead of $b$. I intuitively think about probability in terms of $r$, not $b$. If I were to rewrite my post, I would do so in terms of $r$ instead of $b$. I also like how you visualize the whole equation as two points on a one-dimensional unit interval because it easyifies the mental arithmetic.

comment by Dave Orr (dave-orr) · 2021-02-22T18:15:34.226Z · LW(p) · GW(p)

The way pro gamblers do this is: figure out how big your edge is, then bet that much of your bankroll. So if you're betting on a coin flip at even odds where the coin is actually weighted to come up heads 51% of the time, your edge is 2% (51% win probability - 49% loss probability) so you should bet 2% of your bankroll each round.

I guess whether this is easier or harder depends on how hard it is to calculate your edge. Obviously trivial in the "flip a coin" case but perhaps not in other situations.

Replies from: abramdemski↑ comment by abramdemski · 2021-02-22T18:31:08.160Z · LW(p) · GW(p)

I've heard this, but I have not yet been able to find a precise definition of this term "edge". All the articles I've come across seem to either assume the concept is intuitively obvious, or give an informal definition like "your advantage over the house" (or "the house's advantage over you", more probably), or expect you to derive the concept from one example calculation. All I know about edge is that it's supposed to be equal to the Kelly formula, which doesn't really get me anywhere.

So what's your definition of edge? How are you calculating it when the bet isn't even-money?

Replies from: SimonM↑ comment by SimonM · 2021-02-22T19:20:48.360Z · LW(p) · GW(p)

Edge is p - q (your probability minus the odds-implied probability, 1/r in your notation).

I don't think pro-gamblers do think in those terms. I think most pro-gamblers fall into several camps:

1. Serious whales. Have more capital than the market can bet. Bet sizing is not an issue, so they bet as much as they can every time.

2. Fixed stake bettors. They usually pick a number (1% and 2% are common) and bet this every time. (There are variants of this, e.g. staking to win a fixed X.)

3. (Fractional) Kelly bettors. I think this is closest to your example. A 1/2-Kelly bettor would see 51% vs 49% and say bet 0.5 * (51-49)/(100 - 49) ~ 0.5 * 4% = 2%
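The fractional Kelly calculation in the third item can be sketched like this. The function name is hypothetical, `q` is the odds-implied probability (1/r in the post's notation), and clamping at zero is an added assumption.

```python
def fractional_kelly(p, q, fraction=0.5):
    """Scaled-down Kelly stake given your probability p and the
    odds-implied probability q (q = 1/r for a gross payoff multiple r)."""
    if not 0 < q < 1:
        raise ValueError("q must lie strictly between 0 and 1")
    full_kelly = max((p - q) / (1 - q), 0.0)  # assumed: no bet without an edge
    return fraction * full_kelly


# The comment's example: 51% belief against a 49% implied probability.
print(round(fractional_kelly(0.51, 0.49), 4))  # roughly 0.02
```

Note that this example treats 49% as the implied probability of the odds on offer, which is a different reading from a coin flip at exactly even odds (where the implied probability would be 50%).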

↑ comment by abramdemski · 2021-02-22T20:47:59.532Z · LW(p) · GW(p)

Edge is p - q (your probability minus the odds-implied probability, 1/r in your notation).

I just want to note for the record that this doesn't agree with Dave Orr's calculation, nor with the rumor that Kelly betting is "betting your edge". So perhaps Dave Orr has some different formula in mind.

As for myself, I take this as evidence that "edge" really is an intuitive concept which people inconsistently put math to.

Replies from: dave-orr↑ comment by Dave Orr (dave-orr) · 2021-02-22T22:58:38.125Z · LW(p) · GW(p)

At this point I will admit that my gambling days were focused on poker, and Kelly isn't very useful for that.

But here's the formula as I understand it: EV/odds = edge, where odds is expressed as a multiple of one. So for the coinflip case we're disagreeing about, EV is .02, odds are 1, so you bet .02.

If instead you had a coinflip with a fair coin where you were paid $2 on a win and lose $1 on a loss, your EV is .5/flip, odds are 2, so bet 25%.
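Read literally, the "EV/odds = edge" rule above can be sketched as follows. The function name is invented for illustration, and it assumes "odds" means net winnings per unit staked and that a loss costs the full stake.

```python
def edge_ev_over_odds(p_win, odds):
    """Bet size as EV per unit staked divided by the odds.

    odds: net winnings per unit staked (1 for even money, 2 for 2-to-1).
    """
    ev = p_win * odds - (1 - p_win)  # expected net winnings per unit staked
    return ev / odds


print(round(edge_ev_over_odds(0.51, 1), 4))  # weighted coin at even odds: 0.02
print(round(edge_ev_over_odds(0.50, 2), 4))  # fair coin, win $2 or lose $1: 0.25
```

Both results match the figures given in the comment.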

Replies from: abramdemski↑ comment by abramdemski · 2021-02-22T23:08:25.098Z · LW(p) · GW(p)

EV/odds = edge

Right, so this is basically the "net expected value divided by net win value" formula which I examine in the post, because "odds" is the same as $b$, net winnings per dollar invested. (Double-or-nothing means odds of 1, triple-or-nothing means odds of 2, etc.)

At this point I will admit that my gambling days were focused on poker, and Kelly isn't very useful for that.

Yeah? How do you decide how much you're willing to bet on a particular hand? Kelly isn't relevant at all?

Replies from: dave-orr↑ comment by Dave Orr (dave-orr) · 2021-02-23T00:30:39.843Z · LW(p) · GW(p)

It's too cumbersome and only addresses part of the issue. Kelly more or less assumes that you make a bet, it gets resolved, now you can make the next bet. But in poker, with multiple streets, you have to think about a sequence of bets based on some distribution of opponent actions and new information.

Also with Kelly you don't usually have to think about how the size of your bet influences your likelihood to win, but in poker the amount that you bluff both changes the probability of the bluff being successful (people call less when you bet more) but also the amount you lose if you're wrong. Or if you value bet (meaning you want to get called) then if you bet more they call less but you win more when they call. Again, vanilla Kelly doesn't really work.

I imagine it could be extended, but instead people have built more specialized frameworks for thinking about it that combine game theory with various stats/probability tools like Kelly.

The Math of Poker, written by a couple of friends of mine, might be a fun read if you're interested. It probably won't help you to become a better poker player, but the math is good fun.

Replies from: abramdemski↑ comment by abramdemski · 2021-02-23T15:44:45.837Z · LW(p) · GW(p)

Thanks!

comment by SimonM · 2021-02-22T22:11:13.293Z · LW(p) · GW(p)

This also seems like a better way to intuit approximate answers. If I think an event has a 12% chance, and the potential payoff of a bet is to multiply my investment by 3.7, then I can't immediately tell you what the Kelly bet is. However, I can immediately tell you that $1/3.7$ is less than halfway along the distance from 12% to 100%, or that it's more than a tenth of the way. So I know the Kelly bet isn't so much as half the bankroll, but it also isn't so little as 10%.

1/3.7 ~ 27% >> 12% so you shouldn't be betting anything

Replies from: abramdemski↑ comment by abramdemski · 2021-02-22T22:41:14.494Z · LW(p) · GW(p)

Ah whoops, that's what I get for actually intuiting rather than calculating in my example of intuiting rather than calculating :P

I'll fix the example...

Replies from: SimonM

comment by Peter Ahl (peter-ahl) · 2022-09-02T15:34:47.576Z · LW(p) · GW(p)

From a professional trading perspective, the Kelly fraction (F) is simply F=eR/P, where eR is the expected return, and P is the payout ratio of a winning vs losing outcome. Kelly can thus be written as F=(P×W-L)/P, where W and L are the probabilities of winning and losing. From a financial markets perspective, we think more intuitively in expected return space, hence F=eR/P. eR is given by (P×W-L).

From a gambler's perspective, the Kelly fraction is simply equal to the 'edge' as noted here and elsewhere, i.e. W-L, when a bet has a symmetrical pay-off for a loss vs a win.

My 2 cents

comment by Anon User (anon-user) · 2021-02-22T19:30:43.068Z · LW(p) · GW(p)

Note that Kelly is valid under the assumption that you know the true probabilities. I do not know whether it is still valid when all you know is a noisy estimate of true probabilities - is it? It definitely gets more complicated when you are betting against somebody with a similarly noisy estimate of the same probability, as at some level you now need to take their willingness to bet into account when estimating the true probability - and the higher they are willing to go, the stronger the evidence that your estimate may be off. At the very least, that means that the uncertainty of your estimate also becomes a factor (the less certain you are, the more attention you should pay to the fact that somebody is willing to bet against you). Then the fact that sometimes you need to spend money on things, rather than just investing/betting/etc, and that you may have other sources of income, also complicates the calculus.

Replies from: abramdemski, SimonM↑ comment by abramdemski · 2021-02-22T21:00:05.567Z · LW(p) · GW(p)

Note that Kelly is valid under the assumption that you know the true probabilities.

I object to the concept of "know the true probabilities"; probabilities are in the map, not the territory.

I do not know whether it is still valid when all you know is a noisy estimate of true probabilities - is it?

Is Kelly really valid even when you "know the true probabilities", though?

From a Bayesian perspective, there's something very weird about the usual justification of Kelly, as I discuss (somewhat messily) here [LW · GW]. It shares more in common with frequentist thinking than with Bayesian. (Hence why it's tempting to say things like "it's valid when you know the true probabilities" -- but as a Bayesian, I can't really make sense of this, and would prefer to state the actual technical result, that it's guaranteed to win in the long run with arbitrarily high probability in comparison with other methods, if you know the true frequencies.)

Ultimately, I think Kelly is a pretty good heuristic; but that's all. If you want to do better, you should think more carefully about your utility curve for money-in-hand. If your utility is approximately logarithmic in money, then Kelly will be a pretty good strategy for you.