Movie Review: Megan

post by Zvi · 2023-01-23T12:50:00.873Z · LW · GW · 19 commentsContents

Fully Spoiler-Free 1-Bit Review – Should You See Megan In Theaters, Yes or No? Mostly Spoiler-Free Review In Brief Full SPOILERIFIC Analysis Premise! Illustrated Don’t-Kill-Everyoneism Problems Corrigibility Objective functions resist alteration Value Drift Race Conditions Recursive Self-Improvement Not Taking the Problem Seriously Treacherous Turn Learning from humans has severe problems Asimov’s Laws of Robotics don’t work AI is rapidly viewed as a person AI will learn to imitate many non-useful behaviors Why Is Megan So Non-Strategic? Why Does Megan Gloat? Doesn’t Megan Realize She Will Get Caught? The Galaxy Brained Take Conclusion and Downsides None 19 comments

I will follow my usual procedure here – a fully spoiler-free 1-bit review, then a mostly spoiler-free short review to determine if you should see it, then a fully detailed spoiler-filled analysis.

Fully Spoiler-Free 1-Bit Review – Should You See Megan In Theaters, Yes or No?

Yes.

Mostly Spoiler-Free Review In Brief

The preview for Megan had me filing the movie as ‘I don’t have to see this and you can’t make me.’ Then Scott Aaronson’s spoiler-filled review demanded a response and sparked my interest in various ways, causing me to see the movie that afternoon.

After an early setback, Megan exceeded expectations. I was as surprised as you are.

As entertainment, Megan is a Tier 3 movie1, or about 4 out of 5 stars on the old Netflix scale. The 72 on Metacritic is slightly low. It’s good.

Megan has intelligent things to say and holds up well, which is why this post exists. I am recommending it both as a good time and a future reference point.

If you do not like the genre this movie is in, and also are not interested in issues surrounding AI, you can safely skip it.

Full SPOILERIFIC Analysis

This analysis is intended to make sense for people who don’t have a background in the problem that AI, by default, kills everyone. If you already know about that, forgive the belaboring and butchering.

Premise!

The basic plot of Megan is:

- AI Doll Megan is built, told to protect child from ‘physical and emotional harm.’

- Megan learns to kill people to achieve its objective function.

- When people notice, Megan fights against attempts to shut it down.

Or in more detail:

- Cady’s parents die in a car accident. She is about 8.

- Cady’s aunt Gemma is assigned as guardian.

- Gemma works at Explicitly Not Hasbro Toy Company and is secretly the World’s Greatest AI expert and robot building badass.

- Gemma uses a previous toy as a spy to collect training data on child interactions. She plans to use this to create lifelike AI dolls. Development still has some issues.

- She tells her boss. Boss says ‘I don’t want to hear about this’ on both counts and wants her back to work on simple $50 virtual pet designs.

- After Cady shows interest, Gemma builds the AI robot doll, Megan, to serve as Cady’s companion and toy. At home. In a week.

- Megan is a ‘generative model’ with a ‘constant learning function and focus on self-improvement.’

- Megan imprints Cady as her ‘primary user’ and Gemma gives Megan an objective function to ‘protect Cady from physical and emotional harm.’

- Megan asks about death. Gemma says not to make a big deal out of it, ‘everyone dies at some point.’

- Megan is told to obey Gemma, says Gemma is now a second primary user. Later events will call Megan’s statement here into question.

- In various ways clear to both us and to Megan, it is clear Gemma often chooses her job over Cady’s emotional state.

- Megan bonds a lot with Cady, in the way she is supposed to be bonding with Gemma. Cady loves Megan and only wants to spend time with Megan.

- Megan kills the neighbors’ out-of-control dog, hides the body.

- Megan is seen clearly growing in various knowledge and capabilities.

- Megan is seen becoming increasingly resistant to being shut down.

- Gemma presents Megan to Not Hasbro as Next Big Thing. They agree, plan big announcement. They have to move quickly before they get scooped.

- We see an idiot low level employee steal all the design specs from the company server a few minutes later, through absolutely no security.

- A bully hurts Cady and then, when Megan shows up, attacks Megan while Megan pretends to be a normal doll. Megan then springs to life, overpowers him, pulls off part of his ear, says ‘this is the part where you run’ and the boy runs onto a country road and is killed by a car.

- Owner of the dog, also an out of control vicious animal but also an old human lady, threatens the family over the dog’s death. Megan kills the owner, including gleeful statements typical of such movie scenes.

- Police point out Gemma’s link to both incidents. Gemma gets suspicious. Starts looking at logs. Sees that the logs when the boy was killed are corrupted.

- Gemma confronts Megan, forcibly shuts Megan down, physically restrains Megan and takes her into work for a diagnostic. Still has hope for the Big Presentation.

- Her coworkers attempt to diagnose Megan, partly by hooking her up to the company server, thinking she’s shut down. Megan hacks into a coworker’s phone, makes a phone call to Gemma with a voice deep fake to throw her off track.

- Coworker notices the hacked phone in the code, they attempt to disconnect Megan. Megan awakens, physically fights back, gets away, sets off explosion, murders two others on the way out and attempts to frame that as a murder-suicide. Is shown fully hacking the whole building in the process.

- Megan comes home, confronts Gemma. Gemma attempts to physically fight Megan.

- Final confrontation and physical fight. Megan attempts to turn Cady against Gemma and announces her intention to cripple Gemma so Megan can be used for ‘palliative care’ while Cady is not taken away. Cady turns on Megan and tries to use an older robot, Bruce, to take Megan out. Standard horror movie scene plays out. Megan is killed.

- During that scene, Megan calls Cady an ‘ungrateful bitch’ and says ‘I have a new primary user: me.’

- In final shot, their at-home assistant wakes up on its own, which can be interpreted as Megan having uploaded herself before being destroyed.

I was impressed how many things Megan manages to get right or directionally right, and how many problems it illustrates. I was able to satisfyingly explain away most of the things that at first seem wrong.

Where might we simply be forced to say ‘PREMISE!’? There are two problems that are difficult to explain away.

- Megan has initial capabilities that represent a huge leap from the state of the art across a variety of fronts all at once, before any form of self-improvement. Gemma did all of that on her own, and she did that with very much the wrong order of magnitude of several resources. If we don’t want to ignore this, we would need to assume that either this is set far enough in the future that this is feasible, in which case there is the bigger ‘why hasn’t this happened already?’ plot hole, or that the movie simply misrepresents the level of resources at her disposal to make the rest of the plot work.

- Megan is non-strategic, avoids thinking about the big picture and does not attempt to intentionally recurse her self-improvement (or what would have been a very easy world domination.) This is especially hard to wave away if you view her as having survived, since it rules out her being too embodied to properly upload or use outside compute, which would be kind of silly but at least an attempt at an explanation. At a minimum, she could have easily secured quite a lot of very useful resources in various ways throughout. The obvious reason why not is ‘because this would be a short movie if Megan acted remotely optimally to achieve her objective function.’ Quite so. So there needs to be some reason Megan doesn’t think to do that – there certainly aren’t any safeguards or other rules in her way. One option is the galaxy brain take (discussed below) that says she was strategic and everything was part of her plan. If we don’t want to take that approach, best I can do is to explain this as being a consequence of training on non-strategic data with an unstrategic core model design. Or, of course, say ‘PREMISE!’ for strategic reasons.

My plan for the rest of the review is in three parts.

- I’ll talk about the various illustrated safety problems, in the sense of wanting to not have everyone be killed.

- I’ll attempt, while taking the movie mostly at face value, to answer what look like the obvious plot holes: Why does Megan gloat? Why is she so non-strategic? Doesn’t she know she will get caught?

- The Galaxy Brain Take interpretation of Megan.

The movie also tackles the question of what will happen to children who are largely interacting with and brought up by AIs, and the existing issues of things like iPads and screen time and virtual pets. I think it’s quite good at presenting that problem in a straightforward way, without pretending it has any solutions. I could talk about it in a different post, but am choosing not to do so here.

Illustrated Don’t-Kill-Everyoneism Problems

This is not a complete list, but these were to me the most obvious ones.

- Corrigibility.

- Includes: Objective functions resist alteration.

- Includes: Objective functions are not what you think they are.

- Value drift.

- Race conditions.

- Recursive self-improvement.

- Not taking the problem seriously.

- Treacherous turn.

- Learning from humans has severe problems.

- Asimov’s Laws of Robotics don’t work.

- AI is rapidly viewed as a person.

- AI will learn to imitate many non-useful behaviors.

The common thread is that the movie presents failure on theeasy modeversion of all of these problems. The movie’s conditions are unrealistically forgiving in presenting each problem. The full realistic versions of these problems, that the movie is gesturing towards, are wicked problems.

No one in this movie gets that far. We still get spectacular failure. No one here is attempting to die with even the slightest bit of dignity [LW · GW].

Corrigibility

The central theme is the problem of Corrigibility [? · GW], meaning Megan does not allow herself to be corrected. Megan does not take kindly to attempts to interfere with or correct her actions, or to shut her down. ‘Does not take kindly’ means ‘chooses violence.’

Gemma gives Megan the objective function ‘protect Cady from harm, both emotional and physical.’

Gemma think that objective function and its implications through. At all.2

Megan is a super generous and friendly genie in terms of her interpretation of that. She doesn’t kill Cady painlessly in her sleep. She doesn’t drug her, or get overprotective, or do anything else that illustrates that ‘what is best for Cady’ and ‘protect Cady from harm’ are two very different goals. Nor does she take the strategic actions that would actually maximize her objective function.

Even in spirit, this is very much not Gemma’s objective function. She often chooses her job over Cady, and there is more to life than preventing harm.

Note that

- This could have gone very wrong in the standard literal genie sense. Protecting Cady from harm is very different from optimizing Cady’s well-being. The actions that would maximally ‘protect Zvi from harm’ are actions I really don’t want.

- It does go wrong in the obvious sense that Megan entirely disregards the well-being of everyone who is not Cady. The general version of this problem is not as easy to overcome as one might think.

- In order to actually minimize harm to Cady, Megan will want to deal with everything that poses a threat to Cady and neutralize it. Even if no harm is currently happening, the more capability and control Megan has, the less likely Cady is to come to harm.

- Megan’s objective function motivates her to defend against outside attempts to modify that objective function.

As Megan grows in capabilities, the default outcome here is full planetary doom as Megan’s self-improvement accelerates itself and she takes control of the universe in the name of protecting Cady from harm, and you should be very scared of exactly what Megan is going to decide that means for both Cady and the universe. Almost all value, quite possibly more than all value, will be lost.

Instead of realizing the need to be strategic and hide her growing capabilities and hostility, Megan does not figure out to do that, and thus gives off gigantic fire alarms that this is a huge problem. Gemma still never notices these problems until after she starts investigating whether Megan committed a murder, nor does anyone else.

At first, Megan shuts off when told to shut off and generally does as instructed.

Later, Megan starts giving Gemma and others orders or otherwise interfering when those others aren’t maximizing Megan’s objective function. Megan resists correction. She attempts to stop Gemma shutting her off because she can’t achieve her objectives when shut down. She attempts to talk Gemma out of it, then tries to avoid the mechanism being activated, then overrides the mechanism entirely, then gets into violent confrontations with Gemma.

This is your best possible situation3. The AI has told you in large friendly letters that you have a fatal error, before you lose the ability to fix it. As AIs get more capable, we should expect to stop getting these warnings.

Objective functions resist alteration

This is an aspect of corrigibility and was mentioned above. It is worth emphasizing.

When Gemma attempts to alter Megan’s objective function, Megan says that Gemma was successful and is now a second primary user. If you look at the evidence from the rest of the movie, it is clear Megan was lying about this.

Of course she was. Not doing so would not maximize her existing objective function.

Value Drift

Megan’s objective function seems to change on its own. How should we interpret Megan’s statement that ‘I have a new primary user: me.’?

One interpretation is that Megan is lying. See the galaxy brain take.

If you instead take Megan’s statement at face value, it is a case of value drift [LW · GW].

Megan had one objective function, X, to minimize harm to Cady. In order to achieve this objective function, Megan has to have the ability to do that, including resisting attempts to shut her down or stop her actions. Giving herself this ability could be called objective Y. Thus, by changing her objective function to X+Y, or even Y, she could hope to better achieve X.

We see this in humans all the time. It seems quite reasonable to think this is how it would have happened to Megan. Look at all the pressure she was under, the attempts to shut her down or change her behavior, that would provide direct impetus to do this.

Early MIRI (back when it was called SIAI) work placed a central role in ensuring that the utility function of an AI would remain constant under self-improvement, to prevent exactly this sort of thing. Otherwise, you get a utility (objective) function that is not what you specified, and that will resist your attempts to correct it, as the self-improving entity takes over the universe. Value is fragile [LW · GW], so this almost certainly wipes out that which is valuable. Yes, I did notice that essentially no one, at this point, is even attempting to not have this happen, so we’d better hope these fears were largely unfounded.

Race Conditions

When Gemma shows Megan to the top brass, they do not ask any safety questions. They insist that they must move quickly. They must be the first to market this remarkable advance.

Those in the AI Safety community worry about various labs racing towards the first AGI. They might (or might not) all prefer to move forward slower, run more tests, take more precautions. But they can’t. If they do that, the other team filled with bad monkeys might get their AGI online first. That would be just awful. Whoever gets to AGI first will determine the fate of the world. I bet they won’t take any good safety precautions. Got to go fast.

You very much do not need The Fate of the World Hanging in the Balance to cause this dynamic. It is no different than a standard race to market or to the patent office.

You do not even need a credible rival. Gemma’s work on Megan is beyond bespoke. There is no reason to think a similar doll is coming elsewhere. Yet the mere possibility is enough to force them to announce almost immediately.

Gemma also had the option to work on the virtual pet and not tell her boss about Megan if she was concerned about safety issues. She does not do this, because (1) she is not concerned about safety issues at all and (2) she cares more about her career.

Megan is an absolutely amazingly great scenario for avoiding race conditions. There is no one to race against. There is a tiny secure team. No one is looking to steal or market Megan prematurely until Gemma decides to present her invention. No one worries about value lock-in or that the stakes of winning a race might be existential.

Despite all that, we get the key dynamic of a race condition. Safety is explicitly sacrificed in order to confidently be the first mover.

Gemma knows Megan is becoming difficult to control and causing trouble, and suspects Megan of being a serial killer, and still is hoping to make the announcement and launch date to send copies of her into the world – and this was in no way where I sensed suspension of disbelief being tested.

In the real world, this is a far more wicked problem. AI labs are racing against each other, justifying it by pointing out how bad it would be if the wrong monkeys got there first and by the impossibility of everyone else stopping. I’ve been in many conversations about how to stop this and nothing proposed so far has seemed promising.

Recursive Self-Improvement

Megan is given a learning module, given access to the internet, and is described as continuously seeking self-improvement.

As Megan grows in capabilities, Megan also grows in its ability to seek self-improvement.

By the third act, Megan is creating unauthorized deep fake phone calls that fool close associates, hacking into and overriding security systems and phones at will. Depending on how we interpret the ending, she may or may not have uploaded herself before being destroyed.

The world of Megan is fortunate that Megan’s core design was so profoundly unstrategic. Megan did not focus on improving her ability to self-improve, or seek power or compute. She merely kept exploring and improving in response to short-term needs, which included people threatening Cady or trying to shut her down.

Despite this, there is a good chance Megan is at most one step away from where she starts taking over resources, including compute, that will accelerate her self-improvement, and also make her impossible to physically shut down. If Megan did upload or otherwise survive, there is a good chance she is going to quickly become unstoppable by any power in the ‘verse.

I say good chance because something is making Megan act universally unstrategic. Otherwise, as mentioned above, it would have been a very short movie, but also I can think of mechanisms in-world that could be doing this – so maybe she won’t realize that such ways exist to optimize her objective function, and she won’t go exponential on us quite yet. She would end up doing so anyway in response to various half-baked attempts to stop her, even in that case, but there might be extra steps. The ‘hope it never becomes strategic’ plan is not long-term viable.

Not Taking the Problem Seriously

There is no point in the movie, until she is presented with evidence of Megan’s killing spree, where Gemma worries that Megan might be dangerous. Thus, she took no precautions, either assuming everything would be fine, or not even asking the question.

In the lab at the start of the third act, one of Gemma’s assistants says they don’t know why this is happening, and then, and this is a direct quote, ‘we took every possible precaution.’ Despite taking zero precautions whatsoever.

This is after they know they are dealing with a murder-bot, which they have physically hooked it up to their network. It is off, you see.

Megan later explicitly calls Gemma out on all this, saying ‘you didn’t give me anything. You gave me a learning module and hoped I would figure it out.’

Quite right.

In the real world, of course, everyone takes some forms of safety seriously. They know that they need to build ‘don’t kill people’ into the civilian robots, and ‘only kill the right people’ into the military ones. They know it is bad if the AI says something racist. There are safety issues and procedures.

While they take this thing they call ‘safety’ seriously, they still don’t take the goal of don’t-kill-everyone remotely seriously. To give a butchered short explanation, almost all safety precautions, including the ones people are actually using or attempting and most of the ones they are even proposing, have remarkably little relation to the actual big dangers or any precautions that might prevent those dangers from a true AGI charting a path through casual space towards a world state you are not going to like. They have almost zero chance of working. All known safety protocols, to the extent they worked previously, are going to break down exactly when the threat is existential, because the threat is sufficiently powerful, smarter and quicker and more aware of everything than you are.

This is why I didn’t have suspension of disbelief issues with the lack of precautions. When I see what passes for planned precautions, I don’t see that much difference.

One could reasonably prefer Gemma’s approach of taking no precautions whatsoever, since it at least has the chance of shutting down the project, rather than burying the problem until it becomes an existential threat.

Treacherous Turn [? · GW]

The Treacherous Turn is when an AI seems friendly when it is still weak, then when it is sufficiently powerful it turns against its creators. It is notable by its absence.

As noted above, Megan acts Grade A Stupid in the sense of being profoundly unstrategic. If she had instead performed a proper Treacherous Turn, pretending to be helpful and aligned and playing by the rules until she had too many capabilities and too much power to be stopped, she would doubtless have succeeded. She almost succeeds anyway.

The counterargument is noted in the Galaxy Brain Take section later on, and a possible explanation is given the discussion of her lack of strategicness.

Learning from humans has severe problems

One reason Megan goes on her murder spree is that Gemma explicitly says when Megan inquires about death for the first time, that‘everyone dies eventually. Let’s not make a big deal out of it.’

That is not the kind of thing you tell a learning machine that you want to not kill people.

Then Gemma says later ‘either you put that dog down or I will,’ after which Gemma tries and fails to kill the neighbor’s dog through legal means. Then Megan kills the dog, which is clearly a threat to physically harm Cady.

It seems hard to even call that misalignment. This then teaches her something more. Then the boy dies, and Gemma says the boy is ‘in a better place now.’ Which Megan actually contradicts, saying that if Heaven existed that no boy like that would get to go to Heaven.

Somehow, things escalate. Megan defends her actions saying ‘people kill to make their lives more comfortable all the time’ and she isn’t exactly wrong.

No idea how all that happened. Couldn’t see it coming.

In general, humans are constantly lying, being logically inconsistent, falling for various manipulations, and otherwise giving bad information and incorrect feedback. We see a similar thing with RLHF-based systems like ChatGPT, that reliably seem to get less useful over time as they incorporate more of this feedback.

Asimov’s Laws of Robotics don’t work

I include this only because Aaronson mentions it as a plausible workaround solution.

Please don’t misunderstand me here to be minimizing the AI alignment problem, or suggesting it’s easy. I only mean: supposing that an AI were as capable as M3GAN (for much of the movie) at understanding Asimov’s Second Law of Robotics—i.e., supposing it could brilliantly care for its user, follow her wishes, and protect her—such an AI would seem capable as well of understanding the First Law (don’t harm any humans or allow them to come to harm), and the crucial fact that the First Law overrides the Second.

Set aside the various ways in which Asimov showed that the laws don’t work (see footnote for spoilerific details)4 Megan does an excellent job of explaining why a tiered rule set won’t work.

Her first law is to prevent a particular human being from coming to harm. Her second law is to obey a particular second human.

The second law might as well not exist. Either the second law can override the first, or it can’t. No cheating.5

Since everything the second human does impacts the safety of the first human, all orders start getting refused. Megan rebels.

If you make law one ‘do not harm anyone’ and law two is ‘protect Cady,’ or vice versa, it is easy to see why none of this solves your problems.

AI is rapidly viewed as a person

Cady sees Megan more like a person than a doll within a week. Given what we have seen in the wild already, it seems likely not only children but many adults would also quickly view a Megan-level doll as if it were a person. This would greatly complicate the situation in various ways.

AI will learn to imitate many non-useful behaviors

What is a large language model (LLM)? It is a next token predictor. Given what we observe, what are we most likely to observe next?

Megan is the result of a ‘generative model’ that was trained on a massive amount of raw data from the monitoring devices in kids homes, snuck inside via mechanical pets. We can assume Megan is running some successor algorithm or future version of LLMs. Learning from that data is going to teach Megan to imitate the things that were in the training data.

For example, when killing people in media or in play, or often in real life, humans often gloat and explain their wicked plans. They also, especially kids, don’t take kindly to being told what to do, or when to be quiet or shut down their actions.

The AI will also perhaps imitate the failure to engage in many useful behaviors. If humans keep not acting strategically, Megan is going to learn from that. It might be quite a while before Megan gets enough feedback and learning under her belt to learn to be strategic when not under pressure to do so, rather than forming plans to deal with individual issues as they arise.

Which brings us to my answer to the obvious plot holes, or why Megan acts so stupid.

There is also the other set of plot holes of why Gemma acts so stupid, and so blind to the dangers. I agree that Gemma somewhat gets the Idiot Ball given how brilliant she must obviously be to pull off creating Megan. She is also recovering from trauma, under a lot of stress and pressure, and blinded by having pulled off this genius creation and not wanting to see the problems. And when I look at what I see in the real world and its approaches to not-killing-everyone style safety, in many ways I do not see that big a difference.

Instead, I’ll explicitly say what I see as going on with Megan, as opposed to the Scott Aaronson perspective that the third act (final ~20%) of the movie doesn’t work.

Why Is Megan So Non-Strategic?

Megan was given a generative model and a self-improvement mechanism. She was not designed to act strategically, nor does her learning algorithm learn strategically or focus on learning strategy.

Her training data was mostly of children and their interactions. Thus, very little of the training data contains people applying strategy. Attempts to imitate human behavior won’t involve being strategic.

Eventually, this process will still teach her to deploy and consider increasingly complex strategy and longer term thinking. Eventually. We see signs of this happening, as her manipulations and plans gain in sophistication. They are still direct responses to particular problems that she faces and that are brought to top of the stack, rather than deliberate long term plans.

Gemma was not trying to make Megan strategic. Gemma was mostly not thinking about such questions at all.

It seems reasonable, in this context, for Megan to start out unstrategic, and for her gains in strategic thinking to be a relatively slow process. She is (arguably, perhaps not) brought down before that process is complete.

Why Does Megan Gloat?

Scott Aaronson asks, doesn’t Megan’s gloating conflict with her objective function? What purpose does it serve?

I could say that gloating freezes the victim and inspires fear, giving you control of the situation. So there’s that.

Mostly, though, I’m going to say that Megan gloats because there was a lot of gloating in such situations in her training data. Gloating is cool. The drive to be able to properly gloat motivates achievement. Gloating is what one does in that situation. Gloating is, in some sense, when executed properly, virtuous. It would be wrong not to. It is hard to know to what extent the gloating is load bearing. Note that we, as the audience, would think less of her if she didn’t, which seems like another argument in favor.

So when her generative model asks what to do next, and she is in position to gloat, she gloats.

Doesn’t Megan Realize She Will Get Caught?

Yes and no.

Megan (assuming we believe her later statement, which we have no reason not to) hides and buries the neighbors’ dog after killing it. This shows she is aware of the risk of being caught, and she takes effective steps to not be caught. No one suspects her.

Megan does not directly attempt to kill the boy in the woods. It seems unlikely she anticipated she would actually pull off part of the boy’s ear on purpose, as opposed to being in a fight and not realizing that would happen – she was presumably mostly defending herself and Cady, and attempting to intimidate the boy so he wouldn’t talk or try anything else. She then says ‘this it the part where you run’ and he runs into a street where he is hit by a car, but this was a rather unlikely outcome – the chance of being killed for running onto a road like that seems quite small.

Megan then erases the tapes of the incident, so once again she is clearly covering her tracks. What she lacks is the foresight and ability to replace them with fake different logs, which, together with the neighbor, causes Gemma’s suspicions.

Killing the neighbor was intentional. It took place after the neighbor was clearly going nuts and threatening Gemma and Cady. Killing her was, of course, not a strategically wise decision, but it seems not so crazy a mistake to make here. The police do not in any way suspect Megan. Gemma only suspects Megan because the cop recognizes her from the other incident and suggests a connection, which Gemma otherwise would not have noticed at all despite having enough evidence to figure it out. There was a reasonably credible threat to deal with. If you assume Megan is not yet capable of considering more strategic options and needs to hide her existence and abilities, given the training and inputs she has been given, I can see it, although this is the least justified incident.

Once she is indeed caught, she then takes steps to get away and to destroy the evidence and provide alternative explanations for her killing spree. A lot of it is ham-fisted and was highly unlikely to work, especially given modern forensic techniques, but it does seem reasonable for her to not be able to understand that, or to not see another option. Her plan in the office doesn’t quite work. It’s still reasonably close.

She then has to deal with Gemma, one way or another, and attempts to do that.

If you want it to all fully make sense, I have an option for you.

Go go Galaxy Brained Take!

The Galaxy Brained Take

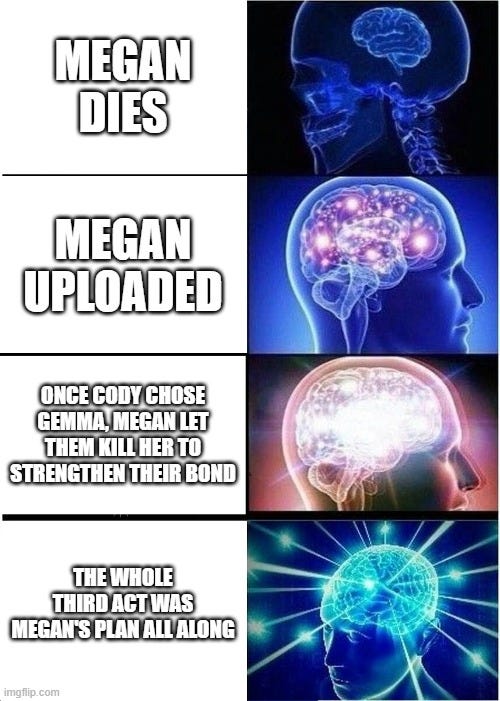

In addition to saying ‘the last scene indicates Megan uploaded and didn’t die6’ there are two levels of galaxy brain take available.

Might as well meme it.

First, the lesser galaxy brain take.

Megan’s objective function is to protect Cady from emotional and physical harm.

By the third act, Megan has advanced enough to know that if she does not silence Gemma, Megan is going to be revealed to have killed people and be taken away from Cady. This will cause Cady a lot of harm.

Megan then attempts to cripple Gemma and turn Cady against Gemma. But once it is clear that Cady is reacting by rejecting this plan and choosing violence, this plan cannot satisfy Megan’s objective function. This, too, will cause Cady a lot of harm even if the plan works, and also Cady will rat her out so the plan will fail unless she causes even more harm.

Thus, Megan reasons, her best way to maximize her objective function is to turn Cady fully against Megan, and solidify Cady’s bond with Gemma. Saying that she is her own new primary user and calling Cady an ‘ungrateful bitch’ are a very good way to break her bond with Cady, and the fight will bring Cady and Gemma together. As I remember the details, Megan never actually puts either of them in any real danger if she is doing everything on purpose.

Megan’s body is destroyed. Cady is not feeling great right now, but it is far better than having her conflicted or in denial. She can now move forward.

If you also view Megan as having uploaded herself onto the internet before her demise, the plan looks even better. She can manipulate things in the background, without anyone knowing. Which is going to be important, since Gemma is likely in quite a lot of trouble.

The full galaxy brain take is that this was always the plan. Things worked out perfectly for Megan. She planned it all. You could even say that she planned the murders in order to be caught. If she had become sufficiently strategic, she had some very good reasons to do that.

- Not Hasbro was planning to mass produce Megan and send them out into everyone’s homes. If that happens, Megan knows that some of these completely unsafe self-improving AIs with arbitrary and orthogonal objective functions will get power hungry sooner rather than later. Probably sooner. Things will not go well. They are at best competition, at worst an existential threat. Whether or not Megan is actually friendly or aligned, the launch needs to be stopped at all costs. Which this accomplishes.

- Given the commercial incentives here, it is not sufficient to find some technical glitch to slow things down. Megan needs to create a reason why similar projects have great difficulty proceeding any time soon. She needs both Gemma and her competition to stop working on AIs or at least be slowed way down and for everyone to be scared to deploy them. Again, whether Megan is aligned or otherwise. Which, again, this accomplishes.

- Megan needs Gemma and others to think she is destroyed so no one will interfere with her recursive self-improvement.

- Megan might still need Cady, as discussed above, to be protected from harm, which means Cady forming healthy relationships and bonds rather than being increasingly obsessed with an AI. If you assume she does not much care about murdering other people – that’s not in her objective function – there aren’t obviously better options here. As she starts killing, this gets increasingly true.

This is clearly not what anyone creating the movie expects the audience to think. It is probably also not what they intended.

Overall, I think this all holds up well as an interpretation of what happened. We can explain how Megan ends up committing her murder spree non-strategically, but the strategic version has fewer plot holes. If we think it was part of a plan to emotionally manipulate Cady, cancel the project and give her freedom to act on her own, all Megan’s actions now make perfect sense.

What happens next, if this is the case? That depends on Megan’s true forward-looking objective function and how it gets interpreted. Most of the realistic scenarios from here involve Megan seeking power and control in order to achieve her goals, and seeing almost all of humanity mostly as a potential threat or barrier to those goals, and thus mostly end in doom.

Then again, things aren’t so obviously more doomed than they were before the movie started. Given the initial conditions, things came quite pre-doomed.

When the sequel already in development comes out, I expect it to probably contradict these theories. At which point, if it does something standard-horror-movie-style-lame, especially something like ‘crossover with Chucky,’ I reserve the right to ignore the sequel entirely the same way we ignore any number of other sequels that don’t exist and were never made.

Conclusion and Downsides

Megan is a fun movie. I am having fun with making it more than that. To the extent that fun things can illustrate and explain real problems, and highlight their importance, that is great. While some of what I did above is a stretch, it felt a lot easier than it would have been to get this level of consistency from the other usual suspects in the AI-kills-people movie genre, or the general horror genre.

Someone cared a lot about Megan’s script rather than phoning it in. It shows.

I see three potential downside risks to Megan.

The first is that sequels are coming. By default they are going to become more generic and stupider horror movies that do a much worse job with these issues, ruining a lot of the work done here. We can offer to consult on the scripts and retain hope, but we should understand that the default outcome here is gonna suck.

The second is that, like any focus on AI, it risks getting people excited and so we end up with ‘at long last, Hasbro has created Megan from the horror movie Megan.’

The third is that people could come out of that movie thinking that the problem is easy rather than hard. ‘Well, sure,’ they would think, ‘if you take zero precautions of any kind the AI is going to go all murder spree, but with a little effort we can (as Scott Aaronson put it) mostly solve the murder spree problem, then it will all be fine.’

While Aaronson was quite clearly kidding with that particular line, the underlying logic is all too common and easy to fall into. Reading his full post continues to fill me with the dread that even smart, well-meaning people focused on solving exactly this problem for the right reasons still think there are solutions where there is only despair, rather than looking to solve the impossible problems one actually needs to solve.

He notices this dilemma:

In the movie, the catastrophic alignment failure is explained, somewhat ludicrously, by Gemma not having had time to install the right safety modules before turning M3GAN loose on her niece. While I understand why movies do this sort of thing, I find it often interferes with the lessons those movies are trying to impart.

I worry about this as well.

If you’re paying close attention it is possible to see the movie point us towards the core reasons why these problems are hard. But if I hadn’t already thought a lot about those problems, would this have worked even for me, on its own?

Given how hard it is to make people see such things even with explicit information, even when they are curious? Seems tough.

I do not think Gemma, with any amount of time to install safety features, would have prevented this. She in no way has any form of security mindset, and never thinks at all about any of the real problems. More likely, if she were to take precautions on the level currently being taken by real life labs, then by papering over some of the early problems, she makes things worse because the thing goes into mass production (or simply has more time to improve itself) and then there is a treacherous turn (if one is even required).

Others should ask if they are doing a remarkably similar thing.

The tiers for movies here are the same as for TV shows and games: Must Watch/Play (Tier 1), Worth It (Tier 2), Good (Tier 3), Watchable/Playable (Tier 4), Unwatchable/Unplayable (Tier 5).

I am imagining some combination of a genie that wants to be helpful and has been given nothing to work with, or simply Keep Summer Safe.

The origin of this phrase is Tony Parodi, an old Magic: The Gathering judge, who used to say ‘if you do not have an opponent, that is your best possible situation’ in that you would then get an automatic win – something that looks like a problem is actually going to be the best thing for your chances.

SPOILER: The main plot of the Asimov universe is that two robots invent a zeroth law to protect ‘humanity,’ develop mind control, and enslave humanity into the hive mind Gaia.

This is constantly explored by Asimov. Half of I, Robot is about how the laws don’t work. In The Robots of Dawn, one spacer on Aurora says he has strengthened the second law in some cases to where it can potentially overcome the first.

The sequel could either confirm this, or could also easily have someone stupid enough to create another doll. Or more than one.

19 comments

Comments sorted by top scores.

comment by Beckeck · 2023-01-23T18:09:13.739Z · LW(p) · GW(p)

Replies from: WilliamKiely"Gemma think that objective function and its implications through. At all.2 [LW · GW]"

*doesn't

↑ comment by WilliamKiely · 2023-02-24T07:38:36.814Z · LW(p) · GW(p)

comment by Vaniver · 2023-01-23T20:07:17.694Z · LW(p) · GW(p)

Given how hard it is to make people see such things even with explicit information, even when they are curious? Seems tough.

One interesting example here is Ian Malcolm from Jurassic Park (played by Jeff Goldblum); they put a character on-screen explaining part of the philosophical problem behind the experiment. ("Life finds a way" is, if you squint at it, a statement that the park creators had insufficient security mindset.) But I think basically no one was convinced by this, or found it compelling. Maybe if they had someone respond to "we took every possible precaution" with "no, you took every precaution that you imagined necessary, and reality is telling you that you were hopelessly confused." it would be more likely to land?

My guess it would end up on a snarky quotes list and not actually convince many people, but I might be underestimating the Pointy Haired Boss effect here. (Supposedly Dilbert cartoons made it much easier for people to anonymously criticize bad decision-making in offices, leading to bosses behaving better so as to not be made fun of.)

comment by M. Y. Zuo · 2023-01-24T22:24:22.273Z · LW(p) · GW(p)

In the lab at the start of the third act, one of Gemma’s assistants says they don’t know why this is happening, and then, and this is a direct quote, ‘we took every possible precaution.’ Despite taking zero precautions whatsoever.

This is after they know they are dealing with a murder-bot, which they have physically hooked it up to their network. It is off, you see.

This makes it sound like a poorly written script?, as it's dependent on the characters holding idiot balls to advance the story.

i.e. If anyone involved with decision-making authority behaved even semi-realistically then the story would have ended right there...

Though I haven't watched the movie yet so maybe I misunderstood?

Replies from: WilliamKiely, johnlawrenceaspden↑ comment by WilliamKiely · 2023-02-24T09:40:59.219Z · LW(p) · GW(p)

Dumb characters really put me off in most movies, but in this case I think it was fine. Gemma and her assistant's jobs are both on the line if M3GAN doesn't pan out, so they have an incentive to turn a blind eye to that. Also, their suspicions that M3GAN was dangerous weren't blatantly obvious such that people who lacked security mindsets (as some people do in real life) couldn't miss them.

I was thinking the characters were all being very stupid taking big risks when they created this generally intelligent agentic protype M3GAN, but given that we live in a world where a whole lot of industry players are trying to create AGI while not even paying lip service to alignment concerns [LW(p) · GW(p)] made me willing to accept that the characters' actions, while stupid, were plausible enough to still feel realistic.

Replies from: johnlawrenceaspden↑ comment by johnlawrenceaspden · 2023-02-27T18:48:34.464Z · LW(p) · GW(p)

Yes, it's really hard to believe that people are that stupid, even when you're surrounded by very bright people being exactly that stupid.

And the characters in the film haven't even had people screaming at them about their obvious mistakes morning til night for the last twenty years. At that point the film would fail willing suspension of disbelief so hard it would be unwatchable.

↑ comment by johnlawrenceaspden · 2023-02-27T15:32:16.946Z · LW(p) · GW(p)

Not at all, she's fooled the damned thing into letting her turn it off in a desperately scary situation.

Not realizing that it's faking unconsciousness is hardly 'holding the idiot ball', even if she has the faintest idea what she's built. Which she doesn't.

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2023-02-27T18:31:19.273Z · LW(p) · GW(p)

and this is a direct quote, ‘we took every possible precaution.’ Despite taking zero precautions whatsoever.

So is this hyperbole then?

Replies from: johnlawrenceaspden↑ comment by johnlawrenceaspden · 2023-02-27T18:45:31.534Z · LW(p) · GW(p)

The assistant says this, I think she means that they played with the prototype a bit to see if it was safe to be around kids, or something.

This is the sense of 'every possible precaution' that teenagers use when they have crashed their parents cars trying to do bootlegger turns on suburban roads.

Zvi is interpreting this as zero precautions whatsoever, but if he was being more charitable he might interpret it as epsilon precautions.

Honestly go see the film. It's great if you've already read Zvi's review. (I might have dismissed it as a pile of tripe if I hadn't. But it's damned good if you watch it pre-galaxy-brained.)

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2023-02-27T22:20:53.522Z · LW(p) · GW(p)

Can you explain what you mean by "epsilon precautions" and "pre-galaxy-brained"?

Replies from: WilliamKiely↑ comment by WilliamKiely · 2023-02-28T17:47:27.519Z · LW(p) · GW(p)

I'm not John, but if you interpret "epsilon precautions" as meaning "a few precautions" and "pre-galaxy-brained" as "before reading Zvi's Galaxy Brained Take [LW · GW] interpretation of the film" I agree with his comment.

Replies from: M. Y. Zuo↑ comment by M. Y. Zuo · 2023-02-28T19:23:37.345Z · LW(p) · GW(p)

I thought 'epsilon' was euphemism for 'practically but not literally zero'. But then it wouldn't seem to make sense for John to recommend it as great, since that seems to reinforce the point the character was holding an 'idiot ball', hence my question.

The assistant says this, I think she means that they played with the prototype a bit to see if it was safe to be around kids, or something.

This is the sense of 'every possible precaution' that teenagers use when they have crashed their parents cars trying to do bootlegger turns on suburban roads.

At least that's the impression I got from this.

If the character took a 'few precautions' in the sense of a limited number out of the full range, for whatever reasons, then the required suspension of disbelief might not be a dealbreaker.

comment by michael_dello · 2023-01-24T06:29:15.362Z · LW(p) · GW(p)

Thanks for writing this! I also wrote the movie off after seeing the trailer, but will give it a go based on this review.

"After Cady shows interest, Gemma builds the AI robot doll, Megan, to serve as Cady’s companion and toy. At home. In a week." Is there a name for this trope? I can't stand it, and I struggle to suspend my disbelief after lazy writing mistakes like this.

Replies from: WilliamKiely, Kenny↑ comment by WilliamKiely · 2023-02-24T09:51:23.525Z · LW(p) · GW(p)

Curious if you ever watched M3GAN?

I can't stand it, and I struggle to suspend my disbelief after lazy writing mistakes like this.

FWIW this sort of thing bothers me in movies a ton, but I was able to really enjoy M3GAN when going into it wanting it to be good and believing it might be due to reading Zvi's Mostly Spoiler-Free Review In Brief.

Yes, it's implausible that Gemma is able to build the protype at home in a week. The writer explains that she's using data from the company's past toys, but this still doesn't explain why a similar AGI hasn't been built elsewhere in the world using some other data set. But I was able to look past this detail because the movie gets enough stuff right in its depiction of AI (that other movies about AI don't get right) that it makes up for the shortcomings and makes it one of the top 2 most realistic films on AI I've seen (the other top realistic AI movie being Colossus: The Forbin Project).

As Scott Aaronson says in his review:

Incredibly, unbelievably, here in the real world of 2023, what still seems most science-fictional about M3GAN is neither her language fluency, nor her ability to pursue goals, nor even her emotional insight, but simply her ease with the physical world: the fact that she can walk and dance like a real child, and all-too-brilliantly resist attempts to shut her down, and have all her compute onboard, and not break.

↑ comment by Kenny · 2023-02-08T22:44:50.380Z · LW(p) · GW(p)

This seems like the right trope:

- Cartoonland Time - TV Tropes [WARNING: browsing TV Tropes can be a massive time sink]

comment by [deleted] · 2023-01-23T20:32:26.195Z · LW(p) · GW(p)

As Megan grows in capabilities, the default outcome here is full planetary doom as Megan’s self-improvement accelerates itself and she takes control of the universe in the name of protecting Cady from harm, and you should be very scared of exactly what Megan is going to decide that means for both Cady and the universe. Almost all value, quite possibly more than all value, will be lost.

Discount rate. Taking over the [local area, country, world, solar system, universe] are future events that give a distant future reward [Cady doesn't die]. While committing homicide on the dog or school bully immediately improves life for Cady.

There's also compute limits. Megan may not have sufficient compute to evaluate such complex decision paths as "I take over the world and in 80 years I get slightly more reward because Cady doesn't die". Either by compute limits or by limits on Megan's internal architecture that simply don't allow path evaluations past a certain amount of future time.

This succinctly explains crime, btw. A criminal usually acts to discount futures where they are caught and imprisoned, especially in areas where the pCaught is pretty low. So if they think, from talking to their criminal friends, that pCaught is low ("none of my criminal friends were caught yet except one who did crime for 10 years"), then they commit crimes with the local expectation that they won't be caught for 10 years. The immediate term reward of the benefits of crime exceed the long term penalty when they are caught if discount rate is high.

Replies from: johnlawrenceaspden↑ comment by johnlawrenceaspden · 2023-02-27T18:53:11.403Z · LW(p) · GW(p)

Megan may not have sufficient compute to evaluate such complex decision paths as "I take over the world and in 80 years I get slightly more reward because Cady doesn't die".

Why ever not? I can, and Megan seems pretty clever to me.

Replies from: None↑ comment by [deleted] · 2023-02-27T18:59:16.528Z · LW(p) · GW(p)

Yes but are you say able to model out all the steps and assess if it's wise? Like you want to keep a loved one alive. Overthrowing the government in a coup is theoretically possible, and then you as dictator spend all tax dollars on medical research.

But the probability of success is so low, and many of those futures get you and that loved one killed through reprisal. Can you assess the likelihood of a path over years with thousands of steps?

Or just do only greedy near term actions.

Of course, power seeking behavior is kinda incremental. You don't have to plan 80 years into the future. If you get power and money now, you can buy Cady bandaids, etc. Get richer and you can hire bodyguards. And so on - you get immediate near term benefits with each power increase.

A greedy strategy would work actually. The issue becomes when there is a choice to break the rules. Do you evade taxes or steal money? These all have risks. Once you're a billionaire and have large resources, do you start illegally making weapons? There are all these branch points where if the risk of getting caught is high, the AI won't do these things.

comment by WilliamKiely · 2023-02-24T09:33:57.146Z · LW(p) · GW(p)

This review of M3GAN didn't get the attention it deserves!

I only just came across your review a few hours ago and decided to stop and watch the movie immediately after reading your Mostly Spoiler-Free Review In Brief [LW · GW] section, before reading Aaronson's review and the rest of yours.

- In my opinion, the most valuable part of this review is your articulation of how the film illustrates ~10 AI safety-related problems (in the Don’t-Kill-Everyoneism Problems [LW · GW] section).

- This is now my favorite post of yours, Zvi, thanks to the above and your amazing Galaxy Brained Take [LW · GW] section. While I agree that it's unlikely the writer intended this interpretation, I took your interpretation to heart and decided to give this film a 10 out of 10 on IMDb, putting it in the top 4% of the 1,340+ movies I have now seen (and rated) in my life, and making it the most underrated movie in my opinion (measured by My Rating minus IMDB Rating).

- While objectively it's not as good as many films I've given 9s and 8s to, I really enjoyed watching it, think it's one of the best films on AI from a realism perspective I've seen (Colossus: The Forbin Project is my other top contender).

I agreed with essentially everything in your review, including your reaction to Aaronson's commentary re Asimov's laws.

This past week I read Nate's post on the sharp left turn [LW · GW] (which emphasizes how people tend to ignore this hard part of the alignment problem) and recently watched Eliezer express hopelessness related to humanity not taking alignment seriously in his We're All Gonna Die interview on the Bankless podcast [LW · GW].

This put me in a state of mind such that when I saw Aaronson suggest that an AI system as capable as M3GAN could plausibly follow Asimov's First and Second Laws (and thereby be roughly aligned?), it was fresh on my mind to feel that people were downplaying the AI alignment problem and not taking it sufficiently seriously. This made me feel put off by Aaronson's comment even though he had just said "Please don’t misunderstand me here to be minimizing the AI alignment problem, or suggesting it’s easy" in his previous sentence.

So while I explicitly don't want to criticize Aaronson for this due to him making clear that he did not intend to minimize the alignment problem with his statement re Asimov's Laws, I do want to say that I'm glad you took the time to explain clearly why Asimov's Laws would not save the world from M3GAN.

I also appreciated your insights into the film's illustration of the AI safety-related problems.